You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@airflow.apache.org by GitBox <gi...@apache.org> on 2020/10/12 13:20:44 UTC

[GitHub] [airflow] ashb opened a new pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

ashb opened a new pull request #11467:

URL: https://github.com/apache/airflow/pull/11467

This PR documents (from a user point of view) how Scheduler HA #10956 works, what the requirements are, and what config options there are that users might want to configure.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

potiuk commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503950861

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MaraiDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

Review comment:

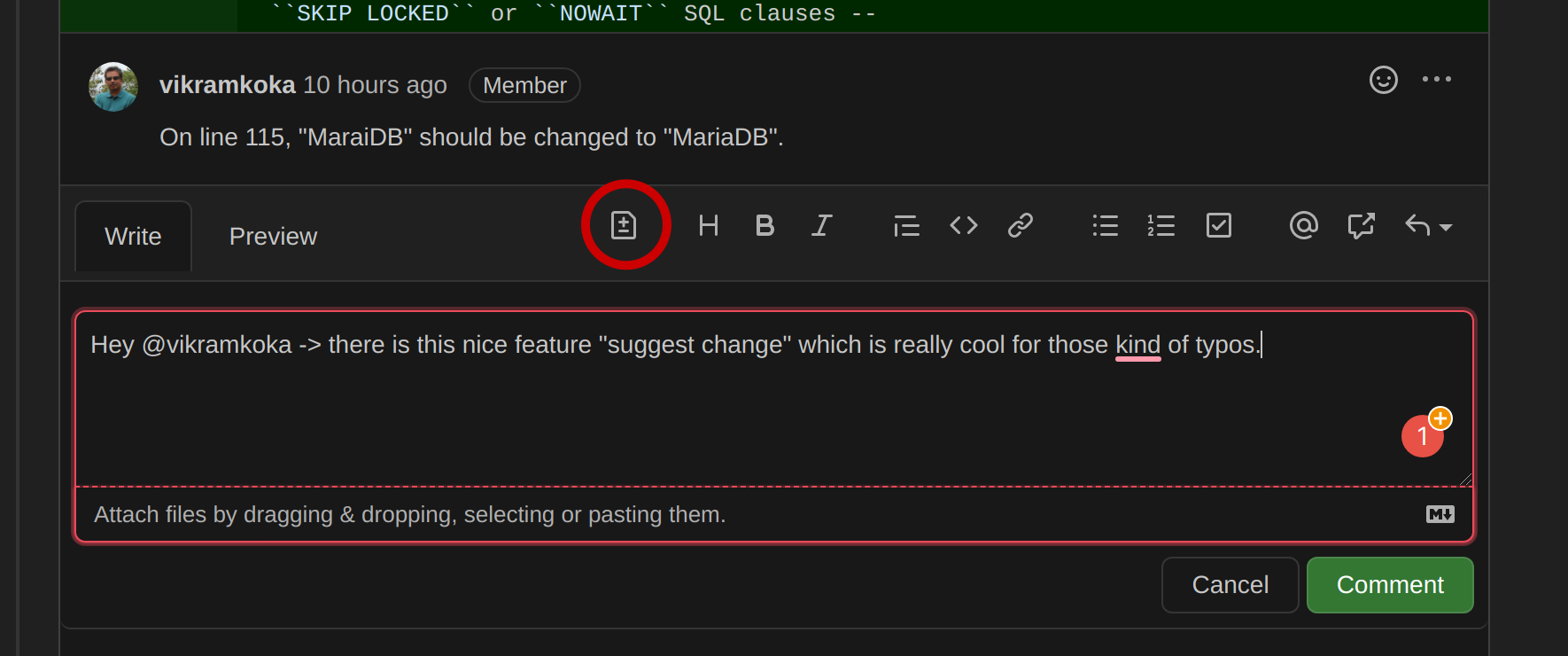

Hey @vikramkoka -> FYI. there is this nice feature "suggest change" which is really cool for those kind of typos. It's not easily discoverable - I initially also did not know that it exist, but once I started using it, I even change small snippets of code this way :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

github-actions[bot] commented on pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#issuecomment-718001675

[The Workflow run](https://github.com/apache/airflow/actions/runs/333885380) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

github-actions[bot] commented on pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#issuecomment-717943353

[The Workflow run](https://github.com/apache/airflow/actions/runs/333714690) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] vikramkoka commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

vikramkoka commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503642685

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MaraiDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

Review comment:

On line 115, "MaraiDB" should be changed to "MariaDB".

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] ashb commented on pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

ashb commented on pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#issuecomment-707116289

This is only a start, I'll add more. What would else would people like to see?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] XD-DENG commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

XD-DENG commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503441682

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

Review comment:

`... more one scheduler...` -> `... more than one scheduler...`

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

Review comment:

I would suggest to use sentence case to keep consistent with other titles in this page

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

Review comment:

`it's` -> `its`

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

Review comment:

`simple start...` -> `simply start...`

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MaraiDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

+see `MDEV-13115 <https://jira.mariadb.org/browse/MDEV-13115>`_. Multiple schedulers will operate without this

+extension, but instead of skipping over the critical section and carrying on with other scheduling work

+(creating DagRuns, progressing TaskInstances to the scheduled state) they will wait for the critical section

+lock to be released.

+

+.. warning::

+

+ MySQL 5.x also does not support ``SKIP LOCKED`` or ``NOWAIT``, and additionally is more prone to deciding

+ queries are deadlocked, so running with a more than a single scheduler on MySQL 5.x is not supported or

Review comment:

`...running with a more than a single scheduler...` -> `...running with more than a single scheduler...`

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

kaxil commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r512636397

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,99 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running More Than One Scheduler

+-------------------------------

+

+.. versionadded: 2.0.0

+

+Airflow supports running more than one scheduler concurrently -- both for performance reasons and for

+resiliency.

+

+Overview

+""""""""

+

+The :abbr:`HA (highly available)` scheduler is designed to take advantage of the existing metadata database.

+This was primarily done for operational simplicity: every component already has to speak to this DB, and by

+not using direct communication or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another

+consensus tool (Apache Zookeeper, or Consul for instance) we have kept the "operational surface area" to a

+minimum.

+

+The scheduler now uses the serialized DAG representation to make its scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+.. _scheduler:ha:db_requirements:

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- you can start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on.

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MariaDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

+see `MDEV-13115 <https://jira.mariadb.org/browse/MDEV-13115>`_. Multiple schedulers will operate without this

+extension, but instead of skipping over the critical section and carrying on with other scheduling work

+(creating DagRuns, progressing TaskInstances to the scheduled state) they will wait for the critical section

+lock to be released.

+

+.. warning::

+

+ MySQL 5.x also does not support ``SKIP LOCKED`` or ``NOWAIT``, and additionally is more prone to deciding

+ queries are deadlocked, so running with more than a single scheduler on MySQL 5.x is not supported or

+ recommended.

+

+.. note::

+

+ Microsoft SQLServer has not been tested with HA.

+

+.. _scheduler:ha:tunables:

+

+Scheduler Tuneables

+"""""""""""""""""""

+

+The following config settings can be used to control aspects of the Scheduler HA loop.

+

+- :ref:`config:scheduler__max_dagruns_to_create_per_loop`

+

+ This changes the number of dags that are locked by each scheduler when

+ creating dag runs. One possible reason for setting this lower is if you

+ have huge dags and are running multiple schedules, you won't want one

+ scheduler to do all the work.

+

+- :ref:`config:scheduler__max_dagruns_per_loop_to_schedule`

+

+ How many DagRuns should a scheduler examine (and lock) when scheduling

+ and queuing tasks. Increasing this limit will allow more throughput for

+ smaller DAGs but will likely slow down throughput for larger (>500

+ tasks for example) DAGs. Setting this too high when using multiple

+ schedulers could also lead to one scheduler taking all the dag runs

+ leaving no work for the others.

+

+- :ref:`config:scheduler__use_row_level_locking`

+

+ Should the scheduler issue `SELECT ... FOR UPDATE` in relevant queries.

+ If this is set to False then you should not run more than a single

+ scheduler at once

Review comment:

```suggestion

Should the scheduler issue ``SELECT ... FOR UPDATE`` in relevant queries.

If this is set to False then you should not run more than a single

scheduler at once

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] ashb commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

ashb commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503299224

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MaraiDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

+see `MDEV-13115 <https://jira.mariadb.org/browse/MDEV-13115>`_. Multiple schedulers will operate without this

+extension, but instead of skipping over the critical section and carrying on with other scheduling work

+(creating DagRuns, progressing TaskInstances to the scheduled state) they will wait for the critical section

+lock to be released.

+

+.. warning::

+

+ MySQL 5.x also does not support ``SKIP LOCKED`` or ``NOWAIT``, and additionally is more prone to deciding

+ queries are deadlocked, so running with a more than a single scheduler on MySQL 5.x is not supported or

+ recommended.

+

+.. note::

+

+ Microsoft SQLServer has not been tested with HA.

+

+Tuneables

+"""""""""

+

+- :ref:`config:scheduler__max_dagruns_to_create_per_loop`

Review comment:

I'm almost tempted to move the docs out of the config and include them here, or expand on them here and just leave a short version in the config and point at this section from there.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] ashb commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

ashb commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r512588189

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

Review comment:

Hmmm, not sure about this one -- I like the idea, but I think I'd rather the docs be the source of truth rather than linking to the AIP.

I might link to the AIP for now though.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

turbaszek commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503549470

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

Review comment:

```suggestion

Airflow supports running more than one scheduler concurrently -- both for

```

Shorter and will be longer "up to date"

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

github-actions[bot] commented on pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#issuecomment-717275951

[The Workflow run](https://github.com/apache/airflow/actions/runs/331465268) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] github-actions[bot] removed a comment on pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

github-actions[bot] removed a comment on pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#issuecomment-717943828

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] mik-laj commented on pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

mik-laj commented on pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#issuecomment-707367311

Can you also add some documentation on the database setup page? If users know about this requirement in advance, they will be able to select these databases when creating an instance. Otherwise, users will rely on the default values provided by the cloud provider.

https://airflow.readthedocs.io/en/latest/howto/initialize-database.html

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] github-actions[bot] commented on pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

github-actions[bot] commented on pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#issuecomment-717943828

[The Workflow run](https://github.com/apache/airflow/actions/runs/333715165) is cancelling this PR. It has some failed jobs matching ^Pylint$,^Static checks,^Build docs$,^Spell check docs$,^Backport packages$,^Provider packages,^Checks: Helm tests$,^Test OpenAPI*.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

kaxil commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503354926

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MaraiDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

+see `MDEV-13115 <https://jira.mariadb.org/browse/MDEV-13115>`_. Multiple schedulers will operate without this

+extension, but instead of skipping over the critical section and carrying on with other scheduling work

+(creating DagRuns, progressing TaskInstances to the scheduled state) they will wait for the critical section

+lock to be released.

+

+.. warning::

+

+ MySQL 5.x also does not support ``SKIP LOCKED`` or ``NOWAIT``, and additionally is more prone to deciding

+ queries are deadlocked, so running with a more than a single scheduler on MySQL 5.x is not supported or

+ recommended.

+

+.. note::

+

+ Microsoft SQLServer has not been tested with HA.

+

+Tuneables

+"""""""""

+

+- :ref:`config:scheduler__max_dagruns_to_create_per_loop`

Review comment:

I will vote for: Expanding them here and just leave a short version in the config and point at this section from there.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] ashb commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

ashb commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r512587355

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MaraiDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

+see `MDEV-13115 <https://jira.mariadb.org/browse/MDEV-13115>`_. Multiple schedulers will operate without this

+extension, but instead of skipping over the critical section and carrying on with other scheduling work

+(creating DagRuns, progressing TaskInstances to the scheduled state) they will wait for the critical section

+lock to be released.

+

+.. warning::

+

+ MySQL 5.x also does not support ``SKIP LOCKED`` or ``NOWAIT``, and additionally is more prone to deciding

+ queries are deadlocked, so running with a more than a single scheduler on MySQL 5.x is not supported or

+ recommended.

+

+.. note::

+

+ Microsoft SQLServer has not been tested with HA.

+

+Tuneables

+"""""""""

+

+- :ref:`config:scheduler__max_dagruns_to_create_per_loop`

Review comment:

Done

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] potiuk commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

potiuk commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r511504146

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MaraiDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

Review comment:

I think we should also update information about supported versions in https://github.com/apache/airflow#requirements - with some * disclaimer that the HA Scheduler works in MySQL 8 but both Maria DB and Mysql 5.7 have limitations.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

kaxil commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503353612

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

Review comment:

```suggestion

using a different database please read on.

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] ashb commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

ashb commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r512558521

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

Review comment:

"simply" and "just" are bad words to use in cos `simple` -> `you can`

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] kaxil commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

kaxil commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r512636397

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,99 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running More Than One Scheduler

+-------------------------------

+

+.. versionadded: 2.0.0

+

+Airflow supports running more than one scheduler concurrently -- both for performance reasons and for

+resiliency.

+

+Overview

+""""""""

+

+The :abbr:`HA (highly available)` scheduler is designed to take advantage of the existing metadata database.

+This was primarily done for operational simplicity: every component already has to speak to this DB, and by

+not using direct communication or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another

+consensus tool (Apache Zookeeper, or Consul for instance) we have kept the "operational surface area" to a

+minimum.

+

+The scheduler now uses the serialized DAG representation to make its scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+.. _scheduler:ha:db_requirements:

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- you can start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on.

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MariaDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

+see `MDEV-13115 <https://jira.mariadb.org/browse/MDEV-13115>`_. Multiple schedulers will operate without this

+extension, but instead of skipping over the critical section and carrying on with other scheduling work

+(creating DagRuns, progressing TaskInstances to the scheduled state) they will wait for the critical section

+lock to be released.

+

+.. warning::

+

+ MySQL 5.x also does not support ``SKIP LOCKED`` or ``NOWAIT``, and additionally is more prone to deciding

+ queries are deadlocked, so running with more than a single scheduler on MySQL 5.x is not supported or

+ recommended.

+

+.. note::

+

+ Microsoft SQLServer has not been tested with HA.

+

+.. _scheduler:ha:tunables:

+

+Scheduler Tuneables

+"""""""""""""""""""

+

+The following config settings can be used to control aspects of the Scheduler HA loop.

+

+- :ref:`config:scheduler__max_dagruns_to_create_per_loop`

+

+ This changes the number of dags that are locked by each scheduler when

+ creating dag runs. One possible reason for setting this lower is if you

+ have huge dags and are running multiple schedules, you won't want one

+ scheduler to do all the work.

+

+- :ref:`config:scheduler__max_dagruns_per_loop_to_schedule`

+

+ How many DagRuns should a scheduler examine (and lock) when scheduling

+ and queuing tasks. Increasing this limit will allow more throughput for

+ smaller DAGs but will likely slow down throughput for larger (>500

+ tasks for example) DAGs. Setting this too high when using multiple

+ schedulers could also lead to one scheduler taking all the dag runs

+ leaving no work for the others.

+

+- :ref:`config:scheduler__use_row_level_locking`

+

+ Should the scheduler issue `SELECT ... FOR UPDATE` in relevant queries.

+ If this is set to False then you should not run more than a single

+ scheduler at once

Review comment:

```suggestion

Should the scheduler issue ``SELECT ... FOR UPDATE`` in relevant queries.

If this is set to ``False`` then you should not run more than a single

scheduler at once.

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

turbaszek commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503549978

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

Review comment:

```suggestion

The highly available (HA) scheduler is designed to take advantage of the existing metadata database. This was primarily done for

```

Not every user have to be familiar with this acronym

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] richard-williamson commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

richard-williamson commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r511502978

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

+

+The scheduler now uses the serialized DAG representation to make it's scheduling decisions and the rough

+outline of the scheduling loop is:

+

+- Check for any DAGs needing a new DagRun, and create them

+- Examine a batch of DagRuns for schedulable TaskInstances or complete DagRuns

+- Select schedulable TaskInstances, and whilst respecting Pool limits and other concurrency limits, enqueue

+ them for execution

+

+This does however place some requirements on the Database.

+

+Database Requirements

+"""""""""""""""""""""

+

+The short version is that users of PostgreSQL 9.6+ or MySQL 8+ are all ready to go -- simple start running as

+many copies of the scheduler as you like -- there is no further set up or config options needed. If you are

+using a different database please read on

+

+To maintain performance and throughput there is one part of the scheduling loop that does a number of

+calculations in memory (because having to round-trip to the DB for each TaskInstance would be too slow) so we

+need to ensure that only a single scheduler is in this critical section at once - otherwise limits would not

+be correctly respected. To achieve this we use database row-level locks (using ``SELECT ... FOR UPDATE``).

+

+This critical section is where TaskInstances go from scheduled state and are enqueued to the executor, whilst

+ensuring the various concurrency and pool limits are respected. The critical section is obtained by asking for

+a row-level write lock on every row of the Pool table (roughly equivalent to ``SELECT * FROM slot_pool FOR

+UPDATE NOWAIT`` but the exact query is slightly different).

+

+The following databases are fully supported and provide an "optimal" experience:

+

+- PostgreSQL 9.6+

+- MySQL 8+

+

+MaraiDB will have slightly "degraded" performance, as it does not implement the ``SKIP LOCKED`` or ``NOWAIT`` SQL clauses --

Review comment:

Maybe worth clarifying MariaDB can only be ran with one scheduler currently here (per https://apache-airflow.slack.com/archives/CCVDRN0F9/p1603564032117100?thread_ts=1603544070.099000&cid=CCVDRN0F9)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] ashb commented on pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

ashb commented on pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#issuecomment-717189913

> Can you also add some documentation on the database setup page? If users know about this requirement in advance, they will be able to select these databases when creating an instance. Otherwise, users will rely on the default values provided by the cloud provider.

> https://airflow.readthedocs.io/en/latest/howto/initialize-database.html

I've cross-linked it from there

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

turbaszek commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503550725

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

Review comment:

```suggestion

Consul for instance) we have kept the "operational surface area" to a minimum. For more information on architectural choices check `Airflow Improvement Proposal 15 <https://cwiki.apache.org/confluence/pages/viewpage.action?pageId=103092651>`_

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] turbaszek commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

turbaszek commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r503550725

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+

+Running more than one scheduler

+-------------------------------

+

+Airflow 2.0 is the first release that officially supports running more one scheduler concurrently -- both for

+performance reasons and for resiliency.

+

+Overview

+""""""""

+

+The HA scheduler is written to take advantage of the existing metadata database. This was primarily done for

+operational simplicity: every component already has to speak to this DB, and by not using direct communication

+or consensus algorithm between schedulers (Raft, Paxos, etc.) nor another consensus tool (Apache Zookeeper, or

+Consul for instance) we have kept the "operational surface area" to a minimum.

Review comment:

```suggestion

Consul for instance) we have kept the "operational surface area" to a minimum. For more information on architectural choices check `Airflow Improvement Proposal 15 <https://cwiki.apache.org/confluence/pages/viewpage.action?pageId=103092651>`_.

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] ashb merged pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

ashb merged pull request #11467:

URL: https://github.com/apache/airflow/pull/11467

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] ashb commented on a change in pull request #11467: Add docs about Scheduler HA, how to use it and DB requirements

Posted by GitBox <gi...@apache.org>.

ashb commented on a change in pull request #11467:

URL: https://github.com/apache/airflow/pull/11467#discussion_r512602214

##########

File path: docs/scheduler.rst

##########

@@ -65,3 +65,72 @@ If you want to use 'external trigger' to run future-dated execution dates, set `

This only has effect if your DAG has no ``schedule_interval``.

If you keep default ``allow_trigger_in_future = False`` and try 'external trigger' to run future-dated execution dates,

the scheduler won't execute it now but the scheduler will execute it in the future once the current date rolls over to the execution date.

+