Posted to issues@bookkeeper.apache.org by GitBox <gi...@apache.org> on 2022/05/04 18:12:05 UTC

[GitHub] [bookkeeper] RaulGracia opened a new issue, #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

RaulGracia opened a new issue, #3258:

URL: https://github.com/apache/bookkeeper/issues/3258

**BUG REPORT**

***Describe the bug***

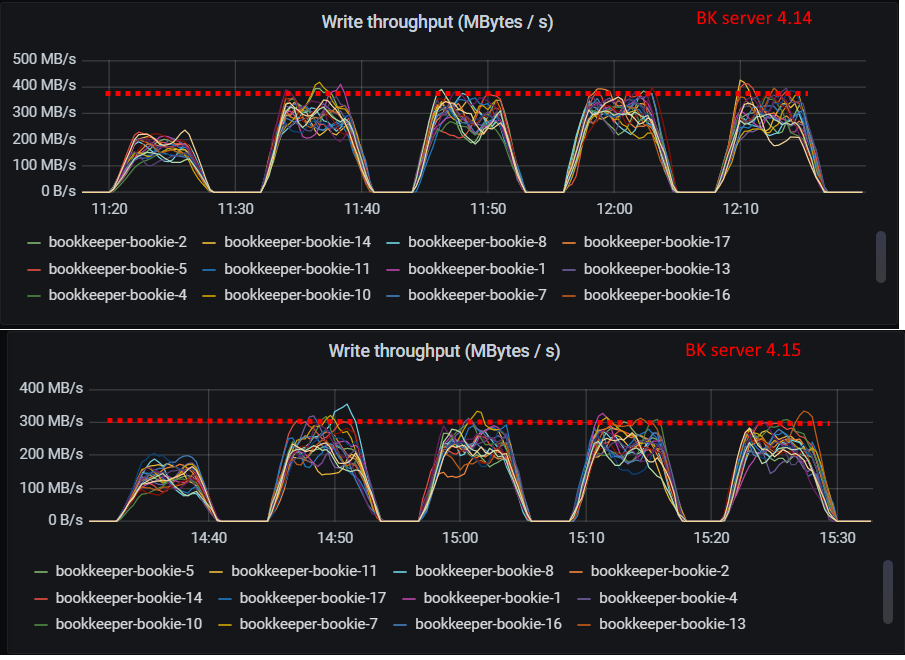

We ran performance regression tests on the Bookkeeper 4.15 RC and compared it with Bookkeeper 4.14. We detected a regression of around 20% in write throughput in the new release candidate (the same regression may also affect current master).

We have used the following configurations:

- BK client 4.14 - BK server 4.14

- BK client 4.15 - BK server 4.14

- BK client 4.15 - BK server 4.15

The problem is only visible when using the Bookkeeper 4.15 server, so we can rule out the client as the cause. The benchmark consists of a write-only workload against a Pravega (https://github.com/pravega/pravega) cluster using the above combinations of Bookkeeper client and server, keeping the same Pravega and Bookkeeper configuration across tests.

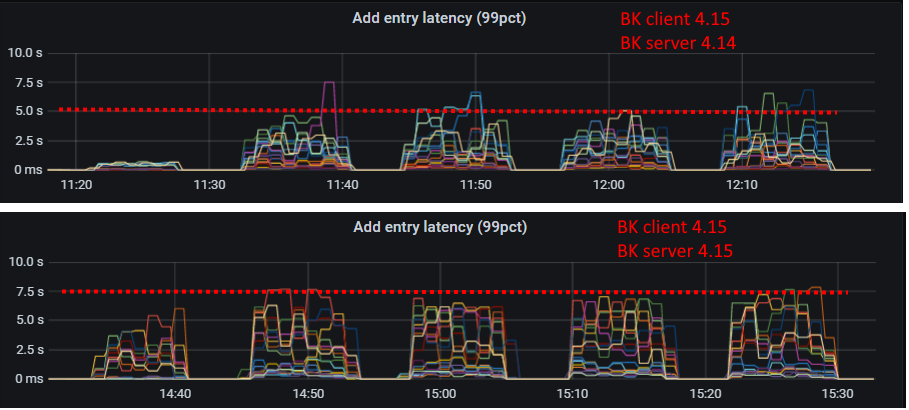

- Internal BK write latency metrics chart shows higher latency for Bookkeeper 4.15:

- On BK drives stats we observed much lower IOPS for BK server 4.15, at the same time the average write size on the drive is similar for both BK server versions:

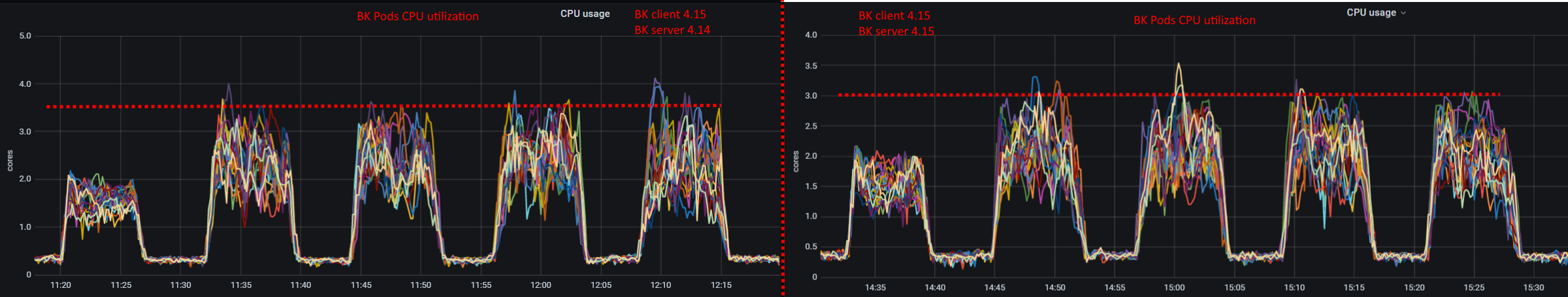

- BK Pods CPU utilization is a little bit higher for BK 4.14 because of higher performance (it is expected):

***To Reproduce***

We ran the experiments on a high-end cluster (Kubernetes, 18 Bookies (1 journal/1 ledger drive), NVMe drives).

However, the performance problem is likely also visible in much smaller-scale tests.

***Expected behavior***

Bookkeeper 4.15 should be at least as fast as Bookkeeper 4.14.

***Screenshots***

Posted above.

***Additional context***

We will start searching for the commit that could have caused the regression. We will also provide profiling information to help fix the performance problem.

Credits to @OlegKashtanov.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@bookkeeper.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [bookkeeper] eolivelli commented on issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

eolivelli commented on issue #3258:

URL: https://github.com/apache/bookkeeper/issues/3258#issuecomment-1117743865

Are you using a Docker image built from the BK repo?

Or are you using some BK package built from Pravega?

I am asking because the set of dependencies may differ; Netty in particular is often a problem.

[GitHub] [bookkeeper] RaulGracia closed issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

RaulGracia closed issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

URL: https://github.com/apache/bookkeeper/issues/3258

[GitHub] [bookkeeper] eolivelli commented on issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

eolivelli commented on issue #3258:

URL: https://github.com/apache/bookkeeper/issues/3258#issuecomment-1117751575

Can you share the BK configuration?

Is it exactly the same with 4.14 and 4.15?

Are you inheriting defaults or setting all the values explicitly?

[GitHub] [bookkeeper] dave2wave commented on issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

dave2wave commented on issue #3258:

URL: https://github.com/apache/bookkeeper/issues/3258#issuecomment-1118033982

I ran the DataStax version of the OMB with two different Pulsar clusters based on 2.10: one with BookKeeper 4.15.0 and the other with 4.14.5, using different changes provided by Andrey.

I ran two scenarios: 1 million messages per second on 10 topics with 3 partitions and 100-byte messages, and 400K messages per second on 10 topics with 3 partitions and 1K messages. Both tests ran in AWS us-west-2a and were nearly identical.
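As a rough sanity check on what the two scenarios above ask of the bookies, the offered publish rate can be worked out from the message rate and message size. This is only a sketch: it assumes the quoted rates are aggregate across all topics, that "1K" means 1024 bytes, and it ignores replication and protocol overhead. The function name is made up for illustration.

```python
def offered_load_mb_per_s(messages_per_second: int, message_bytes: int) -> float:
    """Aggregate publish rate in decimal megabytes per second (payload only)."""
    return messages_per_second * message_bytes / 1_000_000


# Scenario 1: 1 million messages per second, 100-byte messages.
small_messages = offered_load_mb_per_s(1_000_000, 100)

# Scenario 2: 400K messages per second, assuming "1K" means 1024 bytes.
large_messages = offered_load_mb_per_s(400_000, 1024)

print(f"100-byte scenario: {small_messages:.1f} MB/s")
print(f"1K scenario:       {large_messages:.1f} MB/s")
```

Under these assumptions the 1K scenario pushes roughly four times the payload bytes of the 100-byte scenario, even though its message rate is lower.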

[GitHub] [bookkeeper] merlimat commented on issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

merlimat commented on issue #3258:

URL: https://github.com/apache/bookkeeper/issues/3258#issuecomment-1118057912

Thanks @dave2wave! It looks like a very tiny regression on 99pct (~0.2 ms), which would be interesting to understand, but I don't think it's really a blocker for the release.

Did you also run a max-throughput test?

[GitHub] [bookkeeper] OlegKashtanov commented on issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

OlegKashtanov commented on issue #3258:

URL: https://github.com/apache/bookkeeper/issues/3258#issuecomment-1118235944

> Can you include the throughput charts on the bookies too?

I've added the bookies' write throughput charts. We can observe the same throughput difference as on the Pravega side.

> Are you using a docker image built from Bk repo? Or are you using some BK package built from Pravega?

We build the BK package from the code in the apache/bookkeeper repo. We then build the BK Docker image using our own Dockerfile with a different base image, in our case Alpine.

> Can you share the bk configuration? Is it exactly the same with 4.14 and 4.15?

We deploy BK with https://github.com/pravega/bookkeeper-operator. We override some of the options via the operator, but at that level the 4.14 and 4.15 deployments differ only in the BK Docker image. We checked these BK configuration options in the logs and all of them were applied by both BK servers.

But today we compared the bk_server.conf files for 4.14 and 4.15 and found several newly added options in 4.15 that could affect our results:

```
+# Set the Channel Provider for journal.
+# The default value is
+# journalChannelProvider=org.apache.bookkeeper.bookie.DefaultFileChannelProvider
...
+# Max number of concurrent requests in garbage collection of overreplicated ledgers.
+# gcOverreplicatedLedgerMaxConcurrentRequests=1000
...
+# True if bookie should persist entrylog file metadata and avoid in-memory object allocation
+gcEntryLogMetadataCacheEnabled=false
+# Directory to persist Entrylog metadata if gcPersistentEntrylogMetadataMapEnabled is true
+# [Default: it creates a sub-directory under a first available base ledger directory with
+# name "entrylogIndexCache"]
+# gcEntryLogMetadataCachePath=
...
+# Semaphore limit of getting ledger from zookeeper. Used to throttle the zookeeper client request operation
+# sending to Zookeeper server. Default value is 500
+# auditorMaxNumberOfConcurrentOpenLedgerOperations=500
...
+# Semaphore limit of getting ledger from zookeeper. Used to throttle the zookeeper client request operation
+# sending to Zookeeper server. Default value is 500
+# auditorMaxNumberOfConcurrentOpenLedgerOperations=500
```
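A comparison like the one above can also be done on the effective settings rather than on the raw files. The following is a minimal sketch, not part of BookKeeper: `parse_conf` and `diff_conf` are hypothetical helpers, and the `journalDirectory` value in the example is a placeholder. It only looks at active `key=value` lines, so commented-out defaults such as `# journalChannelProvider=...` are ignored, and changes to built-in defaults between versions would not show up.

```python
from typing import Optional


def parse_conf(text: str) -> dict[str, str]:
    """Return the active key=value settings, skipping comments and blank lines."""
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()
    return settings


def diff_conf(
    old: dict[str, str], new: dict[str, str]
) -> dict[str, tuple[Optional[str], Optional[str]]]:
    """Map each differing key to (old_value, new_value); None means 'not set'."""
    return {
        key: (old.get(key), new.get(key))
        for key in sorted(old.keys() | new.keys())
        if old.get(key) != new.get(key)
    }


# Illustrative inputs only; real use would read the two bk_server.conf files.
conf_414 = parse_conf("journalDirectory=/bk/journal\n")
conf_415 = parse_conf(
    "journalDirectory=/bk/journal\n"
    "# gcOverreplicatedLedgerMaxConcurrentRequests=1000\n"
    "gcEntryLogMetadataCacheEnabled=false\n"
)

for key, (before, after) in diff_conf(conf_414, conf_415).items():
    print(f"{key}: {before!r} -> {after!r}")
```

With the illustrative inputs, only `gcEntryLogMetadataCacheEnabled` is reported, since it is the only active setting that differs between the two texts.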

We'll add flame graphs, which could help identify the root cause IMO.

[GitHub] [bookkeeper] RaulGracia commented on issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

RaulGracia commented on issue #3258:

URL: https://github.com/apache/bookkeeper/issues/3258#issuecomment-1120779745

After multiple experiments, @OlegKashtanov has concluded that the throughput drop does not appear consistently with the Bookkeeper commit being used, so it may be related to our internal deployment/environment. For this reason, I am closing this issue and will reopen it if we identify a consistent Bookkeeper-related performance problem. Thanks to everyone who helped look into this issue.

[GitHub] [bookkeeper] merlimat commented on issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

merlimat commented on issue #3258:

URL: https://github.com/apache/bookkeeper/issues/3258#issuecomment-1117677048

@RaulGracia Thanks for looking into this.

For the latency part, I think 5 secs for 99pct is already a **huge** number, so I wouldn't put too much weight on 5 secs vs 7.5 secs. It would be interesting to see it in a more reasonable setting with <5ms 99pct and see the comparison of 4.14 vs 4.15.

Can you include the throughput charts on the bookies too?

For profiling, it would be very helpful to get a flame graph (https://github.com/jvm-profiling-tools/async-profiler) with the per-thread breakdown.

[GitHub] [bookkeeper] dave2wave commented on issue #3258: Bookkeeper 4.15 shows a write throughput drop of ~20% compared to Bookkeeper 4.14

Posted by GitBox <gi...@apache.org>.

dave2wave commented on issue #3258:

URL: https://github.com/apache/bookkeeper/issues/3258#issuecomment-1118061042

The 1K test is the reverse. I really don't think these are too different. I've left the clusters up and will do any suggested workloads in the morning. Feel free to provide any particular workload configuration.
