Posted to issues@flink.apache.org by GitBox <gi...@apache.org> on 2022/04/28 02:44:51 UTC

[GitHub] [flink-web] JingsongLi opened a new pull request, #531: Add Table Store 0.1.0 release

JingsongLi opened a new pull request, #531:

URL: https://github.com/apache/flink-web/pull/531

- Add Blog

- Add table store to download page

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@flink.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [flink-web] openinx commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

openinx commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866528803

##########

_config.yml:

##########

@@ -198,6 +198,24 @@ flink_kubernetes_operator_releases:

asc_url: "https://downloads.apache.org/flink/flink-kubernetes-operator-0.1.0/flink-kubernetes-operator-0.1.0-helm.tgz.asc"

sha512_url: "https://downloads.apache.org/flink/flink-kubernetes-operator-0.1.0/flink-kubernetes-operator-0.1.0-helm.tgz.sha512"

+flink_table_store_releases:

+ -

+ version_short: "0.1"

+ source_release:

+ name: "Apache Flink Table Store 0.1.0"

+ id: "010-table-store-download-source"

+ flink_version: "1.15.0"

+ url: "https://www.apache.org/dyn/closer.lua/flink/flink-table-store-0.1.0/flink-table-store-0.1.0-src.tgz"

+ asc_url: "https://downloads.apache.org/flink/flink-table-store-0.1.0/flink-table-store-0.1.0-src.tgz.asc"

+ sha512_url: "https://downloads.apache.org/flink/flink-table-store-0.1.0/flink-table-store-0.1.0-src.tgz.sha512"

+ binaries_release:

+ name: "Apache Flink Table Store Binaries 0.1.0"

+ id: "010-kubernetes-operator-download-binaries"

+ flink_version: "1.15.0"

+ url: "https://repo.maven.apache.org/maven2/org/apache/flink/flink-table-store-dist/0.1.0/flink-table-store-dist-0.1.0.jar"

+ asc_url: "https://repo.maven.apache.org/maven2/org/apache/flink/flink-table-store-dist/0.1.0/flink-table-store-dist-0.1.0.jar.asc"

+ sha1_url: "https://repo.maven.apache.org/maven2/org/apache/flink/flink-table-store-dist/0.1.0/flink-table-store-dist-0.1.0.jar.sha1"

Review Comment:

`sha1` or `sha512`? Why does the dist artifact use a different SHA algorithm than the source artifacts?
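
For context on the question above, here is a minimal sketch of how a reader could check a downloaded artifact against either kind of published checksum file. It assumes the checksum file's first whitespace-separated token is the hex digest, which is a common layout but not guaranteed for every host. `file_digest` and `matches_published` are hypothetical helper names, not part of any Flink tooling.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path, algorithm: str) -> str:
    """Return the hex digest of a file, reading in chunks to bound memory use."""
    digest = hashlib.new(algorithm)  # e.g. "sha1" or "sha512"
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path: Path, published: str, algorithm: str) -> bool:
    """Compare a local file with the published checksum text.

    Assumption: the first whitespace-separated token of `published`
    is the hex digest; anything after it (such as a file name) is ignored.
    """
    expected = published.split()[0].lower()
    return file_digest(path, algorithm) == expected
```

The same helper covers both links in the hunk: pass `"sha512"` for the source release and `"sha1"` for the binaries jar, together with the contents of the matching `.sha512` or `.sha1` file.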

[GitHub] [flink-web] JingsongLi commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

JingsongLi commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r867604787

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

+- High understanding bar for users: It’s also not easy for users to understand all the SQL connectors,

+ learn the capabilities and restrictions for each of those. Users may also want to play around with

+ streaming & batch unification, but don't really know how, given the connectors are most of the time different

+ in batch and streaming use cases.

+- Increasing architecture complexity: It’s hard to choose the most suited external systems when the requirements

+ include streaming pipelines, offline batch jobs, ad-hoc queries. Multiple systems will increase the operation

+ and maintenance complexity. Users at least need to coordinate between the queue system and file system of each

+ table, which is error-prone.

+

+The Flink Table Store aims to provide a unified storage abstraction:

+- Table Store provides storage of historical data while providing queue abstraction.

+- Table Store provides competitive historical storage with lake storage capability, using LSM file structure

+ to store data on DFS, providing real-time updates and queries at a lower cost.

+- Table Store coordinates between the queue storage and historical storage, providing hybrid read and write capabilities.

+- Table Store is a storage created for Flink, which satisfies all the concepts of Flink SQL and is the most

+ suitable storage abstraction for Flink.

+

+## Core Features

+

+Flink Table Store supports the following usage:

+- **Streaming Insert**: Write changelog streams, including CDC from the database and streams.

+- **Batch Insert**: Write batch data as offline warehouse, including OVERWRITE support.

+- **Batch/OLAP Query**: Read the snapshot of the storage, efficient querying of real-time data.

+- **Streaming Query**: Read the storage changes, ensure exactly-once consistency.

Review Comment:

I reorganized the wording.

[GitHub] [flink-web] JingsongLi commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

JingsongLi commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r867605695

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

Review Comment:

I think it's better to focus on the problems solved by the current version, so that we avoid setting expectations that would disappoint users. We can have some follow-up plans for the rest.

[GitHub] [flink-web] LadyForest commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

LadyForest commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r865974310

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

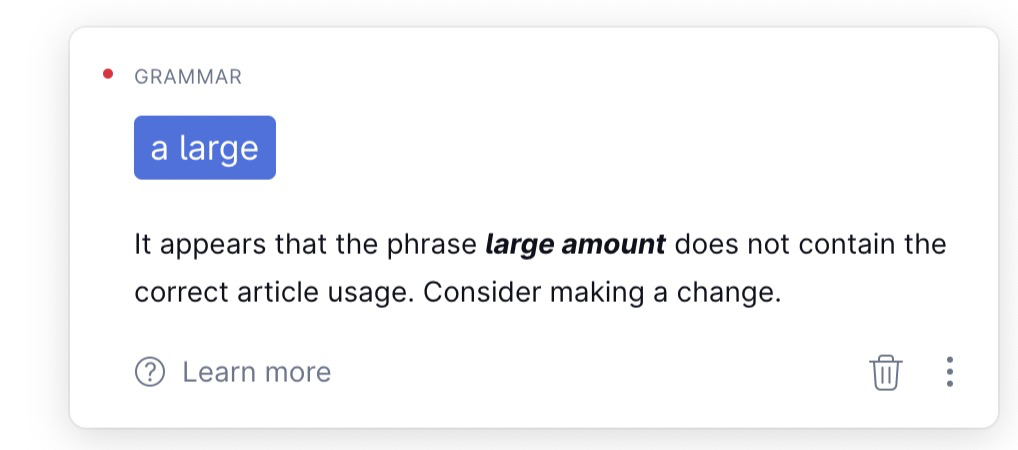

Review Comment:

and a large amount of

Ref: Grammarly

[GitHub] [flink-web] openinx commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

openinx commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866532269

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. But pure computation doesn't bring value, users need

+to combine Flink with some kind of external storage.

+

+For a long time, we found that no external storage can fit Flink's computation model perfectly,

+which brings troubles to users. So Flink Table Store was born, it is a storage built specifically

+for Flink, for big data real-time update scenario. From now on, Flink is no longer just a computing

+engine.

+

+Flink Table Store is a unified streaming and batch table format:

Review Comment:

> Flink Table Store is a unified streaming and batch table format

We are talking about the short-term roadmap, right?

[GitHub] [flink-web] LadyForest commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

LadyForest commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866111227

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. But pure computation doesn't bring value, users need

Review Comment:

> But pure computation doesn't bring value

I'm afraid this wording is not very appropriate. We can highlight the significance of Table Store without stating that pure computation is valueless. What do you think?

[GitHub] [flink-web] JingsongLi commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

JingsongLi commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r867605296

##########

downloads.md:

##########

@@ -162,6 +162,27 @@ This version is compatible with Apache Flink version {{ flink_kubernetes_operato

{% endfor %}

+Apache Flink® Table Store {{ site.FLINK_TABLE_STORE_VERSION_STABLE }} is the latest stable release for the [Flink Table Store](https://github.com/apache/flink-table-store).

+

+{% for flink_table_store_release in site.flink_table_store_releases %}

+

+## {{ flink_table_store_release.source_release.name }}

+

+<p>

+<a href="{{ flink_table_store_release.source_release.url }}" id="{{ flink_table_store_release.source_release.id }}">{{ flink_table_store_release.source_release.name }} Source Release</a>

+(<a href="{{ flink_table_store_release.source_release.asc_url }}">asc</a>, <a href="{{ flink_table_store_release.source_release.sha512_url }}">sha512</a>)

+</p>

+<p>

+<a href="{{ flink_table_store_release.binaries_release.url }}" id="{{ flink_table_store_release.binaries_release.id }}">{{ flink_table_store_release.binaries_release.name }} Binaries Release</a>

+(<a href="{{ flink_table_store_release.binaries_release.asc_url }}">asc</a>, <a href="{{ flink_table_store_release.binaries_release.sha1_url }}">sha1</a>)

+</p>

+

+This version is compatible with Apache Flink version {{ flink_table_store_release.source_release.flink_version }}.

Review Comment:

Just one version

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

+- High understanding bar for users: It’s also not easy for users to understand all the SQL connectors,

+ learn the capabilities and restrictions for each of those. Users may also want to play around with

+ streaming & batch unification, but don't really know how, given the connectors are most of the time different

+ in batch and streaming use cases.

+- Increasing architecture complexity: It’s hard to choose the most suited external systems when the requirements

+ include streaming pipelines, offline batch jobs, ad-hoc queries. Multiple systems will increase the operation

+ and maintenance complexity. Users at least need to coordinate between the queue system and file system of each

+ table, which is error-prone.

+

+The Flink Table Store aims to provide a unified storage abstraction:

+- Table Store provides storage of historical data while providing queue abstraction.

+- Table Store provides competitive historical storage with lake storage capability, using LSM file structure

+ to store data on DFS, providing real-time updates and queries at a lower cost.

Review Comment:

I will add cloud storage

[GitHub] [flink-web] openinx commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

openinx commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r867594000

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

Review Comment:

I think there is another critical benefit that is not mentioned. The newly introduced Flink unified table storage unifies the real-time physical data set and the batch offline physical data set into a single physical data set. In the old architecture, we have to keep the real-time data separate from the batch offline data, because they usually don't share the same table format and there is no practical approach to flush the real-time serving data into the batch offline table format.

In the new architecture, we are trying to share the same data set for both real-time data and batch offline data, and the real-time data will be flushed to batch offline data automatically as time advances. The batch offline data can also be accelerated for OLAP queries. The key benefit of the new architecture is that we don't need to maintain two different data sets for real-time OLAP queries and batch offline queries, which saves a lot of ETL processing to transform data between those two kinds of data warehouse.

[GitHub] [flink-web] LadyForest commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

LadyForest commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866066641

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. But pure computation doesn't bring value, users need

+to combine Flink with some kind of external storage.

+

+For a long time, we found that no external storage can fit Flink's computation model perfectly,

+which brings troubles to users. So Flink Table Store was born, it is a storage built specifically

+for Flink, for big data real-time update scenario. From now on, Flink is no longer just a computing

+engine.

+

+Flink Table Store is a unified streaming and batch table format:

+- As the storage of Flink, it first provides the capability of Queue.

+- On top of the Queue capability, it precipitates historical data to data lakes.

+- The data on data lakes can be updated and analyzed in near real-time.

+

+## Core Features

+

+Flink Table Store supports the following usage:

+- **Streaming Insert**: Write changelog streams, including CDC from database and streams.

+- **Batch Insert**: Write batch data as offline warehouse, including OVERWRITE support.

+- **Batch/OLAP Query**: Read snapshot of the storage, efficient querying of real-time data.

Review Comment:

Nit: "the snapshot"

[GitHub] [flink-web] LadyForest commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

LadyForest commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r865974310

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

Review Comment:

"and a large amount of"

[GitHub] [flink-web] openinx commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

openinx commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866533145

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. But pure computation doesn't bring value, users need

+to combine Flink with some kind of external storage.

+

+For a long time, we found that no external storage can fit Flink's computation model perfectly,

+which brings troubles to users. So Flink Table Store was born, it is a storage built specifically

+for Flink, for big data real-time update scenario. From now on, Flink is no longer just a computing

+engine.

+

+Flink Table Store is a unified streaming and batch table format:

+- As the storage of Flink, it first provides the capability of Queue.

+- On top of the Queue capability, it precipitates historical data to data lakes.

+- The data on data lakes can be updated and analyzed in near real-time.

+

+## Core Features

+

+Flink Table Store supports the following usage:

+- **Streaming Insert**: Write changelog streams, including CDC from database and streams.

+- **Batch Insert**: Write batch data as offline warehouse, including OVERWRITE support.

+- **Batch/OLAP Query**: Read snapshot of the storage, efficient querying of real-time data.

+- **Streaming Query**: Read changes of the storage, ensure exactly-once consistency.

+

+Flink Table Store uses the following technologies to support the above user usages:

+- Hybrid Storage: Integrating Apache Kafka to achieve real-time stream computation.

+- LSM Structure: For large amount of data updates and high performance query.

+- Columnar File Format: Use Apache ORC to support efficient querying.

+- Lake Storage: Metadata and data on DFS and Object Store.

+

+Many thanks for the inspiration of the following systems: Apache Iceberg, RocksDB.

Review Comment:

By the way, could you please add the official links for those two projects? Thanks.

[GitHub] [flink-web] JingsongLi commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

JingsongLi commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r867585779

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

+- High understanding bar for users: It’s also not easy for users to understand all the SQL connectors,

+ learn the capabilities and restrictions for each of those. Users may also want to play around with

+ streaming & batch unification, but don't really know how, given the connectors are most of the time different

+ in batch and streaming use cases.

+- Increasing architecture complexity: It’s hard to choose the most suited external systems when the requirements

+ include streaming pipelines, offline batch jobs, ad-hoc queries. Multiple systems will increase the operation

+ and maintenance complexity. Users at least need to coordinate between the queue system and file system of each

+ table, which is error-prone.

Review Comment:

> I share the same feeling, and maybe adding a picture to depict the (better, easier, cleaner - as indicated here) architecture with the flink table store solution could help readers to understand.

> I also have the same feeling the announcement misses a picture to explain the position and capability of the table store.

We should do this.

[GitHub] [flink-web] LadyForest commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

LadyForest commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866034934

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. But pure computation doesn't bring value, users need

+to combine Flink with some kind of external storage.

+

+For a long time, we found that no external storage can fit Flink's computation model perfectly,

+which brings troubles to users. So Flink Table Store was born, it is a storage built specifically

+for Flink, for big data real-time update scenario. From now on, Flink is no longer just a computing

Review Comment:

Nit: "scenarios"

[GitHub] [flink-web] openinx commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

openinx commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866527445

##########

_config.yml:

##########

@@ -198,6 +198,24 @@ flink_kubernetes_operator_releases:

asc_url: "https://downloads.apache.org/flink/flink-kubernetes-operator-0.1.0/flink-kubernetes-operator-0.1.0-helm.tgz.asc"

sha512_url: "https://downloads.apache.org/flink/flink-kubernetes-operator-0.1.0/flink-kubernetes-operator-0.1.0-helm.tgz.sha512"

+flink_table_store_releases:

+ -

+ version_short: "0.1"

+ source_release:

+ name: "Apache Flink Table Store 0.1.0"

+ id: "010-table-store-download-source"

+ flink_version: "1.15.0"

+ url: "https://www.apache.org/dyn/closer.lua/flink/flink-table-store-0.1.0/flink-table-store-0.1.0-src.tgz"

+ asc_url: "https://downloads.apache.org/flink/flink-table-store-0.1.0/flink-table-store-0.1.0-src.tgz.asc"

+ sha512_url: "https://downloads.apache.org/flink/flink-table-store-0.1.0/flink-table-store-0.1.0-src.tgz.sha512"

Review Comment:

I see those links are returning `404 Not Found`. Does this mean we leave the links here until the packages have been released to the Apache Maven repository? (I see we are still voting on the release on the mailing list.)

[GitHub] [flink-web] LadyForest commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

LadyForest commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866015165

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. But pure computation doesn't bring value, users need

+to combine Flink with some kind of external storage.

+

+For a long time, we found that no external storage can fit Flink's computation model perfectly,

+which brings troubles to users. So Flink Table Store was born, it is a storage built specifically

+for Flink, for big data real-time update scenario. From now on, Flink is no longer just a computing

+engine.

+

+Flink Table Store is a unified streaming and batch table format:

+- As the storage of Flink, it first provides the capability of Queue.

+- On top of the Queue capability, it precipitates historical data to data lakes.

+- The data on data lakes can be updated and analyzed in near real-time.

+

+## Core Features

+

+Flink Table Store supports the following usage:

+- **Streaming Insert**: Write changelog streams, including CDC from database and streams.

+- **Batch Insert**: Write batch data as offline warehouse, including OVERWRITE support.

+- **Batch/OLAP Query**: Read snapshot of the storage, efficient querying of real-time data.

+- **Streaming Query**: Read changes of the storage, ensure exactly-once consistency.

+

+Flink Table Store uses the following technologies to support the above user usages:

+- Hybrid Storage: Integrating Apache Kafka to achieve real-time stream computation.

+- LSM Structure: For large amount of data updates and high performance query.

Review Comment:

Nit: "a large number of" and "high-performance"

[GitHub] [flink-web] JingsongLi commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

JingsongLi commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r867604541

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

Review Comment:

I will delete this sentence.

[GitHub] [flink-web] wuchong commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

wuchong commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r867366040

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

+- High understanding bar for users: It’s also not easy for users to understand all the SQL connectors,

+ learn the capabilities and restrictions for each of those. Users may also want to play around with

+ streaming & batch unification, but don't really know how, given the connectors are most of the time different

+ in batch and streaming use cases.

+- Increasing architecture complexity: It’s hard to choose the most suited external systems when the requirements

+ include streaming pipelines, offline batch jobs, ad-hoc queries. Multiple systems will increase the operation

+ and maintenance complexity. Users at least need to coordinate between the queue system and file system of each

+ table, which is error-prone.

+

+The Flink Table Store aims to provide a unified storage abstraction:

+- Table Store provides storage of historical data while providing queue abstraction.

+- Table Store provides competitive historical storage with lake storage capability, using LSM file structure

+ to store data on DFS, providing real-time updates and queries at a lower cost.

Review Comment:

It sounds like Table Store only supports storing data on DFS and doesn't support object storage.

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

+- High understanding bar for users: It’s also not easy for users to understand all the SQL connectors,

+ learn the capabilities and restrictions for each of those. Users may also want to play around with

+ streaming & batch unification, but don't really know how, given the connectors are most of the time different

+ in batch and streaming use cases.

+- Increasing architecture complexity: It’s hard to choose the most suited external systems when the requirements

+ include streaming pipelines, offline batch jobs, ad-hoc queries. Multiple systems will increase the operation

+ and maintenance complexity. Users at least need to coordinate between the queue system and file system of each

+ table, which is error-prone.

Review Comment:

I also have the same feeling the announcement misses a picture to explain the position and capability of the table store.

[GitHub] [flink-web] LadyForest commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

LadyForest commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r866066641

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,93 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink Community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. But pure computation doesn't bring value, users need

+to combine Flink with some kind of external storage.

+

+For a long time, we found that no external storage can fit Flink's computation model perfectly,

+which brings troubles to users. So Flink Table Store was born, it is a storage built specifically

+for Flink, for big data real-time update scenario. From now on, Flink is no longer just a computing

+engine.

+

+Flink Table Store is a unified streaming and batch table format:

+- As the storage of Flink, it first provides the capability of Queue.

+- On top of the Queue capability, it precipitates historical data to data lakes.

+- The data on data lakes can be updated and analyzed in near real-time.

+

+## Core Features

+

+Flink Table Store supports the following usage:

+- **Streaming Insert**: Write changelog streams, including CDC from database and streams.

+- **Batch Insert**: Write batch data as offline warehouse, including OVERWRITE support.

+- **Batch/OLAP Query**: Read snapshot of the storage, efficient querying of real-time data.

Review Comment:

Nit: "the snapshot", "and efficient querying"

[GitHub] [flink-web] JingsongLi merged pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

JingsongLi merged PR #531:

URL: https://github.com/apache/flink-web/pull/531

[GitHub] [flink-web] carp84 commented on a diff in pull request #531: Add Table Store 0.1.0 release

Posted by GitBox <gi...@apache.org>.

carp84 commented on code in PR #531:

URL: https://github.com/apache/flink-web/pull/531#discussion_r867320198

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

+- High understanding bar for users: It’s also not easy for users to understand all the SQL connectors,

+ learn the capabilities and restrictions for each of those. Users may also want to play around with

+ streaming & batch unification, but don't really know how, given the connectors are most of the time different

+ in batch and streaming use cases.

+- Increasing architecture complexity: It’s hard to choose the most suited external systems when the requirements

+ include streaming pipelines, offline batch jobs, ad-hoc queries. Multiple systems will increase the operation

+ and maintenance complexity. Users at least need to coordinate between the queue system and file system of each

+ table, which is error-prone.

+

+The Flink Table Store aims to provide a unified storage abstraction:

+- Table Store provides storage of historical data while providing queue abstraction.

+- Table Store provides competitive historical storage with lake storage capability, using LSM file structure

+ to store data on DFS, providing real-time updates and queries at a lower cost.

+- Table Store coordinates between the queue storage and historical storage, providing hybrid read and write capabilities.

+- Table Store is a storage created for Flink, which satisfies all the concepts of Flink SQL and is the most

+ suitable storage abstraction for Flink.

+

+## Core Features

+

+Flink Table Store supports the following usage:

+- **Streaming Insert**: Write changelog streams, including CDC from the database and streams.

+- **Batch Insert**: Write batch data as offline warehouse, including OVERWRITE support.

+- **Batch/OLAP Query**: Read the snapshot of the storage, efficient querying of real-time data.

+- **Streaming Query**: Read the storage changes, ensure exactly-once consistency.

+

+Flink Table Store uses the following technologies to support the above user usages:

+- Hybrid Storage: Integrating Apache Kafka to achieve real-time stream computation.

+- LSM Structure: For a large amount of data updates and high performance queries.

+- Columnar File Format: Use Apache ORC to support efficient querying.

+- Lake Storage: Metadata and data on DFS and Object Store.

+

+Many thanks for the inspiration of the following systems: [Apache Iceberg](https://iceberg.apache.org/) and [RocksDB](http://rocksdb.org/).

+

+## Getting started

+

+For a detailed [getting started guide]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/docs/try-table-store/quick-start/) please check the documentation site.

Review Comment:

```suggestion

Please refer to the [getting started guide]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/docs/try-table-store/quick-start/) for more details.

```

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

Review Comment:

The description "unified streaming and batch store" sounds a bit odd to me, and mentioning the data structure (LSM-tree) is too detailed. How about changing it to something like "Flink Table Store is for building dynamic tables for both stream and batch processing in Flink, supporting high speed data ingestion and timely data query"?

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

+- High understanding bar for users: It’s also not easy for users to understand all the SQL connectors,

+ learn the capabilities and restrictions for each of those. Users may also want to play around with

+ streaming & batch unification, but don't really know how, given the connectors are most of the time different

+ in batch and streaming use cases.

+- Increasing architecture complexity: It’s hard to choose the most suited external systems when the requirements

+ include streaming pipelines, offline batch jobs, ad-hoc queries. Multiple systems will increase the operation

+ and maintenance complexity. Users at least need to coordinate between the queue system and file system of each

+ table, which is error-prone.

+

+The Flink Table Store aims to provide a unified storage abstraction:

+- Table Store provides storage of historical data while providing queue abstraction.

+- Table Store provides competitive historical storage with lake storage capability, using LSM file structure

+ to store data on DFS, providing real-time updates and queries at a lower cost.

+- Table Store coordinates between the queue storage and historical storage, providing hybrid read and write capabilities.

+- Table Store is a storage created for Flink, which satisfies all the concepts of Flink SQL and is the most

+ suitable storage abstraction for Flink.

+

+## Core Features

+

+Flink Table Store supports the following usage:

+- **Streaming Insert**: Write changelog streams, including CDC from the database and streams.

+- **Batch Insert**: Write batch data as offline warehouse, including OVERWRITE support.

+- **Batch/OLAP Query**: Read the snapshot of the storage, efficient querying of real-time data.

+- **Streaming Query**: Read the storage changes, ensure exactly-once consistency.

+

+Flink Table Store uses the following technologies to support the above user usages:

+- Hybrid Storage: Integrating Apache Kafka to achieve real-time stream computation.

+- LSM Structure: For a large amount of data updates and high performance queries.

+- Columnar File Format: Use Apache ORC to support efficient querying.

+- Lake Storage: Metadata and data on DFS and Object Store.

Review Comment:

I wonder whether it's necessary to expose the implementation details in the release blog post, especially since the table store is still in preview and the implementation may change in the future.

OTOH, if we think it's still valuable to provide the details here, I would suggest adding "In this preview version" at the beginning of the paragraph.

##########

_posts/2022-05-01-release-table-store-0.1.0.md:

##########

@@ -0,0 +1,110 @@

+---

+layout: post

+title: "Apache Flink Table Store 0.1.0 Release Announcement"

+subtitle: "Unified streaming and batch store for building dynamic tables on Apache Flink."

+date: 2022-05-01T08:00:00.000Z

+categories: news

+authors:

+- Jingsong Lee:

+ name: "Jingsong Lee"

+

+---

+

+The Apache Flink community is pleased to announce the preview release of the

+[Apache Flink Table Store](https://github.com/apache/flink-table-store) (0.1.0).

+

+Flink Table Store is a unified streaming and batch store for building dynamic tables

+on Apache Flink. It uses a full Log-Structured Merge-Tree (LSM) structure for high speed

+and a large amount of data update & query capability.

+

+Please check out the full [documentation]({{site.DOCS_BASE_URL}}flink-table-store-docs-release-0.1/) for detailed information and user guides.

+

+Note: Flink Table Store is still in beta status and undergoing rapid development,

+we do not recommend that you use it directly in a production environment.

+

+## What is Flink Table Store

+

+Open [Flink official website](https://flink.apache.org/), you can see the following line:

+`Apache Flink - Stateful Computations over Data Streams.` Flink focuses on distributed computing,

+which brings real-time big data computing. Users need to combine Flink with some kind of external storage.

+

+The message queue will be used in both source & intermediate stages in streaming pipeline, to guarantee the

+latency stay within seconds. There will also be a real-time OLAP system receiving processed data in streaming

+fashion and serving user’s ad-hoc queries.

+

+Everything works fine as long as users only care about the aggregated results. But when users start to care

+about the intermediate data, they will immediately hit a blocker: Intermediate kafka tables are not queryable.

+

+Therefore, users use multiple systems. Writing to a lake store like Apache Hudi, Apache Iceberg while writing to Queue,

+the lake store keeps historical data at a lower cost.

+

+There are two main issues with doing this:

+- High understanding bar for users: It’s also not easy for users to understand all the SQL connectors,

+ learn the capabilities and restrictions for each of those. Users may also want to play around with

+ streaming & batch unification, but don't really know how, given the connectors are most of the time different

+ in batch and streaming use cases.

+- Increasing architecture complexity: It’s hard to choose the most suited external systems when the requirements

+ include streaming pipelines, offline batch jobs, ad-hoc queries. Multiple systems will increase the operation

+ and maintenance complexity. Users at least need to coordinate between the queue system and file system of each

+ table, which is error-prone.

Review Comment:

I share the same feeling. Adding a picture that depicts the architecture with the Flink Table Store solution (better, easier, cleaner, as indicated here) could help readers understand.
