Posted to commits@zeppelin.apache.org by zj...@apache.org on 2020/05/09 02:12:40 UTC

[zeppelin] branch branch-0.9 updated: [ZEPPELIN-4795]. Update zeppelin tutorial notes

This is an automated email from the ASF dual-hosted git repository.

zjffdu pushed a commit to branch branch-0.9

in repository https://gitbox.apache.org/repos/asf/zeppelin.git

The following commit(s) were added to refs/heads/branch-0.9 by this push:

new 38c2a80 [ZEPPELIN-4795]. Update zeppelin tutorial notes

38c2a80 is described below

commit 38c2a8028154b9c23b887dd06c075d9ceda61137

Author: Jeff Zhang <zj...@apache.org>

AuthorDate: Fri May 8 13:26:48 2020 +0800

[ZEPPELIN-4795]. Update zeppelin tutorial notes

### What is this PR for?

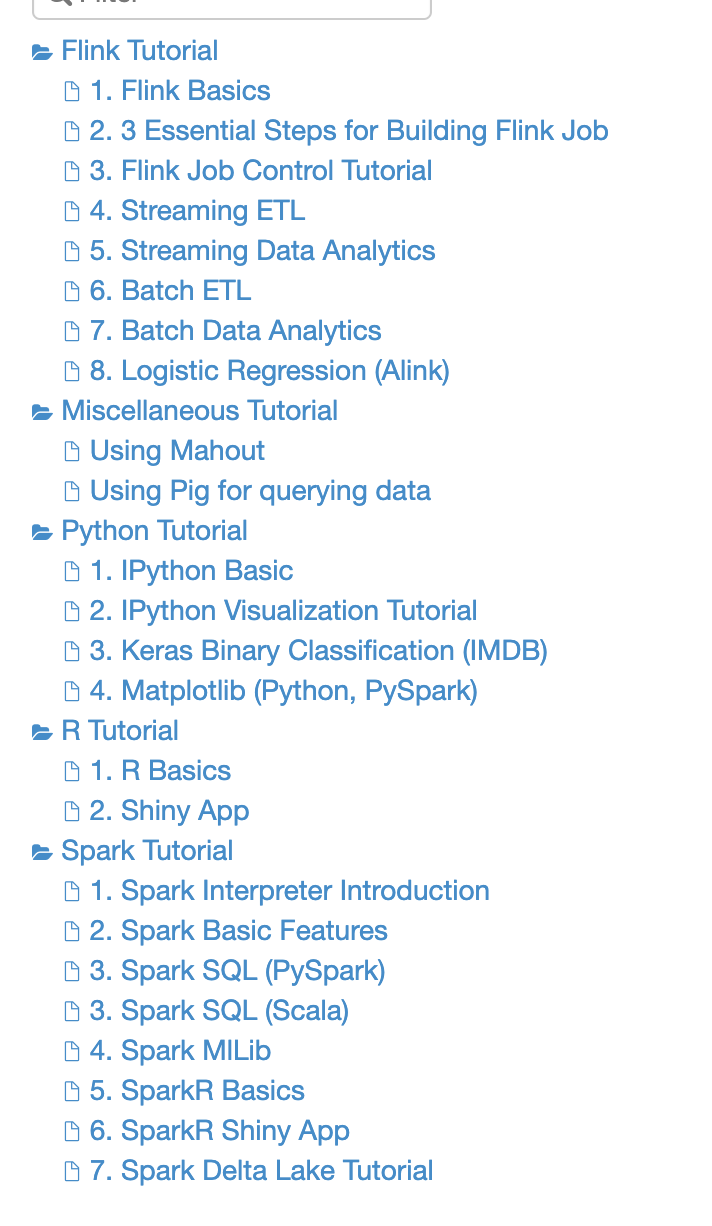

This PR just reorganizes the Zeppelin tutorial notes so that users know which tutorial to read first.

### What type of PR is it?

[Improvement]

### Todos

* [ ] - Task

### What is the Jira issue?

* https://issues.apache.org/jira/browse/ZEPPELIN-4795

### How should this be tested?

* No test needed

### Screenshots (if appropriate)

### Questions:

* Do the license files need an update? No

* Are there breaking changes for older versions? No

* Does this need documentation? No

Author: Jeff Zhang <zj...@apache.org>

Closes #3766 from zjffdu/ZEPPELIN-4795 and squashes the following commits:

801d0fdb5 [Jeff Zhang] [ZEPPELIN-4795]. Update zeppelin tutorial notes

(cherry picked from commit 03211a1a4873f73e86997d7cd6f1f963927aac44)

Signed-off-by: Jeff Zhang <zj...@apache.org>

---

.../Using Mahout_2BYEZ5EVK.zpln | 0

.../Using Pig for querying data_2C57UKYWR.zpln | 0

...DJKFFY.zpln => 1. IPython Basic_2EYDJKFFY.zpln} | 0

... IPython Visualization Tutorial_2F1S9ZY8Z.zpln} | 0

...as Binary Classification (IMDB)_2F2AVWJ77.zpln} | 0

...4. Matplotlib (Python, PySpark)_2C2AUG798.zpln} | 0

...s_2BWJFTXKJ.zpln => 1. R Basics_2BWJFTXKJ.zpln} | 0

..._2EZ66TM57.zpln => 2. Shiny App_2EZ66TM57.zpln} | 0

.... Spark Interpreter Introduction_2F8KN6TKK.zpln | 508 +++++++++++++

...zpln => 2. Spark Basic Features_2A94M5J1Z.zpln} | 57 +-

....zpln => 3. Spark SQL (PySpark)_2EWM84JXA.zpln} | 0

...VR.zpln => 3. Spark SQL (Scala)_2EYUV26VR.zpln} | 0

...EZFM3GJA.zpln => 4. Spark MlLib_2EZFM3GJA.zpln} | 0

...JFTXKM.zpln => 5. SparkR Basics_2BWJFTXKM.zpln} | 0

...4TT.zpln => 6. SparkR Shiny App_2F1CHQ4TT.zpln} | 0

.../7. Spark Delta Lake Tutorial_2F8VDBMMT.zpln | 311 ++++++++

.../Flink Batch Tutorial_2EN1E1ATY.zpln | 602 ---------------

.../Flink Stream Tutorial_2ER62Y5VJ.zpln | 427 -----------

...Using Flink for batch processing_2C35YU814.zpln | 806 ---------------------

19 files changed, 844 insertions(+), 1867 deletions(-)

diff --git a/notebook/Zeppelin Tutorial/Using Mahout_2BYEZ5EVK.zpln b/notebook/Miscellaneous Tutorial/Using Mahout_2BYEZ5EVK.zpln

similarity index 100%

rename from notebook/Zeppelin Tutorial/Using Mahout_2BYEZ5EVK.zpln

rename to notebook/Miscellaneous Tutorial/Using Mahout_2BYEZ5EVK.zpln

diff --git a/notebook/Zeppelin Tutorial/Using Pig for querying data_2C57UKYWR.zpln b/notebook/Miscellaneous Tutorial/Using Pig for querying data_2C57UKYWR.zpln

similarity index 100%

rename from notebook/Zeppelin Tutorial/Using Pig for querying data_2C57UKYWR.zpln

rename to notebook/Miscellaneous Tutorial/Using Pig for querying data_2C57UKYWR.zpln

diff --git a/notebook/Python Tutorial/IPython Basic_2EYDJKFFY.zpln b/notebook/Python Tutorial/1. IPython Basic_2EYDJKFFY.zpln

similarity index 100%

rename from notebook/Python Tutorial/IPython Basic_2EYDJKFFY.zpln

rename to notebook/Python Tutorial/1. IPython Basic_2EYDJKFFY.zpln

diff --git a/notebook/Python Tutorial/IPython Visualization Tutorial_2F1S9ZY8Z.zpln b/notebook/Python Tutorial/2. IPython Visualization Tutorial_2F1S9ZY8Z.zpln

similarity index 100%

rename from notebook/Python Tutorial/IPython Visualization Tutorial_2F1S9ZY8Z.zpln

rename to notebook/Python Tutorial/2. IPython Visualization Tutorial_2F1S9ZY8Z.zpln

diff --git a/notebook/Python Tutorial/Keras Binary Classification (IMDB)_2F2AVWJ77.zpln b/notebook/Python Tutorial/3. Keras Binary Classification (IMDB)_2F2AVWJ77.zpln

similarity index 100%

rename from notebook/Python Tutorial/Keras Binary Classification (IMDB)_2F2AVWJ77.zpln

rename to notebook/Python Tutorial/3. Keras Binary Classification (IMDB)_2F2AVWJ77.zpln

diff --git a/notebook/Python Tutorial/Matplotlib (Python, PySpark)_2C2AUG798.zpln b/notebook/Python Tutorial/4. Matplotlib (Python, PySpark)_2C2AUG798.zpln

similarity index 100%

rename from notebook/Python Tutorial/Matplotlib (Python, PySpark)_2C2AUG798.zpln

rename to notebook/Python Tutorial/4. Matplotlib (Python, PySpark)_2C2AUG798.zpln

diff --git a/notebook/R Tutorial/R Basics_2BWJFTXKJ.zpln b/notebook/R Tutorial/1. R Basics_2BWJFTXKJ.zpln

similarity index 100%

rename from notebook/R Tutorial/R Basics_2BWJFTXKJ.zpln

rename to notebook/R Tutorial/1. R Basics_2BWJFTXKJ.zpln

diff --git a/notebook/R Tutorial/Shiny App_2EZ66TM57.zpln b/notebook/R Tutorial/2. Shiny App_2EZ66TM57.zpln

similarity index 100%

rename from notebook/R Tutorial/Shiny App_2EZ66TM57.zpln

rename to notebook/R Tutorial/2. Shiny App_2EZ66TM57.zpln

diff --git a/notebook/Spark Tutorial/1. Spark Interpreter Introduction_2F8KN6TKK.zpln b/notebook/Spark Tutorial/1. Spark Interpreter Introduction_2F8KN6TKK.zpln

new file mode 100644

index 0000000..28dd67c

--- /dev/null

+++ b/notebook/Spark Tutorial/1. Spark Interpreter Introduction_2F8KN6TKK.zpln

@@ -0,0 +1,508 @@

+{

+ "paragraphs": [

+ {

+ "title": "",

+ "text": "%md\n\n# Introduction\n\nThis tutorial is for how to use Spark Interpreter in Zeppelin.\n\n1. Specify `SPARK_HOME` in interpreter setting. If you don\u0027t specify `SPARK_HOME`, Zeppelin will use the embedded spark which can only run in local mode. And some advanced features may not work in this embedded spark.\n2. Specify `master` for spark execution mode.\n * `local[*]` - Driver and Executor would both run in the same host of zeppelin server. It is only for testing [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-05-04 13:44:39.482",

+ "config": {

+ "tableHide": false,

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/markdown",

+ "fontSize": 9.0,

+ "editorHide": true,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003ch1\u003eIntroduction\u003c/h1\u003e\n\u003cp\u003eThis tutorial is for how to use Spark Interpreter in Zeppelin.\u003c/p\u003e\n\u003col\u003e\n\u003cli\u003eSpecify \u003ccode\u003eSPARK_HOME\u003c/code\u003e in interpreter setting. If you don\u0026rsquo;t specify \u003ccode\u003eSPARK_HOME\u003c/code\u003e, Zeppelin will use the embedded spark which can only run in local mode. And some advanced features may not wo [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762311_275695566",

+ "id": "20180530-211919_1936070943",

+ "dateCreated": "2020-04-30 10:46:02.311",

+ "dateStarted": "2020-05-04 13:44:39.482",

+ "dateFinished": "2020-05-04 13:44:39.508",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Use Generic Inline Configuration instead of Interpreter Setting",

+ "text": "%md\n\nCustomizing your spark interpreter is indispensable for Zeppelin Notebook. E.g. You want to add third party jars, change the execution mode, change the number of executors or their memory, etc. You can check this link for all the available [spark configuration](http://spark.apache.org/docs/latest/configuration.html)\nAlthough you can customize these in interpreter setting, it is recommended to do via the generic inline configuration. Because interpreter setting is sha [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-05-04 13:45:44.204",

+ "config": {

+ "tableHide": false,

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/markdown",

+ "fontSize": 9.0,

+ "editorHide": true,

+ "title": true,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eCustomizing your spark interpreter is indispensable for Zeppelin Notebook. E.g. You want to add third party jars, change the execution mode, change the number of executors or their memory, etc. You can check this link for all the available \u003ca href\u003d\"http://spark.apache.org/docs/latest/configuration.html\"\u003espark configuration\u003c/a\u003e\u003cbr /\u003e\nAlthough you can customize these in inter [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762316_737450410",

+ "id": "20180531-100923_1307061430",

+ "dateCreated": "2020-04-30 10:46:02.316",

+ "dateStarted": "2020-05-04 13:45:44.204",

+ "dateFinished": "2020-05-04 13:45:44.221",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Generic Inline Configuration",

+ "text": "%spark.conf\n\nSPARK_HOME \u003cPATH_TO_SPARK_HOME\u003e\n\n# set driver memory to 8g\nspark.driver.memory 8g\n\n# set executor number to be 6\nspark.executor.instances 6\n\n# set executor memory to 4g\nspark.executor.memory 4g\n\n# Any other spark properties can be set here. Here\u0027s the available spark configuration you can set. (http://spark.apache.org/docs/latest/configuration.html)\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 10:56:30.840",

+ "config": {

+ "editorSetting": {

+ "language": "text",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/text",

+ "fontSize": 9.0,

+ "results": {},

+ "enabled": true,

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762316_1311021507",

+ "id": "20180531-101615_648039641",

+ "dateCreated": "2020-04-30 10:46:02.316",

+ "status": "READY"

+ },

+ {

+ "title": "Use Third Party Library",

+ "text": "%md\n\nThere\u0027re 2 ways to add third party libraries.\n\n* `Generic Inline Configuration` It is the recommended way to add third party jars/packages. Use `spark.jars` for adding local jar file and `spark.jars.packages` for adding packages\n* `Interpreter Setting` You can also config `spark.jars` and `spark.jars.packages` in interpreter setting, but since adding third party libraries is usually application specific. It is recommended to use `Generic Inline Configura [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 10:59:35.270",

+ "config": {

+ "tableHide": false,

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/markdown",

+ "fontSize": 9.0,

+ "editorHide": true,

+ "title": true,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eThere\u0026rsquo;re 2 ways to add third party libraries.\u003c/p\u003e\n\u003cul\u003e\n\u003cli\u003e\u003ccode\u003eGeneric Inline Configuration\u003c/code\u003e It is the recommended way to add third party jars/packages. Use \u003ccode\u003espark.jars\u003c/code\u003e for adding local jar file and \u003ccode\u003espark.jars.packages\u003c/code\u003e for adding packages\u003c/li\u003e\n\u003cli\u003e\u003 [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762323_1299339607",

+ "id": "20180530-212309_72587811",

+ "dateCreated": "2020-04-30 10:46:02.323",

+ "dateStarted": "2020-04-30 10:59:35.271",

+ "dateFinished": "2020-04-30 10:59:35.282",

+ "status": "FINISHED"

+ },

+ {

+ "title": "",

+ "text": "%spark.conf\n\n# Must set SPARK_HOME for this example, because it won\u0027t work for Zeppelin\u0027s embedded spark mode. The embedded spark mode doesn\u0027t \n# use spark-submit to launch spark interpreter, so spark.jars and spark.jars.packages won\u0027t take effect. \nSPARK_HOME \u003cPATH_TO_SPARK_HOME\u003e\n\n# set execution mode\nmaster yarn-client\n\n# spark.jars can be used for adding any local jar files into spark interpreter\n# spark.jars \u003cpath_to_local_ [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 11:01:36.681",

+ "config": {

+ "editorSetting": {

+ "language": "text",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "colWidth": 6.0,

+ "editorMode": "ace/mode/text",

+ "fontSize": 9.0,

+ "results": {},

+ "enabled": true,

+ "editorHide": false

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": []

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762323_630094194",

+ "id": "20180530-222209_612020876",

+ "dateCreated": "2020-04-30 10:46:02.324",

+ "status": "READY"

+ },

+ {

+ "title": "",

+ "text": "%spark\n\nimport com.databricks.spark.avro._\n\nval df \u003d spark.read.format(\"com.databricks.spark.avro\").load(\"users.avro\")\ndf.printSchema\n\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 10:46:02.324",

+ "config": {

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "colWidth": 6.0,

+ "editorMode": "ace/mode/scala",

+ "fontSize": 9.0,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "import com.databricks.spark.avro._\ndf: org.apache.spark.sql.DataFrame \u003d [name: string, favorite_color: string ... 1 more field]\n+------+--------------+----------------+\n| name|favorite_color|favorite_numbers|\n+------+--------------+----------------+\n|Alyssa| null| [3, 9, 15, 20]|\n| Ben| red| []|\n+------+--------------+----------------+\n\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762324_60233930",

+ "id": "20180530-222838_1995256600",

+ "dateCreated": "2020-04-30 10:46:02.324",

+ "status": "READY"

+ },

+ {

+ "title": "Enable Hive",

+ "text": "%md\n\nIf you want to work with hive tables, you need to enable hive via the following 2 steps:\n\n1. Set `zeppelin.spark.useHiveContext` to `true`\n2. Put `hive-site.xml` under `SPARK_CONF_DIR` (By default it is the conf folder of `SPARK_HOME`). \n\n**To be noticed**, You can only enable hive when specifying `SPARK_HOME` explicitly. It doesn\u0027t work with zeppelin\u0027s embedded spark.\n\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 10:46:02.324",

+ "config": {

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/scala",

+ "fontSize": 9.0,

+ "editorHide": true,

+ "title": true,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eIf you want to work with hive tables, you need to enable hive via the following 2 steps:\u003c/p\u003e\n\u003col\u003e\n \u003cli\u003eSet \u003ccode\u003ezeppelin.spark.useHiveContext\u003c/code\u003e to \u003ccode\u003etrue\u003c/code\u003e\u003c/li\u003e\n \u003cli\u003ePut \u003ccode\u003ehive-site.xml\u003c/code\u003e under \u003ccode\u003eSPARK_CONF_DIR\u003c/code\u003e (By default it is the conf fold [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762324_975709991",

+ "id": "20180601-095002_1719356880",

+ "dateCreated": "2020-04-30 10:46:02.324",

+ "status": "READY"

+ },

+ {

+ "title": "Code Completion in Scala",

+ "text": "%md\n\nSpark interpreter provides a code completion feature. As long as you type `tab`, code completion will start to work and provide you with a list of candidates. Here\u0027s one screenshot of how it works. \n\n**To be noticed**, code completion only works after spark interpreter is launched. So it will not work when you type code in the first paragraph as the spark interpreter is not launched yet. For me, usually I will run one simple code such as `sc.version` to launch sp [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 11:03:03.127",

+ "config": {

+ "tableHide": false,

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/markdown",

+ "fontSize": 9.0,

+ "editorHide": true,

+ "title": true,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eSpark interpreter provides a code completion feature. As long as you type \u003ccode\u003etab\u003c/code\u003e, code completion will start to work and provide you with a list of candidates. Here\u0026rsquo;s one screenshot of how it works.\u003c/p\u003e\n\u003cp\u003e\u003cstrong\u003eTo be noticed\u003c/strong\u003e, code completion only works after spark interpreter is launched. So it will not work when you typ [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762324_1893956125",

+ "id": "20180531-095404_2000387113",

+ "dateCreated": "2020-04-30 10:46:02.324",

+ "dateStarted": "2020-04-30 11:03:03.136",

+ "dateFinished": "2020-04-30 11:03:03.147",

+ "status": "FINISHED"

+ },

+ {

+ "title": "PySpark",

+ "text": "%md\n\nFor using PySpark, you need to do some other pyspark configuration besides the above spark configuration we mentioned before. The most important property you need to set is python path for both driver and executor. If you hit the following error, it means your python on driver is mismatched with that of executor. In this case you need to check the 2 properties: `PYSPARK_PYTHON` and `PYSPARK_DRIVER_PYTHON`. (You can use `spark.pyspark.python` and `spark.pyspark.driver [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 11:04:18.086",

+ "config": {

+ "tableHide": false,

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/markdown",

+ "fontSize": 9.0,

+ "editorHide": true,

+ "title": true,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eFor using PySpark, you need to do some other pyspark configuration besides the above spark configuration we mentioned before. The most important property you need to set is python path for both driver and executor. If you hit the following error, it means your python on driver is mismatched with that of executor. In this case you need to check the 2 properties: \u003ccode\u003ePYSPARK_PYTHON\u003c/code\u003e a [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762324_1658396672",

+ "id": "20180531-104119_406393728",

+ "dateCreated": "2020-04-30 10:46:02.324",

+ "dateStarted": "2020-04-30 11:04:18.087",

+ "dateFinished": "2020-04-30 11:04:18.098",

+ "status": "FINISHED"

+ },

+ {

+ "title": "",

+ "text": "%spark.conf\n\n# If your python path on driver and executor is the same, then you only need to set PYSPARK_PYTHON\nPYSPARK_PYTHON \u003cpython_path\u003e\nspark.pyspark.python \u003cpython_path\u003e\n\n# You need to set PYSPARK_DRIVER_PYTHON as well if your python path on driver is different from executors.\nPYSPARK_DRIVER_PYTHON \u003cpython_path\u003e\nspark.pyspark.driver.python \u003cpython_path\u003e\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 11:04:52.984",

+ "config": {

+ "editorSetting": {

+ "language": "text",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/text",

+ "fontSize": 9.0,

+ "editorHide": false,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": []

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762324_496073034",

+ "id": "20180531-110822_21877516",

+ "dateCreated": "2020-04-30 10:46:02.324",

+ "status": "READY"

+ },

+ {

+ "title": "Use IPython",

+ "text": "%md\n\nStarting from Zeppelin 0.8.0, `ipython` is integrated into Zeppelin. And `PySparkInterpreter`(`%spark.pyspark`) would use `ipython` if it is available. It is recommended to use `ipython` interpreter as it provides more powerful feature than the old PythonInterpreter. Spark create a new interpreter called `IPySparkInterpreter` (`%spark.ipyspark`) which use IPython underneath. You can use all the `ipython` features in this IPySparkInterpreter. There\u0027s one ipython [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 11:10:07.426",

+ "config": {

+ "tableHide": false,

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/markdown",

+ "fontSize": 9.0,

+ "editorHide": true,

+ "title": true,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eStarting from Zeppelin 0.8.0, \u003ccode\u003eipython\u003c/code\u003e is integrated into Zeppelin. And \u003ccode\u003ePySparkInterpreter\u003c/code\u003e(\u003ccode\u003e%spark.pyspark\u003c/code\u003e) would use \u003ccode\u003eipython\u003c/code\u003e if it is available. It is recommended to use \u003ccode\u003eipython\u003c/code\u003e interpreter as it provides more powerful feature than the old PythonInt [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762324_1303560480",

+ "id": "20180531-104646_1689036640",

+ "dateCreated": "2020-04-30 10:46:02.324",

+ "dateStarted": "2020-04-30 11:10:07.428",

+ "dateFinished": "2020-04-30 11:10:07.436",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Enable Impersonation",

+ "text": "%md\n\nBy default, all the spark interpreter will run as user who launch zeppelin server. This is OK for single user, but expose potential issue for multiple user scenario. For multiple user scenario, it is better to enable impersonation for Spark Interpreter in yarn mode.\nThere are 3 steps you need to do to enable impersonation.\n\n1. Enable it in Spark Interpreter Setting. You have to choose Isolated Per User mode, and then click the impersonation option as following sc [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 11:11:51.383",

+ "config": {

+ "tableHide": false,

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "colWidth": 12.0,

+ "editorMode": "ace/mode/markdown",

+ "fontSize": 9.0,

+ "editorHide": true,

+ "title": true,

+ "results": {},

+ "enabled": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eBy default, all the spark interpreter will run as user who launch zeppelin server. This is OK for single user, but expose potential issue for multiple user scenario. For multiple user scenario, it is better to enable impersonation for Spark Interpreter in yarn mode.\u003cbr /\u003e\nThere are 3 steps you need to do to enable impersonation.\u003c/p\u003e\n\u003col\u003e\n\u003cli\u003eEnable it in Spark Interp [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762324_1470430553",

+ "id": "20180531-105943_1008146830",

+ "dateCreated": "2020-04-30 10:46:02.324",

+ "dateStarted": "2020-04-30 11:11:51.385",

+ "dateFinished": "2020-04-30 11:11:51.395",

+ "status": "FINISHED"

+ },

+ {

+ "title": "",

+ "text": "%spark\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-30 10:46:02.325",

+ "config": {},

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588214762325_1205048464",

+ "id": "20180531-134529_63265354",

+ "dateCreated": "2020-04-30 10:46:02.325",

+ "status": "READY"

+ }

+ ],

+ "name": "1. Spark Interpreter Introduction",

+ "id": "2F8KN6TKK",

+ "defaultInterpreterGroup": "spark",

+ "version": "0.9.0-SNAPSHOT",

+ "noteParams": {},

+ "noteForms": {},

+ "angularObjects": {},

+ "config": {

+ "isZeppelinNotebookCronEnable": false

+ },

+ "info": {}

+}

\ No newline at end of file

diff --git a/notebook/Spark Tutorial/Spark Basic Features_2A94M5J1Z.zpln b/notebook/Spark Tutorial/2. Spark Basic Features_2A94M5J1Z.zpln

similarity index 92%

rename from notebook/Spark Tutorial/Spark Basic Features_2A94M5J1Z.zpln

rename to notebook/Spark Tutorial/2. Spark Basic Features_2A94M5J1Z.zpln

index a6d29da..b801f1d 100644

--- a/notebook/Spark Tutorial/Spark Basic Features_2A94M5J1Z.zpln

+++ b/notebook/Spark Tutorial/2. Spark Basic Features_2A94M5J1Z.zpln

@@ -54,7 +54,7 @@

"title": "Load data into table",

"text": "import org.apache.commons.io.IOUtils\nimport java.net.URL\nimport java.nio.charset.Charset\n\n// Zeppelin creates and injects sc (SparkContext) and sqlContext (HiveContext or SqlContext)\n// So you don\u0027t need create them manually\n\n// load bank data\nval bankText \u003d sc.parallelize(\n IOUtils.toString(\n new URL(\"https://s3.amazonaws.com/apache-zeppelin/tutorial/bank/bank.csv\"),\n Charset.forName(\"utf8\")).split(\"\\n\"))\n\ncase class Bank(age [...]

"user": "anonymous",

- "dateUpdated": "2020-01-21 22:58:52.064",

+ "dateUpdated": "2020-05-08 11:18:36.766",

"config": {

"colWidth": 12.0,

"title": true,

@@ -95,14 +95,14 @@

"jobName": "paragraph_1423500779206_-1502780787",

"id": "20150210-015259_1403135953",

"dateCreated": "2015-02-10 01:52:59.000",

- "dateStarted": "2020-01-21 22:58:52.084",

- "dateFinished": "2020-01-21 22:59:18.740",

+ "dateStarted": "2020-05-08 11:18:36.791",

+ "dateFinished": "2020-05-08 11:19:58.268",

"status": "FINISHED"

},

{

"text": "%sql \nselect age, count(1) value\nfrom bank \nwhere age \u003c 30 \ngroup by age \norder by age",

"user": "anonymous",

- "dateUpdated": "2020-01-19 16:58:04.490",

+ "dateUpdated": "2020-05-04 23:34:43.954",

"config": {

"colWidth": 4.0,

"results": [

@@ -142,7 +142,9 @@

"enabled": true,

"editorSetting": {

"language": "sql",

- "editOnDblClick": false

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

},

"editorMode": "ace/mode/sql",

"fontSize": 9.0

@@ -165,14 +167,14 @@

"jobName": "paragraph_1423500782552_-1439281894",

"id": "20150210-015302_1492795503",

"dateCreated": "2015-02-10 01:53:02.000",

- "dateStarted": "2016-12-17 15:30:13.000",

- "dateFinished": "2016-12-17 15:31:04.000",

+ "dateStarted": "2020-05-04 23:34:43.959",

+ "dateFinished": "2020-05-04 23:34:52.126",

"status": "FINISHED"

},

{

"text": "%sql \nselect age, count(1) value \nfrom bank \nwhere age \u003c ${maxAge\u003d30} \ngroup by age \norder by age",

"user": "anonymous",

- "dateUpdated": "2020-01-19 16:58:04.541",

+ "dateUpdated": "2020-05-04 23:34:45.514",

"config": {

"colWidth": 4.0,

"results": [

@@ -212,7 +214,9 @@

"enabled": true,

"editorSetting": {

"language": "sql",

- "editOnDblClick": false

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

},

"editorMode": "ace/mode/sql",

"fontSize": 9.0

@@ -230,28 +234,19 @@

}

}

},

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TABLE",

- "data": "age\tvalue\n19\t4\n20\t3\n21\t7\n22\t9\n23\t20\n24\t24\n25\t44\n26\t77\n27\t94\n28\t103\n29\t97\n30\t150\n31\t199\n32\t224\n33\t186\n34\t231\n"

- }

- ]

- },

"apps": [],

"progressUpdateIntervalMs": 500,

"jobName": "paragraph_1423720444030_-1424110477",

"id": "20150212-145404_867439529",

"dateCreated": "2015-02-12 14:54:04.000",

- "dateStarted": "2016-12-17 15:30:58.000",

- "dateFinished": "2016-12-17 15:31:07.000",

+ "dateStarted": "2020-05-04 23:34:45.520",

+ "dateFinished": "2020-05-04 23:34:54.074",

"status": "FINISHED"

},

{

"text": "%sql \nselect age, count(1) value \nfrom bank \nwhere marital\u003d\"${marital\u003dsingle,single|divorced|married}\" \ngroup by age \norder by age",

"user": "anonymous",

- "dateUpdated": "2020-01-19 16:58:04.590",

+ "dateUpdated": "2020-05-04 23:34:47.079",

"config": {

"colWidth": 4.0,

"results": [

@@ -291,7 +286,9 @@

"enabled": true,

"editorSetting": {

"language": "sql",

- "editOnDblClick": false

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

},

"editorMode": "ace/mode/sql",

"fontSize": 9.0,

@@ -335,8 +332,8 @@

"jobName": "paragraph_1423836262027_-210588283",

"id": "20150213-230422_1600658137",

"dateCreated": "2015-02-13 23:04:22.000",

- "dateStarted": "2016-12-17 15:31:05.000",

- "dateFinished": "2016-12-17 15:31:09.000",

+ "dateStarted": "2020-05-04 23:34:52.255",

+ "dateFinished": "2020-05-04 23:34:55.739",

"status": "FINISHED"

},

{

@@ -445,19 +442,15 @@

"status": "READY"

}

],

- "name": "Basic Features (Spark)",

+ "name": "2. Spark Basic Features",

"id": "2A94M5J1Z",

"defaultInterpreterGroup": "spark",

- "permissions": {},

"noteParams": {},

"noteForms": {},

- "angularObjects": {

- "2C73DY9P9:shared_process": []

- },

+ "angularObjects": {},

"config": {

"looknfeel": "default",

- "isZeppelinNotebookCronEnable": true

+ "isZeppelinNotebookCronEnable": false

},

- "info": {},

- "path": "/Spark Tutorial/Basic Features (Spark)"

+ "info": {}

}

\ No newline at end of file

diff --git a/notebook/Spark Tutorial/Spark SQL (PySpark)_2EWM84JXA.zpln b/notebook/Spark Tutorial/3. Spark SQL (PySpark)_2EWM84JXA.zpln

similarity index 100%

rename from notebook/Spark Tutorial/Spark SQL (PySpark)_2EWM84JXA.zpln

rename to notebook/Spark Tutorial/3. Spark SQL (PySpark)_2EWM84JXA.zpln

diff --git a/notebook/Spark Tutorial/Spark SQL (Scala)_2EYUV26VR.zpln b/notebook/Spark Tutorial/3. Spark SQL (Scala)_2EYUV26VR.zpln

similarity index 100%

rename from notebook/Spark Tutorial/Spark SQL (Scala)_2EYUV26VR.zpln

rename to notebook/Spark Tutorial/3. Spark SQL (Scala)_2EYUV26VR.zpln

diff --git a/notebook/Spark Tutorial/Spark MlLib_2EZFM3GJA.zpln b/notebook/Spark Tutorial/4. Spark MlLib_2EZFM3GJA.zpln

similarity index 100%

rename from notebook/Spark Tutorial/Spark MlLib_2EZFM3GJA.zpln

rename to notebook/Spark Tutorial/4. Spark MlLib_2EZFM3GJA.zpln

diff --git a/notebook/Spark Tutorial/SparkR Basics_2BWJFTXKM.zpln b/notebook/Spark Tutorial/5. SparkR Basics_2BWJFTXKM.zpln

similarity index 100%

rename from notebook/Spark Tutorial/SparkR Basics_2BWJFTXKM.zpln

rename to notebook/Spark Tutorial/5. SparkR Basics_2BWJFTXKM.zpln

diff --git a/notebook/Spark Tutorial/SparkR Shiny App_2F1CHQ4TT.zpln b/notebook/Spark Tutorial/6. SparkR Shiny App_2F1CHQ4TT.zpln

similarity index 100%

rename from notebook/Spark Tutorial/SparkR Shiny App_2F1CHQ4TT.zpln

rename to notebook/Spark Tutorial/6. SparkR Shiny App_2F1CHQ4TT.zpln

diff --git a/notebook/Spark Tutorial/7. Spark Delta Lake Tutorial_2F8VDBMMT.zpln b/notebook/Spark Tutorial/7. Spark Delta Lake Tutorial_2F8VDBMMT.zpln

new file mode 100644

index 0000000..97f011c

--- /dev/null

+++ b/notebook/Spark Tutorial/7. Spark Delta Lake Tutorial_2F8VDBMMT.zpln

@@ -0,0 +1,311 @@

+{

+ "paragraphs": [

+ {

+ "text": "%md\n\n# Introduction\n\nThis is a tutorial for using spark [delta lake](https://delta.io/) in Zeppelin. You need to run the following paragraph first to load delta package.\n\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-05-04 14:11:57.999",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "editorMode": "ace/mode/markdown",

+ "editorHide": true,

+ "tableHide": false

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003ch1\u003eIntroduction\u003c/h1\u003e\n\u003cp\u003eThis is a tutorial for using spark \u003ca href\u003d\"https://delta.io/\"\u003edelta lake\u003c/a\u003e in Zeppelin. You need to run the following paragraph first to load delta package.\u003c/p\u003e\n\n\u003c/div\u003e"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588572279774_1507831415",

+ "id": "paragraph_1588572279774_1507831415",

+ "dateCreated": "2020-05-04 14:04:39.775",

+ "dateStarted": "2020-05-04 14:11:57.999",

+ "dateFinished": "2020-05-04 14:11:58.021",

+ "status": "FINISHED"

+ },

+ {

+ "text": "%spark.conf\n\nspark.jars.packages io.delta:delta-core_2.11:0.6.0",

+ "user": "anonymous",

+ "dateUpdated": "2020-05-04 14:12:12.254",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "text",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/text"

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": []

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588147206215_1200788867",

+ "id": "paragraph_1588147206215_1200788867",

+ "dateCreated": "2020-04-29 16:00:06.215",

+ "dateStarted": "2020-04-29 16:10:33.429",

+ "dateFinished": "2020-04-29 16:10:33.434",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Create a table",

+ "text": "%spark\n\nval data \u003d spark.range(0, 5)\ndata.write.format(\"delta\").save(\"/tmp/delta-table\")\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 16:13:31.957",

+ "config": {

+ "colWidth": 6.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/scala",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "\u001b[1m\u001b[34mdata\u001b[0m: \u001b[1m\u001b[32morg.apache.spark.sql.Dataset[Long]\u001b[0m \u003d [id: bigint]\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588147833426_1914590471",

+ "id": "paragraph_1588147833426_1914590471",

+ "dateCreated": "2020-04-29 16:10:33.426",

+ "dateStarted": "2020-04-29 16:11:45.197",

+ "dateFinished": "2020-04-29 16:11:49.694",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Read a table",

+ "text": "%spark\n\nval df \u003d spark.read.format(\"delta\").load(\"/tmp/delta-table\")\ndf.show()",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 16:13:35.297",

+ "config": {

+ "colWidth": 6.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/scala",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "+---+\n| id|\n+---+\n| 0|\n| 3|\n| 1|\n| 2|\n| 4|\n+---+\n\n\u001b[1m\u001b[34mdf\u001b[0m: \u001b[1m\u001b[32morg.apache.spark.sql.DataFrame\u001b[0m \u003d [id: bigint]\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588147853461_1624743216",

+ "id": "paragraph_1588147853461_1624743216",

+ "dateCreated": "2020-04-29 16:10:53.462",

+ "dateStarted": "2020-04-29 16:11:55.302",

+ "dateFinished": "2020-04-29 16:11:56.658",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Overwrite",

+ "text": "%spark\n\nval data \u003d spark.range(5, 10)\ndata.write.format(\"delta\").mode(\"overwrite\").save(\"/tmp/delta-table\")\ndf.show()",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 16:14:41.855",

+ "config": {

+ "colWidth": 6.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/scala",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "+---+\n| id|\n+---+\n| 5|\n| 6|\n| 7|\n| 9|\n| 8|\n+---+\n\n\u001b[1m\u001b[34mdata\u001b[0m: \u001b[1m\u001b[32morg.apache.spark.sql.Dataset[Long]\u001b[0m \u003d [id: bigint]\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588148062120_1790808564",

+ "id": "paragraph_1588148062120_1790808564",

+ "dateCreated": "2020-04-29 16:14:22.120",

+ "dateStarted": "2020-04-29 16:14:41.863",

+ "dateFinished": "2020-04-29 16:14:45.093",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Conditional update without overwrite",

+ "text": "%spark\n\nimport io.delta.tables._\nimport org.apache.spark.sql.functions._\n\nval deltaTable \u003d DeltaTable.forPath(\"/tmp/delta-table\")\n\n// Update every even value by adding 100 to it\ndeltaTable.update(\n condition \u003d expr(\"id % 2 \u003d\u003d 0\"),\n set \u003d Map(\"id\" -\u003e expr(\"id + 100\")))\n\n// Delete every even value\ndeltaTable.delete(condition \u003d expr(\"id % 2 \u003d\u003d 0\"))\n\n// Upsert (merge) new data\nval newData \u003d spark.ran [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 16:15:33.129",

+ "config": {

+ "colWidth": 6.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/scala",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "+---+\n| id|\n+---+\n| 15|\n| 16|\n| 1|\n| 18|\n| 14|\n| 4|\n| 8|\n| 17|\n| 0|\n| 10|\n| 6|\n| 2|\n| 3|\n| 13|\n| 5|\n| 12|\n| 19|\n| 7|\n| 9|\n| 11|\n+---+\n\nimport io.delta.tables._\nimport org.apache.spark.sql.functions._\n\u001b[1m\u001b[34mdeltaTable\u001b[0m: \u001b[1m\u001b[32mio.delta.tables.DeltaTable\u001b[0m \u003d io.delta.tables.DeltaTable@355329ee\n\u001b[1m\u001b[34mnewData\u001b[0m: \u001b[1m\u001b[32morg.apache.spark.sql.DataFrame\u001b[0m [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588147954117_626957150",

+ "id": "paragraph_1588147954117_626957150",

+ "dateCreated": "2020-04-29 16:12:34.117",

+ "dateStarted": "2020-04-29 16:15:33.132",

+ "dateFinished": "2020-04-29 16:15:48.086",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Read older versions of data using time travel",

+ "text": "%spark\n\nval df \u003d spark.read.format(\"delta\").option(\"versionAsOf\", 0).load(\"/tmp/delta-table\")\ndf.show()",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 16:16:04.935",

+ "config": {

+ "colWidth": 6.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/scala",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "+---+\n| id|\n+---+\n| 0|\n| 3|\n| 1|\n| 2|\n| 4|\n+---+\n\n\u001b[1m\u001b[34mdf\u001b[0m: \u001b[1m\u001b[32morg.apache.spark.sql.DataFrame\u001b[0m \u003d [id: bigint]\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588148133131_1770029903",

+ "id": "paragraph_1588148133131_1770029903",

+ "dateCreated": "2020-04-29 16:15:33.131",

+ "dateStarted": "2020-04-29 16:16:04.937",

+ "dateFinished": "2020-04-29 16:16:08.415",

+ "status": "FINISHED"

+ },

+ {

+ "text": "%spark\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 16:18:21.603",

+ "config": {},

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1588148301603_1997345504",

+ "id": "paragraph_1588148301603_1997345504",

+ "dateCreated": "2020-04-29 16:18:21.603",

+ "status": "READY"

+ }

+ ],

+ "name": "6. Spark Delta Lake Tutorial",

+ "id": "2F8VDBMMT",

+ "defaultInterpreterGroup": "spark",

+ "version": "0.9.0-SNAPSHOT",

+ "noteParams": {},

+ "noteForms": {},

+ "angularObjects": {},

+ "config": {

+ "isZeppelinNotebookCronEnable": false

+ },

+ "info": {}

+}

\ No newline at end of file

diff --git a/notebook/~Trash/Zeppelin Tutorial/Flink Batch Tutorial_2EN1E1ATY.zpln b/notebook/~Trash/Zeppelin Tutorial/Flink Batch Tutorial_2EN1E1ATY.zpln

deleted file mode 100644

index 018089e..0000000

--- a/notebook/~Trash/Zeppelin Tutorial/Flink Batch Tutorial_2EN1E1ATY.zpln

+++ /dev/null

@@ -1,602 +0,0 @@

-{

- "paragraphs": [

- {

- "title": "Introduction",

- "text": "%md\n\nThis is a tutorial note for Flink batch scenario (`To be noticed, you need to use flink 1.9 or afterwards`), . You can run flink scala api via `%flink` and run flink batch sql via `%flink.bsql`. \nThis note use flink\u0027s DataSet api to demonstrate flink\u0027s batch capablity. DataSet is only supported by flink planner, so here we have to specify the planner `zeppelin.flink.planner` as `flink`, otherwise it would use blink planner by default which doesn\u0027t su [...]

- "user": "anonymous",

- "dateUpdated": "2019-10-08 15:15:54.910",

- "config": {

- "tableHide": false,

- "editorSetting": {

- "language": "markdown",

- "editOnDblClick": true,

- "completionKey": "TAB",

- "completionSupport": false

- },

- "colWidth": 12.0,

- "editorMode": "ace/mode/markdown",

- "fontSize": 9.0,

- "editorHide": true,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {},

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "HTML",

- "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eThis is a tutorial note for Flink batch scenario (\u003ccode\u003eTo be noticed, you need to use flink 1.9 or afterwards\u003c/code\u003e), . You can run flink scala api via \u003ccode\u003e%flink\u003c/code\u003e and run flink batch sql via \u003ccode\u003e%flink.bsql\u003c/code\u003e.\u003cbr/\u003eThis note use flink\u0026rsquo;s DataSet api to demonstrate flink\u0026rsquo;s batch capablity. DataSet is onl [...]

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569489641095_-188362229",

- "id": "paragraph_1547794482637_957545547",

- "dateCreated": "2019-09-26 17:20:41.095",

- "dateStarted": "2019-10-08 15:15:54.910",

- "dateFinished": "2019-10-08 15:15:54.923",

- "status": "FINISHED"

- },

- {

- "title": "Configure Flink Interpreter",

- "text": "%flink.conf\n\nFLINK_HOME \u003cFLINK_INSTALLATION\u003e\n# DataSet is only supported in flink planner, so here we use flink planner. By default it is blink planner\nzeppelin.flink.planner flink\n\n\n",

- "user": "anonymous",

- "dateUpdated": "2019-10-11 10:37:39.948",

- "config": {

- "editorSetting": {

- "language": "text",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 12.0,

- "editorMode": "ace/mode/text",

- "fontSize": 9.0,

- "editorHide": false,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {},

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": []

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569489641097_1856810430",

- "id": "paragraph_1546565092490_1952685806",

- "dateCreated": "2019-09-26 17:20:41.097",

- "dateStarted": "2019-10-11 10:00:42.031",

- "dateFinished": "2019-10-11 10:00:42.052",

- "status": "FINISHED"

- },

- {

- "text": "%sh\n\ncd /tmp\nwget https://s3.amazonaws.com/apache-zeppelin/tutorial/bank/bank.csv\n",

- "user": "anonymous",

- "dateUpdated": "2019-10-08 15:16:45.127",

- "config": {

- "runOnSelectionChange": true,

- "title": false,

- "checkEmpty": true,

- "colWidth": 12.0,

- "fontSize": 9.0,

- "enabled": true,

- "results": {},

- "editorSetting": {

- "language": "sh",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": false

- },

- "editorMode": "ace/mode/sh"

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TEXT",

- "data": "--2019-10-08 15:16:46-- https://s3.amazonaws.com/apache-zeppelin/tutorial/bank/bank.csv\nResolving s3.amazonaws.com (s3.amazonaws.com)... 54.231.120.2\nConnecting to s3.amazonaws.com (s3.amazonaws.com)|54.231.120.2|:443... connected.\nHTTP request sent, awaiting response... 200 OK\nLength: 461474 (451K) [application/octet-stream]\nSaving to: \u0027bank.csv\u0027\n\n 0K .......... .......... .......... .......... .......... 11% 91.0K 4s\n 50K .......... ...... [...]

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569489915993_-1377803690",

- "id": "paragraph_1569489915993_-1377803690",

- "dateCreated": "2019-09-26 17:25:15.993",

- "dateStarted": "2019-10-08 15:16:45.132",

- "dateFinished": "2019-10-08 15:16:50.387",

- "status": "FINISHED"

- },

- {

- "title": "Load Bank Data",

- "text": "%flink\n\nval bankText \u003d benv.readTextFile(\"/tmp/bank.csv\")\nval bank \u003d bankText.map(s \u003d\u003e s.split(\";\")).filter(s \u003d\u003e s(0) !\u003d \"\\\"age\\\"\").map(\n s \u003d\u003e (s(0).toInt,\n s(1).replaceAll(\"\\\"\", \"\"),\n s(2).replaceAll(\"\\\"\", \"\"),\n s(3).replaceAll(\"\\\"\", \"\"),\n s(5).replaceAll(\"\\\"\", \"\").toInt\n )\n )\n\nbtenv.registerDataSet(\"bank\", bank, \u0027age, \u0027j [...]

- "user": "anonymous",

- "dateUpdated": "2019-10-11 10:01:44.897",

- "config": {

- "editorSetting": {

- "language": "scala",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 12.0,

- "editorMode": "ace/mode/scala",

- "fontSize": 9.0,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {

- "0": {

- "graph": {

- "mode": "table",

- "height": 111.0,

- "optionOpen": false

- }

- }

- },

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TEXT",

- "data": "\u001b[1m\u001b[34mbankText\u001b[0m: \u001b[1m\u001b[32morg.apache.flink.api.scala.DataSet[String]\u001b[0m \u003d org.apache.flink.api.scala.DataSet@683ccfd\n\u001b[1m\u001b[34mbank\u001b[0m: \u001b[1m\u001b[32morg.apache.flink.api.scala.DataSet[(Int, String, String, String, Int)]\u001b[0m \u003d org.apache.flink.api.scala.DataSet@35e6c08f\n"

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569489641099_-2030350664",

- "id": "paragraph_1546584347815_1533642635",

- "dateCreated": "2019-09-26 17:20:41.099",

- "dateStarted": "2019-10-11 10:01:44.904",

- "dateFinished": "2019-10-11 10:02:12.333",

- "status": "FINISHED"

- },

- {

- "text": "%flink.bsql\n\ndescribe bank\n\n\n\n\n\n\n\n\n",

- "user": "anonymous",

- "dateUpdated": "2019-10-11 10:07:51.800",

- "config": {

- "editorSetting": {

- "language": "sql",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 6.0,

- "editorMode": "ace/mode/sql",

- "fontSize": 9.0,

- "runOnSelectionChange": true,

- "title": false,

- "checkEmpty": true,

- "results": {

- "0": {

- "graph": {

- "mode": "table",

- "height": 300.0,

- "optionOpen": false,

- "setting": {

- "table": {

- "tableGridState": {},

- "tableColumnTypeState": {

- "names": {

- "table": "string"

- },

- "updated": false

- },

- "tableOptionSpecHash": "[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable filter for columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable pagination for better navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable a footer [...]

- "tableOptionValue": {

- "useFilter": false,

- "showPagination": false,

- "showAggregationFooter": false

- },

- "updated": false,

- "initialized": false

- }

- },

- "commonSetting": {}

- }

- }

- },

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TEXT",

- "data": "Column\tType\nOptional[age]\tOptional[INT]\nOptional[job]\tOptional[STRING]\nOptional[marital]\tOptional[STRING]\nOptional[education]\tOptional[STRING]\nOptional[balance]\tOptional[INT]\n"

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569491358206_1385745589",

- "id": "paragraph_1569491358206_1385745589",

- "dateCreated": "2019-09-26 17:49:18.206",

- "dateStarted": "2019-10-11 10:07:51.808",

- "dateFinished": "2019-10-11 10:07:52.076",

- "status": "FINISHED"

- },

- {

- "text": "%flink.bsql\n\nselect age, count(1) as v\nfrom bank \nwhere age \u003c 30 \ngroup by age \norder by age",

- "user": "anonymous",

- "dateUpdated": "2019-10-11 10:07:53.645",

- "config": {

- "editorSetting": {

- "language": "sql",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 6.0,

- "editorMode": "ace/mode/sql",

- "fontSize": 9.0,

- "runOnSelectionChange": true,

- "title": false,

- "checkEmpty": true,

- "results": {

- "0": {

- "graph": {

- "mode": "table",

- "height": 300.0,

- "optionOpen": false,

- "setting": {

- "table": {

- "tableGridState": {},

- "tableColumnTypeState": {

- "names": {

- "age": "string",

- "v": "string"

- },

- "updated": false

- },

- "tableOptionSpecHash": "[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable filter for columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable pagination for better navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable a footer [...]

- "tableOptionValue": {

- "useFilter": false,

- "showPagination": false,

- "showAggregationFooter": false

- },

- "updated": false,

- "initialized": false

- }

- },

- "commonSetting": {}

- }

- }

- },

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TABLE",

- "data": "age\tv\n19\t4\n20\t3\n21\t7\n22\t9\n23\t20\n24\t24\n25\t44\n26\t77\n27\t94\n28\t103\n29\t97\n"

- },

- {

- "type": "TEXT",

- "data": ""

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569491511585_-677666348",

- "id": "paragraph_1569491511585_-677666348",

- "dateCreated": "2019-09-26 17:51:51.585",

- "dateStarted": "2019-10-11 10:07:53.654",

- "dateFinished": "2019-10-11 10:08:10.439",

- "status": "FINISHED"

- },

- {

- "text": "%flink.bsql\n\nselect age, count(1) as v \nfrom bank \nwhere age \u003c ${maxAge\u003d30} \ngroup by age \norder by age",

- "user": "anonymous",

- "dateUpdated": "2019-10-11 10:08:13.910",

- "config": {

- "editorSetting": {

- "language": "sql",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 6.0,

- "editorMode": "ace/mode/sql",

- "fontSize": 9.0,

- "runOnSelectionChange": true,

- "title": false,

- "checkEmpty": true,

- "results": {

- "0": {

- "graph": {

- "mode": "pieChart",

- "height": 300.0,

- "optionOpen": false,

- "setting": {

- "table": {

- "tableGridState": {},

- "tableColumnTypeState": {

- "names": {

- "age": "string",

- "v": "string"

- },

- "updated": false

- },

- "tableOptionSpecHash": "[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable filter for columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable pagination for better navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable a footer [...]

- "tableOptionValue": {

- "useFilter": false,

- "showPagination": false,

- "showAggregationFooter": false

- },

- "updated": false,

- "initialized": false

- },

- "multiBarChart": {

- "rotate": {

- "degree": "-45"

- },

- "xLabelStatus": "default"

- }

- },

- "commonSetting": {},

- "keys": [

- {

- "name": "age",

- "index": 0.0,

- "aggr": "sum"

- }

- ],

- "groups": [],

- "values": [

- {

- "name": "v",

- "index": 1.0,

- "aggr": "sum"

- }

- ]

- },

- "helium": {}

- }

- },

- "enabled": true

- },

- "settings": {

- "params": {

- "maxAge": "40"

- },

- "forms": {

- "maxAge": {

- "type": "TextBox",

- "name": "maxAge",

- "defaultValue": "30",

- "hidden": false

- }

- }

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TABLE",

- "data": "age\tv\n19\t4\n20\t3\n21\t7\n22\t9\n23\t20\n24\t24\n25\t44\n26\t77\n27\t94\n28\t103\n29\t97\n30\t150\n31\t199\n32\t224\n33\t186\n34\t231\n35\t180\n36\t188\n37\t161\n38\t159\n39\t130\n"

- },

- {

- "type": "TEXT",

- "data": ""

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569489641100_115468969",

- "id": "paragraph_1546592465802_-1181051373",

- "dateCreated": "2019-09-26 17:20:41.100",

- "dateStarted": "2019-10-11 10:08:13.923",

- "dateFinished": "2019-10-11 10:08:14.876",

- "status": "FINISHED"

- },

- {

- "text": "%flink.bsql\n\nselect age, count(1) as v \nfrom bank \nwhere marital\u003d\u0027${marital\u003dsingle,single|divorced|married}\u0027 \ngroup by age \norder by age",

- "user": "anonymous",

- "dateUpdated": "2019-10-11 10:37:59.493",

- "config": {

- "editorSetting": {

- "language": "sql",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 6.0,

- "editorMode": "ace/mode/sql",

- "fontSize": 9.0,

- "runOnSelectionChange": true,

- "title": false,

- "checkEmpty": true,

- "results": {

- "0": {

- "graph": {

- "mode": "multiBarChart",

- "height": 300.0,

- "optionOpen": false,

- "setting": {

- "table": {

- "tableGridState": {},

- "tableColumnTypeState": {

- "names": {

- "age": "string",

- "v": "string"

- },

- "updated": false

- },

- "tableOptionSpecHash": "[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable filter for columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable pagination for better navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable a footer [...]

- "tableOptionValue": {

- "useFilter": false,

- "showPagination": false,

- "showAggregationFooter": false

- },

- "updated": false,

- "initialized": false

- },

- "multiBarChart": {

- "rotate": {

- "degree": "-45"

- },

- "xLabelStatus": "default"

- },

- "lineChart": {

- "rotate": {

- "degree": "-45"

- },

- "xLabelStatus": "default"

- },

- "stackedAreaChart": {

- "rotate": {

- "degree": "-45"

- },

- "xLabelStatus": "default"

- }

- },

- "commonSetting": {},

- "keys": [

- {

- "name": "age",

- "index": 0.0,

- "aggr": "sum"

- }

- ],

- "groups": [],

- "values": [

- {

- "name": "v",

- "index": 1.0,

- "aggr": "sum"

- }

- ]

- },

- "helium": {}

- }

- },

- "enabled": true

- },

- "settings": {

- "params": {

- "marital": "married"

- },

- "forms": {

- "marital": {

- "type": "Select",

- "options": [

- {

- "value": "single"

- },

- {

- "value": "divorced"

- },

- {

- "value": "married"

- }

- ],

- "name": "marital",

- "defaultValue": "single",

- "hidden": false

- }

- }

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TABLE",

- "data": "age\tv\n23\t3\n24\t11\n25\t11\n26\t18\n27\t26\n28\t23\n29\t37\n30\t56\n31\t104\n32\t105\n33\t103\n34\t142\n35\t109\n36\t117\n37\t100\n38\t99\n39\t88\n40\t105\n41\t97\n42\t91\n43\t79\n44\t68\n45\t76\n46\t82\n47\t78\n48\t91\n49\t87\n50\t74\n51\t63\n52\t66\n53\t75\n54\t56\n55\t68\n56\t50\n57\t78\n58\t67\n59\t56\n60\t36\n61\t15\n62\t5\n63\t7\n64\t6\n65\t4\n66\t7\n67\t5\n68\t1\n69\t5\n70\t5\n71\t5\n72\t4\n73\t6\n74\t2\n75\t3\n76\t1\n77\t5\n78\t2\n79\t3\n80\t6\n81\t1\n83\t [...]

- },

- {

- "type": "TEXT",

- "data": ""

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569489641100_-1622522691",

- "id": "paragraph_1546592478596_-1766740165",

- "dateCreated": "2019-09-26 17:20:41.100",

- "dateStarted": "2019-10-11 10:08:16.423",

- "dateFinished": "2019-10-11 10:08:17.295",

- "status": "FINISHED"

- },

- {

- "text": "\n",

- "user": "anonymous",

- "dateUpdated": "2019-09-26 17:54:48.292",

- "config": {

- "colWidth": 12.0,

- "fontSize": 9.0,

- "enabled": true,

- "results": {},

- "editorSetting": {

- "language": "scala",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "editorMode": "ace/mode/scala"

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569489641101_-1182720873",

- "id": "paragraph_1553093710610_-1734599499",

- "dateCreated": "2019-09-26 17:20:41.101",

- "status": "READY"

- }

- ],

- "name": "Flink Batch Tutorial",

- "id": "2EN1E1ATY",

- "defaultInterpreterGroup": "spark",

- "version": "0.9.0-SNAPSHOT",

- "permissions": {},

- "noteParams": {},

- "noteForms": {},

- "angularObjects": {},

- "config": {

- "isZeppelinNotebookCronEnable": false

- },

- "info": {},

- "path": "/Flink Batch Tutorial"

-}

\ No newline at end of file

diff --git a/notebook/~Trash/Zeppelin Tutorial/Flink Stream Tutorial_2ER62Y5VJ.zpln b/notebook/~Trash/Zeppelin Tutorial/Flink Stream Tutorial_2ER62Y5VJ.zpln

deleted file mode 100644

index b8f3658..0000000

--- a/notebook/~Trash/Zeppelin Tutorial/Flink Stream Tutorial_2ER62Y5VJ.zpln

+++ /dev/null

@@ -1,427 +0,0 @@

-{

- "paragraphs": [

- {

- "title": "Introduction",

- "text": "%md\n\nThis is a tutorial note for Flink Streaming application. You can run flink scala api via `%flink` and run flink stream sql via `%flink.ssql`. We provide 2 examples in this tutorial:\n\n* Classical word count example via Flink streaming\n* We simulate a web log stream source, and then query and visualize this streaming data in Zeppelin.\n\n\nFor now, the capability of supporting flink streaming job is very limited in Zeppelin. For example, canceling job is not suppor [...]

- "user": "anonymous",

- "dateUpdated": "2019-10-08 15:18:50.038",

- "config": {

- "tableHide": false,

- "editorSetting": {

- "language": "markdown",

- "editOnDblClick": true,

- "completionKey": "TAB",

- "completionSupport": false

- },

- "colWidth": 12.0,

- "editorMode": "ace/mode/markdown",

- "fontSize": 9.0,

- "editorHide": false,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {},

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "HTML",

- "data": "\u003cdiv class\u003d\"markdown-body\"\u003e\n\u003cp\u003eThis is a tutorial note for Flink Streaming application. You can run flink scala api via \u003ccode\u003e%flink\u003c/code\u003e and run flink stream sql via \u003ccode\u003e%flink.ssql\u003c/code\u003e. We provide 2 examples in this tutorial:\u003c/p\u003e\n\u003cul\u003e\n \u003cli\u003eClassical word count example via Flink streaming\u003c/li\u003e\n \u003cli\u003eWe simulate a web log stream source, and [...]

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569491705460_-1023073277",

- "id": "paragraph_1548052720723_1943177100",

- "dateCreated": "2019-09-26 17:55:05.460",

- "dateStarted": "2019-10-08 15:18:47.711",

- "dateFinished": "2019-10-08 15:18:47.724",

- "status": "FINISHED"

- },

- {

- "title": "Configure Flink Interpreter",

- "text": "%flink.conf\n\nFLINK_HOME \u003cFLINK_INSTALLATION\u003e\n# Use blink planner for flink streaming scenario\nzeppelin.flink.planner blink\n",

- "user": "anonymous",

- "dateUpdated": "2019-10-11 10:38:09.734",

- "config": {

- "editorSetting": {

- "language": "text",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 12.0,

- "editorMode": "ace/mode/text",

- "fontSize": 9.0,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {},

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": []

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569491705461_327101264",

- "id": "paragraph_1546571299955_275296580",

- "dateCreated": "2019-09-26 17:55:05.461",

- "dateStarted": "2019-10-08 15:21:48.153",

- "dateFinished": "2019-10-08 15:21:48.165",

- "status": "FINISHED"

- },

- {

- "title": "Stream WordCount",

- "text": "%flink\n\nval data \u003d senv.fromElements(\"hello world\", \"hello flink\", \"hello hadoop\")\ndata.flatMap(line \u003d\u003e line.split(\"\\\\s\"))\n .map(w \u003d\u003e (w, 1))\n .keyBy(0)\n .sum(1)\n .print\n\nsenv.execute()\n",

- "user": "anonymous",

- "dateUpdated": "2019-10-08 15:19:07.936",

- "config": {

- "editorSetting": {

- "language": "scala",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 12.0,

- "editorMode": "ace/mode/scala",

- "fontSize": 9.0,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {},

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TEXT",

- "data": "\u001b[1m\u001b[34mdata\u001b[0m: \u001b[1m\u001b[32morg.apache.flink.streaming.api.scala.DataStream[String]\u001b[0m \u003d org.apache.flink.streaming.api.scala.DataStream@5a099566\n\u001b[1m\u001b[34mres0\u001b[0m: \u001b[1m\u001b[32morg.apache.flink.streaming.api.datastream.DataStreamSink[(String, Int)]\u001b[0m \u003d org.apache.flink.streaming.api.datastream.DataStreamSink@58805cef\n(hello,1)\n(world,1)\n(hello,2)\n(flink,1)\n(hello,3)\n(hadoop,1)\n\u001b[1m\u00 [...]

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569491705461_377981606",

- "id": "paragraph_1546571324670_-435705916",

- "dateCreated": "2019-09-26 17:55:05.461",

- "dateStarted": "2019-10-08 15:19:07.941",

- "dateFinished": "2019-10-08 15:19:23.511",

- "status": "FINISHED"

- },

- {

- "title": "Register a Stream DataSource to simulate web log",

- "text": "%flink\n\nimport org.apache.flink.streaming.api.functions.source.SourceFunction\nimport org.apache.flink.table.api.TableEnvironment\nimport org.apache.flink.streaming.api.TimeCharacteristic\nimport org.apache.flink.streaming.api.checkpoint.ListCheckpointed\nimport java.util.Collections\nimport scala.collection.JavaConversions._\n\nsenv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)\nsenv.enableCheckpointing(1000)\n\nval data \u003d senv.addSource(new SourceFunct [...]

- "user": "anonymous",

- "dateUpdated": "2019-10-08 15:19:31.503",

- "config": {

- "editorSetting": {

- "language": "scala",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 12.0,

- "editorMode": "ace/mode/scala",

- "fontSize": 9.0,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {},

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "results": {

- "code": "SUCCESS",

- "msg": [

- {

- "type": "TEXT",

- "data": "import org.apache.flink.streaming.api.functions.source.SourceFunction\nimport org.apache.flink.table.api.TableEnvironment\nimport org.apache.flink.streaming.api.TimeCharacteristic\nimport org.apache.flink.streaming.api.checkpoint.ListCheckpointed\nimport java.util.Collections\nimport scala.collection.JavaConversions._\n\u001b[1m\u001b[34mres4\u001b[0m: \u001b[1m\u001b[32morg.apache.flink.streaming.api.scala.StreamExecutionEnvironment\u001b[0m \u003d org.apache.flink. [...]

- }

- ]

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569491705461_-1986381705",

- "id": "paragraph_1546571333074_1869171983",

- "dateCreated": "2019-09-26 17:55:05.461",

- "dateStarted": "2019-10-08 15:19:31.507",

- "dateFinished": "2019-10-08 15:19:34.040",

- "status": "FINISHED"

- },

- {

- "title": "Total Page View",

- "text": "%flink.ssql(type\u003dsingle, parallelism\u003d1, refreshInterval\u003d3000, template\u003d\u003ch1\u003e{1}\u003c/h1\u003e until \u003ch2\u003e{0}\u003c/h2\u003e, enableSavePoint\u003dfalse, runWithSavePoint\u003dfalse)\n\nselect max(rowtime), count(1) from log\n",

- "user": "anonymous",

- "dateUpdated": "2019-10-08 15:19:41.998",

- "config": {

- "savepointPath": "file:/tmp/save_point/savepoint-13e681-f552013d6184",

- "editorSetting": {

- "language": "sql",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 6.0,

- "editorMode": "ace/mode/sql",

- "fontSize": 9.0,

- "editorHide": false,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {

- "0": {

- "graph": {

- "mode": "table",

- "height": 141.0,

- "optionOpen": false

- }

- }

- },

- "enabled": true

- },

- "settings": {

- "params": {},

- "forms": {}

- },

- "apps": [],

- "progressUpdateIntervalMs": 500,

- "jobName": "paragraph_1569491705461_616808409",

- "id": "paragraph_1546571459644_596843735",

- "dateCreated": "2019-09-26 17:55:05.461",

- "dateStarted": "2019-10-08 15:19:42.003",

- "dateFinished": "2019-09-26 17:57:24.042",

- "status": "ABORT"

- },

- {

- "title": "Page View by Page",

- "text": "%flink.ssql(type\u003dretract, refreshInterval\u003d2000, parallelism\u003d1, enableSavePoint\u003dfalse, runWithSavePoint\u003dfalse)\n\nselect url, count(1) as pv from log group by url",

- "user": "anonymous",

- "dateUpdated": "2019-10-08 15:20:02.708",

- "config": {

- "savepointPath": "file:/flink/save_point/savepoint-e4f781-996290516ef1",

- "editorSetting": {

- "language": "sql",

- "editOnDblClick": false,

- "completionKey": "TAB",

- "completionSupport": true

- },

- "colWidth": 6.0,

- "editorMode": "ace/mode/sql",

- "fontSize": 9.0,

- "editorHide": false,

- "title": false,

- "runOnSelectionChange": true,

- "checkEmpty": true,

- "results": {

- "0": {

- "graph": {

- "mode": "multiBarChart",

- "height": 198.0,

- "optionOpen": false,

- "setting": {

- "table": {

- "tableGridState": {},

- "tableColumnTypeState": {

- "names": {

- "url": "string",

- "pv": "string"

- },

- "updated": false

- },