You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@mxnet.apache.org by GitBox <gi...@apache.org> on 2018/03/29 21:24:10 UTC

[GitHub] astonzhang closed pull request #10130: Fix typo in autograd doc

astonzhang closed pull request #10130: Fix typo in autograd doc

URL: https://github.com/apache/incubator-mxnet/pull/10130

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for

the sake of provenance:

As this is a foreign pull request (from a fork), the diff is supplied

below (as it won't show otherwise due to GitHub magic):

diff --git a/.github/PULL_REQUEST_TEMPLATE.md b/.github/PULL_REQUEST_TEMPLATE.md

index 193f5b02c4f..cd6fb45e7c2 100644

--- a/.github/PULL_REQUEST_TEMPLATE.md

+++ b/.github/PULL_REQUEST_TEMPLATE.md

@@ -3,7 +3,8 @@

## Checklist ##

### Essentials ###

-- [ ] Passed code style checking (`make lint`)

+Please feel free to remove inapplicable items for your PR.

+- [ ] The PR title starts with [MXNET-$JIRA_ID], where $JIRA_ID refers to the relevant [JIRA issue](https://issues.apache.org/jira/projects/MXNET/issues) created (except PRs with tiny changes)

- [ ] Changes are complete (i.e. I finished coding on this PR)

- [ ] All changes have test coverage:

- Unit tests are added for small changes to verify correctness (e.g. adding a new operator)

@@ -13,6 +14,7 @@

- For user-facing API changes, API doc string has been updated.

- For new C++ functions in header files, their functionalities and arguments are documented.

- For new examples, README.md is added to explain the what the example does, the source of the dataset, expected performance on test set and reference to the original paper if applicable

+- Check the API doc at http://mxnet-ci-doc.s3-accelerate.dualstack.amazonaws.com/PR-$PR_ID/$BUILD_ID/index.html

- [ ] To the my best knowledge, examples are either not affected by this change, or have been fixed to be compatible with this change

### Changes ###

diff --git a/.gitmodules b/.gitmodules

index cdb8a553679..e10eae20fe9 100644

--- a/.gitmodules

+++ b/.gitmodules

@@ -1,17 +1,17 @@

-[submodule "mshadow"]

- path = mshadow

+[submodule "3rdparty/mshadow"]

+ path = 3rdparty/mshadow

url = https://github.com/dmlc/mshadow.git

-[submodule "dmlc-core"]

- path = dmlc-core

+[submodule "3rdparty/dmlc-core"]

+ path = 3rdparty/dmlc-core

url = https://github.com/dmlc/dmlc-core.git

-[submodule "ps-lite"]

- path = ps-lite

+[submodule "3rdparty/ps-lite"]

+ path = 3rdparty/ps-lite

url = https://github.com/dmlc/ps-lite

-[submodule "nnvm"]

- path = nnvm

+[submodule "3rdparty/nnvm"]

+ path = 3rdparty/nnvm

url = https://github.com/dmlc/nnvm

-[submodule "dlpack"]

- path = dlpack

+[submodule "3rdparty/dlpack"]

+ path = 3rdparty/dlpack

url = https://github.com/dmlc/dlpack

[submodule "3rdparty/openmp"]

path = 3rdparty/openmp

diff --git a/dlpack b/3rdparty/dlpack

similarity index 100%

rename from dlpack

rename to 3rdparty/dlpack

diff --git a/dmlc-core b/3rdparty/dmlc-core

similarity index 100%

rename from dmlc-core

rename to 3rdparty/dmlc-core

diff --git a/mshadow b/3rdparty/mshadow

similarity index 100%

rename from mshadow

rename to 3rdparty/mshadow

diff --git a/nnvm b/3rdparty/nnvm

similarity index 100%

rename from nnvm

rename to 3rdparty/nnvm

diff --git a/ps-lite b/3rdparty/ps-lite

similarity index 100%

rename from ps-lite

rename to 3rdparty/ps-lite

diff --git a/CMakeLists.txt b/CMakeLists.txt

index b3a895583b4..116de37fb85 100644

--- a/CMakeLists.txt

+++ b/CMakeLists.txt

@@ -15,7 +15,7 @@ mxnet_option(USE_NCCL "Use NVidia NCCL with CUDA" OFF)

mxnet_option(USE_OPENCV "Build with OpenCV support" ON)

mxnet_option(USE_OPENMP "Build with Openmp support" ON)

mxnet_option(USE_CUDNN "Build with cudnn support" ON) # one could set CUDNN_ROOT for search path

-mxnet_option(USE_SSE "Build with x86 SSE instruction support" AUTO)

+mxnet_option(USE_SSE "Build with x86 SSE instruction support" ON)

mxnet_option(USE_LAPACK "Build with lapack support" ON IF NOT MSVC)

mxnet_option(USE_MKL_IF_AVAILABLE "Use MKL if found" ON)

mxnet_option(USE_MKLML_MKL "Use MKLDNN variant of MKL (if MKL found)" ON IF USE_MKL_IF_AVAILABLE AND UNIX AND (NOT APPLE))

@@ -97,7 +97,7 @@ else(MSVC)

check_cxx_compiler_flag("-std=c++0x" SUPPORT_CXX0X)

# For cross compilation, we can't rely on the compiler which accepts the flag, but mshadow will

# add platform specific includes not available in other arches

- if(USE_SSE STREQUAL "AUTO")

+ if(USE_SSE)

check_cxx_compiler_flag("-msse2" SUPPORT_MSSE2)

else()

set(SUPPORT_MSSE2 FALSE)

@@ -132,7 +132,9 @@ else(MSVC)

endif()

endif(MSVC)

-set(mxnet_LINKER_LIBS "")

+if(NOT mxnet_LINKER_LIBS)

+ set(mxnet_LINKER_LIBS "")

+endif(NOT mxnet_LINKER_LIBS)

if(USE_GPROF)

message(STATUS "Using GPROF")

@@ -221,14 +223,14 @@ endif()

if(USE_CUDA AND FIRST_CUDA)

include(cmake/ChooseBlas.cmake)

- include(mshadow/cmake/Utils.cmake)

+ include(3rdparty/mshadow/cmake/Utils.cmake)

include(cmake/FirstClassLangCuda.cmake)

include_directories(${CMAKE_CUDA_TOOLKIT_INCLUDE_DIRECTORIES})

else()

- if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/mshadow/cmake)

- include(mshadow/cmake/mshadow.cmake)

- include(mshadow/cmake/Utils.cmake)

- include(mshadow/cmake/Cuda.cmake)

+ if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/3rdparty/mshadow/cmake)

+ include(3rdparty/mshadow/cmake/mshadow.cmake)

+ include(3rdparty/mshadow/cmake/Utils.cmake)

+ include(3rdparty/mshadow/cmake/Cuda.cmake)

else()

include(mshadowUtils)

include(Cuda)

@@ -243,16 +245,16 @@ foreach(var ${C_CXX_INCLUDE_DIRECTORIES})

endforeach()

include_directories("include")

-include_directories("mshadow")

+include_directories("3rdparty/mshadow")

include_directories("3rdparty/cub")

-include_directories("nnvm/include")

-include_directories("nnvm/tvm/include")

-include_directories("dmlc-core/include")

-include_directories("dlpack/include")

+include_directories("3rdparty/nnvm/include")

+include_directories("3rdparty/nnvm/tvm/include")

+include_directories("3rdparty/dmlc-core/include")

+include_directories("3rdparty/dlpack/include")

# commented out until PR goes through

-#if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/dlpack)

-# add_subdirectory(dlpack)

+#if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/3rdparty/dlpack)

+# add_subdirectory(3rdparty/dlpack)

#endif()

# Prevent stripping out symbols (operator registrations, for example)

@@ -390,37 +392,37 @@ if(USE_CUDNN AND USE_CUDA)

endif()

endif()

-if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/dmlc-core/cmake)

- add_subdirectory("dmlc-core")

+if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/3rdparty/dmlc-core/cmake)

+ add_subdirectory("3rdparty/dmlc-core")

endif()

-if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/mshadow/cmake)

- add_subdirectory("mshadow")

+if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/3rdparty/mshadow/cmake)

+ add_subdirectory("3rdparty/mshadow")

endif()

FILE(GLOB_RECURSE SOURCE "src/*.cc" "src/*.h" "include/*.h")

FILE(GLOB_RECURSE CUDA "src/*.cu" "src/*.cuh")

# add nnvm to source

FILE(GLOB_RECURSE NNVMSOURCE

- nnvm/src/c_api/*.cc

- nnvm/src/core/*.cc

- nnvm/src/pass/*.cc

- nnvm/src/c_api/*.h

- nnvm/src/core/*.h

- nnvm/src/pass/*.h

- nnvm/include/*.h)

+ 3rdparty/nnvm/src/c_api/*.cc

+ 3rdparty/nnvm/src/core/*.cc

+ 3rdparty/nnvm/src/pass/*.cc

+ 3rdparty/nnvm/src/c_api/*.h

+ 3rdparty/nnvm/src/core/*.h

+ 3rdparty/nnvm/src/pass/*.h

+ 3rdparty/nnvm/include/*.h)

list(APPEND SOURCE ${NNVMSOURCE})

# add mshadow file

-FILE(GLOB_RECURSE MSHADOWSOURCE "mshadow/mshadow/*.h")

-FILE(GLOB_RECURSE MSHADOW_CUDASOURCE "mshadow/mshadow/*.cuh")

+FILE(GLOB_RECURSE MSHADOWSOURCE "3rdparty/mshadow/mshadow/*.h")

+FILE(GLOB_RECURSE MSHADOW_CUDASOURCE "3rdparty/mshadow/mshadow/*.cuh")

list(APPEND SOURCE ${MSHADOWSOURCE})

list(APPEND CUDA ${MSHADOW_CUDASOURCE})

# add source group

-FILE(GLOB_RECURSE GROUP_SOURCE "src/*.cc" "nnvm/*.cc" "plugin/*.cc")

-FILE(GLOB_RECURSE GROUP_Include "src/*.h" "nnvm/*.h" "mshadow/mshadow/*.h" "plugin/*.h")

-FILE(GLOB_RECURSE GROUP_CUDA "src/*.cu" "src/*.cuh" "mshadow/mshadow/*.cuh" "plugin/*.cu"

+FILE(GLOB_RECURSE GROUP_SOURCE "src/*.cc" "3rdparty/nnvm/*.cc" "plugin/*.cc")

+FILE(GLOB_RECURSE GROUP_Include "src/*.h" "3rdparty/nnvm/*.h" "3rdparty/mshadow/mshadow/*.h" "plugin/*.h")

+FILE(GLOB_RECURSE GROUP_CUDA "src/*.cu" "src/*.cuh" "3rdparty/mshadow/mshadow/*.cuh" "plugin/*.cu"

"plugin/*.cuh" "3rdparty/cub/cub/*.cuh")

assign_source_group("Source" ${GROUP_SOURCE})

assign_source_group("Include" ${GROUP_Include})

@@ -559,7 +561,7 @@ if(USE_PLUGIN_CAFFE)

endif()

endif()

-if(NOT EXISTS "${CMAKE_CURRENT_SOURCE_DIR}/nnvm/CMakeLists.txt")

+if(NOT EXISTS "${CMAKE_CURRENT_SOURCE_DIR}/3rdparty/nnvm/CMakeLists.txt")

set(nnvm_LINKER_LIBS nnvm)

list(APPEND mxnet_LINKER_LIBS ${nnvm_LINKER_LIBS})

endif()

@@ -571,7 +573,14 @@ if(NOT MSVC)

set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS}")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++0x")

else()

- set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} /EHsc")

+ set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} /EHsc")

+ set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} /EHsc /Gy")

+ set(CMAKE_CXX_FLAGS_MINSIZEREL "${CMAKE_CXX_FLAGS_MINSIZEREL} /EHsc /Gy")

+ set(CMAKE_CXX_FLAGS_RELWITHDEBINFO "${CMAKE_CXX_FLAGS_RELWITHDEBINFO} /EHsc /Gy")

+ set(CMAKE_SHARED_LINKER_FLAGS_RELEASE "${CMAKE_SHARED_LINKER_FLAGS_RELEASE} /OPT:REF /OPT:ICF")

+ set(CMAKE_SHARED_LINKER_FLAGS_MINSIZEREL "${CMAKE_SHARED_LINKER_FLAGS_MINSIZEREL} /OPT:REF /OPT:ICF")

+ set(CMAKE_SHARED_LINKER_FLAGS_RELWITHDEBINFO "${CMAKE_SHARED_LINKER_FLAGS_RELWITHDEBINFO} /OPT:REF /OPT:ICF")

+

endif()

set(MXNET_INSTALL_TARGETS mxnet)

@@ -588,13 +597,13 @@ endif()

if(USE_CUDA)

if(FIRST_CUDA AND MSVC)

- target_compile_options(mxnet PUBLIC "$<$<CONFIG:DEBUG>:-Xcompiler=-MTd>")

- target_compile_options(mxnet PUBLIC "$<$<CONFIG:RELEASE>:-Xcompiler=-MT>")

+ target_compile_options(mxnet PUBLIC "$<$<CONFIG:DEBUG>:-Xcompiler=-MTd -Gy>")

+ target_compile_options(mxnet PUBLIC "$<$<CONFIG:RELEASE>:-Xcompiler=-MT -Gy>")

endif()

endif()

if(USE_DIST_KVSTORE)

- if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/ps-lite/CMakeLists.txt)

- add_subdirectory("ps-lite")

+ if(EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/3rdparty/ps-lite/CMakeLists.txt)

+ add_subdirectory("3rdparty/ps-lite")

list(APPEND pslite_LINKER_LIBS pslite protobuf)

target_link_libraries(mxnet PUBLIC debug ${pslite_LINKER_LIBS_DEBUG})

target_link_libraries(mxnet PUBLIC optimized ${pslite_LINKER_LIBS_RELEASE})

@@ -707,4 +716,4 @@ if(MSVC)

endif()

set(LINT_DIRS "include src plugin cpp-package tests")

set(EXCLUDE_PATH "src/operator/contrib/ctc_include")

-add_custom_target(mxnet_lint COMMAND ${CMAKE_COMMAND} -DMSVC=${MSVC} -DPYTHON_EXECUTABLE=${PYTHON_EXECUTABLE} -DLINT_DIRS=${LINT_DIRS} -DPROJECT_SOURCE_DIR=${CMAKE_CURRENT_SOURCE_DIR} -DPROJECT_NAME=mxnet -DEXCLUDE_PATH=${EXCLUDE_PATH} -P ${CMAKE_CURRENT_SOURCE_DIR}/dmlc-core/cmake/lint.cmake)

+add_custom_target(mxnet_lint COMMAND ${CMAKE_COMMAND} -DMSVC=${MSVC} -DPYTHON_EXECUTABLE=${PYTHON_EXECUTABLE} -DLINT_DIRS=${LINT_DIRS} -DPROJECT_SOURCE_DIR=${CMAKE_CURRENT_SOURCE_DIR} -DPROJECT_NAME=mxnet -DEXCLUDE_PATH=${EXCLUDE_PATH} -P ${CMAKE_CURRENT_SOURCE_DIR}/3rdparty/dmlc-core/cmake/lint.cmake)

diff --git a/CONTRIBUTORS.md b/CONTRIBUTORS.md

index 8079ce41911..4e5dfdb4255 100644

--- a/CONTRIBUTORS.md

+++ b/CONTRIBUTORS.md

@@ -160,3 +160,5 @@ List of Contributors

* [Sorokin Evgeniy](https://github.com/TheTweak)

* [dwSun](https://github.com/dwSun/)

* [David Braude](https://github.com/dabraude/)

+* [Nick Robinson](https://github.com/nickrobinson)

+* [Kan Wu](https://github.com/wkcn)

diff --git a/Jenkinsfile b/Jenkinsfile

index 73e73f27a71..b7be68c6f73 100644

--- a/Jenkinsfile

+++ b/Jenkinsfile

@@ -21,11 +21,11 @@

// See documents at https://jenkins.io/doc/book/pipeline/jenkinsfile/

// mxnet libraries

-mx_lib = 'lib/libmxnet.so, lib/libmxnet.a, dmlc-core/libdmlc.a, nnvm/lib/libnnvm.a'

+mx_lib = 'lib/libmxnet.so, lib/libmxnet.a, 3rdparty/dmlc-core/libdmlc.a, 3rdparty/nnvm/lib/libnnvm.a'

// mxnet cmake libraries, in cmake builds we do not produce a libnvvm static library by default.

-mx_cmake_lib = 'build/libmxnet.so, build/libmxnet.a, build/dmlc-core/libdmlc.a, build/tests/mxnet_unit_tests, build/3rdparty/openmp/runtime/src/libomp.so'

-mx_cmake_mkldnn_lib = 'build/libmxnet.so, build/libmxnet.a, build/dmlc-core/libdmlc.a, build/tests/mxnet_unit_tests, build/3rdparty/openmp/runtime/src/libomp.so, build/3rdparty/mkldnn/src/libmkldnn.so, build/3rdparty/mkldnn/src/libmkldnn.so.0'

-mx_mkldnn_lib = 'lib/libmxnet.so, lib/libmxnet.a, lib/libiomp5.so, lib/libmklml_gnu.so, lib/libmkldnn.so, lib/libmkldnn.so.0, lib/libmklml_intel.so, dmlc-core/libdmlc.a, nnvm/lib/libnnvm.a'

+mx_cmake_lib = 'build/libmxnet.so, build/libmxnet.a, build/3rdparty/dmlc-core/libdmlc.a, build/tests/mxnet_unit_tests, build/3rdparty/openmp/runtime/src/libomp.so'

+mx_cmake_mkldnn_lib = 'build/libmxnet.so, build/libmxnet.a, build/3rdparty/dmlc-core/libdmlc.a, build/tests/mxnet_unit_tests, build/3rdparty/openmp/runtime/src/libomp.so, build/3rdparty/mkldnn/src/libmkldnn.so, build/3rdparty/mkldnn/src/libmkldnn.so.0'

+mx_mkldnn_lib = 'lib/libmxnet.so, lib/libmxnet.a, lib/libiomp5.so, lib/libmkldnn.so.0, lib/libmklml_intel.so, 3rdparty/dmlc-core/libdmlc.a, 3rdparty/nnvm/lib/libnnvm.a'

// command to start a docker container

docker_run = 'tests/ci_build/ci_build.sh'

// timeout in minutes

@@ -141,6 +141,15 @@ try {

}

}

},

+ 'CPU: CentOS 7 MKLDNN': {

+ node('mxnetlinux-cpu') {

+ ws('workspace/build-centos7-mkldnn') {

+ init_git()

+ sh "ci/build.py --build --platform centos7_cpu /work/runtime_functions.sh build_centos7_mkldnn"

+ pack_lib('centos7_mkldnn')

+ }

+ }

+ },

'GPU: CentOS 7': {

node('mxnetlinux-cpu') {

ws('workspace/build-centos7-gpu') {

@@ -211,11 +220,11 @@ try {

}

}

},

- 'GPU: CUDA8.0+cuDNN5': {

+ 'GPU: CUDA9.1+cuDNN7': {

node('mxnetlinux-cpu') {

ws('workspace/build-gpu') {

init_git()

- sh "ci/build.py --build --platform ubuntu_build_cuda /work/runtime_functions.sh build_ubuntu_gpu_cuda8_cudnn5"

+ sh "ci/build.py --build --platform ubuntu_build_cuda /work/runtime_functions.sh build_ubuntu_gpu_cuda91_cudnn7"

pack_lib('gpu')

stash includes: 'build/cpp-package/example/test_score', name: 'cpp_test_score'

stash includes: 'build/cpp-package/example/test_optimizer', name: 'cpp_test_optimizer'

@@ -278,9 +287,9 @@ try {

copy build_vc14_cpu\\Release\\libmxnet.dll pkg_vc14_cpu\\build

xcopy python pkg_vc14_cpu\\python /E /I /Y

xcopy include pkg_vc14_cpu\\include /E /I /Y

- xcopy dmlc-core\\include pkg_vc14_cpu\\include /E /I /Y

- xcopy mshadow\\mshadow pkg_vc14_cpu\\include\\mshadow /E /I /Y

- xcopy nnvm\\include pkg_vc14_cpu\\nnvm\\include /E /I /Y

+ xcopy 3rdparty\\dmlc-core\\include pkg_vc14_cpu\\include /E /I /Y

+ xcopy 3rdparty\\mshadow\\mshadow pkg_vc14_cpu\\include\\mshadow /E /I /Y

+ xcopy 3rdparty\\nnvm\\include pkg_vc14_cpu\\nnvm\\include /E /I /Y

del /Q *.7z

7z.exe a vc14_cpu.7z pkg_vc14_cpu\\

'''

@@ -311,9 +320,9 @@ try {

copy build_vc14_gpu\\libmxnet.dll pkg_vc14_gpu\\build

xcopy python pkg_vc14_gpu\\python /E /I /Y

xcopy include pkg_vc14_gpu\\include /E /I /Y

- xcopy dmlc-core\\include pkg_vc14_gpu\\include /E /I /Y

- xcopy mshadow\\mshadow pkg_vc14_gpu\\include\\mshadow /E /I /Y

- xcopy nnvm\\include pkg_vc14_gpu\\nnvm\\include /E /I /Y

+ xcopy 3rdparty\\dmlc-core\\include pkg_vc14_gpu\\include /E /I /Y

+ xcopy 3rdparty\\mshadow\\mshadow pkg_vc14_gpu\\include\\mshadow /E /I /Y

+ xcopy 3rdparty\\nnvm\\include pkg_vc14_gpu\\nnvm\\include /E /I /Y

del /Q *.7z

7z.exe a vc14_gpu.7z pkg_vc14_gpu\\

'''

@@ -338,6 +347,14 @@ try {

sh "ci/build.py --build --platform armv7 /work/runtime_functions.sh build_armv7"

}

}

+ },

+ 'Raspberry / ARMv6l':{

+ node('mxnetlinux-cpu') {

+ ws('workspace/build-raspberry-armv6') {

+ init_git()

+ sh "ci/build.py --build --platform armv6 /work/runtime_functions.sh build_armv6"

+ }

+ }

}

} // End of stage('Build')

@@ -378,6 +395,24 @@ try {

}

}

},

+ 'Python2: Quantize GPU': {

+ node('mxnetlinux-gpu-p3') {

+ ws('workspace/ut-python2-quantize-gpu') {

+ init_git()

+ unpack_lib('gpu', mx_lib)

+ sh "ci/build.py --nvidiadocker --build --platform ubuntu_gpu /work/runtime_functions.sh unittest_ubuntu_python2_quantization_gpu"

+ }

+ }

+ },

+ 'Python3: Quantize GPU': {

+ node('mxnetlinux-gpu-p3') {

+ ws('workspace/ut-python3-quantize-gpu') {

+ init_git()

+ unpack_lib('gpu', mx_lib)

+ sh "ci/build.py --nvidiadocker --build --platform ubuntu_gpu /work/runtime_functions.sh unittest_ubuntu_python3_quantization_gpu"

+ }

+ }

+ },

'Python2: MKLDNN-CPU': {

node('mxnetlinux-cpu') {

ws('workspace/ut-python2-mkldnn-cpu') {

@@ -637,6 +672,7 @@ try {

}

}

}

+

// set build status to success at the end

currentBuild.result = "SUCCESS"

} catch (caughtError) {

diff --git a/LICENSE b/LICENSE

index e7d50c37723..b783e3ce3ca 100644

--- a/LICENSE

+++ b/LICENSE

@@ -219,14 +219,14 @@

2. MXNet rcnn - For details, see, example/rcnn/LICENSE

3. scala-package - For details, see, scala-package/LICENSE

4. Warp-CTC - For details, see, src/operator/contrib/ctc_include/LICENSE

- 5. dlpack - For details, see, dlpack/LICENSE

- 6. dmlc-core - For details, see, dmlc-core/LICENSE

- 7. mshadow - For details, see, mshadow/LICENSE

- 8. nnvm/dmlc-core - For details, see, nnvm/dmlc-core/LICENSE

- 9. nnvm - For details, see, nnvm/LICENSE

- 10. nnvm-fusion - For details, see, nnvm/plugin/nnvm-fusion/LICENSE

- 11. ps-lite - For details, see, ps-lite/LICENSE

- 12. nnvm/tvm - For details, see, nnvm/tvm/LICENSE

+ 5. 3rdparty/dlpack - For details, see, 3rdparty/dlpack/LICENSE

+ 6. 3rdparty/dmlc-core - For details, see, 3rdparty/dmlc-core/LICENSE

+ 7. 3rdparty/mshadow - For details, see, 3rdparty/mshadow/LICENSE

+ 8. 3rdparty/nnvm/dmlc-core - For details, see, 3rdparty/nnvm/dmlc-core/LICENSE

+ 9. 3rdparty/nnvm - For details, see, 3rdparty/nnvm/LICENSE

+ 10. nnvm-fusion - For details, see, 3rdparty/nnvm/plugin/nnvm-fusion/LICENSE

+ 11. 3rdparty/ps-lite - For details, see, 3rdparty/ps-lite/LICENSE

+ 12. 3rdparty/nnvm/tvm - For details, see, 3rdparty/nnvm/tvm/LICENSE

13. googlemock scripts/generator - For details, see, 3rdparty/googletest/googlemock/scripts/generator/LICENSE

diff --git a/Makefile b/Makefile

index 2bef8e85779..dba649f7311 100644

--- a/Makefile

+++ b/Makefile

@@ -16,6 +16,7 @@

# under the License.

ROOTDIR = $(CURDIR)

+TPARTYDIR = $(ROOTDIR)/3rdparty

SCALA_VERSION_PROFILE := scala-2.11

@@ -36,16 +37,16 @@ endif

endif

ifndef DMLC_CORE

- DMLC_CORE = $(ROOTDIR)/dmlc-core

+ DMLC_CORE = $(TPARTYDIR)/dmlc-core

endif

CORE_INC = $(wildcard $(DMLC_CORE)/include/*/*.h)

ifndef NNVM_PATH

- NNVM_PATH = $(ROOTDIR)/nnvm

+ NNVM_PATH = $(TPARTYDIR)/nnvm

endif

ifndef DLPACK_PATH

- DLPACK_PATH = $(ROOTDIR)/dlpack

+ DLPACK_PATH = $(ROOTDIR)/3rdparty/dlpack

endif

ifndef AMALGAMATION_PATH

@@ -73,7 +74,7 @@ ifeq ($(USE_MKLDNN), 1)

export USE_MKLML = 1

endif

-include mshadow/make/mshadow.mk

+include $(TPARTYDIR)/mshadow/make/mshadow.mk

include $(DMLC_CORE)/make/dmlc.mk

# all tge possible warning tread

@@ -91,7 +92,7 @@ ifeq ($(DEBUG), 1)

else

CFLAGS += -O3 -DNDEBUG=1

endif

-CFLAGS += -I$(ROOTDIR)/mshadow/ -I$(ROOTDIR)/dmlc-core/include -fPIC -I$(NNVM_PATH)/include -I$(DLPACK_PATH)/include -I$(NNVM_PATH)/tvm/include -Iinclude $(MSHADOW_CFLAGS)

+CFLAGS += -I$(TPARTYDIR)/mshadow/ -I$(TPARTYDIR)/dmlc-core/include -fPIC -I$(NNVM_PATH)/include -I$(DLPACK_PATH)/include -I$(NNVM_PATH)/tvm/include -Iinclude $(MSHADOW_CFLAGS)

LDFLAGS = -pthread $(MSHADOW_LDFLAGS) $(DMLC_LDFLAGS)

ifeq ($(DEBUG), 1)

NVCCFLAGS += -std=c++11 -Xcompiler -D_FORCE_INLINES -g -G -O0 -ccbin $(CXX) $(MSHADOW_NVCCFLAGS)

@@ -293,7 +294,7 @@ $(info Running CUDA_ARCH: $(CUDA_ARCH))

endif

# ps-lite

-PS_PATH=$(ROOTDIR)/ps-lite

+PS_PATH=$(ROOTDIR)/3rdparty/ps-lite

DEPS_PATH=$(shell pwd)/deps

include $(PS_PATH)/make/ps.mk

ifeq ($(USE_DIST_KVSTORE), 1)

@@ -431,9 +432,9 @@ lib/libmxnet.so: $(ALLX_DEP)

-Wl,${WHOLE_ARCH} $(filter %libnnvm.a, $^) -Wl,${NO_WHOLE_ARCH}

ifeq ($(USE_MKLDNN), 1)

ifeq ($(UNAME_S), Darwin)

- install_name_tool -change '@rpath/libmklml.dylib' '@loader_path/libmklml.dylib' lib/libmxnet.so

- install_name_tool -change '@rpath/libiomp5.dylib' '@loader_path/libiomp5.dylib' lib/libmxnet.so

- install_name_tool -change '@rpath/libmkldnn.0.dylib' '@loader_path/libmkldnn.0.dylib' lib/libmxnet.so

+ install_name_tool -change '@rpath/libmklml.dylib' '@loader_path/libmklml.dylib' $@

+ install_name_tool -change '@rpath/libiomp5.dylib' '@loader_path/libiomp5.dylib' $@

+ install_name_tool -change '@rpath/libmkldnn.0.dylib' '@loader_path/libmkldnn.0.dylib' $@

endif

endif

@@ -472,7 +473,7 @@ test: $(TEST)

lint: cpplint rcpplint jnilint pylint

cpplint:

- dmlc-core/scripts/lint.py mxnet cpp include src plugin cpp-package tests \

+ 3rdparty/dmlc-core/scripts/lint.py mxnet cpp include src plugin cpp-package tests \

--exclude_path src/operator/contrib/ctc_include

pylint:

@@ -504,7 +505,7 @@ cyclean:

# R related shortcuts

rcpplint:

- dmlc-core/scripts/lint.py mxnet-rcpp ${LINT_LANG} R-package/src

+ 3rdparty/dmlc-core/scripts/lint.py mxnet-rcpp ${LINT_LANG} R-package/src

rpkg:

mkdir -p R-package/inst

@@ -513,8 +514,8 @@ rpkg:

cp -rf lib/libmxnet.so R-package/inst/libs

mkdir -p R-package/inst/include

cp -rf include/* R-package/inst/include

- cp -rf dmlc-core/include/* R-package/inst/include/

- cp -rf nnvm/include/* R-package/inst/include

+ cp -rf 3rdparty/dmlc-core/include/* R-package/inst/include/

+ cp -rf 3rdparty/nnvm/include/* R-package/inst/include

Rscript -e "if(!require(devtools)){install.packages('devtools', repo = 'https://cloud.r-project.org/')}"

Rscript -e "library(devtools); library(methods); options(repos=c(CRAN='https://cloud.r-project.org/')); install_deps(pkg='R-package', dependencies = TRUE)"

echo "import(Rcpp)" > R-package/NAMESPACE

@@ -562,7 +563,7 @@ scaladeploy:

-Dlddeps="$(LIB_DEP) $(ROOTDIR)/lib/libmxnet.a")

jnilint:

- dmlc-core/scripts/lint.py mxnet-jnicpp cpp scala-package/native/src

+ 3rdparty/dmlc-core/scripts/lint.py mxnet-jnicpp cpp scala-package/native/src

ifneq ($(EXTRA_OPERATORS),)

clean: cyclean $(EXTRA_PACKAGES_CLEAN)

diff --git a/amalgamation/Makefile b/amalgamation/Makefile

index bd9403e620c..9c45885b7cf 100644

--- a/amalgamation/Makefile

+++ b/amalgamation/Makefile

@@ -16,6 +16,7 @@

# under the License.

export MXNET_ROOT=`pwd`/..

+export TPARTYDIR=`pwd`/../3rdparty

# Change this to path or specify in make command

ifndef OPENBLAS_ROOT

@@ -72,15 +73,15 @@ nnvm.d:

dmlc.d: dmlc-minimum0.cc

${CXX} ${CFLAGS} -M -MT dmlc-minimum0.o \

- -I ${MXNET_ROOT}/dmlc-core/include \

+ -I ${TPARTYDIR}/dmlc-core/include \

-D__MIN__=$(MIN) $+ > dmlc.d

mxnet_predict0.d: mxnet_predict0.cc nnvm.d dmlc.d

${CXX} ${CFLAGS} -M -MT mxnet_predict0.o \

- -I ${MXNET_ROOT}/ -I ${MXNET_ROOT}/mshadow/ -I ${MXNET_ROOT}/dmlc-core/include -I ${MXNET_ROOT}/dmlc-core/src \

- -I ${MXNET_ROOT}/nnvm/include \

- -I ${MXNET_ROOT}/dlpack/include \

+ -I ${MXNET_ROOT}/ -I ${TPARTYDIR}/mshadow/ -I ${TPARTYDIR}/dmlc-core/include -I ${TPARTYDIR}/dmlc-core/src \

+ -I ${TPARTYDIR}/nnvm/include \

+ -I ${MXNET_ROOT}/3rdparty/dlpack/include \

-I ${MXNET_ROOT}/include \

-D__MIN__=$(MIN) mxnet_predict0.cc > mxnet_predict0.d

cat dmlc.d >> mxnet_predict0.d

diff --git a/amalgamation/amalgamation.py b/amalgamation/amalgamation.py

index 45742249a69..e038fa44b98 100644

--- a/amalgamation/amalgamation.py

+++ b/amalgamation/amalgamation.py

@@ -85,7 +85,11 @@ def find_source(name, start, stage):

if not candidates: return ''

if len(candidates) == 1: return candidates[0]

for x in candidates:

- if x.split('/')[1] == start.split('/')[1]: return x

+ if '3rdparty' in x:

+ # make sure to compare the directory name after 3rdparty

+ if x.split('/')[2] == start.split('/')[2]: return x

+ else:

+ if x.split('/')[1] == start.split('/')[1]: return x

return ''

@@ -98,6 +102,18 @@ def find_source(name, start, stage):

def expand(x, pending, stage):

+ """

+ Expand the pending files in the current stage.

+

+ Parameters

+ ----------

+ x: str

+ The file to expand.

+ pending : str

+ The list of pending files to expand.

+ stage: str

+ The current stage for file expansion, used for matching the prefix of files.

+ """

if x in history and x not in ['mshadow/mshadow/expr_scalar-inl.h']: # MULTIPLE includes

return

@@ -126,7 +142,8 @@ def expand(x, pending, stage):

if not m:

print(uline + ' not found')

continue

- h = m.groups()[0].strip('./')

+ path = m.groups()[0]

+ h = path.strip('./') if "../3rdparty/" not in path else path

source = find_source(h, x, stage)

if not source:

if (h not in blacklist and

@@ -149,8 +166,8 @@ def expand(x, pending, stage):

expand.fileCount = 0

# Expand the stages

-expand(sys.argv[2], [], "dmlc")

-expand(sys.argv[3], [], "nnvm")

+expand(sys.argv[2], [], "3rdparty/dmlc-core")

+expand(sys.argv[3], [], "3rdparty/nnvm")

expand(sys.argv[4], [], "src")

# Write to amalgamation file

diff --git a/amalgamation/dmlc-minimum0.cc b/amalgamation/dmlc-minimum0.cc

index be1793a51d7..87e08d31c4d 100644

--- a/amalgamation/dmlc-minimum0.cc

+++ b/amalgamation/dmlc-minimum0.cc

@@ -22,13 +22,13 @@

* \brief Mininum DMLC library Amalgamation, used for easy plugin of dmlc lib.

* Normally this is not needed.

*/

-#include "../dmlc-core/src/io/line_split.cc"

-#include "../dmlc-core/src/io/recordio_split.cc"

-#include "../dmlc-core/src/io/indexed_recordio_split.cc"

-#include "../dmlc-core/src/io/input_split_base.cc"

-#include "../dmlc-core/src/io/local_filesys.cc"

-#include "../dmlc-core/src/data.cc"

-#include "../dmlc-core/src/io.cc"

-#include "../dmlc-core/src/recordio.cc"

+#include "../3rdparty/dmlc-core/src/io/line_split.cc"

+#include "../3rdparty/dmlc-core/src/io/recordio_split.cc"

+#include "../3rdparty/dmlc-core/src/io/indexed_recordio_split.cc"

+#include "../3rdparty/dmlc-core/src/io/input_split_base.cc"

+#include "../3rdparty/dmlc-core/src/io/local_filesys.cc"

+#include "../3rdparty/dmlc-core/src/data.cc"

+#include "../3rdparty/dmlc-core/src/io.cc"

+#include "../3rdparty/dmlc-core/src/recordio.cc"

diff --git a/amalgamation/prep_nnvm.sh b/amalgamation/prep_nnvm.sh

index 53498d71b54..b9222945a98 100755

--- a/amalgamation/prep_nnvm.sh

+++ b/amalgamation/prep_nnvm.sh

@@ -17,11 +17,11 @@

# specific language governing permissions and limitations

# under the License.

-DMLC_CORE=$(pwd)/../dmlc-core

-cd ../nnvm/amalgamation

+DMLC_CORE=$(pwd)/../3rdparty/dmlc-core

+cd ../3rdparty/nnvm/amalgamation

make clean

make DMLC_CORE_PATH=$DMLC_CORE nnvm.d

-cp nnvm.d ../../amalgamation/

+cp nnvm.d ../../../amalgamation/

echo '#define MSHADOW_FORCE_STREAM

#ifndef MSHADOW_USE_CBLAS

@@ -43,4 +43,4 @@ echo '#define MSHADOW_FORCE_STREAM

#include "nnvm/tuple.h"

#include "mxnet/tensor_blob.h"' > temp

cat nnvm.cc >> temp

-mv temp ../../amalgamation/nnvm.cc

+mv temp ../../../amalgamation/nnvm.cc

diff --git a/benchmark/python/quantization/benchmark_op.py b/benchmark/python/quantization/benchmark_op.py

new file mode 100644

index 00000000000..5ba7740cc91

--- /dev/null

+++ b/benchmark/python/quantization/benchmark_op.py

@@ -0,0 +1,90 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+import time

+import mxnet as mx

+from mxnet.test_utils import check_speed

+

+

+def quantize_int8_helper(data):

+ min_data = mx.nd.min(data)

+ max_data = mx.nd.max(data)

+ return mx.nd.contrib.quantize(data, min_data, max_data, out_type='int8')

+

+

+def benchmark_convolution(data_shape, kernel, num_filter, pad, stride, no_bias=True, layout='NCHW', repeats=20):

+ ctx_gpu = mx.gpu(0)

+ data = mx.sym.Variable(name="data", shape=data_shape, dtype='float32')

+ # conv cudnn

+ conv_cudnn = mx.sym.Convolution(data=data, kernel=kernel, num_filter=num_filter, pad=pad, stride=stride,

+ no_bias=no_bias, layout=layout, cudnn_off=False, name="conv_cudnn")

+ arg_shapes, _, _ = conv_cudnn.infer_shape(data=data_shape)

+ input_data = mx.nd.random.normal(0, 0.2, shape=data_shape, ctx=ctx_gpu)

+ conv_weight_name = conv_cudnn.list_arguments()[1]

+ args = {data.name: input_data, conv_weight_name: mx.random.normal(0, 1, shape=arg_shapes[1], ctx=ctx_gpu)}

+ conv_cudnn_time = check_speed(sym=conv_cudnn, location=args, ctx=ctx_gpu, N=repeats,

+ grad_req='null', typ='forward') * 1000

+

+ # quantized_conv2d

+ qdata = mx.sym.Variable(name='qdata', shape=data_shape, dtype='int8')

+ weight = mx.sym.Variable(name='weight', shape=arg_shapes[1], dtype='int8')

+ min_data = mx.sym.Variable(name='min_data', shape=(1,), dtype='float32')

+ max_data = mx.sym.Variable(name='max_data', shape=(1,), dtype='float32')

+ min_weight = mx.sym.Variable(name='min_weight', shape=(1,), dtype='float32')

+ max_weight = mx.sym.Variable(name='max_weight', shape=(1,), dtype='float32')

+ quantized_conv2d = mx.sym.contrib.quantized_conv(data=qdata, weight=weight, min_data=min_data, max_data=max_data,

+ min_weight=min_weight, max_weight=max_weight,

+ kernel=kernel, num_filter=num_filter, pad=pad, stride=stride,

+ no_bias=no_bias, layout=layout, cudnn_off=False,

+ name='quantized_conv2d')

+ qargs = {qdata.name: quantize_int8_helper(input_data)[0],

+ min_data.name: quantize_int8_helper(input_data)[1],

+ max_data.name: quantize_int8_helper(input_data)[2],

+ weight.name: quantize_int8_helper(args[conv_weight_name])[0],

+ min_weight.name: quantize_int8_helper(args[conv_weight_name])[1],

+ max_weight.name: quantize_int8_helper(args[conv_weight_name])[2]}

+ qconv_time = check_speed(sym=quantized_conv2d, location=qargs, ctx=ctx_gpu, N=repeats,

+ grad_req='null', typ='forward') * 1000

+

+ print('==================================================================================================')

+ print('data=%s, kernel=%s, num_filter=%s, pad=%s, stride=%s, no_bias=%s, layout=%s, repeats=%s'

+ % (data_shape, kernel, num_filter, pad, stride, no_bias, layout, repeats))

+ print('%s , ctx=%s, time=%.2f ms' % (conv_cudnn.name + '-FP32', ctx_gpu, conv_cudnn_time))

+ print('%s, ctx=%s, time=%.2f ms' % (quantized_conv2d.name, ctx_gpu, qconv_time))

+ print('quantization speedup: %.1fX' % (conv_cudnn_time / qconv_time))

+ print('\n')

+

+

+if __name__ == '__main__':

+ for batch_size in [32, 64, 128]:

+ benchmark_convolution(data_shape=(batch_size, 64, 56, 56), kernel=(1, 1), num_filter=256,

+ pad=(0, 0), stride=(1, 1), layout='NCHW', repeats=20)

+

+ benchmark_convolution(data_shape=(batch_size, 256, 56, 56), kernel=(1, 1), num_filter=64,

+ pad=(0, 0), stride=(1, 1), layout='NCHW', repeats=20)

+

+ benchmark_convolution(data_shape=(batch_size, 256, 56, 56), kernel=(1, 1), num_filter=128,

+ pad=(0, 0), stride=(2, 2), layout='NCHW', repeats=20)

+

+ benchmark_convolution(data_shape=(batch_size, 128, 28, 28), kernel=(3, 3), num_filter=128,

+ pad=(1, 1), stride=(1, 1), layout='NCHW', repeats=20)

+

+ benchmark_convolution(data_shape=(batch_size, 1024, 14, 14), kernel=(1, 1), num_filter=256,

+ pad=(0, 0), stride=(1, 1), layout='NCHW', repeats=20)

+

+ benchmark_convolution(data_shape=(batch_size, 2048, 7, 7), kernel=(1, 1), num_filter=512,

+ pad=(0, 0), stride=(1, 1), layout='NCHW', repeats=20)

diff --git a/benchmark/python/sparse/sparse_end2end.py b/benchmark/python/sparse/sparse_end2end.py

index ecd9057dedf..d032f9d6c38 100644

--- a/benchmark/python/sparse/sparse_end2end.py

+++ b/benchmark/python/sparse/sparse_end2end.py

@@ -239,8 +239,8 @@ def row_sparse_pull(kv, key, data, slices, weight_array, priority):

device = 'gpu' + str(args.num_gpu)

name = 'profile_' + args.dataset + '_' + device + '_nworker' + str(num_worker)\

+ '_batchsize' + str(args.batch_size) + '_outdim' + str(args.output_dim) + '.json'

- mx.profiler.profiler_set_config(mode='all', filename=name)

- mx.profiler.profiler_set_state('run')

+ mx.profiler.set_config(profile_all=True, filename=name)

+ mx.profiler.set_state('run')

logging.debug('start training ...')

start = time.time()

@@ -301,7 +301,7 @@ def row_sparse_pull(kv, key, data, slices, weight_array, priority):

logging.info('|cpu/{} cores| {} | {} | {} |'.format(str(num_cores), str(num_worker), str(average_cost_epoch), rank))

data_iter.reset()

if profiler:

- mx.profiler.profiler_set_state('stop')

+ mx.profiler.set_state('stop')

end = time.time()

time_cost = end - start

logging.info('num_worker = {}, rank = {}, time cost = {}'.format(str(num_worker), str(rank), str(time_cost)))

diff --git a/benchmark/python/sparse/updater.py b/benchmark/python/sparse/updater.py

new file mode 100644

index 00000000000..72f2bfd04a2

--- /dev/null

+++ b/benchmark/python/sparse/updater.py

@@ -0,0 +1,78 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+import time

+import mxnet as mx

+from mxnet.ndarray.sparse import adam_update

+import numpy as np

+import argparse

+

+mx.random.seed(0)

+np.random.seed(0)

+

+parser = argparse.ArgumentParser(description='Benchmark adam updater')

+parser.add_argument('--dim-in', type=int, default=240000, help='weight.shape[0]')

+parser.add_argument('--dim-out', type=int, default=512, help='weight.shape[1]')

+parser.add_argument('--nnr', type=int, default=5000, help='grad.indices.shape[0]')

+parser.add_argument('--repeat', type=int, default=1000, help='num repeat')

+parser.add_argument('--dense-grad', action='store_true',

+ help='if set to true, both gradient and weight are dense.')

+parser.add_argument('--dense-state', action='store_true',

+ help='if set to true, states are dense, indicating standard update')

+parser.add_argument('--cpu', action='store_true')

+

+

+args = parser.parse_args()

+dim_in = args.dim_in

+dim_out = args.dim_out

+nnr = args.nnr

+ctx = mx.cpu() if args.cpu else mx.gpu()

+

+ones = mx.nd.ones((dim_in, dim_out), ctx=ctx)

+

+if not args.dense_grad:

+ weight = ones.tostype('row_sparse')

+ indices = np.arange(dim_in)

+ np.random.shuffle(indices)

+ indices = np.unique(indices[:nnr])

+ indices = mx.nd.array(indices, ctx=ctx)

+ grad = mx.nd.sparse.retain(weight, indices)

+else:

+ weight = ones.copy()

+ grad = ones.copy()

+

+if args.dense_state:

+ mean = ones.copy()

+else:

+ mean = ones.tostype('row_sparse')

+

+var = mean.copy()

+

+# warmup

+for i in range(10):

+ adam_update(weight, grad, mean, var, out=weight, lr=1, wd=0, beta1=0.9,

+ beta2=0.99, rescale_grad=0.5, epsilon=1e-8)

+weight.wait_to_read()

+

+# measure speed

+a = time.time()

+for i in range(args.repeat):

+ adam_update(weight, grad, mean, var, out=weight, lr=1, wd=0, beta1=0.9,

+ beta2=0.99, rescale_grad=0.5, epsilon=1e-8)

+weight.wait_to_read()

+b = time.time()

+print(b - a)

diff --git a/ci/docker/Dockerfile.build.armv6 b/ci/docker/Dockerfile.build.armv6

new file mode 100755

index 00000000000..471846243fc

--- /dev/null

+++ b/ci/docker/Dockerfile.build.armv6

@@ -0,0 +1,40 @@

+# -*- mode: dockerfile -*-

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+#

+# Dockerfile to build MXNet for ARMv6

+

+FROM dockcross/linux-armv6

+

+ENV ARCH armv6l

+ENV CC /usr/bin/arm-linux-gnueabihf-gcc

+ENV CXX /usr/bin/arm-linux-gnueabihf-g++

+ENV FC /usr/bin/arm-linux-gnueabihf-gfortran

+ENV HOSTCC gcc

+ENV TARGET ARMV6

+

+WORKDIR /work/deps

+

+# Build OpenBLAS

+ADD https://api.github.com/repos/xianyi/OpenBLAS/git/refs/tags/v0.2.9 openblas_version.json

+RUN git clone --recursive -b v0.2.9 https://github.com/xianyi/OpenBLAS.git && \

+ cd OpenBLAS && \

+ make -j$(nproc) && \

+ make PREFIX=$CROSS_ROOT install

+

+COPY runtime_functions.sh /work/

+WORKDIR /work/mxnet

diff --git a/ci/docker/Dockerfile.build.centos7_cpu b/ci/docker/Dockerfile.build.centos7_cpu

index 665f7ddd99a..a44d6464ee3 100755

--- a/ci/docker/Dockerfile.build.centos7_cpu

+++ b/ci/docker/Dockerfile.build.centos7_cpu

@@ -28,6 +28,8 @@ COPY install/centos7_core.sh /work/

RUN /work/centos7_core.sh

COPY install/centos7_python.sh /work/

RUN /work/centos7_python.sh

+COPY install/ubuntu_mklml.sh /work/

+RUN /work/ubuntu_mklml.sh

COPY install/centos7_adduser.sh /work/

RUN /work/centos7_adduser.sh

diff --git a/ci/docker/Dockerfile.build.centos7_gpu b/ci/docker/Dockerfile.build.centos7_gpu

index 3d7482161c4..4dcf5bf08ca 100755

--- a/ci/docker/Dockerfile.build.centos7_gpu

+++ b/ci/docker/Dockerfile.build.centos7_gpu

@@ -18,7 +18,7 @@

#

# Dockerfile to build and run MXNet on CentOS 7 for GPU

-FROM nvidia/cuda:8.0-cudnn5-devel-centos7

+FROM nvidia/cuda:9.1-cudnn7-devel-centos7

ARG USER_ID=0

diff --git a/ci/docker/Dockerfile.build.ubuntu_build_cuda b/ci/docker/Dockerfile.build.ubuntu_build_cuda

index 18c8af7deb2..8bafed43b83 100755

--- a/ci/docker/Dockerfile.build.ubuntu_build_cuda

+++ b/ci/docker/Dockerfile.build.ubuntu_build_cuda

@@ -21,7 +21,7 @@

# package generation, requiring the actual CUDA library to be

# present

-FROM nvidia/cuda:8.0-cudnn5-devel

+FROM nvidia/cuda:9.1-cudnn7-devel

ARG USER_ID=0

@@ -37,8 +37,6 @@ COPY install/ubuntu_r.sh /work/

RUN /work/ubuntu_r.sh

COPY install/ubuntu_perl.sh /work/

RUN /work/ubuntu_perl.sh

-COPY install/ubuntu_lint.sh /work/

-RUN /work/ubuntu_lint.sh

COPY install/ubuntu_clang.sh /work/

RUN /work/ubuntu_clang.sh

COPY install/ubuntu_mklml.sh /work/

@@ -54,4 +52,4 @@ RUN /work/ubuntu_nvidia.sh

COPY runtime_functions.sh /work/

WORKDIR /work/mxnet

-ENV LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/usr/local/lib

\ No newline at end of file

+ENV LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/usr/local/lib

diff --git a/ci/docker/Dockerfile.build.ubuntu_cpu b/ci/docker/Dockerfile.build.ubuntu_cpu

index d652a0d89c5..f706f88461f 100755

--- a/ci/docker/Dockerfile.build.ubuntu_cpu

+++ b/ci/docker/Dockerfile.build.ubuntu_cpu

@@ -34,8 +34,6 @@ COPY install/ubuntu_r.sh /work/

RUN /work/ubuntu_r.sh

COPY install/ubuntu_perl.sh /work/

RUN /work/ubuntu_perl.sh

-COPY install/ubuntu_lint.sh /work/

-RUN /work/ubuntu_lint.sh

COPY install/ubuntu_clang.sh /work/

RUN /work/ubuntu_clang.sh

COPY install/ubuntu_mklml.sh /work/

diff --git a/ci/docker/Dockerfile.build.ubuntu_gpu b/ci/docker/Dockerfile.build.ubuntu_gpu

index 826836c78cf..625d57009c9 100755

--- a/ci/docker/Dockerfile.build.ubuntu_gpu

+++ b/ci/docker/Dockerfile.build.ubuntu_gpu

@@ -18,7 +18,7 @@

#

# Dockerfile to run MXNet on Ubuntu 16.04 for CPU

-FROM nvidia/cuda:8.0-cudnn5-devel

+FROM nvidia/cuda:9.1-cudnn7-devel

ARG USER_ID=0

@@ -34,8 +34,6 @@ COPY install/ubuntu_r.sh /work/

RUN /work/ubuntu_r.sh

COPY install/ubuntu_perl.sh /work/

RUN /work/ubuntu_perl.sh

-COPY install/ubuntu_lint.sh /work/

-RUN /work/ubuntu_lint.sh

COPY install/ubuntu_clang.sh /work/

RUN /work/ubuntu_clang.sh

COPY install/ubuntu_mklml.sh /work/

diff --git a/ci/docker/install/centos7_core.sh b/ci/docker/install/centos7_core.sh

index 1688b81ba89..1d7e120d6ae 100755

--- a/ci/docker/install/centos7_core.sh

+++ b/ci/docker/install/centos7_core.sh

@@ -31,9 +31,9 @@ yum -y install openblas-devel

yum -y install lapack-devel

yum -y install opencv-devel

yum -y install openssl-devel

-yum -y install gcc-c++

+yum -y install gcc-c++-4.8.*

yum -y install make

yum -y install cmake

yum -y install wget

yum -y install unzip

-yum -y install ninja-build

\ No newline at end of file

+yum -y install ninja-build

diff --git a/ci/docker/install/ubuntu_core.sh b/ci/docker/install/ubuntu_core.sh

index e78f29ae0a9..dc9b091f205 100755

--- a/ci/docker/install/ubuntu_core.sh

+++ b/ci/docker/install/ubuntu_core.sh

@@ -34,9 +34,7 @@ apt-get install -y \

unzip \

sudo \

software-properties-common \

- ninja-build \

- python-pip

+ ninja-build

# Link Openblas to Cblas as this link does not exist on ubuntu16.04

ln -s /usr/lib/libopenblas.so /usr/lib/libcblas.so

-pip install cpplint==1.3.0 pylint==1.8.2

\ No newline at end of file

diff --git a/ci/docker/install/ubuntu_nvidia.sh b/ci/docker/install/ubuntu_nvidia.sh

index bb1c73eec76..e6d7926dadb 100755

--- a/ci/docker/install/ubuntu_nvidia.sh

+++ b/ci/docker/install/ubuntu_nvidia.sh

@@ -17,13 +17,10 @@

# specific language governing permissions and limitations

# under the License.

-# build and install are separated so changes to build don't invalidate

-# the whole docker cache for the image

-

set -ex

apt install -y software-properties-common

add-apt-repository -y ppa:graphics-drivers

# Retrieve ppa:graphics-drivers and install nvidia-drivers.

# Note: DEBIAN_FRONTEND required to skip the interactive setup steps

apt update

-DEBIAN_FRONTEND=noninteractive apt install -y --no-install-recommends cuda-8-0

\ No newline at end of file

+DEBIAN_FRONTEND=noninteractive apt install -y --no-install-recommends cuda-9-1

diff --git a/ci/docker/install/ubuntu_onnx.sh b/ci/docker/install/ubuntu_onnx.sh

index 72613cd5788..07acba01908 100755

--- a/ci/docker/install/ubuntu_onnx.sh

+++ b/ci/docker/install/ubuntu_onnx.sh

@@ -30,5 +30,5 @@ echo "Installing libprotobuf-dev and protobuf-compiler ..."

apt-get install -y libprotobuf-dev protobuf-compiler

echo "Installing pytest, pytest-cov, protobuf, Pillow, ONNX and tabulate ..."

-pip2 install pytest==3.4.0 pytest-cov==2.5.1 protobuf==3.0.0 onnx==1.0.1 Pillow==5.0.0 tabulate==0.7.5

-pip3 install pytest==3.4.0 pytest-cov==2.5.1 protobuf==3.0.0 onnx==1.0.1 Pillow==5.0.0 tabulate==0.7.5

+pip2 install pytest==3.4.0 pytest-cov==2.5.1 protobuf==3.5.2 onnx==1.1.1 Pillow==5.0.0 tabulate==0.7.5

+pip3 install pytest==3.4.0 pytest-cov==2.5.1 protobuf==3.5.2 onnx==1.1.1 Pillow==5.0.0 tabulate==0.7.5

diff --git a/ci/docker/install/ubuntu_python.sh b/ci/docker/install/ubuntu_python.sh

index b19448eecab..b906b55f750 100755

--- a/ci/docker/install/ubuntu_python.sh

+++ b/ci/docker/install/ubuntu_python.sh

@@ -29,5 +29,5 @@ wget -nv https://bootstrap.pypa.io/get-pip.py

python3 get-pip.py

python2 get-pip.py

-pip2 install nose pylint numpy nose-timer requests h5py scipy

-pip3 install nose pylint numpy nose-timer requests h5py scipy

\ No newline at end of file

+pip2 install nose cpplint==1.3.0 pylint==1.8.3 numpy nose-timer requests h5py scipy

+pip3 install nose cpplint==1.3.0 pylint==1.8.3 numpy nose-timer requests h5py scipy

diff --git a/ci/docker/runtime_functions.sh b/ci/docker/runtime_functions.sh

index 39809f28127..f35de6bef0b 100755

--- a/ci/docker/runtime_functions.sh

+++ b/ci/docker/runtime_functions.sh

@@ -73,6 +73,34 @@ build_jetson() {

popd

}

+build_armv6() {

+ set -ex

+ pushd .

+ cd /work/build

+

+ # Lapack functionality will be included and statically linked to openblas.

+ # But USE_LAPACK needs to be set to OFF, otherwise the main CMakeLists.txt

+ # file tries to add -llapack. Lapack functionality though, requires -lgfortran

+ # to be linked additionally.

+

+ cmake \

+ -DCMAKE_TOOLCHAIN_FILE=$CROSS_ROOT/Toolchain.cmake \

+ -DUSE_CUDA=OFF \

+ -DUSE_OPENCV=OFF \

+ -DUSE_SIGNAL_HANDLER=ON \

+ -DCMAKE_BUILD_TYPE=RelWithDebInfo \

+ -DUSE_MKL_IF_AVAILABLE=OFF \

+ -DUSE_LAPACK=OFF \

+ -Dmxnet_LINKER_LIBS=-lgfortran \

+ -G Ninja /work/mxnet

+ ninja

+ export MXNET_LIBRARY_PATH=`pwd`/libmxnet.so

+ cd /work/mxnet/python

+ python setup.py bdist_wheel --universal

+ cp dist/*.whl /work/build

+ popd

+}

+

build_armv7() {

set -ex

pushd .

@@ -156,6 +184,19 @@ build_centos7_cpu() {

-j$(nproc)

}

+build_centos7_mkldnn() {

+ set -ex

+ cd /work/mxnet

+ make \

+ DEV=1 \

+ USE_LAPACK=1 \

+ USE_LAPACK_PATH=/usr/lib64/liblapack.so \

+ USE_PROFILER=1 \

+ USE_MKLDNN=1 \

+ USE_BLAS=openblas \

+ -j$(nproc)

+}

+

build_centos7_gpu() {

set -ex

cd /work/mxnet

@@ -256,7 +297,7 @@ build_ubuntu_gpu_mkldnn() {

-j$(nproc)

}

-build_ubuntu_gpu_cuda8_cudnn5() {

+build_ubuntu_gpu_cuda91_cudnn7() {

set -ex

make \

DEV=1 \

@@ -333,6 +374,7 @@ unittest_ubuntu_python2_cpu() {

export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

nosetests-2.7 --verbose tests/python/unittest

nosetests-2.7 --verbose tests/python/train

+ nosetests-2.7 --verbose tests/python/quantization

}

unittest_ubuntu_python3_cpu() {

@@ -343,6 +385,7 @@ unittest_ubuntu_python3_cpu() {

#export MXNET_MKLDNN_DEBUG=1 # Ignored if not present

export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

nosetests-3.4 --verbose tests/python/unittest

+ nosetests-3.4 --verbose tests/python/quantization

}

unittest_ubuntu_python2_gpu() {

@@ -365,6 +408,30 @@ unittest_ubuntu_python3_gpu() {

nosetests-3.4 --verbose tests/python/gpu

}

+# quantization gpu currently only runs on P3 instances

+# need to separte it from unittest_ubuntu_python2_gpu()

+unittest_ubuntu_python2_quantization_gpu() {

+ set -ex

+ export PYTHONPATH=./python/

+ # MXNET_MKLDNN_DEBUG is buggy and produces false positives

+ # https://github.com/apache/incubator-mxnet/issues/10026

+ #export MXNET_MKLDNN_DEBUG=1 # Ignored if not present

+ export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

+ nosetests-2.7 --verbose tests/python/quantization_gpu

+}

+

+# quantization gpu currently only runs on P3 instances

+# need to separte it from unittest_ubuntu_python3_gpu()

+unittest_ubuntu_python3_quantization_gpu() {

+ set -ex

+ export PYTHONPATH=./python/

+ # MXNET_MKLDNN_DEBUG is buggy and produces false positives

+ # https://github.com/apache/incubator-mxnet/issues/10026

+ #export MXNET_MKLDNN_DEBUG=1 # Ignored if not present

+ export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

+ nosetests-3.4 --verbose tests/python/quantization_gpu

+}

+

unittest_ubuntu_cpu_scala() {

set -ex

make scalapkg USE_BLAS=openblas

diff --git a/cpp-package/example/Makefile b/cpp-package/example/Makefile

index e1b341794c4..7c1216d1dbd 100644

--- a/cpp-package/example/Makefile

+++ b/cpp-package/example/Makefile

@@ -18,7 +18,7 @@

CPPEX_SRC = $(wildcard *.cpp)

CPPEX_EXE = $(patsubst %.cpp, %, $(CPPEX_SRC))

-CFLAGS += -I../../include -I../../nnvm/include -I../../dmlc-core/include

+CFLAGS += -I../../include -I../../3rdparty/nnvm/include -I../../3rdparty/dmlc-core/include

CPPEX_CFLAGS += -I../include

CPPEX_EXTRA_LDFLAGS := -L. -lmxnet

diff --git a/docs/api/python/autograd/autograd.md b/docs/api/python/autograd/autograd.md

index 410d6a94e26..2f9f5710b12 100644

--- a/docs/api/python/autograd/autograd.md

+++ b/docs/api/python/autograd/autograd.md

@@ -39,7 +39,7 @@ and do some computation. Finally, call `backward()` on the result:

## Train mode and Predict Mode

Some operators (Dropout, BatchNorm, etc) behave differently in

-when training and when making predictions.

+training and making predictions.

This can be controlled with `train_mode` and `predict_mode` scope.

By default, MXNet is in `predict_mode`.

@@ -50,9 +50,9 @@ call record with `train_mode=False` and then call `backward(train_mode=False)`

Although training usually coincides with recording,

this isn't always the case.

-To control *training* vs *predict_mode* without changing

+To control *training* vs. *predict_mode* without changing

*recording* vs *not recording*,

-Use a `with autograd.train_mode():`

+use a `with autograd.train_mode():`

or `with autograd.predict_mode():` block.

Detailed tutorials are available in Part 1 of

@@ -60,9 +60,6 @@ Detailed tutorials are available in Part 1 of

-

-

-

<script type="text/javascript" src='../../_static/js/auto_module_index.js'></script>

## Autograd

diff --git a/docs/api/python/gluon/data.md b/docs/api/python/gluon/data.md

index 28433c0ae36..3c6bb02e47c 100644

--- a/docs/api/python/gluon/data.md

+++ b/docs/api/python/gluon/data.md

@@ -69,6 +69,51 @@ In the rest of this document, we list routines provided by the `gluon.data` pack

ImageFolderDataset

```

+#### Vision Transforms

+

+```eval_rst

+.. currentmodule:: mxnet.gluon.data.vision.transforms

+```

+

+Transforms can be used to augment input data during training. You

+can compose multiple transforms sequentially, for example:

+

+```python

+from mxnet.gluon.data.vision import MNIST, transforms

+from mxnet import gluon

+transform = transforms.Compose([

+ transforms.Resize(300),

+ transforms.RandomResizedCrop(224),

+ transforms.RandomBrightness(0.1),

+ transforms.ToTensor(),

+ transforms.Normalize(0, 1)])

+data = MNIST(train=True).transform_first(transform)

+data_loader = gluon.data.DataLoader(data, batch_size=32, num_workers=1)

+for data, label in data_loader:

+ # do something with data and label

+```

+

+```eval_rst

+.. autosummary::

+ :nosignatures:

+

+ Compose

+ Cast

+ ToTensor

+ Normalize

+ RandomResizedCrop

+ CenterCrop

+ Resize

+ RandomFlipLeftRight

+ RandomFlipTopBottom

+ RandomBrightness

+ RandomContrast

+ RandomSaturation

+ RandomHue

+ RandomColorJitter

+ RandomLighting

+```

+

## API Reference

<script type="text/javascript" src='../../../_static/js/auto_module_index.js'></script>

@@ -84,6 +129,9 @@ In the rest of this document, we list routines provided by the `gluon.data` pack

.. automodule:: mxnet.gluon.data.vision.datasets

:members:

+

+.. automodule:: mxnet.gluon.data.vision.transforms

+ :members:

```

diff --git a/docs/api/python/image/image.md b/docs/api/python/image/image.md

index 1a1d0fd1110..a3e2a1697d3 100644

--- a/docs/api/python/image/image.md

+++ b/docs/api/python/image/image.md

@@ -43,7 +43,7 @@ Iterators support loading image from binary `Record IO` and raw image files.

... print(d.shape)

>>> # we can apply lots of augmentations as well

>>> data_iter = mx.image.ImageIter(4, (3, 224, 224), path_imglist='data/custom.lst',

- rand_crop=resize=True, rand_mirror=True, mean=True,

+ rand_crop=True, rand_resize=True, rand_mirror=True, mean=True,

brightness=0.1, contrast=0.1, saturation=0.1, hue=0.1,

pca_noise=0.1, rand_gray=0.05)

>>> data = data_iter.next()

diff --git a/docs/api/python/ndarray/random.md b/docs/api/python/ndarray/random.md

index ae9e69f758f..4341a3ce2cd 100644

--- a/docs/api/python/ndarray/random.md

+++ b/docs/api/python/ndarray/random.md

@@ -35,6 +35,8 @@ In the rest of this document, we list routines provided by the `ndarray.random`

normal

poisson

uniform

+ multinomial

+ shuffle

mxnet.random.seed

```

diff --git a/docs/api/python/symbol/random.md b/docs/api/python/symbol/random.md

index a3492f6f840..22c686ff2fd 100644

--- a/docs/api/python/symbol/random.md

+++ b/docs/api/python/symbol/random.md

@@ -35,6 +35,8 @@ In the rest of this document, we list routines provided by the `symbol.random` p

normal

poisson

uniform

+ multinomial

+ shuffle

mxnet.random.seed

```

diff --git a/docs/build_version_doc/build_doc.sh b/docs/build_version_doc/build_doc.sh

index 427f40c592a..b8a6974c7c9 100755

--- a/docs/build_version_doc/build_doc.sh

+++ b/docs/build_version_doc/build_doc.sh

@@ -83,10 +83,6 @@ then

git checkout tags/$latest_tag

make docs || exit 1

- tests/ci_build/ci_build.sh doc python docs/build_version_doc/AddVersion.py --file_path "docs/_build/html/" --current_version "$latest_tag"

- tests/ci_build/ci_build.sh doc python docs/build_version_doc/AddPackageLink.py \

- --file_path "docs/_build/html/install/index.html" --current_version "$latest_tag"

-

# Update the tag_list (tag.txt).

###### content of tag.txt########

# <latest_tag_goes_here>

@@ -97,6 +93,10 @@ then

echo "++++ Adding $latest_tag to the top of the $tag_list_file ++++"

echo -e "$latest_tag\n$(cat $tag_list_file)" > "$tag_list_file"

cat $tag_list_file

+

+ tests/ci_build/ci_build.sh doc python docs/build_version_doc/AddVersion.py --file_path "docs/_build/html/" --current_version "$latest_tag"

+ tests/ci_build/ci_build.sh doc python docs/build_version_doc/AddPackageLink.py \

+ --file_path "docs/_build/html/install/index.html" --current_version "$latest_tag"

# The following block does the following:

# a. copies the static html that was built from new tag to a local sandbox folder.

diff --git a/docs/faq/env_var.md b/docs/faq/env_var.md

index af698ca639a..f29301dec7a 100644

--- a/docs/faq/env_var.md

+++ b/docs/faq/env_var.md

@@ -73,7 +73,11 @@ export MXNET_GPU_WORKER_NTHREADS=3

* MXNET_KVSTORE_REDUCTION_NTHREADS

- Values: Int ```(default=4)```

- - The number of CPU threads used for summing big arrays.

+ - The number of CPU threads used for summing up big arrays on a single machine

+ - This will also be used for `dist_sync` kvstore to sum up arrays from different contexts on a single machine.

+ - This does not affect summing up of arrays from different machines on servers.

+ - Summing up of arrays for `dist_sync_device` kvstore is also unaffected as that happens on GPUs.

+

* MXNET_KVSTORE_BIGARRAY_BOUND

- Values: Int ```(default=1000000)```

- The minimum size of a "big array".

@@ -110,9 +114,13 @@ When USE_PROFILER is enabled in Makefile or CMake, the following environments ca

## Other Environment Variables

* MXNET_CUDNN_AUTOTUNE_DEFAULT

- - Values: 0(false) or 1(true) ```(default=1)```

- - The default value of cudnn auto tunning for convolution layers.

- - Auto tuning is turned off by default. For benchmarking, set this to 1 to turn it on by default.

+ - Values: 0, 1, or 2 ```(default=1)```

+ - The default value of cudnn auto tuning for convolution layers.

+ - Value of 0 means there is no auto tuning to pick the convolution algo

+ - Performance tests are run to pick the convolution algo when value is 1 or 2

+ - Value of 1 chooses the best algo in a limited workspace

+ - Value of 2 chooses the fastest algo whose memory requirements may be larger than the default workspace threshold

+

* MXNET_GLUON_REPO

- Values: String ```(default='https://apache-mxnet.s3-accelerate.dualstack.amazonaws.com/'```

diff --git a/docs/faq/index.md b/docs/faq/index.md

index 099cd509b14..098d37f5fc0 100644

--- a/docs/faq/index.md

+++ b/docs/faq/index.md

@@ -56,6 +56,8 @@ and full working examples, visit the [tutorials section](../tutorials/index.md).

* [How do I create new operators in MXNet?](http://mxnet.io/faq/new_op.html)

+* [How do I implement sparse operators in MXNet backend?](https://cwiki.apache.org/confluence/display/MXNET/A+Guide+to+Implementing+Sparse+Operators+in+MXNet+Backend)

+

* [How do I contribute an example or tutorial?](https://github.com/apache/incubator-mxnet/tree/master/example#contributing)

* [How do I set MXNet's environmental variables?](http://mxnet.io/faq/env_var.html)

diff --git a/docs/faq/perf.md b/docs/faq/perf.md

index e021f1e9a21..b5d73f69a03 100644

--- a/docs/faq/perf.md

+++ b/docs/faq/perf.md

@@ -228,12 +228,12 @@ See [example/profiler](https://github.com/dmlc/mxnet/tree/master/example/profile

for complete examples of how to use the profiler in code, but briefly, the Python code looks like:

```

- mx.profiler.profiler_set_config(mode='all', filename='profile_output.json')

- mx.profiler.profiler_set_state('run')

+ mx.profiler.set_config(profile_all=True, filename='profile_output.json')

+ mx.profiler.set_state('run')

# Code to be profiled goes here...

- mx.profiler.profiler_set_state('stop')

+ mx.profiler.set_state('stop')

```

The `mode` parameter can be set to

diff --git a/docs/install/index.md b/docs/install/index.md

index e4767618e65..d9d78dd3693 100644

--- a/docs/install/index.md

+++ b/docs/install/index.md

@@ -994,11 +994,11 @@ Refer to [#8671](https://github.com/apache/incubator-mxnet/issues/8671) for stat

<br/>

To build and install MXNet yourself, you need the following dependencies. Install the required dependencies:

-1. If [Microsoft Visual Studio 2015](https://www.visualstudio.com/downloads/) is not already installed, download and install it. You can download and install the free community edition.

+1. If [Microsoft Visual Studio 2015](https://www.visualstudio.com/vs/older-downloads/) is not already installed, download and install it. You can download and install the free community edition.

2. Download and install [CMake](https://cmake.org/) if it is not already installed.

3. Download and install [OpenCV](http://sourceforge.net/projects/opencvlibrary/files/opencv-win/3.0.0/opencv-3.0.0.exe/download).

4. Unzip the OpenCV package.

-5. Set the environment variable ```OpenCV_DIR``` to point to the ```OpenCV build directory```.

+5. Set the environment variable ```OpenCV_DIR``` to point to the ```OpenCV build directory``` (```C:\opencv\build\x64\vc14``` for example). Also, you need to add the OpenCV bin directory (```C:\opencv\build\x64\vc14\bin``` for example) to the ``PATH`` variable.

6. If you don't have the Intel Math Kernel Library (MKL) installed, download and install [OpenBlas](http://sourceforge.net/projects/openblas/files/v0.2.14/).

7. Set the environment variable ```OpenBLAS_HOME``` to point to the ```OpenBLAS``` directory that contains the ```include``` and ```lib``` directories. Typically, you can find the directory in ```C:\Program files (x86)\OpenBLAS\```.

8. Download and install [CUDA](https://developer.nvidia.com/cuda-downloads?target_os=Windows&target_arch=x86_64) and [cuDNN](https://developer.nvidia.com/cudnn). To get access to the download link, register as an NVIDIA community user.

@@ -1213,7 +1213,7 @@ Edit the Makefile to install the MXNet with CUDA bindings to leverage the GPU on

echo "USE_CUDNN=1" >> config.mk

```

-Edit the Mshadow Makefile to ensure MXNet builds with Pascal's hardware level low precision acceleration by editing mshadow/make/mshadow.mk and adding the following after line 122:

+Edit the Mshadow Makefile to ensure MXNet builds with Pascal's hardware level low precision acceleration by editing 3rdparty/mshadow/make/mshadow.mk and adding the following after line 122:

```bash

MSHADOW_CFLAGS += -DMSHADOW_USE_PASCAL=1

```

diff --git a/docs/install/osx_setup.md b/docs/install/osx_setup.md

index c1fa0fcd7f1..4d979b3dccf 100644

--- a/docs/install/osx_setup.md

+++ b/docs/install/osx_setup.md

@@ -1,4 +1,4 @@

-# Installing MXNet froum source on OS X (Mac)

+# Installing MXNet from source on OS X (Mac)

**NOTE:** For prebuild MXNet with Python installation, please refer to the [new install guide](http://mxnet.io/install/index.html).

@@ -65,8 +65,8 @@ Install the dependencies, required for MXNet, with the following commands:

brew install openblas

brew tap homebrew/core

brew install opencv

- # For getting pip

- brew install python

+ # Get pip

+ easy_install pip

# For visualization of network graphs

pip install graphviz

# Jupyter notebook

@@ -167,6 +167,12 @@ You might want to add this command to your ```~/.bashrc``` file. If you do, you

For more details about installing and using MXNet with Julia, see the [MXNet Julia documentation](http://dmlc.ml/MXNet.jl/latest/user-guide/install/).

## Install the MXNet Package for Scala

+

+If you haven't installed maven yet, you need to install it now (required by the makefile):

+```bash

+ brew install maven

+```

+

Before you build MXNet for Scala from source code, you must complete [building the shared library](#build-the-shared-library). After you build the shared library, run the following command from the MXNet source root directory to build the MXNet Scala package:

```bash

diff --git a/docs/install/windows_setup.md b/docs/install/windows_setup.md

index 598a12fc4cc..09a39e2c469 100755

--- a/docs/install/windows_setup.md

+++ b/docs/install/windows_setup.md

@@ -21,11 +21,11 @@ This produces a library called ```libmxnet.dll```.

To build and install MXNet yourself, you need the following dependencies. Install the required dependencies:

-1. If [Microsoft Visual Studio 2015](https://www.visualstudio.com/downloads/) is not already installed, download and install it. You can download and install the free community edition.

+1. If [Microsoft Visual Studio 2015](https://www.visualstudio.com/vs/older-downloads/) is not already installed, download and install it. You can download and install the free community edition.

2. Download and Install [CMake](https://cmake.org/) if it is not already installed.

3. Download and install [OpenCV](http://sourceforge.net/projects/opencvlibrary/files/opencv-win/3.0.0/opencv-3.0.0.exe/download).

4. Unzip the OpenCV package.

-5. Set the environment variable ```OpenCV_DIR``` to point to the ```OpenCV build directory``` (```c:\utils\opencv\build``` for example).

+5. Set the environment variable ```OpenCV_DIR``` to point to the ```OpenCV build directory``` (```C:\opencv\build\x64\vc14``` for example). Also, you need to add the OpenCV bin directory (```C:\opencv\build\x64\vc14\bin``` for example) to the ``PATH`` variable.

6. If you have Intel Math Kernel Library (MKL) installed, set ```MKL_ROOT``` to point to ```MKL``` directory that contains the ```include``` and ```lib```. Typically, you can find the directory in

```C:\Program Files (x86)\IntelSWTools\compilers_and_libraries_2018\windows\mkl```.

7. If you don't have the Intel Math Kernel Library (MKL) installed, download and install [OpenBlas](http://sourceforge.net/projects/openblas/files/v0.2.14/).

diff --git a/docs/mxdoc.py b/docs/mxdoc.py

index caf135680dd..7f567f0b8d0 100644

--- a/docs/mxdoc.py

+++ b/docs/mxdoc.py

@@ -80,9 +80,9 @@ def build_r_docs(app):

def build_scala_docs(app):

"""build scala doc and then move the outdir"""

- scala_path = app.builder.srcdir + '/../scala-package/core/src/main/scala/ml/dmlc/mxnet'

+ scala_path = app.builder.srcdir + '/../scala-package'

# scaldoc fails on some apis, so exit 0 to pass the check

- _run_cmd('cd ' + scala_path + '; scaladoc `find . | grep .*scala`; exit 0')

+ _run_cmd('cd ' + scala_path + '; scaladoc `find . -type f -name "*.scala" | egrep \"\/core|\/infer\" | egrep -v \"Suite\"`; exit 0')

dest_path = app.builder.outdir + '/api/scala/docs'

_run_cmd('rm -rf ' + dest_path)

_run_cmd('mkdir -p ' + dest_path)

@@ -265,9 +265,11 @@ def _get_python_block_output(src, global_dict, local_dict):

ret_status = False

return (ret_status, s.getvalue()+err)

-def _get_jupyter_notebook(lang, lines):

+def _get_jupyter_notebook(lang, all_lines):

cells = []

- for in_code, blk_lang, lines in _get_blocks(lines):

+ # Exclude lines containing <!--notebook-skip-line-->

+ filtered_lines = [line for line in all_lines if "<!--notebook-skip-line-->" not in line]

+ for in_code, blk_lang, lines in _get_blocks(filtered_lines):

if blk_lang != lang:

in_code = False

src = '\n'.join(lines)

diff --git a/docs/tutorials/index.md b/docs/tutorials/index.md

index 3eff299d778..8a597e95bfb 100644

--- a/docs/tutorials/index.md

+++ b/docs/tutorials/index.md

@@ -119,6 +119,8 @@ The Gluon and Module tutorials are in Python, but you can also find a variety of

- [Simple autograd example](http://mxnet.incubator.apache.org/tutorials/gluon/autograd.html)

+- [Inference using an ONNX model](http://mxnet.incubator.apache.org/tutorials/onnx/inference_on_onnx_model.html)

+

</div> <!--end of applications-->

</div> <!--end of gluon-->

diff --git a/docs/tutorials/onnx/inference_on_onnx_model.md b/docs/tutorials/onnx/inference_on_onnx_model.md

new file mode 100644

index 00000000000..182a2ae74cd

--- /dev/null

+++ b/docs/tutorials/onnx/inference_on_onnx_model.md

@@ -0,0 +1,273 @@

+

+# Running inference on MXNet/Gluon from an ONNX model

+

+[Open Neural Network Exchange (ONNX)](https://github.com/onnx/onnx) provides an open source format for AI models. It defines an extensible computation graph model, as well as definitions of built-in operators and standard data types.

+

+In this tutorial we will:

+

+- learn how to load a pre-trained .onnx model file into MXNet/Gluon

+- learn how to test this model using the sample input/output

+- learn how to test the model on custom images

+

+## Pre-requisite

+

+To run the tutorial you will need to have installed the following python modules:

+- [MXNet](http://mxnet.incubator.apache.org/install/index.html)

+- [onnx](https://github.com/onnx/onnx) (follow the install guide)

+- [onnx-mxnet](https://github.com/onnx/onnx-mxnet)

+- matplotlib

+- wget

+

+

+```python

+import numpy as np

+import mxnet as mx

+from mxnet.contrib import onnx as onnx_mxnet

+from mxnet import gluon, nd

+%matplotlib inline

+import matplotlib.pyplot as plt

+import tarfile, os

+import wget

+import json

+```

+

+### Downloading supporting files

+These are images and a vizualisation script

+

+

+```python

+image_folder = "images"

+utils_file = "utils.py" # contain utils function to plot nice visualization

+image_net_labels_file = "image_net_labels.json"

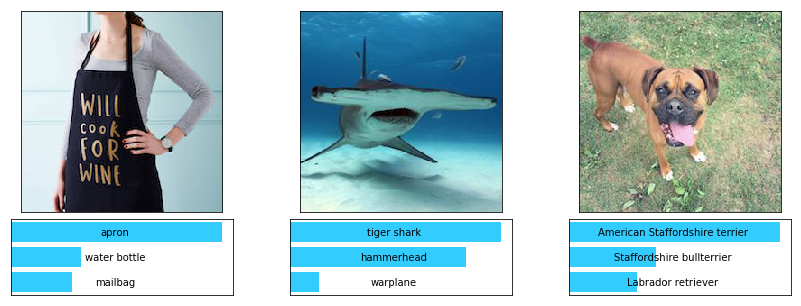

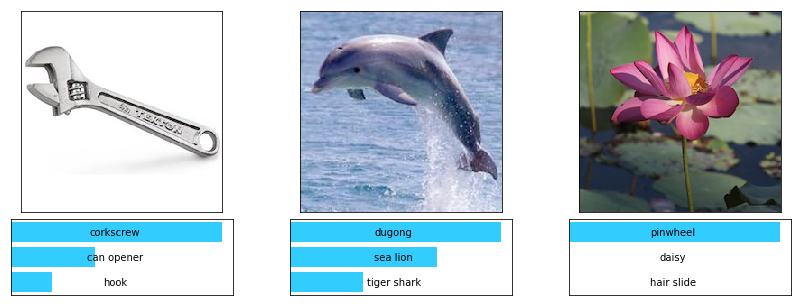

+images = ['apron', 'hammerheadshark', 'dog', 'wrench', 'dolphin', 'lotus']

+base_url = "https://raw.githubusercontent.com/dmlc/web-data/master/mxnet/doc/tutorials/onnx/{}?raw=true"

+

+if not os.path.isdir(image_folder):

+ os.makedirs(image_folder)

+ for image in images:

+ wget.download(base_url.format("{}/{}.jpg".format(image_folder, image)), image_folder)

+if not os.path.isfile(utils_file):

+ wget.download(base_url.format(utils_file))

+if not os.path.isfile(image_net_labels_file):

+ wget.download(base_url.format(image_net_labels_file))

+```

+

+

+```python

+from utils import *

+```

+

+## Downloading a model from the ONNX model zoo

+

+We download a pre-trained model, in our case the [vgg16](https://arxiv.org/abs/1409.1556) model, trained on [ImageNet](http://www.image-net.org/) from the [ONNX model zoo](https://github.com/onnx/models). The model comes packaged in an archive `tar.gz` file containing an `model.onnx` model file and some sample input/output data.

+

+

+```python

+base_url = "https://s3.amazonaws.com/download.onnx/models/"

+current_model = "vgg16"

+model_folder = "model"

+archive = "{}.tar.gz".format(current_model)

+archive_file = os.path.join(model_folder, archive)

+url = "{}{}".format(base_url, archive)

+```

+

+Create the model folder and download the zipped model

+

+

+```python

+if not os.path.isdir(model_folder):

+ os.makedirs(model_folder)

+if not os.path.isfile(archive_file):

+ wget.download(url, model_folder)

+```

+

+Extract the model

+

+

+```python

+if not os.path.isdir(os.path.join(model_folder, current_model)):

+ tar = tarfile.open(archive_file, "r:gz")

+ tar.extractall(model_folder)

+ tar.close()

+```

+

+The models have been pre-trained on ImageNet, let's load the label mapping of the 1000 classes.

+

+

+```python

+categories = json.load(open(image_net_labels_file, 'r'))

+```

+

+## Loading the model into MXNet Gluon

+

+

+```python

+onnx_path = os.path.join(model_folder, current_model, "model.onnx")

+```

+

+We get the symbol and parameter objects

+

+

+```python

+sym, arg_params, aux_params = onnx_mxnet.import_model(onnx_path)

+```

+

+We pick a context, CPU or GPU

+

+

+```python

+ctx = mx.cpu()

+```

+

+And load them into a MXNet Gluon symbol block. For ONNX models the default input name is `input_0`.

+

+

+```python

+net = gluon.nn.SymbolBlock(outputs=sym, inputs=mx.sym.var('input_0'))

+net_params = net.collect_params()

+for param in arg_params:

+ if param in net_params:

+ net_params[param]._load_init(arg_params[param], ctx=ctx)

+for param in aux_params:

+ if param in net_params:

+ net_params[param]._load_init(aux_params[param], ctx=ctx)

+```

+

+We can now cache the computational graph through [hybridization](https://mxnet.incubator.apache.org/tutorials/gluon/hybrid.html) to gain some performance

+

+

+

+```python

+net.hybridize()

+```

+

+## Test using sample inputs and outputs

+The model comes with sample input/output we can use to test that whether model is correctly loaded

+

+

+```python

+numpy_path = os.path.join(model_folder, current_model, 'test_data_0.npz')

+sample = np.load(numpy_path, encoding='bytes')

+inputs = sample['inputs']

+outputs = sample['outputs']

+```

+

+

+```python

+print("Input format: {}".format(inputs[0].shape))

+print("Output format: {}".format(outputs[0].shape))

+```

+

+`Input format: (1, 3, 224, 224)` <!--notebook-skip-line-->

+

+

+`Output format: (1, 1000)` <!--notebook-skip-line-->

+

+

+

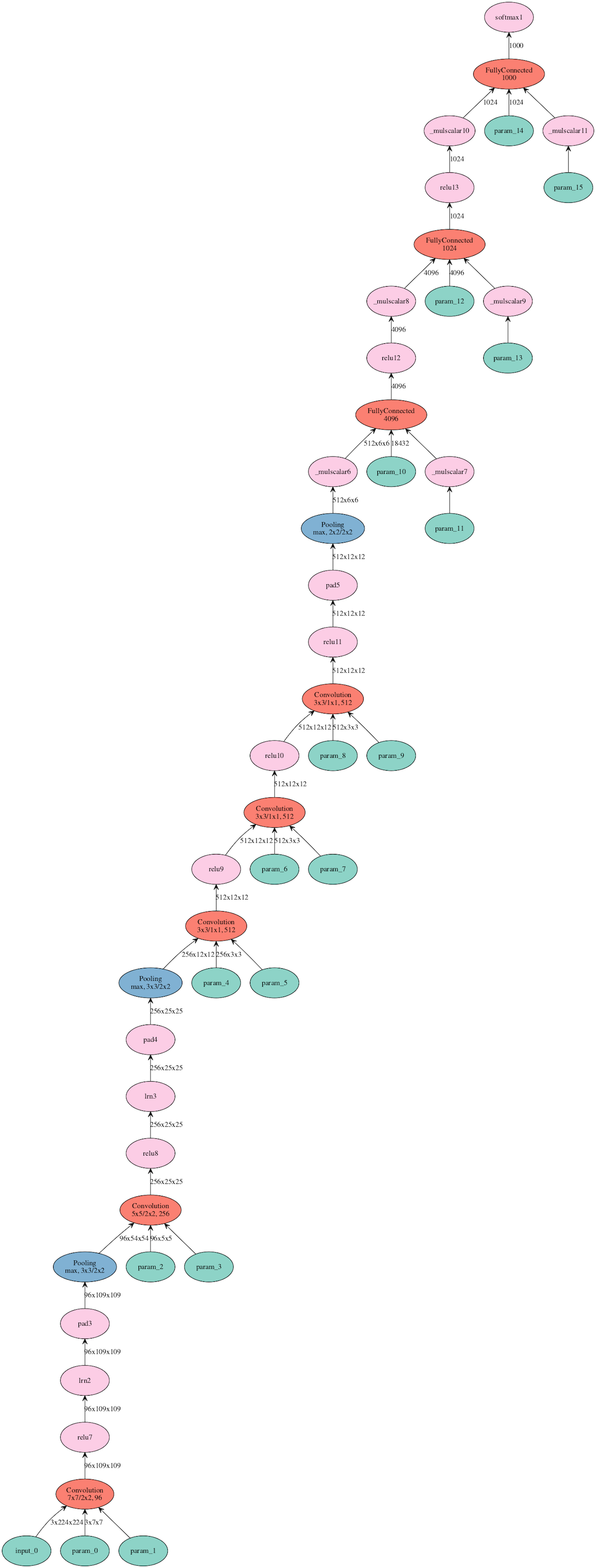

+We can visualize the network (requires graphviz installed)

+

+

+```python

+mx.visualization.plot_network(sym, node_attrs={"shape":"oval","fixedsize":"false"})

+```

+

+

+

+

+<!--notebook-skip-line-->

+

+

+

+This is a helper function to run M batches of data of batch-size N through the net and collate the outputs into an array of shape (K, 1000) where K=MxN is the total number of examples (mumber of batches x batch-size) run through the network.

+

+

+```python

+def run_batch(net, data):

+ results = []

+ for batch in data:

+ outputs = net(batch)

+ results.extend([o for o in outputs.asnumpy()])

+ return np.array(results)

+```

+

+

+```python

+result = run_batch(net, nd.array([inputs[0]], ctx))

+```

+

+

+```python

+print("Loaded model and sample output predict the same class: {}".format(np.argmax(result) == np.argmax(outputs[0])))

+```

+