
Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2020/01/27 09:13:03 UTC

[GitHub] [incubator-hudi] haospotai opened a new issue #1284: [SUPPORT]

haospotai opened a new issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284

**Pyspark client sync table to hive**

A clear and concise description of the problem.

```
df = self.spark.read.json(data_hdfs)
df.write.format("org.apache.hudi") \
    .option("hoodie.datasource.write.precombine.field", "uuid") \
    .option("hoodie.table.name", tablename) \
    .option("hoodie.datasource.write.keygenerator.class", "org.apache.hudi.NonpartitionedKeyGenerator") \
    .option("hoodie.datasource.hive_sync.partition_extractor_class", "org.apache.hudi.hive.NonPartitionedExtractor") \
    .option("hoodie.datasource.hive_sync.database", "default") \
    .option("hoodie.datasource.hive_sync.enable", "true") \
    .option("hoodie.datasource.hive_sync.table", tablename) \
    .option("hoodie.datasource.hive_sync.jdbcurl", os.environ['HIVE_JDBC_URL']) \
    .option("hoodie.datasource.hive_sync.username", os.environ['HIVE_USER']) \
    .option("hoodie.datasource.hive_sync.password", os.environ['HIVE_PASSWORD']) \
    .mode("Overwrite") \
    .save(self.host + self.hive_base_path)
```

**Expected behavior**

Use PySpark for ETL and sync the table to Hive without failure.

**Environment Description**

* Hudi version :release-0.5.0

* Running on Docker? (yes/no) yes

**Additional context**

**Stacktrace**

```
py4j.protocol.Py4JJavaError: An error occurred while calling o64.save.
: java.lang.RuntimeException: java.lang.RuntimeException: class org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook
    at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2227)
    at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.loadFilterHooks(HiveMetaStoreClient.java:247)
    at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:142)
    at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:128)
    at org.apache.hudi.hive.HoodieHiveClient.<init>(HoodieHiveClient.java:109)
    at org.apache.hudi.hive.HiveSyncTool.<init>(HiveSyncTool.java:60)
    at org.apache.hudi.HoodieSparkSqlWriter$.syncHive(HoodieSparkSqlWriter.scala:235)
    at org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:169)
    at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:91)
    at org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)
    at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)
    at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)
    at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:155)
    at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)
    at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)
    at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)
    at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)
    at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)
    at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:654)
    at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:654)
    at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:77)
    at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:654)
    at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:273)
    at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:267)
    at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:225)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)
    at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)
    at py4j.Gateway.invoke(Gateway.java:282)
    at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)
    at py4j.commands.CallCommand.execute(CallCommand.java:79)
    at py4j.GatewayConnection.run(GatewayConnection.java:238)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.RuntimeException: class org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook
    at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2221)
    ... 38 more.
```
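
The message in the trace has the form `class X not Y`, which is what Hadoop's `Configuration.getClass` reports when the configured class X cannot be assigned to the requested interface Y. A minimal sketch, in Python, that pulls the two names out of the message and prints their packages so the difference is easy to see. The helper names `parse_class_mismatch` and `package_of` are hypothetical and are not part of Hudi or Hadoop:

```python
import re

# Matches the "class <found> not <expected>" part of the exception message.
MISMATCH = re.compile(r"class (?P<found>[\w.$]+) not (?P<expected>[\w.$]+)")


def parse_class_mismatch(message):
    """Return (found_class, expected_interface) from a 'class X not Y' message."""
    match = MISMATCH.search(message)
    if match is None:
        raise ValueError("not a 'class X not Y' message")
    return match.group("found"), match.group("expected")


def package_of(class_name):
    """Return the package part of a fully qualified class name."""
    return class_name.rpartition(".")[0]


# Message copied from the stack trace above.
message = (
    "java.lang.RuntimeException: class "
    "org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not "
    "org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook"
)
found, expected = parse_class_mismatch(message)
print("configured class package: ", package_of(found))
print("expected interface package:", package_of(expected))
```

The configured implementation sits in the plain `org.apache.hadoop.hive.metastore` package, while the interface it is checked against sits under `org.apache.hudi.`, so the two cannot be related by inheritance.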

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

With regards,

Apache Git Services

[GitHub] [incubator-hudi] lamber-ken edited a comment on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579691082

> ```
> [INFO] Reactor Summary:
> [INFO]
> [INFO] Hudi 0.5.1-incubating-rc1 .......................... SUCCESS [ 2.221 s]
> [INFO] hudi-common ........................................ SUCCESS [ 10.973 s]
> [INFO] hudi-timeline-service .............................. SUCCESS [ 1.678 s]
> [INFO] hudi-hadoop-mr ..................................... SUCCESS [ 5.598 s]
> [INFO] hudi-client ........................................ SUCCESS [ 8.200 s]
> [INFO] hudi-hive .......................................... SUCCESS [ 4.769 s]
> [INFO] hudi-spark_2.11 .................................... SUCCESS [ 23.131 s]
> [INFO] hudi-utilities_2.11 ................................ SUCCESS [ 6.523 s]
> [INFO] hudi-cli ........................................... SUCCESS [ 7.150 s]
> [INFO] hudi-hadoop-mr-bundle .............................. SUCCESS [ 1.489 s]
> [INFO] hudi-hive-bundle ................................... SUCCESS [ 0.628 s]
> [INFO] hudi-spark-bundle_2.11 ............................. SUCCESS [ 7.682 s]
> [INFO] hudi-presto-bundle ................................. SUCCESS [ 0.565 s]
> [INFO] hudi-utilities-bundle_2.11 ......................... SUCCESS [ 7.915 s]
> [INFO] hudi-timeline-server-bundle ........................ SUCCESS [ 5.092 s]
> [INFO] hudi-hadoop-docker ................................. SUCCESS [ 0.565 s]
> [INFO] hudi-hadoop-base-docker ............................ SUCCESS [ 0.037 s]
> [INFO] hudi-hadoop-namenode-docker ........................ SUCCESS [ 0.037 s]
> [INFO] hudi-hadoop-datanode-docker ........................ SUCCESS [ 0.036 s]
> [INFO] hudi-hadoop-history-docker ......................... SUCCESS [ 0.034 s]
> [INFO] hudi-hadoop-hive-docker ............................ SUCCESS [ 0.277 s]
> [INFO] hudi-hadoop-sparkbase-docker ....................... SUCCESS [ 0.037 s]
> [INFO] hudi-hadoop-sparkmaster-docker ..................... SUCCESS [ 0.035 s]
> [INFO] hudi-hadoop-sparkworker-docker ..................... SUCCESS [ 0.034 s]
> [INFO] hudi-hadoop-sparkadhoc-docker ...................... SUCCESS [ 0.034 s]
> [INFO] hudi-hadoop-presto-docker .......................... SUCCESS [ 0.069 s]
> [INFO] hudi-integ-test 0.5.1-incubating-rc1 ............... SUCCESS [ 1.558 s]
> [INFO] ------------------------------------------------------------------------
> [INFO] BUILD SUCCESS
> [INFO] ------------------------------------------------------------------------
> [INFO] Total time: 01:36 min
> [INFO] Finished at: 2020-01-29T10:12:26Z
> [INFO] ------------------------------------------------------------------------
> hadoop@huidi-evaluation:~/incubator-hudi$ git pull
> Already up-to-date.
> ```

Okay, can you try the master branch? thanks

```
git clone git@github.com:apache/incubator-hudi.git
```


[GitHub] [incubator-hudi] bvaradar commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-596121471

Thanks @lamber-ken. Will wait for @haospotai to confirm if there are no more issues and we can close this ticket.



[GitHub] [incubator-hudi] n3nash edited a comment on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

n3nash edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-578904550

@haospotai It looks like an issue with the shading of jars: the Hive metastore jars are shaded in the bundle that you might be using, but somehow a class hierarchy is expecting a non-shaded one?

How are you starting the Python client? Could you paste the libs you are passing? Also, please raise a JIRA for further discussion.
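
One way to check the shading theory is to look inside the bundle jar and see under which package paths a given class is packaged. A minimal sketch using only the Python standard library. `class_entries` and `split_by_prefix` are hypothetical helper names, and the jar path in the usage comment is an assumption about where the bundle lives:

```python
import zipfile


def class_entries(jar_path, simple_name):
    """List every entry in the jar whose file name is <simple_name>.class."""
    suffix = "/" + simple_name + ".class"
    with zipfile.ZipFile(jar_path) as jar:
        return sorted(name for name in jar.namelist() if name.endswith(suffix))


def split_by_prefix(entries, relocated_prefix):
    """Separate entries under the relocated (shaded) package from the rest."""
    shaded = [e for e in entries if e.startswith(relocated_prefix)]
    unshaded = [e for e in entries if not e.startswith(relocated_prefix)]
    return shaded, unshaded


# Example usage (the jar path is an assumption, adjust to your setup):
#   entries = class_entries("resources/hudi-spark-bundle-0.5.0-incubating.jar",
#                           "MetaStoreFilterHook")
#   shaded, unshaded = split_by_prefix(entries, "org/apache/hudi/")
#   print(shaded, unshaded)
```

If the interface only shows up under the relocated prefix while the configured implementation is loaded from an unshaded Hive jar elsewhere on the classpath, that would be consistent with the `class X not Y` error in the trace.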


[GitHub] [incubator-hudi] lamber-ken commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579661014

> s

Got it, thanks


[GitHub] [incubator-hudi] haospotai closed issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai closed issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284


[GitHub] [incubator-hudi] haospotai removed a comment on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai removed a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579010030

> @haospotai It looks like an issue with shading of jars -> The hadoop metastore jars are shaded in the bundle that you might be using but somehow a class hierarchy is expecting a non-shaded one ?

>

> How are you starting the python client ? Could you paste the libs you are passing ? Also, please raise a JIRA for further discussions


[GitHub] [incubator-hudi] n3nash commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

n3nash commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579387299

@lamber-ken let's have follow-up discussions on JIRA if needed. We want to limit interactions on GH issues, please.


[GitHub] [incubator-hudi] bvaradar commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-595992007

@haospotai: Can you confirm whether you are still having issues? It is not very clear to me.

@lamber-ken: I see a discussion about JIRA. Is there a tracking JIRA already? If so, can you add it as a comment for reference?


[GitHub] [incubator-hudi] lamber-ken commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579204395

Hi @haospotai, here is a demo without syncing to Hive. With the latest `release-0.5.1` I hit some errors, which I am trying to figure out.

```
# Shell: start PySpark with the Hudi bundle
export SPARK_HOME=/work/BigData/install/spark/spark-2.4.4-bin-hadoop2.7
${SPARK_HOME}/bin/pyspark --packages org.apache.hudi:hudi-spark-bundle:0.5.0-incubating --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer'

# Inside the PySpark shell: write one record and read it back
tableName = "hudi_mor_table"
basePath = "file:///tmp/hudi_mor_table"

datas = [{ "name": "kenken", "ts": "qwer", "age": 12, "location": "latitude"}]
df = spark.read.json(spark.sparkContext.parallelize(datas, 2))

df.write.format("org.apache.hudi"). \
    option("hoodie.insert.shuffle.parallelism", "10"). \
    option("hoodie.upsert.shuffle.parallelism", "10"). \
    option("hoodie.delete.shuffle.parallelism", "10"). \
    option("hoodie.bulkinsert.shuffle.parallelism", "10"). \
    option("hoodie.datasource.write.recordkey.field", "name"). \
    option("hoodie.datasource.write.partitionpath.field", "location"). \
    option("hoodie.datasource.write.precombine.field", "ts"). \
    option("hoodie.table.name", tableName). \
    option("hoodie.table.type", "MERGE_ON_READ"). \
    option("hoodie.datasource.write.storage.type", "MERGE_ON_READ"). \
    mode("Overwrite"). \
    save(basePath)

spark.read.format("org.apache.hudi").load(basePath + "/*/").show()
```




[GitHub] [incubator-hudi] haospotai edited a comment on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-578983670

> @haospotai It looks like an issue with shading of jars -> The hadoop metastore jars are shaded in the bundle that you might be using but somehow a class hierarchy is expecting a non-shaded one ?

>

> How are you starting the python client ? Could you paste the libs you are passing ? Also, please raise a JIRA for further discussions

```
import os

from dotenv import load_dotenv
from pyspark.sql import SparkSession

# ROOT_DIR is defined elsewhere in the project (not shown in this comment).


class FunHudi:
    def __init__(self, app_name):
        load_dotenv('../.env')
        # the lib passed
        # hudi-spark-bundle-0.5.0-incubating.jar
        spark_bundle = ROOT_DIR + "/resources/" + os.environ['HUDI_SPARK_BUNDLE']
        self.host = os.environ['HDFS_HOST']
        self.hive_base_path = os.environ['HIVE_BASH_PATH']
        self.spark = SparkSession.builder \
            .master(os.environ['SPARK_MASTER']) \
            .appName(app_name) \
            .config("spark.jars", spark_bundle) \
            .config("spark.driver.extraClassPath", spark_bundle) \
            .getOrCreate()

    def insert_data_hudi_sync_hive(self, tablename, data_hdfs):
        df = self.spark.read.json(data_hdfs)
        df.write.format("org.apache.hudi") \
            .option("hoodie.datasource.write.precombine.field", "uuid") \
            .option("hoodie.table.name", tablename) \
            .option("hoodie.datasource.hive_sync.partition_fields", "partitionpath") \
            .option("hoodie.datasource.write.partitionpath.field", "partitionpath") \
            .option("hoodie.datasource.hive_sync.database", "default") \
            .option("hoodie.datasource.hive_sync.enable", "true") \
            .option("hoodie.datasource.hive_sync.table", tablename) \
            .option("hoodie.datasource.hive_sync.jdbcurl", os.environ['HIVE_JDBC_URL']) \
            .option("hoodie.datasource.hive_sync.username", os.environ['HIVE_USER']) \
            .option("hoodie.datasource.hive_sync.password", os.environ['HIVE_PASSWORD']) \
            .mode("Overwrite") \
            .save(self.host + self.hive_base_path)
```

```
import os
import unittest

from dotenv import load_dotenv

# FunHudi is the class shown above.


class TestSoptaiHudi(unittest.TestCase):
    def setUp(self) -> None:
        load_dotenv('.env')
        self.host = os.getenv('HDFS_HOST')
        self.hudiclient = FunHudi("testApp")
        self.path = '/data/peoplejson.json'

    def test_insert_data_hudi_sync_hive(self):
        self.hudiclient.insert_data_hudi_sync_hive("soptaitest", self.path)
```

The exception is thrown when ```"hoodie.datasource.hive_sync.enable", "true"``` is set, because the app syncs data to Hive at the end of the write.
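
Since the failure only shows up once Hive sync is enabled, one way to narrow it down is to run the same write with sync switched off and compare. A minimal sketch of building the writer options with a toggle. The function `hudi_write_options` and its parameters are hypothetical, and the option keys are the ones used in the class above:

```python
def hudi_write_options(table_name, hive_sync=False,
                       jdbc_url=None, user=None, password=None):
    """Build Hudi writer options, adding the Hive sync keys only on request."""
    options = {
        "hoodie.table.name": table_name,
        "hoodie.datasource.write.precombine.field": "uuid",
        "hoodie.datasource.write.partitionpath.field": "partitionpath",
        "hoodie.datasource.hive_sync.enable": "true" if hive_sync else "false",
    }
    if hive_sync:
        # Connection details are passed in by the caller, for example
        # from os.environ as in the original snippet.
        options.update({
            "hoodie.datasource.hive_sync.database": "default",
            "hoodie.datasource.hive_sync.table": table_name,
            "hoodie.datasource.hive_sync.partition_fields": "partitionpath",
            "hoodie.datasource.hive_sync.jdbcurl": jdbc_url,
            "hoodie.datasource.hive_sync.username": user,
            "hoodie.datasource.hive_sync.password": password,
        })
    return options


# Example usage inside the class (df and the target path come from the caller):
#   df.write.format("org.apache.hudi") \
#       .options(**hudi_write_options("soptaitest", hive_sync=False)) \
#       .mode("Overwrite") \
#       .save(self.host + self.hive_base_path)
```

If the write succeeds with `hive_sync=False` and fails with `hive_sync=True`, the problem is confined to the Hive sync step rather than the Hudi write itself.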


[GitHub] [incubator-hudi] haospotai edited a comment on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579103865

Hi @lamber-ken,

Step 1. git clone -b release-0.5.1 https://github.com/apache/incubator-hudi.git

Step 2. cd incubator-hudi && mvn clean package -DskipTests -DskipITs

Step 3. cd docker && ./setup_demo.sh

Step 4. Run the test below:

```
class TestSoptaiHudi(unittest.TestCase):
    def setUp(self) -> None:
        load_dotenv('.env')
        self.host = os.getenv('HDFS_HOST')
        self.hudiclient = FunHudi("testApp")
        self.path = '/data/peoplejson.json'

    def test_insert_data_hudi_sync_hive(self):
        self.hudiclient.insert_data_hudi_sync_hive("soptaitest", self.path)
```

Step 5. Open the Spark UI and check the application status.


[GitHub] [incubator-hudi] lamber-ken commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-596059447

Hi @bvaradar, this has been fixed on the master branch: https://github.com/apache/incubator-hudi/blob/master/packaging/hudi-spark-bundle/pom.xml



[GitHub] [incubator-hudi] lamber-ken edited a comment on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579204395

Hi @haospotai, here is a demo using Python with the `release-0.5.1` version.

**Step1:**

```
git clone -b release-0.5.1 https://github.com/apache/incubator-hudi.git
```

**Step2:**

```
cd incubator-hudi && mvn clean package -DskipTests -DskipITs
```

**Step3:**

```
# Shell: start PySpark with the locally built bundle
export SPARK_HOME=/work/BigData/install/spark/spark-2.4.4-bin-hadoop2.7
${SPARK_HOME}/bin/pyspark \
    --packages org.apache.spark:spark-avro_2.11:2.4.4 \
    --jars `ls packaging/hudi-spark-bundle/target/hudi-spark-bundle_*.*-*.*.*-incubating-rc1.jar` \
    --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer'

# Inside the PySpark shell: write one record and read it back
tableName = "hudi_mor_table"
basePath = "file:///tmp/hudi_mor_table"

datas = [{ "name": "kenken", "ts": "qwer", "age": 12, "location": "latitude"}]
df = spark.read.json(spark.sparkContext.parallelize(datas, 2))

df.write.format("org.apache.hudi"). \
    option("hoodie.insert.shuffle.parallelism", "10"). \
    option("hoodie.upsert.shuffle.parallelism", "10"). \
    option("hoodie.delete.shuffle.parallelism", "10"). \
    option("hoodie.bulkinsert.shuffle.parallelism", "10"). \
    option("hoodie.datasource.write.recordkey.field", "name"). \
    option("hoodie.datasource.write.partitionpath.field", "location"). \
    option("hoodie.datasource.write.precombine.field", "ts"). \
    option("hoodie.table.name", tableName). \
    mode("Overwrite"). \
    save(basePath)

spark.read.format("org.apache.hudi").load(basePath + "/*/").show()
```
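
When the session is started from a script rather than by hand, the backtick `ls` lookup for the bundle jar can be done in Python instead. A minimal sketch, assuming the jar was built under `packaging/hudi-spark-bundle/target/` as in Step3. The helper names `find_bundle_jar` and `pyspark_command` are hypothetical, and the glob pattern is a simplified version of the one in the command above:

```python
import glob
import os


def find_bundle_jar(repo_root):
    """Return the single hudi-spark-bundle jar built under repo_root."""
    pattern = os.path.join(
        repo_root, "packaging", "hudi-spark-bundle", "target",
        "hudi-spark-bundle_*.jar",
    )
    matches = sorted(glob.glob(pattern))
    if len(matches) != 1:
        # Fail loudly rather than pick an arbitrary jar.
        raise FileNotFoundError(
            f"expected exactly one bundle jar, found {len(matches)}: {matches}"
        )
    return matches[0]


def pyspark_command(spark_home, bundle_jar):
    """Assemble the pyspark launch command used in Step3 as an argument list."""
    return [
        os.path.join(spark_home, "bin", "pyspark"),
        "--packages", "org.apache.spark:spark-avro_2.11:2.4.4",
        "--jars", bundle_jar,
        "--conf", "spark.serializer=org.apache.spark.serializer.KryoSerializer",
    ]


# Example usage (paths are assumptions):
#   jar = find_bundle_jar("incubator-hudi")
#   print(" ".join(pyspark_command(os.environ["SPARK_HOME"], jar)))
```

Raising when zero or several jars match avoids silently launching with a stale or unintended build.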


[GitHub] [incubator-hudi] haospotai edited a comment on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579010412

Hello @n3nash

```
import os

from dotenv import load_dotenv
from pyspark.sql import SparkSession

# ROOT_DIR (the project root) is assumed to be defined elsewhere in the project.


class FunHudi:
    def __init__(self, app_name):
        load_dotenv('../.env')
        # the lib passed:
        # hudi-spark-bundle-0.5.0-incubating.jar
        spark_bundle = ROOT_DIR + "/resources/" + os.environ['HUDI_SPARK_BUNDLE']
        self.host = os.environ['HDFS_HOST']
        self.hive_base_path = os.environ['HIVE_BASH_PATH']
        self.spark = SparkSession.builder \
            .master(os.environ['SPARK_MASTER']) \
            .appName(app_name) \
            .config("spark.jars", spark_bundle) \
            .config("spark.driver.extraClassPath", spark_bundle) \
            .getOrCreate()

    def insert_data_hudi_sync_hive(self, tablename, data_hdfs):
        df = self.spark.read.json(data_hdfs)
        # Write to Hudi and sync the table to Hive at the end of the write.
        df.write.format("org.apache.hudi") \
            .option("hoodie.datasource.write.precombine.field", "uuid") \
            .option("hoodie.table.name", tablename) \
            .option("hoodie.datasource.hive_sync.partition_fields", "partitionpath") \
            .option("hoodie.datasource.write.partitionpath.field", "partitionpath") \
            .option("hoodie.datasource.hive_sync.database", "default") \
            .option("hoodie.datasource.hive_sync.enable", "true") \
            .option("hoodie.datasource.hive_sync.table", tablename) \
            .option("hoodie.datasource.hive_sync.jdbcurl", os.environ['HIVE_JDBC_URL']) \
            .option("hoodie.datasource.hive_sync.username", os.environ['HIVE_USER']) \
            .option("hoodie.datasource.hive_sync.password", os.environ['HIVE_PASSWORD']) \
            .mode("Overwrite") \
            .save(self.host + self.hive_base_path)
```

```
import os
import unittest

from dotenv import load_dotenv


class TestSoptaiHudi(unittest.TestCase):
    def setUp(self) -> None:
        load_dotenv('.env')
        self.host = os.getenv('HDFS_HOST')
        self.hudiclient = FunHudi("testApp")
        self.path = '/data/peoplejson.json'

    def test_insert_data_hudi_sync_hive(self):
        self.hudiclient.insert_data_hudi_sync_hive("soptaitest", self.path)
```

The exception is thrown when `hoodie.datasource.hive_sync.enable` is set to `"true"`,

because the app syncs data to Hive at the end.

By the way, I run the code from the PyCharm IDE.

[GitHub] [incubator-hudi] haospotai commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579038915

hi @lamber-ken

I built the latest Hudi version, and the app is stuck here:

```
org.apache.spark.sql.DataFrameReader.json(DataFrameReader.scala:397)
sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
java.lang.reflect.Method.invoke(Method.java:498)
py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)
py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)
py4j.Gateway.invoke(Gateway.java:282)
py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)
py4j.commands.CallCommand.execute(CallCommand.java:79)
py4j.GatewayConnection.run(GatewayConnection.java:238)
java.lang.Thread.run(Thread.java:748)
```

[GitHub] [incubator-hudi] haospotai edited a comment on issue #1284:

[SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-578983670

> @haospotai It looks like an issue with shading of jars -> The hadoop metastore jars are shaded in the bundle that you might be using but somehow a class hierarchy is expecting a non-shaded one ?

>

> How are you starting the python client ? Could you paste the libs you are passing ? Also, please raise a JIRA for further discussions

```
def __init__(self, app_name):
    load_dotenv('../.env')
    # hudi-spark-bundle-0.5.0-incubating.jar
    spark_bundle = ROOT_DIR + "/resources/" + os.environ['HUDI_SPARK_BUNDLE']
    self.host = os.environ['HDFS_HOST']
    self.hive_base_path = os.environ['HIVE_BASH_PATH']
    self.spark = SparkSession.builder \
        .master(os.environ['SPARK_MASTER']) \
        .appName(app_name) \
        .config("spark.jars", spark_bundle) \
        .config("spark.driver.extraClassPath", spark_bundle) \
        .getOrCreate()
```

The exception is thrown when `hoodie.datasource.hive_sync.enable` is set to `"true"`,

because the app syncs data to Hive at the end.

[GitHub] [incubator-hudi] haospotai opened a new issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai opened a new issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284

**PySpark client fails to sync table to Hive**

A clear and concise description of the problem.

```
df = self.spark.read.json(data_hdfs)
df.write.format("org.apache.hudi") \
    .option("hoodie.datasource.write.precombine.field", "uuid") \
    .option("hoodie.table.name", tablename) \
    .option("hoodie.datasource.write.keygenerator.class", "org.apache.hudi.NonpartitionedKeyGenerator") \
    .option("hoodie.datasource.hive_sync.partition_extractor_class", "org.apache.hudi.hive.NonPartitionedExtractor") \
    .option("hoodie.datasource.hive_sync.database", "default") \
    .option("hoodie.datasource.hive_sync.enable", "true") \
    .option("hoodie.datasource.hive_sync.table", tablename) \
    .option("hoodie.datasource.hive_sync.jdbcurl", os.environ['HIVE_JDBC_URL']) \
    .option("hoodie.datasource.hive_sync.username", os.environ['HIVE_USER']) \
    .option("hoodie.datasource.hive_sync.password", os.environ['HIVE_PASSWORD']) \
    .mode("Overwrite") \
    .save(self.host + self.hive_base_path)
```

**Expected behavior**

Use PySpark for ETL and sync the table to Hive without failure.

**Environment Description**

* Hudi version: release-0.5.0

* Running on Docker? (yes/no) yes

**Additional context**

**Stacktrace**

```

py4j.protocol.Py4JJavaError: An error occurred while calling o64.save.

: java.lang.RuntimeException: java.lang.RuntimeException: class org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2227)

at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.loadFilterHooks(HiveMetaStoreClient.java:247)

at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:142)

at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:128)

at org.apache.hudi.hive.HoodieHiveClient.<init>(HoodieHiveClient.java:109)

at org.apache.hudi.hive.HiveSyncTool.<init>(HiveSyncTool.java:60)

at org.apache.hudi.HoodieSparkSqlWriter$.syncHive(HoodieSparkSqlWriter.scala:235)

at org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:169)

at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:91)

at org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:155)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:654)

at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:654)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:77)

at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:654)

at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:273)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:267)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:225)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.RuntimeException: class org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2221)

... 38 more

```

[GitHub] [incubator-hudi] haospotai edited a comment on issue #1284:

[SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-578983670

> @haospotai It looks like an issue with shading of jars -> The hadoop metastore jars are shaded in the bundle that you might be using but somehow a class hierarchy is expecting a non-shaded one ?

>

> How are you starting the python client ? Could you paste the libs you are passing ? Also, please raise a JIRA for further discussions

[GitHub] [incubator-hudi] lamber-ken edited a comment on issue #1284:

[SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-578906789

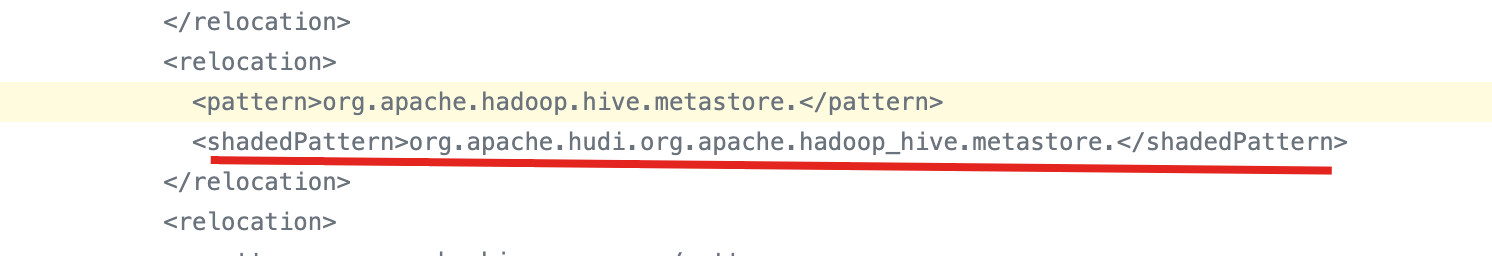

hi @n3nash, you can check `release-0.5.0`

https://github.com/apache/incubator-hudi/blob/1cfd311b40d814b87ede942f770324abdd1affea/packaging/hudi-spark-bundle/pom.xml#L154

[GitHub] [incubator-hudi] lamber-ken edited a comment on issue #1284:

[SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579660639

> this is the branch

>

> ```

> hadoop@huidi-evaluation:~/incubator-hudi$ git branch

> * release-0.5.1

> ```

>

> then use

>

> ```

> pyspark --packages org.apache.spark:spark-avro_2.11:2.4.4 --jars packaging/hudi-spark-bundle/target/hudi-spark-bundle_2.11-0.5.1-incubating-rc1.jar --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer'

> ```

>

> error

>

> ```

> /spark-2.4.4-bin-hadoop2.7/python/lib/py4j-0.10.7-src.zip/py4j/protocol.py", line 328, in get_return_value

> py4j.protocol.Py4JJavaError: An error occurred while calling o79.save.

> : java.lang.RuntimeException: java.lang.RuntimeException: class org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook

> at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2227)

> at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.loadFilterHooks(HiveMetaStoreClient.java:247)

> at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:142)

> at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:128)

> at org.apache.hudi.hive.HoodieHiveClient.<init>(HoodieHiveClient.java:109)

> at org.apache.hudi.hive.HiveSyncTool.<init>(HiveSyncTool.java:60)

> at org.apache.hudi.HoodieSparkSqlWriter$.syncHive(HoodieSparkSqlWriter.scala:235)

> at org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:169)

> at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:91)

> at org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

> at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

> at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

> at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

> at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

> at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

> at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:155)

> at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

> at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

> at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

> at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

> at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

> at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

> at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

> at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

> at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

> at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

> at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:676)

> at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:285)

> at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:271)

> at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:229)

> at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

> at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

> at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

> at java.lang.reflect.Method.invoke(Method.java:498)

> at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

> at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

> at py4j.Gateway.invoke(Gateway.java:282)

> at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

> at py4j.commands.CallCommand.execute(CallCommand.java:79)

> at py4j.GatewayConnection.run(GatewayConnection.java:238)

> at java.lang.Thread.run(Thread.java:748)

> Caused by: java.lang.RuntimeException: class org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook

> at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2221)

> ... 40 more

>

> >>> exit()

> ```

I guess you are still using an old version, because in `release-0.5.1` the relocated package `org.apache.hudi.org.apache.hadoop_hive.metastore` no longer exists.

https://github.com/apache/incubator-hudi/blob/release-0.5.1/packaging/hudi-spark-bundle/pom.xml
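
One way to confirm which bundle is actually being picked up is to look inside the jar for the relocated package named in the stack trace. The following is only a minimal sketch: the helper name `has_relocated_metastore` is hypothetical, and it assumes the relocated classes would appear under the package path shown in the error message.

```python
import zipfile

# Package path of the relocated (shaded) Hive metastore classes, taken from
# the stack trace. Assumption: an old bundle stores its classes under it.
RELOCATED_PREFIX = "org/apache/hudi/org/apache/hadoop_hive/metastore/"


def has_relocated_metastore(jar_path):
    """Return True if the jar contains entries under the relocated package."""
    with zipfile.ZipFile(jar_path) as jar:
        return any(name.startswith(RELOCATED_PREFIX) for name in jar.namelist())
```

Calling it on the jar passed to `--jars` (for example the `hudi-spark-bundle_2.11-0.5.1-incubating-rc1.jar` path from the command above) and getting `True` would suggest that an old bundle is still in use. Any jar set through `spark.jars` or `spark.driver.extraClassPath` may be worth checking the same way.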

[GitHub] [incubator-hudi] haospotai removed a comment on issue #1284:

[SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai removed a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-578983670

> @haospotai It looks like an issue with shading of jars -> The hadoop metastore jars are shaded in the bundle that you might be using but somehow a class hierarchy is expecting a non-shaded one ?

>

> How are you starting the python client ? Could you paste the libs you are passing ? Also, please raise a JIRA for further discussions

[GitHub] [incubator-hudi] haospotai commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579010030

> @haospotai It looks like an issue with shading of jars -> The hadoop metastore jars are shaded in the bundle that you might be using but somehow a class hierarchy is expecting a non-shaded one ?

>

> How are you starting the python client ? Could you paste the libs you are passing ? Also, please raise a JIRA for further discussions

[GitHub] [incubator-hudi] haospotai commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579637991

```
df = spark.read.json("hdfs://namenode:8020/data/test.json")
df.write.format("org.apache.hudi") \
    .option("hoodie.datasource.write.precombine.field", "uuid") \
    .option("hoodie.table.name", "soptai") \
    .option("hoodie.datasource.hive_sync.partition_fields", "partitionpath") \
    .option("hoodie.datasource.write.partitionpath.field", "partitionpath") \
    .option("hoodie.datasource.hive_sync.database", "default") \
    .option("hoodie.datasource.hive_sync.enable", "true") \
    .option("hoodie.datasource.hive_sync.table", "test") \
    .option("hoodie.datasource.hive_sync.jdbcurl", "jdbc:hive2://hiveserver:10000") \
    .option("hoodie.datasource.hive_sync.username", "hive") \
    .option("hoodie.datasource.hive_sync.password", "hive") \
    .mode("Overwrite") \
    .save("hdfs://namenode:8020/user/hive/warehouse/test")
```
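
The same options could also be collected in a dictionary and passed in one call, which makes it easier to see exactly what is sent to Hive sync. This is just a sketch: the helper `hive_sync_options` and its parameter names are hypothetical, and only the option keys come from the snippet above.

```python
def hive_sync_options(table, jdbc_url, username, password,
                      partition_field="partitionpath",
                      precombine_field="uuid",
                      database="default"):
    """Build the Hudi write and Hive sync options used in this thread."""
    return {
        "hoodie.table.name": table,
        "hoodie.datasource.write.precombine.field": precombine_field,
        "hoodie.datasource.write.partitionpath.field": partition_field,
        "hoodie.datasource.hive_sync.enable": "true",
        "hoodie.datasource.hive_sync.database": database,
        # Assumption: the Hive table gets the same name as the Hudi table.
        "hoodie.datasource.hive_sync.table": table,
        "hoodie.datasource.hive_sync.partition_fields": partition_field,
        "hoodie.datasource.hive_sync.jdbcurl": jdbc_url,
        "hoodie.datasource.hive_sync.username": username,
        "hoodie.datasource.hive_sync.password": password,
    }


opts = hive_sync_options("test", "jdbc:hive2://hiveserver:10000", "hive", "hive")
# With a DataFrame `df`, the options can be applied in one call:
# df.write.format("org.apache.hudi").options(**opts).mode("Overwrite").save(path)
```

Using one name for both `hoodie.table.name` and `hoodie.datasource.hive_sync.table` is a choice made for this sketch; the snippet above uses two different names (`soptai` and `test`).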

[GitHub] [incubator-hudi] lamber-ken commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579098965

Hi @haospotai, can you share the steps to reproduce this? Thanks :)

[GitHub] [incubator-hudi] bvaradar closed issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

bvaradar closed issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284

[GitHub] [incubator-hudi] haospotai commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579103865

hi @lamber-ken

step 1 . git clone -b release-0.5.1 https://github.com/apache/incubator-hudi.git

step 2. cd incubator-hudi && mvn clean package -DskipTests -DskipITs

step 3. cd docker && ./setup_demo.sh

step 4.

```

class TestSoptaiHudi(unittest.TestCase):

def setUp(self) -> None:

load_dotenv('.env')

self.host = os.getenv('HDFS_HOST')

self.hudiclient = FunHudi("testApp")

self.path = '/data/peoplejson.json'

def test_insert_data_hudi_sync_hive(self):

self.hudiclient.insert_data_hudi_sync_hive("soptaitest", self.path)

```

Step 5. Open the Spark UI and check the application status.


[GitHub] [incubator-hudi] lamber-ken commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-578905337

Hello @haospotai, thanks for reporting this bug.

The relocated class `org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook` comes from an out-of-date version of the Hudi project. Please build the latest Hudi project.

```

mvn clean package -DskipTests -DskipITs

```


[GitHub] [incubator-hudi] haospotai commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579637458

This is the branch I built from:

```

hadoop@huidi-evaluation:~/incubator-hudi$ git branch

* release-0.5.1

```

Then I started pyspark with:

```

pyspark --packages org.apache.spark:spark-avro_2.11:2.4.4 --jars packaging/hudi-spark-bundle/target/hudi-spark-bundle_2.11-0.5.1-incubating-rc1.jar --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer'

```

The write still fails with this error:

```

/spark-2.4.4-bin-hadoop2.7/python/lib/py4j-0.10.7-src.zip/py4j/protocol.py", line 328, in get_return_value

py4j.protocol.Py4JJavaError: An error occurred while calling o79.save.

: java.lang.RuntimeException: java.lang.RuntimeException: class org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2227)

at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.loadFilterHooks(HiveMetaStoreClient.java:247)

at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:142)

at org.apache.hudi.org.apache.hadoop_hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:128)

at org.apache.hudi.hive.HoodieHiveClient.<init>(HoodieHiveClient.java:109)

at org.apache.hudi.hive.HiveSyncTool.<init>(HiveSyncTool.java:60)

at org.apache.hudi.HoodieSparkSqlWriter$.syncHive(HoodieSparkSqlWriter.scala:235)

at org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:169)

at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:91)

at org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:155)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:676)

at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:285)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:271)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:229)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.RuntimeException: class org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl not org.apache.hudi.org.apache.hadoop_hive.metastore.MetaStoreFilterHook

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2221)

... 40 more

>>> exit()

```
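
The line that matters in this trace is the `class A not B` message: Hadoop's `Configuration.getClass` loaded `A` but expected it to implement `B`, and here `B` sits under the relocated `org.apache.hudi.` prefix while `A` does not. As a rough sketch, the two names can be pulled out of such a message like this. `parse_class_mismatch` is a hypothetical helper, and the "is relocated" test is only a prefix heuristic.

```python
import re

# Matches the "class <found> not <expected>" text in the exception message.
_MISMATCH = re.compile(r"class (?P<found>[\w.$]+) not (?P<expected>[\w.$]+)")


def parse_class_mismatch(message, shade_prefix="org.apache.hudi.org.apache."):
    """Extract the found/expected class names from a getClass mismatch message.

    Returns None when the message does not contain the pattern. The
    *_is_relocated flags are a heuristic: they only test the name prefix.
    """
    match = _MISMATCH.search(message)
    if match is None:
        return None
    found, expected = match.group("found"), match.group("expected")
    return {
        "found": found,
        "expected": expected,
        "found_is_relocated": found.startswith(shade_prefix),
        "expected_is_relocated": expected.startswith(shade_prefix),
    }
```

When only one of the two flags is true, as in this trace, a shaded class and an unshaded class are being mixed on the same classpath.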


[GitHub] [incubator-hudi] lamber-ken edited a comment on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

lamber-ken edited a comment on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579204395

Hi @haospotai, here is a demo that writes a table without syncing to Hive. When using the latest `release-0.5.1`, I ran into some errors; I am trying to figure them out.

```

# In a shell: start pyspark with the Hudi 0.5.0 bundle
export SPARK_HOME=/work/BigData/install/spark/spark-2.4.4-bin-hadoop2.7
${SPARK_HOME}/bin/pyspark --packages org.apache.hudi:hudi-spark-bundle:0.5.0-incubating --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer'

# Inside the pyspark shell: write one record, then read it back
tableName = "hudi_mor_table"
basePath = "file:///tmp/hudi_mor_table"

datas = [{ "name": "kenken", "ts": "qwer", "age": 12, "location": "latitude"}]
df = spark.read.json(spark.sparkContext.parallelize(datas, 2))

df.write.format("org.apache.hudi"). \
    option("hoodie.insert.shuffle.parallelism", "10"). \
    option("hoodie.upsert.shuffle.parallelism", "10"). \
    option("hoodie.delete.shuffle.parallelism", "10"). \
    option("hoodie.bulkinsert.shuffle.parallelism", "10"). \
    option("hoodie.datasource.write.recordkey.field", "name"). \
    option("hoodie.datasource.write.partitionpath.field", "location"). \
    option("hoodie.datasource.write.precombine.field", "ts"). \
    option("hoodie.table.name", tableName). \
    mode("Overwrite"). \
    save(basePath)

spark.read.format("org.apache.hudi").load(basePath + "/*/").show()

```
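
Once the basic write works, the Hive sync settings from the original report can be layered on top of the same options. Here is a small sketch of collecting them in a dict so that sync can be switched on or off in one place. `hudi_write_options` and the shape of its `hive_sync` argument are assumptions made up for illustration; only the `hoodie.*` keys come from this thread.

```python
def hudi_write_options(table_name, record_key, precombine_field,
                       partition_field=None, hive_sync=None):
    """Build the option dict for a Hudi datasource write.

    hive_sync is an optional dict with "database", "jdbcurl", "username"
    and "password"; when it is None, no hive_sync options are emitted.
    """
    opts = {
        "hoodie.table.name": table_name,
        "hoodie.datasource.write.recordkey.field": record_key,
        "hoodie.datasource.write.precombine.field": precombine_field,
    }
    if partition_field is not None:
        opts["hoodie.datasource.write.partitionpath.field"] = partition_field
    if hive_sync is not None:
        opts.update({
            "hoodie.datasource.hive_sync.enable": "true",
            "hoodie.datasource.hive_sync.database": hive_sync["database"],
            "hoodie.datasource.hive_sync.table": table_name,
            "hoodie.datasource.hive_sync.jdbcurl": hive_sync["jdbcurl"],
            "hoodie.datasource.hive_sync.username": hive_sync["username"],
            "hoodie.datasource.hive_sync.password": hive_sync["password"],
        })
    return opts
```

In the pyspark shell this would be used as `df.write.format("org.apache.hudi").options(**hudi_write_options(tableName, "name", "ts", "location")).mode("Overwrite").save(basePath)`. Pass a `hive_sync` dict only once the bundle problem above is resolved.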


[GitHub] [incubator-hudi] haospotai commented on issue #1284: [SUPPORT]

Posted by GitBox <gi...@apache.org>.

haospotai commented on issue #1284: [SUPPORT]

URL: https://github.com/apache/incubator-hudi/issues/1284#issuecomment-579688448

```

[INFO] Reactor Summary:

[INFO]

[INFO] Hudi 0.5.1-incubating-rc1 .......................... SUCCESS [ 2.221 s]

[INFO] hudi-common ........................................ SUCCESS [ 10.973 s]

[INFO] hudi-timeline-service .............................. SUCCESS [ 1.678 s]

[INFO] hudi-hadoop-mr ..................................... SUCCESS [ 5.598 s]

[INFO] hudi-client ........................................ SUCCESS [ 8.200 s]

[INFO] hudi-hive .......................................... SUCCESS [ 4.769 s]

[INFO] hudi-spark_2.11 .................................... SUCCESS [ 23.131 s]

[INFO] hudi-utilities_2.11 ................................ SUCCESS [ 6.523 s]

[INFO] hudi-cli ........................................... SUCCESS [ 7.150 s]

[INFO] hudi-hadoop-mr-bundle .............................. SUCCESS [ 1.489 s]

[INFO] hudi-hive-bundle ................................... SUCCESS [ 0.628 s]