Posted to commits@seatunnel.apache.org by GitBox <gi...@apache.org> on 2022/04/17 06:34:37 UTC

[GitHub] [incubator-seatunnel] ruanwenjun opened a new issue, #1708: [Feature][Connectors] Add integration test for connectors

ruanwenjun opened a new issue, #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708

### Search before asking

- [X] I had searched in the [feature](https://github.com/apache/incubator-seatunnel/issues?q=is%3Aissue+label%3A%22Feature%22) and found no similar feature requirement.

### Description

SeaTunnel supports syncing data between many data sources, e.g. MySQL, Kafka, Elasticsearch. For each data source, we have a connector plugin.

Right now, we have an e2e module for IT (integration tests), but most connectors have no IT. We need to add IT for them to make the code more stable.

### Usage Scenario

_No response_

### Related issues

_No response_

### Are you willing to submit a PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://www.apache.org/foundation/policies/conduct)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@seatunnel.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-seatunnel] tmljob commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

tmljob commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1103357161

> @tmljob Hi, thanks for your feedback. If you want to run the case in e2e module, you need to start a Docker environment first. Here is a simple doc to introduce [e2e module](https://github.com/apache/incubator-seatunnel/blob/dev/docs/en/contribution/contribute-plugin.md#add-e2e-tests-for-your-plugin).

After adding an IT case, I want to run it to check that it works. Taking FakeSourceToConsoleIT as an example: after startup, the program hangs and never finishes. How can this be resolved?

https://user-images.githubusercontent.com/69188034/164130444-8b7741be-ff82-4f96-8d43-312d97983bd7.mp4


[GitHub] [incubator-seatunnel] tmljob commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

tmljob commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1103444727

> > > @tmljob Hi, thanks for your feedback. If you want to run the case in e2e module, you need to start a Docker environment first. Here is a simple doc to introduce [e2e module](https://github.com/apache/incubator-seatunnel/blob/dev/docs/en/contribution/contribute-plugin.md#add-e2e-tests-for-your-plugin).

> >

> >

> > After adding an IT use case, I want to run it to see if it can run normally. Take FakeSourceToConsoleIT as an example. After startup, the program has been stuck there and cannot run normally. How is this resolved?

> > it.mp4

>

> I can't open your `it.mp4`, if you have a Docker environment, when you run the IT case, it will pull the docker image, if it stuck, maybe due to network issue.

After fixing the network problem that prevented the image from being pulled, running the IT case now reports the error below. How can I solve it?

```

22/04/20 11:47:21 ERROR SparkContainer: 22/04/20 03:47:20 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

22/04/20 03:47:20 WARN DependencyUtils: Local jar /opt/bitnami/spark/tmpseatunnel-core-spark.jar does not exist, skipping.

22/04/20 03:47:20 WARN SparkSubmit$$anon$2: Failed to load org.apache.seatunnel.SeatunnelSpark.

java.lang.ClassNotFoundException: org.apache.seatunnel.SeatunnelSpark

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at org.apache.spark.util.Utils$.classForName(Utils.scala:238)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:810)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

22/04/20 03:47:20 INFO ShutdownHookManager: Shutdown hook called

22/04/20 03:47:20 INFO ShutdownHookManager: Deleting directory /tmp/spark-1d155996-bd2c-447e-b015-9564eb0ff107

java.lang.AssertionError:

Expected :0

Actual   :101

<Click to see difference>

at org.junit.Assert.fail(Assert.java:89)

at org.junit.Assert.failNotEquals(Assert.java:835)

at org.junit.Assert.assertEquals(Assert.java:647)

at org.junit.Assert.assertEquals(Assert.java:633)

at org.apache.seatunnel.e2e.spark.fake.FakeSourceToConsoleIT.testFakeSourceToConsoleSine(FakeSourceToConsoleIT.java:37)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)

at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)

at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

at org.junit.runners.BlockJUnit4ClassRunner$1.evaluate(BlockJUnit4ClassRunner.java:100)

at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:366)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:103)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:63)

at org.junit.runners.ParentRunner$4.run(ParentRunner.java:331)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:79)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:329)

at org.junit.runners.ParentRunner.access$100(ParentRunner.java:66)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:293)

at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

at org.junit.runners.ParentRunner.run(ParentRunner.java:413)

at org.junit.runner.JUnitCore.run(JUnitCore.java:137)

at com.intellij.junit4.JUnit4IdeaTestRunner.startRunnerWithArgs(JUnit4IdeaTestRunner.java:69)

at com.intellij.rt.junit.IdeaTestRunner$Repeater.startRunnerWithArgs(IdeaTestRunner.java:33)

at com.intellij.rt.junit.JUnitStarter.prepareStreamsAndStart(JUnitStarter.java:235)

at com.intellij.rt.junit.JUnitStarter.main(JUnitStarter.java:54)

```


[GitHub] [incubator-seatunnel] tmljob commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

tmljob commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1102253224

How do I run IT cases in an IDE development environment? I ran FakeSourceToConsoleIT and got the error below. Is there any configuration I need to do? Are there any instructions?

```

D:\program\java\jdk1.8.0_191\bin\java.exe -ea -Djacoco-agent.destfile=D:\03_sourcecode\incubator-seatunnel\seatunnel-e2e\seatunnel-spark-e2e\target/jacoco.exec -Didea.test.cyclic.buffer.size=1048576 "-javaagent:D:\Program Files\JetBrains\IntelliJ IDEA 2021.2.2\lib\idea_rt.jar=57670:D:\Program Files\JetBrains\IntelliJ IDEA 2021.2.2\bin" -Dfile.encoding=UTF-8 -classpath C:\Users\tianminliang\AppData\Local\Temp\classpath1842213126.jar com.intellij.rt.junit.JUnitStarter -ideVersion5 -junit4 org.apache.seatunnel.e2e.spark.fake.FakeSourceToConsoleIT

22/04/17 13:01:08 ERROR DockerClientProviderStrategy: Could not find a valid Docker environment. Please check configuration. Attempted configurations were:

22/04/17 13:01:08 ERROR DockerClientProviderStrategy: NpipeSocketClientProviderStrategy: failed with exception TimeoutException (org.rnorth.ducttape.TimeoutException: java.util.concurrent.TimeoutException). Root cause TimeoutException (null)

22/04/17 13:01:08 ERROR DockerClientProviderStrategy: As no valid configuration was found, execution cannot continue

java.util.concurrent.CompletionException: java.lang.IllegalStateException: Could not find a valid Docker environment. Please see logs and check configuration

at java.util.concurrent.CompletableFuture.encodeThrowable(CompletableFuture.java:273)

at java.util.concurrent.CompletableFuture.completeThrowable(CompletableFuture.java:280)

at java.util.concurrent.CompletableFuture.uniRun(CompletableFuture.java:708)

at java.util.concurrent.CompletableFuture$UniRun.tryFire(CompletableFuture.java:687)

at java.util.concurrent.CompletableFuture$Completion.run(CompletableFuture.java:442)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.IllegalStateException: Could not find a valid Docker environment. Please see logs and check configuration

at org.testcontainers.dockerclient.DockerClientProviderStrategy.lambda$getFirstValidStrategy$4(DockerClientProviderStrategy.java:156)

at java.util.Optional.orElseThrow(Optional.java:290)

at org.testcontainers.dockerclient.DockerClientProviderStrategy.getFirstValidStrategy(DockerClientProviderStrategy.java:148)

at org.testcontainers.DockerClientFactory.getOrInitializeStrategy(DockerClientFactory.java:146)

at org.testcontainers.DockerClientFactory.client(DockerClientFactory.java:188)

at org.testcontainers.DockerClientFactory$1.getDockerClient(DockerClientFactory.java:101)

at com.github.dockerjava.api.DockerClientDelegate.authConfig(DockerClientDelegate.java:107)

at org.testcontainers.containers.GenericContainer.start(GenericContainer.java:316)

at java.util.concurrent.CompletableFuture.uniRun(CompletableFuture.java:705)

... 5 more

```


[GitHub] [incubator-seatunnel] ruanwenjun commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

ruanwenjun commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1103366742

> > @tmljob Hi, thanks for your feedback. If you want to run the case in e2e module, you need to start a Docker environment first. Here is a simple doc to introduce [e2e module](https://github.com/apache/incubator-seatunnel/blob/dev/docs/en/contribution/contribute-plugin.md#add-e2e-tests-for-your-plugin).

>

> After adding an IT use case, I want to run it to see if it can run normally. Take FakeSourceToConsoleIT as an example. After startup, the program has been stuck there and cannot run normally. How is this resolved?

>

> it.mp4

I can't open your `it.mp4`. If you have a Docker environment, running the IT case will pull the Docker image; if it gets stuck, that may be due to a network issue.


[GitHub] [incubator-seatunnel] ruanwenjun commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

ruanwenjun commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1104634591

> It has been solved because the path generated under windows, but under docker, the path cannot be correctly

Yes, I use macOS. It would be appreciated if you could help fix the path error on Windows.


[GitHub] [incubator-seatunnel] tmljob commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

tmljob commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1103615177

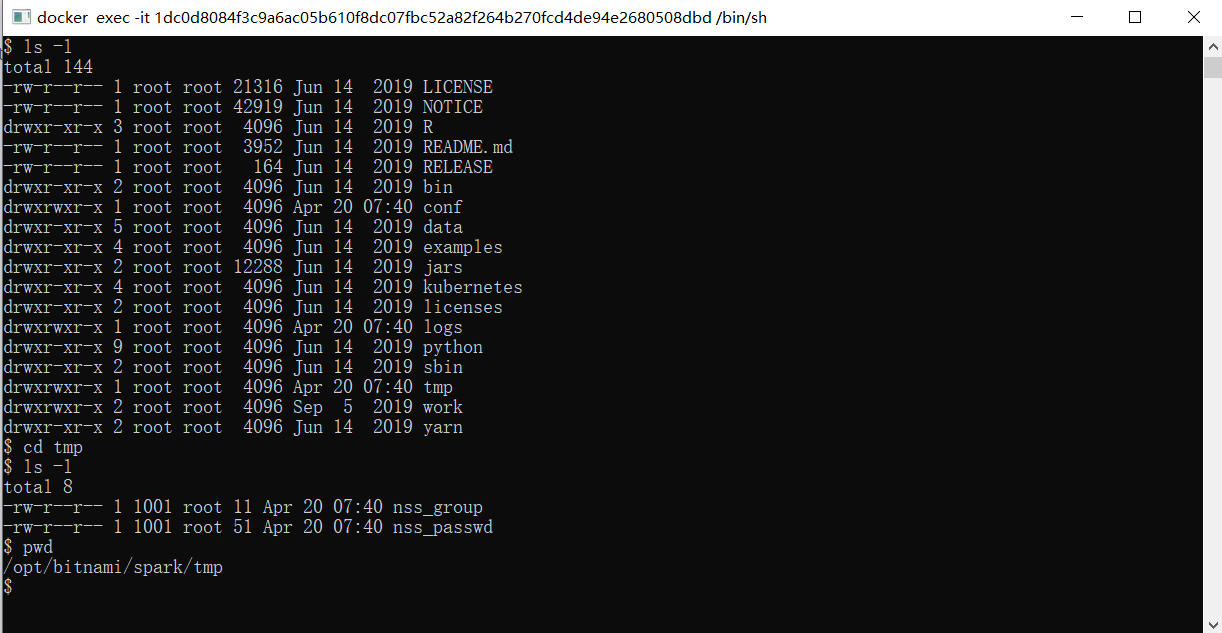

@ruanwenjun After I ran the mvn build, the same error was reported. I debugged and found that seatunnel-core-spark.jar was not uploaded to the Docker container. Also, why does the log show the jar being loaded from this path?

```

22/04/20 07:39:55 WARN DependencyUtils: Local jar /opt/bitnami/spark/tmpseatunnel-core-spark.jar does not exist, skipping.

```


[GitHub] [incubator-seatunnel] ruanwenjun commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

ruanwenjun commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1102264500

Hi, thanks for your feedback. If you want to run a case in the e2e module, you need to start a Docker environment first. Here is a short doc that introduces the [e2e module](https://github.com/apache/incubator-seatunnel/blob/dev/docs/en/contribution/contribute-plugin.md#add-e2e-tests-for-your-plugin).
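A minimal sketch of a pre-flight check, assuming the `docker` CLI is on the `PATH`: it runs `docker version`, which normally exits non-zero when the daemon cannot be reached. The class and method names are hypothetical and not part of the e2e module; Testcontainers performs its own detection, so this is only a quick way to rule out a missing Docker environment before launching an IT case from the IDE.

```java
import java.io.IOException;
import java.util.concurrent.TimeUnit;

// Hypothetical helper for illustration; not part of SeaTunnel or Testcontainers.
class DockerCheckSketch {

    /** Returns true if `docker version` exits with code 0 within the timeout. */
    static boolean dockerAvailable(long timeoutSeconds) {
        try {
            Process process = new ProcessBuilder("docker", "version")
                    .redirectErrorStream(true)
                    .start();
            // Give up if the CLI does not answer in time (e.g. daemon is hanging).
            if (!process.waitFor(timeoutSeconds, TimeUnit.SECONDS)) {
                process.destroyForcibly();
                return false;
            }
            return process.exitValue() == 0;
        } catch (IOException e) {
            // The `docker` executable could not be started at all.
            return false;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("Docker available: " + dockerAvailable(10));
    }
}
```

If this prints `false`, the `Could not find a valid Docker environment` error from Testcontainers is the expected outcome, and Docker needs to be started before running the IT case.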


[GitHub] [incubator-seatunnel] tmljob commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

tmljob commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1109709178

@ruanwenjun

It seems that SparkContainer does not support the use case of running plugins based on SparkStreamingSource extensions. Can you help clarify the reason?

```

D:\program\java\jdk1.8.0_191\bin\java.exe -ea -Djacoco-agent.destfile=D:\03_sourcecode\incubator-seatunnel\seatunnel-e2e\seatunnel-spark-e2e\target/jacoco.exec -Didea.test.cyclic.buffer.size=1048576 "-javaagent:D:\Program Files\JetBrains\IntelliJ IDEA 2021.2.2\lib\idea_rt.jar=57251:D:\Program Files\JetBrains\IntelliJ IDEA 2021.2.2\bin" -Dfile.encoding=UTF-8 -classpath C:\Users\tianminliang\AppData\Local\Temp\classpath1801293449.jar com.intellij.rt.junit.JUnitStarter -ideVersion5 -junit4 org.apache.seatunnel.e2e.spark.webhook.WebhookSourceToConsoleIT

22/04/26 16:50:51 ERROR SparkContainer: 22/04/26 08:50:49 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

log4j:WARN No appenders could be found for logger (org.apache.seatunnel.config.ConfigBuilder).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

22/04/26 08:50:50 INFO SparkContext: Running Spark version 2.4.3

22/04/26 08:50:50 INFO SparkContext: Submitted application: SeaTunnel

22/04/26 08:50:50 INFO SecurityManager: Changing view acls to: spark

22/04/26 08:50:50 INFO SecurityManager: Changing modify acls to: spark

22/04/26 08:50:50 INFO SecurityManager: Changing view acls groups to:

22/04/26 08:50:50 INFO SecurityManager: Changing modify acls groups to:

22/04/26 08:50:50 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); groups with view permissions: Set(); users with modify permissions: Set(spark); groups with modify permissions: Set()

22/04/26 08:50:50 INFO Utils: Successfully started service 'sparkDriver' on port 34501.

22/04/26 08:50:50 INFO SparkEnv: Registering MapOutputTracker

22/04/26 08:50:50 INFO SparkEnv: Registering BlockManagerMaster

22/04/26 08:50:50 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

22/04/26 08:50:50 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

22/04/26 08:50:50 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-a78f8502-4143-43d2-bf4b-96da7da8e957

22/04/26 08:50:50 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

22/04/26 08:50:50 INFO SparkEnv: Registering OutputCommitCoordinator

22/04/26 08:50:50 INFO Utils: Successfully started service 'SparkUI' on port 4040.

22/04/26 08:50:50 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://1f4430d6921d:4040

22/04/26 08:50:50 INFO SparkContext: Added JAR file:/tmp/seatunnel-core-spark.jar at spark://1f4430d6921d:34501/jars/seatunnel-core-spark.jar with timestamp 1650963050795

22/04/26 08:50:50 INFO Executor: Starting executor ID driver on host localhost

22/04/26 08:50:50 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 45259.

22/04/26 08:50:50 INFO NettyBlockTransferService: Server created on 1f4430d6921d:45259

22/04/26 08:50:50 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

22/04/26 08:50:50 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 1f4430d6921d, 45259, None)

22/04/26 08:50:50 INFO BlockManagerMasterEndpoint: Registering block manager 1f4430d6921d:45259 with 366.3 MB RAM, BlockManagerId(driver, 1f4430d6921d, 45259, None)

22/04/26 08:50:50 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 1f4430d6921d, 45259, None)

22/04/26 08:50:50 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 1f4430d6921d, 45259, None)

22/04/26 08:50:51 WARN StreamingContext: spark.master should be set as local[n], n > 1 in local mode if you have receivers to get data, otherwise Spark jobs will not get resources to process the received data.

22/04/26 08:50:51 ERROR Seatunnel:

===============================================================================

22/04/26 08:50:51 ERROR Seatunnel: Fatal Error,

22/04/26 08:50:51 ERROR Seatunnel: Please submit bug report in https://github.com/apache/incubator-seatunnel/issues

22/04/26 08:50:51 ERROR Seatunnel: Reason:java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

22/04/26 08:50:51 ERROR Seatunnel: Exception StackTrace:java.lang.RuntimeException: java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

at org.apache.seatunnel.config.PluginFactory.lambda$createPlugins$0(PluginFactory.java:96)

at java.util.ArrayList.forEach(ArrayList.java:1257)

at org.apache.seatunnel.config.PluginFactory.createPlugins(PluginFactory.java:90)

at org.apache.seatunnel.config.ExecutionContext.<init>(ExecutionContext.java:52)

at org.apache.seatunnel.command.spark.SparkTaskExecuteCommand.execute(SparkTaskExecuteCommand.java:44)

at org.apache.seatunnel.command.spark.SparkTaskExecuteCommand.execute(SparkTaskExecuteCommand.java:36)

at org.apache.seatunnel.Seatunnel.run(Seatunnel.java:48)

at org.apache.seatunnel.SeatunnelSpark.main(SeatunnelSpark.java:27)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

at org.apache.seatunnel.config.PluginFactory.createPluginInstanceIgnoreCase(PluginFactory.java:132)

at org.apache.seatunnel.config.PluginFactory.lambda$createPlugins$0(PluginFactory.java:92)

... 19 more

22/04/26 08:50:51 ERROR Seatunnel:

===============================================================================

Exception in thread "main" java.lang.RuntimeException: java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

at org.apache.seatunnel.config.PluginFactory.lambda$createPlugins$0(PluginFactory.java:96)

at java.util.ArrayList.forEach(ArrayList.java:1257)

at org.apache.seatunnel.config.PluginFactory.createPlugins(PluginFactory.java:90)

at org.apache.seatunnel.config.ExecutionContext.<init>(ExecutionContext.java:52)

at org.apache.seatunnel.command.spark.SparkTaskExecuteCommand.execute(SparkTaskExecuteCommand.java:44)

at org.apache.seatunnel.command.spark.SparkTaskExecuteCommand.execute(SparkTaskExecuteCommand.java:36)

at org.apache.seatunnel.Seatunnel.run(Seatunnel.java:48)

at org.apache.seatunnel.SeatunnelSpark.main(SeatunnelSpark.java:27)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

at org.apache.seatunnel.config.PluginFactory.createPluginInstanceIgnoreCase(PluginFactory.java:132)

at org.apache.seatunnel.config.PluginFactory.lambda$createPlugins$0(PluginFactory.java:92)

... 19 more

22/04/26 08:50:51 INFO SparkContext: Invoking stop() from shutdown hook

22/04/26 08:50:51 INFO SparkUI: Stopped Spark web UI at http://1f4430d6921d:4040

22/04/26 08:50:51 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/04/26 08:50:51 INFO MemoryStore: MemoryStore cleared

22/04/26 08:50:51 INFO BlockManager: BlockManager stopped

22/04/26 08:50:51 INFO BlockManagerMaster: BlockManagerMaster stopped

22/04/26 08:50:51 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/04/26 08:50:51 INFO SparkContext: Successfully stopped SparkContext

22/04/26 08:50:51 INFO ShutdownHookManager: Shutdown hook called

22/04/26 08:50:51 INFO ShutdownHookManager: Deleting directory /tmp/spark-d35c388f-46a3-4e64-afd8-3a2ffc602f26

22/04/26 08:50:51 INFO ShutdownHookManager: Deleting directory /tmp/spark-5e8ca489-3556-4274-89b2-436bdb69fd21

java.lang.AssertionError:

Expected :0

Actual   :1

<Click to see difference>

at org.junit.Assert.fail(Assert.java:89)

at org.junit.Assert.failNotEquals(Assert.java:835)

at org.junit.Assert.assertEquals(Assert.java:647)

at org.junit.Assert.assertEquals(Assert.java:633)

at org.apache.seatunnel.e2e.spark.webhook.WebhookSourceToConsoleIT.testWebhookSourceToConsoleSine(WebhookSourceToConsoleIT.java:16)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)

at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)

at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

at org.junit.runners.BlockJUnit4ClassRunner$1.evaluate(BlockJUnit4ClassRunner.java:100)

at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:366)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:103)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:63)

at org.junit.runners.ParentRunner$4.run(ParentRunner.java:331)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:79)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:329)

at org.junit.runners.ParentRunner.access$100(ParentRunner.java:66)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:293)

at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

at org.junit.runners.ParentRunner.run(ParentRunner.java:413)

at org.junit.runner.JUnitCore.run(JUnitCore.java:137)

at com.intellij.junit4.JUnit4IdeaTestRunner.startRunnerWithArgs(JUnit4IdeaTestRunner.java:69)

at com.intellij.rt.junit.IdeaTestRunner$Repeater.startRunnerWithArgs(IdeaTestRunner.java:33)

at com.intellij.rt.junit.JUnitStarter.prepareStreamsAndStart(JUnitStarter.java:235)

at com.intellij.rt.junit.JUnitStarter.main(JUnitStarter.java:54)

```

1. The Webhook plugin has been packaged into seatunnel-core-spark.jar.

2. The Spark Streaming plugin appears to be run as a batch plugin.


[GitHub] [incubator-seatunnel] ruanwenjun commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

ruanwenjun commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1103626996

> 22/04/20 07:39:55 WARN DependencyUtils: Local jar /opt/bitnami/spark/tmpseatunnel-core-spark.jar does not exist, skipping.

It seems the path is missing a `/`. The jar path in the container is defined by the code below, and I am not sure whether it is compatible with Windows.

```java

private static final String SEATUNNEL_SPARK_JAR = "seatunnel-core-spark.jar";

private static final String SPARK_JAR_PATH = Paths.get("/tmp", SEATUNNEL_SPARK_JAR).toString();

```
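For illustration, a minimal sketch of why the separator can get lost and one way to avoid it. On Windows, `Paths.get("/tmp", SEATUNNEL_SPARK_JAR).toString()` returns `\tmp\seatunnel-core-spark.jar`; if that string then reaches a Linux shell inside the container, the backslashes are likely consumed as escape characters, which would be consistent with the `tmpseatunnel-core-spark.jar` seen in the log. The `containerPath` helper and the class name below are hypothetical and not part of the e2e module, and this is not necessarily the fix that was eventually applied.

```java
import java.nio.file.Paths;

// Hypothetical sketch; names are assumptions for illustration only.
class ContainerPathSketch {

    private static final String SEATUNNEL_SPARK_JAR = "seatunnel-core-spark.jar";

    /** Joins segments with '/' so the result does not depend on the host OS. */
    static String containerPath(String first, String... more) {
        StringBuilder path = new StringBuilder(first);
        for (String segment : more) {
            if (path.length() == 0 || path.charAt(path.length() - 1) != '/') {
                path.append('/');
            }
            path.append(segment);
        }
        return path.toString();
    }

    public static void main(String[] args) {
        // Uses the host file system's separator: '\' on Windows, '/' elsewhere.
        String hostDependent = Paths.get("/tmp", SEATUNNEL_SPARK_JAR).toString();
        // Always uses '/', which is what the Linux container expects.
        String hostIndependent = containerPath("/tmp", SEATUNNEL_SPARK_JAR);

        System.out.println("Paths.get:     " + hostDependent);
        System.out.println("containerPath: " + hostIndependent);
    }
}
```

On Linux and macOS both lines print the same path; only on a Windows host do they differ, which may be why the problem did not show up in CI.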


[GitHub] [incubator-seatunnel] tmljob commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

tmljob commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1104624133

> > 22/04/20 07:39:55 WARN DependencyUtils: Local jar /opt/bitnami/spark/tmpseatunnel-core-spark.jar does not exist, skipping.

>

> It seems they're missing a `/`, the jar path in container is defined by below code, I am not sure if this is compatible with Windows. Right now, we didn't have Windows in our CI.

>

> ```java

> private static final String SEATUNNEL_SPARK_JAR = "seatunnel-core-spark.jar";

> private static final String SPARK_JAR_PATH = Paths.get("/tmp", SEATUNNEL_SPARK_JAR).toString();

> ```

It has been solved. The cause was that the path is generated on Windows, but inside Docker, which runs Linux, that path cannot be resolved correctly. By the way, do you usually develop on Linux with the IDEA IDE?


[GitHub] [incubator-seatunnel] tmljob commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

tmljob commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1104681405

> > It has been solved because the path generated under windows, but under docker, the path cannot be correctly

>

> Yes, I use macOS, it would be appreciated if you can help to solve the path error on Windows.

I have submitted a PR to fix it. #1716


[GitHub] [incubator-seatunnel] ruanwenjun commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

ruanwenjun commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1103450898

@tmljob Good catch. You may need to execute `mvn clean package` first; I will update the docs to mention this.

--


[GitHub] [incubator-seatunnel] ruanwenjun commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

ruanwenjun commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1109716628

@tmljob Thanks for your feedback. I will skip this IT case in #1722 and fix it in another PR.

--


[GitHub] [incubator-seatunnel] tmljob commented on issue #1708: [Feature][Connectors] Add integration test for connectors

Posted by GitBox <gi...@apache.org>.

tmljob commented on issue #1708:

URL: https://github.com/apache/incubator-seatunnel/issues/1708#issuecomment-1109719041

@ruanwenjun

It seems that SparkContainer does not support the use case of running plugins based on SparkStreamingSource extensions. Can you help clarify the reason?

```

D:\program\java\jdk1.8.0_191\bin\java.exe -ea -Djacoco-agent.destfile=D:\03_sourcecode\incubator-seatunnel\seatunnel-e2e\seatunnel-spark-e2e\target/jacoco.exec -Didea.test.cyclic.buffer.size=1048576 "-javaagent:D:\Program Files\JetBrains\IntelliJ IDEA 2021.2.2\lib\idea_rt.jar=57251:D:\Program Files\JetBrains\IntelliJ IDEA 2021.2.2\bin" -Dfile.encoding=UTF-8 -classpath C:\Users\tianminliang\AppData\Local\Temp\classpath1801293449.jar com.intellij.rt.junit.JUnitStarter -ideVersion5 -junit4 org.apache.seatunnel.e2e.spark.webhook.WebhookSourceToConsoleIT

22/04/26 16:50:51 ERROR SparkContainer: 22/04/26 08:50:49 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

log4j:WARN No appenders could be found for logger (org.apache.seatunnel.config.ConfigBuilder).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

22/04/26 08:50:50 INFO SparkContext: Running Spark version 2.4.3

22/04/26 08:50:50 INFO SparkContext: Submitted application: SeaTunnel

22/04/26 08:50:50 INFO SecurityManager: Changing view acls to: spark

22/04/26 08:50:50 INFO SecurityManager: Changing modify acls to: spark

22/04/26 08:50:50 INFO SecurityManager: Changing view acls groups to:

22/04/26 08:50:50 INFO SecurityManager: Changing modify acls groups to:

22/04/26 08:50:50 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); groups with view permissions: Set(); users with modify permissions: Set(spark); groups with modify permissions: Set()

22/04/26 08:50:50 INFO Utils: Successfully started service 'sparkDriver' on port 34501.

22/04/26 08:50:50 INFO SparkEnv: Registering MapOutputTracker

22/04/26 08:50:50 INFO SparkEnv: Registering BlockManagerMaster

22/04/26 08:50:50 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

22/04/26 08:50:50 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

22/04/26 08:50:50 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-a78f8502-4143-43d2-bf4b-96da7da8e957

22/04/26 08:50:50 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

22/04/26 08:50:50 INFO SparkEnv: Registering OutputCommitCoordinator

22/04/26 08:50:50 INFO Utils: Successfully started service 'SparkUI' on port 4040.

22/04/26 08:50:50 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://1f4430d6921d:4040

22/04/26 08:50:50 INFO SparkContext: Added JAR file:/tmp/seatunnel-core-spark.jar at spark://1f4430d6921d:34501/jars/seatunnel-core-spark.jar with timestamp 1650963050795

22/04/26 08:50:50 INFO Executor: Starting executor ID driver on host localhost

22/04/26 08:50:50 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 45259.

22/04/26 08:50:50 INFO NettyBlockTransferService: Server created on 1f4430d6921d:45259

22/04/26 08:50:50 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

22/04/26 08:50:50 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 1f4430d6921d, 45259, None)

22/04/26 08:50:50 INFO BlockManagerMasterEndpoint: Registering block manager 1f4430d6921d:45259 with 366.3 MB RAM, BlockManagerId(driver, 1f4430d6921d, 45259, None)

22/04/26 08:50:50 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 1f4430d6921d, 45259, None)

22/04/26 08:50:50 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 1f4430d6921d, 45259, None)

22/04/26 08:50:51 WARN StreamingContext: spark.master should be set as local[n], n > 1 in local mode if you have receivers to get data, otherwise Spark jobs will not get resources to process the received data.

22/04/26 08:50:51 ERROR Seatunnel:

===============================================================================

22/04/26 08:50:51 ERROR Seatunnel: Fatal Error,

22/04/26 08:50:51 ERROR Seatunnel: Please submit bug report in https://github.com/apache/incubator-seatunnel/issues

22/04/26 08:50:51 ERROR Seatunnel: Reason:java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

22/04/26 08:50:51 ERROR Seatunnel: Exception StackTrace:java.lang.RuntimeException: java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

at org.apache.seatunnel.config.PluginFactory.lambda$createPlugins$0(PluginFactory.java:96)

at java.util.ArrayList.forEach(ArrayList.java:1257)

at org.apache.seatunnel.config.PluginFactory.createPlugins(PluginFactory.java:90)

at org.apache.seatunnel.config.ExecutionContext.<init>(ExecutionContext.java:52)

at org.apache.seatunnel.command.spark.SparkTaskExecuteCommand.execute(SparkTaskExecuteCommand.java:44)

at org.apache.seatunnel.command.spark.SparkTaskExecuteCommand.execute(SparkTaskExecuteCommand.java:36)

at org.apache.seatunnel.Seatunnel.run(Seatunnel.java:48)

at org.apache.seatunnel.SeatunnelSpark.main(SeatunnelSpark.java:27)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

at org.apache.seatunnel.config.PluginFactory.createPluginInstanceIgnoreCase(PluginFactory.java:132)

at org.apache.seatunnel.config.PluginFactory.lambda$createPlugins$0(PluginFactory.java:92)

... 19 more

22/04/26 08:50:51 ERROR Seatunnel:

===============================================================================

Exception in thread "main" java.lang.RuntimeException: java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

at org.apache.seatunnel.config.PluginFactory.lambda$createPlugins$0(PluginFactory.java:96)

at java.util.ArrayList.forEach(ArrayList.java:1257)

at org.apache.seatunnel.config.PluginFactory.createPlugins(PluginFactory.java:90)

at org.apache.seatunnel.config.ExecutionContext.<init>(ExecutionContext.java:52)

at org.apache.seatunnel.command.spark.SparkTaskExecuteCommand.execute(SparkTaskExecuteCommand.java:44)

at org.apache.seatunnel.command.spark.SparkTaskExecuteCommand.execute(SparkTaskExecuteCommand.java:36)

at org.apache.seatunnel.Seatunnel.run(Seatunnel.java:48)

at org.apache.seatunnel.SeatunnelSpark.main(SeatunnelSpark.java:27)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:849)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:167)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:195)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:924)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:933)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: Plugin class not found by name :[Webhook]

at org.apache.seatunnel.config.PluginFactory.createPluginInstanceIgnoreCase(PluginFactory.java:132)

at org.apache.seatunnel.config.PluginFactory.lambda$createPlugins$0(PluginFactory.java:92)

... 19 more

22/04/26 08:50:51 INFO SparkContext: Invoking stop() from shutdown hook

22/04/26 08:50:51 INFO SparkUI: Stopped Spark web UI at http://1f4430d6921d:4040

22/04/26 08:50:51 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/04/26 08:50:51 INFO MemoryStore: MemoryStore cleared

22/04/26 08:50:51 INFO BlockManager: BlockManager stopped

22/04/26 08:50:51 INFO BlockManagerMaster: BlockManagerMaster stopped

22/04/26 08:50:51 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/04/26 08:50:51 INFO SparkContext: Successfully stopped SparkContext

22/04/26 08:50:51 INFO ShutdownHookManager: Shutdown hook called

22/04/26 08:50:51 INFO ShutdownHookManager: Deleting directory /tmp/spark-d35c388f-46a3-4e64-afd8-3a2ffc602f26

22/04/26 08:50:51 INFO ShutdownHookManager: Deleting directory /tmp/spark-5e8ca489-3556-4274-89b2-436bdb69fd21

java.lang.AssertionError:

Expected :0

Actual   :1

<Click to see difference>

at org.junit.Assert.fail(Assert.java:89)

at org.junit.Assert.failNotEquals(Assert.java:835)

at org.junit.Assert.assertEquals(Assert.java:647)

at org.junit.Assert.assertEquals(Assert.java:633)

at org.apache.seatunnel.e2e.spark.webhook.WebhookSourceToConsoleIT.testWebhookSourceToConsoleSine(WebhookSourceToConsoleIT.java:16)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:59)

at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:56)

at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.internal.runners.statements.RunBefores.evaluate(RunBefores.java:26)

at org.junit.internal.runners.statements.RunAfters.evaluate(RunAfters.java:27)

at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

at org.junit.runners.BlockJUnit4ClassRunner$1.evaluate(BlockJUnit4ClassRunner.java:100)

at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:366)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:103)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:63)

at org.junit.runners.ParentRunner$4.run(ParentRunner.java:331)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:79)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:329)

at org.junit.runners.ParentRunner.access$100(ParentRunner.java:66)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:293)

at org.junit.runners.ParentRunner$3.evaluate(ParentRunner.java:306)

at org.junit.runners.ParentRunner.run(ParentRunner.java:413)

at org.junit.runner.JUnitCore.run(JUnitCore.java:137)

at com.intellij.junit4.JUnit4IdeaTestRunner.startRunnerWithArgs(JUnit4IdeaTestRunner.java:69)

at com.intellij.rt.junit.IdeaTestRunner$Repeater.startRunnerWithArgs(IdeaTestRunner.java:33)

at com.intellij.rt.junit.JUnitStarter.prepareStreamsAndStart(JUnitStarter.java:235)

at com.intellij.rt.junit.JUnitStarter.main(JUnitStarter.java:54)

```

1. The Webhook plugin has been packaged into seatunnel-core-spark.jar;

2. The Spark Streaming plugin appears to be run as a batch plugin.

--
