
Posted to notifications@apisix.apache.org by "githubxubin (via GitHub)" <gi...@apache.org> on 2023/04/20 03:05:29 UTC

[GitHub] [apisix] githubxubin opened a new issue, #9343: QPS too low!!!

githubxubin opened a new issue, #9343:

URL: https://github.com/apache/apisix/issues/9343

### Description

I used wrk2 for performance testing, but the results are confusing. Is there a problem with my configuration? (CentOS 7, 4 cores, 32 GB RAM)

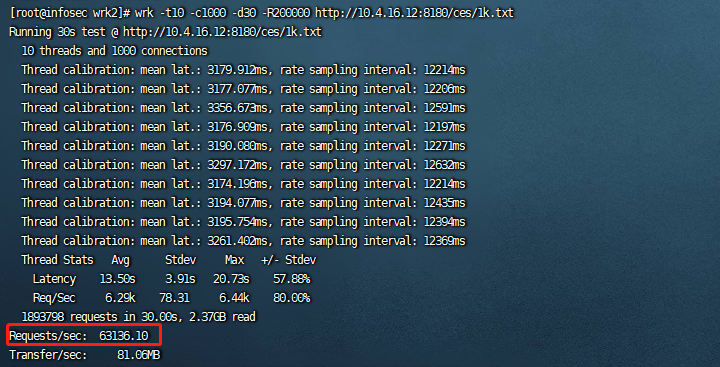

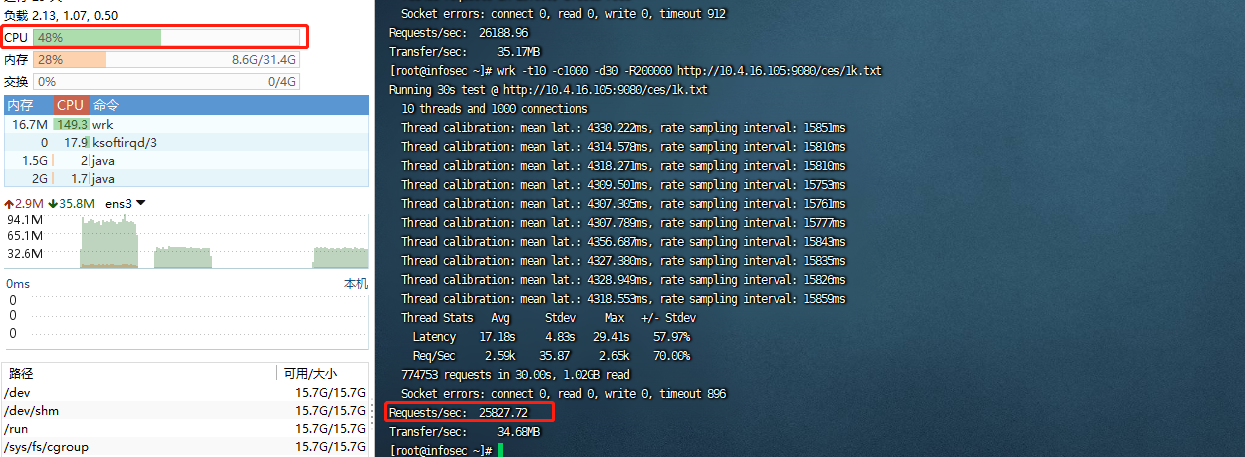

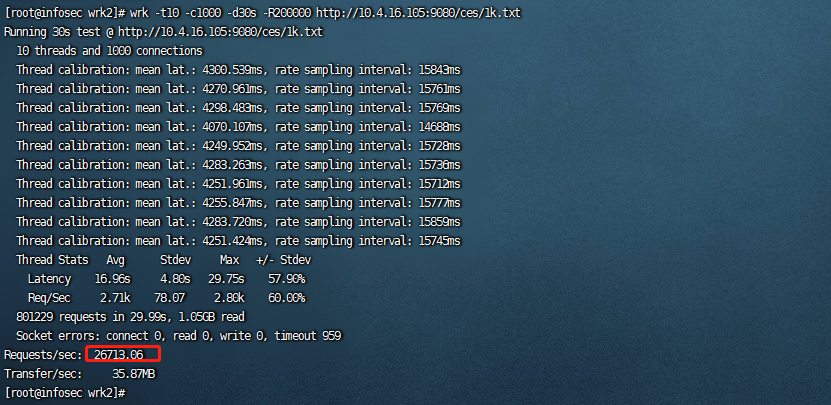

Testing the upstream directly, the result is:

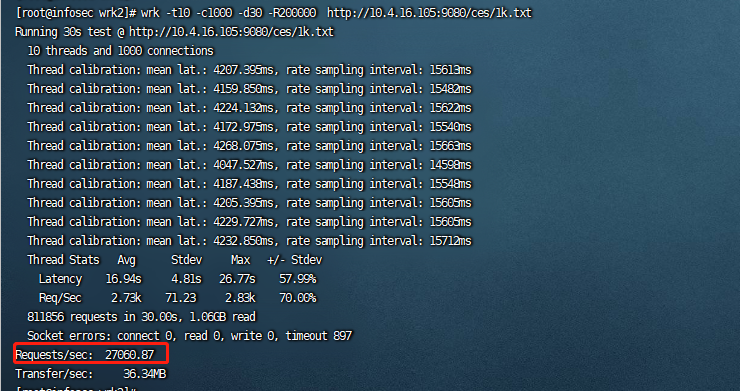

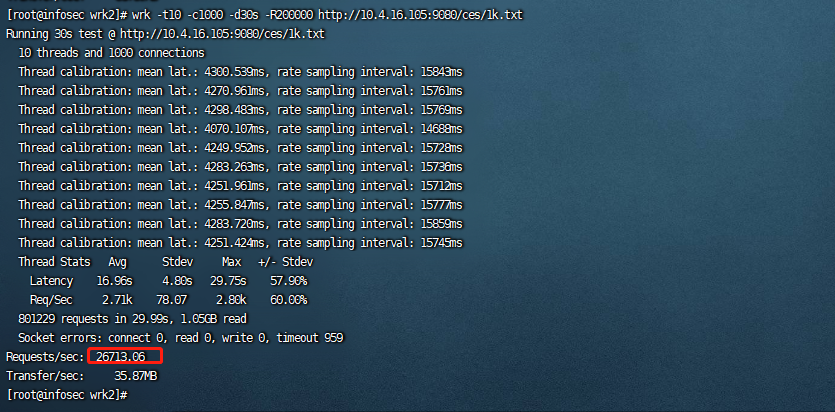

Through the APISIX proxy, the result is:

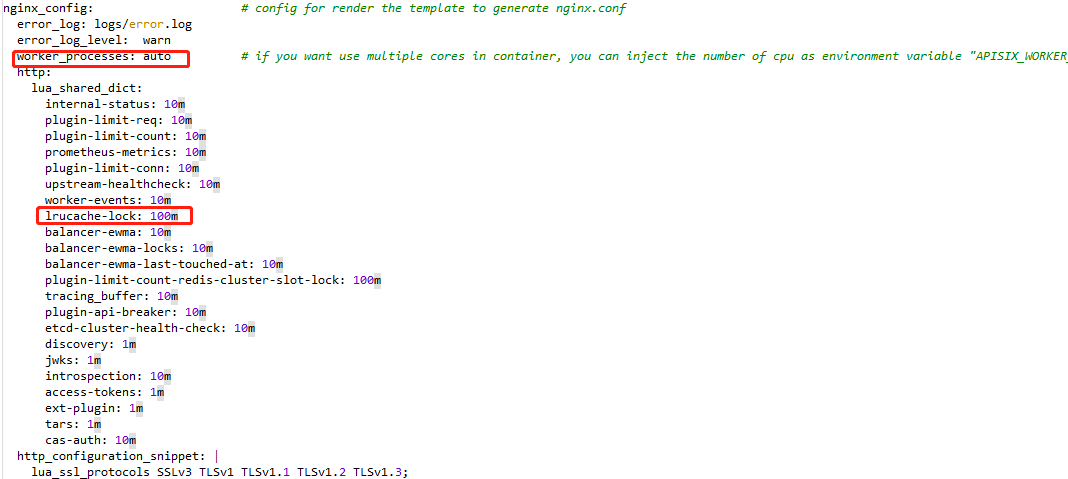

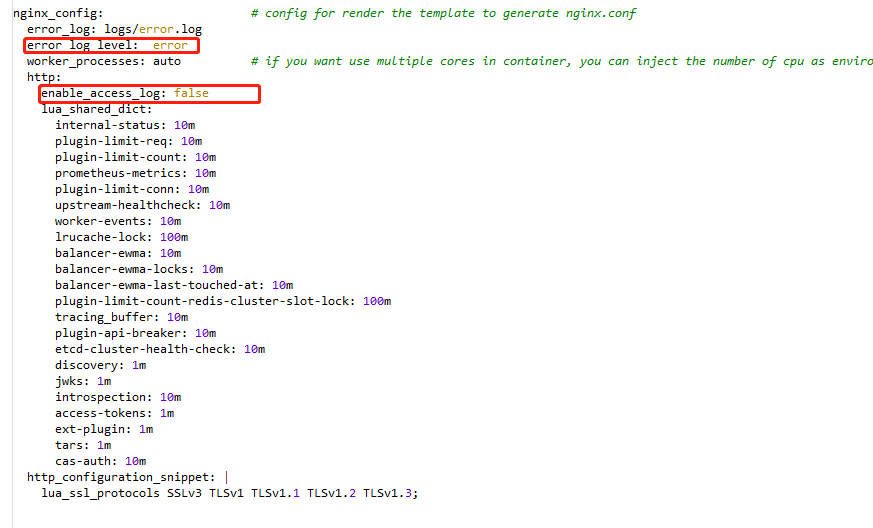

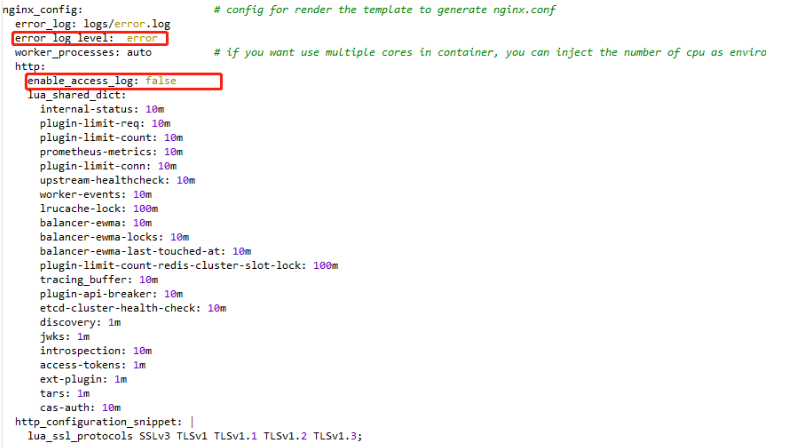

This is my config.yaml:

This is my route config:

```
{
  "uri": "/ces/*",
  "name": "ces11",
  "priority": 1,
  "methods": ["GET", "POST", "PUT", "DELETE", "PATCH", "HEAD", "OPTIONS", "CONNECT", "TRACE"],
  "upstream": {
    "nodes": [
      {
        "host": "10.4.16.12",
        "port": 8180,
        "weight": 1
      }
    ],
    "retries": 4,
    "timeout": {
      "connect": 10,
      "send": 10,
      "read": 10
    },
    "type": "roundrobin",
    "scheme": "http",
    "pass_host": "pass",
    "keepalive_pool": {
      "idle_timeout": 60,
      "requests": 1000,
      "size": 320
    },
    "retry_timeout": 4
  },
  "status": 1
}
```
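A direct run and a proxied run are only comparable if the two wrk2 invocations differ in nothing except the target URL. The minimal Python sketch below derives both URLs from the route above. It assumes the wrk2 binary is installed as `wrk`, which is how the wrk2 README invokes it. The APISIX address `127.0.0.1:9080` and the path `/ces/test.txt` are hypothetical placeholders and do not come from the issue. The route defines no rewrite, so the upstream receives the same path the client requests.

```python
import json
import shlex

# The route from this issue, trimmed to the fields this sketch reads.
ROUTE = json.loads("""
{
  "uri": "/ces/*",
  "upstream": {
    "nodes": [{"host": "10.4.16.12", "port": 8180, "weight": 1}],
    "scheme": "http"
  }
}
""")

# Hypothetical values: neither the APISIX address nor the test path is given in the issue.
APISIX_BASE = "http://127.0.0.1:9080"
TEST_PATH = "/ces/test.txt"


def wrk2_cmd(url, rate, threads=4, connections=500, duration="60s"):
    """Build a wrk2 argv list; wrk2 requires a target rate (-R, requests/sec)."""
    return [
        "wrk",
        f"-t{threads}",
        f"-c{connections}",
        f"-d{duration}",
        f"-R{rate}",
        "--latency",
        url,
    ]


def comparison_cmds(route, rate):
    """Return (direct, proxied) commands that share identical load settings."""
    node = route["upstream"]["nodes"][0]
    scheme = route["upstream"].get("scheme", "http")
    # No rewrite on the route, so the upstream sees the same path as the client.
    direct = f"{scheme}://{node['host']}:{node['port']}{TEST_PATH}"
    proxied = f"{APISIX_BASE}{TEST_PATH}"
    return wrk2_cmd(direct, rate), wrk2_cmd(proxied, rate)


if __name__ == "__main__":
    for cmd in comparison_cmds(ROUTE, rate=80000):
        print(shlex.join(cmd))
```

wrk2 holds the offered load at the `-R` rate. Set `-R` above the throughput you expect. If the server cannot keep up, the achieved rate and the latency percentiles will show it.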

### Environment

- APISIX version (run `apisix version`): 2.15 (`2.15-alpine`)

- Operating system (run `uname -a`):

- OpenResty / Nginx version (run `openresty -V` or `nginx -V`):

- etcd version, if relevant (run `curl http://127.0.0.1:9090/v1/server_info`):

- APISIX Dashboard version, if relevant:

- Plugin runner version, for issues related to plugin runners:

- LuaRocks version, for installation issues (run `luarocks --version`):

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@apisix.apache.org

For queries about this service, please contact Infrastructure at: users@infra.apache.org

[GitHub] [apisix] hansedong commented on issue #9343: QPS too low!!!

Posted by "hansedong (via GitHub)" <gi...@apache.org>.

hansedong commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1516089117

I can only offer some reference points from my own experience.

When I load test an HTTP server written in Go (which only returns 'hello world') directly, I get 89246 QPS. When the traffic is forwarded through APISIX (running on a server with 8 cores and 8 GB RAM), I get 72765 QPS. The load test concurrency was 500. In my results, load testing through APISIX and load testing the target server directly do not differ significantly.

I think the following points are worth checking:

1. The network bandwidth of the APISIX nodes: investigate whether there are any bottlenecks.

2. The kernel parameters of the APISIX Linux node.

Below are the kernel parameters of my APISIX node, for reference only. Do not apply them directly in production, because network environments differ:

```
kernel.msgmnb = 655360
kernel.msgmax = 65536
kernel.msgmni = 16384
kernel.shmmax = 68719476736
kernel.shmall = 4294967296
net.ipv4.ip_forward = 1
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.all.accept_source_route = 0
net.ipv4.conf.default.accept_source_route = 0
net.ipv4.tcp_tw_recycle = 0
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_timestamps = 0
net.ipv4.tcp_syncookies = 1
net.ipv4.conf.lo.arp_ignore = 1
net.ipv4.conf.lo.arp_announce = 2
net.ipv4.conf.all.arp_ignore = 1
net.ipv4.conf.all.arp_announce = 2
net.ipv4.tcp_retrans_collapse = 1
net.ipv4.conf.all.log_martians = 0
net.ipv4.conf.macv-host.log_martians = 0
net.ipv4.conf.default.log_martians = 0
net.ipv4.conf.bond0.log_martians = 0
net.ipv4.ip_nonlocal_bind = 0
fs.inotify.max_queued_events = 16384000
fs.inotify.max_user_instances = 1280000
fs.inotify.max_user_watches = 8192000
net.core.netdev_max_backlog = 200000
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
vm.swappiness = 0
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.file-max = 52706963
fs.nr_open = 52706963
net.netfilter.nf_conntrack_tcp_timeout_established = 432000
net.nf_conntrack_max = 10485760
net.netfilter.nf_conntrack_max = 10485760
net.netfilter.nf_conntrack_buckets = 655360
net.ipv4.neigh.default.gc_thresh1 = 163840
net.ipv4.neigh.default.gc_thresh2 = 327680
net.ipv4.neigh.default.gc_thresh3 = 500000
net.ipv6.neigh.default.gc_thresh1 = 163840
net.ipv6.neigh.default.gc_thresh2 = 327680
net.ipv6.neigh.default.gc_thresh3 = 500000
kernel.pid_max = 1966080
kernel.threads-max = 2062606
vm.max_map_count = 26214400
net.core.somaxconn = 2621440
net.ipv4.tcp_max_syn_backlog = 3276800
net.ipv4.tcp_max_orphans = 2621440
net.ipv6.conf.all.disable_ipv6 = 1
net.core.rmem_default = 212992
net.core.wmem_default = 212992
net.core.rmem_max = 16777216
net.core.wmem_max = 16777216
net.ipv4.tcp_rmem = 4096 87380 16777216
net.ipv4.tcp_wmem = 4096 65536 16777216
net.ipv4.ip_local_port_range = 10000 65000
net.ipv4.tcp_synack_retries = 5
net.ipv4.tcp_syn_retries = 5
net.ipv4.tcp_keepalive_time = 150
net.ipv4.tcp_fin_timeout = 15
net.ipv4.tcp_max_tw_buckets = 1440000
net.ipv4.tcp_window_scaling = 1
net.ipv4.tcp_sack = 1
vm.min_free_kbytes = 262144
vm.panic_on_oom = 0
vm.vfs_cache_pressure = 200
vm.swappiness = 30
net.ipv4.route.max_size = 5242880
net.ipv4.tcp_syn_retries = 6
net.ipv4.tcp_retries1 = 3
net.ipv4.tcp_retries2 = 6
```
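The list above sets some keys more than once. `vm.swappiness` is set to 0 and later to 30, and `net.ipv4.tcp_syn_retries` is set to 5 and later to 6. `sysctl -p` applies lines in file order, so the later value is the one that takes effect. Before copying any of these settings, you could run a small Python sketch like the one below. It flags repeated keys and reads the live value from `/proc/sys`. It maps dotted names to paths, which is an assumption that holds for the keys listed here.

```python
def parse_sysctl(text):
    """Parse sysctl.conf-style 'key = value' lines.

    Returns (settings, duplicates): `settings` maps each key to the last value
    given, and `duplicates` maps every repeated key to all of its values in order.
    """
    seen = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # blank line or comment
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key = value line
        # Collapse internal whitespace so "4096 87380" and "4096\t87380" compare equal.
        seen.setdefault(key.strip(), []).append(" ".join(value.split()))
    settings = {key: values[-1] for key, values in seen.items()}
    duplicates = {key: values for key, values in seen.items() if len(values) > 1}
    return settings, duplicates


def current_value(key):
    """Read the live value from /proc/sys (Linux only); None if the key does not exist.

    Assumes dots separate path components, which breaks for interface names
    that themselves contain dots (e.g. VLAN devices such as eth0.100).
    """
    path = "/proc/sys/" + key.replace(".", "/")
    try:
        with open(path) as f:
            return " ".join(f.read().split())
    except OSError:
        return None


# An excerpt of the list above, including the repeated keys.
SAMPLE = """
vm.swappiness = 0
net.ipv4.tcp_syn_retries = 5
vm.swappiness = 30
net.ipv4.tcp_syn_retries = 6
net.netfilter.nf_conntrack_max=10485760
"""

if __name__ == "__main__":
    settings, duplicates = parse_sysctl(SAMPLE)
    for key, values in duplicates.items():
        print(f"{key}: set {len(values)} times {values}, "
              f"effective {settings[key]}, live {current_value(key)}")
```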

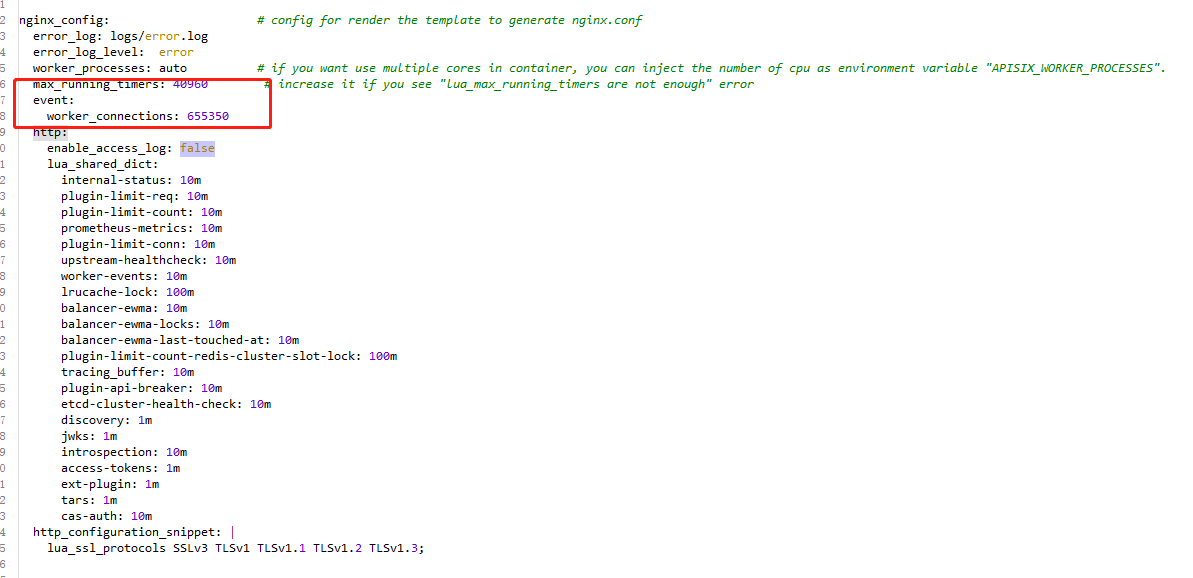

Additionally, you can try increasing `worker_connections` in `config.yaml`:

```
nginx_config:
  max_running_timers: 40960    # increase it if you see "lua_max_running_timers are not enough" error
  event:
    worker_connections: 655350
```
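The nginx documentation for `worker_connections` says the limit covers all connections, including connections to proxied servers. It also says the real number cannot exceed the open-file limit, which nginx's `worker_rlimit_nofile` can raise. The minimal Python sketch below does this back-of-the-envelope check. It assumes roughly two connections per in-flight proxied request, one on the client side and one on the upstream side.

```python
import resource


def proxied_client_capacity(worker_processes, worker_connections):
    """Rough upper bound on concurrent proxied clients.

    Every connection counts against worker_connections, including the
    upstream side, so each proxied request needs about two of them.
    """
    return worker_processes * worker_connections // 2


def nofile_limit_ok(worker_connections):
    """True if this process's soft open-file limit could hold worker_connections.

    Only an approximation: the nginx worker's own limit is what matters,
    and it can be raised separately (e.g. with worker_rlimit_nofile).
    """
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    return soft == resource.RLIM_INFINITY or soft >= worker_connections


if __name__ == "__main__":
    # 4 workers (one per core on a 4-core host) with the value suggested above.
    print(proxied_client_capacity(4, 655350))  # 1310700
    print(nofile_limit_ok(655350))
```

A test with a few hundred connections, such as the 500-connection run mentioned above, uses only a small share of this capacity. Even a much smaller value would leave plenty of headroom, so this setting may not be what caps QPS here.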


[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1649287717

> Can you deploy a static website in the same subnet for testing? The picture seems to have a lot of network delay.

The upstream in my setup is a 1 KB file served from a Linux server on the same LAN.


[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1515674838

> I think you can provide more information, such as what your Nginx configuration is?

Here is my `apisix_conf` configuration. I didn't add anything else. Am I missing something?

```
apisix:
  node_listen: 9080              # APISIX listening port
  enable_ipv6: false
  ssl:
    enable: true
    enable_http2: true
    listen_port: 9443
    ssl_trusted_certificate: /usr/local/apisix/conf/cert/apisix.ca-bundle
  allow_admin:                   # http://nginx.org/en/docs/http/ngx_http_access_module.html#allow
    - 0.0.0.0/0                  # We need to restrict ip access rules for security. 0.0.0.0/0 is for test.
  admin_key:
    - name: "admin"
      key: edd1c9f034335f136f87ad84b625c8f1
      role: admin                # admin: manage all configuration data
                                 # viewer: only can view configuration data
    - name: "viewer"
      key: 4054f7cf07e344346cd3f287985e76a2
      role: viewer
  enable_control: true
  control:
    ip: "0.0.0.0"
    port: 9092

etcd:
  host:                          # it's possible to define multiple etcd hosts addresses of the same etcd cluster.
    - "http://etcd:2379"         # multiple etcd address
  prefix: "/apisix"              # apisix configurations prefix
  timeout: 30                    # 30 seconds

nginx_config:                    # config for render the template to generate nginx.conf
  error_log: logs/error.log
  error_log_level: warn
  worker_processes: auto         # if you want use multiple cores in container, you can inject the number of cpu as environment variable "APISIX_WORKER_PROCESSES".
  http:
    lua_shared_dict:
      internal-status: 10m
      plugin-limit-req: 10m
      plugin-limit-count: 10m
      prometheus-metrics: 10m
      plugin-limit-conn: 10m
      upstream-healthcheck: 10m
      worker-events: 10m
      lrucache-lock: 100m
      balancer-ewma: 10m
      balancer-ewma-locks: 10m
      balancer-ewma-last-touched-at: 10m
      plugin-limit-count-redis-cluster-slot-lock: 100m
      tracing_buffer: 10m
      plugin-api-breaker: 10m
      etcd-cluster-health-check: 10m
      discovery: 1m
      jwks: 1m
      introspection: 10m
      access-tokens: 1m
      ext-plugin: 1m
      tars: 1m
      cas-auth: 10m
  http_configuration_snippet: |
    lua_ssl_protocols SSLv3 TLSv1 TLSv1.1 TLSv1.2 TLSv1.3;

plugins:
  # the plugins you enabled
  - log-rotate
  - jwt-auth
  - ip-restriction
  - jwt-auth
  - kafka-logger
  - key-auth
  - limit-conn
  - public-api
  - limit-count
  - limit-req
  - basic-auth
  - cors
  - proxy-cache
  - proxy-mirror
  - proxy-rewrite
  - redirect
  - referer-restriction
  - request-id
  - request-validation
  - response-rewrite

plugin_attr:
  log-rotate:
    interval: 360000             # rotate interval (unit: second)
    max_kept: 168                # max number of log files will be kept
    enable_compression: true     # enable log file compression(gzip) or not, default false
```


[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1517266884

This is an intranet environment. When I request the upstream directly, I can reach 60,000+ QPS.


[GitHub] [apisix] bin-53 commented on issue #9343: QPS too low!!!

Posted by "bin-53 (via GitHub)" <gi...@apache.org>.

bin-53 commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1515761450

> APISIX node tries to turn off log output and then test again (to eliminate factors such as low disk IO)?

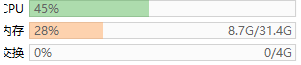

Nothing changed. I found that when proxying through APISIX, the CPU is not fully utilized; it peaks at only 50%.
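A single CPU percentage is usually an average across cores. On a 4-core host, 50% could mean every core is half busy. It could also mean two cores are saturated while two sit idle, for example if interrupt handling or worker placement is uneven. The minimal Python sketch below runs on Linux only. Run it on the APISIX host during a test: it samples `/proc/stat` twice and prints busy time per core, counting iowait as idle.

```python
import os
import time


def parse_cpu_times(text):
    """Return {cpu_name: (busy, total)} jiffies for each per-core 'cpuN' line of /proc/stat."""
    times = {}
    for line in text.splitlines():
        fields = line.split()
        # Keep cpu0, cpu1, ...; skip the aggregate 'cpu' line and non-CPU lines.
        if not fields or fields[0] == "cpu" or not fields[0].startswith("cpu"):
            continue
        values = [int(v) for v in fields[1:]]
        # Columns: user nice system idle iowait irq softirq steal [guest guest_nice].
        # Guest time is already included in user/nice, so only the first 8 are summed.
        total = sum(values[:8])
        idle = values[3] + (values[4] if len(values) > 4 else 0)  # idle + iowait
        times[fields[0]] = (total - idle, total)
    return times


def read_cpu_times(path="/proc/stat"):
    with open(path) as f:
        return parse_cpu_times(f.read())


def utilization(before, after):
    """Per-core busy fraction between two snapshots."""
    result = {}
    for cpu, (busy1, total1) in after.items():
        busy0, total0 = before.get(cpu, (0, 0))
        delta_total = total1 - total0
        result[cpu] = (busy1 - busy0) / delta_total if delta_total else 0.0
    return result


if __name__ == "__main__" and os.path.exists("/proc/stat"):
    first = read_cpu_times()
    time.sleep(1.0)
    second = read_cpu_times()
    usage = utilization(first, second)
    for cpu in sorted(usage, key=lambda name: int(name[3:])):
        print(f"{cpu}: {usage[cpu]:.0%}")
```

`mpstat -P ALL 1` from the sysstat package reports similar per-core figures if it is installed.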


[GitHub] [apisix] panhow commented on issue #9343: QPS too low!!!

Posted by "panhow (via GitHub)" <gi...@apache.org>.

panhow commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1519693397

> I can only provide some reference based on my experience.

>

> When I directly load test an HTTP server written in Go (only outputting 'hello world'), the QPS is 89246, while when forwarded by AIPSIX( the configuration of APISIX server is 8 cores and 8GB RAM. ), the QPS is 72765. The concurrency during load testing was 500. From my results, it seems that there is not a significant difference in the load testing results between using APISIX forwarding and directly load testing the target server.

>

> I think the following points can be considered:

>

> 1. The bandwidth situation of AIPSIX nodes, investigate whether there are any bottlenecks.

> 2. Check the kernel parameters of APISIX's Linux node.


To summarize the above: one core handles about 9,000 requests per second.

In @githubxubin's case, CPU usage peaks at 45% of a 4-core machine while total throughput is 26,000 requests per second. That is about 26000 / (4 × 0.45) ≈ 14,000 requests per second per core, so doesn't this result actually make sense?

Maybe @githubxubin should figure out why APISIX can't drive CPU usage up to 100%.
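The per-core estimate above, written out as a small Python sketch. The 9,000-per-core baseline appears to come from hansedong's 72765 QPS on 8 cores. That figure only holds if all 8 cores were saturated, which his comment does not say, so treat it as an assumption.

```python
def per_core_qps(total_qps, cores, cpu_utilization):
    """Requests per second handled by one fully busy core.

    cpu_utilization is the machine-wide average as a fraction (0.45 means 45%).
    """
    busy_cores = cores * cpu_utilization
    return total_qps / busy_cores


if __name__ == "__main__":
    # githubxubin: 26000 QPS on 4 cores at 45% average CPU.
    via_apisix = per_core_qps(26000, 4, 0.45)
    print(round(via_apisix))                    # 14444
    # hansedong: 72765 QPS on 8 cores, ASSUMING all 8 were saturated (not stated).
    print(round(per_core_qps(72765, 8, 1.0)))   # 9096
    # Naive linear extrapolation to 100% of the 4-core machine.
    print(round(via_apisix * 4))                # 57778
```

The last figure is a naive linear extrapolation, and real systems rarely scale perfectly. Even so, it lands in the same range as the 60,000+ QPS reported for the upstream alone. That is consistent with the view that idle CPU, rather than per-request cost, is what holds throughput back.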


[GitHub] [apisix] kellyseeme commented on issue #9343: QPS too low!!!

Posted by "kellyseeme (via GitHub)" <gi...@apache.org>.

kellyseeme commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1525285939

I see this problem too; the performance is not as advertised, even though I disabled more plugins.


[GitHub] [apisix] kellyseeme commented on issue #9343: QPS too low!!!

Posted by "kellyseeme (via GitHub)" <gi...@apache.org>.

kellyseeme commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1525358644

> > I find this question too...not as advertised and i disable more plugins

>

> Hi~, can't you reach the data of the official website even after the stress test?

No, I can't, even after changing more settings. We tested it on VMs and on Kubernetes.


[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1526848756

If there is any progress, please reply in this thread, haha. Thanks!


[GitHub] [apisix] Sn0rt commented on issue #9343: QPS too low!!!

Posted by "Sn0rt (via GitHub)" <gi...@apache.org>.

Sn0rt commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1654838973

If another user encounters this problem, we will reopen this issue.


[GitHub] [apisix] AlinsRan commented on issue #9343: QPS too low!!!

Posted by "AlinsRan (via GitHub)" <gi...@apache.org>.

AlinsRan commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1517217412

Your latency seems very high. Is this really an intranet environment?

I suggest stress testing the upstream from the APISIX node itself.


[GitHub] [apisix] AlinsRan closed issue #9343: QPS too low!!!

Posted by "AlinsRan (via GitHub)" <gi...@apache.org>.

AlinsRan closed issue #9343: QPS too low!!!

URL: https://github.com/apache/apisix/issues/9343


[GitHub] [apisix] Sn0rt commented on issue #9343: QPS too low!!!

Posted by "Sn0rt (via GitHub)" <gi...@apache.org>.

Sn0rt commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1649311863


> > Can you deploy a static website in the same subnet for testing? The picture seems to have a lot of network delay.

>

> Upstream of my setting is a file placed on the linux server with only 1 kb size within the same LAN

Can you test the TCP performance between the APISIX machine and the upstream machine?
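One way to start on this is a connect-latency check run from the APISIX host against the upstream from the route config (`10.4.16.12:8180`). The minimal Python sketch below measures TCP handshake time only, not bandwidth. iperf3 is the usual tool for measuring bandwidth. The script name in the usage comment is hypothetical.

```python
import math
import socket
import statistics
import sys
import time


def tcp_connect_latency(host, port, samples=20, timeout=2.0):
    """Time `samples` TCP handshakes to host:port; returns latencies in milliseconds.

    Only connection setup is measured (roughly one network round trip plus
    kernel overhead), not bandwidth.
    """
    results = []
    for _ in range(samples):
        start = time.perf_counter()
        # create_connection returns once the handshake completes; close right away.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        results.append((time.perf_counter() - start) * 1000.0)
    return results


def summarize(latencies_ms):
    """min / median / p99 (nearest-rank) / max of a non-empty list."""
    ordered = sorted(latencies_ms)
    p99 = ordered[math.ceil(0.99 * len(ordered)) - 1]
    return {
        "min": ordered[0],
        "median": statistics.median(ordered),
        "p99": p99,
        "max": ordered[-1],
    }


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. on the APISIX host: python3 tcp_latency.py 10.4.16.12 8180
    host, port = sys.argv[1], int(sys.argv[2])
    stats = summarize(tcp_connect_latency(host, port))
    print({name: f"{value:.2f} ms" for name, value in stats.items()})
```

On the same LAN, handshakes usually complete well under a millisecond. Consistently higher numbers would point toward the network path rather than APISIX.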



[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1517187016

No, the QPS is still low. My server configuration should be fine.


[GitHub] [apisix] bin-53 commented on issue #9343: QPS too low!!!

Posted by "bin-53 (via GitHub)" <gi...@apache.org>.

bin-53 commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1515750633

> APISIX node tries to turn off log output and then test again (to eliminate factors such as low disk IO)?

I'll try it


[GitHub] [apisix] Sn0rt commented on issue #9343: QPS too low!!!

Posted by "Sn0rt (via GitHub)" <gi...@apache.org>.

Sn0rt commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1649263697

Can you deploy a static website in the same subnet for testing?

The screenshot seems to show a lot of network latency.


[GitHub] [apisix] hansedong commented on issue #9343: QPS too low!!!

Posted by "hansedong (via GitHub)" <gi...@apache.org>.

hansedong commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1515719764

Could you turn off log output on the APISIX node and test again (to rule out factors such as slow disk I/O)?


[GitHub] [apisix] hansedong commented on issue #9343: QPS too low!!!

Posted by "hansedong (via GitHub)" <gi...@apache.org>.

hansedong commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1515654749

Could you provide more information, such as your Nginx configuration?


[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1519730034

> > I can only provide some reference based on my experience.

> > When I directly load test an HTTP server written in Go (only outputting 'hello world'), the QPS is 89246, while when forwarded by AIPSIX( the configuration of APISIX server is 8 cores and 8GB RAM. ), the QPS is 72765. The concurrency during load testing was 500. From my results, it seems that there is not a significant difference in the load testing results between using APISIX forwarding and directly load testing the target server.

> > I think the following points can be considered:

> >

> > 1. The bandwidth situation of AIPSIX nodes, investigate whether there are any bottlenecks.

> > 2. Check the kernel parameters of APISIX's Linux node.


>

> summary above this: per core process 9000 request per second, in @githubxubin 's case, max cpu usage up to 45% of a 4 core machine, totally qps: 26000 request per second, it's about 26000/(4*0.45) = 14000 request per second per core, so this test make sense?

>

> maybe @githubxubin should figure out why apisix can't run cpu usage up to 100%

First, thank you for your reply. APISIX cannot drive the CPU up to 100%, which is why I raised this issue. I suspect something is wrong with my APISIX configuration; maybe I have missed some key setting. This performance issue may prevent me from using APISIX further.

Thank you again


[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1515770418

(Is that all it takes to turn off the log?) Nothing changed. I found that when proxying through APISIX, the CPU is not fully utilized; it peaks at only 50%.

The highest CPU usage:


[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1516015844

> APISIX node tries to turn off log output and then test again (to eliminate factors such as low disk IO)?

For a gateway, performance is our first concern. Could you help me with this problem? Thanks.


[GitHub] [apisix] githubxubin commented on issue #9343: QPS too low!!!

Posted by "githubxubin (via GitHub)" <gi...@apache.org>.

githubxubin commented on issue #9343:

URL: https://github.com/apache/apisix/issues/9343#issuecomment-1525325328

> I find this question too...not as advertised and i disable more plugins

Hi, so you can't reach the numbers published on the official website in your stress tests either?
