Posted to issues@flink.apache.org by GitBox <gi...@apache.org> on 2020/05/22 19:35:25 UTC

[GitHub] [flink] caozhen1937 opened a new pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

caozhen1937 opened a new pull request #12296:

URL: https://github.com/apache/flink/pull/12296

## What is the purpose of the change

Translate the native Kubernetes documentation to Chinese.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [flink] wangyang0918 commented on a change in pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

Posted by GitBox <gi...@apache.org>.

wangyang0918 commented on a change in pull request #12296:

URL: https://github.com/apache/flink/pull/12296#discussion_r429757191

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -24,43 +24,41 @@ specific language governing permissions and limitations

under the License.

-->

-This page describes how to deploy a Flink session cluster natively on [Kubernetes](https://kubernetes.io).

+本页面描述了如何在 [Kubernetes](https://kubernetes.io) 原生的部署 Flink session 集群。

* This will be replaced by the TOC

{:toc}

<div class="alert alert-warning">

-Flink's native Kubernetes integration is still experimental. There may be changes in the configuration and CLI flags in latter versions.

+Flink 的原生 Kubernetes 集成仍处于试验阶段。在以后的版本中,配置和 CLI flags 可能会发生变化。

</div>

-## Requirements

+## 要求

-- Kubernetes 1.9 or above.

-- KubeConfig, which has access to list, create, delete pods and services, configurable via `~/.kube/config`. You can verify permissions by running `kubectl auth can-i <list|create|edit|delete> pods`.

-- Kubernetes DNS enabled.

-- A service Account with [RBAC](#rbac) permissions to create, delete pods.

+- Kubernetes 版本 1.9 或以上。

+- KubeConfig 可以查看、创建、删除 pods 和 services,可以通过`~/.kube/config` 配置。你可以通过运行 `kubectl auth can-i <list|create|edit|delete> pods` 来验证权限。

+- 启用 Kubernetes DNS。

+- 具有 [RBAC](#rbac) 权限的 Service Account 可以创建、删除 pods。

## Flink Kubernetes Session

-### Start Flink Session

+### 启动 Flink Session

-Follow these instructions to start a Flink Session within your Kubernetes cluster.

+按照以下说明在 Kubernetes 集群中启动 Flink Session。

-A session will start all required Flink services (JobManager and TaskManagers) so that you can submit programs to the cluster.

-Note that you can run multiple programs per session.

+session 将启动所有必需的 Flink 服务(JobManager 和 TaskManagers),以便你可以将程序提交到集群。

Review comment:

session -> Session 集群 (i.e. "Session cluster")
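
The Requirements list in the hunk above names a `kubectl auth can-i` check. A minimal sketch of how that check could be expanded into one command per verb and resource is shown here; `print_permission_checks` is a hypothetical helper (not part of Flink or kubectl), and it only prints the commands as a dry run, on the assumption that the reader wants to review them before running anything against a cluster.

```shell
#!/bin/sh
# Sketch: print one `kubectl auth can-i` check per verb and resource.
# print_permission_checks is a hypothetical helper; it only echoes the
# commands (dry run) and does not contact any cluster.
print_permission_checks() {
  for resource in pods services; do
    for verb in list create delete; do
      echo "kubectl auth can-i $verb $resource"
    done
  done
}

print_permission_checks
```

Piping the output to `sh` (`print_permission_checks | sh`) would run the checks against the cluster selected in `~/.kube/config`.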

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -168,21 +165,21 @@ appender.console.layout.type = PatternLayout

appender.console.layout.pattern = %d{yyyy-MM-dd HH:mm:ss,SSS} %-5p %-60c %x - %m%n

{% endhighlight %}

-If the pod is running, you can use `kubectl exec -it <PodName> bash` to tunnel in and view the logs or debug the process.

+如果 pod 正在运行,可以使用 `kubectl exec -it <PodName> bash` 进入 pod 并查看日志或调试进程。

-## Flink Kubernetes Application

+## Flink Kubernetes 应用程序

-### Start Flink Application

+### 启动 Flink 应用程序

-Application mode allows users to create a single image containing their Job and the Flink runtime, which will automatically create and destroy cluster components as needed. The Flink community provides base docker images [customized](docker.html#customize-flink-image) for any use case.

+应用程序模式允许用户创建单个镜像,其中包含他们的作业和 Flink 运行时,该镜像将按需自动创建和销毁集群组件。Flink 社区为任何用例提供了基础镜像 [customized](docker.html#customize-flink-image)。

Review comment:

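Suggest changing this to: "Flink社区提供了可以构建多用途自定义镜像的基础镜像" (the Flink community provides base images from which custom images for any use case can be built).

Please also change the link text: [customized] -> "多用途自定义镜像"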

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -24,43 +24,41 @@ specific language governing permissions and limitations

under the License.

-->

-This page describes how to deploy a Flink session cluster natively on [Kubernetes](https://kubernetes.io).

+本页面描述了如何在 [Kubernetes](https://kubernetes.io) 原生的部署 Flink session 集群。

* This will be replaced by the TOC

{:toc}

<div class="alert alert-warning">

-Flink's native Kubernetes integration is still experimental. There may be changes in the configuration and CLI flags in latter versions.

+Flink 的原生 Kubernetes 集成仍处于试验阶段。在以后的版本中,配置和 CLI flags 可能会发生变化。

</div>

-## Requirements

+## 要求

-- Kubernetes 1.9 or above.

-- KubeConfig, which has access to list, create, delete pods and services, configurable via `~/.kube/config`. You can verify permissions by running `kubectl auth can-i <list|create|edit|delete> pods`.

-- Kubernetes DNS enabled.

-- A service Account with [RBAC](#rbac) permissions to create, delete pods.

+- Kubernetes 版本 1.9 或以上。

+- KubeConfig 可以查看、创建、删除 pods 和 services,可以通过`~/.kube/config` 配置。你可以通过运行 `kubectl auth can-i <list|create|edit|delete> pods` 来验证权限。

+- 启用 Kubernetes DNS。

+- 具有 [RBAC](#rbac) 权限的 Service Account 可以创建、删除 pods。

## Flink Kubernetes Session

-### Start Flink Session

+### 启动 Flink Session

-Follow these instructions to start a Flink Session within your Kubernetes cluster.

+按照以下说明在 Kubernetes 集群中启动 Flink Session。

-A session will start all required Flink services (JobManager and TaskManagers) so that you can submit programs to the cluster.

-Note that you can run multiple programs per session.

+session 将启动所有必需的 Flink 服务(JobManager 和 TaskManagers),以便你可以将程序提交到集群。

+注意你可以在每个 session 上运行多个程序。

{% highlight bash %}

$ ./bin/kubernetes-session.sh

{% endhighlight %}

-All the Kubernetes configuration options can be found in our [configuration guide]({{ site.baseurl }}/zh/ops/config.html#kubernetes).

+所有 Kubernetes 配置项都可以在我们的[配置指南]({{ site.baseurl }}/zh/ops/config.html#kubernetes)中找到。

-**Example**: Issue the following command to start a session cluster with 4 GB of memory and 2 CPUs with 4 slots per TaskManager:

+**示例**: 执行以下命令启动 session 集群,每个 TaskManager 分配 4 GB 内存、2 CPUs、4 slots:

-In this example we override the `resourcemanager.taskmanager-timeout` setting to make

-the pods with task managers remain for a longer period than the default of 30 seconds.

-Although this setting may cause more cloud cost it has the effect that starting new jobs is in some scenarios

-faster and during development you have more time to inspect the logfiles of your job.

+在此示例中,我们覆盖了 `resourcemanager.taskmanager-timeout` 配置,为了使运行 taskmanager 的 pod 停留时间比默认的 30 秒更长。

+尽管此设置可能造成更多的云成本,但在某些情况下更快地启动新作业,并且在开发过程中,你有更多的时间检查作业的日志文件。

Review comment:

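"云成本" (cloud cost) may not be the right wording here; it is hard to understand. Suggest changing it to “尽管此设置可能在云环境下增加成本” (although this setting may increase cost in a cloud environment).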
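
For scale, the example command quoted elsewhere in this thread passes `-Dresourcemanager.taskmanager-timeout=3600000`, while the text above gives the default as 30 seconds. The arithmetic can be sketched as follows, assuming the option is expressed in milliseconds (which the 3600000 value suggests); `ms_to_minutes` is a hypothetical helper used only for illustration.

```shell
#!/bin/sh
# Sketch: compare the overridden timeout with the default.
# Assumption: resourcemanager.taskmanager-timeout is in milliseconds.

# ms_to_minutes is a hypothetical helper, not part of Flink.
ms_to_minutes() {
  echo "$(( $1 / 1000 / 60 ))"
}

default_ms=30000      # the 30 second default mentioned in the docs
override_ms=3600000   # the value passed in the example command

echo "override: $(ms_to_minutes "$override_ms") minutes"
echo "ratio to default: $(( override_ms / default_ms ))x"
```

Under that assumption, idle TaskManager pods stay around for an hour instead of 30 seconds, which is where the extra cost in a cloud environment would come from.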

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -92,73 +89,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=<CustomImageName>

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=<ClusterId> examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the Job Manager ui or submit job to the existing session, you need to start a local proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/<ServiceName> 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the `NodePort`). `<NodeIP>:<NodePort>` could be used to contact the Job Manager Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`<NodeIP>:<NodePort>` 可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/<ClusterId>` to get EXTERNAL-IP and then construct the load balancer JobManager Web Interface manually `http://<EXTERNAL-IP>:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

+由于云提供商和 Kubernetes 需要一些时间来准备负载均衡器,因此你可以在客户端日志中获得一个 `NodePort` 的 JobManager Web 界面。

Review comment:

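“因此你可以在” -> "因为你可能在" ("so you can" -> "because you may")

The meaning here is that a LoadBalancer address was expected, but a NodePort address was actually returned.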
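
The manual step described in the hunk above (get the EXTERNAL-IP, then build `http://<EXTERNAL-IP>:8081`) could be sketched like this. `build_ui_url` is a hypothetical helper, not part of Flink or kubectl; the `<pending>` check assumes the load balancer address has not been assigned yet, and the IP used is a documentation-range placeholder.

```shell
#!/bin/sh
# build_ui_url is a hypothetical helper, not part of Flink or kubectl.
# It builds the JobManager web UI URL from a LoadBalancer EXTERNAL-IP and
# fails while the address is still empty or shown as "<pending>".
build_ui_url() {
  ip="$1"
  port="${2:-8081}"
  if [ -z "$ip" ] || [ "$ip" = "<pending>" ]; then
    echo "load balancer not ready yet" >&2
    return 1
  fi
  echo "http://${ip}:${port}"
}

build_ui_url "203.0.113.10"   # placeholder address for illustration
```

In practice the address could be read with `kubectl get services/<ClusterId> -o jsonpath='{.status.loadBalancer.ingress[0].ip}'`, assuming the cloud provider reports an IP rather than a hostname.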

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -71,16 +69,15 @@ $ ./bin/kubernetes-session.sh \

-Dresourcemanager.taskmanager-timeout=3600000

{% endhighlight %}

-The system will use the configuration in `conf/flink-conf.yaml`.

-Please follow our [configuration guide]({{ site.baseurl }}/zh/ops/config.html) if you want to change something.

+系统将使用 `conf/flink-conf.yaml` 中的配置。

+如果你更改某些配置,请遵循我们的[配置指南]({{ site.baseurl }}/zh/ops/config.html)。

-If you do not specify a particular name for your session by `kubernetes.cluster-id`, the Flink client will generate a UUID name.

+如果你未通过 `kubernetes.cluster-id` 为 session 指定特定名称,Flink 客户端将会生成一个 UUID 名称。

-### Custom Flink Docker image

+### 自定义 Flink Docker 镜像

-If you want to use a custom Docker image to deploy Flink containers, check [the Flink Docker image documentation](docker.html),

-[its tags](docker.html#image-tags), [how to customize the Flink Docker image](docker.html#customize-flink-image) and [enable plugins](docker.html#using-plugins).

-If you created a custom Docker image you can provide it by setting the [`kubernetes.container.image`](../config.html#kubernetes-container-image) configuration option:

+如果要使用自定义的 Docker 镜像部署 Flink 容器,请查看 [Flink Docker 镜像文档](docker.html)、[镜像 tags](docker.html#image-tags)、[如何自定义 Flink Docker 镜像](docker.html#customize-flink-image)和[启用插件](docker.html#using-plugins)。

+如果创建了自定义的 Docker 镜像,则可以通过设置 [`kubernetes.container.image`](../config.html#kubernetes-container-image) 配置项来提供它:

Review comment:

“来提供它” -> "来指定它" ("to provide it" -> "to specify it")

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -92,73 +90,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=<CustomImageName>

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=<ClusterId> examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the Job Manager ui or submit job to the existing session, you need to start a local proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/<ServiceName> 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the `NodePort`). `<NodeIP>:<NodePort>` could be used to contact the Job Manager Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`<NodeIP>:<NodePort>` 可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/<ClusterId>` to get EXTERNAL-IP and then construct the load balancer JobManager Web Interface manually `http://<EXTERNAL-IP>:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

Review comment:

In Flink, the service is exposed through LoadBalancer by default.

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -24,43 +24,41 @@ specific language governing permissions and limitations

under the License.

-->

-This page describes how to deploy a Flink session cluster natively on [Kubernetes](https://kubernetes.io).

+本页面描述了如何在 [Kubernetes](https://kubernetes.io) 原生的部署 Flink session 集群。

Review comment:

原生的 -> 原生地 (use the adverbial 地, since the word modifies the verb 部署)

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +190,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。默认 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be added to user classpath.

+注意:镜像的 "$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

-### Stop Flink Application

+### 停止 Flink 应用程序

-When an application is stopped, all Flink cluster resources are automatically destroyed.

-As always, Jobs may stop when manually canceled or, in the case of bounded Jobs, complete.

+当应用程序停止时,所有 Flink 集群资源都会自动销毁。

+与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。

{% highlight bash %}

$ ./bin/flink cancel -t kubernetes-application -Dkubernetes.cluster-id=<ClusterID> <JobID>

{% endhighlight %}

-## Kubernetes concepts

+## Kubernetes 概念

-### Namespaces

+### 命名空间

-[Namespaces in Kubernetes](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/) are a way to divide cluster resources between multiple users (via resource quota).

-It is similar to the queue concept in Yarn cluster. Flink on Kubernetes can use namespaces to launch Flink clusters.

-The namespace can be specified using the `-Dkubernetes.namespace=default` argument when starting a Flink cluster.

+[Kubernetes 中的命名空间](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/)是一种在多个用户之间划分集群资源的方法(通过资源配额)。

+它类似于 Yarn 集群中的队列概念。Flink on Kubernetes 可以使用命名空间来启动 Flink 集群。

+启动 Flink 集群时,可以使用 `-Dkubernetes.namespace=default` 参数来指定命名空间。

-[ResourceQuota](https://kubernetes.io/docs/concepts/policy/resource-quotas/) provides constraints that limit aggregate resource consumption per namespace.

-It can limit the quantity of objects that can be created in a namespace by type, as well as the total amount of compute resources that may be consumed by resources in that project.

+[资源配额](https://kubernetes.io/docs/concepts/policy/resource-quotas/)提供了限制每个命名空间的合计资源消耗的约束。

+它可以按类型限制可在命名空间中创建的对象数量,以及该项目中的资源可能消耗的计算资源总量。

-### RBAC

+### 基于角色的访问控制

-Role-based access control ([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)) is a method of regulating access to compute or network resources based on the roles of individual users within an enterprise.

-Users can configure RBAC roles and service accounts used by Flink JobManager to access the Kubernetes API server within the Kubernetes cluster.

+基于角色的访问控制([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/))是一种在企业内部基于单个用户的角色来调节对计算或网络资源的访问的方法。

+用户可以配置 RBAC 角色和服务账户,Flink JobManager 使用这些角色和服务帐户访问 Kubernetes 集群中的 Kubernetes API server。

-Every namespace has a default service account, however, the `default` service account may not have the permission to create or delete pods within the Kubernetes cluster.

-Users may need to update the permission of `default` service account or specify another service account that has the right role bound.

+每个命名空间有默认的服务账户,但是`默认`服务账户可能没有权限在 Kubernetes 集群中创建或删除 pod。

+用户可能需要更新`默认`服务账户的权限或指定另一个绑定了正确角色的服务账户。

{% highlight bash %}

$ kubectl create clusterrolebinding flink-role-binding-default --clusterrole=edit --serviceaccount=default:default

{% endhighlight %}

-If you do not want to use `default` service account, use the following command to create a new `flink` service account and set the role binding.

-Then use the config option `-Dkubernetes.jobmanager.service-account=flink` to make the JobManager pod using the `flink` service account to create and delete TaskManager pods.

+如果你不想使用`默认`服务账户,使用以下命令创建一个新的 `flink` 服务账户并设置角色绑定。

+然后使用配置项 `-Dkubernetes.jobmanager.service-account=flink` 来使 JobManager pod 使用 `flink` 服务账户去创建和删除 TaskManager pod。

{% highlight bash %}

$ kubectl create serviceaccount flink

$ kubectl create clusterrolebinding flink-role-binding-flink --clusterrole=edit --serviceaccount=default:flink

{% endhighlight %}

-Please reference the official Kubernetes documentation on [RBAC Authorization](https://kubernetes.io/docs/reference/access-authn-authz/rbac/) for more information.

+有关更多信息,请参考 Kubernetes 官方文档 [RBAC 授权](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)。

-## Background / Internals

+## 背景/内部构造

-This section briefly explains how Flink and Kubernetes interact.

+本节简要解释了 Flink 和 Kubernetes 如何交互。

<img src="{{ site.baseurl }}/fig/FlinkOnK8s.svg" class="img-responsive">

-When creating a Flink Kubernetes session cluster, the Flink client will first connect to the Kubernetes ApiServer to submit the cluster description, including ConfigMap spec, Job Manager Service spec, Job Manager Deployment spec and Owner Reference.

-Kubernetes will then create the Flink master deployment, during which time the Kubelet will pull the image, prepare and mount the volume, and then execute the start command.

-After the master pod has launched, the Dispatcher and KubernetesResourceManager are available and the cluster is ready to accept one or more jobs.

+创建 Flink Kubernetes session 集群时,Flink 客户端首先将连接到 Kubernetes ApiServer 提交集群描述信息,包括 ConfigMap 描述信息、Job Manager Service 描述信息、Job Manager Deployment 描述信息和 Owner Reference。

+Kubernetes 将创建 Flink master 的 deployment,在此期间 Kubelet 将拉取镜像,准备并挂载卷,然后执行 start 命令。

+master pod 启动后,Dispatcher 和 KubernetesResourceManager 都可用并且集群准备好接受作业。

Review comment:

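“Dispatcher 和 KubernetesResourceManager 都可用并且集群准备好接受作业” -> "Dispatcher 和 KubernetesResourceManager服务会相继启动,然后集群就准备完成,并等待提交作业" (i.e. the Dispatcher and KubernetesResourceManager services start one after another; the cluster is then ready and waits for jobs to be submitted)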

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +190,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。默认 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be added to user classpath.

+注意:镜像的 "$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

-### Stop Flink Application

+### 停止 Flink 应用程序

-When an application is stopped, all Flink cluster resources are automatically destroyed.

-As always, Jobs may stop when manually canceled or, in the case of bounded Jobs, complete.

+当应用程序停止时,所有 Flink 集群资源都会自动销毁。

+与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。

Review comment:

“与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。” -> "与往常一样,作业可能会在手动取消或执行完的情况下停止。" (i.e. as always, a job may stop when it is manually canceled or has finished executing)

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -168,21 +165,21 @@ appender.console.layout.type = PatternLayout

appender.console.layout.pattern = %d{yyyy-MM-dd HH:mm:ss,SSS} %-5p %-60c %x - %m%n

{% endhighlight %}

-If the pod is running, you can use `kubectl exec -it <PodName> bash` to tunnel in and view the logs or debug the process.

+如果 pod 正在运行,可以使用 `kubectl exec -it <PodName> bash` 进入 pod 并查看日志或调试进程。

-## Flink Kubernetes Application

+## Flink Kubernetes 应用程序

Review comment:

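I would not recommend translating "Application". This is a new deployment mode introduced by FLIP-85, and it may gradually replace per-job mode. Application mode is the counterpart of Session mode, so I suggest keeping the word untranslated.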

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -92,73 +89,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=<CustomImageName>

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=<ClusterId> examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the Job Manager ui or submit job to the existing session, you need to start a local proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/<ServiceName> 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the `NodePort`). `<NodeIP>:<NodePort>` could be used to contact the Job Manager Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`<NodeIP>:<NodePort>` 可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/<ClusterId>` to get EXTERNAL-IP and then construct the load balancer JobManager Web Interface manually `http://<EXTERNAL-IP>:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

+由于云提供商和 Kubernetes 需要一些时间来准备负载均衡器,因此你可以在客户端日志中获得一个 `NodePort` 的 JobManager Web 界面。

+你可以使用 `kubectl get services/<ClusterId>` 获取 EXTERNAL-IP 然后手动构建负载均衡器 JobManager Web 界面 `http://<EXTERNAL-IP>:8081`。

-- `ExternalName`: Map a service to a DNS name, not supported in current version.

+- `ExternalName`:将服务映射到 DNS 名称,当前版本不支持。

-Please reference the official documentation on [publishing services in Kubernetes](https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types) for more information.

+有关更多信息,请参考官方文档[在 Kubernetes 上发布服务](https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types)。

-### Attach to an existing Session

+### 连接现有 Session

-The Kubernetes session is started in detached mode by default, meaning the Flink client will exit after submitting all the resources to the Kubernetes cluster. Use the following command to attach to an existing session.

+默认情况下,Kubernetes session 以分离模式启动,这意味着 Flink 客户端在将所有资源提交到 Kubernetes 集群后会退出。使用以下命令来连接现有 session。

Review comment:

"以分离模式启动" -> "以后台模式启动"

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +190,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。默认 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be added to user classpath.

+注意:镜像的 "$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

-### Stop Flink Application

+### 停止 Flink 应用程序

-When an application is stopped, all Flink cluster resources are automatically destroyed.

-As always, Jobs may stop when manually canceled or, in the case of bounded Jobs, complete.

+当应用程序停止时,所有 Flink 集群资源都会自动销毁。

+与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。

{% highlight bash %}

$ ./bin/flink cancel -t kubernetes-application -Dkubernetes.cluster-id=<ClusterID> <JobID>

{% endhighlight %}

-## Kubernetes concepts

+## Kubernetes 概念

-### Namespaces

+### 命名空间

-[Namespaces in Kubernetes](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/) are a way to divide cluster resources between multiple users (via resource quota).

-It is similar to the queue concept in Yarn cluster. Flink on Kubernetes can use namespaces to launch Flink clusters.

-The namespace can be specified using the `-Dkubernetes.namespace=default` argument when starting a Flink cluster.

+[Kubernetes 中的命名空间](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/)是一种在多个用户之间划分集群资源的方法(通过资源配额)。

+它类似于 Yarn 集群中的队列概念。Flink on Kubernetes 可以使用命名空间来启动 Flink 集群。

+启动 Flink 集群时,可以使用 `-Dkubernetes.namespace=default` 参数来指定命名空间。

-[ResourceQuota](https://kubernetes.io/docs/concepts/policy/resource-quotas/) provides constraints that limit aggregate resource consumption per namespace.

-It can limit the quantity of objects that can be created in a namespace by type, as well as the total amount of compute resources that may be consumed by resources in that project.

+[资源配额](https://kubernetes.io/docs/concepts/policy/resource-quotas/)提供了限制每个命名空间的合计资源消耗的约束。

+它可以按类型限制可在命名空间中创建的对象数量,以及该项目中的资源可能消耗的计算资源总量。

-### RBAC

+### 基于角色的访问控制

-Role-based access control ([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)) is a method of regulating access to compute or network resources based on the roles of individual users within an enterprise.

-Users can configure RBAC roles and service accounts used by Flink JobManager to access the Kubernetes API server within the Kubernetes cluster.

+基于角色的访问控制([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/))是一种在企业内部基于单个用户的角色来调节对计算或网络资源的访问的方法。

+用户可以配置 RBAC 角色和服务账户,Flink JobManager 使用这些角色和服务帐户访问 Kubernetes 集群中的 Kubernetes API server。

-Every namespace has a default service account, however, the `default` service account may not have the permission to create or delete pods within the Kubernetes cluster.

-Users may need to update the permission of `default` service account or specify another service account that has the right role bound.

+每个命名空间有默认的服务账户,但是`默认`服务账户可能没有权限在 Kubernetes 集群中创建或删除 pod。

+用户可能需要更新`默认`服务账户的权限或指定另一个绑定了正确角色的服务账户。

{% highlight bash %}

$ kubectl create clusterrolebinding flink-role-binding-default --clusterrole=edit --serviceaccount=default:default

{% endhighlight %}

-If you do not want to use `default` service account, use the following command to create a new `flink` service account and set the role binding.

-Then use the config option `-Dkubernetes.jobmanager.service-account=flink` to make the JobManager pod using the `flink` service account to create and delete TaskManager pods.

+如果你不想使用`默认`服务账户,使用以下命令创建一个新的 `flink` 服务账户并设置角色绑定。

+然后使用配置项 `-Dkubernetes.jobmanager.service-account=flink` 来使 JobManager pod 使用 `flink` 服务账户去创建和删除 TaskManager pod。

{% highlight bash %}

$ kubectl create serviceaccount flink

$ kubectl create clusterrolebinding flink-role-binding-flink --clusterrole=edit --serviceaccount=default:flink

{% endhighlight %}

-Please reference the official Kubernetes documentation on [RBAC Authorization](https://kubernetes.io/docs/reference/access-authn-authz/rbac/) for more information.

+有关更多信息,请参考 Kubernetes 官方文档 [RBAC 授权](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)。

-## Background / Internals

+## 背景/内部构造

-This section briefly explains how Flink and Kubernetes interact.

+本节简要解释了 Flink 和 Kubernetes 如何交互。

<img src="{{ site.baseurl }}/fig/FlinkOnK8s.svg" class="img-responsive">

-When creating a Flink Kubernetes session cluster, the Flink client will first connect to the Kubernetes ApiServer to submit the cluster description, including ConfigMap spec, Job Manager Service spec, Job Manager Deployment spec and Owner Reference.

-Kubernetes will then create the Flink master deployment, during which time the Kubelet will pull the image, prepare and mount the volume, and then execute the start command.

-After the master pod has launched, the Dispatcher and KubernetesResourceManager are available and the cluster is ready to accept one or more jobs.

+创建 Flink Kubernetes session 集群时,Flink 客户端首先将连接到 Kubernetes ApiServer 提交集群描述信息,包括 ConfigMap 描述信息、Job Manager Service 描述信息、Job Manager Deployment 描述信息和 Owner Reference。

+Kubernetes 将创建 Flink master 的 deployment,在此期间 Kubelet 将拉取镜像,准备并挂载卷,然后执行 start 命令。

+master pod 启动后,Dispatcher 和 KubernetesResourceManager 都可用并且集群准备好接受作业。

-When users submit jobs through the Flink client, the job graph will be generated by the client and uploaded along with users jars to the Dispatcher.

-A JobMaster for that Job will be then be spawned.

+当用户通过 Flink 客户端提交作业时,将通过客户端生成 jobGraph 并将其与用户 jar 一起上传到 Dispatcher。

+然后将生成该作业的 JobMaster。

Review comment:

"然后将生成该作业的 JobMaster" -> "然后 Dispatcher 会为每个 job 启动一个单独的 JobMaster。"


[GitHub] [flink] klion26 commented on a change in pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

Posted by GitBox <gi...@apache.org>.

klion26 commented on a change in pull request #12296:

URL: https://github.com/apache/flink/pull/12296#discussion_r429517344

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -24,43 +24,41 @@ specific language governing permissions and limitations

under the License.

-->

-This page describes how to deploy a Flink session cluster natively on [Kubernetes](https://kubernetes.io).

+本页面描述了如何在 [Kubernetes](https://kubernetes.io) 原生的部署 Flink session 集群。

* This will be replaced by the TOC

{:toc}

<div class="alert alert-warning">

-Flink's native Kubernetes integration is still experimental. There may be changes in the configuration and CLI flags in latter versions.

+Flink 的原生 Kubernetes 集成仍处于试验阶段。在以后的版本中,配置和 CLI flags 可能会发生变化。

</div>

-## Requirements

+## 要求

-- Kubernetes 1.9 or above.

-- KubeConfig, which has access to list, create, delete pods and services, configurable via `~/.kube/config`. You can verify permissions by running `kubectl auth can-i <list|create|edit|delete> pods`.

-- Kubernetes DNS enabled.

-- A service Account with [RBAC](#rbac) permissions to create, delete pods.

+- Kubernetes 版本 1.9 或以上。

+- KubeConfig 可以访问列表、删除 pods 和 services,可以通过`~/.kube/config` 配置。你可以通过运行 `kubectl auth can-i <list|create|edit|delete> pods` 来验证权限。

Review comment:

```suggestion

- KubeConfig 可以访问列表、删除 pods 和 services,可以通过 `~/.kube/config` 配置。你可以通过运行 `kubectl auth can-i <list|create|edit|delete> pods` 来验证权限。

```

I think this means that the KubeConfig is able to `list`, `create`, and `delete` pods, right?

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -168,13 +166,13 @@ appender.console.layout.type = PatternLayout

appender.console.layout.pattern = %d{yyyy-MM-dd HH:mm:ss,SSS} %-5p %-60c %x - %m%n

{% endhighlight %}

-If the pod is running, you can use `kubectl exec -it <PodName> bash` to tunnel in and view the logs or debug the process.

+如果 pod 正在运行,可以使用 `kubectl exec -it <PodName> bash` 进入 pod 并查看日志或调试进程。

-## Flink Kubernetes Application

+## Flink Kubernetes 应用程序

-### Start Flink Application

+### 启动 Flink 应用程序

-Application mode allows users to create a single image containing their Job and the Flink runtime, which will automatically create and destroy cluster components as needed. The Flink community provides base docker images [customized](docker.html#customize-flink-image) for any use case.

+应用程序模式允许用户创建单个镜像,其中包含他们的作业和 Flink 运行时,该镜像将根据所需自动创建和销毁集群组件。Flink 社区为任何用例提供了基础镜像 [customized](docker.html#customize-flink-image)。

Review comment:

Would `该镜像将根据所需` -> `该镜像将按需` read better?

Does `docker images customized for any use case` here mean base images that suit any scenario?

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +191,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。假设 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be added to user classpath.

+注意:镜像的"$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

-### Stop Flink Application

+### 停止 Flink 应用程序

-When an application is stopped, all Flink cluster resources are automatically destroyed.

-As always, Jobs may stop when manually canceled or, in the case of bounded Jobs, complete.

+当应用程序停止时,所有 Flink 集群资源都会自动销毁。

+与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。

{% highlight bash %}

$ ./bin/flink cancel -t kubernetes-application -Dkubernetes.cluster-id=<ClusterID> <JobID>

{% endhighlight %}

-## Kubernetes concepts

+## Kubernetes 概念

-### Namespaces

+### 命名空间

-[Namespaces in Kubernetes](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/) are a way to divide cluster resources between multiple users (via resource quota).

-It is similar to the queue concept in Yarn cluster. Flink on Kubernetes can use namespaces to launch Flink clusters.

-The namespace can be specified using the `-Dkubernetes.namespace=default` argument when starting a Flink cluster.

+[Kubernetes 中的命名空间](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/)是一种在多个用户之间划分集群资源的方法(通过资源配额)。

+它类似于 Yarn 集群中的队列概念。Flink on Kubernetes 可以使用命名空间来启动 Flink 集群。

+启动 Flink 集群时,可以使用`-Dkubernetes.namespace=default` 参数来指定命名空间。

-[ResourceQuota](https://kubernetes.io/docs/concepts/policy/resource-quotas/) provides constraints that limit aggregate resource consumption per namespace.

-It can limit the quantity of objects that can be created in a namespace by type, as well as the total amount of compute resources that may be consumed by resources in that project.

+[资源配额](https://kubernetes.io/docs/concepts/policy/resource-quotas/)提供了限制每个命名空间的合计资源消耗的约束。

+它可以按类型限制可在命名空间中创建的对象数量,以及该项目中的资源可能消耗的计算资源总量。

-### RBAC

+### 基于角色的访问控制

-Role-based access control ([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)) is a method of regulating access to compute or network resources based on the roles of individual users within an enterprise.

-Users can configure RBAC roles and service accounts used by Flink JobManager to access the Kubernetes API server within the Kubernetes cluster.

+基于角色的访问控制([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/))是一种在企业内部基于单个用户的角色来调节对计算或网络资源的访问的方法。

+用户可以配置 RBAC 角色和服务账户,Flink JobManager 使用这些角色和服务帐户访问 Kubernetes 集群中的 Kubernetes API server。

-Every namespace has a default service account, however, the `default` service account may not have the permission to create or delete pods within the Kubernetes cluster.

-Users may need to update the permission of `default` service account or specify another service account that has the right role bound.

+每个命名空间有默认的服务账户,但是`默认`服务账户可能没有权限在 Kubernetes 集群中创建或删除 pod。

+用户可能需要更新`默认`服务账户的权限或指定另一个绑定了正确角色的服务账户。

{% highlight bash %}

$ kubectl create clusterrolebinding flink-role-binding-default --clusterrole=edit --serviceaccount=default:default

{% endhighlight %}

-If you do not want to use `default` service account, use the following command to create a new `flink` service account and set the role binding.

-Then use the config option `-Dkubernetes.jobmanager.service-account=flink` to make the JobManager pod using the `flink` service account to create and delete TaskManager pods.

+如果你不想使用`默认`服务账户,使用以下命令创建一个新的 `flink` 服务账户并设置角色绑定。

+然后使用配置项`-Dkubernetes.jobmanager.service-account=flink` 来使 JobManager pod 使用 `flink` 服务账户去创建和删除 TaskManager pod。

{% highlight bash %}

$ kubectl create serviceaccount flink

$ kubectl create clusterrolebinding flink-role-binding-flink --clusterrole=edit --serviceaccount=default:flink

{% endhighlight %}

-Please reference the official Kubernetes documentation on [RBAC Authorization](https://kubernetes.io/docs/reference/access-authn-authz/rbac/) for more information.

+有关更多信息,请参考 Kubernetes 官方文档 [RBAC 授权](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)。

-## Background / Internals

+## 背景/内部构造

-This section briefly explains how Flink and Kubernetes interact.

+本节简要解释了 Flink 和 Kubernetes 如何交互。

<img src="{{ site.baseurl }}/fig/FlinkOnK8s.svg" class="img-responsive">

-When creating a Flink Kubernetes session cluster, the Flink client will first connect to the Kubernetes ApiServer to submit the cluster description, including ConfigMap spec, Job Manager Service spec, Job Manager Deployment spec and Owner Reference.

-Kubernetes will then create the Flink master deployment, during which time the Kubelet will pull the image, prepare and mount the volume, and then execute the start command.

-After the master pod has launched, the Dispatcher and KubernetesResourceManager are available and the cluster is ready to accept one or more jobs.

+创建 Flink Kubernetes session 集群时,Flink 客户端首先将连接到 Kubernetes ApiServer 提交集群描述信息,包括 ConfigMap 描述信息、Job Manager Service 描述信息、Job Manager Deployment 描述信息和 Owner Reference。

+Kubernetes 将创建 Flink master 的 deployment,在此期间 Kubelet 将拉取镜像,准备并挂载卷,然后执行 start 命令。

+master pod 启动后,Dispatcher 和 KubernetesResourceManager 都可用并且集群准备好接受一个或更多作业。

-When users submit jobs through the Flink client, the job graph will be generated by the client and uploaded along with users jars to the Dispatcher.

-A JobMaster for that Job will be then be spawned.

+当用户通过 Flink 客户端提交作业时,将通过客户端生成 jobGraph 并将其与用户 jar 一起上传到 Dispatcher。

+然后将生成该作业的 JobMaster。

-The JobMaster requests resources, known as slots, from the KubernetesResourceManager.

-If no slots are available, the resource manager will bring up TaskManager pods and registering them with the cluster.

+JobMaster 向 KubernetesResourceManager 请求被称为 slots 的资源。

Review comment:

Would `请求被称为 slots 的资源` -> `请求 slots 资源` work here?

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -24,43 +24,41 @@ specific language governing permissions and limitations

under the License.

-->

-This page describes how to deploy a Flink session cluster natively on [Kubernetes](https://kubernetes.io).

+本页面描述了如何在 [Kubernetes](https://kubernetes.io) 原生的部署 Flink session 集群。

* This will be replaced by the TOC

{:toc}

<div class="alert alert-warning">

-Flink's native Kubernetes integration is still experimental. There may be changes in the configuration and CLI flags in latter versions.

+Flink 的原生 Kubernetes 集成仍处于试验阶段。在以后的版本中,配置和 CLI flags 可能会发生变化。

</div>

-## Requirements

+## 要求

-- Kubernetes 1.9 or above.

-- KubeConfig, which has access to list, create, delete pods and services, configurable via `~/.kube/config`. You can verify permissions by running `kubectl auth can-i <list|create|edit|delete> pods`.

-- Kubernetes DNS enabled.

-- A service Account with [RBAC](#rbac) permissions to create, delete pods.

+- Kubernetes 版本 1.9 或以上。

+- KubeConfig 可以访问列表、删除 pods 和 services,可以通过`~/.kube/config` 配置。你可以通过运行 `kubectl auth can-i <list|create|edit|delete> pods` 来验证权限。

+- 启用 Kubernetes DNS。

+- 具有 [RBAC](#rbac) 权限的 Service Account 可以创建、删除 pods。

## Flink Kubernetes Session

-### Start Flink Session

+### 启动 Flink Session

-Follow these instructions to start a Flink Session within your Kubernetes cluster.

+按照以下说明在 Kubernetes 集群中启动 Flink Session。

-A session will start all required Flink services (JobManager and TaskManagers) so that you can submit programs to the cluster.

-Note that you can run multiple programs per session.

+session 将启动所有必需的 Flink 服务(JobManager 和 TaskManagers),以便你可以将程序提交到集群。

+注意你可以在每个 session 上运行多个程序。

{% highlight bash %}

$ ./bin/kubernetes-session.sh

{% endhighlight %}

-All the Kubernetes configuration options can be found in our [configuration guide]({{ site.baseurl }}/zh/ops/config.html#kubernetes).

+所有 Kubernetes 配置项都可以在我们的[配置指南]({{ site.baseurl }}/zh/ops/config.html#kubernetes)中找到。

-**Example**: Issue the following command to start a session cluster with 4 GB of memory and 2 CPUs with 4 slots per TaskManager:

+**示例**: 发布以下命令启动 session 集群,每个 TaskManager 分配 4 GB 内存、2 CPUs、4 slots:

Review comment:

"发布以下命令" -> "执行以下命令"?

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -24,43 +24,41 @@ specific language governing permissions and limitations

under the License.

-->

-This page describes how to deploy a Flink session cluster natively on [Kubernetes](https://kubernetes.io).

+本页面描述了如何在 [Kubernetes](https://kubernetes.io) 原生的部署 Flink session 集群。

* This will be replaced by the TOC

{:toc}

<div class="alert alert-warning">

-Flink's native Kubernetes integration is still experimental. There may be changes in the configuration and CLI flags in latter versions.

+Flink 的原生 Kubernetes 集成仍处于试验阶段。在以后的版本中,配置和 CLI flags 可能会发生变化。

</div>

-## Requirements

+## 要求

-- Kubernetes 1.9 or above.

-- KubeConfig, which has access to list, create, delete pods and services, configurable via `~/.kube/config`. You can verify permissions by running `kubectl auth can-i <list|create|edit|delete> pods`.

-- Kubernetes DNS enabled.

-- A service Account with [RBAC](#rbac) permissions to create, delete pods.

+- Kubernetes 版本 1.9 或以上。

+- KubeConfig 可以访问列表、删除 pods 和 services,可以通过`~/.kube/config` 配置。你可以通过运行 `kubectl auth can-i <list|create|edit|delete> pods` 来验证权限。

+- 启用 Kubernetes DNS。

+- 具有 [RBAC](#rbac) 权限的 Service Account 可以创建、删除 pods。

## Flink Kubernetes Session

-### Start Flink Session

+### 启动 Flink Session

-Follow these instructions to start a Flink Session within your Kubernetes cluster.

+按照以下说明在 Kubernetes 集群中启动 Flink Session。

-A session will start all required Flink services (JobManager and TaskManagers) so that you can submit programs to the cluster.

-Note that you can run multiple programs per session.

+session 将启动所有必需的 Flink 服务(JobManager 和 TaskManagers),以便你可以将程序提交到集群。

+注意你可以在每个 session 上运行多个程序。

{% highlight bash %}

$ ./bin/kubernetes-session.sh

{% endhighlight %}

-All the Kubernetes configuration options can be found in our [configuration guide]({{ site.baseurl }}/zh/ops/config.html#kubernetes).

+所有 Kubernetes 配置项都可以在我们的[配置指南]({{ site.baseurl }}/zh/ops/config.html#kubernetes)中找到。

-**Example**: Issue the following command to start a session cluster with 4 GB of memory and 2 CPUs with 4 slots per TaskManager:

+**示例**: 发布以下命令启动 session 集群,每个 TaskManager 分配 4 GB 内存、2 CPUs、4 slots:

-In this example we override the `resourcemanager.taskmanager-timeout` setting to make

-the pods with task managers remain for a longer period than the default of 30 seconds.

-Although this setting may cause more cloud cost it has the effect that starting new jobs is in some scenarios

-faster and during development you have more time to inspect the logfiles of your job.

+在此示例中,我们覆盖了 `resourcemanager.taskmanager-timeout` 配置,为了使运行 taskmanager 的 pod 停留时间比默认的 30 秒更长。

+尽管此设置可能造成更多的云成本,但它有这样的效果,在某些情况下更快地启动新作业,并且在开发过程中,你有更多的时间检查作业的日志文件。

Review comment:

Would "但它有这样的效果,在某些情况下更快地启动新作业" -> "但在某些情况下能更快地启动新作业" read better?

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -92,73 +90,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=<CustomImageName>

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=<ClusterId> examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the Job Manager ui or submit job to the existing session, you need to start a local proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/<ServiceName> 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the `NodePort`). `<NodeIP>:<NodePort>` could be used to contact the Job Manager Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`<NodeIP>:<NodePort>` 可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/<ClusterId>` to get EXTERNAL-IP and then construct the load balancer JobManager Web Interface manually `http://<EXTERNAL-IP>:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

Review comment:

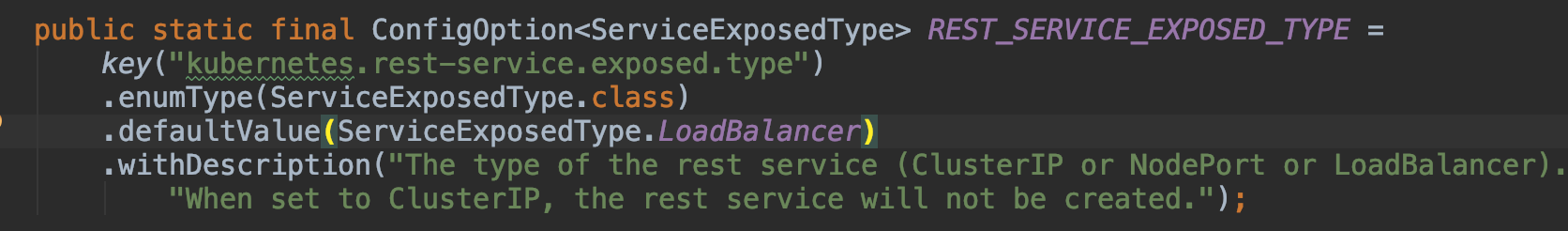

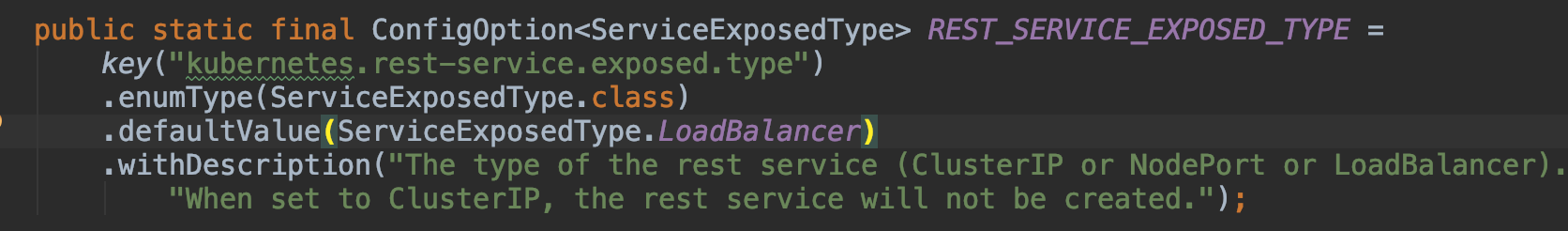

One question: does "Default value" here mean that `LoadBalancer` is the default value of the `kubernetes.service.exposed.type` configuration option?

According to the [official documentation](https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types), the default for `ServiceTypes` is `ClusterIP`.

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -92,73 +90,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=<CustomImageName>

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=<ClusterId> examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the Job Manager ui or submit job to the existing session, you need to start a local proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/<ServiceName> 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the `NodePort`). `<NodeIP>:<NodePort>` could be used to contact the Job Manager Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`<NodeIP>:<NodePort>` 可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/<ClusterId>` to get EXTERNAL-IP and then construct the load balancer JobManager Web Interface manually `http://<EXTERNAL-IP>:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

+由于云提供商和 Kubernetes 需要一些时间来准备负载均衡器,因此你可以在客户端日志中获得一个 `NodePort` 的 JobManager Web 界面。

+你可以使用 `kubectl get services/<ClusterId>`获取 EXTERNAL-IP 然后手动构建负载均衡器 JobManager Web 界面 `http://<EXTERNAL-IP>:8081`。

Review comment:

```suggestion

你可以使用 `kubectl get services/<ClusterId>` 获取 EXTERNAL-IP 然后手动构建负载均衡器 JobManager Web 界面 `http://<EXTERNAL-IP>:8081`。

```

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -71,16 +69,16 @@ $ ./bin/kubernetes-session.sh \

-Dresourcemanager.taskmanager-timeout=3600000

{% endhighlight %}

-The system will use the configuration in `conf/flink-conf.yaml`.

-Please follow our [configuration guide]({{ site.baseurl }}/zh/ops/config.html) if you want to change something.

+系统将使用 `conf/flink-conf.yaml` 中的配置。

+如果你更改某些配置,请遵循我们的[配置指南]({{ site.baseurl }}/zh/ops/config.html)。

-If you do not specify a particular name for your session by `kubernetes.cluster-id`, the Flink client will generate a UUID name.

+如果你未通过 `kubernetes.cluster-id` 为 session 指定特定名称,Flink 客户端将会生成一个 UUID 名称。

-### Custom Flink Docker image

+### 自定义 Flink Docker 镜像

-If you want to use a custom Docker image to deploy Flink containers, check [the Flink Docker image documentation](docker.html),

-[its tags](docker.html#image-tags), [how to customize the Flink Docker image](docker.html#customize-flink-image) and [enable plugins](docker.html#using-plugins).

-If you created a custom Docker image you can provide it by setting the [`kubernetes.container.image`](../config.html#kubernetes-container-image) configuration option:

+如果要使用自定义的 Docker 镜像部署 Flink 容器,请查看 [Flink Docker 镜像文档](docker.html)、

+[镜像 tags ](docker.html#image-tags)、[如何自定义 Flink Docker 镜像](docker.html#customize-flink-image)和[启用插件](docker.html#using-plugins)。

Review comment:

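This line should be joined with the previous one; otherwise a space will appear between "、" and "镜像". Also, no space is needed between English text and punctuation.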

```suggestion

[镜像 tags](docker.html#image-tags)、[如何自定义 Flink Docker 镜像](docker.html#customize-flink-image)和[启用插件](docker.html#using-plugins)。

```

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -92,73 +90,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=<CustomImageName>

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=<ClusterId> examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the Job Manager ui or submit job to the existing session, you need to start a local proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/<ServiceName> 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the `NodePort`). `<NodeIP>:<NodePort>` could be used to contact the Job Manager Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`<NodeIP>:<NodePort>` 可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/<ClusterId>` to get EXTERNAL-IP and then construct the load balancer JobManager Web Interface manually `http://<EXTERNAL-IP>:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

+由于云提供商和 Kubernetes 需要一些时间来准备负载均衡器,因此你可以在客户端日志中获得一个 `NodePort` 的 JobManager Web 界面。

+你可以使用 `kubectl get services/<ClusterId>`获取 EXTERNAL-IP 然后手动构建负载均衡器 JobManager Web 界面 `http://<EXTERNAL-IP>:8081`。

-- `ExternalName`: Map a service to a DNS name, not supported in current version.

+- `ExternalName`:将服务映射到 DNS 名称,当前版本不支持。

-Please reference the official documentation on [publishing services in Kubernetes](https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types) for more information.

+有关更多信息,请参考官方文档[在 Kubernetes 上发布服务](https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types)。

-### Attach to an existing Session

+### 连接现有 Session

-The Kubernetes session is started in detached mode by default, meaning the Flink client will exit after submitting all the resources to the Kubernetes cluster. Use the following command to attach to an existing session.

+默认情况下,Kubernetes session 以分离模式启动,这意味着 Flink 客户端在将所有资源提交到 Kubernetes 集群后会退出。使用以下命令来连接现有 session。

{% highlight bash %}

$ ./bin/kubernetes-session.sh -Dkubernetes.cluster-id=<ClusterId> -Dexecution.attached=true

{% endhighlight %}

-### Stop Flink Session

+### 停止 Flink Session

-To stop a Flink Kubernetes session, attach the Flink client to the cluster and type `stop`.

+要停止 Flink Kubernetes session,将 Flink 客户端连接到集群并键入 `stop`。

{% highlight bash %}

$ echo 'stop' | ./bin/kubernetes-session.sh -Dkubernetes.cluster-id=<ClusterId> -Dexecution.attached=true

{% endhighlight %}

-#### Manual Resource Cleanup

+#### 手动清理资源

-Flink uses [Kubernetes OwnerReference's](https://kubernetes.io/docs/concepts/workloads/controllers/garbage-collection/) to cleanup all cluster components.

-All the Flink created resources, including `ConfigMap`, `Service`, `Pod`, have been set the OwnerReference to `deployment/<ClusterId>`.

-When the deployment is deleted, all other resources will be deleted automatically.

+Flink 用 [Kubernetes OwnerReference's](https://kubernetes.io/docs/concepts/workloads/controllers/garbage-collection/) 来清理所有集群组件。

+所有 Flink 创建的资源,包括 `ConfigMap`、`Service`、`Pod`,已经将 OwnerReference 设置为 `deployment/<ClusterId>`。

+删除 deployment 后,所有其他资源将自动删除。

{% highlight bash %}

$ kubectl delete deployment/<ClusterID>

{% endhighlight %}

-## Log Files

+## 日志文件

-By default, the JobManager and TaskManager only store logs under `/opt/flink/log` in each pod.

-If you want to use `kubectl logs <PodName>` to view the logs, you must perform the following:

+默认情况下,JobManager 和 TaskManager 只把日志存储在每个 pod 中的 `/opt/flink/log` 下。

+如果要使用 `kubectl logs <PodName>` 查看日志,必须执行以下操作:

-1. Add a new appender to the log4j.properties in the Flink client.

-2. Add the following 'appenderRef' the rootLogger in log4j.properties `rootLogger.appenderRef.console.ref = ConsoleAppender`.

-3. Remove the redirect args by adding config option `-Dkubernetes.container-start-command-template="%java% %classpath% %jvmmem% %jvmopts% %logging% %class% %args%"`.

-4. Stop and start your session again. Now you could use `kubectl logs` to view your logs.

+1. 在 Flink 客户端的 log4j.properties 中增加新的 appender。

+2. 在 log4j.properties 的 rootLogger 中增加如下 'appenderRef',`rootLogger.appenderRef.console.ref = ConsoleAppender`。

+3. 通过增加配置项`-Dkubernetes.container-start-command-template="%java% %classpath% %jvmmem% %jvmopts% %logging% %class% %args%"`来删除重定向的参数。

Review comment:

```suggestion

3. 通过增加配置项 `-Dkubernetes.container-start-command-template="%java% %classpath% %jvmmem% %jvmopts% %logging% %class% %args%"` 来删除重定向的参数。

```
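Several of the suggestions in this review only add a missing space between Chinese text and an inline code span. As a rough sketch of how that convention could be checked mechanically, the snippet below flags code spans that directly touch a CJK character. The function name `missing_spaces` and the choice to look only at the basic CJK ideograph range are assumptions made for illustration; this is not part of the Flink build or docs tooling.

```python
import re

# Basic CJK Unified Ideographs block only (an assumption for this sketch);
# full-width punctuation such as "、" is deliberately not included.
CJK_CHAR = re.compile(r"[\u4e00-\u9fff]")
# An inline code span: backtick, non-backtick content, backtick.
CODE_SPAN = re.compile(r"`[^`\n]+`")


def missing_spaces(line):
    """Return the inline code spans in `line` that directly touch a CJK character."""
    hits = []
    for match in CODE_SPAN.finditer(line):
        # Character immediately before and after the span (space at line edges).
        before = line[match.start() - 1] if match.start() > 0 else " "
        after = line[match.end()] if match.end() < len(line) else " "
        if CJK_CHAR.match(before) or CJK_CHAR.match(after):
            hits.append(match.group(0))
    return hits


if __name__ == "__main__":
    sample = "可以通过`~/.kube/config` 配置。"
    for span in missing_spaces(sample):
        print("missing space around", span)
```

Running it over each `+` line of the diff would list the spans that need a space, which could save a review round on purely typographic issues.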

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +191,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

Review comment:

Line 183 needs to be translated.
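A leftover untranslated line like this one can be hard to spot in a long page. The following is a minimal sketch, under stated assumptions, of a helper that lists prose lines containing Latin words but no Chinese characters. The name `untranslated_lines`, the skipping of `{% highlight %}` blocks, and the skipping of lines that start with `<`, `{` or `*` are all illustrative choices, and headings intentionally kept in English would still be reported.

```python
import re

HAS_CJK = re.compile(r"[\u4e00-\u9fff]")
HAS_LATIN_WORD = re.compile(r"[A-Za-z]{2,}")


def untranslated_lines(text):
    """Yield (line_number, line) for prose lines that contain no CJK characters."""
    in_code = False
    for number, line in enumerate(text.splitlines(), start=1):
        stripped = line.strip()
        # Track Jekyll highlight blocks so commands are not reported.
        if stripped.startswith("{% highlight"):
            in_code = True
            continue
        if stripped.startswith("{% endhighlight"):
            in_code = False
            continue
        # Skip code, blank lines, HTML tags, Liquid/kramdown markers and TOC bullets.
        if in_code or not stripped or stripped.startswith(("<", "{", "*")):
            continue
        if HAS_LATIN_WORD.search(stripped) and not HAS_CJK.search(stripped):
            yield number, stripped


if __name__ == "__main__":
    sample = "## 要求\nNote: this line is still English.\n"
    for number, line in untranslated_lines(sample):
        print(number, line)
```

The output is only a list of candidates for a human to review; it makes no attempt to judge whether a line was left in English on purpose.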

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +191,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。假设 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be added to user classpath.

+注意:镜像的"$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

-### Stop Flink Application

+### 停止 Flink 应用程序

-When an application is stopped, all Flink cluster resources are automatically destroyed.

-As always, Jobs may stop when manually canceled or, in the case of bounded Jobs, complete.

+当应用程序停止时,所有 Flink 集群资源都会自动销毁。

+与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。

{% highlight bash %}

$ ./bin/flink cancel -t kubernetes-application -Dkubernetes.cluster-id=<ClusterID> <JobID>

{% endhighlight %}

-## Kubernetes concepts

+## Kubernetes 概念

-### Namespaces

+### 命名空间

-[Namespaces in Kubernetes](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/) are a way to divide cluster resources between multiple users (via resource quota).

-It is similar to the queue concept in Yarn cluster. Flink on Kubernetes can use namespaces to launch Flink clusters.

-The namespace can be specified using the `-Dkubernetes.namespace=default` argument when starting a Flink cluster.

+[Kubernetes 中的命名空间](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/)是一种在多个用户之间划分集群资源的方法(通过资源配额)。

+它类似于 Yarn 集群中的队列概念。Flink on Kubernetes 可以使用命名空间来启动 Flink 集群。

+启动 Flink 集群时,可以使用`-Dkubernetes.namespace=default` 参数来指定命名空间。

-[ResourceQuota](https://kubernetes.io/docs/concepts/policy/resource-quotas/) provides constraints that limit aggregate resource consumption per namespace.

-It can limit the quantity of objects that can be created in a namespace by type, as well as the total amount of compute resources that may be consumed by resources in that project.

+[资源配额](https://kubernetes.io/docs/concepts/policy/resource-quotas/)提供了限制每个命名空间的合计资源消耗的约束。

+它可以按类型限制可在命名空间中创建的对象数量,以及该项目中的资源可能消耗的计算资源总量。

-### RBAC

+### 基于角色的访问控制

-Role-based access control ([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)) is a method of regulating access to compute or network resources based on the roles of individual users within an enterprise.

-Users can configure RBAC roles and service accounts used by Flink JobManager to access the Kubernetes API server within the Kubernetes cluster.

+基于角色的访问控制([RBAC](https://kubernetes.io/docs/reference/access-authn-authz/rbac/))是一种在企业内部基于单个用户的角色来调节对计算或网络资源的访问的方法。

+用户可以配置 RBAC 角色和服务账户,Flink JobManager 使用这些角色和服务帐户访问 Kubernetes 集群中的 Kubernetes API server。

-Every namespace has a default service account, however, the `default` service account may not have the permission to create or delete pods within the Kubernetes cluster.

-Users may need to update the permission of `default` service account or specify another service account that has the right role bound.

+每个命名空间有默认的服务账户,但是`默认`服务账户可能没有权限在 Kubernetes 集群中创建或删除 pod。

+用户可能需要更新`默认`服务账户的权限或指定另一个绑定了正确角色的服务账户。

{% highlight bash %}

$ kubectl create clusterrolebinding flink-role-binding-default --clusterrole=edit --serviceaccount=default:default

{% endhighlight %}

-If you do not want to use `default` service account, use the following command to create a new `flink` service account and set the role binding.

-Then use the config option `-Dkubernetes.jobmanager.service-account=flink` to make the JobManager pod using the `flink` service account to create and delete TaskManager pods.

+如果你不想使用`默认`服务账户,使用以下命令创建一个新的 `flink` 服务账户并设置角色绑定。

+然后使用配置项`-Dkubernetes.jobmanager.service-account=flink` 来使 JobManager pod 使用 `flink` 服务账户去创建和删除 TaskManager pod。

{% highlight bash %}

$ kubectl create serviceaccount flink

$ kubectl create clusterrolebinding flink-role-binding-flink --clusterrole=edit --serviceaccount=default:flink

{% endhighlight %}

-Please reference the official Kubernetes documentation on [RBAC Authorization](https://kubernetes.io/docs/reference/access-authn-authz/rbac/) for more information.

+有关更多信息,请参考 Kubernetes 官方文档 [RBAC 授权](https://kubernetes.io/docs/reference/access-authn-authz/rbac/)。

-## Background / Internals

+## 背景/内部构造

-This section briefly explains how Flink and Kubernetes interact.

+本节简要解释了 Flink 和 Kubernetes 如何交互。

<img src="{{ site.baseurl }}/fig/FlinkOnK8s.svg" class="img-responsive">

-When creating a Flink Kubernetes session cluster, the Flink client will first connect to the Kubernetes ApiServer to submit the cluster description, including ConfigMap spec, Job Manager Service spec, Job Manager Deployment spec and Owner Reference.

-Kubernetes will then create the Flink master deployment, during which time the Kubelet will pull the image, prepare and mount the volume, and then execute the start command.

-After the master pod has launched, the Dispatcher and KubernetesResourceManager are available and the cluster is ready to accept one or more jobs.

+创建 Flink Kubernetes session 集群时,Flink 客户端首先将连接到 Kubernetes ApiServer 提交集群描述信息,包括 ConfigMap 描述信息、Job Manager Service 描述信息、Job Manager Deployment 描述信息和 Owner Reference。

+Kubernetes 将创建 Flink master 的 deployment,在此期间 Kubelet 将拉取镜像,准备并挂载卷,然后执行 start 命令。

+master pod 启动后,Dispatcher 和 KubernetesResourceManager 都可用并且集群准备好接受一个或更多作业。

Review comment:

Could `接受一个或多个作业` ("accept one or more jobs") simply be shortened to `接受作业` ("accept jobs")?
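
A side note on the RBAC part of this hunk: one way to check whether a service account can actually create and delete pods is `kubectl auth can-i` with impersonation. The sketch below only prints the checks instead of running them, so it works without a cluster. The helper name `rbac_check_cmds` is hypothetical; the `default` namespace and the `flink` account mirror the role-binding commands in the diff.

```bash
# Sketch only: prints the permission checks rather than executing them.
# rbac_check_cmds is a hypothetical helper, not part of Flink or kubectl.
rbac_check_cmds() {
  # $1 = namespace, $2 = service account name
  for verb in create delete; do
    printf 'kubectl auth can-i %s pods --namespace=%s --as=system:serviceaccount:%s:%s\n' \
      "$verb" "$1" "$1" "$2"
  done
}

# Namespace "default" and account "flink" follow the commands in the diff.
rbac_check_cmds default flink
```

Piping the output to `sh` on a machine with cluster access would run the checks; each one answers `yes` or `no`.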

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +191,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。假设 jar 位于镜像中,而不是 Flink 客户端中。

Review comment:

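Would changing `假设 jar 位于镜像中` ("assuming the jar is located in the image") to `默认 jar 位于镜像中` ("by default the jar is located in the image") read better?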

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +191,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。假设 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be added to user classpath.

+注意:镜像的"$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

-### Stop Flink Application

+### 停止 Flink 应用程序

-When an application is stopped, all Flink cluster resources are automatically destroyed.

-As always, Jobs may stop when manually canceled or, in the case of bounded Jobs, complete.

+当应用程序停止时,所有 Flink 集群资源都会自动销毁。

+与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。

{% highlight bash %}

$ ./bin/flink cancel -t kubernetes-application -Dkubernetes.cluster-id=<ClusterID> <JobID>

{% endhighlight %}

-## Kubernetes concepts

+## Kubernetes 概念

-### Namespaces

+### 命名空间

-[Namespaces in Kubernetes](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/) are a way to divide cluster resources between multiple users (via resource quota).

-It is similar to the queue concept in Yarn cluster. Flink on Kubernetes can use namespaces to launch Flink clusters.

-The namespace can be specified using the `-Dkubernetes.namespace=default` argument when starting a Flink cluster.

+[Kubernetes 中的命名空间](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/)是一种在多个用户之间划分集群资源的方法(通过资源配额)。

+它类似于 Yarn 集群中的队列概念。Flink on Kubernetes 可以使用命名空间来启动 Flink 集群。

+启动 Flink 集群时,可以使用`-Dkubernetes.namespace=default` 参数来指定命名空间。

Review comment:

```suggestion

启动 Flink 集群时,可以使用 `-Dkubernetes.namespace=default` 参数来指定命名空间。

```
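
To illustrate the sentence under review, a session cluster could be started in a dedicated namespace roughly as follows. This is a sketch that only prints the commands. The helper `session_in_namespace_cmds` and the example values `flink-demo` and `my-session` are hypothetical, while `kubernetes-session.sh`, `kubernetes.cluster-id` and `kubernetes.namespace` come from the document being translated.

```bash
# Sketch only: prints the commands instead of running them.
# session_in_namespace_cmds is a hypothetical helper.
session_in_namespace_cmds() {
  # $1 = namespace, $2 = cluster id
  printf 'kubectl create namespace %s\n' "$1"
  printf './bin/kubernetes-session.sh -Dkubernetes.cluster-id=%s -Dkubernetes.namespace=%s\n' \
    "$2" "$1"
}

# Example values are made up for illustration.
session_in_namespace_cmds flink-demo my-session
```

The service account used by the JobManager would presumably also need a suitable role binding in that namespace, along the lines of the RBAC section.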

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +191,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。假设 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be added to user classpath.

+注意:镜像的"$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

Review comment:

```suggestion

注意:镜像的 "$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [flink] caozhen1937 commented on a change in pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

Posted by GitBox <gi...@apache.org>.

caozhen1937 commented on a change in pull request #12296:

URL: https://github.com/apache/flink/pull/12296#discussion_r429595609

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -92,73 +90,73 @@ $ ./bin/kubernetes-session.sh \

-Dkubernetes.container.image=<CustomImageName>

{% endhighlight %}

-### Submitting jobs to an existing Session

+### 将作业提交到现有 Session

-Use the following command to submit a Flink Job to the Kubernetes cluster.

+使用以下命令将 Flink 作业提交到 Kubernetes 集群。

{% highlight bash %}

$ ./bin/flink run -d -e kubernetes-session -Dkubernetes.cluster-id=<ClusterId> examples/streaming/WindowJoin.jar

{% endhighlight %}

-### Accessing Job Manager UI

+### 访问 Job Manager UI

-There are several ways to expose a Service onto an external (outside of your cluster) IP address.

-This can be configured using `kubernetes.service.exposed.type`.

+有几种方法可以将服务暴露到外部(集群外部) IP 地址。

+可以使用 `kubernetes.service.exposed.type` 进行配置。

-- `ClusterIP`: Exposes the service on a cluster-internal IP.

-The Service is only reachable within the cluster. If you want to access the Job Manager ui or submit job to the existing session, you need to start a local proxy.

-You can then use `localhost:8081` to submit a Flink job to the session or view the dashboard.

+- `ClusterIP`:通过集群内部 IP 暴露服务。

+该服务只能在集群中访问。如果想访问 JobManager ui 或将作业提交到现有 session,则需要启动一个本地代理。

+然后你可以使用 `localhost:8081` 将 Flink 作业提交到 session 或查看仪表盘。

{% highlight bash %}

$ kubectl port-forward service/<ServiceName> 8081

{% endhighlight %}

-- `NodePort`: Exposes the service on each Node’s IP at a static port (the `NodePort`). `<NodeIP>:<NodePort>` could be used to contact the Job Manager Service. `NodeIP` could be easily replaced with Kubernetes ApiServer address.

-You could find it in your kube config file.

+- `NodePort`:通过每个 Node 上的 IP 和静态端口(`NodePort`)暴露服务。`<NodeIP>:<NodePort>` 可以用来连接 JobManager 服务。`NodeIP` 可以很容易地用 Kubernetes ApiServer 地址替换。

+你可以在 kube 配置文件找到它。

-- `LoadBalancer`: Default value, exposes the service externally using a cloud provider’s load balancer.

-Since the cloud provider and Kubernetes needs some time to prepare the load balancer, you may get a `NodePort` JobManager Web Interface in the client log.

-You can use `kubectl get services/<ClusterId>` to get EXTERNAL-IP and then construct the load balancer JobManager Web Interface manually `http://<EXTERNAL-IP>:8081`.

+- `LoadBalancer`:默认值,使用云提供商的负载均衡器在外部暴露服务。

Review comment:

Judging from the code, the default value is `LoadBalancer`.
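
For context, the original text of this bullet describes building the web UI address by hand from the EXTERNAL-IP reported by `kubectl get services/<ClusterId>`. A minimal sketch of that step, where `jobmanager_url` is a hypothetical helper, the IP is a documentation-range example address, and port 8081 is taken from the text:

```bash
# Hypothetical helper: build the JobManager web UI URL from an EXTERNAL-IP.
jobmanager_url() {
  # $1 = EXTERNAL-IP as shown by `kubectl get services/<ClusterId>`
  printf 'http://%s:8081\n' "$1"
}

# 203.0.113.10 is a placeholder address, not a real endpoint.
jobmanager_url 203.0.113.10
```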


[GitHub] [flink] caozhen1937 commented on a change in pull request #12296: [FLINK-17814][chinese-translation]Translate native kubernetes document to Chinese

Posted by GitBox <gi...@apache.org>.

caozhen1937 commented on a change in pull request #12296:

URL: https://github.com/apache/flink/pull/12296#discussion_r429777975

##########

File path: docs/ops/deployment/native_kubernetes.zh.md

##########

@@ -193,66 +190,66 @@ $ ./bin/flink run-application -p 8 -t kubernetes-application \

local:///opt/flink/usrlib/my-flink-job.jar

{% endhighlight %}

-Note: Only "local" is supported as schema for application mode. This assumes that the jar is located in the image, not the Flink client.

+注意:应用程序模式只支持 "local" 作为 schema。默认 jar 位于镜像中,而不是 Flink 客户端中。

-Note: All the jars in the "$FLINK_HOME/usrlib" directory in the image will be added to user classpath.

+注意:镜像的 "$FLINK_HOME/usrlib" 目录下的所有 jar 将会被加到用户 classpath 中。

-### Stop Flink Application

+### 停止 Flink 应用程序

-When an application is stopped, all Flink cluster resources are automatically destroyed.

-As always, Jobs may stop when manually canceled or, in the case of bounded Jobs, complete.

+当应用程序停止时,所有 Flink 集群资源都会自动销毁。

+与往常一样,在手动取消作业或完成作业的情况下,作业可能会停止。

{% highlight bash %}

$ ./bin/flink cancel -t kubernetes-application -Dkubernetes.cluster-id=<ClusterID> <JobID>

{% endhighlight %}

-## Kubernetes concepts

+## Kubernetes 概念

-### Namespaces

+### 命名空间

-[Namespaces in Kubernetes](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/) are a way to divide cluster resources between multiple users (via resource quota).

-It is similar to the queue concept in Yarn cluster. Flink on Kubernetes can use namespaces to launch Flink clusters.

-The namespace can be specified using the `-Dkubernetes.namespace=default` argument when starting a Flink cluster.

+[Kubernetes 中的命名空间](https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/)是一种在多个用户之间划分集群资源的方法(通过资源配额)。

+它类似于 Yarn 集群中的队列概念。Flink on Kubernetes 可以使用命名空间来启动 Flink 集群。

+启动 Flink 集群时,可以使用 `-Dkubernetes.namespace=default` 参数来指定命名空间。

-[ResourceQuota](https://kubernetes.io/docs/concepts/policy/resource-quotas/) provides constraints that limit aggregate resource consumption per namespace.

-It can limit the quantity of objects that can be created in a namespace by type, as well as the total amount of compute resources that may be consumed by resources in that project.

+[资源配额](https://kubernetes.io/docs/concepts/policy/resource-quotas/)提供了限制每个命名空间的合计资源消耗的约束。

+它可以按类型限制可在命名空间中创建的对象数量,以及该项目中的资源可能消耗的计算资源总量。

-### RBAC

+### 基于角色的访问控制