You are viewing a plain text version of this content. The canonical link for it is here.

Posted to dev@uniffle.apache.org by GitBox <gi...@apache.org> on 2022/09/23 03:03:29 UTC

[GitHub] [incubator-uniffle] leixm opened a new issue, #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

leixm opened a new issue, #239:

URL: https://github.com/apache/incubator-uniffle/issues/239

Recently, we found that the following exception occurred occasionally in the production environment - `Blocks read inconsistent: expectedxxxxxx`. After investigation, we found that there seem to be some problems with the client's read hdfs.

The following is some information about cluster related configuration and troubleshooting:

Environment

1. rss.client.read.buffer.size=14m

2. storageType=MEMORY_LOCALFILE_HDFS

3. io.file.buffer.size=65536(core-site.xml on ShuffleServer)

We investigate abnormal partition files to see what ShuffleServer/Client does at various points in time,

The result is as follows.

1. 2022-09-20 00:45:07 partition data file creation on hdfs

2. 2022-09-20 00:45:12 flush the first batch of 1690 blocks and close outputstream, At this point the size of the data file is 63101342

3. 2022-09-20 01:26:56 ShuffleServer append partition data file

4. 2022-09-20 01:27:12 getInMemory returns a total of 310 blocks

5. 2022-09-20 01:27:13 The following exceptions appear in sequence: Read index data under flow, read data file EOFException(**with offset[58798659], length[4743511]**), and Blocks read inconsistent, and the task retries four times within 10 seconds or fails, which eventually causes the app to fail

6. 2022-09-20 01:27:37 flush the remaining 310 blocks and close outputstream

Read segments

1. offset=0, length=14709036

2. offset=14709036, length=14698098

3. offset=29407134, length=14700350

4. offset=44107484, length=14691175

5. offset=58798659, length=4743511

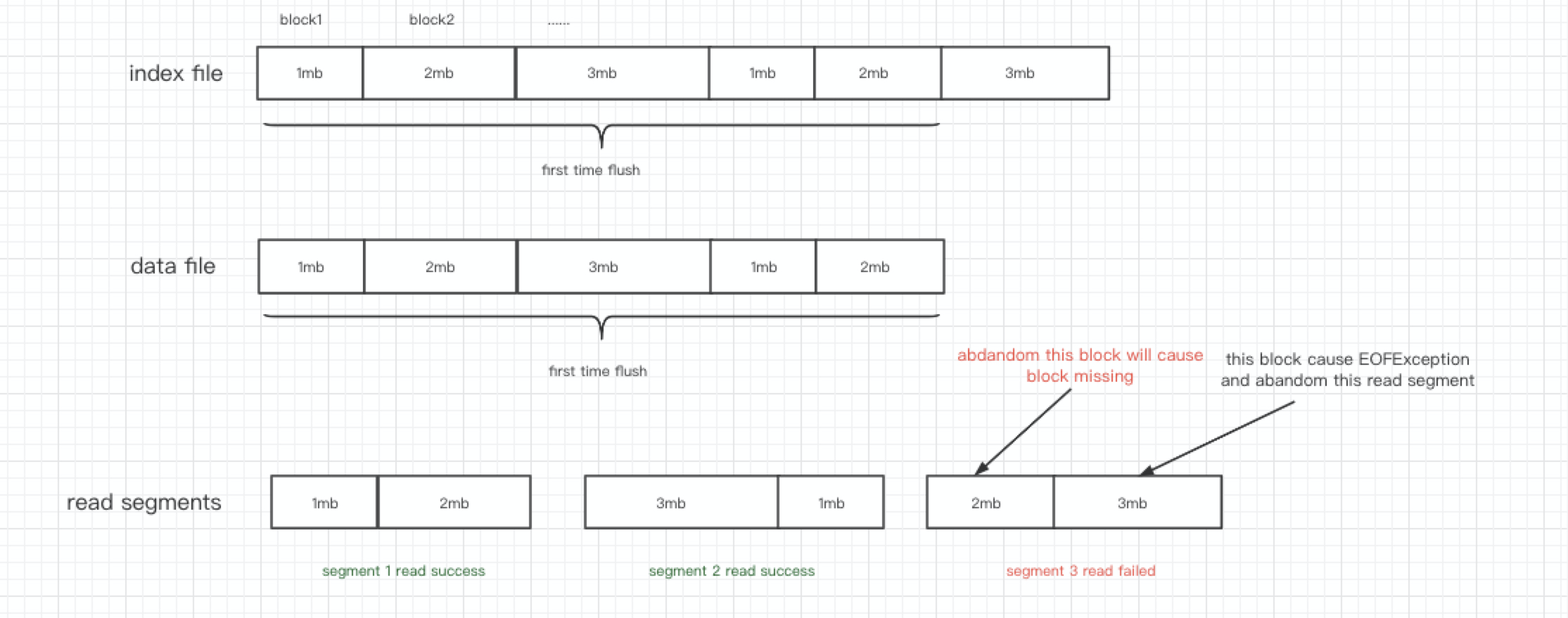

The key point of the problem is that the data file has only 63101342 bytes, but at this time, it has to read 4743511 bytes from the 58798659 offset, which eventually leads to OEFException,And at this time, the block from offset 58798659 to offset 63101342 will be discarded, Eventually lead to block missing.

Another question is, why are there more blocks displayed in the index file than in the data file? This depends on the buffer of the hdfs client. If the data is currently being flushed, we cannot guarantee that the number of blocks in the index is the same as the number of blocks in the data.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] smallzhongfeng commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

smallzhongfeng commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255786178

This is because the new `segment3` created by the memory reads the `index file` first, but some data in the `data file` has not been written to hdfs, causing `segment3` to read the data file and appearing eof, and then the entire `segment3 will` fail.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1286386630

> > You are right, we need to know how much data can be read before HdfsFileReader#read, and then read the corresponding data through HdfsFileReader#read, but how much data can be read is currently obtained by parsing the index file , I think the actual amount of data that can be read needs to be considered in the process of parsing the index file, so as to avoid EOFException. Just like the last block in the picture above, when parsing the index file, it is found that there is no such block according to the length of the data file, and this block should not be added to the segment at this time.

>

> Could we catch the EOFException and return the data directly?

It doesn't seem feasible, because we also need to get the correct bufferSegments, if an EOFEXception is thrown, we don't know which bufferSegments are complete.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255896557

> @leixm Thanks for your explanation, got your thought.

>

> Could we directly get the expected data in `HdfsFileReader.read()` by analyzing the file size and required length from index.file ?

@zuston The HdfsFileReader.read method seems to be used to read the file content of the specified offset and length, which is not considered here Sophisticated read logic could be better

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255893604

You are right, we need to know how much data can be read before HdfsFileReader#read, and then read the corresponding data through HdfsFileReader#read, but how much data can be read is currently obtained by parsing the index file , I think the actual amount of data that can be read needs to be considered in the process of parsing the index file, so as to avoid EOFException.

Just like the last block in the picture above, when parsing the index file, it is found that there is no such block according to the length of the data file, and this block should not be added to the segment at this time.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255953708

> > You are right, we need to know how much data can be read before HdfsFileReader#read, and then read the corresponding data through HdfsFileReader#read, but how much data can be read is currently obtained by parsing the index file , I think the actual amount of data that can be read needs to be considered in the process of parsing the index file, so as to avoid EOFException. Just like the last block in the picture above, when parsing the index file, it is found that there is no such block according to the length of the data file, and this block should not be added to the segment at this time.

>

> Could we catch the EOFException and return the data directly?

I will try to test whether the read data is placed in buf after fsDataInputStream.readFully throws an EOFException. If there is data in buf at this time, we can return directly.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] zuston commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

zuston commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255857789

@leixm Thanks for your explanation, got your thought.

Could we directly got the expected data in `HdfsFileReader.read()` by analyzing the file size and required length from index.file ?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255781712

We can get the length of the data file before transIndexDataToSegments and pass it to the transIndexDataToSegments method. When transIndexDataToSegments divides the read segment, the length of the data file cannot exceed the length of the data file, so that no OEFException will be generated.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] jerqi closed issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

jerqi closed issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

URL: https://github.com/apache/incubator-uniffle/issues/239

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] jerqi commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

jerqi commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1286395993

> > > You are right, we need to know how much data can be read before HdfsFileReader#read, and then read the corresponding data through HdfsFileReader#read, but how much data can be read is currently obtained by parsing the index file , I think the actual amount of data that can be read needs to be considered in the process of parsing the index file, so as to avoid EOFException. Just like the last block in the picture above, when parsing the index file, it is found that there is no such block according to the length of the data file, and this block should not be added to the segment at this time.

> >

> >

> > Could we catch the EOFException and return the data directly?

>

> It doesn't seem feasible, because we also need to get the correct bufferSegments, if an EOFEXception is thrown, we don't know which bufferSegments are complete.

I can't get your point. Why don't we know which bufferSegments are complete?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255754607

Can you take a look at the problem I described. @jerqi @smallzhongfeng @zuston

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] jerqi commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

jerqi commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255775387

> org.apache.uniffle.storage.handler.impl.HdfsFileReader#read

>

> ```

> public byte[] read(long offset, int length) {

> try {

> fsDataInputStream.seek(offset);

> byte[] buf = new byte[length];

> fsDataInputStream.readFully(buf);

> return buf;

> } catch (Exception e) {

> LOG.warn("Can't read data for path:" + path + " with offset["

> + offset + "], length[" + length + "]", e);

> }

> return new byte[0];

> }

> ```

So we should process EOFException in this case.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255774299

org.apache.uniffle.storage.handler.impl.HdfsFileReader#read

```

public byte[] read(long offset, int length) {

try {

fsDataInputStream.seek(offset);

byte[] buf = new byte[length];

fsDataInputStream.readFully(buf);

return buf;

} catch (Exception e) {

LOG.warn("Can't read data for path:" + path + " with offset["

+ offset + "], length[" + length + "]", e);

}

return new byte[0];

}

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255773384

The last read segment contains two parts of blocks, [offset= 58798659, length=4302684] and [offset=63101343, length=440827], because the data in the second part triggers EOFException, causing the data in the first part to be discarded, Eventually lead to block missing.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] zuston commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

zuston commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255762455

> If the data is currently being flushed, we cannot guarantee that the number of blocks in the index is the same as the number of blocks in the data.

Yes, you are right. Please refer to #204 . but this PR wont solve your problems you mentioned, it just make fail fast and log some exception for analysis.

Let revisit this problem, as I know, the reading sequence of client will from memory -> localfile to hdfs, that means the incomplete data reading is not affect the result.

For example, the partial memory shuffle data is being flushed to HDFS or in the flushing queue, it also will get from the read client side. Although the index file in HDFS is incomplete, the partial data has been accepted from memory. So this is not a problem.

So the problems you mentioned make me confused, there should be no problem with the design of reading, there may be some bugs.

By the way, I have also encountered inconsistent block problems, but we are using the memory_localfile mode, which is caused by the instability of grpc service, refer to #198

Feel free to discuss more.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] jerqi commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

jerqi commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255935159

Maybe local file will occur this similar problems.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] jerqi commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

jerqi commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1286420832

When we encounter EOFException, we have read some index data, we can return the data that we have read.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255771111

The key point of the problem is that the data file has only 63101342 bytes, but at this time, it has to read 4743511 bytes from the 58798659 offset, which eventually leads to OEFException,And at this time, **the block from offset 58798659 to offset 63101342 will be discarded**, Eventually lead to block missing.

**Any block after offset 63101342 will not cause block missing, and any block before offset 63101342 will cause block missing.**

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255780011

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1286426690

> When we encounter EOFException, we have read some index data, we can return the data that we have read.

DataSkippableReadHandler#readShuffleData return ShuffleDataResult, ShuffleDataResult includes two properties, data and bufferSegments, so we will return the block of bufferSegments to be larger than data.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] jerqi commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

jerqi commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255936097

> You are right, we need to know how much data can be read before HdfsFileReader#read, and then read the corresponding data through HdfsFileReader#read, but how much data can be read is currently obtained by parsing the index file , I think the actual amount of data that can be read needs to be considered in the process of parsing the index file, so as to avoid EOFException. Just like the last block in the picture above, when parsing the index file, it is found that there is no such block according to the length of the data file, and this block should not be added to the segment at this time.

Could we catch the EOFException and return the data directly?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] zuston commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

zuston commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255776822

> The last read segment contains two parts of blocks, [offset= 58798659, length=4302684] and [offset=63101343, length=440827], because the data in the second part triggers EOFException, causing the data in the first part to be discarded, Eventually lead to block missing.

Got your thought. I think we should throw exception directly after applying #204 . Please let me know if i'm wrong

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] leixm commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

leixm commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1255904095

Maybe i can raise a pr to fix it? @zuston

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-uniffle] jerqi commented on issue #239: [Problem] RssUtils#transIndexDataToSegments should consider the length of the data file

Posted by GitBox <gi...@apache.org>.

jerqi commented on issue #239:

URL: https://github.com/apache/incubator-uniffle/issues/239#issuecomment-1287569816

> > When we encounter EOFException, we have read some index data, we can return the data that we have read.

>

> DataSkippableReadHandler#readShuffleData return ShuffleDataResult, ShuffleDataResult includes two properties, data and bufferSegments, so we will return the block of bufferSegments to be larger than data.

ok

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@uniffle.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org