You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2022/09/27 21:46:58 UTC

[GitHub] [hudi] nochimow opened a new issue, #6811: [SUPPORT] Slow upsert performance

nochimow opened a new issue, #6811:

URL: https://github.com/apache/hudi/issues/6811

**Describe the problem you faced**

After one month of data ingestion, a data ingestion pipeline started to take a very long time during the upsert operation

We currently use Hudi Spark 0.12 running on AWS Glue 3.0 (Spark 3.1), writing the Hudi files in a CoW format into a S3 bucket.

**Environment Details**

The Glue Job currently have 75 Workers of G.2X type. (Each worker have 8 vCPU, 32 GB of memory and 128 GB disk)

This table have currently 9.4TB of storage in S3, and all data is partitioned by year, month and day. Being the first record from 2020-11.

Our typical data input are .AVRO files with the average size of 3,5GB, but splited into 1200 files of an average size of 3MB, Being the smallest 1.72MB and the largest 8MB.

Our job basically load all the files into a spark dataframe and write into Hudi S3 location.

Our Hudi Config is set like this:

"hoodie.datasource.write.keygenerator.class": "org.apache.hudi.keygen.ComplexKeyGenerator",

"hoodie.datasource.write.payload.class": "org.apache.hudi.common.model.DefaultHoodieRecordPayload",

"hoodie.datasource.hive_sync.partition_extractor_class": "org.apache.hudi.hive.MultiPartKeysValueExtractor",

"hoodie.datasource.write.hive_style_partitioning": "true",

"hoodie.write.concurrency.mode": "single_writer",

"hoodie.cleaner.commits.retained": 1,

"hoodie.fail.on.timeline.archiving": False,

"hoodie.keep.max.commits": 3,

"hoodie.keep.min.commits": 2,

"hoodie.bloom.index.use.caching": True,

"hoodie.parquet.compression.codec": "snappy",

"hoodie.index.type": "BLOOM",

"hoodie.metrics.on": True,

"hoodie.metrics.reporter.type": "CLOUDWATCH"

**To Reproduce**

* Hudi version :

0.12

* Spark version :

3.1.1

* Storage (HDFS/S3/GCS..) :

S3

**Stacktrace**

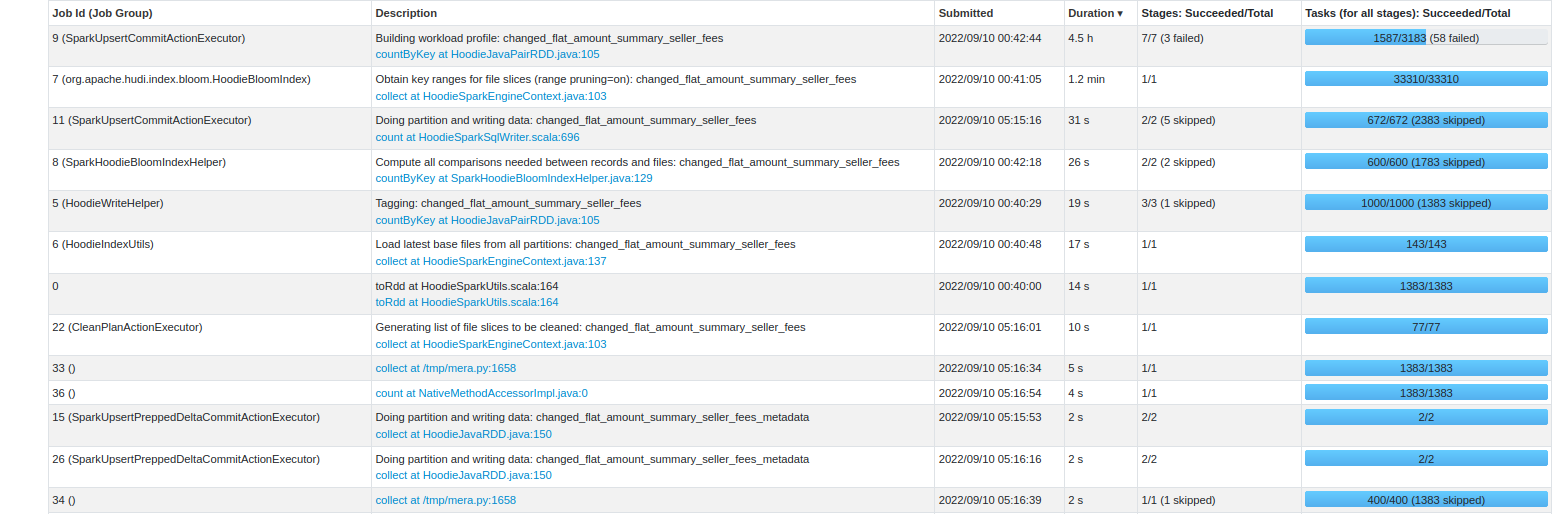

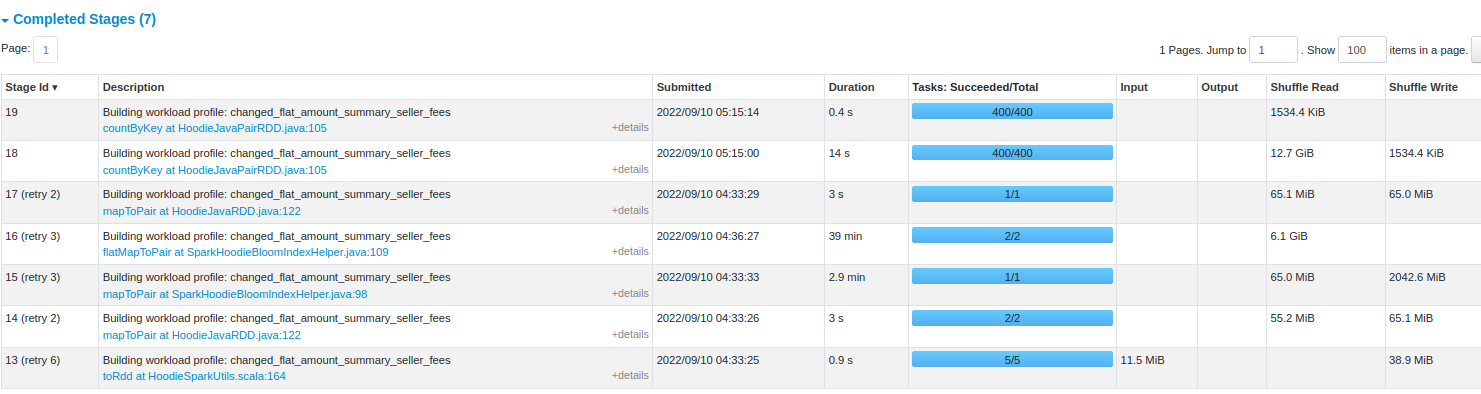

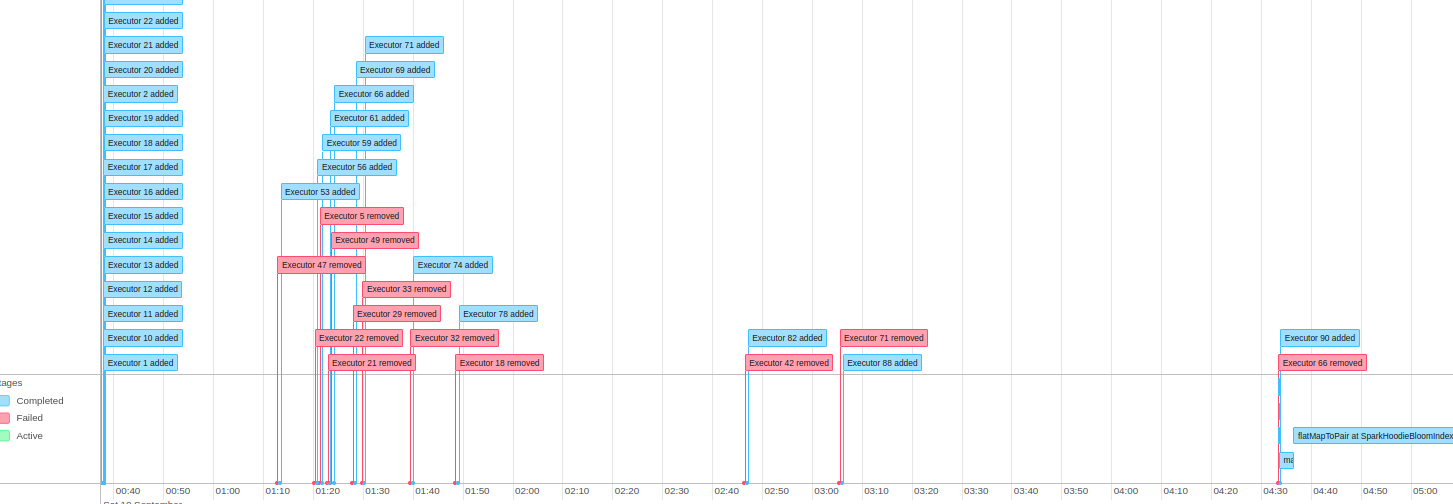

Seeing by the SparkUI the most expensive step is the SparkUpsertCommitActionExecutor, that is taking all the 4.5h of duration.

But seeing this step into detail, we have the following stats

Its also possible to check that we had some failed jobs on this step

Do you have any suggestion that can be done to optimize this job with this characteristics? Since that with running with this performance, the job it becoming really expensive to run.

If there is any other log from this execution just let me know.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #6811: [SUPPORT] Slow upsert performance

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #6811:

URL: https://github.com/apache/hudi/issues/6811#issuecomment-1305153782

Few pointers:

- for failed executors, do check if they are failing due to OOMs. if yes, you may need to tune your spark memory configs.

- I see you have set cleaner.commits.retained = 1. So, this would clean the data files aggressively which could add to your write latency. you can consider relaxing this a bit. Also, you can relax https://hudi.apache.org/docs/configurations/#hoodiecleanmaxcommits if you plan to relax the cleaner commits retained.

- If you have lot of small files in your lake, it might impact your index look up time. May be you can enable clustering to batch lot of small files into larger ones. you can choose to do this async as well.

- If none of the above suggestion works, one another option is to consider BUCKET_INDEX. but that would mean you have to start w/ a new table altogether. but your index look up is expected to be O(1).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nochimow commented on issue #6811: [SUPPORT] Slow upsert performance

Posted by GitBox <gi...@apache.org>.

nochimow commented on issue #6811:

URL: https://github.com/apache/hudi/issues/6811#issuecomment-1262804768

Hi @nsivabalan,

~97% of the data should be inserts and the remaning are updates. The updates only touches the latest partitions. (-1 day at max)

No, we are not setting any small file config in this case.

Based on that, there is any tweak suggestion to decrease the index tagging stage?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nochimow commented on issue #6811: [SUPPORT] Slow upsert performance

Posted by GitBox <gi...@apache.org>.

nochimow commented on issue #6811:

URL: https://github.com/apache/hudi/issues/6811#issuecomment-1295029331

@ctlgdanielli

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #6811: [SUPPORT] Slow upsert performance

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #6811:

URL: https://github.com/apache/hudi/issues/6811#issuecomment-1261794909

whats your workload type. specifically wrt updates. is it completely random? or touches only latest partitions and records.

Also, do you override any of small file related configs?

From the spark UI, seems like it the index tagging stage thats taking 4.5 hours.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org