Posted to notifications@apisix.apache.org by bz...@apache.org on 2021/10/14 05:02:35 UTC

[apisix-website] branch master updated: docs: update translations for docs (#659)

This is an automated email from the ASF dual-hosted git repository.

bzp2010 pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/apisix-website.git

The following commit(s) were added to refs/heads/master by this push:

new bb65de4 docs: update translations for docs (#659)

bb65de4 is described below

commit bb65de47891d9c27a2c7a2202654715a1922c81a

Author: yilinzeng <36...@users.noreply.github.com>

AuthorDate: Thu Oct 14 13:02:29 2021 +0800

docs: update translations for docs (#659)

* docs: add translation for apisix vs envoy

* docs: add translation for etcd3 doc

* docs: add translation for sina weibo

* docs: add translation for Plugin Orchestration

* docs: add translation for kong-to-apisix

* docs: add translation for kong-to-apisix2

* fix: lint

* docs: add translation for Nginx+Lua doc

---

...ache-APISIX-and-Envoy-performance-comparison.md | 86 ++++-----

.../06/30/etcd3-support-HTTP-access-perfectly.md | 79 ++++----

...ina-Weibo-API-gateway-based-on-Apache-APISIX.md | 212 ++++++++++-----------

...use-of-plugin-orchestration-in-Apache-APISIX.md | 92 ++++-----

website/blog/2021/08/05/Kong-to-APISIX.md | 176 ++++++++---------

.../08/25/Why-Apache-APISIX-chose-Nginx-and-Lua.md | 113 ++++++-----

6 files changed, 373 insertions(+), 385 deletions(-)

diff --git a/website/blog/2021/06/10/Apache-APISIX-and-Envoy-performance-comparison.md b/website/blog/2021/06/10/Apache-APISIX-and-Envoy-performance-comparison.md

index 7318739..430ae93 100644

--- a/website/blog/2021/06/10/Apache-APISIX-and-Envoy-performance-comparison.md

+++ b/website/blog/2021/06/10/Apache-APISIX-and-Envoy-performance-comparison.md

@@ -1,6 +1,6 @@

---

-title: "Apache APISIX 和 Envoy 性能大比拼"

-author: "王院生"

+title: "Apache APISIX vs. Envoy: Which Has Better Performance?"

+author: "Yuansheng Wang"

authorURL: "https://github.com/membphis"

authorImageURL: "https://avatars.githubusercontent.com/u/6814606?v=4"

keywords:

@@ -9,52 +9,46 @@ keywords:

- Apache APISIX

- Service Mesh

- API Gateway

-- 性能

-description: 本文介绍了在一定条件下,Apache APISIX 和 Envoy 的性能对比,总体来说 APISIX 在响应延迟和 QPS 层面都略优于 Envoy, 由于 NGINX 的多 worker 的协作方式在高并发场景下更有优势,APISIX 在开启多个 worker 进程后性能提升较 Enovy 更为明显;APISIX 在性能和延迟上的表现使它在处理南北向流量上具有海量的吞吐能力,根据自己的业务场景来选择合理的组件配合插件构建自己的服务。

+- Performance

+description: This article compares the performance of Apache APISIX and Envoy under certain conditions. In general, APISIX is slightly better than Envoy in response latency and QPS, and because NGINX's multi-worker collaboration model is more advantageous in high-concurrency scenarios, APISIX's performance improves more noticeably than Envoy's when multiple worker processes are enabled. The performance and latency of APISIX give it massive throughput capacity for handling north-south traffic.

tags: [Technology]

---

-> 本文介绍了在一定条件下,Apache APISIX 和 Envoy 的性能对比,总体来说 Apache APISIX 在响应延迟和 QPS 层面都略优于 Envoy,Apache APISIX 在开启多个 worker 进程后性能提升较 Enovy 更为明显;而且 Apache APISIX 在性能和延迟上的表现使它在处理南北向流量上具有海量的吞吐能力。

+> This article compares the performance of Apache APISIX and Envoy under certain conditions. In general, APISIX is slightly better than Envoy in response latency and QPS, and because NGINX's multi-worker collaboration model is more advantageous in high-concurrency scenarios, APISIX's performance improves more noticeably than Envoy's when multiple worker processes are enabled. The performance and latency of APISIX give it massive throughput capacity for handling north-south traffic.

<!--truncate-->

-> Source: https://www.apiseven.com/zh/blog/Apache-APISIX-and-Envoy-performance-comparison

+I first learned about Envoy at a CNCF technology sharing session, and afterwards I ran performance tests on Apache APISIX and Envoy.

-在 CNCF 组织的一场技术分享会上,第一次听到了 Envoy 这么一个东西,分享的嘉宾巴拉巴拉讲了一大堆,啥都没记住,就记住了一个特别新颖的概念“通信总线”,后面 google 了下 Envoy 这个东西到底是什么,发现官网上如是描述:

+At a technical sharing session organized by CNCF, I heard about Envoy for the first time. The guest speaker talked about it at length, but all I could remember afterwards was one particularly novel concept, the “communication bus”. Later I googled what Envoy actually is and found that the official website describes it like this:

-“Envoy 是专为大型现代 SOA(面向服务架构)架构设计的 L7 代理和通信总线”

+“Envoy is an L7 proxy and communication bus designed for large modern service oriented architectures.”

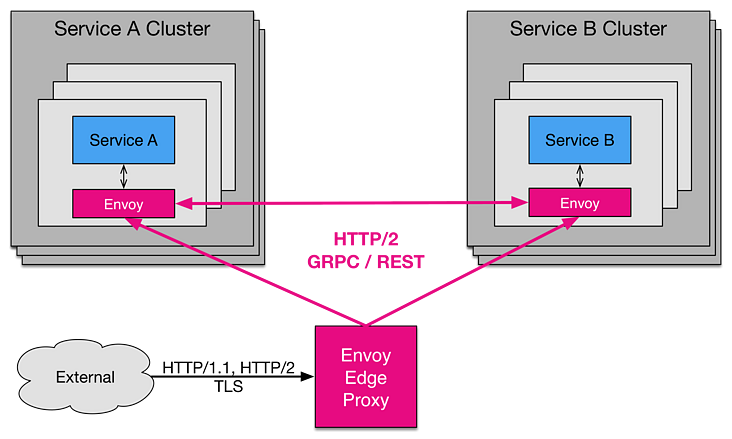

-也就是说, Envoy 是为了解决 Service Mesh 领域而诞生一款 L7 代理软件,这里我网上找了一张图,我理解的 Envoy 大概是如下的部署架构。(如果错了请大佬指教)

+In other words, Envoy is an L7 proxy created to solve problems in the Service Mesh field. I found a diagram online; my understanding is that Envoy is deployed roughly as follows (please correct me if I am wrong).

-

+Since it is L7 proxy software, and as a veteran of the OpenResty community for many years, I naturally couldn’t resist putting it through a head-to-head comparison.

-既然是 L7 的代理软件嘛,作为常年混迹 OpenResty 社区的老司机,自然忍不住把它拿来搞一搞,对比对比。

+The product we chose to compare it with is Apache APISIX, an API gateway built on OpenResty. (In fact, it is also an L7 proxy, with routing, authentication, rate limiting, dynamic upstreams, and other features added on top.)

-我们选择的比试对象是 Apache APISIX,它是基于 OpenResty 实现的 API 网关。(其实也就是 L7 代理然后加了路由、认证,限流、动态上游等等之类的功能)

+Why did I choose it? Because at a community sharing session I heard that its routing implementation is excellent. Our business routing system happened to be a mess at the time, so I dug into the Apache APISIX source code and found that it really is an awesome API gateway, beating every similar product I had seen. It left a strong impression on me, so it was the one!

-为什么选择它呢,因为有一次社区分享的时候听说这货的路由实现非常棒,正好我们的现在业务的路由系统乱七八糟,扒拉了下 APISIX 的源码,发现确实是 6 到飞起,吊打我看到过的同类产品, 所以印象深刻,就它了!

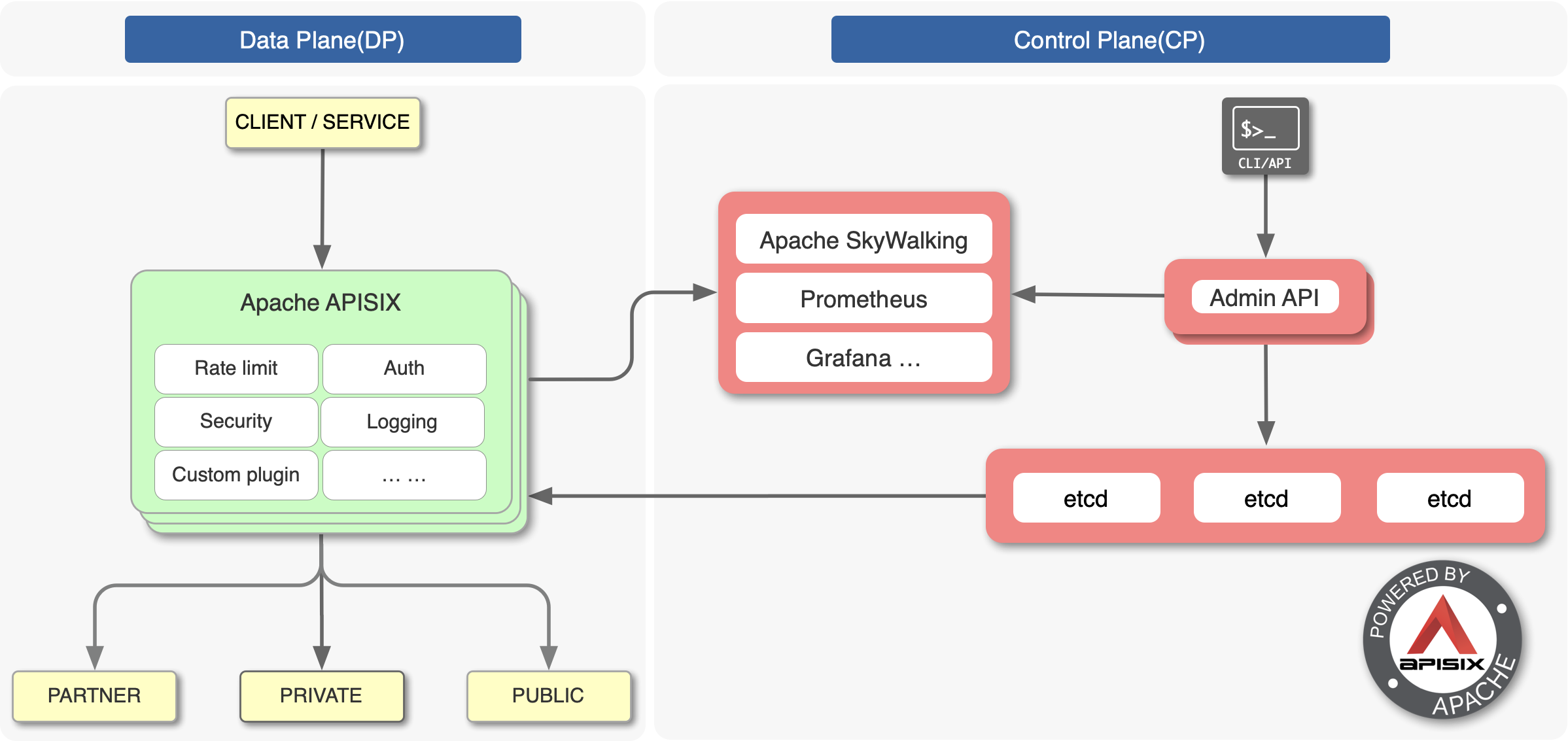

+Here is a diagram from the Apache APISIX official website. A picture is worth a thousand words: one look shows how Apache APISIX works.

-这里附上一张在 APISIX 官网扒拉的图,真是一图胜千言,一看就知道这玩意儿是怎么工作的。

+

-

+Let’s get started. First, we went to the official websites to find the latest versions of both products: Apache APISIX 1.5 and Envoy 1.14 (the latest versions at the time of writing).

-开搞吧,首先我们去官网找到两个产品的最版本:

+## Build Environment Preparation

-Apache APISIX 1.5 和 Envoy 1.14

+- Stress test client: wrk.

+- Main metrics tested: gateway latency, QPS, and whether performance scales linearly.

+- Test environment: Microsoft Azure Linux VM (Ubuntu 18.04), Standard D13 v2 (8 vCPUs, 56 GiB memory).

+- Test method 1: single-core side-by-side comparison (since both are based on the epoll IO model, a single-core stress test is used to verify their processing power).

+- Test method 2: multi-core side-by-side comparison, mainly to verify whether their overall processing power grows linearly as more processes|threads are added.

-(笔者在写这篇文章时的最新版)

+## Test Scenarios

-#### 构建环境准备

-

-- 压力测试客户端:wrk;

-- 测试主要指标包括:网关延迟、QPS 和是否线性扩展;

-- 测试环境:微软云 Linux (ubuntu 18.04), Standard D13 v2 (8 vcpus, 56 GiB memory);

-- 测试方式 1:采用单核运行横向对比(因为它们都是基于 epoll 的 IO 模型,所以用单核压测验证它们的处理能力);

-- 测试方式 2:采用多核运行横向对比,主要是为了验证两者在添加多(进程|线程)的场景下其整体处理能力是否能够线性增长;

-

-#### 测试场景

-

-这里我们用 NGINX 搭建了一个上游服务器,配置 2 个 worker,接收到请求直接应答 4k 内容,参考配置如下:

+We set up an upstream server with NGINX, configured with 2 workers, that responds to every request directly with 4k of content. The reference configuration is as follows:

```text

server {

@@ -67,13 +61,14 @@ server {

}

```

-- 网络架构示意图如下:(绿色正常负载,未跑满。红色为高压负载,要把进程资源跑满,主要是 CPU)

+- The network architecture is shown below. (Green indicates normal load that does not saturate the process; red indicates high-pressure load that saturates the process resources, mainly CPU.)

-#### 路由配置

+## Route Configuration

-首先我们找到 APISIX 的入门配置指南,我们添加一条到 /hello 的路由,配置如下:

+First, following the Apache APISIX Getting Started guide, we add a route to /hello with the following configuration:

```text

curl http://127.0.0.1:9080/apisix/admin/routes/1 -X PUT -d '{

@@ -86,9 +81,9 @@ curl http://127.0.0.1:9080/apisix/admin/routes/1 -X PUT -d '{、

}}'

```

-需要注意的是,这里没并没有开始 proxy_cache 和 proxy_mirror 插件,因为 Enovy 并没有类似的功能;

+Note that the proxy_cache and proxy_mirror plugins are not enabled here, because Envoy has no similar functionality.

-然后我们参考 Envoy 官方压测指导 为 Envoy 添加一条路由:

+Then, following Envoy’s official benchmarking guide, we add a route to Envoy:

```text

static_resources:

@@ -136,28 +131,31 @@ static_resources:

max_retries: 1000000000

```

-上面的 generate*request_id、dynamic_stats 和 circuit_breakers 部分,在 Envoy 内部是默认开启,但本次压测用不到,需要显式关闭或设置超大阈值从而提升性能。(谁能给我解释下为什么这玩意儿配置这么复杂 -*-!)

+The generate_request_id, dynamic_stats, and circuit_breakers sections above are enabled by default in Envoy, but they are not needed for this stress test, so they must be explicitly disabled or given very large thresholds to improve performance. (Can someone explain to me why this is so complicated to configure? -_-!)

+

+## Results

-#### 压测结果

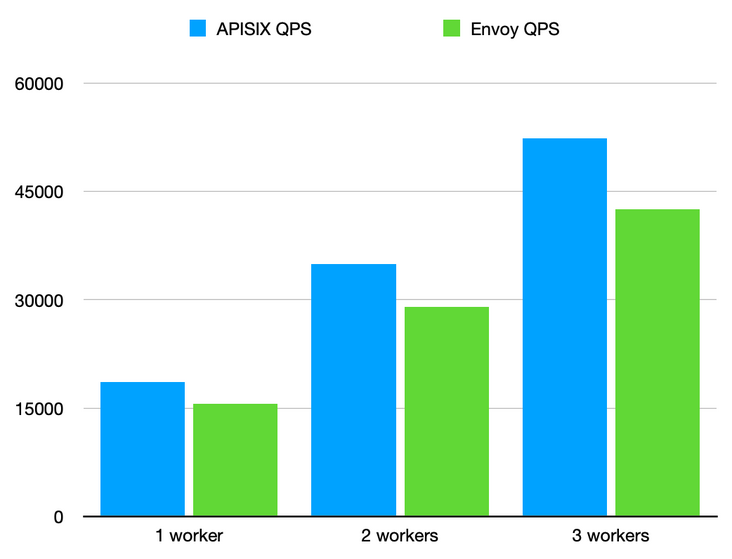

+A single route with no plugins enabled, stress-tested at full load with different numbers of CPUs.

-单条路由,不开启任何插件。开启不同 CPU 数量,进行满载压力测试。说明:对于 NGINX 叫 worker 数量,Envoy 是 concurrent ,为了统一后面都叫 worker 数量。

+Note: NGINX calls this the number of workers, while Envoy calls it concurrency; for consistency, we refer to both as the number of workers below.

-| **进程数** | **APISIX QPS** | **APISIX Latency** | **Envoy QPS** | **Envoy Latency** |

+| **Workers** | **APISIX QPS** | **APISIX Latency** | **Envoy QPS** | **Envoy Latency** |

| :------------ | :------------- | :----------------- | :------------ | :---------------- |

| **1 worker** | 18608.4 | 0.96 | 15625.56 | 1.02 |

| **2 workers** | 34975.8 | 1.01 | 29058.135 | 1.09 |

| **3 workers** | 52334.8 | 1.02 | 42561.125 | 1.12 |

-注:原始数据公开在 [gist](https://gist.github.com/aifeiasdf/9fc4585f6404e3a0a70c568c2a14b9c9) 预览。

+Note: The raw data is publicly available in this [gist](https://gist.github.com/aifeiasdf/9fc4585f6404e3a0a70c568c2a14b9c9).

-QPS:每秒钟完成的请求数,数量越多越好,数值越大代表单位时间内可以完成的请求数量越多。从 QPS 结果看,APISIX 性能是 Envoy 的 120% 左右,核心数越多 QPS 差距越大。

+QPS: the number of requests completed per second. Higher is better, since a higher value means more requests are completed per unit of time. From the QPS results, Apache APISIX reaches about 120% of Envoy’s throughput, and the gap grows with the number of cores.

-Latency:每请求的延迟时间,数值越小越好。它代表每请求从发出后需要经过多长时间可以接收到应答。对于反向代理场景,该数值越小,对请求的影响也就最小。从结果上看,Envoy 的每请求延迟要比 APISIX 多 6-10% ,核心数量越多延迟越大。

+Latency: the delay of each request. Lower is better. It measures how long it takes from sending a request until its response is received; in reverse proxy scenarios, the lower this value, the smaller the impact on the request. From the results, Envoy’s per-request latency is 6–10% higher than that of Apache APISIX, and latency grows as the number of cores increases.
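The percentages quoted above can be re-derived from the results table. The following Python sketch copies the table's values as-is (the table does not state the latency unit, so none is assumed) and computes the QPS ratio, Envoy's extra latency, and each product's speed-up relative to one worker, which is also a quick way to check test method 2's linear-scaling question.

```python
# Values copied verbatim from the results table (latency in the table's own unit).
RESULTS = {
    # workers: (apisix_qps, apisix_latency, envoy_qps, envoy_latency)
    1: (18608.4, 0.96, 15625.56, 1.02),
    2: (34975.8, 1.01, 29058.135, 1.09),
    3: (52334.8, 1.02, 42561.125, 1.12),
}


def compare(results):
    """Derive per-worker-count ratios from the raw table values."""
    base_apisix = results[1][0]
    base_envoy = results[1][2]
    rows = []
    for workers, (a_qps, a_lat, e_qps, e_lat) in sorted(results.items()):
        rows.append({
            "workers": workers,
            # APISIX throughput as a multiple of Envoy's throughput
            "qps_ratio": a_qps / e_qps,
            # How much higher Envoy's latency is, relative to APISIX's
            "envoy_extra_latency": e_lat / a_lat - 1,
            # Speed-up versus one worker; perfectly linear scaling equals `workers`
            "apisix_scaling": a_qps / base_apisix,
            "envoy_scaling": e_qps / base_envoy,
        })
    return rows


if __name__ == "__main__":
    for row in compare(RESULTS):
        print(
            f"{row['workers']} worker(s): QPS ratio {row['qps_ratio']:.1%}, "
            f"Envoy extra latency {row['envoy_extra_latency']:.1%}, "
            f"scaling APISIX x{row['apisix_scaling']:.2f} / Envoy x{row['envoy_scaling']:.2f}"
        )
```

Applied to the table, this gives QPS ratios of roughly 119%, 120%, and 123%, Envoy latency about 6%, 8%, and 10% higher, and 3-worker speed-ups of about 2.81x for APISIX versus 2.72x for Envoy (perfectly linear scaling would be 3x).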

-可以看到两者在单工作线程|进程的模式下,QPS 和 Latency 两个指标差距不大,但是随着工作线程|进程的增加他们的差距逐渐放大,这里我分析可能有以下两方面的原因,NGINX 在高并发场景下用多 worker 和系统的 IO 模型进行交互是不是会更有优势,另外一方面,也可能是 NGINX 自身在实现上面对内存和 CPU 的使用比较“抠门”,这样累积起来的性能优势,以后详细评估评估。

+In single-worker (thread|process) mode, the gap between the two in QPS and latency is small, but it gradually widens as the number of threads|processes increases. I think there may be two reasons: first, in high-concurrency scenarios, NGINX’s use of multiple workers interacting with the system’s IO model may be more advantageous; second, NGINX itself may be more “stingy” in terms of memory and CP [...]

-#### 总结

+## Summary

-总体来说 APISIX 在响应延迟和 QPS 层面都略优于 Envoy, 由于 NGINX 的多 worker 的协作方式在高并发场景下更有优势,得益于此,APISIX 在开启多个 worker 进程后性能提升较 Enovy 更为明显;但是两者并不冲突, Envoy 的总线设计使它在处理东西向流量上有独特的优势, APISIX 在性能和延迟上的表现使它在处理南北向流量上具有海量的吞吐能力,根据自己的业务场景来选择合理的组件配合插件构建自己的服务才是正解。

+In general, Apache APISIX is slightly better than Envoy in both response latency and QPS. Because NGINX’s multi-worker collaboration model is more advantageous in high-concurrency scenarios, Apache APISIX’s performance improves more noticeably than Envoy’s when multiple worker processes are enabled. However, the two do not conflict: Envoy’s bus design gives it a unique advantage in handling east-west traffic, while the performance and latency of Apache APISIX give it a massive throughput capability i [...]

+Apache APISIX

diff --git a/website/blog/2021/06/30/etcd3-support-HTTP-access-perfectly.md b/website/blog/2021/06/30/etcd3-support-HTTP-access-perfectly.md

index 8ffa80e..27c6a5c 100644

--- a/website/blog/2021/06/30/etcd3-support-HTTP-access-perfectly.md

+++ b/website/blog/2021/06/30/etcd3-support-HTTP-access-perfectly.md

@@ -1,6 +1,6 @@

---

-title: "差之毫厘:etcd 3 完美支持 HTTP 访问?"

-author: "罗泽轩"

+title: "Does etcd 3 Support HTTP Access Perfectly?"

+author: "Zexuan Luo"

authorURL: "https://github.com/spacewander"

authorImageURL: "https://avatars.githubusercontent.com/u/4161644?v=4"

keywords:

@@ -8,80 +8,78 @@ keywords:

- etcd

- HTTP

- gRPC

-Description: 从去年 10 月发布 Apache APISIX 2.0 版本以来,现在已经过去了 8 个月。在实践过程中,我们也发现了 etcd 的 HTTP API 的一些跟 gRPC API 交互的问题。事实上,拥有 gRPC-gateway 并不意味着能够完美支持 HTTP 访问,这里还是有些细微的差别。

+Description: It has been 8 months since Apache APISIX 2.0 was released last October. In practice, we have also found some issues in how etcd's HTTP API interacts with the gRPC API. In fact, having a gRPC-gateway does not mean that HTTP access is perfectly supported; there are still some subtle differences.

tags: [Technology]

---

-> 从去年 10 月发布 Apache APISIX 2.0 版本以来,现在已经过去了 8 个月。在实践过程中,我们也发现了 etcd 的 HTTP API 的一些跟 gRPC API 交互的问题。事实上,拥有 gRPC-gateway 并不意味着能够完美支持 HTTP 访问,这里还是有些细微的差别。

+> It has been 8 months since Apache APISIX 2.0 was released last October. In practice, we have also found some issues in how etcd's HTTP API interacts with the gRPC API. In fact, having a gRPC-gateway does not mean that HTTP access is perfectly supported; there are still some subtle differences.

<!--truncate-->

-etcd 升级到 3.x 版本后,其对外 API 的协议从普通的 HTTP1 切换到了 gRPC。为了兼顾那些不能使用 gRPC 的特殊群体,etcd 通过 gRPC-gateway 的方式代理 HTTP1 请求,以 gRPC 形式去访问新的 gRPC API。(由于 HTTP1 念起来太过拗口,以下将之简化成 HTTP,正好和 gRPC 能够对应。请不要纠结 gRPC 也是 HTTP 请求的这种问题。)

+After etcd was upgraded to 3.x, its external API protocol switched from plain HTTP1 to gRPC. To accommodate users who cannot use gRPC, etcd proxies HTTP1 requests through gRPC-gateway, which then calls the new gRPC API over gRPC. (Since “HTTP1” is a mouthful, it is shortened to HTTP below, which pairs nicely with gRPC. Please don’t get hung up on the fact that gRPC also runs over HTTP.)

-Apache APISIX 开始用 etcd 的时候,用的是 etcd v2 的 API。从 Apache APISIX 2.0 版本起,我们把依赖的 etcd 版本升级到 3.x。由于 Lua 生态圈里面没有 gRPC 库,所以 etcd 对 HTTP 的兼容帮了我们很大的忙,这样就不用花很大心思去补这个短板了。

+When Apache APISIX started using etcd, we used the etcd v2 API. Since Apache APISIX 2.0, we have upgraded our etcd dependency to 3.x. Because the Lua ecosystem has no gRPC library, etcd’s HTTP compatibility has helped us a lot: we didn’t have to go to a lot of trouble to fill that gap.
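To make the HTTP path concrete, here is a minimal Python sketch of a prefix read through etcd's gRPC-gateway. It assumes the `/v3/kv/range` JSON endpoint of etcd 3.4 and later (older releases used a `/v3beta` prefix), the default client port 2379, and the `/apisix` key prefix; `bytes` fields such as `key` and `range_end` must be base64-encoded in the JSON body. The request is only built, not sent, and this is not how Apache APISIX's own Lua client is implemented.

```python
import base64
import json
import urllib.request


def prefix_range_end(prefix: bytes) -> bytes:
    """Smallest key greater than every key that starts with `prefix`.

    Follows the rule etcd clients use for prefix queries: increment the last
    byte that is below 0xff and drop everything after it.
    """
    end = bytearray(prefix)
    for i in range(len(end) - 1, -1, -1):
        if end[i] < 0xFF:
            end[i] += 1
            return bytes(end[: i + 1])
    # Every byte is 0xff: b"\x00" asks etcd for all keys >= key.
    return b"\x00"


def b64(data: bytes) -> str:
    # The gRPC-gateway JSON mapping encodes protobuf `bytes` fields as base64.
    return base64.b64encode(data).decode("ascii")


def build_range_request(endpoint: str, prefix: bytes) -> urllib.request.Request:
    """Build (but do not send) a v3 KV range request for all keys under `prefix`."""
    body = {"key": b64(prefix), "range_end": b64(prefix_range_end(prefix))}
    return urllib.request.Request(
        url=f"{endpoint}/v3/kv/range",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_range_request("http://127.0.0.1:2379", b"/apisix")
    print(req.get_method(), req.full_url, req.data.decode())
```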

-从去年 10 月发布 Apache APISIX 2.0 版本以来,现在已经过去了 8 个月。在实践过程中,我们也发现了 etcd 的 HTTP API 的一些跟 gRPC API 交互的问题。事实上,拥有 gRPC-gateway 并不意味着能够完美支持 HTTP 访问,这里还是有些细微的差别。

+It has been 8 months since Apache APISIX 2.0 was released last October. In practice, we have also found some issues in how etcd’s HTTP API interacts with the gRPC API. In fact, having a gRPC-gateway does not mean that HTTP access is perfectly supported; there are still some subtle differences.

-### 打破 gRPC 的默认限制

+## Breaking the Default Restrictions of gRPC

-就在几天前,etcd 发布了 v3.5.0 版本。这个版本的发布,了却困扰我们很长时间的一个问题。

+Just a few days ago, etcd released v3.5.0. This release solves a problem that had been bothering us for a long time.

-跟 HTTP 不同的是,gRPC 默认限制了一次请求可以读取的数据大小。这个限制叫做 “MaxCallRecvMsgSize”,默认是 4MiB。当 Apache APISIX 全量同步 etcd 数据时,假如配置够多,就会触发这一上限,报错 “grpc: received message larger than max”。

+Unlike HTTP, gRPC limits by default the size of data that can be read in one request. This limit is called “MaxCallRecvMsgSize” and defaults to 4MiB. When Apache APISIX performs a full synchronization of etcd data and there is enough configuration, this limit is hit and the error “grpc: received message larger than max” is reported.

-神奇的是,如果你用 etcdctl 去访问,这时候却不会有任何问题。这是因为这个限制是可以在跟 gRPC server 建立连接时动态设置的,etcdctl 给这个限制设置了一个很大的整数,相当于去掉了这一限制。

+Curiously, if you access etcd with etcdctl, there is no problem at all. This is because the limit can be set dynamically when establishing a connection to the gRPC server, and etcdctl sets it to a very large integer, which effectively removes the limit.

-由于不少用户碰到过同样的问题,我们曾经讨论过对策。

+Since many users have run into the same problem, we discussed possible solutions.

+One idea was to use incremental synchronization to simulate full synchronization, which has two drawbacks:

-一个想法是用增量同步模拟全量同步,这么做有两个弊端:

+1. It is complicated to implement and requires a lot of code changes.

+2. It would extend the time required for synchronization.

-1. 实现起来复杂,要改不少代码

-2. 会延长同步所需的时间
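The first idea, simulating a full sync with a series of smaller reads, can be sketched in Python. This is a hypothetical model, not APISIX or etcd code: a plain dict stands in for etcd, each loop iteration plays the role of one range request with a key limit, and the size check ignores protobuf framing. It shows why such a workaround stays under the 4 MiB default while costing extra round trips (drawback 2 above).

```python
# gRPC's default MaxCallRecvMsgSize is 4 MiB.
MAX_CALL_RECV_MSG_SIZE = 4 * 1024 * 1024


def fits_in_one_response(items, limit=MAX_CALL_RECV_MSG_SIZE):
    """Rough estimate: do all key/value bytes fit under the limit?

    Ignores protobuf framing and metadata, so real responses are a bit larger.
    """
    return sum(len(k) + len(v) for k, v in items) <= limit


def paged_full_sync(store, prefix, page_size):
    """Read every key under `prefix` in pages of at most `page_size` keys.

    `store` is a dict standing in for etcd. Each loop iteration models one
    range request with a limit; `more` models the flag saying keys remain.
    Returns (snapshot, number_of_requests).
    """
    if page_size < 1:
        raise ValueError("page_size must be at least 1")
    keys = sorted(k for k in store if k.startswith(prefix))
    snapshot, start, requests = {}, prefix, 0
    while True:
        remaining = [k for k in keys if k >= start]
        page, more = remaining[:page_size], len(remaining) > page_size
        requests += 1
        for k in page:
            snapshot[k] = store[k]
        if not more:
            return snapshot, requests
        # The next request starts just after the last key received.
        start = page[-1] + b"\x00"


if __name__ == "__main__":
    store = {b"/apisix/routes/%d" % i: b"{}" for i in range(10)}
    snapshot, requests = paged_full_sync(store, b"/apisix", page_size=3)
    print(len(snapshot), "keys in", requests, "requests")
```

With ten keys and a page size of 3, the sync needs 4 requests instead of 1; every extra page is another round trip.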

-

-另一个想法是修改 etcd。既然能够在 etcdctl 里面去除限制,为什么不对 gRPC-gateway 一视同仁呢?同样的改动可以作用在 gRPC-gateway 上。

-

-我们采用了第二种方案,给 etcd 提了个 PR:https://github.com/etcd-io/etcd/pull/13077

+Another idea was to modify etcd. If the limit can be removed in etcdctl, why not treat gRPC-gateway the same way? The same change can be applied to gRPC-gateway.

+We adopted the second option and submitted a PR to etcd: [PR #13077](https://github.com/etcd-io/etcd/pull/13077).

-最新发布的 v3.5.0 版本就包含了我们贡献的这个改动。如果你遇到 “grpc: received message larger than max”,不妨试一下这个版本。这一改动也被 etcd 开发者 backport 到 3.4 分支上了。3.4 分支的下一个发布,也会带上这个改动。

+The newly released v3.5.0 includes the change we contributed. If you encounter “grpc: received message larger than max”, try this version. The etcd developers have also backported this change to the 3.4 branch, so the next 3.4 release will include it as well.

-这件事也说明 gRPC-gateway 并非百试百灵。即使用了它,也不能保证 HTTP 访问能够跟 gRPC 访问有一样的体验。

+This incident also shows that gRPC-gateway is not foolproof. Even with it, there is no guarantee that HTTP access will have the same experience as gRPC access.

-### 对服务端证书的有趣用法

+## Interesting Usage of Server-side Certificates

-Apache APISIX 增加了对 etcd mTLS 的支持后,有用户反馈一直没法完成校验,而用 etcdctl 访问则是成功的。在跟用户交流后,我决定拿他的证书来复现下。

+After Apache APISIX added support for etcd mTLS, some users reported that verification kept failing, while accessing etcd with etcdctl succeeded. After talking to one of them, I decided to reproduce the issue with his certificate.

-在复现过程中,我注意到 etcd 日志里面有这样的报错:

+While reproducing the issue, I noticed this error in the etcd log:

``` text

2021-06-09 11:10:13.022735 I | embed: rejected connection from "127.0.0.1:50898" (error "tls: failed to verify client's certificate: x509: certificate specifies an incompatible key usage", ServerName "")

WARNING: 2021/06/09 11:10:13 grpc: addrConn.createTransport failed to connect to {127.0.0.1:12379 0 }. Err :connection error: desc = "transport: authentication handshake failed: remote error: tls: bad certificate". Reconnecting...

```

-“bad certificate” 错误信息,初看像是因为我们发给 etcd 的客户端证书不对。但仔细瞧瞧,会发现这个报错是在 gRPC server 里面报的。

+At first glance, the “bad certificate” error message suggests that we sent the wrong client certificate to etcd. But on closer inspection, you will see that this error is reported inside the gRPC server.

-gRPC-gateway 在 etcd 里面起到一个代理的作用,把外面的 HTTP 请求变成 gRPC server 能处理的 gRPC 请求。

+The gRPC-gateway acts as a proxy inside etcd, turning outside HTTP requests into gRPC requests that the gRPC server can handle.

-大体架构如下:

+The general architecture is as follows:

```text

etcdctl ----> gRPC server

Apache APISIX ---> gRPC-gateway ---> gRPC server

```

-为什么 etcdctl 直连 gRPC server 能通,而中间加一层 gRPC-gateway 就不行?

+Why does etcdctl succeed when it connects directly to the gRPC server, while adding a gRPC-gateway in between fails?

-原来当 etcd 启用了客户端证书校验之后,用 gRPC-gateway 连接 gRPC server 就需要提供一个客户端证书。猜猜这个证书从哪来?

+It turns out that when etcd enables client certificate verification, gRPC-gateway must present a client certificate when it connects to the gRPC server. Guess where this certificate comes from?

-etcd 把配置的服务端证书直接作为这里的客户端证书用了。

+etcd uses the configured server-side certificate directly as the client-side certificate here.

-一个证书既在服务端上提供验证,又在客户端上表明身份,看上去也没什么问题。除非……

+Using one certificate both to authenticate the server and to identify the client doesn’t seem to be a problem, unless the certificate enables the server auth extension but not client auth. Run the following command on the problematic certificate:

-除非证书上启用了 server auth 的拓展,但是没有启用 client auth。

-

-对有问题的证书执行`openssl x509 -text -noout -in /tmp/bad.crt`

+```shell

+openssl x509 -text -noout -in /tmp/bad.crt

+```

-会看到这样的输出:

+You will see output like this:

```text

X509v3 extensions:

@@ -91,14 +89,15 @@ X509v3 Extended Key Usage:

TLS Web Server Authentication

```

-注意这里的 “TLS Web Server Authentication”,如果我们把它改成 “TLS Web Server Authentication, TLS Web Client Authentication”,抑或不加这个拓展,就没有问题了。

+Note the “TLS Web Server Authentication” here. If we change it to “TLS Web Server Authentication, TLS Web Client Authentication”, or omit this extension entirely, the problem goes away.
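The rule just described (no Extended Key Usage extension is fine; if the extension is present, it must include client authentication) can be checked mechanically. This Python sketch parses the text printed by `openssl x509 -text -noout` and assumes openssl's usual layout, in which the usage names appear on the line after the `X509v3 Extended Key Usage` header. It is a diagnostic aid, not a replacement for real certificate validation.

```python
def allows_client_auth(openssl_text: str) -> bool:
    """Decide from `openssl x509 -text` output whether a cert may act as a TLS client.

    No Extended Key Usage extension: any usage is allowed.
    Extension present: it must list "TLS Web Client Authentication".
    """
    lines = [line.strip() for line in openssl_text.splitlines()]
    for i, line in enumerate(lines):
        # The header may carry a suffix such as ": critical".
        if line.startswith("X509v3 Extended Key Usage"):
            usages = lines[i + 1].split(",") if i + 1 < len(lines) else []
            return "TLS Web Client Authentication" in [u.strip() for u in usages]
    return True


# Hypothetical excerpt shaped like the output shown above.
SAMPLE_SERVER_ONLY = """X509v3 extensions:
    X509v3 Extended Key Usage:
        TLS Web Server Authentication
"""

if __name__ == "__main__":
    print(allows_client_auth(SAMPLE_SERVER_ONLY))  # False
```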

-etcd 上也有关于这个问题的 issue:https://github.com/etcd-io/etcd/issues/9785

+There is also an issue about this problem in etcd’s repository: [Issue #9785](https://github.com/etcd-io/etcd/issues/9785).

-### 结语

+## Summary

-虽然我们在上文列出了几点小问题,但是瑕不掩瑜,etcd 对 HTTP 访问的支持还是一个非常有用的特性。

+Although we have listed a few minor issues above, etcd’s support for HTTP access is still a very useful feature.

-感谢 Apache APISIX 的用户们,正是因为我们有着广阔的用户群,才能发现 etcd 的这些细节上的问题。我们作为 etcd 的一大用户,在之后的日子里也将一如既往地跟 etcd 的开发者多多交流。

+Thanks to the users of Apache APISIX: it is because we have such a broad user base that we were able to discover these subtle issues in etcd. As a major user of etcd, we will continue to communicate closely with the etcd developers in the future.

diff --git a/website/blog/2021/07/14/the-road-to-customization-of-Sina-Weibo-API-gateway-based-on-Apache-APISIX.md b/website/blog/2021/07/14/the-road-to-customization-of-Sina-Weibo-API-gateway-based-on-Apache-APISIX.md

index 614ddd8..789d038 100644

--- a/website/blog/2021/07/14/the-road-to-customization-of-Sina-Weibo-API-gateway-based-on-Apache-APISIX.md

+++ b/website/blog/2021/07/14/the-road-to-customization-of-Sina-Weibo-API-gateway-based-on-Apache-APISIX.md

@@ -1,207 +1,207 @@

---

-title: "基于 Apache APISIX,新浪微博 API 网关的定制化开发之路"

-author: "聂永"

+title: "The Road to Customized Development of Sina Weibo's API Gateway Based on Apache APISIX"

+author: "Yong Nie"

keywords:

- Apache APISIX

-- 新浪微博

+- Sina

- Weibo

+- User Case

- API Gateway

-description: 微博之前的 HTTP API 网关基于 NGINX 搭建,所有路由规则存放在 NGINX conf 配置文件中,带来一系列问题:升级步骤长,对服务增、删、改或跟踪问题时,不够灵活且难以排查问题。经过一番调研之后,我们选择了最接近预期、基于云原生的微服务 API 网关:Apache APISIX,借助其动态、高效、稳定等特性以满足业务的快速响应要求。

+description: Sina Weibo’s previous HTTP API gateway was built on Nginx, which caused a series of problems. After some research, we chose Apache APISIX, which is dynamic, efficient, and stable, to meet the business's need for fast response.

tags: [User Case]

---

-> 新浪微博之前的 HTTP API 网关基于 NGINX 搭建,所有路由规则存放在 NGINX conf 配置文件中,带来一系列问题:升级步骤长,对服务增、删、改或跟踪问题时,不够灵活且难以排查问题。经过一番调研之后,我们选择了最接近预期、基于云原生的微服务 API 网关:Apache APISIX,借助其动态、高效、稳定等特性以满足业务的快速响应要求。

+> Sina Weibo’s previous HTTP API gateway was built on Nginx, which caused a series of problems. After some research, we chose Apache APISIX, which is dynamic, efficient, and stable, to meet the business's need for fast response.

<!--truncate-->

-微博之前的 HTTP API 网关基于 NGINX 搭建,所有路由规则存放在 NGINX conf 配置文件中,带来一系列问题:升级步骤长,对服务增、删、改或跟踪问题时,不够灵活且难以排查问题。经过一番调研之后,我们选择了最接近预期、基于云原生的微服务 API 网关:Apache APISIX,借助其动态、高效、稳定等特性以满足业务的快速响应要求。

+Sina Weibo’s previous HTTP API gateway was built on Nginx, and all routing rules were stored in Nginx configuration files. This caused a series of problems: the upgrade process was long, and adding, deleting, or changing services, or tracking down issues, was inflexible and hard to troubleshoot. After some research, we chose the cloud-native microservice API gateway that came closest to our expectations, Apache APISIX, whose dynamic, efficient, and stable features meet the business’s need for fast response.

-## 1 背景说明

+## Background Information

-在微博,运维同学要创建一个 API 服务,他需要先在 nginx conf 配置文件里面写好,提交到 git 代码仓库里面去,等其它负责上线的运维同学 CheckOut 之后,确认审核成功,才能它们推送部署到线上去,继而粗暴通知 NGINX 重新加载,这才算服务变更成功。

+At Sina Weibo, an operations engineer who wants to create an API service must first write it into the Nginx configuration file and submit it to the git repository. Other operations engineers responsible for releases then check it out and confirm that the review has passed before pushing it to production, and finally bluntly tell Nginx to reload. Only then is the service change complete.

-整个处理流程较长,效率较低,无法满足低代码化的 DevOps 运维趋势。因此,我们期待有一个管理后台入口,运维同学在 UI 界面上就可以操作所有的 http api 路由等配置。

+The whole process is long and inefficient, and cannot keep up with the low-code DevOps trend. We therefore wanted a management console where operations engineers could manage all HTTP API routes and other configuration through the UI.

-

+

-经过一番调研之后,我们选择了最接近期盼的基于云原生的微服务 API 网关:Apache APISIX。比较看重的点,有这么几个:

+After some research, we chose Apache APISIX, the cloud-native microservice API gateway closest to our expectations. The points we valued most were:

-1. 基于 NGINX ,技术栈前后统一,后期灰度升级、安全、稳定性等有保障;

-2. 内置统一控制面,多台代理服务统一管理;

-3. 动态 API 调用,即可完成常见资源的修改实时生效,相比传统 NGINX 配置 + reload 方式进步明显;

-4. 路由选项丰富,满足微博路由需求;

-5. 较好扩展性,支持 Consul kv 难度不大;

-6. 性能表现也不错。

+1. It is based on Nginx, so the technology stack stays consistent, and later grayscale upgrades, security, and stability are assured.

+1. A built-in unified control plane that manages multiple proxy services centrally.

+1. Dynamic API calls: changes to common resources take effect in real time, a clear improvement over the traditional Nginx configuration + reload approach.

+1. Rich routing options that meet Sina Weibo’s routing needs.

+1. Good extensibility; adding Consul KV support is not difficult.

+1. Good performance.

-

+

-## 2 为什么选择定制化开发

+## Why Did We Choose Custom Development?

-实际业务情况下,我们是没办法直接使用 Apache APISIX 的,原因有以下几点:

+In practice, we could not use Apache APISIX directly, for the following reasons:

-1. Apache APISIX 不支持 SaaS 多租户,实际需要运维的业务线上层应用有很多,每个业务线的开发或运维同学只需要管理维护自己的各种 rules、upstreams 等规则,彼此之间不相关联;

-2. 当把路由规则发布到线上后,如果出现问题则需要快速的回滚支持;

-3. 当新建或者编辑现有的路由规则时,我们不太放心直接发布到线上,这时就需要它能够支持灰度发布到指定网关实例上,用于仿真或局部测试;

-4. 需要 API 网关能够支持 Consul KV 方式的服务注册和发现机制;

+1. Apache APISIX does not support SaaS multi-tenancy. In practice, there are many upper-layer business-line applications to operate, and the developers or operations engineers of each business line only need to manage and maintain their own routing rules, upstreams, and so on, independently of the other business lines.

+1. When routing rules are published to production and problems arise, we need to be able to roll them back quickly.

+1. When creating or editing routing rules, we are not comfortable publishing them straight to production, so we need grayscale publishing to specified gateway instances for simulation or partial testing.

+1. The API gateway needs to support Consul KV-based service registration and discovery.

-上述这些需求目前 Apache APISIX 都没有内置支持,所以只能通过定制开发才能让 Apache APISIX 真正在微博内部使用起来。

+Apache APISIX currently has no built-in support for any of these requirements, so custom development was the only way to make Apache APISIX truly usable inside Weibo.

-## 3 在 Apache APISIX 的控制面,我们改了些什么

+## What Did We Change in the Control Plane of Apache APISIX?

-我们定制开发时,使用的 Apache APISIX 1.5 版本,Dashboard 也是和 1.5 相匹配的。

+For our custom development, we used Apache APISIX 1.5 together with the matching version of Apache APISIX Dashboard.

-定制开发的目标简单清晰,即完全零代码、UI 化,所有七层 HTTP API 服务的创建、编辑、更新、上下线等所有行为都必须在 Dashboard 上面完成。因此实际环境下,我们禁止开发和运维同学直接调用 APISIX Admin API,假如略过 Dashboard 直接调用 APISIX Admin API,就会导致网关操作没办法在 UI 层面上审计,无法走工作流,自然也就没有多少安全性可言。

+The goal of the custom development is simple and clear: fully zero-code and UI-driven. Every action on Layer 7 HTTP API services (creating, editing, updating, bringing online and offline, and so on) must be performed in the Dashboard. Therefore, in production, we forbid developers and operations engineers from calling the APISIX Admin API directly. Skipping the Dashboard and calling the APISIX Admin API directly would mean gateway operations could not be audited at the UI level, so we ca [...]

-有一种情况稍微特殊,运维需要调用 API 完成服务的批量导入等,可以调用 H5 Dashboard 的 API 来完成,从而遵守统一的工作流。

+There is one slightly special case: when operations needs to call an API for tasks such as bulk-importing services, it can call the H5 Dashboard’s API instead, so that the unified workflow is still followed.

-### 3.1 支持 SaaS 化服务

+### Support SaaS-based Services

-企业层面有完整的产品线、业务线数据库,每一个具体产品线、业务线可使用一个 saas_id 值表示。然后在创建网关配置数据插入 ETCD 之前,塞入一个 saas_id 值,在逻辑属性上所有的数据便有了 SaaS 归属。

+At the enterprise level there is a complete database of product lines and business lines, and each product line or business line can be represented by a saas_id value. Before gateway configuration data is created and inserted into etcd, a saas_id value is added to it, so that all data logically belongs to a SaaS tenant.
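A minimal Python sketch of the saas_id tagging idea, assuming configurations are JSON-like dicts and that the tag is a top-level `saas_id` field (the text does not say where Weibo actually stores it). Tagging happens before the object is written to etcd, and filtering by the tag gives each product line its own view.

```python
import copy


def tag_with_saas_id(config: dict, saas_id: str) -> dict:
    """Return a copy of a route/upstream config that records its owning product line."""
    tagged = copy.deepcopy(config)  # never mutate the caller's object
    tagged["saas_id"] = saas_id     # hypothetical placement of the tenant tag
    return tagged


def configs_for(configs, saas_id):
    """Keep only the objects that belong to one product line (tenant)."""
    return [c for c in configs if c.get("saas_id") == saas_id]


if __name__ == "__main__":
    routes = [
        tag_with_saas_id({"uri": "/feed/*"}, "feed"),
        tag_with_saas_id({"uri": "/video/*"}, "video"),
    ]
    print(configs_for(routes, "feed"))
```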

-用户、角色和实际操作的产品线就有了如下对应关联:

+Users, roles, and the product lines they operate are then associated as follows:

-

+

-一个用户可以被分派承担不同运维角色去管理维护不同的产品线服务。

+A user can be assigned different operations roles to manage and maintain the services of different product lines.

-管理员角色很好理解,操作维护服务核心角色,针对服务增 / 删 / 改 / 查等;除此之外,我们还有只读用户的概念,只读用户一般是用于查看服务配置、查看工作流、调试等等。

+The administrator role is easy to understand: it is the core role for operating services, covering adding, deleting, updating, and querying them. In addition, we have read-only users, who are generally used for viewing service configurations, viewing workflows, debugging, and so on.
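The user/role/product-line relationship can be sketched as a lookup keyed by (user, saas_id). The role names and the action set below are hypothetical, chosen only to mirror the administrator and read-only roles described in the text.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "admin"          # add / delete / update / query services
    READ_ONLY = "read_only"  # view configs and workflows, debug


# Hypothetical action names; the text describes the two roles only informally.
ALLOWED_ACTIONS = {
    Role.ADMIN: {"create", "delete", "update", "read"},
    Role.READ_ONLY: {"read"},
}


def can(assignments, user, saas_id, action):
    """`assignments` maps (user, saas_id) -> Role, so one user can hold
    different roles on different product lines."""
    role = assignments.get((user, saas_id))
    return role is not None and action in ALLOWED_ACTIONS[role]


if __name__ == "__main__":
    assignments = {("alice", "feed"): Role.ADMIN, ("alice", "video"): Role.READ_ONLY}
    print(can(assignments, "alice", "video", "update"))  # False
```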

-### 3.2 新增路由发布审核工作流

+### Add a Route Publishing Review Workflow

-

+

-在开源版本中,创建或修改完一个路由之后就可以直接发布。

+In the open source version, a route can be published directly after it is created or modified.

-而在我们的定制版本中,路由创建或修改之后,还需要经过审核工作流处理之后才能发布,处理流程虽然有所拉长,但我们认为在企业层面审核授权后的发布行为才更可信。

+In our custom version, after a route is created or modified, it must pass through a review workflow before it can be published. This lengthens the process, but we believe publishing is more trustworthy once it has been reviewed and authorized at the enterprise level.

-

+

-在创建路由规则时,默认情况下必须要经过审核。为了兼顾效率,新服务录入的时候,可选择免审、快速发布通道,直接点击发布按钮。

+Newly created routing rules must be reviewed by default. For efficiency, when entering a new service, you can choose the review-free fast-publishing channel and click the publish button directly.
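The review workflow and the review-free fast channel can be modeled as a small state machine. This Python sketch is hypothetical (state names and methods are illustrative, not Weibo's Dashboard code): by default a change must be approved before it can be published, while `skip_review=True` models the fast-publishing channel.

```python
class RouteChange:
    """Hypothetical lifecycle of one route change in a review workflow."""

    def __init__(self, route_id: str, skip_review: bool = False):
        self.route_id = route_id
        # skip_review=True models the review-free "fast publish" channel
        self.skip_review = skip_review
        self.state = "draft"

    def _require(self, expected: str, action: str) -> None:
        if self.state != expected:
            raise ValueError(f"cannot {action} route {self.route_id} in state {self.state!r}")

    def submit(self) -> None:
        self._require("draft", "submit")
        self.state = "approved" if self.skip_review else "pending_review"

    def review(self, approve: bool) -> None:
        self._require("pending_review", "review")
        self.state = "approved" if approve else "rejected"

    def publish(self) -> None:
        self._require("approved", "publish")
        self.state = "published"


if __name__ == "__main__":
    change = RouteChange("route-1")
    change.submit()
    change.review(approve=True)
    change.publish()
    print(change.state)  # published
```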

-

+

-当一个重要 API 路由某次调整规则发布上线后出现问题时,可以选择该路由规则上一个版本进行快速回滚,粒度为单个路由的回滚,不会影响到其它路由规则。

+When an important API route has problems after a rule change goes live, you can select the previous version of that routing rule for a quick rollback. Rollback happens per route, so other routing rules are not affected.

-单条路由回滚内部处理流程如下图示。

+The internal processing flow of a single-route rollback is shown in the following figure.

-

+

-我们需要为单个路由的每次发布建立版本数据库存储。这样我们在审核之后进行全量发布,每发布一次会就会产生一个版本号,以及对应的完整配置数据;然后版本列表越积越多。当我们需要回滚的时候去版本列表里面选择一个对应版本回滚即可;某种意义上来讲,回滚其实是一个特殊形式的全量发布。

+We store a version record in a database for every release of a single route. After a change passes review, it is released in full, and each release generates a version number together with the corresponding complete configuration, so the version list keeps growing. To roll back, we simply select the desired version from the list. In a sense, a rollback is just a special form of full release.
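The versioning scheme lends itself to a short sketch. In this hypothetical Python model, every full release stores a numbered, complete snapshot per route, and a rollback is implemented literally as another full release of an older snapshot, so it receives a new version number and leaves other routes untouched. In-memory dicts stand in for the version database.

```python
import copy


class RouteVersions:
    """Hypothetical per-route release history with snapshot-based rollback."""

    def __init__(self):
        self._history = {}  # route_id -> list of (version, full config snapshot)
        self.live = {}      # route_id -> config currently published

    def release(self, route_id, config):
        """Full release: store a numbered snapshot and publish it."""
        history = self._history.setdefault(route_id, [])
        version = len(history) + 1
        history.append((version, copy.deepcopy(config)))
        self.live[route_id] = copy.deepcopy(config)
        return version

    def versions(self, route_id):
        return [v for v, _ in self._history.get(route_id, [])]

    def rollback(self, route_id, version):
        # A rollback is another full release of an older snapshot, so it gets
        # a new version number and touches no other route.
        for v, config in self._history.get(route_id, []):
            if v == version:
                return self.release(route_id, config)
        raise KeyError(f"route {route_id} has no version {version}")


if __name__ == "__main__":
    rv = RouteVersions()
    rv.release("r1", {"uri": "/a"})
    rv.release("r1", {"uri": "/b"})
    rv.rollback("r1", 1)
    print(rv.versions("r1"), rv.live["r1"])
```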

-### 3.3 Support Grayscale Release

+### Support Grayscale Release

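-Our custom-developed grayscale release feature differs from what the community generally understands as grayscale release, and it carries less risk than a full deployment. When a routing rule changes significantly, we can publish it to a specific, limited number of gateway instances instead of all of them. This narrows the scope of the release, lowers risk, and enables fast trial and error.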

+Our custom-developed grayscale release feature differs from what the community generally understands as grayscale release, and it carries less risk than a full deployment. When a routing rule changes significantly, we can publish it to a specific, limited number of gateway instances instead of all of them. This narrows the scope of the release, lowers risk, and enables fast trial and error.

-Although grayscale release is a low-frequency operation, there is still a state transition between it and full release.

+Although grayscale release is a low-frequency operation, there is still a state transition between it and full release.

-

+

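-When the grayscale share drops to 0%, the route is back in the full-release state. When it rises to 100%, it becomes the next full release. This is the state transition.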

+When the grayscale share drops to 0%, the route is back in the full-release state. When it rises to 100%, it becomes the next full release. This is the state transition.
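Here is a small Bash sketch of this state transition. It assumes the grayscale share is measured as the fraction of gateway instances that carry the new rule. The function name and messages are hypothetical. The code compares instance counts rather than the rounded percentage, so 1 of 200 instances still counts as grayscale.

```shell
#!/usr/bin/env bash
# gray_state <instances_with_new_rule> <total_instances>
# Hypothetical helper that names the release state of a single route.
gray_state() {
  local selected=$1 total=$2
  if (( total <= 0 || selected < 0 || selected > total )); then
    echo "invalid instance counts" >&2
    return 1
  fi
  local pct=$(( selected * 100 / total ))
  if (( selected == 0 )); then
    echo "full release: previous rule on all $total instances"
  elif (( selected == total )); then
    echo "full release: new rule on all $total instances"
  else
    echo "grayscale: new rule on $selected/$total instances (${pct}%)"
  fi
}
```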


-

+

-The screenshot above shows how specific gateway instances are selected during a grayscale release.

+The screenshot above shows how specific gateway instances are selected during a grayscale release.

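-Besides support in the admin backend, the complete grayscale release feature also requires some APIs to be exposed on the gateway instances.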

+Besides support in the admin backend, the complete grayscale release feature also requires some APIs to be exposed on the gateway instances.

-

+

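-The grayscale release API uses a fixed URI with the unified path `/admin/services/gray/{SAAS_ID}/routes`. Different HTTP methods carry different business meanings: POST creates a grayscale release, DELETE stops it, and GET views it.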

+The grayscale release API uses a fixed URI with the unified path `/admin/services/gray/{SAAS_ID}/routes`. Different HTTP methods carry different business meanings: POST creates a grayscale release, DELETE stops it, and GET views it.
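The method-to-action mapping can be captured in a tiny Bash helper. This is a hypothetical sketch: `gray_api` and its action names are invented, and only the path and the POST/DELETE/GET conventions come from the text.

```shell
#!/usr/bin/env bash
# gray_api <create|stop|view> <saas_id>
# Prints the HTTP method and path for a grayscale operation, following the
# fixed URI and method conventions described above.
gray_api() {
  local action=$1 saas_id=$2 method
  case $action in
    create) method=POST ;;
    stop)   method=DELETE ;;
    view)   method=GET ;;
    *) echo "unknown action: $action" >&2; return 1 ;;
  esac
  echo "$method /admin/services/gray/$saas_id/routes"
}
```

A caller could then run something like `read -r method path <<<"$(gray_api stop demo)"; curl -X "$method" "http://$GATEWAY$path"`. `GATEWAY` (the host and port of a gateway instance) and any authentication headers are assumptions the article does not cover.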

-#### 3.3.1 Activation Process

+#### Activation Process

-

+

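-A grayscale rule is published through an API at the gateway layer. After receiving the data, a worker process validates it, and valid data is broadcast to all worker processes via events. Once the grayscale release API has been called and the grayscale rules added, they take effect when the next request is processed.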

+A grayscale rule is published through an API at the gateway layer. After receiving the data, a worker process validates it, and valid data is broadcast to all worker processes via events. Once the grayscale release API has been called and the grayscale rules added, they take effect when the next request is processed.

-#### 3.3.2 Deactivation Process

+#### Deactivation Process

-

+

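-The deactivation process is basically the same as the activation process. The grayscale release API is called with the DELETE method, and the request is broadcast to all worker processes. Each worker receives the ID of the grayscale rule to deactivate and checks its route table. If the route exists there, the worker deletes it and then tries to restore the original route from etcd. When a grayscale release is deactivated, the route that originally existed in etcd must be restored so that normal service is not affected.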

+The deactivation process is basically the same as the activation process. The grayscale release API is called with the DELETE method, and the request is broadcast to all worker processes. Each worker receives the ID of the grayscale rule to deactivate and checks its route table. If the route exists there, the worker deletes it and then tries to restore the original route from etcd. When a grayscale release is deactivated, the route that originally existed in etcd must be restored so that normal service is not affected.
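The activation and deactivation flows can be simulated in one Bash script. This is a hypothetical model, not Weibo's implementation:

- Worker route tables are one associative array keyed by `<worker>:<route_id>`.
- The "broadcast" is a loop over worker IDs.
- `valid_rule` stands in for real validation.

It shows the key property of the deactivation path. A gray route is removed from every worker, and if the route also exists in etcd, the original is restored.

```shell
#!/usr/bin/env bash
# Simulation only: ETCD holds the persisted (non-gray) config per route,
# ROUTES models every worker's in-memory route table.
WORKERS=(0 1 2 3)
declare -A ETCD=([r1]='upstream=stable')
declare -A ROUTES=()
for w in "${WORKERS[@]}"; do ROUTES["$w:r1"]=${ETCD[r1]}; done

# Stand-in for the real validation of a grayscale rule
valid_rule() { [[ $1 == upstream=* ]]; }

# gray_start <route_id> <rule>: validate once, then broadcast to every worker
gray_start() {
  local route=$1 rule=$2 w
  valid_rule "$rule" || { echo "invalid grayscale rule" >&2; return 1; }
  for w in "${WORKERS[@]}"; do ROUTES["$w:$route"]=$rule; done
}

# gray_stop <route_id>: each worker drops the gray route, then restores from etcd
gray_stop() {
  local route=$1 w key
  for w in "${WORKERS[@]}"; do
    key="$w:$route"
    [[ -n ${ROUTES[$key]+set} ]] || continue
    unset "ROUTES[$key]"
    if [[ -n ${ETCD[$route]+set} ]]; then
      ROUTES[$key]=${ETCD[$route]}
    fi
  done
}
```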

-### 3.4 Support Fast Import

+### Support Fast Import

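-Besides creating routes on the management page, many operations engineers are more used to importing them with scripts. We have a large number of HTTP API services, and entering them manually one by one would be very time-consuming. Importing them with scripts greatly reduces the friction of migrating services.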

+Besides creating routes on the management page, many operations engineers are more used to importing them with scripts. We have a large number of HTTP API services, and entering them manually one by one would be very time-consuming. Importing them with scripts greatly reduces the friction of migrating services.

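-The management backend exposes a Go Import HTTP API. Operations engineers fill in the assigned token, SaaS ID, related UIDs, and so on in a ready-made Bash script, which imports services into the backend fairly quickly. Subsequent operations on the imported services still need to be performed in the H5 interface of the management backend.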

+The management backend exposes a Go Import HTTP API. Operations engineers fill in the assigned token, SaaS ID, related UIDs, and so on in a ready-made Bash script, which imports services into the backend fairly quickly. Subsequent operations on the imported services still need to be performed in the H5 interface of the management backend.
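Here is a sketch of such an import script, assuming a JSON-over-HTTP endpoint. The article does not document the Go Import HTTP API, so `IMPORT_URL`, the `Authorization` header, and the payload fields are all hypothetical. Only the inputs (token, SaaS ID, UIDs) come from the text.

```shell
#!/usr/bin/env bash
# Hypothetical values: the real endpoint, headers and payload schema of the
# internal import API are not described in the article.
TOKEN=${TOKEN:-changeme}
SAAS_ID=${SAAS_ID:-demo}
IMPORT_URL=${IMPORT_URL:-http://admin.example.internal/import}

# build_payload <uid> <uri> <upstream>: print one JSON import record
build_payload() {
  printf '{"saas_id":"%s","uid":"%s","uri":"%s","upstream":"%s"}\n' \
    "$SAAS_ID" "$1" "$2" "$3"
}

# import_routes: read "uid uri upstream" lines from stdin and POST each record
import_routes() {
  local uid uri upstream
  while read -r uid uri upstream; do
    [[ -z $uid || $uid == \#* ]] && continue   # skip blank lines and comments
    curl -s -X POST "$IMPORT_URL" \
      -H "Authorization: Bearer $TOKEN" \
      -H "Content-Type: application/json" \
      -d "$(build_payload "$uid" "$uri" "$upstream")"
  done
}
```

The script reads one service per line, so a list exported from existing `nginx.conf` files could be piped in with `import_routes < services.txt`.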

-

+

-## 4 What We Changed in the Apache APISIX Data Plane

+## What Did We Change in the Data Plane of Apache APISIX?

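-Custom development on the Apache APISIX data plane must follow a few code path rules. Specifically, the Apache APISIX gateway code and the custom code are stored in separate paths; the two work together, and each can be iterated independently.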

+Custom development on the Apache APISIX data plane must follow a few code path rules. Specifically, the Apache APISIX gateway code and the custom code are stored in separate paths; the two work together, and each can be iterated independently.

-

+

-### 4.1 Changes to the Installation Package

+### Modification of Installation Package

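-So when packaging, we bundle not only the custom code but also all dependencies, configuration, and so on for distribution. For the output format, we can either build a Docker image or pack everything into a tarball, as required.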

+So when packaging, we bundle not only the custom code but also all dependencies, configuration, and so on for distribution. For the output format, we can either build a Docker image or pack everything into a tarball, as required.
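Here is a minimal packaging sketch for the tarball option. It assumes a hypothetical layout in which upstream APISIX code, custom code, dependencies, and configuration sit in four sibling directories. The directory names and the `package` function are illustrative, not Weibo's actual build.

```shell
#!/usr/bin/env bash
# Hypothetical layout: upstream APISIX code and custom code live in separate
# directories so each can be iterated independently, but ship together.
BUILD_DIRS=(apisix custom deps conf)

# package <output.tar.gz>: bundle code, dependencies and config into one tarball
package() {
  local out=$1 d
  for d in "${BUILD_DIRS[@]}"; do
    [[ -d $d ]] || { echo "missing directory: $d" >&2; return 1; }
  done
  tar -czf "$out" "${BUILD_DIRS[@]}"
}
```

For the Docker option, the same four directories would be copied into an image instead of a tarball.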

-

+

-### 4.2 Custom Code Development

+### Custom Development of Code

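-Some custom modules need to be loaded first during initialization. This keeps code intrusion into Apache APISIX minimal: only the `nginx.conf` file needs to be modified.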

+Some custom modules need to be loaded first during initialization. This keeps code intrusion into Apache APISIX minimal: only the `nginx.conf` file needs to be modified.

-

+

-For example, if you need to add a `saas_id` attribute field to the upstream object, you can call the following method during initialization.

+For example, if you need to add a `saas_id` attribute field to the upstream object, you can call the following method during initialization.

-

+

-Modifications like this must be invoked in the `init_worker_by_lua_*` phase to complete initialization.

+Modifications like this must be invoked in the `init_worker_by_lua_*` phase to complete initialization.

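-Another scenario is directly rewriting the implementation of an existing module. For example, suppose a debug module's initialization logic needs to be refactored, which means rewriting its `init_worker` function.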

+Another scenario is directly rewriting the implementation of an existing module. For example, suppose a debug module's initialization logic needs to be refactored, which means rewriting its `init_worker` function.

-

+

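-The advantage of this approach is that it keeps the original API source files intact while still allowing custom rewrites of specific API logic. This reduces the cost of managing the code later and makes subsequent upgrades much easier.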

+The advantage of this approach is that it keeps the original API source files intact while still allowing custom rewrites of specific API logic. This reduces the cost of managing the code later and makes subsequent upgrades much easier.

-If you have similar needs in a production environment, you can refer to the above approach.

+If you have similar needs in a production environment, you can refer to the above approach.

-### 4.3 Support Consul KV-Based Service Discovery

+### Support Consul KV-Based Service Discovery

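-Currently, many Weibo services use Consul KV as their service registration and discovery mechanism. Apache APISIX previously did not support Consul KV-based service discovery. We therefore had to add a `consul_kv.lua` module to the gateway layer and provide UI support in the management backend, as follows.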

+Currently, many Weibo services use Consul KV as their service registration and discovery mechanism. Apache APISIX previously did not support Consul KV-based service discovery. We therefore had to add a `consul_kv.lua` module to the gateway layer and provide UI support in the management backend, as follows.

-

+

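-In the upstream list in the console, everything that has been filled in is visible at a glance. Hovering the mouse over a registered service address automatically displays the metadata of all registered nodes, which greatly simplifies the daily work of our operations engineers.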

+In the upstream list in the console, everything that has been filled in is visible at a glance. Hovering the mouse over a registered service address automatically displays the metadata of all registered nodes, which greatly simplifies the daily work of our operations engineers.

-

+

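-The `consul_kv.lua` module is fairly simple to configure at the gateway layer. It supports connections to multiple Consul clusters at the same time, a capability our actual environment requires.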

+The `consul_kv.lua` module is fairly simple to configure at the gateway layer. It supports connections to multiple Consul clusters at the same time, a capability our actual environment requires.

-

+

-This code has now been merged into the APISIX master branch and is included in version 2.4.

+This code has now been merged into the APISIX master branch and is included in version 2.4.

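-The module's process model follows a publish/subscribe pattern. Each gateway instance has one and only one process, which polls multiple Consul clusters over long-lived connections. As soon as new data arrives, that process broadcasts it to all worker processes.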

+The module's process model follows a publish/subscribe pattern. Each gateway instance has one and only one process, which polls multiple Consul clusters over long-lived connections. As soon as new data arrives, that process broadcasts it to all worker processes.
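As a rough illustration of the one-watcher, many-workers model, here is a Bash sketch built on Consul's documented HTTP API. `GET /v1/kv/<prefix>?recurse` returns all keys under a prefix. The `index` and `wait` query parameters, together with the `X-Consul-Index` response header, turn that request into a long-polling blocking query.

This is not the `consul_kv.lua` implementation. The cluster addresses, the `upstreams` prefix, and `broadcast` are assumptions. For brevity it polls clusters one after another, whereas a real watcher would query each cluster concurrently.

```shell
#!/usr/bin/env bash
# Assumptions: the cluster addresses and KV prefix below are illustrative only.
CONSUL_CLUSTERS=(http://127.0.0.1:8500 http://127.0.0.1:8501)
KV_PREFIX=upstreams
declare -A LAST_INDEX=()   # cluster -> last X-Consul-Index seen

# kv_url <cluster> <index>: blocking query for every key under KV_PREFIX
kv_url() {
  printf '%s/v1/kv/%s?recurse=true&index=%s&wait=30s\n' "$1" "$KV_PREFIX" "$2"
}

# broadcast <cluster> <payload>: stand-in for the event sent to worker processes
broadcast() { echo "update from $1: ${#2} chars"; }

# watch_clusters: the single watcher loop; broadcasts only when the index changes
watch_clusters() {
  local c hdr body idx
  while true; do
    for c in "${CONSUL_CLUSTERS[@]}"; do
      hdr=$(mktemp)
      body=$(curl -s -D "$hdr" "$(kv_url "$c" "${LAST_INDEX[$c]:-0}")") \
        || { rm -f "$hdr"; sleep 1; continue; }
      idx=$(awk 'tolower($1) == "x-consul-index:" { print $2 }' "$hdr" | tr -d '\r')
      rm -f "$hdr"
      if [[ -n $idx && $idx != "${LAST_INDEX[$c]:-0}" ]]; then
        LAST_INDEX[$c]=$idx
        broadcast "$c" "$body"
      fi
    done
  done
}
```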

-## 5 Some Thoughts on the Customization Process

+## Problems Encountered during Customization

-### 5.1 High Migration Costs

+### High Costs for Migration

-At the operations level, we actually face one problem: the cost of migration.

+At the operations level, we actually face one problem: the cost of migration.

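-Whenever something new arrives to replace an existing foundation, adoption is never smooth. It takes time to become familiar with it and build understanding, then to keep trying, making mistakes, and moving forward slowly, gradually dispelling everyone's doubts. Only after it has run stably for a while and the various problems have been solved does the next, faster replacement phase begin. Without doubt, APISIX adoption at Weibo is still in this gradual phase. We are still getting familiar with it, learning, and deepening our understanding, while solving all kinds of migration problems in search of best practices.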

+Whenever something new arrives to replace an existing foundation, adoption is never smooth. It takes time to become familiar with it and build understanding, then to keep trying, making mistakes, and moving forward slowly, gradually dispelling everyone's doubts. Only after a period of stable operation, once the various problems have been solved, does the next, faster replacement phase begin. There is no doubt that the use of APISIX in Weibo is still in the s [...]

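-For example, during migration, the upstreams, routes, and other rules in the `nginx.conf` file must be imported into the gateway's management backend one by one. This is a very tedious manual process, so we developed a fast import interface, with features such as one-click entry via a Bash script, to simplify it.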

+For example, during migration, the upstreams, routes, and other rules in the `nginx.conf` file must be imported into the gateway's management backend one by one. This is a very tedious manual process, so we developed a fast import interface, with features such as one-click entry via a Bash script, to simplify it.

-

+

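-We also run into all kinds of complex NGINX variable conditionals. For now, we mainly handle them one at a time as we find them, accumulating experience along the way.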

+We also run into all kinds of complex NGINX variable conditionals. For now, we mainly handle them one at a time as we find them, accumulating experience along the way.

-### 5.2 A High Degree of Customization Raises Upgrade Costs

+### High Costs for Upgrades

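-We have done a lot of custom development on both Apache APISIX and Apache APISIX Dashboard, which makes upgrading to the latest Dashboard rather difficult. However, the Dashboard version our customization is based on already has all the basic features, which is enough for daily use.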

+Our high level of customization results in higher costs for subsequent upgrades. We face the same problem as many others, such as how to upgrade from Apache APISIX 1.x to 2.0. We also have a privately customized Dashboard, so its subsequent upgrade costs are likely to be higher.

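-Our custom development on Apache APISIX itself, on the other hand, can be upgraded easily, and we have already completed several minor version upgrades.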

+### Giving Back to the Community

-### 5.3 Giving Back to the Community

+Finally, a word about the Apache APISIX community. We have been thinking about how to contribute features the community is interested in back to Apache APISIX, so that everyone can use and modify them together.

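-Finally, a word about the community. We have been thinking about how to contribute features the community is interested in back to Apache APISIX, so that everyone can use and modify them together.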

+It is an objective fact that our custom development is driven mainly by actual internal Weibo requirements, and it differs somewhat from the evolution driven by the Apache APISIX community. However, setting aside code that contains sensitive data, enterprises and the open source community always share needs for more general-purpose functionality, which they can evolve together to make it more stable and mature. For example, a common Consul KV service discovery module, handli [...]

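-Our custom development is driven mainly by Weibo's actual internal requirements, and it differs somewhat from the evolution driven by the Apache APISIX community; this is an objective fact. However, setting aside code that contains sensitive data, enterprises and the community always share needs for more general-purpose functionality, which they can evolve together to make it more stable and mature. Examples include a general Consul KV service discovery module, handling of some high-availability configuration files, and fixes for other issues.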

-

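-These shared requirements are usually polished internally for a while until they fully meet internal needs. They are then gradually submitted to the community's open source branch, though this also takes time.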

+These shared requirements are usually polished internally for a while until they fully meet internal needs. They are then gradually submitted to the community's open source branch, though this also takes time.

diff --git a/website/blog/2021/07/27/use-of-plugin-orchestration-in-Apache-APISIX.md b/website/blog/2021/07/27/use-of-plugin-orchestration-in-Apache-APISIX.md

index 1d1e69a..b1276db 100644

--- a/website/blog/2021/07/27/use-of-plugin-orchestration-in-Apache-APISIX.md

+++ b/website/blog/2021/07/27/use-of-plugin-orchestration-in-Apache-APISIX.md

@@ -1,32 +1,30 @@

---

-title: "Application and Practice of Plugin Orchestration in Apache APISIX"

-author: "琚致远"

+title: "Applying Plugin Orchestration in Apache APISIX"

+author: "Zhiyuan Ju"

authorURL: "https://github.com/juzhiyuan"

authorImageURL: "https://avatars.githubusercontent.com/u/2106987?v=4"

keywords:

-- API Gateway

-- APISIX

-- Plugin Orchestration

+- Apache APISIX

+- Plugin Orchestration

- Apache APISIX Dashboard

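-description: By reading this article, you can learn about Apache APISIX and its basic use cases, and how Apache APISIX integrates "drag-and-drop" plugin orchestration amid the low-code trend. The author, Zhiyuan Ju, is an Apache APISIX PMC member responsible for enterprise products and front-end technologies at API7.ai.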

+- API Gateway

+description: Read this article to learn about Apache APISIX and its basic use cases, and how Apache APISIX integrates "drag-and-drop" plugin orchestration amid the low-code trend.

tags: [Practical Case]

---

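-> By reading this article, you can learn about Apache APISIX and its basic use cases, and how Apache APISIX integrates "drag-and-drop" plugin orchestration amid the low-code trend. The author, Zhiyuan Ju, is an Apache APISIX PMC member responsible for enterprise products and front-end technologies at API7.ai.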

+> Read this article to learn about Apache APISIX and its basic use cases, and how Apache APISIX integrates "drag-and-drop" plugin orchestration amid the low-code trend.

<!--truncate-->

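-The author of this article, Zhiyuan Ju, is an Apache APISIX PMC member responsible for enterprise products and front-end technologies at API7.ai. By reading this article, you can learn about Apache APISIX and its basic use cases, and how Apache APISIX integrates "drag-and-drop" plugin orchestration amid the low-code trend.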

+## What is Apache APISIX?

-## What Is Apache APISIX

+Apache APISIX is a dynamic, real-time, high-performance API gateway. Apache APISIX provides rich traffic management features such as load balancing, dynamic upstream, canary release, circuit breaking, authentication, observability, and more. It has more than 50 built-in plugins covering authentication, security, traffic control, Serverless, observability, and other aspects to meet the common usage scenarios of enterprise customers.

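-Apache APISIX is a production-ready layer-7 full traffic processing platform that can serve as an API gateway for business entry traffic, with extremely high performance and ultra-low latency. It has more than 50 built-in plugins covering authentication, security, traffic control, Serverless, observability, and more, meeting the common use cases of enterprise customers.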

-

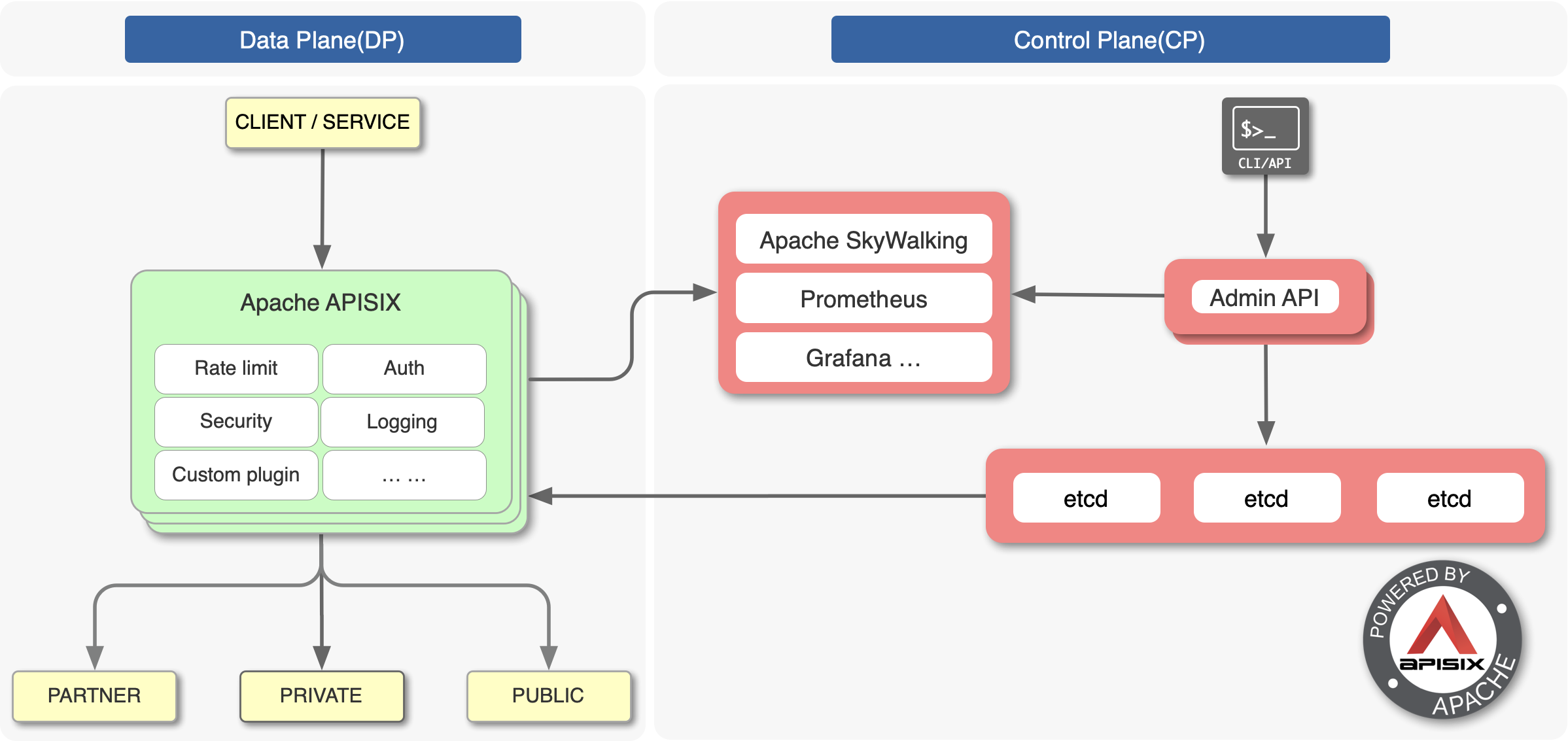

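-As shown in the architecture diagram below, Apache APISIX is divided into a data plane (left) and a control plane (right). The control plane pushes configuration to etcd, and the data plane processes internal and external traffic with its rich set of plugins.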

+As shown in the architecture diagram below, Apache APISIX is divided into two parts, the data plane (left) and the control plane (right). The control plane pushes configuration to ETCD, and the data plane handles internal and external traffic with the help of its rich set of plugins.

-Apache APISIX exposes a set of interfaces that let us bind plugins to APIs. If we want to add rate limiting to an API, we only need to bind the `limit-req` plugin to it:

+Apache APISIX exposes a set of interfaces that let us bind plugins to APIs. If we want to add rate limiting to an API, we only need to bind the `limit-req` plugin to it:

``` shell

curl -X PUT http://127.0.0.1:9080/apisix/admin/routes/1 -d '

@@ -51,17 +49,17 @@ curl -X PUT http://127.0.0.1:9080/apisix/admin/routes/1 -d '

}'

```

-Once the call succeeds, requests reaching the API will be rate limited.

+Once the call succeeds, requests reaching the API will be rate limited.

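-This example uses `limit-req` to implement API rate limiting (a specific function). But what about a scenario-based requirement such as "decide the subsequent request processing logic based on the result of a plugin"? The existing plugin mechanism cannot meet this need, which is where plugin orchestration comes in.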

+This example uses `limit-req` to implement API rate limiting (a specific function of Apache APISIX). But what about a scenario-based requirement such as "decide the subsequent request processing logic based on the result of a plugin"? The existing plugin mechanism cannot meet this need, which is where plugin orchestration comes in.

-## What Is Plugin Orchestration

+## What is Plugin Orchestration?

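-Plugin orchestration is a form of low-code that helps enterprises reduce usage costs and improve operational efficiency, and it is an indispensable capability in digital transformation. With the plugin orchestration capability of the low-code API gateway Apache APISIX, we can easily combine 50+ plugins by "drag and drop". The orchestrated plugins can also share context information to meet scenario-based requirements.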

+Plugin orchestration is a form of low-code that can help enterprises reduce usage costs and increase operation and maintenance efficiency, and is an indispensable capability in the process of digital transformation. With the plugin orchestration capability in the low-code API gateway Apache APISIX, we can easily orchestrate 50+ plugins in a “drag-and-drop” way, and the orchestrated plugins can share contextual information to realize scenario-based requirements.

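-To extend the API rate limiting scenario above, the request is authenticated with the `key-auth` plugin. If authentication succeeds, the `kafka-logger` plugin takes over and logs the request. If authentication fails (the plugin returns a 401 status code), the `limit-req` plugin is used for rate limiting.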

+To extend the API rate limiting scenario above, the request is authenticated with the `key-auth` plugin. If authentication passes, the `kafka-logger` plugin takes over and logs the request. If authentication fails (the plugin returns a 401 status code), the `limit-req` plugin is used for rate limiting.

-See the following demo video:

+See the following video on how to do it.

<iframe

height="350"

@@ -70,21 +68,25 @@ curl -X PUT http://127.0.0.1:9080/apisix/admin/routes/1 -d '

frameborder="0">

</iframe>

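-In the video, the Web interface lists the currently available plugins and a drawing board. We can drag plugins onto the board to orchestrate them and fill in the data bound to each plugin, and the whole process is complete. Throughout the process: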

+In this video, the Web interface lists the currently available plugins and a drawing board. We can drag plugins onto the board to orchestrate them and fill in the data bound to each plugin, and the whole process is complete. Throughout the process:

+


+

+1. Visual operation: besides creating APIs through a visual interface, we can also design scenarios intuitively and clearly through orchestration.

-1. Visual operation: besides creating APIs through a visual interface, we can also design scenarios intuitively and clearly through orchestration;

-2. Reusable processes: by importing and exporting the board's JSON data, the project data generated by orchestration can be reused easily.

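-3. Composing new "plugins": treat each scenario as a plugin and combine different plugins with conditional components, so that plugins create new "plugins".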

+1. Reusable processes: by importing and exporting the JSON data of the drawing board, you can easily reuse the project data generated by orchestration.

-## How It Works

+1. Composing new "plugins": treat each scenario as a plugin and combine different plugins with conditional components, so that plugins create new "plugins".

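-So how does Apache APISIX combine with low-code capabilities? This requires the data plane (Apache APISIX) and the control plane (Apache APISIX Dashboard) to work together. The overall process is as follows: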

+## How Does Plugin Orchestration Work?

+

+So how does Apache APISIX combine with low-code capabilities? This requires the data plane (Apache APISIX) and the control plane (Apache APISIX Dashboard) to work together. The overall process is as follows.

### Apache APISIX

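-In Apache APISIX, we added `script` execution logic to the Route entity ([PR](https://github.com/apache/apisix/pull/1982)). It receives and executes Lua functions generated by the Dashboard and supports calling existing plugins to reuse code. It also applies to each phase of the HTTP request lifecycle, such as `access`, `header_filter`, and `body_filter`. At each phase, the system automatically executes the part of the `script` function that corresponds to that phase. See the following `script` example: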

+In Apache APISIX, we have added `script` execution logic to the Route entity ([PR](https://github.com/apache/apisix/pull/1982)). It receives and executes Lua functions generated by the Dashboard and supports calling existing plugins to reuse code. It also works in each phase of the HTTP request lifecycle, such as `access`, `header_filter`, and `body_filter`. At each phase, the system automatically executes the part of the `script` function that corresponds to that phase; see the following `script` [...]

```shell

{

@@ -96,13 +98,13 @@ curl -X PUT http://127.0.0.1:9080/apisix/admin/routes/1 -d '

### Apache APISIX Dashboard

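-The Dashboard contains two sub-components, Web and ManagerAPI. Web provides a visual interface for configuring the API gateway. ManagerAPI provides a RESTful API that Web or other clients call to operate the configuration center (ETCD by default), thereby indirectly controlling Apache APISIX.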

+Dashboard contains two sub-components, Web and ManagerAPI: Web provides a visual interface to configure the API gateway; ManagerAPI provides a RESTful API for the Web or other clients to call in order to operate the configuration center (ETCD by default) and thus indirectly control Apache APISIX.

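-To generate legal and valid script functions, ManagerAPI uses a DAG (directed acyclic graph) data structure for its underlying design, and we developed the `dag-to-lua` [project](https://github.com/api7/dag-to-lua) in-house. It uses the root node as the start node and decides the next plugin in the flow based on judgment conditions, which effectively avoids logical infinite loops. The following is a diagram of the DAG data structure: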

+To generate legal and valid script functions, ManagerAPI chose a DAG (directed acyclic graph) data structure for the underlying design and developed the `dag-to-lua` [project](https://github.com/api7/dag-to-lua). It uses the root node as the start node and decides the next plugin in the flow based on judgment conditions, which effectively avoids logical infinite loops. The following i [...]

-The corresponding `script` parameter received by ManagerAPI looks like this:

+The corresponding `script` parameter received by ManagerAPI looks like this:

```shell

{

@@ -130,7 +132,7 @@ curl -X PUT http://127.0.0.1:9080/apisix/admin/routes/1 -d '

},

"rule": {

- "root": "1-2-3", // start node ID

+ "root": "1-2-3", # initial node ID

"1-2-3": [

[

"code == 200",

@@ -144,37 +146,39 @@ curl -X PUT http://127.0.0.1:9080/apisix/admin/routes/1 -d '

}

```

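-That is, after the client converts the final orchestrated data into the format above, ManagerAPI uses the `dag-to-lua` project to generate Lua functions and hands them to Apache APISIX for execution.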

+After the client converts the final orchestrated data into the above format, ManagerAPI generates Lua functions with the help of the `dag-to-lua` project and hands them over to Apache APISIX for execution.
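To make the rule format concrete, here is a Bash sketch that walks a tiny DAG for the scenario described earlier: `key-auth` first, then `kafka-logger` on success or `limit-req` on a 401. It is not `dag-to-lua` and does not run real plugins. The node IDs, the `run_plugin` stub, and the restriction to `code == N` conditions are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Nodes map to plugin names; edges map "<node>|<condition>" to the next node.
declare -A PLUGIN=([auth]=key-auth [log]=kafka-logger [limit]=limit-req)
declare -A EDGES=(
  ["auth|code == 200"]=log
  ["auth|code == 401"]=limit
)
ROOT=auth

# Stub: key-auth returns $AUTH_CODE (default 200); every other plugin returns 200
run_plugin() {
  if [[ $1 == key-auth ]]; then echo "${AUTH_CODE:-200}"; else echo 200; fi
}

# walk <node>: run each node's plugin and follow the edge whose condition matches
walk() {
  local node=$1 code key trail=()
  while [[ -n $node ]]; do
    code=$(run_plugin "${PLUGIN[$node]}")
    trail+=("${PLUGIN[$node]}")
    key="$node|code == $code"
    node=${EDGES[$key]:-}     # no matching edge: the flow ends here
  done
  echo "${trail[*]}"
}
```

Because the graph is acyclic, every walk ends after a finite number of steps. This mirrors why the DAG structure avoids logical infinite loops.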

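-On the Web side, after selection, comparison, and project validation, we chose Ant Group's open source X6 graph editing engine as the underlying framework for the Web part of plugin orchestration. Besides its complete and clear documentation, its out-of-the-box interactive components and customizable nodes are the reasons we chose it.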

+On the Web side, after selection, comparison, and project validation, we chose Ant Group's open source X6 graph editing engine as the underlying framework for the Web part of plugin orchestration. Besides its complete and clear documentation, its out-of-the-box interactive components and customizable nodes are the reasons we chose it.

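-While implementing orchestration, we abstracted the concepts of generic components and plugin components. Generic components are the start node, end node, and conditional node, while plugin components are the available Apache APISIX plugins. Plugin orchestration is completed by dragging these components onto the drawing board, as shown in the figure: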

+While implementing orchestration, we abstracted the concepts of generic components and plugin components. Generic components are start nodes, end nodes, and conditional nodes, while plugin components are the available Apache APISIX plugins. Plugin orchestration is completed by dragging and dropping these components onto the drawing board, as shown in the figure.

-During drag and drop, we need to enforce a series of boundary conditions. Here are a few examples:

+During drag and drop, we need to enforce a series of boundary conditions. Here are a few examples.

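-When a plugin is not configured, the system shows the error message "There are unconfigured components", so you can see at a glance which plugin lacks configuration data: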

+When a plugin is not configured, the system shows the error message "There are unconfigured components", so you can see at a glance which plugin lacks configuration data.

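-Suppose you edit an API that already has plugin data bound to it and then switch to plugin orchestration mode. The system detects this and shows a warning, and it proceeds only if the user explicitly confirms that they want to use orchestration mode. This effectively prevents API data from being changed by mistake.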

+Suppose you edit an API that already has plugin data bound to it and then switch to plugin orchestration mode. The system detects this and shows a warning, and it proceeds only if the user explicitly confirms that they want to use orchestration mode. This effectively prevents API data from being changed by mistake.

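-There are also rules such as: the start component can have only one output, and the conditional component can have only one input. Imagine if the system let users operate without any restrictions: unreasonable plugin combinations would be meaningless and cause unpredictable errors. Continuously enriching the boundary conditions is therefore an important consideration when designing plugin orchestration.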

+There are also rules such as: the start component can have only one output, and the conditional component can have only one input. Imagine if the system let users operate without any restrictions: unreasonable plugin combinations would be meaningless and cause unpredictable errors. Continuously enriching the boundary conditions is therefore an important consideration when designing plugin orchestration.
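Here is a Bash sketch of these boundary checks on a hypothetical board. It covers the three rules named above: an unconfigured plugin, a start node with more than one output, and a condition node with more than one input. The node IDs, the edge list, and the `validate` function are invented for illustration and do not reflect how the Dashboard stores its graph.

```shell
#!/usr/bin/env bash
# Hypothetical board: node types, which plugin nodes are configured, and edges.
declare -A TYPE=([s]=start [c]=condition [p1]=plugin [p2]=plugin [e]=end)
declare -A CONFIGURED=([p1]=1)          # p2 has no configuration yet
EDGE_LIST=("s c" "c p1" "c p2" "p1 e" "p2 e")

# validate: print one message per violated boundary condition; non-zero if any
validate() {
  local errors=() edge from to n
  local -A outdeg=() indeg=()
  for edge in "${EDGE_LIST[@]}"; do
    read -r from to <<<"$edge"
    outdeg[$from]=$(( ${outdeg[$from]:-0} + 1 ))
    indeg[$to]=$(( ${indeg[$to]:-0} + 1 ))
  done
  for n in "${!TYPE[@]}"; do
    case ${TYPE[$n]} in
      start)     (( ${outdeg[$n]:-0} <= 1 )) || errors+=("start node $n has more than one output") ;;
      condition) (( ${indeg[$n]:-0} <= 1 ))  || errors+=("condition node $n has more than one input") ;;
      plugin)    [[ -n ${CONFIGURED[$n]:-} ]] || errors+=("There are unconfigured components: $n") ;;
    esac
  done
  if (( ${#errors[@]} )); then
    printf '%s\n' "${errors[@]}"
    return 1
  fi
}
```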

+

+When we finish the orchestration, we will use the API exposed by X6 to generate the JSON data of the flowchart, then convert it into the DAG data needed by the system, and finally generate Lua functions.

+

+## Future Plans

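-When orchestration is finished, we use the API exposed by X6 to generate the flowchart's JSON data. We then convert it into the DAG data the system needs and finally generate the Lua function.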

+The drag-and-drop approach makes it easier for users to combine plugins for different scenarios, improving the extensibility and operational experience of the API gateway. In actual use, the following areas can be further optimized.

-## Future Plans

+1. The components' boundary conditions are not yet rich enough; continuing to improve them will reduce unreasonable orchestration combinations.

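-Drag and drop makes it easier for users to combine plugins for different scenarios, improving the extensibility and operational experience of the API gateway. In actual use, the following areas can be further optimized: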

+1. There are few orchestration examples at present; more reference examples would make it easier for developers to learn and for users to get started.

-1. The components' boundary conditions are not yet rich enough; we will keep improving them to reduce unreasonable orchestration combinations;

-2. There are few orchestration examples at present; more reference examples would help developers learn and users get started;

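-3. Apache APISIX currently uses the `code` defined by a plugin to return status (on an exception, it returns the status code and terminates the request). Supporting more HTTP Response fields, or even modifying the plugin definition, could extend plugin orchestration capabilities, as in the following plugin definition: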

+1. Apache APISIX currently uses the `code` defined by a plugin to return status (on an exception, it returns the status code and terminates the request). Supporting more HTTP Response fields, or even modifying the plugin definition, could extend plugin orchestration capabilities, as in the following plugin definition.

```shell

local _M = {

@@ -183,7 +187,7 @@ local _M = {

type = 'auth',

name = plugin_name,

schema = schema,

- # Newly added result field, which stores the plugin's execution result and passes it on to the next plugin.

+ -- A new result field stores the results of plugin runs and passes them on to the next plugin

result = {

code = {

type = "int"

diff --git a/website/blog/2021/08/05/Kong-to-APISIX.md b/website/blog/2021/08/05/Kong-to-APISIX.md

index 08db1dd..0517904 100644

--- a/website/blog/2021/08/05/Kong-to-APISIX.md

+++ b/website/blog/2021/08/05/Kong-to-APISIX.md

@@ -1,137 +1,127 @@

---

-title: "Kong-To-APISIX Migration Tool"

+title: "Kong-To-APISIX Migration Tool"

author: "吴舒旸"

authorURL: "https://github.com/Yiyiyimu"

authorImageURL: "https://avatars.githubusercontent.com/u/34589752?v=4"

keywords:

- APISIX

- Kong

-- Migration Tool

-- API Gateway

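-description: Apache APISIX is a production-ready, open source layer-7 full traffic processing platform that can serve as an API gateway for business entry traffic, with extremely high performance, ultra-low latency, an officially supported dashboard, and more than fifty plugins. If you are using Kong and are interested in APISIX but find it hard to get started, try Kong-To-APISIX, the migration tool we have just open-sourced, to migrate smoothly with one click.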

+- Migration Tool

+- API Gateway

+description: Apache APISIX is a production-ready, open source layer-7 full traffic processing platform that can serve as an API gateway for business entry traffic, with extremely high performance, ultra-low latency, an officially supported dashboard, and more than fifty plugins. If you are using Kong and are interested in APISIX but find it hard to get started, try Kong-To-APISIX, the migration tool we have just open-sourced, to migrate smoothly with one click.

tags: [Technology]

---

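-> Apache APISIX is a production-ready, open source layer-7 full traffic processing platform that can serve as an API gateway for business entry traffic, with extremely high performance, ultra-low latency, an officially supported dashboard, and more than fifty plugins. If you are using Kong and are interested in APISIX but find it hard to get started, try Kong-To-APISIX, the migration tool we have just open-sourced, to migrate smoothly with one click.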

+> Apache APISIX is a production-ready, open source layer-7 full traffic processing platform that can serve as an API gateway for business entry traffic, with extremely high performance, ultra-low latency, an officially supported dashboard, and more than fifty plugins. If you are using Kong and are interested in APISIX but find it hard to get started, try Kong-To-APISIX, the migration tool we have just open-sourced, to migrate smoothly with one click.

<!--truncate-->

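-Apache APISIX is a production-ready, open source layer-7 full traffic processing platform that can serve as an API gateway for business entry traffic, with extremely high performance, ultra-low latency, an officially supported dashboard, and more than fifty plugins. If you are using Kong and are interested in APISIX but find it hard to get started, try Kong-To-APISIX, the migration tool we have just open-sourced, to migrate smoothly with one click.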

+Apache APISIX is a production-ready, open source layer-7 full traffic processing platform that can serve as an API gateway for business entry traffic, with extremely high performance, ultra-low latency, an officially supported dashboard, and more than fifty plugins. If you are using Kong and are interested in APISIX but find it hard to get started, try Kong-To-APISIX, the migration tool we have just open-sourced, to migrate smoothly with one click.

-## Tool Capabilities

+## What is Kong-To-APISIX?

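-Kong-To-APISIX uses the declarative configuration files of Kong and APISIX to migrate configuration data, adapting to the differences in architecture and functionality between the two. We currently support migrating the configuration of Kong's Route, Service, Upstream, Target, and Consumer, as well as three plugins (Rate Limiting, Proxy Caching, and Key Authentication). We also built a minimal demo based on Kong's [Getting Started Guide](https://docs.konghq.com/getting-started-guide/2.4.x/overview/).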

+[Kong-To-APISIX](https://github.com/api7/kong-to-apisix) leverages the declarative configuration files of Kong and APISIX to migrate configuration data, adapting to the differences in architecture and functionality between the two. Currently, we support migrating the configuration data of Kong's Route, Service, Upstream, Target, and Consumer, as well as three plugins (Rate Limiting, Proxy Caching, and Key Authentication), and we have completed a minimal demo using Kong’s Getting Started Guide as an e [...]

-## Usage

+## How to Migrate?

-1. Use Deck to export Kong's declarative configuration file; see the [detailed steps](https://docs.konghq.com/deck/1.7.x/guides/backup-restore/)

+1. To export a Kong declarative configuration file using Deck, refer to the following steps: [Kong Official Document: Backup and Restore of Kong’s Configuration](https://docs.konghq.com/deck/1.7.x/guides/backup-restore/)

-2. Download the repository and run the migration tool, which generates the declarative configuration file `apisix.yaml` for use

+1. Download the repository and run the migration tool, which will generate the declarative configuration file `apisix.yaml` to be used.

-```shell

-git clone https://github.com/api7/kong-to-apisix

+ ```shell

+ git clone https://github.com/api7/kong-to-apisix

-cd kong-to-apisix

+ cd kong-to-apisix

-make build

+ make build

-./bin/kong-to-apisix migrate --input kong.yaml --output apisix.yaml

+ ./bin/kong-to-apisix migrate --input kong.yaml --output apisix.yaml

-# migrate succeed

-```

+ # migrate succeed

+ ```

-3. Use `apisix.yaml` to configure APISIX; see the [detailed steps](https://apisix.apache.org/docs/apisix/stand-alone).

+1. Use `apisix.yaml` to configure APISIX; refer to [Apache APISIX Official Document: Stand-alone mode](https://apisix.apache.org/docs/apisix/stand-alone).

-## Demo Test

+## Demo Test

-1. Make sure Docker is running properly, deploy the test environment, and use docker-compose to bring up APISIX and Kong

+1. Make sure docker is up and running, deploy the test environment, and use docker-compose to run APISIX and Kong.

-```shell

-git clone https://github.com/apache/apisix-docker

+ ```shell

+ git clone https://github.com/apache/apisix-docker

-cd kong-to-apisix

+ cd kong-to-apisix

-./tools/setup.sh

-```

+ ./tools/setup.sh

+ ```

-2. Following Kong's Getting Started Guide, add configuration to Kong and test it:

+1. Add configuration to Kong and test it according to Kong's Getting Started Guide.

+ 1. Expose services via Service and Route for routing and forwarding

+ 1. Set up the Rate Limiting and Proxy Caching plugins for rate limiting and caching

+ 1. Set up Key Authentication plugin for authentication

+ 1. Set up load balancing via Upstream and Target

- a. Expose services via Service and Route for routing and forwarding

+1. Export Kong's declarative configuration file to `kong.yaml`.

- b. Set up the Rate Limiting and Proxy Caching plugins for rate limiting and caching

+ ```shell

+ go run ./cmd/dumpkong/main.go

+ ```

- c. Set up the Key Authentication plugin for authentication

+1. Run the migration tool, import `kong.yaml` and generate the APISIX configuration file `apisix.yaml` to docker volumes.

- d. Set up load balancing via Upstream and Target

+ ```shell

+ export EXPORT_PATH=./repos/apisix-docker/example/apisix_conf

+ go run ./cmd/kong-to-apisix/main.go

+ ```

-```shell

-./examples/kong-example.sh

-```

+1. Test whether the migrated routes, load balancing, plugins, etc. work properly on the Apache APISIX side.

-3. Export Kong's declarative configuration file to `kong.yaml`

+ 1. Test key auth plugin.

-```shell

-go run ./cmd/dumpkong/main.go

-```

+ ```shell

+ curl -k -i -m 20 -o /dev/null -s -w %{http_code} http://127.0.0.1:9080/mock

+ # output: 401

+ ```

-4. Run the migration tool to import `kong.yaml` and generate the APISIX configuration file `apisix.yaml` into the Docker volumes

+ 1. Test proxy cache plugin.

-```shell

-export EXPORT_PATH=./repos/apisix-docker/example/apisix_conf

+ ```shell

+ # access for the first time

+ curl -k -I -s -o /dev/null http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org"

+ # see if got cached

+ curl -I -s -X GET http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org"

+ # output:

+ # HTTP/1.1 200 OK

+ # ...

+ # Apisix-Cache-Status: HIT

+ ```

-go run ./cmd/kong-to-apisix/main.go

-```

+ 1. Test limit count plugin.

-5. On the APISIX side, test whether the migrated routes, load balancing, plugins, etc. work properly

+ ```shell

+ for i in {1..5}; do

+ curl -s -o /dev/null -X GET http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org"

+ done

+ curl -k -i -m 20 -o /dev/null -s -w %{http_code} http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org"

+ # output: 429

+ ```

-a. Test the key auth plugin

+ 1. Test load balance.

-```shell

-curl -k -i -m 20 -o /dev/null -s -w %{http_code} http://127.0.0.1:9080/mock

-# output: 401

-```

-

-b. Test the proxy cache plugin

-

-```shell

-# access for the first time

-curl -k -I -s -o /dev/null http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org"

-# see if got cached

-curl -I -s -X GET http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org"

-# output:

-# HTTP/1.1 200 OK

-# ...

-# Apisix-Cache-Status: HIT

-```

-

-c. Test the limit count plugin

-

-```shell

-for i in {1..5}; do

- curl -s -o /dev/null -X GET http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org"

-done

-curl -k -i -m 20 -o /dev/null -s -w %{http_code} http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org"

-# output: 429

-```

-

-d. Test load balancing

-

-```shell

-httpbin_num=0

-mockbin_num=0

-for i in {1..8}; do

- body=$(curl -k -i -s http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org")

- if [[ $body == *"httpbin"* ]]; then

+ ```shell

+ httpbin_num=0

+ mockbin_num=0

+ for i in {1..8}; do

+ body=$(curl -k -i -s http://127.0.0.1:9080/mock -H "apikey: apikey" -H "Host: mockbin.org")

+ if [[ $body == *"httpbin"* ]]; then

httpbin_num=$((httpbin_num+1))

- elif [[ $body == *"mockbin"* ]]; then

- mockbin_num=$((mockbin_num+1))

- fi

- sleep 1.5

-done

-echo "httpbin number: "${httpbin_num}", mockbin number: "${mockbin_num}

-# output:

-# httpbin number: 6, mockbin number: 2

-```

-

-## Conclusion

-

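-Subsequent development plans for the migration tool are listed in the Roadmap of the Kong-To-APISIX GitHub repository. You are welcome to visit the Kong-To-APISIX [GitHub repository](https://github.com/api7/kong-to-apisix) to test and use Kong-To-APISIX.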

-Anyone interested in this project is welcome to contribute! Any questions can be discussed in the repository's Issues section.

+ elif [[ $body == *"mockbin"* ]]; then

+ mockbin_num=$((mockbin_num+1))

+ fi

+ sleep 1.5

+ done

+ echo "httpbin number: ${httpbin_num}, mockbin number: ${mockbin_num}"

+ # output:

+ # httpbin number: 6, mockbin number: 2

+ ```
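The 6:2 split in the load-balancing test is what a weighted round-robin balancer produces when one target carries three times the weight of the other. The Go sketch below reproduces that arithmetic with the smooth weighted round-robin algorithm. Both the weights (3 for httpbin, 1 for mockbin) and the choice of algorithm are assumptions made for illustration; they are not read from the Kong example configuration, and this is not claimed to be the balancer APISIX actually runs.

```go
package main

import "fmt"

// node is a hypothetical upstream target with a static weight.
type node struct {
	name    string
	weight  int
	current int // running score used by smooth weighted round robin
}

// pick adds each node's weight to its running score, chooses the node with
// the highest score (the first one wins ties), and then lowers the winner's
// score by the total weight so the other nodes catch up on later picks.
func pick(nodes []*node) *node {
	if len(nodes) == 0 {
		return nil
	}
	total := 0
	var best *node
	for _, n := range nodes {
		n.current += n.weight
		total += n.weight
		if best == nil || n.current > best.current {
			best = n
		}
	}
	best.current -= total
	return best
}

func main() {
	// Assumed weights: 3 for httpbin, 1 for mockbin.
	nodes := []*node{
		{name: "httpbin", weight: 3},
		{name: "mockbin", weight: 1},
	}
	counts := map[string]int{}
	for i := 0; i < 8; i++ { // 8 requests, as in the shell test
		counts[pick(nodes).name]++
	}
	fmt.Printf("httpbin number: %d, mockbin number: %d\n", counts["httpbin"], counts["mockbin"])
}
```

Running it prints `httpbin number: 6, mockbin number: 2`: with weights 3:1 the picks repeat in the fixed cycle httpbin, httpbin, mockbin, httpbin. The "smooth" variant was chosen because it interleaves targets instead of sending runs of consecutive requests to the heavier one.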

+

+## Conclusion

+

+Subsequent development plans for the migration tool are presented in the Roadmap on Kong-To-APISIX's [GitHub repository](https://github.com/api7/kong-to-apisix). Feel free to test and use Kong-To-APISIX, and discuss any questions you may have in the Issues section of the repository. Anyone who is interested in this project is welcome to contribute to it!

diff --git a/website/blog/2021/08/25/Why-Apache-APISIX-chose-Nginx-and-Lua.md b/website/blog/2021/08/25/Why-Apache-APISIX-chose-Nginx-and-Lua.md

index 64d0997..cadf31a 100644

--- a/website/blog/2021/08/25/Why-Apache-APISIX-chose-Nginx-and-Lua.md

+++ b/website/blog/2021/08/25/Why-Apache-APISIX-chose-Nginx-and-Lua.md

@@ -1,81 +1,77 @@

---

-title: "Why did Apache APISIX choose the Nginx + Lua technology stack?"

-author: "罗泽轩"

+title: "Why Apache APISIX chose Nginx and Lua to build API Gateway"

+author: "Zexuan Luo"

authorURL: "https://github.com/spacewander"

authorImageURL: "https://avatars.githubusercontent.com/u/4161644?v=4"

keywords:

-- API Gateway

- APISIX

- Apache APISIX

- Lua

- Nginx

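-description: Written by Zexuan Luo, an engineer at Shenzhen Zhiliu Technology, this article introduces the historical background of Apache APISIX's choice of the Nginx + Lua technology stack and the advantages this stack brings to APISIX. Zexuan Luo is an OpenResty developer and an Apache APISIX PMC member.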

+- API Gateway

+description: Lua is not a well-known language, and it is far from being one of the most popular programming languages. So why do Apache APISIX and other well-known gateways choose Lua?

tags: [Technology]

---

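-> Written by Zexuan Luo, an engineer at Shenzhen Zhiliu Technology, this article introduces the historical background of Apache APISIX's choice of the Nginx + Lua technology stack and the advantages this stack brings to Apache APISIX. Zexuan Luo is an OpenResty developer and an Apache APISIX PMC member.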

+> Lua is not a well-known language, and it is far from being one of the most popular programming languages. So why do Apache APISIX and other well-known gateways choose Lua?

<!--truncate-->

-When I gave a talk at this year's COSCUP conference, someone in the audience asked: why do gateways such as Apache APISIX, Kong, and 3scale all use Lua to write their logic?

+When I gave a talk at this year's COSCUP conference, some attendees asked me why API gateways such as Apache APISIX, Kong, and 3scale all chose Lua to write their logic.

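-Indeed, Lua is not a widely known language; it is probably miles away from the circle of "mainstream programming languages". Once, while talking with someone, they paused for a moment before mentioning Lua, then finally decided to spell it out letter by letter, "L-U-A", to name this rare thing.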

+Indeed, Lua is not a well-known language, and it is far from being one of the most popular programming languages.

-So why do Apache APISIX and other well-known gateways choose Lua?

+So why do Apache APISIX and other well-known gateways choose Lua?

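-In fact, the technology stack used by Apache APISIX is not pure Lua; to be precise, it is Nginx + Lua. Apache APISIX takes Nginx underneath as its roots and the Lua code on top as its branches and leaves.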

+In fact, the technology stack used by Apache APISIX is not just Lua. To be precise, it is Nginx + Lua: Apache APISIX is built on Nginx and uses Lua to implement plugins and other features.

## LuaJIT VS Go

-Careful readers will point out that Apache APISIX is not based on the Nginx + Lua stack but on Nginx + LuaJIT (also known as OpenResty; to avoid confusion, the rest of this article simply says Nginx + Lua).

+Careful readers may point out that Apache APISIX is not based on the Nginx + Lua stack, but on Nginx + LuaJIT (also known as OpenResty; to avoid confusion, the rest of this article simply says Nginx + Lua). LuaJIT is a just-in-time (JIT) compiler for the Lua programming language, and it performs much better than standard Lua. LuaJIT also adds an FFI (foreign function interface) that makes calling C code easy and efficient.

-LuaJIT is a JIT implementation of Lua. It performs much better than Lua and additionally provides FFI, which makes calling C code easy and efficient.

-Since today's mainstream API gateways are either based on OpenResty or written in Go, you regularly see comparisons of whether Go or Lua performs better.

+Since today's popular API gateways are either based on OpenResty or written in Go, developers often debate whether Lua or Go performs better.

-**In my personal view, comparing the performance of languages without considering the scenario is meaningless.**

+**From my point of view, it is meaningless to compare the performance of languages without considering the usage scenario.**

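-First, let's be clear: Apache APISIX is based on the Nginx + Lua stack, and only the outer code is written in Lua. So if we want to argue which gateway performs better, the right comparison is C + LuaJIT versus Go. Most of a gateway's performance comes from proxying HTTP requests and responses, and that work is mainly done by Nginx.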

+First of all, to be clear, Apache APISIX is based on Nginx and Lua, and only the outer layer of code is written in Lua. So if you want to know which gateway performs better, the correct comparison is C + LuaJIT versus Go. The bulk of a gateway's performance lies in proxying HTTP requests and responses, and that work is mainly done by Nginx.

-**So if we want to compare performance, we might as well compare the HTTP implementations of Nginx and the Go standard library.**

+**So a fairer performance comparison is between the HTTP implementations of Nginx and of the Go standard library.**

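-As is well known, Nginx is a high-performance server implementation in which every byte matters, and it is extremely stingy with memory. Here are two examples: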

+As we all know, Nginx is a high-performance server in which every byte matters, and it is very frugal with memory. Here are two examples:

-1. In Nginx, a request header is in most cases just a pointer to the original HTTP request data; a copy is created only when it is modified.

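-2. The buffer reuse logic Nginx uses when proxying upstream responses is very complex; it is one of the most brain-burning pieces of code I have ever read.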

+1. In Nginx, a request header is usually just a pointer to the original HTTP request data, and a copy is created only when it is modified.

-Thanks to this frugality, Nginx stands at the top of high-performance servers.

+2. When Nginx proxies responses from upstream servers, it reuses buffers with very complex logic; it is one of the most brain-burning pieces of code I have ever read.

-In contrast, the HTTP implementation in the Go standard library is a typical counterexample of memory abuse.

+Thanks to this frugality, Nginx stands among the highest-performing servers.

-This is not just my one-sided opinion: Fasthttp, a project that re-implements the HTTP package of the Go standard library, gives two examples:

+In contrast, the HTTP implementation in the Go standard library is a typical example of memory abuse. This is not just my opinion: Fasthttp, a project that re-implements the HTTP package of the Go standard library, gives two examples:

-1. The standard library's HTTP Request struct cannot be reused

-2. Headers are always parsed in advance and stored as map[string][]string, even if they are not used (original text: https://github.com/valyala/fasthttp#faq )

+1. The standard library's HTTP `Request` struct cannot be reused.

+2. Headers are always parsed in advance and stored as a `map[string][]string`, even if they are not used (see: [Fasthttp FAQ](https://github.com/valyala/fasthttp#faq)).

-The Fasthttp documentation also mentions some optimization techniques for code where every byte matters; I recommend reading them.

+The Fasthttp documentation also describes some optimization techniques for code where every byte matters; I suggest taking a look.
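The second Fasthttp point can be observed directly with the standard library. The Go sketch below parses a made-up raw request with `http.ReadRequest`: the request text and the `X-Unused` header are illustrative assumptions, and the point is that `req.Header` (type `http.Header`, declared as `map[string][]string`) is fully populated before any handler code runs, whether or not a header is ever read.

```go
package main

import (
	"bufio"
	"fmt"
	"net/http"
	"strings"
)

// parseRaw parses one raw HTTP/1.1 request using only the standard library.
func parseRaw(raw string) (*http.Request, error) {
	return http.ReadRequest(bufio.NewReader(strings.NewReader(raw)))
}

func main() {
	// Hypothetical request; nothing below ever needs X-Unused.
	raw := "GET /mock HTTP/1.1\r\n" +
		"Host: mockbin.org\r\n" +
		"Apikey: apikey\r\n" +
		"X-Unused: 1\r\n\r\n"

	req, err := parseRaw(raw)
	if err != nil {
		panic(err)
	}

	// http.Header is declared as map[string][]string, so this assignment
	// compiles. Every header line already has its own map entry and slice
	// (the Host header is promoted to req.Host instead).
	var headers map[string][]string = req.Header
	for name, values := range headers {
		fmt.Println(name, values)
	}
	fmt.Println("host:", req.Host)
}
```

Map iteration order is random, so the printed header order varies between runs; what stays constant is that `X-Unused` was parsed and stored even though no code uses it.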

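-In fact, even setting aside the proxying that sits at the core of a gateway, code written in LuaJIT is not necessarily much slower than Go. There are two reasons.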

+In fact, even setting aside the proxying that sits at the core of a gateway, code written in LuaJIT does not necessarily perform much worse than code written in Go, for two reasons:

-**First, thanks to Lua's good affinity with C, the cores of many Lua libraries are actually written in C.**

+**First, thanks to Lua's close affinity with C, the cores of many Lua libraries are actually written in C.**

-For example, most of the JSON encoding and decoding in lua-cjson and the base64 encoding and decoding in lua-resty-core are actually implemented in C.

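-Go libraries, of course, are mostly implemented in Go. Although there is cgo, it is limited by Go's goroutine scheduling and toolchain, so it can only play a subordinate role in the Go ecosystem.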

+For example, most of the JSON encoding and decoding in lua-cjson and the base64 encoding and decoding in lua-resty-core is implemented in C, whereas Go libraries are, of course, mostly implemented in Go. Although Go has cgo, it is constrained by Go's goroutine scheduling and toolchain, so it plays only a subordinate role in the Go ecosystem.

-For a comparison of LuaJIT's and Go's affinity with C, I recommend this post on Hacker News: "Comparing the C FFI overhead in various programming languages" (link: https://news.ycombinator.com/item?id=17161168 )

+For a comparison of how well LuaJIT and Go interoperate with C, I recommend this post on Hacker News: [Comparing the C FFI overhead in various programming languages](https://news.ycombinator.com/item?id=17161168).

-So when we compare certain Lua features, we end up back at a comparison between C and Go.

+So when we compare some of Lua’s features, we are actually comparing C and Go.

-**Second, LuaJIT's JIT optimization is second to none.**

+**Second, LuaJIT's JIT optimization is second to none.**

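-When discussing the performance of dynamic languages, we can divide them into two categories: those with a JIT and those without. JIT optimization compiles dynamic-language code into machine code at runtime, improving the performance of the original code by an order of magnitude.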

+When discussing the performance of dynamic languages, we can divide them into two categories: those with a JIT and those without. JIT optimization compiles dynamic-language code into machine code at runtime, which can improve the performance of the original code by an order of magnitude.

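-Languages with a JIT can be further divided into two categories: those that can be fully JIT-compiled and those that support only partial JIT. LuaJIT belongs to the former.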

+Languages with a JIT can be further divided into two categories: those that can be fully JIT-compiled, such as LuaJIT, and those that support only partial JIT compilation.

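-**As everyone knows, Lua is a very simple language. What is less well known is that Mike Pall, the author of LuaJIT, is an exceptionally skilled programmer. The combination of the two gave birth to LuaJIT, a work that can rival V8.**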

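+**As everyone knows, Lua is a very simple language. What is less well known is that Mike Pall, the author of LuaJIT, is an exceptionally skilled programmer. Together, these two factors produced LuaJIT, a work that can rival V8.**

+Which is faster, LuaJIT or V8, has long been a hot topic of debate, and a detailed discussion of LuaJIT's JIT is beyond the scope of this article. In short, JIT-compiled LuaJIT code does not perform much differently from precompiled Go.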

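-Which is faster, LuaJIT or V8, has long been a hot topic of debate. A detailed discussion of LuaJIT's JIT is beyond the scope of this article. In short, JIT-powered LuaJIT performs not much differently from precompiled Go.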

-

-As for which one is slower, and by how much, that is a matter of opinion. Here is an example:

+As for which one is slower, and by how much, that is a matter of opinion. Here is an example:

```Lua

local text = {"The", "quick", "brown", "fox", "jumped", "over", "the", "lazy", "dog", "at", "a", "restaurant", "near", "the", "lake", "of", "a", "new", "era"}

@@ -113,34 +109,35 @@ func main() {

}

```

-The two code snippets above are equivalent. Can you guess which is faster: the first Lua version or the second Go version?

+The two code snippets above are equivalent. Can you guess which one is faster: the first Lua version or the second Go version?

+

+The first took less than 1 second on my machine, and the second took more than 23 seconds.

-On my machine, the first took less than 1 second, and the second took more than 23 seconds.

+The point of this example is not to prove that LuaJIT is 20 times faster than Go. I want to show that using a microbenchmark to prove one language is faster than another does not make much sense, because many factors affect performance. A simple microbenchmark is likely to overemphasize one factor and lead to unexpected results.

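-I am not giving this example to prove that LuaJIT is 20 times faster than Go. I only want to show that using a microbenchmark to prove one language is faster than another means little, because many factors affect performance. A simple microbenchmark is likely to overemphasize one factor and produce surprising results.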

+## Nginx with Lua: High Performance + Flexibility

-## Nginx + Lua: High Performance + Flexibility

+Let’s go back to Apache APISIX’s Nginx and Lua stack. The Nginx + Lua stack brings us more than just high performance.