You are viewing a plain text version of this content. The canonical link for it is here.

Posted to github@arrow.apache.org by GitBox <gi...@apache.org> on 2022/06/11 07:06:40 UTC

[GitHub] [arrow-datafusion] AssHero opened a new pull request, #2721: more data types are supported in hash join

AssHero opened a new pull request, #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721

# Which issue does this PR close?

Closes #2145

# Rationale for this change

More data types are supported in hash join. such as Date32、Date64、Decimal、Dictionary(_, Utf8)

# What changes are included in this PR?

support Date32/Date64/Decimal/Dictionary data types in equal_rows, also add those data types in can_hash function.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] liukun4515 commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

liukun4515 commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r902236962

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

I create two table in the spark,

```

spark-sql> desc t1;

c1 decimal(10,3)

spark-sql> desc t2;

c1 decimal(10,4)

and join two table with the eq condition: t1.c1 = t2.c1, get the plan

spark-sql> explain select * from t1,t2 where t1.c1 = t2.c1;

== Physical Plan ==

AdaptiveSparkPlan isFinalPlan=false

+- SortMergeJoin [cast(c1#111 as decimal(11,4))], [cast(c1#112 as decimal(11,4))], Inner

:- Sort [cast(c1#111 as decimal(11,4)) ASC NULLS FIRST], false, 0

: +- Exchange hashpartitioning(cast(c1#111 as decimal(11,4)), 200), ENSURE_REQUIREMENTS, [id=#293]

: +- Filter isnotnull(c1#111)

: +- Scan hive default.t1 [c1#111], HiveTableRelation [`default`.`t1`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, Data Cols: [c1#111], Partition Cols: []]

+- Sort [cast(c1#112 as decimal(11,4)) ASC NULLS FIRST], false, 0

+- Exchange hashpartitioning(cast(c1#112 as decimal(11,4)), 200), ENSURE_REQUIREMENTS, [id=#294]

+- Filter isnotnull(c1#112)

+- Scan hive default.t2 [c1#112], HiveTableRelation [`default`.`t2`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, Data Cols: [c1#112], Partition Cols: []]

```

From the from, we can get the the t1.c1 and t2.c1 will be casted to coerced type(decimal(11,4));

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r902404072

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

I create two tables in datafusion

❯ describe t1;

+-------------+----------------+-------------+

| column_name | data_type | is_nullable |

+-------------+----------------+-------------+

| c1 | Decimal(10, 2) | NO |

+-------------+----------------+-------------+

select * from t1;

+------+

| c1 |

+------+

| 1.00 |

+------+

❯ describe t2;

+-------------+----------------+-------------+

| column_name | data_type | is_nullable |

+-------------+----------------+-------------+

| c1 | Decimal(10, 4) | NO |

+-------------+----------------+-------------+

select * from t2;

+--------+

| c1 |

+--------+

| 1.0000 |

+--------+

this query gets 0 rows

select * from t1 join t2 on t1.c1 = t2.c1;

0 rows in set. Query took 0.005 seconds.

but this query gets one row

select * from t1 join t2 on t1.c1::decimal(10,4) = t2.c1;

+------+--------+

| c1 | c1 |

+------+--------+

| 1.00 | 1.0000 |

+------+--------+

Does the planner still not converts the data types between left and right for decimal ? If so, perhaps we can add this to planner.

please let me know if I miss something? thanks!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1153837821

> Grate work 👍 This change looks good to me.

>

> I try to trace the failing case and think it's not introduced here? Log shows that right join only matched twice. Need more investigation.

@waynexia Thanks!I run the test case on my server, and get the expected results. I'll check it later.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] liukun4515 commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

liukun4515 commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r902228171

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

From my knowledge in the datafusion, we should convert the left and right to the same data type in the planner.

The data type of left and right must be same.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] liukun4515 commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

liukun4515 commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r903294153

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

We should make the data type is same between left and right.

In this pr, we should better forbid calculate the join operation for diff data type, For example

Decimal(10,3) join Decimal(11,3)

I think you can create a follow up pr to make data type coercion in the planner.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r904444409

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -947,6 +951,58 @@ macro_rules! equal_rows_elem {

}};

}

+macro_rules! equal_rows_elem_with_string_dict {

+ ($key_array_type:ident, $l: ident, $r: ident, $left: ident, $right: ident, $null_equals_null: ident) => {{

+ let left_array: &DictionaryArray<$key_array_type> =

+ as_dictionary_array::<$key_array_type>($l);

+ let right_array: &DictionaryArray<$key_array_type> =

+ as_dictionary_array::<$key_array_type>($r);

+

+ let (left_values, left_values_index) = {

+ let keys_col = left_array.keys();

+ if keys_col.is_valid($left) {

+ let values_index = keys_col.value($left).to_usize().ok_or_else(|| {

+ DataFusionError::Internal(format!(

+ "Can not convert index to usize in dictionary of type creating group by value {:?}",

+ keys_col.data_type()

+ ))

+ });

+

+ match values_index {

+ Ok(index) => (as_string_array(left_array.values()), Some(index)),

+ _ => (as_string_array(left_array.values()), None)

Review Comment:

Thanks!

> Thanks again @AssHero -- let me know if you would like help filing the issue for the strange behavior we were seeing with `c5`

yes, i'll trace the issue and try to solve this issue.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r903699415

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

>

yes, we should check the data types and make the coercion in the planner. I'll create an issue and follow up the pr later. Thanks!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] codecov-commenter commented on pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

codecov-commenter commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1159787633

# [Codecov](https://codecov.io/gh/apache/arrow-datafusion/pull/2721?src=pr&el=h1&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation) Report

> Merging [#2721](https://codecov.io/gh/apache/arrow-datafusion/pull/2721?src=pr&el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation) (8035e46) into [master](https://codecov.io/gh/apache/arrow-datafusion/commit/3f4aaabc74796fe8c538981c259d79da789b96ae?el=desc&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation) (3f4aaab) will **decrease** coverage by `0.01%`.

> The diff coverage is `83.17%`.

```diff

@@ Coverage Diff @@

## master #2721 +/- ##

==========================================

- Coverage 84.96% 84.95% -0.02%

==========================================

Files 271 271

Lines 48053 48142 +89

==========================================

+ Hits 40827 40897 +70

- Misses 7226 7245 +19

```

| [Impacted Files](https://codecov.io/gh/apache/arrow-datafusion/pull/2721?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation) | Coverage Δ | |

|---|---|---|

| [datafusion/core/src/physical\_plan/hash\_join.rs](https://codecov.io/gh/apache/arrow-datafusion/pull/2721/diff?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation#diff-ZGF0YWZ1c2lvbi9jb3JlL3NyYy9waHlzaWNhbF9wbGFuL2hhc2hfam9pbi5ycw==) | `94.49% <30.76%> (-1.64%)` | :arrow_down: |

| [datafusion/core/tests/sql/joins.rs](https://codecov.io/gh/apache/arrow-datafusion/pull/2721/diff?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation#diff-ZGF0YWZ1c2lvbi9jb3JlL3Rlc3RzL3NxbC9qb2lucy5ycw==) | `99.10% <100.00%> (+0.02%)` | :arrow_up: |

| [datafusion/core/tests/sql/mod.rs](https://codecov.io/gh/apache/arrow-datafusion/pull/2721/diff?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation#diff-ZGF0YWZ1c2lvbi9jb3JlL3Rlc3RzL3NxbC9tb2QucnM=) | `97.64% <100.00%> (+0.21%)` | :arrow_up: |

| [datafusion/expr/src/utils.rs](https://codecov.io/gh/apache/arrow-datafusion/pull/2721/diff?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation#diff-ZGF0YWZ1c2lvbi9leHByL3NyYy91dGlscy5ycw==) | `90.68% <100.00%> (-0.13%)` | :arrow_down: |

| [datafusion/expr/src/logical\_plan/plan.rs](https://codecov.io/gh/apache/arrow-datafusion/pull/2721/diff?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation#diff-ZGF0YWZ1c2lvbi9leHByL3NyYy9sb2dpY2FsX3BsYW4vcGxhbi5ycw==) | `73.91% <0.00%> (-0.20%)` | :arrow_down: |

| [datafusion/sql/src/planner.rs](https://codecov.io/gh/apache/arrow-datafusion/pull/2721/diff?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation#diff-ZGF0YWZ1c2lvbi9zcWwvc3JjL3BsYW5uZXIucnM=) | `81.36% <0.00%> (-0.05%)` | :arrow_down: |

| [datafusion/core/src/physical\_plan/hash\_utils.rs](https://codecov.io/gh/apache/arrow-datafusion/pull/2721/diff?src=pr&el=tree&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation#diff-ZGF0YWZ1c2lvbi9jb3JlL3NyYy9waHlzaWNhbF9wbGFuL2hhc2hfdXRpbHMucnM=) | `40.37% <0.00%> (+0.31%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/arrow-datafusion/pull/2721?src=pr&el=continue&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta?utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/apache/arrow-datafusion/pull/2721?src=pr&el=footer&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation). Last update [3f4aaab...8035e46](https://codecov.io/gh/apache/arrow-datafusion/pull/2721?src=pr&el=lastupdated&utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments?utm_medium=referral&utm_source=github&utm_content=comment&utm_campaign=pr+comments&utm_term=The+Apache+Software+Foundation).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] waynexia commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

waynexia commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1154934428

Cannot reproduce the success and I start to doubt whether that passing run is the correct test case...

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero closed pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero closed pull request #2721: more data types are supported in hash join

URL: https://github.com/apache/arrow-datafusion/pull/2721

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] waynexia commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

waynexia commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1153866012

Please let me know if I can provide some info you need🧐

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] waynexia commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

waynexia commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1154984721

Yes, the log shows there are only two matches. And that decimal number does not like a valid row. So I suspect this is not related to the PR...

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1159603165

> @AssHero would you like some help with this PR? @waynexia points out that @andygrove proposes adding support for date32/date64 in #2746 which is already covered by this PR

>

> I will try and review this PR tomorrow -- I am sorry I haven't done it until now: It looked like you and @waynexia were making good progress

sure, I'll merge this with the new changes in master. And recheck the test cases.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1159636121

merge the new changes in master

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r901120957

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1079,102 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, _) => {

Review Comment:

I think the scale should be the same, precision is insignificant. what is your suggestion?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] waynexia commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

waynexia commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1153484793

Grate work 👍 This change looks good to me.

I try to trace the failing case and think it's not introduced here? Log shows that right join only matched twice. Need more investigation.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

alamb commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r903760605

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

Thank you @AssHero

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r902404072

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

I create two tables in datafusion

❯ describe t1;

+-------------+----------------+-------------+

| column_name | data_type | is_nullable |

+-------------+----------------+-------------+

| c1 | Decimal(10, 2) | NO |

+-------------+----------------+-------------+

select * from t1;

+------+

| c1 |

+------+

| 1.00 |

+------+

❯ describe t2;

+-------------+----------------+-------------+

| column_name | data_type | is_nullable |

+-------------+----------------+-------------+

| c1 | Decimal(10, 4) | NO |

+-------------+----------------+-------------+

select * from t2;

+--------+

| c1 |

+--------+

| 1.0000 |

+--------+

this query gets 0 rows

select * from t1 join t2 on t1.c1 = t2.c1;

0 rows in set. Query took 0.005 seconds.

but this query gets one row

select * from t1 join t2 on t1.c1::decimal(10,4) = t2.c1;

+------+--------+

| c1 | c1 |

+------+--------+

| 1.00 | 1.0000 |

+------+--------+

so I think the planner still not converted the data types between left and right(at least for decimal). perhaps we can add this to planner.

please tell me if I miss something? thanks!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

alamb commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1162271726

I'll try and take a look at this tomorrow. Sorry for the delay in review, but it is a bit of a crunch time at work (e.g. https://github.com/influxdata/influxdb_iox/issues/4658) so I don't have as much time to devote to things in arrow and datafusion that are not directly connected for the next few weeks.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] liukun4515 commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

liukun4515 commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r902242146

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

+ DataType::Decimal(_, rscale) => {

+ if lscale == rscale {

+ equal_rows_elem!(

+ DecimalArray,

+ l,

+ r,

+ left,

+ right,

+ null_equals_null

+ )

+ } else {

Review Comment:

And the left and right data type must be same, otherwise we need to throw error.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] liukun4515 commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

liukun4515 commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r902228171

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

From my knowledge in the datafusion, we have converted the left and right to the same data type in the planner.

The data type of left and right must be same.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

alamb commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r903011945

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

+ DataType::Decimal(_, rscale) => {

+ if lscale == rscale {

+ equal_rows_elem!(

+ DecimalArray,

+ l,

+ r,

+ left,

+ right,

+ null_equals_null

+ )

+ } else {

Review Comment:

> If the coerced type has been calculated, we don't need to check the data type between left and right.

I think it would be wise to check the data type between left and right (and throw an error if it does not match) even if the coercion is supposed to do that work. My rationale for the test is so that if anything ever goes wrong with the coercion we would get an error rather than incorrect results

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r901120957

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1079,102 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, _) => {

Review Comment:

I think the scale should be the same, precision is insignificant.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r902404072

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

I create two tables in datafusion

❯ describe t1;

+-------------+----------------+-------------+

| column_name | data_type | is_nullable |

+-------------+----------------+-------------+

| c1 | Decimal(10, 2) | NO |

+-------------+----------------+-------------+

select * from t1;

+------+

| c1 |

+------+

| 1.00 |

+------+

❯ describe t2;

+-------------+----------------+-------------+

| column_name | data_type | is_nullable |

+-------------+----------------+-------------+

| c1 | Decimal(10, 4) | NO |

+-------------+----------------+-------------+

select * from t2;

+--------+

| c1 |

+--------+

| 1.0000 |

+--------+

this query gets 0 rows

select * from t1 join t2 on t1.c1 = t2.c1;

0 rows in set. Query took 0.005 seconds.

but this query gets one row

select * from t1 join t2 on t1.c1::decimal(10,4) = t2.c1;

+------+--------+

| c1 | c1 |

+------+--------+

| 1.00 | 1.0000 |

+------+--------+

so I think the planner still not converted the data types between left and right(at least for decimal). perhaps we can add this to planner.

please let me know if I miss something? thanks!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1152872254

Hi @alamb , as discussed before, more common used data types, such as Date32/Date64/Decimal/Dictionary(integer/unsigned integer, Utf8) are supported in hash join.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] waynexia commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

waynexia commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1154902153

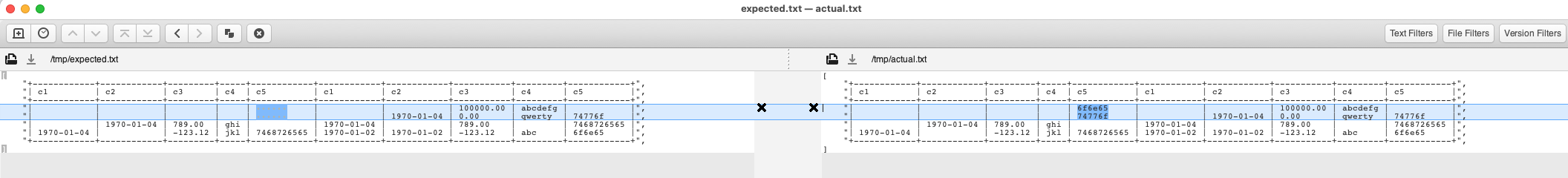

This seems to be a flaky case. I got a passing run today 😢

Here is my log:

<details>

<summary>Error log</summary>

```plaintext

running 1 test

start right join

0 == 0

0 == 0

thread 'sql::joins::join_with_hash_supported_data_type' panicked at 'assertion failed: `(left == right)`

left: `["+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+", "| c1 | c2 | c3 | c4 | c5 | c1 | c2 | c3 | c4 | c5 |", "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+", "| | | | | | | | 100000.00 | abcdefg | |", "| | | | | | | 1970-01-04 | 0.00 | qwerty | 74776f |", "| | 1970-01-04 | 789.00 | ghi | | 1970-01-04 | | 789.00 | | 7468726565 |", "| 1970-01-04 | | -123.12 | jkl | 7468726565 | 1970-01-02 | 1970-01-02 | -123.12 | abc | 6f6e65 |", "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+"]`,

right: `["+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+", "| c1 | c2 | c3 | c4 | c5 | c1 | c2 | c3 | c4 | c5 |", "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+", "| | | | | | | 1970-01-04 | 0.00 | qwerty | 74776f |", "| | | | | 7468726565 | | | 100000.00 | abcdefg | |", "| | 1970-01-04 | 789.00 | ghi | | 1970-01-04 | | 789.00 | | 7468726565 |", "| 1970-01-04 | | -123.12 | jkl | 7468726565 | 1970-01-02 | 1970-01-02 | -123.12 | abc | 6f6e65 |", "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+"]`:

expected:

[

"+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

"| c1 | c2 | c3 | c4 | c5 | c1 | c2 | c3 | c4 | c5 |",

"+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

"| | | | | | | | 100000.00 | abcdefg | |",

"| | | | | | | 1970-01-04 | 0.00 | qwerty | 74776f |",

"| | 1970-01-04 | 789.00 | ghi | | 1970-01-04 | | 789.00 | | 7468726565 |",

"| 1970-01-04 | | -123.12 | jkl | 7468726565 | 1970-01-02 | 1970-01-02 | -123.12 | abc | 6f6e65 |",

"+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

]

actual:

[

"+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

"| c1 | c2 | c3 | c4 | c5 | c1 | c2 | c3 | c4 | c5 |",

"+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

"| | | | | | | 1970-01-04 | 0.00 | qwerty | 74776f |",

"| | | | | 7468726565 | | | 100000.00 | abcdefg | |",

"| | 1970-01-04 | 789.00 | ghi | | 1970-01-04 | | 789.00 | | 7468726565 |",

"| 1970-01-04 | | -123.12 | jkl | 7468726565 | 1970-01-02 | 1970-01-02 | -123.12 | abc | 6f6e65 |",

"+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

]

', datafusion/core/tests/sql/joins.rs:1388:5

stack backtrace:

0: rust_begin_unwind

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/std/src/panicking.rs:577:5

1: core::panicking::panic_fmt

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/core/src/panicking.rs:135:14

2: core::panicking::assert_failed_inner

3: core::panicking::assert_failed

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/core/src/panicking.rs:174:5

4: sql_integration::sql::joins::join_with_hash_supported_data_type::{{closure}}

at ./tests/sql/joins.rs:1388:5

5: <core::future::from_generator::GenFuture<T> as core::future::future::Future>::poll

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/core/src/future/mod.rs:84:19

6: <core::pin::Pin<P> as core::future::future::Future>::poll

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/core/src/future/future.rs:123:9

7: tokio::runtime::basic_scheduler::CoreGuard::block_on::{{closure}}::{{closure}}::{{closure}}

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/basic_scheduler.rs:508:48

8: tokio::coop::with_budget::{{closure}}

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/coop.rs:102:9

9: std::thread::local::LocalKey<T>::try_with

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/std/src/thread/local.rs:413:16

10: std::thread::local::LocalKey<T>::with

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/std/src/thread/local.rs:389:9

11: tokio::coop::with_budget

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/coop.rs:95:5

12: tokio::coop::budget

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/coop.rs:72:5

13: tokio::runtime::basic_scheduler::CoreGuard::block_on::{{closure}}::{{closure}}

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/basic_scheduler.rs:508:25

14: tokio::runtime::basic_scheduler::Context::enter

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/basic_scheduler.rs:362:19

15: tokio::runtime::basic_scheduler::CoreGuard::block_on::{{closure}}

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/basic_scheduler.rs:507:36

16: tokio::runtime::basic_scheduler::CoreGuard::enter::{{closure}}

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/basic_scheduler.rs:565:57

17: tokio::macros::scoped_tls::ScopedKey<T>::set

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/macros/scoped_tls.rs:61:9

18: tokio::runtime::basic_scheduler::CoreGuard::enter

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/basic_scheduler.rs:565:27

19: tokio::runtime::basic_scheduler::CoreGuard::block_on

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/basic_scheduler.rs:498:9

20: tokio::runtime::basic_scheduler::BasicScheduler::block_on

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/basic_scheduler.rs:174:24

21: tokio::runtime::Runtime::block_on

at /home/ruihang/.cargo/registry/src/github.com-1ecc6299db9ec823/tokio-1.19.2/src/runtime/mod.rs:480:46

22: sql_integration::sql::joins::join_with_hash_supported_data_type

at ./tests/sql/joins.rs:1456:5

23: sql_integration::sql::joins::join_with_hash_supported_data_type::{{closure}}

at ./tests/sql/joins.rs:1209:7

24: core::ops::function::FnOnce::call_once

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/core/src/ops/function.rs:227:5

25: core::ops::function::FnOnce::call_once

at /rustc/51126be1b260216b41143469086e6e6ee567647e/library/core/src/ops/function.rs:227:5

note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.

test sql::joins::join_with_hash_supported_data_type ... FAILED

failures:

failures:

sql::joins::join_with_hash_supported_data_type

test result: FAILED. 0 passed; 1 failed; 0 ignored; 0 measured; 366 filtered out; finished in 0.39s

error: test failed, to rerun pass '-p datafusion --test sql_integration'

The terminal process "cargo 'test', '--package', 'datafusion', '--test', 'sql_integration', '--', 'sql::joins::join_with_hash_supported_data_type', '--exact', '--nocapture'" terminated with exit code: 101.

Terminal will be reused by tasks, press any key to close it.

```

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

alamb commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1159452860

@AssHero would you like some help with this PR? @waynexia points out that @andygrove proposes adding support for date32/date64 in https://github.com/apache/arrow-datafusion/pull/2746 which is already covered by this PR

I will try and review this PR tomorrow -- I am sorry I haven't done it until now: It looked like you and @waynexia were making good progress

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] waynexia commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

waynexia commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r901118189

##########

datafusion/core/tests/sql/joins.rs:

##########

@@ -1254,32 +1373,31 @@ async fn join_with_hash_unsupported_data_type() -> Result<()> {

);

let expected = vec![

- "+----+-----+-----+------------+----+-----+-----+------------+",

- "| c1 | c2 | c3 | c4 | c1 | c2 | c3 | c4 |",

- "+----+-----+-----+------------+----+-----+-----+------------+",

- "| 1 | aaa | 100 | 1970-01-02 | 1 | aaa | 100 | 1970-01-02 |",

- "| 2 | bbb | 200 | 1970-01-03 | 2 | bbb | 200 | 1970-01-03 |",

- "| 3 | ccc | 300 | 1970-01-04 | 3 | ccc | 300 | 1970-01-04 |",

- "+----+-----+-----+------------+----+-----+-----+------------+",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

+ "| c1 | c2 | c3 | c4 | c5 | c1 | c2 | c3 | c4 | c5 |",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

+ "| | | | | | | | 100000.00 | abcdefg | |",

+ "| | | | | | | 1970-01-04 | 0.00 | qwerty | 74776f |",

+ "| | 1970-01-04 | 789.00 | ghi | | 1970-01-04 | | 789.00 | | 7468726565 |",

+ "| 1970-01-04 | | -123.12 | jkl | 7468726565 | 1970-01-02 | 1970-01-02 | -123.12 | abc | 6f6e65 |",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

];

let results = execute_to_batches(&ctx, sql).await;

assert_batches_sorted_eq!(expected, &results);

Review Comment:

I agree. That wrong c5 is not actually a correct row in t1.

>and we then file ticket to chase down the real problem.

@AssHero cannot reproduce this might also be a clue. And I hope we can add c5 back in the next fixing PR.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r903699415

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

Review Comment:

>

yes, we should check the data types and make the coercion in the planner. I'll create an issue and follow up the pr later.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] liukun4515 commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

liukun4515 commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r902241633

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1110,116 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, lscale) => match r.data_type() {

+ DataType::Decimal(_, rscale) => {

+ if lscale == rscale {

+ equal_rows_elem!(

+ DecimalArray,

+ l,

+ r,

+ left,

+ right,

+ null_equals_null

+ )

+ } else {

Review Comment:

If the coerced type has been calculated, we don't need to check the data type between left and right.

Do you think so? @alamb @waynexia

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

alamb commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r901089436

##########

datafusion/expr/src/utils.rs:

##########

@@ -682,6 +682,24 @@ pub fn can_hash(data_type: &DataType) -> bool {

},

DataType::Utf8 => true,

DataType::LargeUtf8 => true,

+ DataType::Decimal(_, _) => true,

+ DataType::Date32 => true,

+ DataType::Date64 => true,

+ DataType::Dictionary(key_type, value_type)

+ if *value_type.as_ref() == DataType::Utf8 =>

+ {

+ matches!(

Review Comment:

minor comment: could potentially use `DataType::is_dictionary_key_type` here: https://docs.rs/arrow/16.0.0/arrow/datatypes/enum.DataType.html#method.is_dictionary_key_type

##########

datafusion/core/tests/sql/joins.rs:

##########

@@ -1206,29 +1206,83 @@ async fn join_partitioned() -> Result<()> {

}

#[tokio::test]

-async fn join_with_hash_unsupported_data_type() -> Result<()> {

+async fn join_with_hash_supported_data_type() -> Result<()> {

let ctx = SessionContext::new();

- let schema = Schema::new(vec![

- Field::new("c1", DataType::Int32, true),

- Field::new("c2", DataType::Utf8, true),

- Field::new("c3", DataType::Int64, true),

- Field::new("c4", DataType::Date32, true),

+ let t1_schema = Schema::new(vec![

+ Field::new("c1", DataType::Date32, true),

+ Field::new("c2", DataType::Date64, true),

+ Field::new("c3", DataType::Decimal(5, 2), true),

+ Field::new(

+ "c4",

+ DataType::Dictionary(Box::new(DataType::Int32), Box::new(DataType::Utf8)),

+ true,

+ ),

+ Field::new("c5", DataType::Binary, true),

]);

- let data = RecordBatch::try_new(

- Arc::new(schema),

+ let dict1: DictionaryArray<Int32Type> =

+ vec!["abc", "def", "ghi", "jkl"].into_iter().collect();

+ let binary_value1: Vec<&[u8]> = vec![b"one", b"two", b"", b"three"];

+ let t1_data = RecordBatch::try_new(

+ Arc::new(t1_schema),

+ vec![

+ Arc::new(Date32Array::from(vec![Some(1), Some(2), None, Some(3)])),

+ Arc::new(Date64Array::from(vec![

+ Some(86400000),

+ Some(172800000),

+ Some(259200000),

+ None,

+ ])),

+ Arc::new(

+ DecimalArray::from_iter_values([123, 45600, 78900, -12312])

+ .with_precision_and_scale(5, 2)

+ .unwrap(),

+ ),

+ Arc::new(dict1),

+ Arc::new(BinaryArray::from_vec(binary_value1)),

+ ],

+ )?;

+ let table = MemTable::try_new(t1_data.schema(), vec![vec![t1_data]])?;

+ ctx.register_table("t1", Arc::new(table))?;

+

+ let t2_schema = Schema::new(vec![

+ Field::new("c1", DataType::Date32, true),

+ Field::new("c2", DataType::Date64, true),

+ Field::new("c3", DataType::Decimal(10, 2), true),

+ Field::new(

+ "c4",

+ DataType::Dictionary(Box::new(DataType::Int32), Box::new(DataType::Utf8)),

+ true,

+ ),

+ Field::new("c5", DataType::Binary, true),

+ ]);

+ let dict2: DictionaryArray<Int32Type> =

+ vec!["abc", "abcdefg", "qwerty", ""].into_iter().collect();

+ let binary_value2: Vec<&[u8]> = vec![b"one", b"", b"two", b"three"];

+ let t2_data = RecordBatch::try_new(

+ Arc::new(t2_schema),

vec![

- Arc::new(Int32Array::from_slice(&[1, 2, 3])),

- Arc::new(StringArray::from_slice(&["aaa", "bbb", "ccc"])),

- Arc::new(Int64Array::from_slice(&[100, 200, 300])),

- Arc::new(Date32Array::from(vec![Some(1), Some(2), Some(3)])),

+ Arc::new(Date32Array::from(vec![Some(1), None, None, Some(3)])),

+ Arc::new(Date64Array::from(vec![

+ Some(86400000),

+ None,

+ Some(259200000),

+ None,

+ ])),

+ Arc::new(

+ DecimalArray::from_iter_values([-12312, 10000000, 0, 78900])

+ .with_precision_and_scale(10, 2)

+ .unwrap(),

+ ),

+ Arc::new(dict2),

+ Arc::new(BinaryArray::from_vec(binary_value2)),

],

)?;

- let table = MemTable::try_new(data.schema(), vec![vec![data]])?;

- ctx.register_table("foo", Arc::new(table))?;

+ let table = MemTable::try_new(t2_data.schema(), vec![vec![t2_data]])?;

+ ctx.register_table("t2", Arc::new(table))?;

- // join on hash unsupported data type (Date32), use cross join instead hash join

- let sql = "select * from foo t1 join foo t2 on t1.c4 = t2.c4";

+ // inner join on hash supported data type (Date32)

Review Comment:

As a stylistic thing, I find it easier to debug / work with tests if there are fewer queries in the individual test.

So in this case, perhaps something like

```rust

#[test]

fn hash_join_date32() {

let ctx = make_test_hash_join_ctx();

let sql = "select * from t1 join t2 on t1.c1 = t2.c1";

let msg = format!("Creating logical plan for '{}'", sql);

let plan = ctx

.create_logical_plan(&("explain ".to_owned() + sql))

.expect(&msg);

let state = ctx.state();

let plan = state.optimize(&plan)?;

let expected = vec![

"Explain [plan_type:Utf8, plan:Utf8]",

" Projection: #t1.c1, #t1.c2, #t1.c3, #t1.c4, #t1.c5, #t2.c1, #t2.c2, #t2.c3, #t2.c4, #t2.c5 [c1:Date32;N, c2:Date64;N, c3:Decimal(5, 2);N, c4:Dictionary(Int32, Utf8);N, c5:Binary;N, c1:Date32;N, c2:Date64;N, c3:Decimal(10, 2);N, c4:Dictionary(Int32, Utf8);N, c5:Binary;N]",

" Inner Join: #t1.c1 = #t2.c1 [c1:Date32;N, c2:Date64;N, c3:Decimal(5, 2);N, c4:Dictionary(Int32, Utf8);N, c5:Binary;N, c1:Date32;N, c2:Date64;N, c3:Decimal(10, 2);N, c4:Dictionary(Int32, Utf8);N, c5:Binary;N]",

" TableScan: t1 projection=Some([c1, c2, c3, c4, c5]) [c1:Date32;N, c2:Date64;N, c3:Decimal(5, 2);N, c4:Dictionary(Int32, Utf8);N, c5:Binary;N]",

" TableScan: t2 projection=Some([c1, c2, c3, c4, c5]) [c1:Date32;N, c2:Date64;N, c3:Decimal(10, 2);N, c4:Dictionary(Int32, Utf8);N, c5:Binary;N]",

];

let formatted = plan.display_indent_schema().to_string();

let actual: Vec<&str> = formatted.trim().lines().collect();

assert_eq!(

expected, actual,

"\n\nexpected:\n\n{:#?}\nactual:\n\n{:#?}\n\n",

expected, actual

);

let expected = vec![

"+------------+------------+---------+-----+------------+------------+------------+---------+-----+------------+",

"| c1 | c2 | c3 | c4 | c5 | c1 | c2 | c3 | c4 | c5 |",

"+------------+------------+---------+-----+------------+------------+------------+---------+-----+------------+",

"| 1970-01-02 | 1970-01-02 | 1.23 | abc | 6f6e65 | 1970-01-02 | 1970-01-02 | -123.12 | abc | 6f6e65 |",

"| 1970-01-04 | | -123.12 | jkl | 7468726565 | 1970-01-04 | | 789.00 | | 7468726565 |",

"+------------+------------+---------+-----+------------+------------+------------+---------+-----+------------+",

];

let results = execute_to_batches(&ctx, sql).await;

assert_batches_sorted_eq!(expected, &results);

```

(that way you can get multiple expected tests with one `cargo test` run rather than having to run it several times)

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -1054,6 +1079,102 @@ fn equal_rows(

DataType::LargeUtf8 => {

equal_rows_elem!(LargeStringArray, l, r, left, right, null_equals_null)

}

+ DataType::Decimal(_, _) => {

Review Comment:

For decimal, I wonder if we also need to ensure that the precision and scale are the same (e.g. `l.data_type() == r.data_type()`) 🤔

##########

datafusion/core/tests/sql/joins.rs:

##########

@@ -1254,32 +1373,31 @@ async fn join_with_hash_unsupported_data_type() -> Result<()> {

);

let expected = vec![

- "+----+-----+-----+------------+----+-----+-----+------------+",

- "| c1 | c2 | c3 | c4 | c1 | c2 | c3 | c4 |",

- "+----+-----+-----+------------+----+-----+-----+------------+",

- "| 1 | aaa | 100 | 1970-01-02 | 1 | aaa | 100 | 1970-01-02 |",

- "| 2 | bbb | 200 | 1970-01-03 | 2 | bbb | 200 | 1970-01-03 |",

- "| 3 | ccc | 300 | 1970-01-04 | 3 | ccc | 300 | 1970-01-04 |",

- "+----+-----+-----+------------+----+-----+-----+------------+",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

+ "| c1 | c2 | c3 | c4 | c5 | c1 | c2 | c3 | c4 | c5 |",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

+ "| | | | | | | | 100000.00 | abcdefg | |",

+ "| | | | | | | 1970-01-04 | 0.00 | qwerty | 74776f |",

+ "| | 1970-01-04 | 789.00 | ghi | | 1970-01-04 | | 789.00 | | 7468726565 |",

+ "| 1970-01-04 | | -123.12 | jkl | 7468726565 | 1970-01-02 | 1970-01-02 | -123.12 | abc | 6f6e65 |",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

];

let results = execute_to_batches(&ctx, sql).await;

assert_batches_sorted_eq!(expected, &results);

Review Comment:

This test is failing on CI: https://github.com/apache/arrow-datafusion/runs/6953453815?check_suite_focus=true

Looking at the diff it appears to be related to column c5:

And thus it seems unrelated to this PR (though a real bug). Thus I personally suggest you change the query to not select `c5` and we then file ticket to chase down the real problem.

##########

datafusion/core/src/physical_plan/hash_join.rs:

##########

@@ -947,6 +951,27 @@ macro_rules! equal_rows_elem {

}};

}

+macro_rules! equal_rows_elem_with_string_dict {

+ ($key_array_type:ident, $l: ident, $r: ident, $left: ident, $right: ident, $null_equals_null: ident) => {{

Review Comment:

For dictionaries, I think `$left` and `$right` are actually indexes into the *keys* array, and then the keys array contains the corresponding index into `values`.

```text

┌ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─

┌─────────────────┐ ┌─────────┐ │ ┌─────────────────┐

│ │ A │ │ 0 │ │ A │ values[keys[0]]

├─────────────────┤ ├─────────┤ │ ├─────────────────┤

│ │ D │ │ 2 │ │ B │ values[keys[1]]

├─────────────────┤ ├─────────┤ │ ├─────────────────┤

│ │ B │ │ 2 │ │ B │ values[keys[2]]

├─────────────────┤ ├─────────┤ │ ├─────────────────┤

│ │ C │ │ 1 │ │ D │ values[keys[3]]

├─────────────────┤ └─────────┘ │ └─────────────────┘

│ │ E │ keys

└─────────────────┘ │

│ values Logical array

─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ─ ┘ Contents

DictionaryArray

length = 4

```

In other words, I think you need to compare the values using something like:

https://github.com/AssHero/arrow-datafusion/blob/hashjoin/datafusion/common/src/scalar.rs#L338-L361

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on a diff in pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on code in PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#discussion_r901123756

##########

datafusion/core/tests/sql/joins.rs:

##########

@@ -1254,32 +1373,31 @@ async fn join_with_hash_unsupported_data_type() -> Result<()> {

);

let expected = vec![

- "+----+-----+-----+------------+----+-----+-----+------------+",

- "| c1 | c2 | c3 | c4 | c1 | c2 | c3 | c4 |",

- "+----+-----+-----+------------+----+-----+-----+------------+",

- "| 1 | aaa | 100 | 1970-01-02 | 1 | aaa | 100 | 1970-01-02 |",

- "| 2 | bbb | 200 | 1970-01-03 | 2 | bbb | 200 | 1970-01-03 |",

- "| 3 | ccc | 300 | 1970-01-04 | 3 | ccc | 300 | 1970-01-04 |",

- "+----+-----+-----+------------+----+-----+-----+------------+",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

+ "| c1 | c2 | c3 | c4 | c5 | c1 | c2 | c3 | c4 | c5 |",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

+ "| | | | | | | | 100000.00 | abcdefg | |",

+ "| | | | | | | 1970-01-04 | 0.00 | qwerty | 74776f |",

+ "| | 1970-01-04 | 789.00 | ghi | | 1970-01-04 | | 789.00 | | 7468726565 |",

+ "| 1970-01-04 | | -123.12 | jkl | 7468726565 | 1970-01-02 | 1970-01-02 | -123.12 | abc | 6f6e65 |",

+ "+------------+------------+---------+-----+------------+------------+------------+-----------+---------+------------+",

];

let results = execute_to_batches(&ctx, sql).await;

assert_batches_sorted_eq!(expected, &results);

Review Comment:

> https://github.com/apache/arrow-datafusion/runs/6953453815?check_suite_focus=tr

Thanks! Let me fix the test case.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1159783732

I have refined the code according to the comments. Please review the code and give me your suggestions. @waynexia @alamb Thanks!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1154946116

> Cannot reproduce the success and I start to doubt whether that passing run is the correct test case...

the left side should be null if not matched for right join, this

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] AssHero commented on pull request #2721: more data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

AssHero commented on PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721#issuecomment-1154648956

> Please let me know if I can provide some info you need🧐

Do you have any ideas about the test failure? I can not reproduce that case.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb merged pull request #2721: Add additional data types are supported in hash join

Posted by GitBox <gi...@apache.org>.

alamb merged PR #2721:

URL: https://github.com/apache/arrow-datafusion/pull/2721

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org