Posted to commits@tvm.apache.org by GitBox <gi...@apache.org> on 2022/06/20 08:51:02 UTC

[GitHub] [tvm] TaylorHere opened a new issue, #11783: [Bug] Expand v8 Cannot use and / or / not operator to Expr

TaylorHere opened a new issue, #11783:

URL: https://github.com/apache/tvm/issues/11783

### Expected behavior

Compiling an ONNX model that contains an `Expand` op should succeed.

### Actual behavior

```shell
File ~/src/tvm/python/tvm/relay/frontend/onnx.py:2238, in Expand._impl_v8(cls, inputs, attr, params)
   2235     new_shape = _op.maximum(in_shape, shape)
   2236     return new_shape
-> 2238 shape = fold_constant(expand_shape(in_shape, shape))
   2239 return _op.broadcast_to(inputs[0], shape=shape)

File ~/src/tvm/python/tvm/relay/frontend/onnx.py:2207, in Expand._impl_v8.<locals>.expand_shape(in_shape, shape)
   2204 in_dims = infer_shape(in_shape)[0]
   2205 new_dims = infer_shape(shape)[0]
-> 2207 if in_dims < new_dims:
   2208     in_shape = _op.concatenate(
   2209         [
   2210             _expr.const(
   (...)
   2219         axis=0,
   2220     )
   2221 elif new_dims > in_dims:

File ~/src/tvm/python/tvm/tir/expr.py:185, in ExprOp.__bool__(self)
    184 def __bool__(self):
--> 185     return self.__nonzero__()

File ~/src/tvm/python/tvm/tir/expr.py:179, in ExprOp.__nonzero__(self)
    178 def __nonzero__(self):
--> 179     raise ValueError(
    180         "Cannot use and / or / not operator to Expr, hint: "
    181         + "use tvm.tir.all / tvm.tir.any instead"
    182     )

ValueError: Cannot use and / or / not operator to Expr, hint: use tvm.tir.all / tvm.tir.any instead
```
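The last two frames show the mechanism: `if in_dims < new_dims:` asks Python for the truth value of the comparison result, and the expression object refuses to provide one. A minimal pure-Python sketch of that behaviour follows. `SymbolicDim`, `SymbolicLess`, and `compare_ranks` are hypothetical stand-ins written for this illustration; they are not TVM classes.

```python
class SymbolicLess:
    """Hypothetical stand-in for a symbolic comparison expression."""

    def __init__(self, lhs, rhs):
        self.lhs, self.rhs = lhs, rhs

    def __bool__(self):
        # A symbolic comparison has no truth value until it is evaluated.
        raise ValueError("Cannot use and / or / not operator to Expr")


class SymbolicDim:
    """Hypothetical stand-in for a dimension whose extent is unknown."""

    def __lt__(self, other):
        return SymbolicLess(self, other)

    def __gt__(self, other):
        # Reached for `int < SymbolicDim` via Python's reflected comparison.
        return SymbolicLess(other, self)


def compare_ranks(in_dims, new_dims):
    """Mirror the `if in_dims < new_dims:` branch from the traceback."""
    if in_dims < new_dims:  # implicit bool() on the comparison result
        return "pad in_shape"
    return "keep in_shape"
```

With two plain integers `compare_ranks` returns normally; passing a `SymbolicDim()` as either argument raises the `ValueError` as soon as the `if` statement is evaluated.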

### Environment

Any environment details, such as: Operating System, TVM version, etc

```
taylor@x1
---------
OS: Ubuntu 22.04 LTS x86_64
Host: 21CBA002CD ThinkPad X1 Carbon Gen 10
Kernel: 5.15.0-39-generic
Uptime: 1 day, 2 hours, 29 mins
Packages: 2178 (dpkg), 10 (flatpak), 19 (snap)
Shell: zsh 5.8.1
Resolution: 2240x1400
DE: GNOME 42.1
WM: Mutter
WM Theme: Adwaita
Theme: Yaru-dark [GTK2/3]
Icons: Yaru [GTK2/3]
Terminal: gnome-terminal
CPU: 12th Gen Intel i5-1240P (16) @ 4.400GHz
GPU: Intel Alder Lake-P
Memory: 5570MiB / 15701MiB
```

TVM version: built from source at tag `v0.8.0` with `intel-mkl`, `llvm`, and `openmp`.

### Steps to reproduce

Preferably a minimal script to cause the issue to occur.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@tvm.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [tvm] TaylorHere closed issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

TaylorHere closed issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

URL: https://github.com/apache/tvm/issues/11783

[GitHub] [tvm] TaylorHere commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

TaylorHere commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1163402246

```python
import numpy as np
import onnx
from tvm.driver import tvmc


def create_initializer_tensor(
    name: str,
    tensor_array: np.ndarray,
    data_type: onnx.TensorProto = onnx.TensorProto.FLOAT,
) -> onnx.TensorProto:
    return onnx.helper.make_tensor(
        name=name,
        data_type=data_type,
        dims=tensor_array.shape,
        vals=tensor_array.flatten().tolist(),
    )


# ConstantOfShape: produces the tensor that Expand consumes as its `shape` input
input_4001_np = np.ones(shape=(1,)).astype(np.int64)
input_4001 = create_initializer_tensor(
    name="input_4001", tensor_array=input_4001_np, data_type=onnx.TensorProto.INT64
)
attr_value = onnx.helper.make_tensor("input_value", onnx.TensorProto.INT64, [1], [1])
output_892 = onnx.helper.make_tensor_value_info(
    "output_892", onnx.TensorProto.INT64, [1]
)
ConstantOfShape_704 = onnx.helper.make_node(
    name="ConstantOfShape_704",
    op_type="ConstantOfShape",
    inputs=["input_4001"],
    outputs=["output_892"],
    value=attr_value,
)

# Expand: broadcasts the (3, 2) data tensor to the shape given by output_892
input_4000_np = np.ones(shape=(3, 2)).astype(np.int64)
input_4000 = create_initializer_tensor(
    name="input_4000", tensor_array=input_4000_np, data_type=onnx.TensorProto.INT64
)
output_893 = onnx.helper.make_tensor_value_info(
    "output_893", onnx.TensorProto.INT64, [3, 2]
)
Expand_705 = onnx.helper.make_node(
    name="Expand_705",
    op_type="Expand",
    inputs=["input_4000", "output_892"],
    outputs=["output_893"],
)

# Create the graph (GraphProto)
graph_def = onnx.helper.make_graph(
    nodes=[ConstantOfShape_704, Expand_705],
    name="constantOfShape->Expand",
    inputs=[],
    outputs=[output_893],
    initializer=[input_4001, input_4000],
)

# Create the model (ModelProto)
model_def = onnx.helper.make_model(graph_def, producer_name="onnx-example")
model_def.opset_import[0].version = 13
model_def = onnx.shape_inference.infer_shapes(model_def)
onnx.checker.check_model(model_def)
onnx.save(model_def, "convnet.onnx")

# Load and compile the saved model with TVMC
mod = tvmc.load('convnet.onnx')
tvmc.compile(mod, 'llvm')
```

[GitHub] [tvm] ganler commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

ganler commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1164147654

Would you mind sending the model to my email, if it is not confidential? I can help take a closer look. :-) @TaylorHere

[GitHub] [tvm] TaylorHere commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when infer_type

Posted by GitBox <gi...@apache.org>.

TaylorHere commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1160605346

Still digging into this; I'm a newbie to the source code. I hacked some debugging code into `Expand._impl_v8` and found that `input[0]` (the `shape` arg in ONNX, I think?) can be evaluated with `infer_value` to `[1 1 1 1 1]`, yet its `shape` field returns an `Any` Expr.

I'm confused right now; can anybody give me a hint?

[GitHub] [tvm] TaylorHere commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

TaylorHere commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1164043203

Hi, `9bba758` works for me as well; I was working on `v0.8.0`.

However, `9bba758` raises new errors for my model:

```shell
Incompatible broadcast type TensorType([1, 3, 56, 120, 2], float32) and TensorType([3, 2], float32)
The type inference pass was unable to infer a type for this expression.
This usually occurs when an operator call is under constrained in some way, check other reported errors for hints of what may of happened.
```
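The two shapes in this message cannot be broadcast together under the usual NumPy-style rule, in which shapes are right-aligned and each pair of extents must either match or contain a 1. The sketch below assumes the type checker applies that rule here; `broadcast_shape` is a hypothetical helper written for illustration, not a TVM function.

```python
from itertools import zip_longest


def broadcast_shape(a, b):
    """Return the broadcast of two shapes, or raise ValueError if incompatible."""
    result = []
    # Walk both shapes from the trailing dimension; missing dims count as 1.
    for x, y in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if x != y and x != 1 and y != 1:
            raise ValueError(f"Incompatible extents {x} and {y}")
        # When one side is 1, the other side's extent wins.
        result.append(x if y == 1 else y)
    return tuple(reversed(result))


try:
    broadcast_shape([1, 3, 56, 120, 2], [3, 2])
except ValueError as err:
    # The second-to-last extents (120 and 3) neither match nor contain a 1.
    print(err)
```

The trailing extents agree (2 and 2), but the next pair does not, which is consistent with the `[1, 3, 56, 120, 2]` versus `[3, 2]` complaint above.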

[GitHub] [tvm] ganler commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

ganler commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1161249211

Hi @TaylorHere, welcome to TVM; I am happy to help with that. To pin down the issue you hit, can you attach a (minimized) model or a simple code snippet that reproduces it? Thanks!

[GitHub] [tvm] ganler commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

ganler commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1164960100

@TaylorHere Thanks for sharing the model. However, the model itself is invalid: it does not pass the ONNX full check:

```python
import onnx

# `args.model` holds the path to the ONNX file (assumed to come from the
# script's command-line arguments, which are not shown here).
onnx_model = onnx.load(args.model)
onnx.checker.check_model(onnx_model, full_check=True)
```

The model is invalid because its input `0` has no type information. Since you exported the model from PyTorch, I suggest you open an issue there to see what's going on. In the meantime, you might want to export the model with PyTorch nightly, as they are continuously improving ONNX support.

[GitHub] [tvm] TaylorHere commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

TaylorHere commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1165437514

Hi @ganler, thanks for your help. You are right, my model is invalid; exporting with PyTorch nightly works for me.

[GitHub] [tvm] TaylorHere commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

TaylorHere commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1163274687

The ONNX file is too big to upload, so I'm trying to build the `constantOfShape` -> `Expand` structure with `onnx.helper`.

The model passes `onnx.checker`.

[GitHub] [tvm] ganler commented on issue #11783: [Bug] Expand Op v8 with ConstantOfShape as shape input will failed when because of infer_shape's output

Posted by GitBox <gi...@apache.org>.

ganler commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1163576581

Hi @TaylorHere, the script you provided actually ran successfully for me.

<img width="597" alt="image" src="https://user-images.githubusercontent.com/38074777/175131080-99a0dd0a-bfc6-4c2c-90cb-4b395592f2a6.png">

The TVM commit used: 9bba7580b0dcaea4963bd6b35df0bf6bf867b8ff

Can you confirm whether you can run this script without errors, or do I need more steps to reproduce the bug? Thanks!

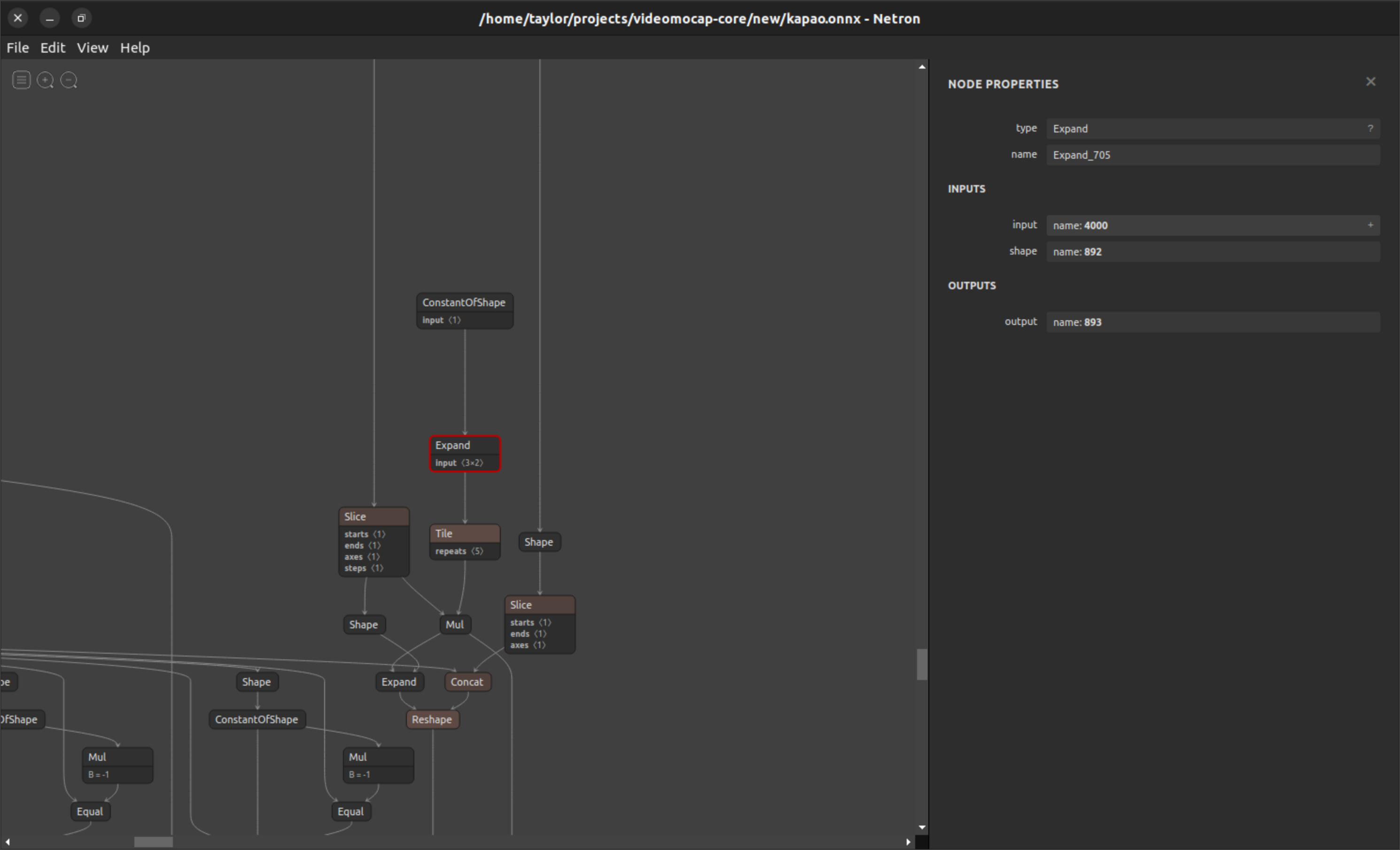

[GitHub] [tvm] TaylorHere commented on issue #11783: [Bug] Expand v8 Cannot use and / or / not operator to Expr

Posted by GitBox <gi...@apache.org>.

TaylorHere commented on issue #11783:

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1160296853

the op in onnx

[GitHub] [tvm] TaylorHere commented on issue #11783: [Bug] Expand v8 Cannot use and / or / not operator to Expr

Posted by GitBox <gi...@apache.org>.

TaylorHere commented on issue #11783:

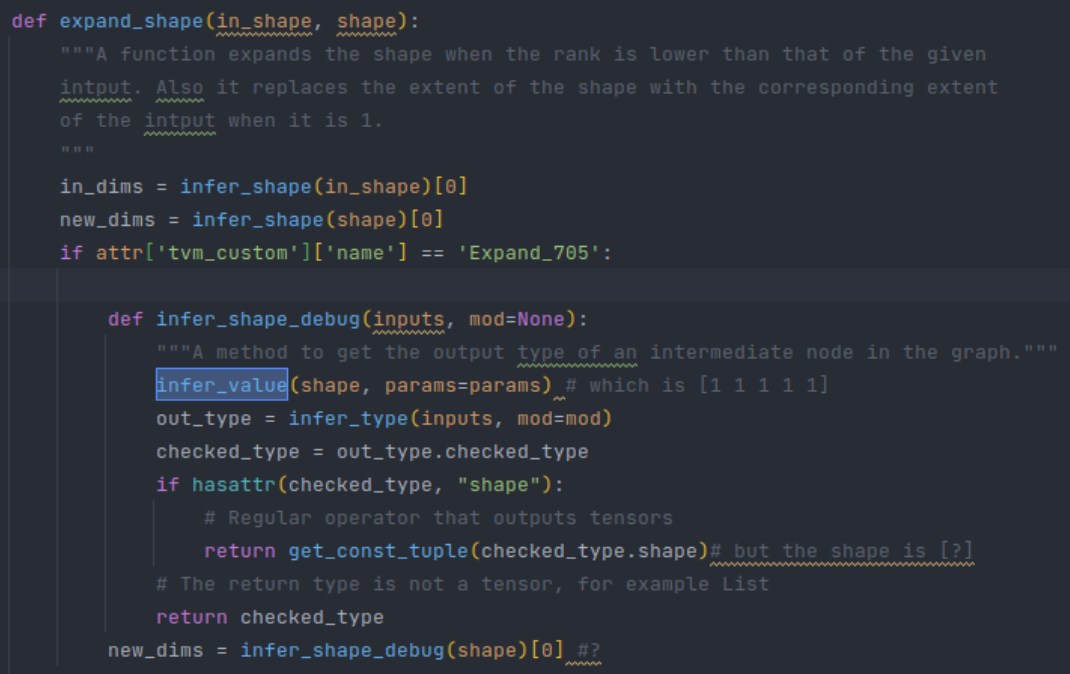

URL: https://github.com/apache/tvm/issues/11783#issuecomment-1160243903

I found out what is wrong here.

```python
def expand_shape(in_shape, shape):
    """A function expands the shape when the rank is lower than that of the given
    input. Also it replaces the extent of the shape with the corresponding extent
    of the input when it is 1.
    """
    in_dims = infer_shape(in_shape)[0]  # <class 'int'>
    new_dims = infer_shape(shape)[0]  # <class 'tvm.tir.expr.Any'>
```
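Because `new_dims` can be a symbolic `Any` rather than an `int`, one defensive pattern is to test for concrete integers before comparing ranks and to take a separate path otherwise. The sketch below uses plain Python objects; `UnknownDim` and `choose_path` are hypothetical names for illustration and do not reflect how this was handled in TVM.

```python
class UnknownDim:
    """Hypothetical placeholder for an extent that is not known statically."""


def choose_path(in_dims, new_dims):
    """Compare ranks only when both are concrete integers."""
    if not (isinstance(in_dims, int) and isinstance(new_dims, int)):
        # At least one rank is symbolic: defer the decision to runtime.
        return "dynamic"
    if in_dims < new_dims:
        return "pad in_shape"
    if new_dims < in_dims:
        return "pad shape"
    return "same rank"
```

The `isinstance` check runs before any `<` comparison, so a symbolic rank is never asked for a truth value.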
