
Posted to common-issues@hadoop.apache.org by GitBox <gi...@apache.org> on 2022/02/05 02:40:44 UTC

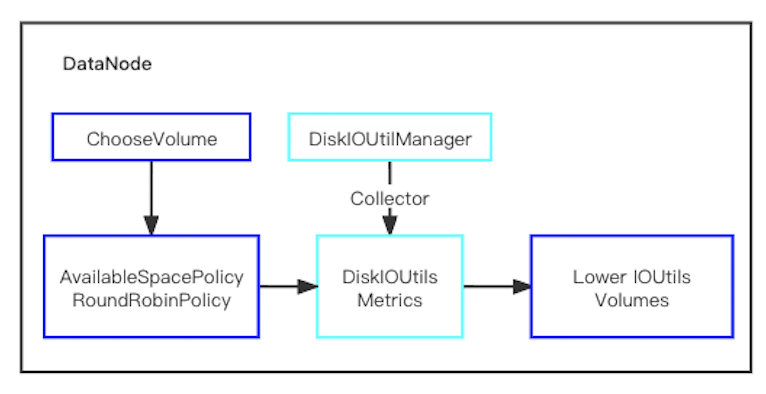

[GitHub] [hadoop] tomscut opened a new pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

tomscut opened a new pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960

JIRA: [HDFS-16446](https://issues.apache.org/jira/browse/HDFS-16446).

Consider ioutils of disk when choosing volume.

Principle is as follows:
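For background on the metric named in the title: on Linux, ioutil usually means the iostat `%util` figure, the share of wall-clock time during which the device had at least one I/O in flight. It can be derived from the "milliseconds spent doing I/Os" counter (io_ticks) in `/proc/diskstats`, which is the 13th column after the major number, minor number and device name. The Java sketch below shows that calculation from two samples. The class and method names are hypothetical, the sample lines are made up, and this is not taken from the PR, which may gather the statistic differently.

```java
/**
 * Hypothetical helper, not code from PR #3960: iostat-style %util from two
 * samples of the Linux /proc/diskstats "milliseconds spent doing I/Os"
 * counter (io_ticks).
 */
public final class IoUtilSketch {
  private IoUtilSketch() {}

  /** Returns io_ticks (ms) from one /proc/diskstats line: the 13th column. */
  public static long ioTicksMs(String diskstatsLine) {
    String[] cols = diskstatsLine.trim().split("\\s+");
    if (cols.length < 13) {
      throw new IllegalArgumentException("unexpected diskstats line: " + diskstatsLine);
    }
    return Long.parseLong(cols[12]);
  }

  /**
   * Percentage of the sampling interval during which the device had at
   * least one I/O in flight, clamped to [0, 100]. The clamp also keeps a
   * counter wrap-around from producing a negative value.
   */
  public static double ioUtilPercent(long ticksBeforeMs, long ticksAfterMs, long intervalMs) {
    if (intervalMs <= 0) {
      throw new IllegalArgumentException("intervalMs must be positive");
    }
    double util = 100.0 * (ticksAfterMs - ticksBeforeMs) / intervalMs;
    return Math.max(0.0, Math.min(100.0, util));
  }

  public static void main(String[] args) {
    // Two made-up samples of the same device, taken 1000 ms apart.
    String before = "   8       0 sda 1000 0 8000 500 2000 0 16000 900 0 1200 1400";
    String after = "   8       0 sda 1100 0 8800 550 2300 0 18400 1000 0 1800 1550";
    System.out.println(ioUtilPercent(ioTicksMs(before), ioTicksMs(after), 1000));  // 60.0
  }
}
```

Because io_ticks only records whether any request was outstanding, a value near 100% on an SSD or RAID volume that serves requests in parallel does not necessarily mean the device is saturated; it is best read as a relative busyness signal.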

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscribe@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: common-issues-unsubscribe@hadoop.apache.org

For additional commands, e-mail: common-issues-help@hadoop.apache.org

[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1083649851

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 55s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 17s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 26m 59s | | trunk passed |
| +1 :green_heart: | compile | 21m 22s | | trunk passed |
| +1 :green_heart: | checkstyle | 4m 2s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 50s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 58s | | trunk passed |
| +0 :ok: | spotbugs | 0m 33s | | branch/hadoop-project no spotbugs output file (spotbugsXml.xml) |
| +1 :green_heart: | shadedclient | 26m 9s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 33s | | the patch passed |
| +1 :green_heart: | compile | 20m 32s | | the patch passed |
| -1 :x: | cc | 20m 32s | [/results-compile-cc-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/9/artifact/out/results-compile-cc-root.txt) | root generated 22 new + 185 unchanged - 20 fixed = 207 total (was 205) |
| +1 :green_heart: | golang | 20m 32s | | the patch passed |
| +1 :green_heart: | javac | 20m 32s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 3m 54s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/9/artifact/out/results-checkstyle-root.txt) | root: The patch generated 1 new + 516 unchanged - 0 fixed = 517 total (was 516) |
| +1 :green_heart: | mvnsite | 3m 49s | | the patch passed |
| +1 :green_heart: | xml | 0m 2s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 3m 55s | | the patch passed |
| +0 :ok: | spotbugs | 0m 30s | | hadoop-project has no data from spotbugs |
| +1 :green_heart: | shadedclient | 26m 5s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 0m 28s | | hadoop-project in the patch passed. |
| -1 :x: | unit | 17m 51s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/9/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |
| -1 :x: | unit | 352m 32s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/9/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 0s | | The patch does not generate ASF License warnings. |
| | | 543m 40s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.service.launcher.TestServiceInterruptHandling |
| | hadoop.crypto.TestCryptoCodec |
| | hadoop.crypto.TestCryptoStreamsWithOpensslSm4CtrCryptoCodec |
| | hadoop.hdfs.server.datanode.TestDataXceiverBackwardsCompat |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/9/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3960 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell cc golang xml |
| uname | Linux 0a731bb5fd2a 4.15.0-162-generic #170-Ubuntu SMP Mon Oct 18 11:38:05 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 512a5ba75cde11a93fa66e3b4d40e086803414cc |
| Default Java | Red Hat, Inc.-1.8.0_322-b06 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/9/testReport/ |
| Max. process+thread count | 2394 (vs. ulimit of 5500) |
| modules | C: hadoop-project hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/9/console |
| versions | git=2.9.5 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1077047322

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 0s | | Docker mode activated. |
| -1 :x: | docker | 19m 36s | | Docker failed to build yetus/hadoop:13467f45240. |

| Subsystem | Report/Notes |
|----------:|:-------------|
| GITHUB PR | https://github.com/apache/hadoop/pull/3960 |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/3/console |
| versions | git=2.17.1 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1080389063

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 55s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 19s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 26m 44s | | trunk passed |
| +1 :green_heart: | compile | 21m 19s | | trunk passed |
| +1 :green_heart: | checkstyle | 4m 0s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 51s | | trunk passed |
| +1 :green_heart: | javadoc | 4m 2s | | trunk passed |
| +0 :ok: | spotbugs | 0m 32s | | branch/hadoop-project no spotbugs output file (spotbugsXml.xml) |
| +1 :green_heart: | shadedclient | 25m 58s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 35s | | the patch passed |
| +1 :green_heart: | compile | 20m 33s | | the patch passed |
| -1 :x: | cc | 20m 33s | [/results-compile-cc-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/8/artifact/out/results-compile-cc-root.txt) | root generated 20 new + 187 unchanged - 18 fixed = 207 total (was 205) |
| +1 :green_heart: | golang | 20m 33s | | the patch passed |
| +1 :green_heart: | javac | 20m 33s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 3m 54s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/8/artifact/out/results-checkstyle-root.txt) | root: The patch generated 1 new + 516 unchanged - 0 fixed = 517 total (was 516) |
| +1 :green_heart: | mvnsite | 3m 50s | | the patch passed |
| +1 :green_heart: | xml | 0m 3s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 3m 49s | | the patch passed |
| +0 :ok: | spotbugs | 0m 30s | | hadoop-project has no data from spotbugs |
| +1 :green_heart: | shadedclient | 26m 25s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 0m 29s | | hadoop-project in the patch passed. |
| -1 :x: | unit | 17m 53s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/8/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |
| -1 :x: | unit | 337m 26s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/8/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 2s | | The patch does not generate ASF License warnings. |
| | | 528m 12s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.service.launcher.TestServiceInterruptHandling |
| | hadoop.crypto.TestCryptoCodec |
| | hadoop.crypto.TestCryptoStreamsWithOpensslSm4CtrCryptoCodec |
| | hadoop.tools.TestHdfsConfigFields |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/8/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3960 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell cc golang xml |
| uname | Linux c600376df19b 4.15.0-162-generic #170-Ubuntu SMP Mon Oct 18 11:38:05 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / c7882a443a8e7c002a2489d980602f4da36cf310 |
| Default Java | Red Hat, Inc.-1.8.0_322-b06 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/8/testReport/ |
| Max. process+thread count | 3137 (vs. ulimit of 5500) |
| modules | C: hadoop-project hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/8/console |
| versions | git=2.9.5 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1079982869

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 54s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 1s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 13s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 27m 6s | | trunk passed |
| +1 :green_heart: | compile | 21m 20s | | trunk passed |
| +1 :green_heart: | checkstyle | 3m 56s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 50s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 56s | | trunk passed |
| +0 :ok: | spotbugs | 0m 32s | | branch/hadoop-project no spotbugs output file (spotbugsXml.xml) |
| +1 :green_heart: | shadedclient | 26m 18s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 36s | | the patch passed |
| +1 :green_heart: | compile | 20m 36s | | the patch passed |
| -1 :x: | cc | 20m 36s | [/results-compile-cc-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/artifact/out/results-compile-cc-root.txt) | root generated 6 new + 201 unchanged - 4 fixed = 207 total (was 205) |
| +1 :green_heart: | golang | 20m 36s | | the patch passed |
| +1 :green_heart: | javac | 20m 36s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 3m 56s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/artifact/out/results-checkstyle-root.txt) | root: The patch generated 1 new + 516 unchanged - 0 fixed = 517 total (was 516) |
| +1 :green_heart: | mvnsite | 3m 48s | | the patch passed |
| +1 :green_heart: | xml | 0m 2s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 3m 58s | | the patch passed |
| +0 :ok: | spotbugs | 0m 29s | | hadoop-project has no data from spotbugs |
| -1 :x: | spotbugs | 3m 49s | [/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/artifact/out/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html) | hadoop-hdfs-project/hadoop-hdfs generated 2 new + 0 unchanged - 0 fixed = 2 total (was 0) |
| +1 :green_heart: | shadedclient | 26m 11s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 0m 28s | | hadoop-project in the patch passed. |
| -1 :x: | unit | 17m 50s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |
| -1 :x: | unit | 347m 43s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| -1 :x: | asflicense | 1m 0s | [/results-asflicense.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/artifact/out/results-asflicense.txt) | The patch generated 1 ASF License warnings. |
| | | 538m 45s | | |

| Reason | Tests |
|-------:|:------|
| SpotBugs | module:hadoop-hdfs-project/hadoop-hdfs |
| | Possible null pointer dereference in new org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation(StorageLocation) due to return value of called method Dereferenced at DiskIOUtilManager.java:new org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation(StorageLocation) due to return value of called method Dereferenced at DiskIOUtilManager.java:[line 58] |
| | Possible null pointer dereference in new org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation(StorageLocation) due to return value of called method Dereferenced at DiskIOUtilManager.java:new org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation(StorageLocation) due to return value of called method Dereferenced at DiskIOUtilManager.java:[line 63] |
| Failed junit tests | hadoop.service.launcher.TestServiceInterruptHandling |
| | hadoop.crypto.TestCryptoCodec |
| | hadoop.crypto.TestCryptoStreamsWithOpensslSm4CtrCryptoCodec |
| | hadoop.tools.TestHdfsConfigFields |
| | hadoop.hdfs.server.datanode.fsdataset.TestAvailableSpaceVolumeChoosingPolicy |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3960 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell cc golang xml |
| uname | Linux 4666557d64a5 4.15.0-162-generic #170-Ubuntu SMP Mon Oct 18 11:38:05 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / e6565d20d31e73c3b9475eed790b30e802134de2 |
| Default Java | Red Hat, Inc.-1.8.0_322-b06 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/testReport/ |
| Max. process+thread count | 1899 (vs. ulimit of 5500) |
| modules | C: hadoop-project hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/7/console |
| versions | git=2.9.5 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.
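The two "Possible null pointer dereference ... due to return value of called method" findings in this run mean that the `DiskLocation` constructor dereferences the result of some method that can return null. The report does not say which method is involved; common JDK examples are `File.getParentFile()` and `File.listFiles()`, both documented to return null in some cases. The sketch below shows the usual remedy, checking the returned value before using it, with a hypothetical helper (`NullSafeLookup.parentName`); it is not the `DiskLocation` code from the patch.

```java
import java.io.File;
import java.util.Optional;

/** Hypothetical helper illustrating the null-check fix; not code from PR #3960. */
public final class NullSafeLookup {
  private NullSafeLookup() {}

  /**
   * Returns the name of dir's parent directory, or an empty Optional when
   * the path has no parent. File.getParentFile() returns null in that case,
   * so the result is checked before it is dereferenced.
   */
  public static Optional<String> parentName(File dir) {
    File parent = dir.getParentFile();  // may be null
    if (parent == null) {
      return Optional.empty();
    }
    return Optional.of(parent.getName());
  }

  public static void main(String[] args) {
    System.out.println(parentName(new File("/data/dn1/current")).orElse("<none>"));  // dn1
    System.out.println(parentName(new File("dn1")).orElse("<none>"));                // <none>
  }
}
```

Returning `Optional` makes the "no parent" case explicit to callers; logging and skipping the location, or throwing a descriptive exception, are equally valid choices depending on how the caller should react.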


[GitHub] [hadoop] jojochuang commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

jojochuang commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1033396454

@ayushtkn fyi


[GitHub] [hadoop] tomscut commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

tomscut commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1033479597

> Some quick comments. I'm still trying to understand how the disk io stats get considered. Some documentation would be helpful.

>

> Instead of being part of AvailableSpaceVolumeChoosingPolicy, would it make sense to become a new volume choosing policy?

Thanks @jojochuang for your comments. I will add documentation and update the code.

During POC testing, we defined a new policy named `IOUtilVolumeChoosingPolicy` that considered only ioutil and ignored disk space usage, which caused uneven disk usage.

So we changed AvailableSpaceVolumeChoosingPolicy instead: among disks with the same space usage, it prefers disks with lower ioutil and filters out busy disks.

What you said about offering a new policy is a good idea.
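The approach described in this comment (among disks with comparable space usage, skip busy disks and prefer lower ioutil) can be sketched in Java as follows. Everything here is an assumption for illustration: `IoAwareChooserSketch`, `Volume`, `balancedSpaceBytes` and `busyUtilPercent` are hypothetical names, "same space usage" is modeled as "free space within a threshold of the emptiest volume", and the class neither reproduces the patch nor implements Hadoop's `VolumeChoosingPolicy` interface.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/** Hypothetical sketch of the idea above; not the patch in PR #3960. */
public final class IoAwareChooserSketch {
  private IoAwareChooserSketch() {}

  /** Hypothetical, simplified view of a DataNode volume. */
  public static final class Volume {
    public final String name;
    public final long availableBytes;
    public final double ioUtilPercent;

    public Volume(String name, long availableBytes, double ioUtilPercent) {
      this.name = name;
      this.availableBytes = availableBytes;
      this.ioUtilPercent = ioUtilPercent;
    }
  }

  /**
   * (1) Keep volumes whose free space is within balancedSpaceBytes of the
   * volume with the most free space; (2) drop those at or above
   * busyUtilPercent, unless that would drop them all; (3) return the
   * remaining volume with the lowest ioutil.
   */
  public static Volume choose(List<Volume> volumes, long balancedSpaceBytes,
      double busyUtilPercent) {
    if (volumes.isEmpty()) {
      throw new IllegalArgumentException("no volumes to choose from");
    }
    long maxAvailable = Long.MIN_VALUE;
    for (Volume v : volumes) {
      maxAvailable = Math.max(maxAvailable, v.availableBytes);
    }
    List<Volume> balanced = new ArrayList<>();
    for (Volume v : volumes) {
      if (maxAvailable - v.availableBytes <= balancedSpaceBytes) {
        balanced.add(v);
      }
    }
    List<Volume> notBusy = new ArrayList<>();
    for (Volume v : balanced) {
      if (v.ioUtilPercent < busyUtilPercent) {
        notBusy.add(v);
      }
    }
    List<Volume> pool = notBusy.isEmpty() ? balanced : notBusy;
    return pool.stream()
        .min(Comparator.comparingDouble((Volume v) -> v.ioUtilPercent))
        .get();
  }

  public static void main(String[] args) {
    List<Volume> volumes = Arrays.asList(
        new Volume("disk1", 500L << 30, 85.0),  // most free space, but busy
        new Volume("disk2", 495L << 30, 20.0),  // nearly as much space, idle
        new Volume("disk3", 300L << 30, 5.0));  // idle, but far less space
    System.out.println(choose(volumes, 10L << 30, 80.0).name);  // disk2
  }
}
```

In the example, disk3 is idle but has far less free space, so space usage still decides first; disk1 has the most space but is above the busy threshold, so disk2 is chosen. Always taking the minimum would send every write between two ioutil refreshes to the same disk, so a real policy would more likely spread writes across the non-busy candidates, for example round-robin.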


[GitHub] [hadoop] tomscut closed pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

tomscut closed pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960


[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1084434622

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 55s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 29s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 30m 22s | | trunk passed |
| +1 :green_heart: | compile | 21m 16s | | trunk passed |
| +1 :green_heart: | checkstyle | 4m 5s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 21s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 31s | | trunk passed |
| +1 :green_heart: | spotbugs | 6m 9s | | trunk passed |
| +1 :green_heart: | shadedclient | 26m 19s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 24s | | the patch passed |
| +1 :green_heart: | compile | 20m 37s | | the patch passed |
| -1 :x: | cc | 20m 37s | [/results-compile-cc-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/10/artifact/out/results-compile-cc-root.txt) | root generated 9 new + 198 unchanged - 7 fixed = 207 total (was 205) |
| +1 :green_heart: | golang | 20m 37s | | the patch passed |
| +1 :green_heart: | javac | 20m 37s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 3m 56s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/10/artifact/out/results-checkstyle-root.txt) | root: The patch generated 1 new + 516 unchanged - 0 fixed = 517 total (was 516) |
| +1 :green_heart: | mvnsite | 3m 20s | | the patch passed |
| +1 :green_heart: | xml | 0m 2s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 3m 24s | | the patch passed |
| +1 :green_heart: | spotbugs | 6m 31s | | the patch passed |
| +1 :green_heart: | shadedclient | 26m 17s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 17m 53s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/10/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |
| -1 :x: | unit | 360m 26s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/10/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 13s | | The patch does not generate ASF License warnings. |
| | | 552m 14s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.service.launcher.TestServiceInterruptHandling |
| | hadoop.crypto.TestCryptoCodec |
| | hadoop.crypto.TestCryptoStreamsWithOpensslSm4CtrCryptoCodec |
| | hadoop.hdfs.server.namenode.TestFileTruncate |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/10/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3960 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell cc golang xml |
| uname | Linux f8b5ed4ec11f 4.15.0-162-generic #170-Ubuntu SMP Mon Oct 18 11:38:05 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 41cfc189c7216473f4454fbe82012bb8dda52de5 |
| Default Java | Red Hat, Inc.-1.8.0_322-b06 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/10/testReport/ |
| Max. process+thread count | 1911 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/10/console |
| versions | git=2.9.5 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1030615628

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 44m 21s | | Docker mode activated. |

|||| _ Prechecks _ |

| +1 :green_heart: | dupname | 0m 1s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |

|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 40s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 12s | | trunk passed |
| +1 :green_heart: | compile | 20m 57s | | trunk passed |
| +1 :green_heart: | checkstyle | 3m 57s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 18s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 20s | | trunk passed |
| +1 :green_heart: | spotbugs | 6m 8s | | trunk passed |
| +1 :green_heart: | shadedclient | 25m 56s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 28s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 27s | | the patch passed |
| +1 :green_heart: | compile | 20m 6s | | the patch passed |
| -1 :x: | cc | 20m 6s | [/results-compile-cc-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/artifact/out/results-compile-cc-root.txt) | root generated 18 new + 189 unchanged - 16 fixed = 207 total (was 205) |
| +1 :green_heart: | golang | 20m 6s | | the patch passed |
| +1 :green_heart: | javac | 20m 6s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 3m 50s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/artifact/out/results-checkstyle-root.txt) | root: The patch generated 10 new + 519 unchanged - 0 fixed = 529 total (was 519) |
| +1 :green_heart: | mvnsite | 3m 17s | | the patch passed |
| +1 :green_heart: | xml | 0m 1s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 3m 20s | | the patch passed |
| -1 :x: | spotbugs | 3m 50s | [/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/artifact/out/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html) | hadoop-hdfs-project/hadoop-hdfs generated 7 new + 0 unchanged - 0 fixed = 7 total (was 0) |
| -1 :x: | shadedclient | 26m 38s | | patch has errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 17m 31s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |
| -1 :x: | unit | 367m 35s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| -1 :x: | asflicense | 1m 19s | [/results-asflicense.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/artifact/out/results-asflicense.txt) | The patch generated 1 ASF License warnings. |
| | | 595m 52s | | |

| Reason | Tests |
|-------:|:------|
| SpotBugs | module:hadoop-hdfs-project/hadoop-hdfs |
| | org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager.getDiskIoUtils() may fail to close stream. At DiskIOUtilManager.java:[line 203] |
| | Possible null pointer dereference in new org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation(DiskIOUtilManager, StorageLocation) due to return value of called method. Dereferenced at DiskIOUtilManager.java:[line 56] |
| | Possible null pointer dereference in new org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation(DiskIOUtilManager, StorageLocation) due to return value of called method. Dereferenced at DiskIOUtilManager.java:[line 61] |
| | Should org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation be a _static_ inner class? At DiskIOUtilManager.java:[lines 43-71] |
| | Should org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$IOStat be a _static_ inner class? At DiskIOUtilManager.java:[lines 85-110] |
| | Unread field: DiskIOUtilManager.java:[line 86] |
| | org.apache.hadoop.hdfs.server.datanode.fsdataset.AvailableSpaceVolumeChoosingPolicy$IOUtilVolumePair defines compareTo(AvailableSpaceVolumeChoosingPolicy$IOUtilVolumePair) and uses Object.equals(). At AvailableSpaceVolumeChoosingPolicy.java:[lines 442-450] |
| Failed junit tests | hadoop.util.TestNativeLibraryChecker |
| | hadoop.service.launcher.TestServiceInterruptHandling |
| | hadoop.metrics2.sink.TestFileSink |
| | hadoop.crypto.TestCryptoCodec |
| | hadoop.crypto.TestCryptoStreamsWithOpensslSm4CtrCryptoCodec |
| | hadoop.tools.TestHdfsConfigFields |
| | hadoop.hdfs.server.datanode.fsdataset.TestAvailableSpaceVolumeChoosingPolicy |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3960 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell cc golang xml |
| uname | Linux ffdbe3525f75 4.15.0-162-generic #170-Ubuntu SMP Mon Oct 18 11:38:05 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 24e98c8ab8d1596f102c7de7d28553d866bae9ca |
| Default Java | Red Hat, Inc.-1.8.0_322-b06 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/testReport/ |
| Max. process+thread count | 2137 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/1/console |
| versions | git=2.9.5 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.
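Three of the SpotBugs findings above share one fix pattern: `compareTo()` paired with the inherited `Object.equals()`, and the two "should be a _static_ inner class" warnings. The actual fields of `AvailableSpaceVolumeChoosingPolicy$IOUtilVolumePair` are not shown in this thread, so the sketch below assumes two hypothetical ones, a utilization percentage and a volume id. The class is a static nested class, so it holds no hidden reference to an outer instance. Its `equals()` and `hashCode()` use the same fields as `compareTo()`, so sorted and hash-based collections agree on which pairs are equal. The same consistency concern applies to `DiskLocation`, which is used as a `HashMap` key and overrides `hashCode()` but not `equals()`.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Objects;

final class VolumeChoiceExample {
  /** Static nested class: no implicit reference to an enclosing instance. */
  static final class IOUtilVolumePair implements Comparable<IOUtilVolumePair> {
    final int ioUtil;       // hypothetical field: busy percentage, 0-100
    final String volumeId;  // hypothetical field: stands in for the volume object

    IOUtilVolumePair(int ioUtil, String volumeId) {
      this.ioUtil = ioUtil;
      this.volumeId = Objects.requireNonNull(volumeId);
    }

    @Override
    public int compareTo(IOUtilVolumePair o) {
      int c = Integer.compare(ioUtil, o.ioUtil); // least busy first
      // Tie-break on the id so compareTo() == 0 exactly when equals() is true.
      return c != 0 ? c : volumeId.compareTo(o.volumeId);
    }

    @Override
    public boolean equals(Object obj) {
      if (this == obj) {
        return true;
      }
      if (!(obj instanceof IOUtilVolumePair)) {
        return false;
      }
      IOUtilVolumePair other = (IOUtilVolumePair) obj;
      return ioUtil == other.ioUtil && volumeId.equals(other.volumeId);
    }

    @Override
    public int hashCode() {
      return Objects.hash(ioUtil, volumeId); // same fields as equals()
    }
  }

  /** Returns volume ids ordered from least to most busy. */
  static List<String> leastBusyFirst(List<IOUtilVolumePair> pairs) {
    List<IOUtilVolumePair> sorted = new ArrayList<>(pairs);
    Collections.sort(sorted);
    List<String> ids = new ArrayList<>();
    for (IOUtilVolumePair p : sorted) {
      ids.add(p.volumeId);
    }
    return ids;
  }
}
```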


[GitHub] [hadoop] jojochuang commented on a change in pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

jojochuang commented on a change in pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#discussion_r802294570

##########

File path: hadoop-hdfs-project/hadoop-hdfs/pom.xml

##########

@@ -225,6 +225,11 @@ https://maven.apache.org/xsd/maven-4.0.0.xsd">

<artifactId>lz4-java</artifactId>

<scope>test</scope>

</dependency>

+ <dependency>

Review comment:

Please update LICENSE-binary to include this new dependency.

Also, please manage the dependency version in hadoop-project/pom.xml.

##########

File path: hadoop-common-project/hadoop-common/src/main/native/src/org/apache/hadoop/io/nativeio/NativeIO.c

##########

@@ -36,17 +43,91 @@

#include <sys/resource.h>

#include <sys/stat.h>

#include <sys/syscall.h>

-#ifdef HADOOP_PMDK_LIBRARY

Review comment:

Most of the changes in this file are redundant. We want to keep the existing pmem feature (HDFS-13762).

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/DiskIOUtilManager.java

##########

@@ -0,0 +1,253 @@

+package org.apache.hadoop.hdfs.server.datanode;

+

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsVolumeImpl;

+import org.apache.hadoop.io.nativeio.NativeIO;

+import org.apache.hadoop.util.NativeCodeLoader;

+import org.apache.hadoop.util.Shell;

+

+import java.io.BufferedReader;

+import java.io.FileInputStream;

+import java.io.FileNotFoundException;

+import java.io.IOException;

+import java.io.InputStreamReader;

+import java.nio.charset.Charset;

+import java.nio.file.FileStore;

+import java.nio.file.Files;

+import java.nio.file.Paths;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.concurrent.TimeUnit;

+import java.util.regex.Matcher;

+import java.util.regex.Pattern;

+

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import static org.apache.hadoop.hdfs.DFSConfigKeys.DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_DEFAULT;

+import static org.apache.hadoop.hdfs.DFSConfigKeys.DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_KEY;

+

+public class DiskIOUtilManager implements Runnable {

+ public static final Logger LOG =

+ LoggerFactory.getLogger(DiskIOUtilManager.class);

+ private final DataNode dataNode;

+ private volatile long intervalMs;

+ private volatile int ioUtil;

+ private volatile boolean shouldStop = false;

+ private String diskName;

+ private Thread diskStatThread;

+ private Map<StorageLocation, DiskLocation> locationToDisks = new HashMap<>();

+ private Map<DiskLocation, IOStat> ioStats = new HashMap<>();

+

+ private class DiskLocation {

+ private final String diskName;

+ private final StorageLocation location;

+ DiskLocation(StorageLocation location) throws IOException {

+ this.location = location;

+ FileStore fs = Files.getFileStore(Paths.get(location.getUri().getPath()));

+ String diskNamePlace = null;

+ if (NativeCodeLoader.isNativeCodeLoaded() && Shell.LINUX) {

+ try {

+ diskNamePlace = NativeIO.getDiskName(location.getUri().getPath());

+ LOG.info("location is {}, diskname is {}", location.getUri().getPath(), diskNamePlace);

+ } catch (IOException e) {

+ LOG.error(e.toString());

+ diskNamePlace = Paths.get(fs.name()).getFileName().toString();

+ } finally {

+ this.diskName = diskNamePlace;

+ }

+ } else {

+ this.diskName = Paths.get(fs.name()).getFileName().toString();

+ }

+ }

+

+ @Override

+ public String toString() {

+ return location.toString() + " disk: " + diskName;

+ }

+ @Override

+ public int hashCode() {

+ return location.hashCode();

+ }

+ }

+

+// private String getDiskName(String path) {

+// SystemInfo systemInfo = new SystemInfo();

+// systemInfo.getOperatingSystem().getFileSystem().getFileStores();

+// return "";

+// }

+

+ private class IOStat {

+ private String diskName;

+ private long lastTotalTicks;

+ private int util;

+ public IOStat(String diskName, long lastTotalTicks) {

+ this.diskName = diskName;

+ this.lastTotalTicks = lastTotalTicks;

+ }

+

+ public int getUtil() {

+ return util;

+ }

+

+ public void setUtil(int util) {

+ if (util <= 100 && util >= 0) {

+ this.util = util;

+ } else if (util < 0) {

+ this.util = 0;

+ } else {

+ this.util = 100;

+ }

+ }

+

+ public long getLastTotalTicks() {

+ return lastTotalTicks;

+ }

+

+ public void setLastTotalTicks(long lastTotalTicks) {

+ this.lastTotalTicks = lastTotalTicks;

+ }

+ }

+

+ DiskIOUtilManager(DataNode dataNode, Configuration conf) {

+ this.dataNode = dataNode;

+ this.intervalMs = TimeUnit.SECONDS.toMillis(conf.getLong(

+ DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_KEY,

+ DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_DEFAULT));

+ if (this.intervalMs < DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_DEFAULT) {

+ this.intervalMs = 1;

+ }

+ }

+

+ @Override

+ public void run() {

+ FsVolumeImpl.LOG.info(this + " starting disk util stat");

+ while (true) {

+ if (shouldStop) {

+ FsVolumeImpl.LOG.info(this + " stopping disk util stat");

+ break;

+ }

+ if (!Shell.LINUX) {

+ FsVolumeImpl.LOG.debug("Not support disk util stat on this os release");

+ continue;

+ }

+ Map<String, IOStat> allIOStats = getDiskIoUtils();

+ synchronized (this) {

+ for (Map.Entry<DiskLocation, IOStat> entry : ioStats.entrySet()) {

+ String diskName = entry.getKey().diskName;

+ IOStat oldStat = entry.getValue();

+ int util = 0;

+ if (allIOStats.containsKey(diskName)) {

+ long oldTotalTicks = oldStat.getLastTotalTicks();

+ long newTotalTicks = allIOStats.get(diskName).getLastTotalTicks();

+ if (oldTotalTicks != 0) {

+ util = (int) ((double) (newTotalTicks - oldTotalTicks) * 100 / intervalMs);

+ }

+ oldStat.setLastTotalTicks(newTotalTicks);

+ oldStat.setUtil(util);

+ LOG.debug(diskName + " disk io util:" + util);

+ } else {

+ //Maybe this disk has been umounted.

+ oldStat.setUtil(100);

+ }

+ }

+ }

+ try {

+ Thread.sleep(intervalMs);

+ } catch (InterruptedException e) {

+ }

+ }

+ }

+

+ void start() {

+ if (diskStatThread != null) {

+ return;

+ }

+ shouldStop = false;

+ diskStatThread = new Thread(this, threadName());

+ diskStatThread.setDaemon(true);

+ diskStatThread.start();

+ }

+

+ void stop() {

+ shouldStop = true;

+ if (diskStatThread != null) {

+ diskStatThread.interrupt();

+ try {

+ diskStatThread.join();

+ } catch (InterruptedException e) {

+ }

+ }

+ }

+

+ private String threadName() {

+ return "DataNode disk io util manager";

+ }

+

+ private static final String PROC_DISKSSTATS = "/proc/diskstats";

+ private static final Pattern DISK_STAT_FORMAT =

+ Pattern.compile("[ \t]*[0-9]*[ \t]*[0-9]*[ \t]*(\\S*)" +

+ "[ \t]*[0-9]*[ \t]*[0-9]*[ \t]*[0-9]*" +

+ "[ \t]*[0-9]*[ \t]*[0-9]*[ \t]*[0-9]*" +

+ "[ \t]*[0-9]*[ \t]*[0-9]*[ \t]*[0-9]*" +

+ "[ \t]*([0-9]*)[ \t].*");

+

+ private Map<String, IOStat> getDiskIoUtils() {

+ Map<String, IOStat> rets = new HashMap<>();

+ InputStreamReader fReader = null;

+ BufferedReader in = null;

+ try {

+ fReader = new InputStreamReader(

+ new FileInputStream(PROC_DISKSSTATS), Charset.forName("UTF-8"));

+ in = new BufferedReader(fReader);

+ } catch (FileNotFoundException f) {

+ // shouldn't happen....

+ return rets;

+ }

+ try {

+ Matcher mat = null;

+ String str = in.readLine();

+ while (str != null) {

+ mat = DISK_STAT_FORMAT.matcher(str);

+ if (mat.find()) {

+ String diskName = mat.group(1);

+ long totalTicks = Long.parseLong(mat.group(2));

+ LOG.debug(str + " totalTicks:" + totalTicks);

+ IOStat stat = new IOStat(diskName, totalTicks);

+ rets.put(diskName, stat);

+ }

+ str = in.readLine();

+ }

+ } catch (IOException e) {

+ e.printStackTrace();

Review comment:

Please log the exception using slf4j. Otherwise, printStackTrace() writes it to stderr instead of the DataNode log.
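The sketch below shows one way to read /proc/diskstats that addresses both this comment and the SpotBugs finding that `getDiskIoUtils()` may fail to close its stream. Try-with-resources closes the reader on every path. Any `IOException` propagates to the caller, which can then log it through its slf4j `LOG` instead of calling `printStackTrace()`. The class and method names are hypothetical. The sketch also replaces the patch's regular expression with a plain whitespace split. It assumes the standard /proc/diskstats layout, where field 3 is the device name and field 13 is the number of milliseconds spent doing I/Os (the column the patch's regex captures).

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.HashMap;
import java.util.Map;

final class DiskStatsParser {
  // 0-based indexes after splitting on whitespace: field 3 (device name)
  // and field 13 (milliseconds spent doing I/Os) in /proc/diskstats.
  private static final int NAME_FIELD = 2;
  private static final int IO_TICKS_FIELD = 12;

  private DiskStatsParser() {}

  /** Parses diskstats content; the reader is always closed and I/O errors propagate. */
  static Map<String, Long> parse(Reader source) throws IOException {
    Map<String, Long> ioTicks = new HashMap<>();
    try (BufferedReader in = new BufferedReader(source)) {
      String line;
      while ((line = in.readLine()) != null) {
        String[] f = line.trim().split("\\s+");
        if (f.length <= IO_TICKS_FIELD) {
          continue; // skip short or malformed lines
        }
        try {
          ioTicks.put(f[NAME_FIELD], Long.parseLong(f[IO_TICKS_FIELD]));
        } catch (NumberFormatException e) {
          // skip lines whose tick column is not numeric
        }
      }
    }
    return ioTicks;
  }

  /** Linux only: reads the live kernel statistics. */
  static Map<String, Long> readProcDiskStats() throws IOException {
    return parse(Files.newBufferedReader(Paths.get("/proc/diskstats"), StandardCharsets.UTF_8));
  }
}
```

A caller would wrap `readProcDiskStats()` in a try/catch and log the failure with something like `LOG.warn("Failed to read /proc/diskstats", e)`.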

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/DiskIOUtilManager.java

##########

@@ -0,0 +1,253 @@


+ @Override

+ public void run() {

+ FsVolumeImpl.LOG.info(this + " starting disk util stat");

+ while (true) {

+ if (shouldStop) {

+ FsVolumeImpl.LOG.info(this + " stopping disk util stat");

+ break;

+ }

+ if (!Shell.LINUX) {

+ FsVolumeImpl.LOG.debug("Not support disk util stat on this os release");

+ continue;

+ }

+ Map<String, IOStat> allIOStats = getDiskIoUtils();

+ synchronized (this) {

+ for (Map.Entry<DiskLocation, IOStat> entry : ioStats.entrySet()) {

+ String diskName = entry.getKey().diskName;

+ IOStat oldStat = entry.getValue();

+ int util = 0;

+ if (allIOStats.containsKey(diskName)) {

+ long oldTotalTicks = oldStat.getLastTotalTicks();

+ long newTotalTicks = allIOStats.get(diskName).getLastTotalTicks();

+ if (oldTotalTicks != 0) {

+ util = (int) ((double) (newTotalTicks - oldTotalTicks) * 100 / intervalMs);

+ }

+ oldStat.setLastTotalTicks(newTotalTicks);

+ oldStat.setUtil(util);

+ LOG.debug(diskName + " disk io util:" + util);

+ } else {

+ //Maybe this disk has been umounted.

+ oldStat.setUtil(100);

+ }

+ }

+ }

+ try {

+ Thread.sleep(intervalMs);

+ } catch (InterruptedException e) {

+ }

+ }

Review comment:

Can you wrap the body of this method in a try...catch block and log an ERROR message if any Throwable is thrown from this thread? That'll make troubleshooting easier. Otherwise, this thread could exit without logging anything.
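Here is a sketch of this suggestion, built on a few assumptions. The whole loop sits inside one try block. `InterruptedException` ends the thread quietly, since the patch's `stop()` interrupts the thread. Any other `Throwable` is logged before the thread exits. The sketch also sleeps on every iteration. In the quoted `run()`, the non-Linux branch hits `continue` before `Thread.sleep()`, so that loop would spin.

Utilization is computed as in the patch, as the change in I/O ticks times 100 divided by the interval in milliseconds, clamped to [0, 100] like `IOStat.setUtil()`. `GuardedSampler`, the `Supplier`-based sampler, and the accessors are hypothetical. `java.util.logging` stands in for slf4j only to keep the example self-contained.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;
import java.util.logging.Level;
import java.util.logging.Logger;

final class GuardedSampler implements Runnable {
  private static final Logger LOG = Logger.getLogger(GuardedSampler.class.getName());

  private final Supplier<Map<String, Long>> sampler; // hypothetical: disk name -> I/O ticks (ms)
  private final long intervalMs;
  private final Map<String, Long> lastTicks = new HashMap<>();         // sampler thread only
  private final Map<String, Integer> util = new ConcurrentHashMap<>(); // read by other threads
  private volatile boolean shouldStop = false;
  private volatile Throwable failure;

  GuardedSampler(Supplier<Map<String, Long>> sampler, long intervalMs) {
    if (intervalMs <= 0) {
      throw new IllegalArgumentException("intervalMs must be positive: " + intervalMs);
    }
    this.sampler = sampler;
    this.intervalMs = intervalMs;
  }

  /** Busy time as a percentage of the interval, clamped to [0, 100]. */
  static int utilPercent(long oldTicks, long newTicks, long intervalMs) {
    long pct = (newTicks - oldTicks) * 100 / intervalMs;
    return (int) Math.max(0L, Math.min(100L, pct));
  }

  @Override
  public void run() {
    try {
      while (!shouldStop) {
        for (Map.Entry<String, Long> e : sampler.get().entrySet()) {
          Long prev = lastTicks.put(e.getKey(), e.getValue());
          if (prev != null) {
            util.put(e.getKey(), utilPercent(prev, e.getValue(), intervalMs));
          }
        }
        Thread.sleep(intervalMs); // reached on every iteration, so the loop never spins
      }
    } catch (InterruptedException e) {
      // The owner interrupts this thread to stop it; restore the flag and exit.
      Thread.currentThread().interrupt();
    } catch (Throwable t) {
      failure = t;
      LOG.log(Level.SEVERE, "Disk I/O util sampler thread exiting unexpectedly", t);
    }
  }

  void stop() {
    shouldStop = true;
  }

  Integer utilOf(String disk) {
    return util.get(disk);
  }

  Throwable failure() {
    return failure;
  }
}
```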

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/DFSConfigKeys.java

##########

@@ -2001,5 +2001,13 @@

public static final long DFS_LEASE_HARDLIMIT_DEFAULT =

HdfsClientConfigKeys.DFS_LEASE_HARDLIMIT_DEFAULT;

+ public static final String DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_KEY =

+ "dfs.datanode.disk.stat.interval.seconds";

+ public static final long DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_DEFAULT = 1L;

+ public static final String

+ DFS_DATANODE_AVAILABLE_SPACE_VOLUME_CHOOSING_POLICY_IO_UTIL_PREFERENCE_ENABLE_KEY =

+ "dfs.datanode.available-space-volume-choosing-policy.io.util.preference.enable";

+ public static final boolean

+ DFS_DATANODE_AVAILABLE_SPACE_VOLUME_CHOOSING_POLICY_IO_UTIL_PREFERENCE_ENABLE_DEFAULT = true;

Review comment:

Please add these two config keys to hdfs-default.xml.
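A related point about the interval key. As quoted, the `DiskIOUtilManager` constructor converts the configured interval to milliseconds first. It then compares that value against `DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_DEFAULT`, which is in seconds, and resets it to 1 ms. A configured value of 0 would therefore make the sampler wake every millisecond. The sketch below validates in seconds before converting. It assumes that falling back to the default is the desired behavior for non-positive values. `StatInterval` and `toIntervalMs` are hypothetical names.

```java
import java.util.concurrent.TimeUnit;

final class StatInterval {
  // Hypothetical stand-in for DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_DEFAULT (1 second in the patch).
  static final long DEFAULT_SECONDS = 1L;

  private StatInterval() {}

  /** Validates the configured interval in seconds, then converts it to milliseconds. */
  static long toIntervalMs(long configuredSeconds) {
    long seconds = configuredSeconds > 0 ? configuredSeconds : DEFAULT_SECONDS;
    return TimeUnit.SECONDS.toMillis(seconds);
  }
}
```

In the constructor, this would be called as `toIntervalMs(conf.getLong(DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_KEY, DFS_DATANODE_DISK_STAT_INTERVAL_SECONDS_DEFAULT))`.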

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/DiskIOUtilManager.java

##########

@@ -0,0 +1,253 @@


+// private String getDiskName(String path) {

Review comment:

Remove it if not used.
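Apart from this leftover helper, SpotBugs flags two possible null dereferences in the live disk-name lookup in the `DiskLocation` constructor (lines 56 and 61). A likely source is `Path.getFileName()`, which returns null for a path with no name elements, such as the root "/". The null-safe sketch below assumes that falling back to the raw file store name is acceptable. `DiskNames` and `fromFileStoreName` are hypothetical.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

final class DiskNames {
  private DiskNames() {}

  /**
   * Returns the last component of a file store name such as "/dev/sda1",
   * or the name itself when Path.getFileName() returns null (e.g. for "/").
   */
  static String fromFileStoreName(String fsName) {
    Path fileName = Paths.get(fsName).getFileName();
    return fileName != null ? fileName.toString() : fsName;
  }
}
```

For example, `fromFileStoreName("/dev/sda1")` returns `"sda1"`, and `fromFileStoreName("/")` returns `"/"` instead of throwing a `NullPointerException`.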

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/DiskIOUtilManager.java

##########

@@ -0,0 +1,253 @@


+ void start() {

Review comment:

Where does this method get called?


[GitHub] [hadoop] tomscut commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

tomscut commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1033469821

>

> AvailableSpaceVolumeChoosingPolicy


[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1034053816

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 59s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 25m 6s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 29m 19s | | trunk passed |
| +1 :green_heart: | compile | 25m 35s | | trunk passed |
| +1 :green_heart: | checkstyle | 4m 24s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 52s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 48s | | trunk passed |
| +1 :green_heart: | spotbugs | 7m 7s | | trunk passed |
| +1 :green_heart: | shadedclient | 27m 26s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 38s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 44s | | the patch passed |
| +1 :green_heart: | compile | 23m 52s | | the patch passed |
| -1 :x: | cc | 23m 52s | [/results-compile-cc-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/artifact/out/results-compile-cc-root.txt) | root generated 22 new + 185 unchanged - 20 fixed = 207 total (was 205) |
| +1 :green_heart: | golang | 23m 52s | | the patch passed |
| +1 :green_heart: | javac | 23m 52s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 4m 6s | [/results-checkstyle-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/artifact/out/results-checkstyle-root.txt) | root: The patch generated 10 new + 519 unchanged - 0 fixed = 529 total (was 519) |
| +1 :green_heart: | mvnsite | 3m 46s | | the patch passed |
| +1 :green_heart: | xml | 0m 1s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 3m 43s | | the patch passed |
| -1 :x: | spotbugs | 4m 24s | [/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/artifact/out/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html) | hadoop-hdfs-project/hadoop-hdfs generated 7 new + 0 unchanged - 0 fixed = 7 total (was 0) |
| -1 :x: | shadedclient | 27m 37s | | patch has errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 19m 19s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |
| -1 :x: | unit | 375m 36s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| -1 :x: | asflicense | 1m 12s | [/results-asflicense.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/artifact/out/results-asflicense.txt) | The patch generated 1 ASF License warnings. |
| | | 594m 10s | | |

| Reason | Tests |
|-------:|:------|
| SpotBugs | module:hadoop-hdfs-project/hadoop-hdfs |
| | org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager.getDiskIoUtils() may fail to close stream At DiskIOUtilManager.java:[line 203] |
| | Possible null pointer dereference in new org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation(DiskIOUtilManager, StorageLocation) due to return value of called method. Dereferenced at DiskIOUtilManager.java:[line 56] |
| | Possible null pointer dereference in new org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation(DiskIOUtilManager, StorageLocation) due to return value of called method. Dereferenced at DiskIOUtilManager.java:[line 61] |
| | Should org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$DiskLocation be a _static_ inner class? At DiskIOUtilManager.java:[lines 43-71] |
| | Should org.apache.hadoop.hdfs.server.datanode.DiskIOUtilManager$IOStat be a _static_ inner class? At DiskIOUtilManager.java:[lines 85-110] |
| | Unread field: DiskIOUtilManager.java:[line 86] |
| | org.apache.hadoop.hdfs.server.datanode.fsdataset.AvailableSpaceVolumeChoosingPolicy$IOUtilVolumePair defines compareTo(AvailableSpaceVolumeChoosingPolicy$IOUtilVolumePair) and uses Object.equals() At AvailableSpaceVolumeChoosingPolicy.java:[lines 442-450] |
| Failed junit tests | hadoop.util.TestNativeLibraryChecker |
| | hadoop.service.launcher.TestServiceInterruptHandling |
| | hadoop.crypto.TestCryptoCodec |
| | hadoop.crypto.TestCryptoStreamsWithOpensslSm4CtrCryptoCodec |
| | hadoop.tools.TestHdfsConfigFields |
| | hadoop.hdfs.server.datanode.fsdataset.TestAvailableSpaceVolumeChoosingPolicy |
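
The SpotBugs findings in the table above correspond to a few standard Java fixes. Below is a minimal sketch of each, and it rests on three stated assumptions:

- Every name in it (`DiskUtilSketch`, `IOStat`, `readIoTicks`, `lastComponent`, `VolumeUtilPair`) is hypothetical and is not the code from PR #3960.
- Disk utilization is assumed to come from the io_ticks column (field 10) of Linux `/proc/diskstats`, the way `iostat` computes %util.
- The report does not name the called method whose return value is dereferenced at lines 56 and 61. `Path.getFileName()`, a JDK method that returns null for a root path, stands in as an example.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Objects;

// Hypothetical sketch of fixes for the SpotBugs patterns reported above;
// none of these names come from PR #3960.
public class DiskUtilSketch {

  // Static nested class: no hidden reference to an enclosing instance.
  // Both fields are read in utilSince(), so neither is an unread field.
  static final class IOStat {
    final long ioTicksMs;  // /proc/diskstats field 10: ms spent doing I/O
    final long wallTimeMs; // time at which the sample was taken

    IOStat(long ioTicksMs, long wallTimeMs) {
      this.ioTicksMs = ioTicksMs;
      this.wallTimeMs = wallTimeMs;
    }

    // Percentage of the interval the device was busy (iostat-style %util).
    double utilSince(IOStat earlier) {
      long elapsedMs = wallTimeMs - earlier.wallTimeMs;
      if (elapsedMs <= 0) {
        return 0.0;
      }
      double util = 100.0 * (ioTicksMs - earlier.ioTicksMs) / elapsedMs;
      return Math.min(100.0, Math.max(0.0, util));
    }
  }

  // Reads io_ticks for one device from text in /proc/diskstats format.
  // try-with-resources closes the reader on every exit path, including
  // exceptions. Returns -1 if the device is not listed.
  static long readIoTicks(Reader source, String device) {
    try (BufferedReader in = new BufferedReader(source)) {
      String line;
      while ((line = in.readLine()) != null) {
        String[] f = line.trim().split("\\s+");
        // f[0]=major, f[1]=minor, f[2]=device name, f[12]=io_ticks
        if (f.length > 12 && f[2].equals(device)) {
          return Long.parseLong(f[12]);
        }
      }
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
    return -1L;
  }

  // Path.getFileName() returns null for a root path such as "/", so the
  // result is checked before it is dereferenced.
  static String lastComponent(Path dir) {
    Path name = dir.getFileName();
    return name == null ? dir.toString() : name.toString();
  }

  // compareTo, equals and hashCode all use the same two fields, so
  // equals() returns true exactly when compareTo() returns 0.
  static final class VolumeUtilPair implements Comparable<VolumeUtilPair> {
    final String volume;
    final double ioUtil;

    VolumeUtilPair(String volume, double ioUtil) {
      this.volume = volume;
      this.ioUtil = ioUtil;
    }

    @Override
    public int compareTo(VolumeUtilPair other) {
      int byUtil = Double.compare(ioUtil, other.ioUtil);
      return byUtil != 0 ? byUtil : volume.compareTo(other.volume);
    }

    @Override
    public boolean equals(Object o) {
      return o instanceof VolumeUtilPair && compareTo((VolumeUtilPair) o) == 0;
    }

    @Override
    public int hashCode() {
      return Objects.hash(volume, ioUtil);
    }
  }

  public static void main(String[] args) {
    String sample = "   8       0 sda 100 0 800 50 200 0 1600 70 0 1000 120\n";
    long ticks = readIoTicks(new StringReader(sample), "sda");
    IOStat before = new IOStat(ticks, 0L);
    IOStat after = new IOStat(ticks + 250, 1000L);
    System.out.println("sda util% = " + after.utilSince(before));
    System.out.println(lastComponent(Paths.get("/data/disk1")));
  }
}
```

Running `main` does two things. It parses a one-line diskstats sample and computes utilization over a 1000 ms interval in which io_ticks advanced by 250 ms. It then takes the last component of a data-directory path.

Deriving `equals` from `compareTo`, and `hashCode` from the same fields, keeps the pair's natural ordering consistent with equals. The `Comparable` Javadoc strongly recommends that consistency, and it is what the last SpotBugs row is about.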

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3960 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell cc golang xml |
| uname | Linux 011c688e0d67 4.15.0-162-generic #170-Ubuntu SMP Mon Oct 18 11:38:05 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 24e98c8ab8d1596f102c7de7d28553d866bae9ca |
| Default Java | Red Hat, Inc.-1.8.0_322-b06 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/testReport/ |
| Max. process+thread count | 3137 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/2/console |
| versions | git=2.9.5 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1077050201

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 0s | | Docker mode activated. |
| -1 :x: | docker | 14m 54s | | Docker failed to build yetus/hadoop:13467f45240. |

| Subsystem | Report/Notes |
|----------:|:-------------|
| GITHUB PR | https://github.com/apache/hadoop/pull/3960 |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/4/console |
| versions | git=2.17.1 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3960: HDFS-16446. Consider ioutils of disk when choosing volume

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3960:

URL: https://github.com/apache/hadoop/pull/3960#issuecomment-1077761539

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 26m 18s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 4 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 15s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 26m 40s | | trunk passed |
| +1 :green_heart: | compile | 21m 39s | | trunk passed |
| +1 :green_heart: | checkstyle | 3m 58s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 55s | | trunk passed |
| +1 :green_heart: | javadoc | 3m 56s | | trunk passed |
| +0 :ok: | spotbugs | 0m 32s | | branch/hadoop-project no spotbugs output file (spotbugsXml.xml) |
| +1 :green_heart: | shadedclient | 25m 55s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 23s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 34s | | the patch passed |
| +1 :green_heart: | compile | 20m 28s | | the patch passed |
| -1 :x: | cc | 20m 28s | [/results-compile-cc-root.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/5/artifact/out/results-compile-cc-root.txt) | root generated 30 new + 177 unchanged - 28 fixed = 207 total (was 205) |
| +1 :green_heart: | golang | 20m 28s | | the patch passed |
| +1 :green_heart: | javac | 20m 28s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 3m 53s | | the patch passed |
| +1 :green_heart: | mvnsite | 3m 47s | | the patch passed |
| +1 :green_heart: | xml | 0m 3s | | The patch has no ill-formed XML file. |
| +1 :green_heart: | javadoc | 3m 49s | | the patch passed |
| +0 :ok: | spotbugs | 0m 29s | | hadoop-project has no data from spotbugs |
| -1 :x: | spotbugs | 3m 50s | [/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/5/artifact/out/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html) | hadoop-hdfs-project/hadoop-hdfs generated 7 new + 0 unchanged - 0 fixed = 7 total (was 0) |
| +1 :green_heart: | shadedclient | 26m 19s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 0m 28s | | hadoop-project in the patch passed. |
| -1 :x: | unit | 17m 51s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3960/5/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |