Posted to dev@ambari.apache.org by GitBox <gi...@apache.org> on 2022/09/16 14:54:14 UTC

[GitHub] [ambari] timyuer opened a new pull request, #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

timyuer opened a new pull request, #3365:

URL: https://github.com/apache/ambari/pull/3365

AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

## What changes were proposed in this pull request?

(Please fill in changes proposed in this fix)

## How was this patch tested?

(Please explain how this patch was tested. Ex: unit tests, manual tests)

(If this patch involves UI changes, please attach a screen-shot; otherwise, remove this)

Please review [Ambari Contributing Guide](https://cwiki.apache.org/confluence/display/AMBARI/How+to+Contribute) before opening a pull request.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@ambari.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: dev-unsubscribe@ambari.apache.org

For additional commands, e-mail: dev-help@ambari.apache.org

[GitHub] [ambari] timyuer commented on pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

timyuer commented on PR #3365:

URL: https://github.com/apache/ambari/pull/3365#issuecomment-1249959009

Refer to https://github.com/apache/bigtop/pull/1000#issuecomment-1248846681.

In this scenario, when I use HdfsResource to upload a file, the logic is:

1. Obtain the file status: owner=ambari-qa

2. If the local file and the DFS file differ, upload the file again. After the upload, the file owner is hdfs.

3. Set the owner and permissions. The requested values match the file status obtained in step 1, so nothing is updated.

Because the file status obtained in step 1 is stale after the re-upload, the `_set_owner` function has no effect. This is incorrect.

And the updated logic is:

1. Obtain the file status: owner=ambari-qa

2. If the local file and the DFS file differ, upload the file again. After the upload, the file owner is hdfs.

3. Obtain the file status again; it now shows owner=hdfs.

4. Set the owner and permissions. If the requested values differ from the refreshed file status, update them.

This extra status call may be redundant when the file is created for the first time, but avoiding it would require refactoring the file-creation code.
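The difference between the two flows can be reproduced with a small in-memory simulation. This is a hypothetical sketch, not the Ambari code: `FakeDfs`, `create_file`, and `set_owner_if_needed` are invented names, and the model assumes, as the service-check log in this thread shows, that re-creating the file as the `hdfs` user leaves it owned by `hdfs`. The lengths 1123 and 1160 are the before/after file sizes from that log.

```python
class FakeDfs:
    """Hypothetical in-memory stand-in for HDFS: path -> status dict."""

    def __init__(self):
        self.files = {}
        self.chown_calls = 0

    def get_status(self, path):
        # Return a copy, i.e. a snapshot like a GETFILESTATUS response.
        status = self.files.get(path)
        return dict(status) if status else None

    def upload(self, path, length, user):
        # Assumption: (re)creating a file makes the uploading user its owner.
        self.files[path] = {"length": length, "owner": user}

    def set_owner(self, path, owner):
        self.chown_calls += 1
        self.files[path]["owner"] = owner


def set_owner_if_needed(dfs, path, status, owner):
    # Only chown when the known status disagrees with the requested owner.
    if status["owner"] != owner:
        dfs.set_owner(path, owner)


def create_file(dfs, path, local_length, owner, refresh_status):
    # Assumes the target already exists in DFS (the re-upload case).
    status = dfs.get_status(path)                   # step 1
    if status["length"] != local_length:            # step 2: files differ
        dfs.upload(path, local_length, user="hdfs")
        if refresh_status:
            status = dfs.get_status(path)           # step 3 of the updated logic
    set_owner_if_needed(dfs, path, status, owner)   # final step


for refresh in (False, True):
    dfs = FakeDfs()
    dfs.upload("/user/ambari-qa/mapredsmokeinput", 1123, user="ambari-qa")
    create_file(dfs, "/user/ambari-qa/mapredsmokeinput", 1160, "ambari-qa", refresh)
    print(refresh, dfs.files["/user/ambari-qa/mapredsmokeinput"]["owner"])
# False hdfs       <- stale status: chown skipped
# True ambari-qa   <- refreshed status: chown applied
```

With the stale snapshot, the ownership check compares against `ambari-qa` and skips the chown even though the file is now owned by `hdfs`.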

[GitHub] [ambari] kevinw66 commented on pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

kevinw66 commented on PR #3365:

URL: https://github.com/apache/ambari/pull/3365#issuecomment-1261166438

Hi @timyuer, could you describe how to reproduce the problem?

[GitHub] [ambari] timyuer commented on pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

timyuer commented on PR #3365:

URL: https://github.com/apache/ambari/pull/3365#issuecomment-1261836612

> Hi @timyuer , could you describe how to reproduce the problem?

The MapReduce2 Service Check button is hidden because of AMBARI-24921; that problem may need to be fixed first before AMBARI-25731 can be reproduced.

@kevinw66

[GitHub] [ambari] timyuer commented on pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

timyuer commented on PR #3365:

URL: https://github.com/apache/ambari/pull/3365#issuecomment-1272229048

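The MR service check ran successfully after updating /etc/passwd, so that the local file differs from the copy already in HDFS and is re-uploaded: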

```bash

stderr:

None

stdout:

2022-10-08 04:44:09,626 - Stack Feature Version Info: Cluster Stack=3.2.0, Command Stack=None, Command Version=None -> 3.2.0

2022-10-08 04:44:09,627 - Skipping get_stack_version since /usr/bin/distro-select is not yet available

2022-10-08 04:44:09,632 - HdfsResource['/user/ambari-qa'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://ambari-agent-01:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'ambari-qa', 'hadoop_conf_dir': '/etc/hadoop/conf', 'type': 'directory', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/app-logs', u'/tmp'], 'mode': 0770}

2022-10-08 04:44:09,634 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://ambari-agent-01:50070/webhdfs/v1/user/ambari-qa?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpAV7s9y 2>/tmp/tmpszfLHv''] {'logoutput': None, 'quiet': False}

2022-10-08 04:44:09,687 - call returned (0, '')

2022-10-08 04:44:09,688 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":3,"fileId":16388,"group":"hdfs","length":0,"modificationTime":1665204196800,"owner":"ambari-qa","pathSuffix":"","permission":"770","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2022-10-08 04:44:09,689 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://ambari-agent-01:50070/webhdfs/v1/user/ambari-qa?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpRO4Fqu 2>/tmp/tmpS00zD1''] {'logoutput': None, 'quiet': False}

2022-10-08 04:44:09,807 - call returned (0, '')

2022-10-08 04:44:09,807 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":3,"fileId":16388,"group":"hdfs","length":0,"modificationTime":1665204196800,"owner":"ambari-qa","pathSuffix":"","permission":"770","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2022-10-08 04:44:09,808 - HdfsResource['/user/ambari-qa/mapredsmokeinput'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/bin', 'keytab': [EMPTY], 'source': '/etc/passwd', 'dfs_type': 'HDFS', 'default_fs': 'hdfs://ambari-agent-01:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'owner': 'ambari-qa', 'hadoop_conf_dir': '/etc/hadoop/conf', 'type': 'file', 'action': ['create_on_execute'], 'immutable_paths': [u'/mr-history/done', u'/app-logs', u'/tmp'], 'mode': 0770}

2022-10-08 04:44:09,810 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://ambari-agent-01:50070/webhdfs/v1/user/ambari-qa/mapredsmokeinput?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpsMzXqS 2>/tmp/tmpB57GCf''] {'logoutput': None, 'quiet': False}

2022-10-08 04:44:10,053 - call returned (0, '')

2022-10-08 04:44:10,053 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1665204118565,"blockSize":134217728,"childrenNum":0,"fileId":16397,"group":"hdfs","length":1123,"modificationTime":1665204119318,"owner":"ambari-qa","pathSuffix":"","permission":"770","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2022-10-08 04:44:10,054 - Creating new file /user/ambari-qa/mapredsmokeinput in DFS

2022-10-08 04:44:10,055 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT --data-binary @/etc/passwd -H '"'"'Content-Type: application/octet-stream'"'"' '"'"'http://ambari-agent-01:50070/webhdfs/v1/user/ambari-qa/mapredsmokeinput?op=CREATE&user.name=hdfs&overwrite=True&permission=770'"'"' 1>/tmp/tmpFbeZFF 2>/tmp/tmpnererF''] {'logoutput': None, 'quiet': False}

2022-10-08 04:44:10,554 - call returned (0, '')

2022-10-08 04:44:10,554 - get_user_call_output returned (0, u'201', u'')

2022-10-08 04:44:10,555 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://ambari-agent-01:50070/webhdfs/v1/user/ambari-qa/mapredsmokeinput?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmpQmU81q 2>/tmp/tmpkYAwuF''] {'logoutput': None, 'quiet': False}

2022-10-08 04:44:10,598 - call returned (0, '')

2022-10-08 04:44:10,598 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":1665204250115,"blockSize":134217728,"childrenNum":0,"fileId":16452,"group":"hdfs","length":1160,"modificationTime":1665204250549,"owner":"hdfs","pathSuffix":"","permission":"770","replication":3,"storagePolicy":0,"type":"FILE"}}200', u'')

2022-10-08 04:44:10,599 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X PUT -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://ambari-agent-01:50070/webhdfs/v1/user/ambari-qa/mapredsmokeinput?op=SETOWNER&owner=ambari-qa&group=&user.name=hdfs'"'"' 1>/tmp/tmpCMvTJ_ 2>/tmp/tmpPLp6sI''] {'logoutput': None, 'quiet': False}

2022-10-08 04:44:10,637 - call returned (0, '')

2022-10-08 04:44:10,637 - get_user_call_output returned (0, u'200', u'')

2022-10-08 04:44:10,638 - HdfsResource['/user/ambari-qa/mapredsmokeoutput'] {'security_enabled': False, 'hadoop_bin_dir': '/usr/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://ambari-agent-01:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'action': ['delete_on_execute'], 'hadoop_conf_dir': '/etc/hadoop/conf', 'type': 'directory', 'immutable_paths': [u'/mr-history/done', u'/app-logs', u'/tmp']}

2022-10-08 04:44:10,639 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X GET -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://ambari-agent-01:50070/webhdfs/v1/user/ambari-qa/mapredsmokeoutput?op=GETFILESTATUS&user.name=hdfs'"'"' 1>/tmp/tmptWqM2d 2>/tmp/tmp8437WK''] {'logoutput': None, 'quiet': False}

2022-10-08 04:44:10,673 - call returned (0, '')

2022-10-08 04:44:10,674 - get_user_call_output returned (0, u'{"FileStatus":{"accessTime":0,"blockSize":0,"childrenNum":2,"fileId":16437,"group":"hdfs","length":0,"modificationTime":1665204208837,"owner":"ambari-qa","pathSuffix":"","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"}}200', u'')

2022-10-08 04:44:10,675 - call['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'curl -sS -L -w '"'"'%{http_code}'"'"' -X DELETE -d '"'"''"'"' -H '"'"'Content-Length: 0'"'"' '"'"'http://ambari-agent-01:50070/webhdfs/v1/user/ambari-qa/mapredsmokeoutput?op=DELETE&recursive=True&user.name=hdfs'"'"' 1>/tmp/tmpiE9byr 2>/tmp/tmpXtIqou''] {'logoutput': None, 'quiet': False}

2022-10-08 04:44:10,715 - call returned (0, '')

2022-10-08 04:44:10,715 - get_user_call_output returned (0, u'{"boolean":true}200', u'')

2022-10-08 04:44:10,716 - HdfsResource[None] {'security_enabled': False, 'hadoop_bin_dir': '/usr/bin', 'keytab': [EMPTY], 'dfs_type': 'HDFS', 'default_fs': 'hdfs://ambari-agent-01:8020', 'hdfs_resource_ignore_file': '/var/lib/ambari-agent/data/.hdfs_resource_ignore', 'hdfs_site': ..., 'kinit_path_local': '/usr/bin/kinit', 'principal_name': [EMPTY], 'user': 'hdfs', 'action': ['execute'], 'hadoop_conf_dir': '/etc/hadoop/conf', 'immutable_paths': [u'/mr-history/done', u'/app-logs', u'/tmp']}

2022-10-08 04:44:10,717 - ExecuteHadoop['jar /usr/lib/hadoop-mapreduce/hadoop-mapreduce-examples-3.*.jar wordcount /user/ambari-qa/mapredsmokeinput /user/ambari-qa/mapredsmokeoutput'] {'bin_dir': '/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/var/lib/ambari-agent:/var/lib/ambari-agent:/usr/bin:/usr/lib/hadoop-yarn/bin', 'conf_dir': '/etc/hadoop/conf', 'logoutput': True, 'try_sleep': 5, 'tries': 1, 'user': 'ambari-qa'}

2022-10-08 04:44:10,717 - Execute['hadoop --config /etc/hadoop/conf jar /usr/lib/hadoop-mapreduce/hadoop-mapreduce-examples-3.*.jar wordcount /user/ambari-qa/mapredsmokeinput /user/ambari-qa/mapredsmokeoutput'] {'logoutput': True, 'try_sleep': 5, 'environment': {}, 'tries': 1, 'user': 'ambari-qa', 'path': ['/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/var/lib/ambari-agent:/var/lib/ambari-agent:/usr/bin:/usr/lib/hadoop-yarn/bin']}

22/10/08 04:44:13 INFO client.DefaultNoHARMFailoverProxyProvider: Connecting to ResourceManager at ambari-agent-02/172.19.0.4:8050

22/10/08 04:44:13 INFO client.AHSProxy: Connecting to Application History server at localhost/127.0.0.1:10200

22/10/08 04:44:13 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /user/ambari-qa/.staging/job_1665204113174_0003

22/10/08 04:44:14 INFO input.FileInputFormat: Total input files to process : 1

22/10/08 04:44:15 INFO mapreduce.JobSubmitter: number of splits:1

22/10/08 04:44:15 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1665204113174_0003

22/10/08 04:44:15 INFO mapreduce.JobSubmitter: Executing with tokens: []

22/10/08 04:44:15 INFO conf.Configuration: resource-types.xml not found

22/10/08 04:44:15 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

22/10/08 04:44:15 INFO impl.YarnClientImpl: Submitted application application_1665204113174_0003

22/10/08 04:44:15 INFO mapreduce.Job: The url to track the job: http://ambari-agent-02:8088/proxy/application_1665204113174_0003/

22/10/08 04:44:15 INFO mapreduce.Job: Running job: job_1665204113174_0003

22/10/08 04:44:23 INFO mapreduce.Job: Job job_1665204113174_0003 running in uber mode : false

22/10/08 04:44:23 INFO mapreduce.Job: map 0% reduce 0%

22/10/08 04:44:29 INFO mapreduce.Job: map 100% reduce 0%

22/10/08 04:44:35 INFO mapreduce.Job: map 100% reduce 100%

22/10/08 04:44:36 INFO mapreduce.Job: Job job_1665204113174_0003 completed successfully

22/10/08 04:44:36 INFO mapreduce.Job: Counters: 54

File System Counters

FILE: Number of bytes read=1376

FILE: Number of bytes written=565787

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1284

HDFS: Number of bytes written=1230

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=3068

Total time spent by all reduces in occupied slots (ms)=2784

Total time spent by all map tasks (ms)=3068

Total time spent by all reduce tasks (ms)=2784

Total vcore-milliseconds taken by all map tasks=3068

Total vcore-milliseconds taken by all reduce tasks=2784

Total megabyte-milliseconds taken by all map tasks=4712448

Total megabyte-milliseconds taken by all reduce tasks=4276224

Map-Reduce Framework

Map input records=26

Map output records=35

Map output bytes=1300

Map output materialized bytes=1376

Input split bytes=124

Combine input records=35

Combine output records=35

Reduce input groups=35

Reduce shuffle bytes=1376

Reduce input records=35

Reduce output records=35

Spilled Records=70

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=129

CPU time spent (ms)=1470

Physical memory (bytes) snapshot=1034907648

Virtual memory (bytes) snapshot=6090969088

Total committed heap usage (bytes)=883949568

Peak Map Physical memory (bytes)=837943296

Peak Map Virtual memory (bytes)=3028852736

Peak Reduce Physical memory (bytes)=196964352

Peak Reduce Virtual memory (bytes)=3062116352

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=1160

File Output Format Counters

Bytes Written=1230

2022-10-08 04:44:36,594 - ExecuteHadoop['fs -test -e /user/ambari-qa/mapredsmokeoutput'] {'bin_dir': '/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/var/lib/ambari-agent:/var/lib/ambari-agent:/usr/bin:/usr/lib/hadoop-yarn/bin', 'user': 'ambari-qa', 'conf_dir': '/etc/hadoop/conf'}

2022-10-08 04:44:36,595 - Execute['hadoop --config /etc/hadoop/conf fs -test -e /user/ambari-qa/mapredsmokeoutput'] {'logoutput': None, 'try_sleep': 0, 'environment': {}, 'tries': 1, 'user': 'ambari-qa', 'path': ['/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin:/var/lib/ambari-agent:/var/lib/ambari-agent:/usr/bin:/usr/lib/hadoop-yarn/bin']}

Command completed successfully!

```
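The log traces the WebHDFS calls made for the re-uploaded file: `GETFILESTATUS`, `CREATE` with `overwrite=True`, a second `GETFILESTATUS` after the re-upload, and then `SETOWNER`. As an illustration only, the sketch below rebuilds those request URLs with Python's standard library. `webhdfs_url` is a hypothetical helper (Ambari issues these requests through `curl`, as the log shows), and the NameNode address is copied from the log rather than being a general default.

```python
from urllib.parse import urlencode


def webhdfs_url(namenode, path, op, params):
    """Build a WebHDFS v1 REST URL (hypothetical helper, not an Ambari API)."""
    query = {"op": op, **params}  # 'op' first, then the extra parameters in order
    return "http://{}/webhdfs/v1{}?{}".format(namenode, path, urlencode(query))


NN = "ambari-agent-01:50070"
TARGET = "/user/ambari-qa/mapredsmokeinput"

# Request sequence for the re-uploaded file, in the order the log shows.
calls = [
    ("GET", webhdfs_url(NN, TARGET, "GETFILESTATUS", {"user.name": "hdfs"})),
    ("PUT", webhdfs_url(NN, TARGET, "CREATE",
                        {"user.name": "hdfs", "overwrite": True, "permission": "770"})),
    ("GET", webhdfs_url(NN, TARGET, "GETFILESTATUS", {"user.name": "hdfs"})),
    ("PUT", webhdfs_url(NN, TARGET, "SETOWNER",
                        {"owner": "ambari-qa", "group": "", "user.name": "hdfs"})),
]

for method, url in calls:
    print(method, url)
```

Note that a WebHDFS `CREATE` sent to the NameNode is answered with a redirect to a DataNode; the `-L` flag in the logged `curl` command follows it.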

[GitHub] [ambari] kevinw66 commented on pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

kevinw66 commented on PR #3365:

URL: https://github.com/apache/ambari/pull/3365#issuecomment-1272268089

Tested with Kerberos enabled and disabled; both work.

[GitHub] [ambari] kevinw66 merged pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

kevinw66 merged PR #3365:

URL: https://github.com/apache/ambari/pull/3365

[GitHub] [ambari] kevinw66 commented on a diff in pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

kevinw66 commented on code in PR #3365:

URL: https://github.com/apache/ambari/pull/3365#discussion_r989242960

##########

ambari-common/src/main/python/resource_management/libraries/providers/hdfs_resource.py:

##########

@@ -524,29 +524,33 @@ def _create_file(self, target, source=None, mode=""):
     file_status = self._get_file_status(target) if target!=self.main_resource.resource.target else self.target_status
     mode = "" if not mode else mode
+    kwargs = {'permission': mode} if mode else {}
     if file_status:
+      # Target file exists
       if source:
+        # Upload target file
         length = file_status['length']
         local_file_size = os.stat(source).st_size # TODO: os -> sudo
         # TODO: re-implement this using checksums
         if local_file_size == length:
           Logger.info(format("DFS file {target} is identical to {source}, skipping the copying"))
-          return
         elif not self.main_resource.resource.replace_existing_files:
           Logger.info(format("Not replacing existing DFS file {target} which is different from {source}, due to replace_existing_files=False"))
-          return
+        else:
+          Logger.info(format("Reupload file {target} in DFS"))
+
+          self.util.run_command(target, 'CREATE', method='PUT', overwrite=True, assertable_result=False, file_to_put=source, **kwargs)
+          # Get file status again after file reupload
+          self.target_status = self._get_file_status(target)

Review Comment:

We cannot reassign `self.target_status` here.

When we create a directory, `target_status` holds the directory's status. If we reassign the variable here, it will instead hold the status of the file we just uploaded.
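One way to address this, sketched under assumptions: keep the refreshed status in a local variable and hand it to the ownership step, so that `self.target_status` keeps describing the main resource (for example, a directory). The class below is a simplified, hypothetical stand-in for the provider; its method names echo the diff, but it is not the merged patch, and the in-memory `statuses` dict replaces real WebHDFS calls.

```python
class SketchProvider:
    """Hypothetical, simplified provider; `statuses` is a fake DFS (path -> status)."""

    def __init__(self, statuses, main_target):
        self.statuses = statuses
        self.main_target = main_target
        # Status of the main resource, fetched once and never overwritten.
        self.target_status = self._get_file_status(main_target)

    def _get_file_status(self, path):
        status = self.statuses.get(path)
        return dict(status) if status else None

    def _upload(self, path, length):
        # Assumption: re-creating the file as 'hdfs' makes 'hdfs' the owner.
        self.statuses[path] = {"type": "FILE", "length": length, "owner": "hdfs"}

    def _create_file(self, target, local_length):
        file_status = (self._get_file_status(target)
                       if target != self.main_target else self.target_status)
        if file_status is None or file_status["length"] != local_length:
            self._upload(target, local_length)
            # Refresh into a local variable, not self.target_status.
            file_status = self._get_file_status(target)
        return file_status  # the caller compares this with the requested owner


provider = SketchProvider(
    {"/user/ambari-qa": {"type": "DIRECTORY", "owner": "ambari-qa"},
     "/user/ambari-qa/mapredsmokeinput": {"type": "FILE", "length": 1123, "owner": "ambari-qa"}},
    main_target="/user/ambari-qa",
)
status = provider._create_file("/user/ambari-qa/mapredsmokeinput", 1160)
print(status["owner"], provider.target_status["type"])  # hdfs DIRECTORY
```

The design choice is simply scoping: the per-file status lives only as long as the per-file operation, so a directory upload that creates many files never clobbers the directory's own status.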

[GitHub] [ambari] kevinw66 commented on pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

kevinw66 commented on PR #3365:

URL: https://github.com/apache/ambari/pull/3365#issuecomment-1270350498

BTW, `self.main_resource.target` seems incorrect here; should it be `self.main_resource.resource.target`? Could you check it?

https://github.com/apache/ambari/blob/trunk/ambari-common/src/main/python/resource_management/libraries/providers/hdfs_resource.py#L429

[GitHub] [ambari] timyuer commented on pull request #3365: AMBARI-25731: HdfsResource didn't chown again when reupload local file in resource_management

Posted by GitBox <gi...@apache.org>.

timyuer commented on PR #3365:

URL: https://github.com/apache/ambari/pull/3365#issuecomment-1272227320

> BTW, self.main_resource.target seems incorrect here, should it be self.main_resource.resource.target? Can you help check it?

> https://github.com/apache/ambari/blob/trunk/ambari-common/src/main/python/resource_management/libraries/providers/hdfs_resource.py#L429

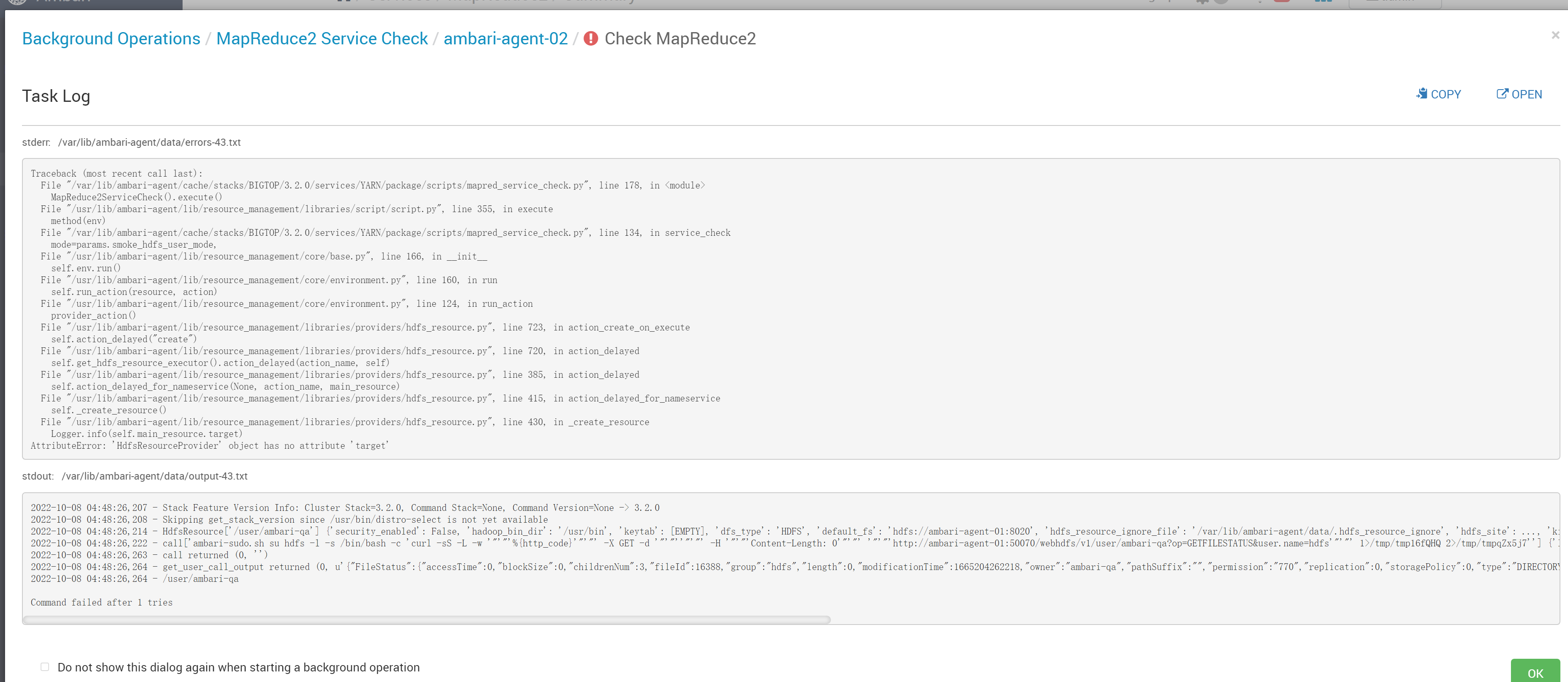

When I try to access `self.main_resource.target`, it raises `AttributeError: 'HdfsResourceProvider' object has no attribute 'target'`.
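The error is consistent with `self.main_resource` being the provider object itself, with the resource (and therefore `target`) one level further down, which is why the diff reads `self.main_resource.resource.target`. A minimal model of that nesting, using hypothetical stand-in classes rather than the real resource_management ones:

```python
class FakeResource:
    """Hypothetical stand-in for the HdfsResource definition."""

    def __init__(self, target):
        self.target = target


class HdfsResourceProvider:
    """Hypothetical stand-in: the provider wraps the resource it executes."""

    def __init__(self, resource):
        self.resource = resource


main_resource = HdfsResourceProvider(FakeResource("/user/ambari-qa/mapredsmokeinput"))

print(main_resource.resource.target)  # /user/ambari-qa/mapredsmokeinput
try:
    main_resource.target
except AttributeError as exc:
    print(exc)  # 'HdfsResourceProvider' object has no attribute 'target'
```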
