You are viewing a plain text version of this content. The canonical link for it is here.

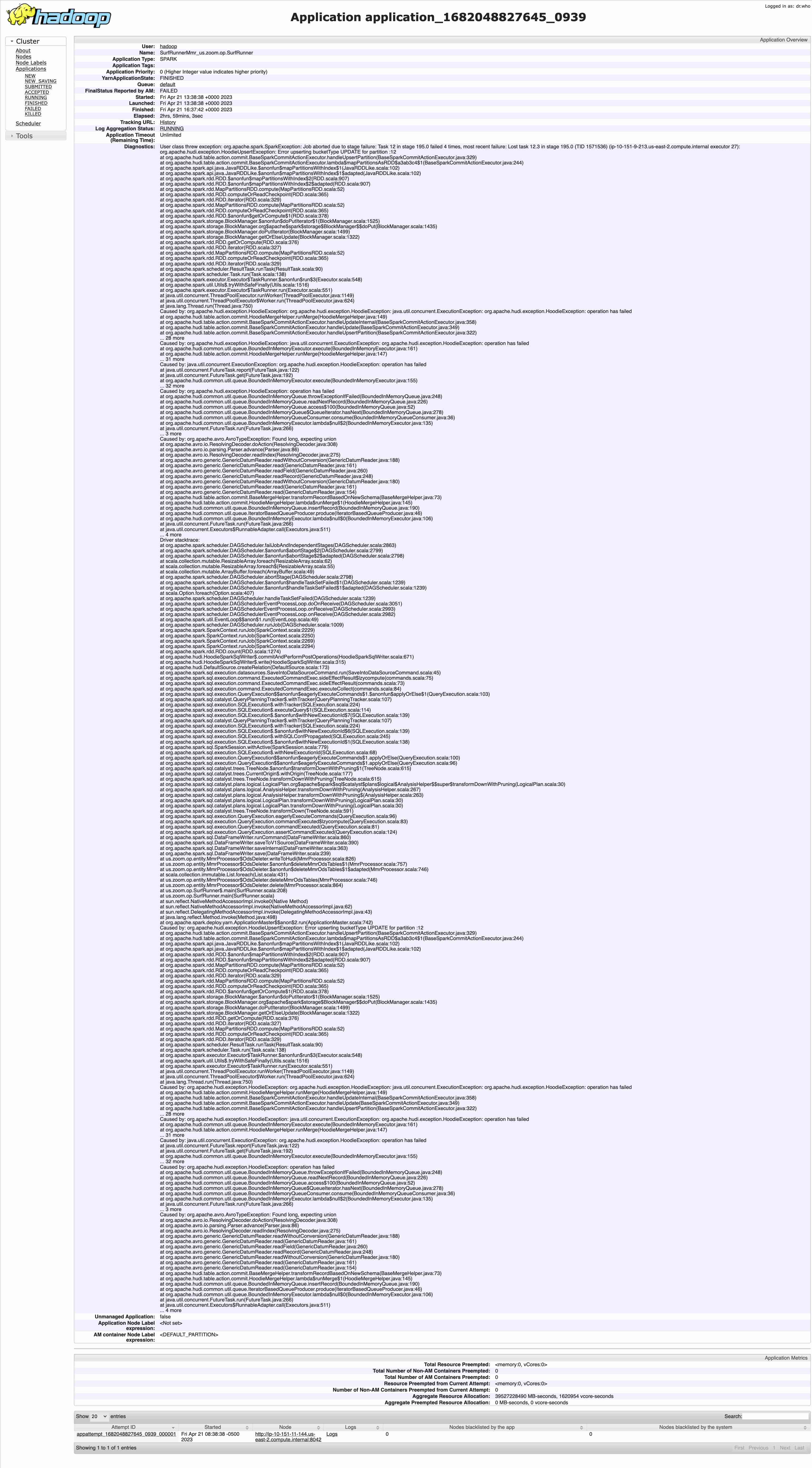

Posted to commits@hudi.apache.org by "easonwood (via GitHub)" <gi...@apache.org> on 2023/04/21 23:48:56 UTC

[GitHub] [hudi] easonwood opened a new issue, #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

easonwood opened a new issue, #8540:

URL: https://github.com/apache/hudi/issues/8540

**Describe the problem you faced**

We have a hudi table created in 20221130, and we did column change by adding some columns.

Now when we do DELETE on whole partitions, it will get error

**To Reproduce**

Steps to reproduce the behavior:

1. We have old data ::

>>> old_df = spark.read.parquet("s3://our-bucket/data-lake-hudi/ods_meeting_mmr_log/ods_mmr_conf_join/cluster=aw1/year=2022/month=11/day=30")

>>> old_df.printSchema()

root

|-- _hoodie_commit_time: string (nullable = true)

|-- _hoodie_commit_seqno: string (nullable = true)

|-- _hoodie_record_key: string (nullable = true)

|-- _hoodie_partition_path: string (nullable = true)

|-- _hoodie_file_name: string (nullable = true)

|-- _hoodie_is_deleted: boolean (nullable = true)

|-- account_id: string (nullable = true)

|-- cluster: string (nullable = true)

|-- day: string (nullable = true)

|-- error_fields: string (nullable = true)

|-- host_name: string (nullable = true)

|-- instance_id: string (nullable = true)

|-- log_time: string (nullable = true)

|-- meeting_uuid: string (nullable = true)

|-- month: string (nullable = true)

|-- region: string (nullable = true)

|-- rowkey: string (nullable = true)

|-- year: string (nullable = true)

|-- mmr_addr: string (nullable = true)

|-- top_mmr_addr: string (nullable = true)

|-- zone_name: string (nullable = true)

|-- client_addr: string (nullable = true)

|-- user_id: long (nullable = true)

|-- user_type: long (nullable = true)

|-- result: long (nullable = true)

|-- failover: long (nullable = true)

|-- name: string (nullable = true)

|-- viewonly_user: long (nullable = true)

|-- db_user_id: string (nullable = true)

|-- host_account: string (nullable = true)

|-- time_cost: long (nullable = true)

|-- device_id: string (nullable = true)

|-- index: long (nullable = true)

|-- persist_index: long (nullable = true)

|-- email: string (nullable = true)

|-- cluster_info: string (nullable = true)

|-- kms_enable: long (nullable = true)

|-- need_holding: long (nullable = true)

|-- not_guest: long (nullable = true)

|-- need_hide_pii: long (nullable = true)

|-- acct_id: string (nullable = true)

**|-- rest_fields: string (nullable = true)**

2. >>> new_df = spark.read.parquet("s3://our-bucket/data-lake-hudi/ods_meeting_mmr_log/ods_mmr_conf_join/cluster=aw1/year=2023/month=04/day=16")

>>> new_df.printSchema()

root

|-- _hoodie_commit_time: string (nullable = true)

|-- _hoodie_commit_seqno: string (nullable = true)

|-- _hoodie_record_key: string (nullable = true)

|-- _hoodie_partition_path: string (nullable = true)

|-- _hoodie_file_name: string (nullable = true)

|-- _hoodie_is_deleted: boolean (nullable = true)

|-- account_id: string (nullable = true)

|-- cluster: string (nullable = true)

|-- day: string (nullable = true)

|-- error_fields: string (nullable = true)

|-- host_name: string (nullable = true)

|-- instance_id: string (nullable = true)

|-- log_time: string (nullable = true)

|-- meeting_uuid: string (nullable = true)

|-- month: string (nullable = true)

|-- region: string (nullable = true)

|-- rowkey: string (nullable = true)

|-- year: string (nullable = true)

|-- mmr_addr: string (nullable = true)

|-- top_mmr_addr: string (nullable = true)

|-- zone_name: string (nullable = true)

|-- client_addr: string (nullable = true)

|-- user_id: long (nullable = true)

|-- user_type: long (nullable = true)

|-- result: long (nullable = true)

|-- failover: long (nullable = true)

|-- name: string (nullable = true)

|-- viewonly_user: long (nullable = true)

|-- db_user_id: string (nullable = true)

|-- host_account: string (nullable = true)

|-- time_cost: long (nullable = true)

|-- device_id: string (nullable = true)

|-- index: long (nullable = true)

|-- persist_index: long (nullable = true)

|-- email: string (nullable = true)

|-- cluster_info: string (nullable = true)

|-- kms_enable: long (nullable = true)

|-- need_holding: long (nullable = true)

|-- not_guest: long (nullable = true)

|-- need_hide_pii: long (nullable = true)

|-- acct_id: string (nullable = true)

**|-- customer_key: string (nullable = true)

|-- phone_id: long (nullable = true)

|-- bind_number: long (nullable = true)

|-- tracking_id: string (nullable = true)

|-- reg_user_id: string (nullable = true)

|-- user_sn: string (nullable = true)

|-- gateways: string (nullable = true)

|-- jid: string (nullable = true)

|-- options2: string (nullable = true)

|-- attendee_option: long (nullable = true)

|-- is_user_failover: long (nullable = true)

|-- attendee_account_id: string (nullable = true)

|-- token_uuid: string (nullable = true)

|-- restrict_join_status_sharing: long (nullable = true)

|-- rest_fields: string (nullable = true)**

3.

when we do DELETE by these config:

"hoodie.fail.on.timeline.archiving": "false",

"hoodie.datasource.write.table.type": "COPY_ON_WRITE",

"hoodie.datasource.write.hive_style_partitioning": "true",

"hoodie.datasource.write.keygenerator.class": "org.apache.hudi.keygen.CustomKeyGenerator",

"hoodie.datasource.hive_sync.enable": "false",

"hoodie.archive.automatic": "false",

"hoodie.metadata.enable": "false",

"hoodie.metadata.enable.full.scan.log.files": "false",

"hoodie.index.type": "SIMPLE",

"hoodie.embed.timeline.server": "false",

"hoodie.avro.schema.external.transformation": "true",

"hoodie.schema.on.read.enable": "true",

"hoodie.datasource.write.reconcile.schema": "true"

4. we got the error :

AvroTypeException: Found long, expecting union

**Environment Description**

* Hudi version :

* Spark version :

* Storage (HDFS/S3/GCS..) : S3

* Running on Docker? (yes/no) : no

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] ad1happy2go commented on issue #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

Posted by "ad1happy2go (via GitHub)" <gi...@apache.org>.

ad1happy2go commented on issue #8540:

URL: https://github.com/apache/hudi/issues/8540#issuecomment-1540652067

This issue is fixed in master.

This is the patch which fix this - https://github.com/apache/hudi/pull/6358

Can you try with later version of hudi which includes this patch.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] easonwood commented on issue #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

Posted by "easonwood (via GitHub)" <gi...@apache.org>.

easonwood commented on issue #8540:

URL: https://github.com/apache/hudi/issues/8540#issuecomment-1522632098

@voonhous

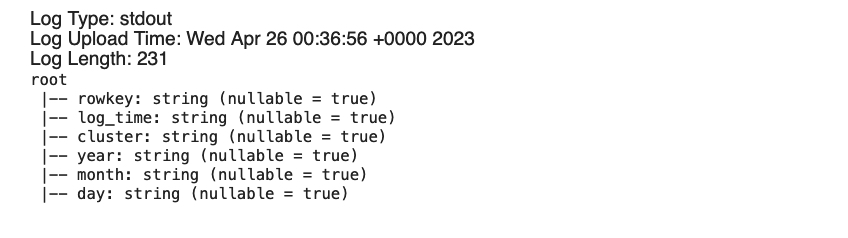

Actually we created a dataframe only containing the primaryKey for this table. And do DELETE operation by writing it to hudi.

Code is like this :

logger.error(s"Schema for dataToDelete: ${dataToDelete.printSchema()}")

dataToDelete

.write

.format("org.apache.hudi")

.options(hudiOptions)

.option(OPERATION_OPT_KEY, DELETE_OPERATION_OPT_VAL)

.option(PRECOMBINE_FIELD_OPT_KEY, precombineKey)

.option(RECORDKEY_FIELD_OPT_KEY, recordKey)

.option(HIVE_PARTITION_EXTRACTOR_CLASS_OPT_KEY, classOf[MultiPartKeysValueExtractor].getName)

.option("hoodie.datasource.write.partitionpath.field", partitionPath)

.option(HoodieWriteConfig.TABLE_NAME, tableName)

.mode(SaveMode.Append)

.save(basePath)

the dataToDelete.printSchema :

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] easonwood commented on issue #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

Posted by "easonwood (via GitHub)" <gi...@apache.org>.

easonwood commented on issue #8540:

URL: https://github.com/apache/hudi/issues/8540#issuecomment-1523375371

@voonhous

Sure, I think we can add some steps in you testcase at last.

1、first create a dataToDelete dataframe, which is from :

val sql = "select id, year, month, day from $tableName where id=$id and year=$year and month=$month and day=$day"

val dataToDelete = spark.sql(query)

2、then do DELETE operation for whole hudi table:

dataToDelete

.write

.format("org.apache.hudi")

.options(hudiOptions)

.option(OPERATION_OPT_KEY, DELETE_OPERATION_OPT_VAL)

.option(PRECOMBINE_FIELD_OPT_KEY, precombineKey)

.option(RECORDKEY_FIELD_OPT_KEY, recordKey)

.option(HIVE_PARTITION_EXTRACTOR_CLASS_OPT_KEY, classOf[MultiPartKeysValueExtractor].getName)

.option("hoodie.datasource.write.partitionpath.field", partitionPath)

.option(HoodieWriteConfig.TABLE_NAME, tableName)

.mode(SaveMode.Append)

.save(basePath)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] voonhous commented on issue #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

Posted by "voonhous (via GitHub)" <gi...@apache.org>.

voonhous commented on issue #8540:

URL: https://github.com/apache/hudi/issues/8540#issuecomment-1527190526

```scala

test("Test add column + change column type + drop partition") {

withTempDir { tmp =>

val tableName = generateTableName

spark.sql(

s"""

|create table $tableName (

| id string,

| name string,

| price string,

| ts long,

| year string,

| month string,

| day string

|) using hudi

|tblproperties(

| type = 'cow',

| primaryKey = 'id',

| preCombineField = 'ts'

|) partitioned by (`year`, `month`, `day`)

|location '${tmp.getCanonicalPath}'

""".stripMargin)

spark.sql(s"insert into $tableName values (1,'danny','2.22',1000,'2023','04','25')");

checkAnswer(s"select id, name, price, ts, year, month, day from $tableName")(

Seq("1", "danny", "2.22", 1000, "2023", "04", "25")

)

// enable hudi-full schema evolution

spark.sql(s"set hoodie.schema.on.read.enable=true")

// add a column

spark.sql(s"alter table $tableName add column (new_col bigint)")

spark.sql(s"insert into $tableName values " +

s"(2,'danny','2.22',1001,222222,'2023','04','23'), " +

s"(3,'danny','3.33',1001,333333,'2023','04','24')")

checkAnswer(s"select id, name, price, ts, new_col, year, month, day from $tableName")(

Seq("1", "danny", "2.22", 1000, null, "2023", "04", "25"),

Seq("2", "danny", "2.22", 1001, 222222, "2023", "04", "23"),

Seq("3", "danny", "3.33", 1001, 333333, "2023", "04", "24")

)

// change column type of ts to string

spark.sql(s"alter table $tableName alter ts type string")

// insert a new record into a different partition

spark.sql(s"insert into $tableName values (4,'danny','4.44','1002',444444,'2023','04','22')")

checkAnswer(s"select id, name, price, ts, new_col, year, month, day from $tableName")(

Seq("1", "danny", "2.22", "1000", null, "2023", "04", "25"),

Seq("2", "danny", "2.22", "1001", 222222, "2023", "04", "23"),

Seq("3", "danny", "3.33", "1001", 333333, "2023", "04", "24"),

Seq("4", "danny", "4.44", "1002", 444444, "2023", "04", "22")

)

import spark.implicits._

val dataToDelete = Seq(("1", "2023", "04", "25")).toDF("id", "year", "month", "day")

dataToDelete

.write

.format("org.apache.hudi")

.option(OPERATION_OPT_KEY, DELETE_OPERATION_OPT_VAL)

.option(PRECOMBINE_FIELD_OPT_KEY, "ts")

.option(RECORDKEY_FIELD_OPT_KEY, "id")

.option(HIVE_PARTITION_EXTRACTOR_CLASS_OPT_KEY, classOf[MultiPartKeysValueExtractor].getName)

.option("hoodie.schema.on.read.enable", "true")

.option("hoodie.datasource.write.reconcile.schema", "true")

.option(HoodieWriteConfig.TABLE_NAME, tableName)

.mode("append")

.save(s"${tmp.getCanonicalPath}")

val df = spark.read.format("org.apache.hudi").load(s"${tmp.getCanonicalPath}")

df.show(false)

// spark.sql(s"DELETE FROM $tableName WHERE id='1'")

// spark.sql(s"SELECT * FROM $tableName").show(false)

}

}

```

I tried running this, no issue... Can't reproduce your error.

Note: My test case might be wrong as the returned results are incorrect.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] voonhous commented on issue #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

Posted by "voonhous (via GitHub)" <gi...@apache.org>.

voonhous commented on issue #8540:

URL: https://github.com/apache/hudi/issues/8540#issuecomment-1522738859

My bad, i tried reproducing the test cases through the specifications you provided, but cannot seem to replicate them.

Instead of me trying to figure out how one can reproduce your error, can you please provide a minimal example on how one can trigger the error that you're facing?

Thanks.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] voonhous commented on issue #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

Posted by "voonhous (via GitHub)" <gi...@apache.org>.

voonhous commented on issue #8540:

URL: https://github.com/apache/hudi/issues/8540#issuecomment-1521565619

@easonwood I tried reproducing the issue with the latest version of master (d3ed4556c8c5cf4c3380ac573903c92abcffbb1d).

Not sure if I am missing anything, can't seem to trigger your error :(.

```sql

test("Test add column + change column type + drop partition") {

withTempDir { tmp =>

val tableName = generateTableName

spark.sql(

s"""

|create table $tableName (

| id string,

| name string,

| price string,

| ts long,

| year string,

| month string,

| day string

|) using hudi

|tblproperties(

| type = 'cow',

| primaryKey = 'id'

|) partitioned by (`year`, `month`, `day`)

|location '${tmp.getCanonicalPath}'

""".stripMargin)

spark.sql(s"insert into $tableName values (1,'danny','2.22',1000,'2023','04','25')");

checkAnswer(s"select id, name, price, ts, year, month, day from $tableName")(

Seq("1", "danny", "2.22", 1000, "2023", "04", "25")

)

// enable hudi-full schema evolution

spark.sql(s"set hoodie.schema.on.read.enable=true")

// add a column

spark.sql(s"alter table $tableName add column (new_col bigint)")

spark.sql(s"insert into $tableName values " +

s"(2,'danny','2.22',1001,222222,'2023','04','23'), " +

s"(3,'danny','3.33',1001,333333,'2023','04','24')")

checkAnswer(s"select id, name, price, ts, new_col, year, month, day from $tableName")(

Seq("1", "danny", "2.22", 1000, null, "2023", "04", "25"),

Seq("2", "danny", "2.22", 1001, 222222, "2023", "04", "23"),

Seq("3", "danny", "3.33", 1001, 333333, "2023", "04", "24")

)

// change column type of ts to string

spark.sql(s"alter table $tableName alter ts type string")

// insert a new record into a different partition

spark.sql(s"insert into $tableName values (4,'danny','4.44','1002',444444,'2023','04','22')")

checkAnswer(s"select id, name, price, ts, new_col, year, month, day from $tableName")(

Seq("1", "danny", "2.22", "1000", null, "2023", "04", "25"),

Seq("2", "danny", "2.22", "1001", 222222, "2023", "04", "23"),

Seq("3", "danny", "3.33", "1001", 333333, "2023", "04", "24"),

Seq("4", "danny", "4.44", "1002", 444444, "2023", "04", "22")

)

// drop a partition

spark.sql(s"alter table $tableName drop partition (year=2023,month=04,day=24)")

checkAnswer(s"select id, name, price, ts, new_col, year, month, day from $tableName")(

Seq("1", "danny", "2.22", "1000", null, "2023", "04", "25"),

Seq("2", "danny", "2.22", "1001", 222222, "2023", "04", "23"),

Seq("4", "danny", "4.44", "1002", 444444, "2023", "04", "22")

)

}

}

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] voonhous commented on issue #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

Posted by "voonhous (via GitHub)" <gi...@apache.org>.

voonhous commented on issue #8540:

URL: https://github.com/apache/hudi/issues/8540#issuecomment-1521508289

@easonwood How are you deleting the partition? i.e. is the partition being dropped via a Spark-SQL DML?

```sql

ALTER TABLE $tableName DROP PARTTION (cluster=aw1, year=2022, month=11, day=30)

```

Or did you drop it some other way?

Can you try reading the table using a more recent version? Something like 0.13.0 to see if the issue exists??

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] easonwood commented on issue #8540: [SUPPORT] Getting error when writing into COW HUDI table if schema changed (datatype changed / column dropped)

Posted by "easonwood (via GitHub)" <gi...@apache.org>.

easonwood commented on issue #8540:

URL: https://github.com/apache/hudi/issues/8540#issuecomment-1521349927

@voonhous Can you help check this issue ? thanks

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org