Posted to common-issues@hadoop.apache.org by GitBox <gi...@apache.org> on 2021/11/18 10:24:57 UTC

[GitHub] [hadoop] liubingxing opened a new pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

liubingxing opened a new pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679

JIRA: [HDFS-16333](https://issues.apache.org/jira/browse/HDFS-16333)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscribe@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: common-issues-unsubscribe@hadoop.apache.org

For additional commands, e-mail: common-issues-help@hadoop.apache.org

[GitHub] [hadoop] hemanthboyina commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

hemanthboyina commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-973028808

@liubingxing could you add a UT for your scenario?


[GitHub] [hadoop] liubingxing commented on a change in pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

liubingxing commented on a change in pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#discussion_r763930129

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/balancer/TestBalancer.java

##########

@@ -1615,6 +1619,92 @@ public void testBalancerWithStripedFile() throws Exception {

NameNodeConnector.setWrite2IdFile(false);

}

+ @Test

+ public void testBalancerWithExcludeListWithStripedFile() throws Exception {

+ Configuration conf = new Configuration();

+ initConfWithStripe(conf);

+ NameNodeConnector.setWrite2IdFile(true);

+ doTestBalancerWithExcludeListWithStripedFile(conf);

+ NameNodeConnector.setWrite2IdFile(false);

+ }

+

+ private void doTestBalancerWithExcludeListWithStripedFile(Configuration conf) throws Exception {

+ int numOfDatanodes = dataBlocks + parityBlocks + 3;

+ int numOfRacks = dataBlocks;

+ long capacity = 20 * defaultBlockSize;

+ long[] capacities = new long[numOfDatanodes];

+ Arrays.fill(capacities, capacity);

+ String[] racks = new String[numOfDatanodes];

+ for (int i = 0; i < numOfDatanodes; i++) {

+ racks[i] = "/rack" + (i % numOfRacks);

+ }

+ cluster = new MiniDFSCluster.Builder(conf)

+ .numDataNodes(numOfDatanodes)

+ .racks(racks)

+ .simulatedCapacities(capacities)

+ .build();

+

+ try {

+ cluster.waitActive();

+ client = NameNodeProxies.createProxy(conf, cluster.getFileSystem(0).getUri(),

+ ClientProtocol.class).getProxy();

+ client.enableErasureCodingPolicy(

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+ client.setErasureCodingPolicy("/",

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+

+ long totalCapacity = sum(capacities);

+

+ // fill up the cluster with 30% data. It'll be 45% full plus parity.

+ long fileLen = totalCapacity * 3 / 10;

+ long totalUsedSpace = fileLen * (dataBlocks + parityBlocks) / dataBlocks;

+ FileSystem fs = cluster.getFileSystem(0);

+ DFSTestUtil.createFile(fs, filePath, fileLen, (short) 3, r.nextLong());

+

+ // verify locations of striped blocks

+ LocatedBlocks locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

+

+ // get datanode report

+ DatanodeInfo[] datanodeReport = client.getDatanodeReport(DatanodeReportType.ALL);

+

+ // add datanode in new rack

+ String newRack = "/rack" + (++numOfRacks);

+ cluster.startDataNodes(conf, 2, true, null,

+ new String[]{newRack, newRack}, null,

+ new long[]{capacity, capacity});

+ totalCapacity += capacity*2;

+ cluster.triggerHeartbeats();

+

+ // add datanode to exclude list

+ Set<String> dnList = new HashSet<>();

+ dnList.add(datanodeReport[0].getHostName());

+ BalancerParameters.Builder pBuilder = new BalancerParameters.Builder();

+ pBuilder.setExcludedNodes(dnList);

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // start balancer and check the failed num of moving task

+ Collection<URI> namenodes = DFSUtil.getInternalNsRpcUris(conf);

+ final int run = runBalancer(namenodes, pBuilder.build(), conf, true);

+ if (conf.getInt(

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_KEY,

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_DEFAULT)

+ == 0) {

+ assertEquals(ExitStatus.NO_MOVE_PROGRESS.getExitCode(), run);

+ } else {

+ assertEquals(ExitStatus.SUCCESS.getExitCode(), run);

+ }

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

Review comment:

I updated the UT so that it calls `runBalancer` directly.

I also added a parameter, `boolean checkExcludeNodesUtilization`, to `waitForBalancer`. It controls whether the utilization of excluded datanodes is checked:

```java
DatanodeInfo[] datanodeReport =
    client.getDatanodeReport(DatanodeReportType.ALL);
// JUnit convention: expected value first, actual value second
assertEquals(cluster.getDataNodes().size(), datanodeReport.length);
balanced = true;
int actualExcludedNodeCount = 0;
for (DatanodeInfo datanode : datanodeReport) {
  double nodeUtilization = ((double) datanode.getDfsUsed())
      / datanode.getCapacity();
  if (Dispatcher.Util.isExcluded(p.getExcludedNodes(), datanode)) {
    if (checkExcludeNodesUtilization) {
      // only require excluded datanodes to be empty when the caller asks for it
      assertTrue(nodeUtilization == 0);
    }
    // ... (remainder of the loop omitted in this excerpt)
  }
  // ...
}
```
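The following self-contained sketch illustrates what the new flag controls. It is a balance check that skips excluded datanodes and asserts they are empty only when asked to. This is not the TestBalancer code. `BalanceCheckSketch`, `Node`, and `isBalanced` are hypothetical names, and `Node` stands in for `DatanodeInfo`. The threshold semantics (absolute distance from the average utilization of the non-excluded nodes) are also an assumption. The zero-utilization check presumably has to be optional because, in the new striped-file test, the excluded datanode (`datanodeReport[0]`) already holds EC data written before the balancer runs.

```java
import java.util.List;
import java.util.Set;

/** Hypothetical, self-contained model of a "is the cluster balanced?" check. */
final class BalanceCheckSketch {
  /** Hypothetical stand-in for DatanodeInfo: host name, bytes used, capacity. */
  static final class Node {
    final String host;
    final long dfsUsed;
    final long capacity;

    Node(String host, long dfsUsed, long capacity) {
      this.host = host;
      this.dfsUsed = dfsUsed;
      this.capacity = capacity;
    }

    double utilization() {
      return (double) dfsUsed / capacity;
    }
  }

  /**
   * Returns true if every non-excluded node is within `threshold` of the
   * average utilization of the non-excluded nodes. When
   * `checkExcludedUtilization` is true, an excluded node that holds any data
   * causes an AssertionError.
   */
  static boolean isBalanced(List<Node> nodes, Set<String> excluded,
      double threshold, boolean checkExcludedUtilization) {
    long used = 0;
    long capacity = 0;
    for (Node n : nodes) {
      if (!excluded.contains(n.host)) {
        used += n.dfsUsed;
        capacity += n.capacity;
      }
    }
    double average = (double) used / capacity;
    for (Node n : nodes) {
      if (excluded.contains(n.host)) {
        if (checkExcludedUtilization && n.utilization() != 0) {
          throw new AssertionError("excluded node " + n.host + " holds data");
        }
        continue; // excluded nodes never count against balance
      }
      if (Math.abs(n.utilization() - average) > threshold) {
        return false;
      }
    }
    return true;
  }
}
```

For scale, assume the policy returned by `StripedFileTestUtil.getDefaultECPolicy()` is RS-6-3 (6 data and 3 parity units). The test then starts 6 + 3 + 3 = 12 datanodes with 20 block-sizes of capacity each, or 240 block-sizes in total. `fileLen` is 30% of that (72 block-sizes). With parity it occupies 72 * 9 / 6 = 108 block-sizes, which is 45% of capacity. After the two empty datanodes are added, the same 108 block-sizes spread over 280 gives an average utilization of about 38.6%.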


[GitHub] [hadoop] liubingxing edited a comment on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

liubingxing edited a comment on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-986568780

@tasanuma Thank you for your review and comments.

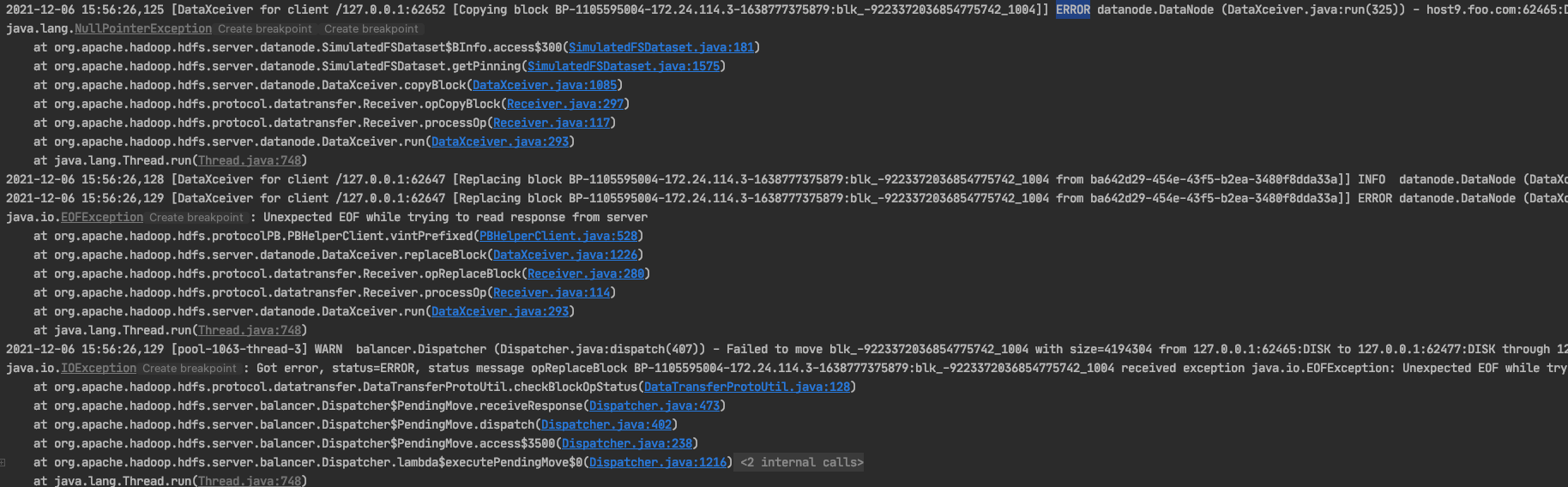

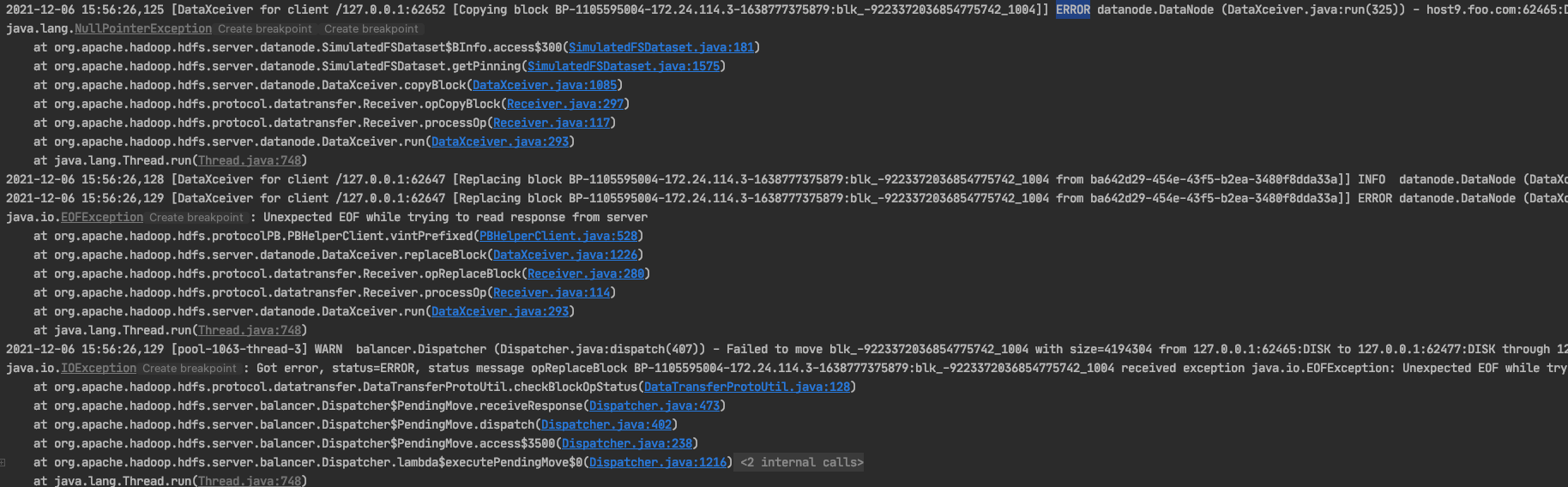

I ran the UT `doTestBalancerWithStripedFile` on the current trunk branch, and these errors occur intermittently there as well.

Therefore, `assertEquals(0, nnc.getBlocksFailed().get())` is not a reliable way to check the result in this new UT.

I will redesign the UT for this scenario as soon as possible.

If you have any suggestions, please let me know. Thank you.


[GitHub] [hadoop] liubingxing commented on a change in pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

liubingxing commented on a change in pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#discussion_r763923048

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/balancer/TestBalancer.java

##########

@@ -1615,6 +1619,92 @@ public void testBalancerWithStripedFile() throws Exception {

NameNodeConnector.setWrite2IdFile(false);

}

+ @Test

+ public void testBalancerWithExcludeListWithStripedFile() throws Exception {

+ Configuration conf = new Configuration();

+ initConfWithStripe(conf);

+ NameNodeConnector.setWrite2IdFile(true);

+ doTestBalancerWithExcludeListWithStripedFile(conf);

+ NameNodeConnector.setWrite2IdFile(false);

+ }

+

+ private void doTestBalancerWithExcludeListWithStripedFile(Configuration conf) throws Exception {

+ int numOfDatanodes = dataBlocks + parityBlocks + 3;

+ int numOfRacks = dataBlocks;

+ long capacity = 20 * defaultBlockSize;

+ long[] capacities = new long[numOfDatanodes];

+ Arrays.fill(capacities, capacity);

+ String[] racks = new String[numOfDatanodes];

+ for (int i = 0; i < numOfDatanodes; i++) {

+ racks[i] = "/rack" + (i % numOfRacks);

+ }

+ cluster = new MiniDFSCluster.Builder(conf)

+ .numDataNodes(numOfDatanodes)

+ .racks(racks)

+ .simulatedCapacities(capacities)

+ .build();

+

+ try {

+ cluster.waitActive();

+ client = NameNodeProxies.createProxy(conf, cluster.getFileSystem(0).getUri(),

+ ClientProtocol.class).getProxy();

+ client.enableErasureCodingPolicy(

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+ client.setErasureCodingPolicy("/",

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+

+ long totalCapacity = sum(capacities);

+

+ // fill up the cluster with 30% data. It'll be 45% full plus parity.

+ long fileLen = totalCapacity * 3 / 10;

+ long totalUsedSpace = fileLen * (dataBlocks + parityBlocks) / dataBlocks;

+ FileSystem fs = cluster.getFileSystem(0);

+ DFSTestUtil.createFile(fs, filePath, fileLen, (short) 3, r.nextLong());

+

+ // verify locations of striped blocks

+ LocatedBlocks locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

+

+ // get datanode report

+ DatanodeInfo[] datanodeReport = client.getDatanodeReport(DatanodeReportType.ALL);

+

+ // add datanode in new rack

+ String newRack = "/rack" + (++numOfRacks);

+ cluster.startDataNodes(conf, 2, true, null,

+ new String[]{newRack, newRack}, null,

+ new long[]{capacity, capacity});

+ totalCapacity += capacity*2;

+ cluster.triggerHeartbeats();

+

+ // add datanode to exclude list

+ Set<String> dnList = new HashSet<>();

+ dnList.add(datanodeReport[0].getHostName());

+ BalancerParameters.Builder pBuilder = new BalancerParameters.Builder();

+ pBuilder.setExcludedNodes(dnList);

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // start balancer and check the failed num of moving task

+ Collection<URI> namenodes = DFSUtil.getInternalNsRpcUris(conf);

+ final int run = runBalancer(namenodes, pBuilder.build(), conf, true);

+ if (conf.getInt(

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_KEY,

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_DEFAULT)

+ == 0) {

+ assertEquals(ExitStatus.NO_MOVE_PROGRESS.getExitCode(), run);

+ } else {

+ assertEquals(ExitStatus.SUCCESS.getExitCode(), run);

+ }

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // verify locations of striped blocks

+ locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

Review comment:

This happens because `waitForBalancer` does not wait for the NameNode to delete the extra replicas.

I fixed the code so that it checks the total block count before calling `StripedFileTestUtil.verifyLocatedStripedBlocks`, like this:

```java
// Check the total block count; wait at most 60s (20 attempts x 3s).
// totalBlocks is assumed to be the block count recorded before the balancer ran.
long startTime = Time.monotonicNow();
int count = 0;
while (count < 20) {
  count++;
  DatanodeInfo[] datanodeReport1 =
      client.getDatanodeReport(DatanodeReportType.ALL);
  long totalBlocksAfterBalancer = 0;
  for (DatanodeInfo dn : datanodeReport1) {
    totalBlocksAfterBalancer += dn.getNumBlocks();
  }
  if (totalBlocks == totalBlocksAfterBalancer) {
    System.out.println("wait " + (Time.monotonicNow() - startTime)
        + "ms to check blocks, count " + count);
    break;
  }
  cluster.triggerHeartbeats();
  Thread.sleep(3000L);
}
// verify locations of striped blocks
locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);
StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);
```
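If the block count never converges, the fixed 20 x 3s loop above falls through to the verification step without saying so. A generic poll-with-deadline helper makes the timeout explicit and lets the caller fail with a clear message. Below is a minimal sketch. `PollUntil.pollUntil` is a hypothetical name, not a Hadoop API. Hadoop's test utilities also offer `GenericTestUtils.waitFor` for this purpose; check its exact signature on the branch you are using.

```java
import java.util.function.BooleanSupplier;

/** Hypothetical polling helper: re-checks a condition until a deadline. */
final class PollUntil {
  private PollUntil() {}

  /**
   * Evaluates `condition` every `intervalMs` until it returns true or
   * `timeoutMs` has elapsed. Returns true if the condition was met in time,
   * false on timeout or interruption.
   */
  static boolean pollUntil(BooleanSupplier condition, long intervalMs,
      long timeoutMs) {
    final long deadline = System.nanoTime() + timeoutMs * 1_000_000L;
    while (true) {
      if (condition.getAsBoolean()) {
        return true;
      }
      // overflow-safe comparison of nanoTime values
      if (System.nanoTime() - deadline >= 0) {
        return false;
      }
      try {
        Thread.sleep(intervalMs);
      } catch (InterruptedException e) {
        Thread.currentThread().interrupt(); // preserve the interrupt for the caller
        return false;
      }
    }
  }
}
```

In the test, the condition would:

- sum `getNumBlocks()` over `client.getDatanodeReport(DatanodeReportType.ALL)`, wrapping the checked `IOException` inside the lambda;
- call `cluster.triggerHeartbeats()` between attempts;
- compare the sum against the pre-balancer total.

A `false` result would then become an assertion failure instead of silently continuing.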



[GitHub] [hadoop] hadoop-yetus commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

hadoop-yetus commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-988547082

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 37s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 8s | | trunk passed |
| +1 :green_heart: | compile | 1m 26s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 20s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 3s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 27s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 1s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 32s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 9s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 24s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 16s | | the patch passed |
| +1 :green_heart: | compile | 1m 19s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 19s | | the patch passed |
| +1 :green_heart: | compile | 1m 12s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 12s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 51s | | hadoop-hdfs-project/hadoop-hdfs: The patch generated 0 new + 150 unchanged - 1 fixed = 150 total (was 151) |
| +1 :green_heart: | mvnsite | 1m 18s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 50s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 25s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 16s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 3s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 237m 28s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/6/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 48s | | The patch does not generate ASF License warnings. |
| | | 335m 34s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.server.datanode.TestDataNodeHotSwapVolumes |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/6/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3679 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux e02472f6a9ee 4.15.0-156-generic #163-Ubuntu SMP Thu Aug 19 23:31:58 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / ed3a77c6c22487e4ff1d941fe0047f54bf2eb55c |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/6/testReport/ |
| Max. process+thread count | 3173 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/6/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

hadoop-yetus commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-988779522

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 46s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 39m 40s | | trunk passed |
| +1 :green_heart: | compile | 1m 32s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 22s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 4s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 27s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 0s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 32s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 12s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 14s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 17s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 13s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 13s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 52s | | hadoop-hdfs-project/hadoop-hdfs: The patch generated 0 new + 150 unchanged - 1 fixed = 150 total (was 151) |
| +1 :green_heart: | mvnsite | 1m 22s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 51s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 24s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 15s | | the patch passed |
| +1 :green_heart: | shadedclient | 23m 1s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 229m 11s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/7/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 46s | | The patch does not generate ASF License warnings. |
| | | 336m 10s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestRollingUpgrade |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/7/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3679 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux e4e612629e2e 4.15.0-156-generic #163-Ubuntu SMP Thu Aug 19 23:31:58 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 15a3fbde50af18e7ecf8394f8519e2210c969207 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/7/testReport/ |
| Max. process+thread count | 3194 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/7/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] tasanuma commented on a change in pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

tasanuma commented on a change in pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#discussion_r760910640

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/balancer/Dispatcher.java

##########

@@ -848,16 +867,30 @@ private long getBlockList() throws IOException {

synchronized (block) {

block.clearLocations();

+ if (blkLocs instanceof StripedBlockWithLocations) {

+ // indices may have been adjusted before

+ ((DBlockStriped) block).setIndices(

+ ((StripedBlockWithLocations) blkLocs).getIndices());

+ }

+

// update locations

+ List<Integer> adjustList = new ArrayList<>();

final String[] datanodeUuids = blkLocs.getDatanodeUuids();

final StorageType[] storageTypes = blkLocs.getStorageTypes();

for (int i = 0; i < datanodeUuids.length; i++) {

final StorageGroup g = storageGroupMap.get(

datanodeUuids[i], storageTypes[i]);

if (g != null) { // not unknown

block.addLocation(g);

+ } else if (blkLocs instanceof StripedBlockWithLocations) {

+ adjustList.add(i);

}

}

+

+ if (blkLocs instanceof StripedBlockWithLocations) {

+ // adjust indices if locations has been updated

Review comment:

Could you please provide more detailed comments on when the locations could be updated and why we need to adjust indices?
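Our reading of the diff is that the adjustment is positional. For a striped block, `DBlockStriped` keeps a `byte[]` of internal-block indices that is meant to line up, entry for entry, with its locations. Sometimes `getBlockList()` skips a location because `storageGroupMap` has no storage group for it, for example a datanode excluded from balancing. Every later location then shifts down by one while the indices do not, so a position-based lookup can pair a datanode with the wrong internal block.

The diff handles this in two steps:

- it records the skipped positions in `adjustList`;
- it first resets the indices from `blkLocs`, because an earlier pass may already have adjusted them.

The sketch below shows only the compaction step. `StripedIndexSketch` and `adjustIndices` are hypothetical names, not the code in the PR.

```java
import java.util.List;

/** Hypothetical illustration of keeping EC indices aligned with kept locations. */
final class StripedIndexSketch {
  private StripedIndexSketch() {}

  /**
   * Returns a copy of `indices` without the entries at the positions listed
   * in `dropped`, preserving order, so that result[k] still describes the
   * k-th location that was kept. Positions may be in any order; duplicates
   * are harmless.
   */
  static byte[] adjustIndices(byte[] indices, List<Integer> dropped) {
    boolean[] drop = new boolean[indices.length];
    for (int pos : dropped) {
      drop[pos] = true;
    }
    int kept = 0;
    for (boolean d : drop) {
      if (!d) {
        kept++;
      }
    }
    byte[] result = new byte[kept];
    int j = 0;
    for (int i = 0; i < indices.length; i++) {
      if (!drop[i]) {
        result[j++] = indices[i];
      }
    }
    return result;
  }
}
```

For example, take indices `{0, 1, 2, 6, 7, 8}` where the datanode at position 1 is skipped. The remaining locations must be paired with `{0, 2, 6, 7, 8}`. Without the adjustment, the datanode that now sits at position 1 would be treated as holding internal block 1 instead of 2.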

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/balancer/TestBalancer.java

##########

@@ -1615,6 +1619,92 @@ public void testBalancerWithStripedFile() throws Exception {

NameNodeConnector.setWrite2IdFile(false);

}

+ @Test

+ public void testBalancerWithExcludeListWithStripedFile() throws Exception {

+ Configuration conf = new Configuration();

+ initConfWithStripe(conf);

+ NameNodeConnector.setWrite2IdFile(true);

+ doTestBalancerWithExcludeListWithStripedFile(conf);

+ NameNodeConnector.setWrite2IdFile(false);

+ }

+

+ private void doTestBalancerWithExcludeListWithStripedFile(Configuration conf) throws Exception {

+ int numOfDatanodes = dataBlocks + parityBlocks + 3;

+ int numOfRacks = dataBlocks;

+ long capacity = 20 * defaultBlockSize;

+ long[] capacities = new long[numOfDatanodes];

+ Arrays.fill(capacities, capacity);

+ String[] racks = new String[numOfDatanodes];

+ for (int i = 0; i < numOfDatanodes; i++) {

+ racks[i] = "/rack" + (i % numOfRacks);

+ }

+ cluster = new MiniDFSCluster.Builder(conf)

+ .numDataNodes(numOfDatanodes)

+ .racks(racks)

+ .simulatedCapacities(capacities)

+ .build();

+

+ try {

+ cluster.waitActive();

+ client = NameNodeProxies.createProxy(conf, cluster.getFileSystem(0).getUri(),

+ ClientProtocol.class).getProxy();

+ client.enableErasureCodingPolicy(

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+ client.setErasureCodingPolicy("/",

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+

+ long totalCapacity = sum(capacities);

+

+ // fill up the cluster with 30% data. It'll be 45% full plus parity.

+ long fileLen = totalCapacity * 3 / 10;

+ long totalUsedSpace = fileLen * (dataBlocks + parityBlocks) / dataBlocks;

+ FileSystem fs = cluster.getFileSystem(0);

+ DFSTestUtil.createFile(fs, filePath, fileLen, (short) 3, r.nextLong());

+

+ // verify locations of striped blocks

+ LocatedBlocks locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

+

+ // get datanode report

+ DatanodeInfo[] datanodeReport = client.getDatanodeReport(DatanodeReportType.ALL);

+

+ // add datanode in new rack

+ String newRack = "/rack" + (++numOfRacks);

+ cluster.startDataNodes(conf, 2, true, null,

+ new String[]{newRack, newRack}, null,

+ new long[]{capacity, capacity});

+ totalCapacity += capacity*2;

+ cluster.triggerHeartbeats();

+

+ // add datanode to exclude list

+ Set<String> dnList = new HashSet<>();

+ dnList.add(datanodeReport[0].getHostName());

+ BalancerParameters.Builder pBuilder = new BalancerParameters.Builder();

+ pBuilder.setExcludedNodes(dnList);

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // start balancer and check the failed num of moving task

+ Collection<URI> namenodes = DFSUtil.getInternalNsRpcUris(conf);

+ final int run = runBalancer(namenodes, pBuilder.build(), conf, true);

+ if (conf.getInt(

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_KEY,

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_DEFAULT)

+ == 0) {

+ assertEquals(ExitStatus.NO_MOVE_PROGRESS.getExitCode(), run);

+ } else {

+ assertEquals(ExitStatus.SUCCESS.getExitCode(), run);

+ }

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

Review comment:

How about adding another `void runBalancer` method

```diff

private void runBalancer(Configuration conf, long totalUsedSpace,

long totalCapacity, BalancerParameters p, int excludedNodes)

throws Exception {

+ runBalancer(conf, totalUsedSpace, totalCapacity, p, excludedNodes, false);

+ }

+

+ private void runBalancer(Configuration conf, long totalUsedSpace,

+ long totalCapacity, BalancerParameters p, int excludedNodes, boolean checkFailedNum)

+ throws Exception {

waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

```

and just calling it from the unit test?

```suggestion

runBalancer(namenodes, pBuilder.build(), conf, true);

```
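A minimal sketch of the delegating-overload idea. The parameter list is trimmed to a few hypothetical arguments; the real `TestBalancer` helpers also take `Configuration`, `BalancerParameters` and cluster state. The old signature forwards to the new one with `checkFailedNum = false`, so existing callers keep their behaviour and only the new striped-file test opts in.

```java
// Hypothetical, simplified stand-in for the TestBalancer helpers; the
// counters only exist so the forwarding behaviour can be observed.
final class OverloadSketch {
  static int callsWithCheck = 0;
  static int callsWithoutCheck = 0;

  // Existing signature: unchanged for current callers, forwards with false.
  static void runBalancer(long totalUsedSpace, long totalCapacity, int excludedNodes) {
    runBalancer(totalUsedSpace, totalCapacity, excludedNodes, false);
  }

  // New overload carrying the extra flag; only new tests pass true.
  static void runBalancer(long totalUsedSpace, long totalCapacity,
      int excludedNodes, boolean checkFailedNum) {
    if (checkFailedNum) {
      callsWithCheck++;    // a real test would assert on failed moves here
    } else {
      callsWithoutCheck++;
    }
  }

  public static void main(String[] args) {
    runBalancer(100L, 1000L, 0);        // old call site
    runBalancer(100L, 1000L, 1, true);  // new striped-file test
    System.out.println(callsWithoutCheck + " " + callsWithCheck); // prints "1 1"
  }
}
```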

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/balancer/Dispatcher.java

##########

@@ -532,6 +532,25 @@ public long getNumBytes(StorageGroup storage) {

}

return block.getNumBytes();

}

+

+ public void setIndices(byte[] indices) {

+ this.indices = indices;

+ }

+

+ public void adjustIndices(List<Integer> list) {

Review comment:

Please provide comments on what this method does.
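For reference, a hypothetical sketch of what an `adjustIndices`-style helper could do. It assumes, as the `getBlockList()` hunk in this thread suggests, that position `i` of the block's indices array lines up with position `i` of `datanodeUuids`, and that the list holds the distinct positions whose datanodes were not found in `storageGroupMap`. It is not the code merged in this PR.

```java
import java.util.Arrays;
import java.util.List;

// Sketch only: a stand-in for the index adjustment discussed for
// DBlockStriped, not the PR's implementation.
final class StripedIndicesSketch {

  // Returns a copy of indices without the entries at the given positions, so
  // the remaining locations and internal-block indices stay aligned.
  // Assumes skipped positions are distinct and within range.
  static byte[] adjustIndices(byte[] indices, List<Integer> skipped) {
    if (skipped.isEmpty()) {
      return indices.clone();
    }
    byte[] adjusted = new byte[indices.length - skipped.size()];
    int j = 0;
    for (int i = 0; i < indices.length; i++) {
      if (!skipped.contains(i)) { // i is boxed to Integer for the lookup
        adjusted[j++] = indices[i];
      }
    }
    return adjusted;
  }

  public static void main(String[] args) {
    // A block group reported on 5 datanodes holding internal blocks 0,1,2,6,7;
    // the datanode at position 1 is excluded, so index 1 is dropped as well.
    byte[] reported = {0, 1, 2, 6, 7};
    byte[] adjusted = adjustIndices(reported, Arrays.asList(1));
    System.out.println(Arrays.toString(adjusted)); // prints "[0, 2, 6, 7]"
  }
}
```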

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/balancer/TestBalancer.java

##########

@@ -1615,6 +1619,92 @@ public void testBalancerWithStripedFile() throws Exception {

NameNodeConnector.setWrite2IdFile(false);

}

+ @Test

+ public void testBalancerWithExcludeListWithStripedFile() throws Exception {

+ Configuration conf = new Configuration();

+ initConfWithStripe(conf);

+ NameNodeConnector.setWrite2IdFile(true);

+ doTestBalancerWithExcludeListWithStripedFile(conf);

+ NameNodeConnector.setWrite2IdFile(false);

+ }

+

+ private void doTestBalancerWithExcludeListWithStripedFile(Configuration conf) throws Exception {

+ int numOfDatanodes = dataBlocks + parityBlocks + 3;

+ int numOfRacks = dataBlocks;

+ long capacity = 20 * defaultBlockSize;

+ long[] capacities = new long[numOfDatanodes];

+ Arrays.fill(capacities, capacity);

+ String[] racks = new String[numOfDatanodes];

+ for (int i = 0; i < numOfDatanodes; i++) {

+ racks[i] = "/rack" + (i % numOfRacks);

+ }

+ cluster = new MiniDFSCluster.Builder(conf)

+ .numDataNodes(numOfDatanodes)

+ .racks(racks)

+ .simulatedCapacities(capacities)

+ .build();

+

+ try {

+ cluster.waitActive();

+ client = NameNodeProxies.createProxy(conf, cluster.getFileSystem(0).getUri(),

+ ClientProtocol.class).getProxy();

+ client.enableErasureCodingPolicy(

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+ client.setErasureCodingPolicy("/",

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+

+ long totalCapacity = sum(capacities);

+

+ // fill up the cluster with 30% data. It'll be 45% full plus parity.

+ long fileLen = totalCapacity * 3 / 10;

+ long totalUsedSpace = fileLen * (dataBlocks + parityBlocks) / dataBlocks;

+ FileSystem fs = cluster.getFileSystem(0);

+ DFSTestUtil.createFile(fs, filePath, fileLen, (short) 3, r.nextLong());

+

+ // verify locations of striped blocks

+ LocatedBlocks locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

+

+ // get datanode report

+ DatanodeInfo[] datanodeReport = client.getDatanodeReport(DatanodeReportType.ALL);

+

+ // add datanode in new rack

+ String newRack = "/rack" + (++numOfRacks);

+ cluster.startDataNodes(conf, 2, true, null,

+ new String[]{newRack, newRack}, null,

+ new long[]{capacity, capacity});

+ totalCapacity += capacity*2;

+ cluster.triggerHeartbeats();

+

+ // add datanode to exclude list

+ Set<String> dnList = new HashSet<>();

+ dnList.add(datanodeReport[0].getHostName());

+ BalancerParameters.Builder pBuilder = new BalancerParameters.Builder();

+ pBuilder.setExcludedNodes(dnList);

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // start balancer and check the failed num of moving task

+ Collection<URI> namenodes = DFSUtil.getInternalNsRpcUris(conf);

+ final int run = runBalancer(namenodes, pBuilder.build(), conf, true);

+ if (conf.getInt(

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_KEY,

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_DEFAULT)

+ == 0) {

+ assertEquals(ExitStatus.NO_MOVE_PROGRESS.getExitCode(), run);

+ } else {

+ assertEquals(ExitStatus.SUCCESS.getExitCode(), run);

+ }

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // verify locations of striped blocks

+ locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

Review comment:

I ran the unit test multiple times, and sometimes the length of `locatedBlocks` is larger than `groupSize`, so the verification fails. Could you check it?
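For reference, the sizes this test works with, assuming the default RS-6-3 policy (`dataBlocks = 6`, `parityBlocks = 3`) and treating `defaultBlockSize` as one abstract unit: each block group should report `groupSize = 9` locations, and 30% user data plus parity fills 45% of the 12 original datanodes. This sketch only redoes that arithmetic; it does not model the cluster.

```java
// Arithmetic-only sketch; assumes RS-6-3 and one abstract unit per block.
final class StripedSizingSketch {
  // Space consumed by a striped file once parity cells are included.
  static long usedWithParity(long fileLen, int dataBlocks, int parityBlocks) {
    return fileLen * (dataBlocks + parityBlocks) / dataBlocks;
  }

  public static void main(String[] args) {
    int dataBlocks = 6;
    int parityBlocks = 3;
    int groupSize = dataBlocks + parityBlocks;          // locations per block group
    int numOfDatanodes = dataBlocks + parityBlocks + 3; // 12 datanodes initially
    long capacity = 20;                                 // 20 * defaultBlockSize, in units
    long totalCapacity = numOfDatanodes * capacity;     // 240
    long fileLen = totalCapacity * 3 / 10;              // 72: 30% user data
    long totalUsed = usedWithParity(fileLen, dataBlocks, parityBlocks); // 108
    System.out.println(groupSize + " " + totalCapacity + " " + fileLen + " " + totalUsed);
    // prints "9 240 72 108"
    System.out.println(100.0 * totalUsed / totalCapacity + "% used"); // prints "45.0% used"
  }
}
```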


[GitHub] [hadoop] hadoop-yetus commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-972735018

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 0s | | Docker mode activated. |
| -1 :x: | patch | 0m 20s | | https://github.com/apache/hadoop/pull/3679 does not apply to trunk. Rebase required? Wrong Branch? See https://cwiki.apache.org/confluence/display/HADOOP/How+To+Contribute for help. |

| Subsystem | Report/Notes |
|----------:|:-------------|
| GITHUB PR | https://github.com/apache/hadoop/pull/3679 |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/1/console |
| versions | git=2.17.1 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] tasanuma commented on a change in pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

tasanuma commented on a change in pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#discussion_r764568831

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/balancer/Dispatcher.java

##########

@@ -848,16 +871,35 @@ private long getBlockList() throws IOException {

synchronized (block) {

block.clearLocations();

+ if (blkLocs instanceof StripedBlockWithLocations) {

+ // EC block indices may have been adjusted before; reset them to avoid repeated adjustments

+ ((DBlockStriped) block).setIndices(

+ ((StripedBlockWithLocations) blkLocs).getIndices());

+ }

+

// update locations

+ List<Integer> adjustList = new ArrayList<>();

final String[] datanodeUuids = blkLocs.getDatanodeUuids();

final StorageType[] storageTypes = blkLocs.getStorageTypes();

for (int i = 0; i < datanodeUuids.length; i++) {

final StorageGroup g = storageGroupMap.get(

datanodeUuids[i], storageTypes[i]);

if (g != null) { // not unknown

block.addLocation(g);

+ } else if (blkLocs instanceof StripedBlockWithLocations) {

+ // some datanodes may not be in storageGroupMap due to a decommission operation

+ // or the balancer CLI's "-exclude" parameter

+ adjustList.add(i);

}

}

+

+ if (!adjustList.isEmpty()) {

+ // block.locations mismatch with block.indices

+ // adjust indices to get correct internalBlock for Datanode in #getInternalBlock

+ ((DBlockStriped) block).adjustIndices(adjustList);

+ Preconditions.checkArgument(((DBlockStriped) block).indices.length

Review comment:

As `Preconditions.checkArgument()` can throw `IllegalArgumentException`, `getBlockList()` should declare it, and `dispatchBlocks()` should catch the exception.

```diff

- private long getBlockList() throws IOException {

+ private long getBlockList() throws IOException, IllegalArgumentException {

```

```diff

try {

final long received = getBlockList();

if (received == 0) {

return;

}

blocksToReceive -= received;

continue;

- } catch (IOException e) {

+ } catch (IOException|IllegalArgumentException e) {

LOG.warn("Exception while getting reportedBlock list", e);

return;

}

```
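A self-contained sketch of the suggested error handling. `fetchBlockList` is a hypothetical stand-in for `getBlockList()`, and it throws `IllegalArgumentException` directly instead of calling Guava's `Preconditions.checkArgument`. Because `IllegalArgumentException` is unchecked, listing it in `throws` only documents it; the multi-catch is what keeps it from escaping `dispatchBlocks()`.

```java
import java.io.IOException;

// Sketch only: names and checks are illustrative, not Dispatcher's code.
final class MultiCatchSketch {
  static long fetchBlockList(int indicesLength, int locationsLength)
      throws IOException, IllegalArgumentException {
    if (indicesLength < 0) {
      throw new IOException("simulated RPC failure");
    }
    if (indicesLength != locationsLength) {
      // Same exception type Preconditions.checkArgument would throw.
      throw new IllegalArgumentException(
          "indices " + indicesLength + " != locations " + locationsLength);
    }
    return locationsLength;
  }

  // Returns the received count, or -1 after logging, mirroring the
  // "warn and return" branch in dispatchBlocks().
  static long dispatchOnce(int indicesLength, int locationsLength) {
    try {
      return fetchBlockList(indicesLength, locationsLength);
    } catch (IOException | IllegalArgumentException e) {
      System.err.println("Exception while getting reportedBlock list: " + e);
      return -1;
    }
  }

  public static void main(String[] args) {
    System.out.println(dispatchOnce(9, 9));  // prints "9"
    System.out.println(dispatchOnce(8, 9));  // prints "-1" (argument check)
    System.out.println(dispatchOnce(-1, 9)); // prints "-1" (I/O failure)
  }
}
```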

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/balancer/TestBalancer.java

##########

@@ -1615,6 +1636,107 @@ public void testBalancerWithStripedFile() throws Exception {

NameNodeConnector.setWrite2IdFile(false);

}

+ @Test

Review comment:

Could you move `testBalancerWithExcludeListWithStripedFile()` and `doTestBalancerWithExcludeListWithStripedFile()` after `doTestBalancerWithStripedFile()`?

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/balancer/TestBalancer.java

##########

@@ -1615,6 +1619,92 @@ public void testBalancerWithStripedFile() throws Exception {

NameNodeConnector.setWrite2IdFile(false);

}

+ @Test

+ public void testBalancerWithExcludeListWithStripedFile() throws Exception {

+ Configuration conf = new Configuration();

+ initConfWithStripe(conf);

+ NameNodeConnector.setWrite2IdFile(true);

+ doTestBalancerWithExcludeListWithStripedFile(conf);

+ NameNodeConnector.setWrite2IdFile(false);

+ }

+

+ private void doTestBalancerWithExcludeListWithStripedFile(Configuration conf) throws Exception {

+ int numOfDatanodes = dataBlocks + parityBlocks + 3;

+ int numOfRacks = dataBlocks;

+ long capacity = 20 * defaultBlockSize;

+ long[] capacities = new long[numOfDatanodes];

+ Arrays.fill(capacities, capacity);

+ String[] racks = new String[numOfDatanodes];

+ for (int i = 0; i < numOfDatanodes; i++) {

+ racks[i] = "/rack" + (i % numOfRacks);

+ }

+ cluster = new MiniDFSCluster.Builder(conf)

+ .numDataNodes(numOfDatanodes)

+ .racks(racks)

+ .simulatedCapacities(capacities)

+ .build();

+

+ try {

+ cluster.waitActive();

+ client = NameNodeProxies.createProxy(conf, cluster.getFileSystem(0).getUri(),

+ ClientProtocol.class).getProxy();

+ client.enableErasureCodingPolicy(

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+ client.setErasureCodingPolicy("/",

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+

+ long totalCapacity = sum(capacities);

+

+ // fill up the cluster with 30% data. It'll be 45% full plus parity.

+ long fileLen = totalCapacity * 3 / 10;

+ long totalUsedSpace = fileLen * (dataBlocks + parityBlocks) / dataBlocks;

+ FileSystem fs = cluster.getFileSystem(0);

+ DFSTestUtil.createFile(fs, filePath, fileLen, (short) 3, r.nextLong());

+

+ // verify locations of striped blocks

+ LocatedBlocks locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

+

+ // get datanode report

+ DatanodeInfo[] datanodeReport = client.getDatanodeReport(DatanodeReportType.ALL);

+

+ // add datanode in new rack

+ String newRack = "/rack" + (++numOfRacks);

+ cluster.startDataNodes(conf, 2, true, null,

+ new String[]{newRack, newRack}, null,

+ new long[]{capacity, capacity});

+ totalCapacity += capacity*2;

+ cluster.triggerHeartbeats();

+

+ // add datanode to exclude list

+ Set<String> dnList = new HashSet<>();

+ dnList.add(datanodeReport[0].getHostName());

+ BalancerParameters.Builder pBuilder = new BalancerParameters.Builder();

+ pBuilder.setExcludedNodes(dnList);

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // start balancer and check the failed num of moving task

+ Collection<URI> namenodes = DFSUtil.getInternalNsRpcUris(conf);

+ final int run = runBalancer(namenodes, pBuilder.build(), conf, true);

+ if (conf.getInt(

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_KEY,

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_DEFAULT)

+ == 0) {

+ assertEquals(ExitStatus.NO_MOVE_PROGRESS.getExitCode(), run);

+ } else {

+ assertEquals(ExitStatus.SUCCESS.getExitCode(), run);

+ }

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // verify locations of striped blocks

+ locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

Review comment:

It makes sense. How about using `GenericTestUtils#waitFor` for checking it?
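A minimal polling sketch modeled on the `(check, checkEveryMillis, waitForMillis)` shape of `GenericTestUtils#waitFor`; the helper below is a hypothetical stand-in, not Hadoop's implementation. It assumes, as one plausible explanation for the flaky check, that a moved internal block is briefly reported on both the source and the target datanode, so the location count settles back to `groupSize` only after the excess copy is removed.

```java
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

// Self-contained stand-in for a test polling helper; not Hadoop code.
final class WaitForSketch {
  static void waitFor(Supplier<Boolean> check, long checkEveryMillis, long waitForMillis)
      throws TimeoutException, InterruptedException {
    long deadline = System.currentTimeMillis() + waitForMillis;
    while (!check.get()) {
      if (System.currentTimeMillis() >= deadline) {
        throw new TimeoutException("condition not met within " + waitForMillis + " ms");
      }
      Thread.sleep(checkEveryMillis);
    }
  }

  public static void main(String[] args) throws Exception {
    // Simulated location counts for one block group on successive checks:
    // two over-reported polls, then the expected groupSize of 9.
    int[] reported = {10, 10, 9};
    int[] calls = {0};
    int groupSize = 9;
    waitFor(() -> reported[Math.min(calls[0]++, reported.length - 1)] == groupSize, 10, 1000);
    System.out.println("settled after " + calls[0] + " checks"); // prints "settled after 3 checks"
  }
}
```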


[GitHub] [hadoop] tasanuma commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

tasanuma commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-988621405

Thanks for updating it. +1, pending Jenkins.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-973036747

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 45s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| -1 :x: | test4tests | 0m 0s | | The patch doesn't appear to include any new or modified tests. Please justify why no new tests are needed for this patch. Also please list what manual steps were performed to verify this patch. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 36m 14s | | trunk passed |
| +1 :green_heart: | compile | 1m 37s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 25s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 2s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 25s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 1s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 33s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 12s | | trunk passed |
| +1 :green_heart: | shadedclient | 21m 58s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 16s | | the patch passed |
| +1 :green_heart: | compile | 1m 18s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 18s | | the patch passed |
| +1 :green_heart: | compile | 1m 13s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 13s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 54s | [/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/2/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 3 new + 40 unchanged - 1 fixed = 43 total (was 41) |
| +1 :green_heart: | mvnsite | 1m 19s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 51s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 25s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 16s | | the patch passed |
| +1 :green_heart: | shadedclient | 23m 7s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 234m 29s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 41s | | The patch does not generate ASF License warnings. |
| | | 337m 59s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/2/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3679 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 4ddcfd7667b5 4.15.0-156-generic #163-Ubuntu SMP Thu Aug 19 23:31:58 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 46dbec8d4354b9fadf094351b3edd501e9f67c40 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/2/testReport/ |
| Max. process+thread count | 3060 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/2/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-977056058

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 58s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 34m 13s | | trunk passed |
| +1 :green_heart: | compile | 1m 28s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 27s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 2s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 28s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 6s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 33s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 19s | | trunk passed |
| +1 :green_heart: | shadedclient | 23m 11s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 15s | | the patch passed |
| +1 :green_heart: | compile | 1m 17s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 17s | | the patch passed |
| +1 :green_heart: | compile | 1m 13s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 13s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 0m 53s | | hadoop-hdfs-project/hadoop-hdfs: The patch generated 0 new + 150 unchanged - 1 fixed = 150 total (was 151) |
| +1 :green_heart: | mvnsite | 1m 21s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 53s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 27s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 31s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 11s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| -1 :x: | unit | 245m 42s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 47s | | The patch does not generate ASF License warnings. |
| | | 347m 58s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestRollingUpgrade |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3679 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 0e6e56cf6333 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / e999e8708ade3fec186bd54f1705ba91e6add2eb |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/4/testReport/ |
| Max. process+thread count | 2923 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/4/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] tasanuma merged pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

tasanuma merged pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679


[GitHub] [hadoop] liubingxing commented on a change in pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

liubingxing commented on a change in pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#discussion_r763930129

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/balancer/TestBalancer.java

##########

@@ -1615,6 +1619,92 @@ public void testBalancerWithStripedFile() throws Exception {

NameNodeConnector.setWrite2IdFile(false);

}

+ @Test

+ public void testBalancerWithExcludeListWithStripedFile() throws Exception {

+ Configuration conf = new Configuration();

+ initConfWithStripe(conf);

+ NameNodeConnector.setWrite2IdFile(true);

+ doTestBalancerWithExcludeListWithStripedFile(conf);

+ NameNodeConnector.setWrite2IdFile(false);

+ }

+

+ private void doTestBalancerWithExcludeListWithStripedFile(Configuration conf) throws Exception {

+ int numOfDatanodes = dataBlocks + parityBlocks + 3;

+ int numOfRacks = dataBlocks;

+ long capacity = 20 * defaultBlockSize;

+ long[] capacities = new long[numOfDatanodes];

+ Arrays.fill(capacities, capacity);

+ String[] racks = new String[numOfDatanodes];

+ for (int i = 0; i < numOfDatanodes; i++) {

+ racks[i] = "/rack" + (i % numOfRacks);

+ }

+ cluster = new MiniDFSCluster.Builder(conf)

+ .numDataNodes(numOfDatanodes)

+ .racks(racks)

+ .simulatedCapacities(capacities)

+ .build();

+

+ try {

+ cluster.waitActive();

+ client = NameNodeProxies.createProxy(conf, cluster.getFileSystem(0).getUri(),

+ ClientProtocol.class).getProxy();

+ client.enableErasureCodingPolicy(

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+ client.setErasureCodingPolicy("/",

+ StripedFileTestUtil.getDefaultECPolicy().getName());

+

+ long totalCapacity = sum(capacities);

+

+ // fill up the cluster with 30% data. It'll be 45% full plus parity.

+ long fileLen = totalCapacity * 3 / 10;

+ long totalUsedSpace = fileLen * (dataBlocks + parityBlocks) / dataBlocks;

+ FileSystem fs = cluster.getFileSystem(0);

+ DFSTestUtil.createFile(fs, filePath, fileLen, (short) 3, r.nextLong());

+

+ // verify locations of striped blocks

+ LocatedBlocks locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);

+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);

+

+ // get datanode report

+ DatanodeInfo[] datanodeReport = client.getDatanodeReport(DatanodeReportType.ALL);

+

+ // add datanode in new rack

+ String newRack = "/rack" + (++numOfRacks);

+ cluster.startDataNodes(conf, 2, true, null,

+ new String[]{newRack, newRack}, null,

+ new long[]{capacity, capacity});

+ totalCapacity += capacity*2;

+ cluster.triggerHeartbeats();

+

+ // add datanode to exclude list

+ Set<String> dnList = new HashSet<>();

+ dnList.add(datanodeReport[0].getHostName());

+ BalancerParameters.Builder pBuilder = new BalancerParameters.Builder();

+ pBuilder.setExcludedNodes(dnList);

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

+

+ // start balancer and check the failed num of moving task

+ Collection<URI> namenodes = DFSUtil.getInternalNsRpcUris(conf);

+ final int run = runBalancer(namenodes, pBuilder.build(), conf, true);

+ if (conf.getInt(

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_KEY,

+ DFSConfigKeys.DFS_DATANODE_BALANCE_MAX_NUM_CONCURRENT_MOVES_DEFAULT)

+ == 0) {

+ assertEquals(ExitStatus.NO_MOVE_PROGRESS.getExitCode(), run);

+ } else {

+ assertEquals(ExitStatus.SUCCESS.getExitCode(), run);

+ }

+ waitForHeartBeat(totalUsedSpace, totalCapacity, client, cluster);

Review comment:

I updated the UT to call `runBalancer` from the unit test.


[GitHub] [hadoop] liubingxing commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

liubingxing commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-988794542

The failed unit tests are not related to this PR.


[GitHub] [hadoop] hadoop-yetus commented on pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#issuecomment-988058699

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 43s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +1 :green_heart: | mvninstall | 32m 24s | | trunk passed |
| +1 :green_heart: | compile | 1m 28s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | compile | 1m 19s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | checkstyle | 1m 0s | | trunk passed |
| +1 :green_heart: | mvnsite | 1m 26s | | trunk passed |
| +1 :green_heart: | javadoc | 1m 1s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 36s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 21s | | trunk passed |
| +1 :green_heart: | shadedclient | 22m 54s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +1 :green_heart: | mvninstall | 1m 16s | | the patch passed |
| +1 :green_heart: | compile | 1m 44s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javac | 1m 44s | | the patch passed |
| +1 :green_heart: | compile | 1m 14s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | javac | 1m 14s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| -0 :warning: | checkstyle | 0m 55s | [/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/5/artifact/out/results-checkstyle-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs-project/hadoop-hdfs: The patch generated 3 new + 150 unchanged - 1 fixed = 153 total (was 151) |
| +1 :green_heart: | mvnsite | 1m 20s | | the patch passed |
| +1 :green_heart: | javadoc | 0m 51s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |
| +1 :green_heart: | javadoc | 1m 25s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| +1 :green_heart: | spotbugs | 3m 11s | | the patch passed |
| +1 :green_heart: | shadedclient | 22m 11s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 225m 39s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 0m 45s | | The patch does not generate ASF License warnings. |
| | | 325m 36s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/5/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3679 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 72bab9b40a00 4.15.0-156-generic #163-Ubuntu SMP Thu Aug 19 23:31:58 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / 1d6f9e68c82fedf9831b03cda6010ad3fc979300 |
| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/5/testReport/ |
| Max. process+thread count | 3232 (vs. ulimit of 5500) |
| modules | C: hadoop-hdfs-project/hadoop-hdfs U: hadoop-hdfs-project/hadoop-hdfs |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3679/5/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.


[GitHub] [hadoop] liubingxing commented on a change in pull request #3679: HDFS-16333. fix balancer bug when transfer an EC block

Posted by GitBox <gi...@apache.org>.

liubingxing commented on a change in pull request #3679:

URL: https://github.com/apache/hadoop/pull/3679#discussion_r763923048

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/balancer/TestBalancer.java

##########

@@ -1615,6 +1619,92 @@ public void testBalancerWithStripedFile() throws Exception {
NameNodeConnector.setWrite2IdFile(false);
}
+ @Test
+ public void testBalancerWithExcludeListWithStripedFile() throws Exception {
+ Configuration conf = new Configuration();
+ initConfWithStripe(conf);
+ NameNodeConnector.setWrite2IdFile(true);
+ doTestBalancerWithExcludeListWithStripedFile(conf);
+ NameNodeConnector.setWrite2IdFile(false);
+ }
+
+ private void doTestBalancerWithExcludeListWithStripedFile(Configuration conf) throws Exception {
+ int numOfDatanodes = dataBlocks + parityBlocks + 3;
+ int numOfRacks = dataBlocks;
+ long capacity = 20 * defaultBlockSize;
+ long[] capacities = new long[numOfDatanodes];
+ Arrays.fill(capacities, capacity);
+ String[] racks = new String[numOfDatanodes];
+ for (int i = 0; i < numOfDatanodes; i++) {
+ racks[i] = "/rack" + (i % numOfRacks);
+ }
+ cluster = new MiniDFSCluster.Builder(conf)
+ .numDataNodes(numOfDatanodes)
+ .racks(racks)
+ .simulatedCapacities(capacities)
+ .build();
+
+ try {
+ cluster.waitActive();
+ client = NameNodeProxies.createProxy(conf, cluster.getFileSystem(0).getUri(),
+ ClientProtocol.class).getProxy();
+ client.enableErasureCodingPolicy(
+ StripedFileTestUtil.getDefaultECPolicy().getName());
+ client.setErasureCodingPolicy("/",
+ StripedFileTestUtil.getDefaultECPolicy().getName());
+
+ long totalCapacity = sum(capacities);
+
+ // fill up the cluster with 30% data. It'll be 45% full including parity.
+ long fileLen = totalCapacity * 3 / 10;
+ long totalUsedSpace = fileLen * (dataBlocks + parityBlocks) / dataBlocks;
+ FileSystem fs = cluster.getFileSystem(0);
+ DFSTestUtil.createFile(fs, filePath, fileLen, (short) 3, r.nextLong());
+
+ // verify locations of striped blocks
+ LocatedBlocks locatedBlocks = client.getBlockLocations(fileName, 0, fileLen);
+ StripedFileTestUtil.verifyLocatedStripedBlocks(locatedBlocks, groupSize);
+
+ // get datanode report
+ DatanodeInfo[] datanodeReport = client.getDatanodeReport(DatanodeReportType.ALL);
+
+ // add datanode in new rack
+ String newRack = "/rack" + (++numOfRacks);
+ cluster.startDataNodes(conf, 2, true, null,
+ new String[]{newRack, newRack}, null,
+ new long[]{capacity, capacity});
+ totalCapacity += capacity*2;
+ cluster.triggerHeartbeats();
+
+ // add datanode to exclude list
+ Set<String> dnList = new HashSet<>();
+ dnList.add(datanodeReport[0].getHostName());