Posted to commits@tvm.apache.org by GitBox <gi...@apache.org> on 2022/08/21 16:11:56 UTC

[GitHub] [tvm] PhilippvK opened a new pull request, #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK opened a new pull request, #12522:

URL: https://github.com/apache/tvm/pull/12522

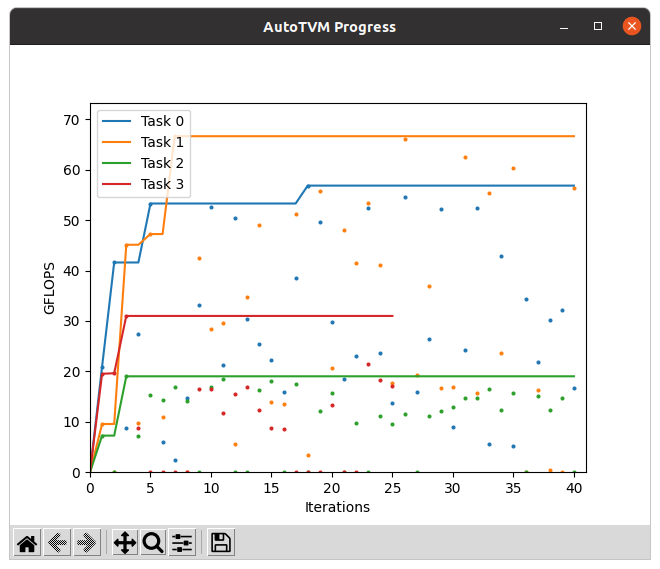

This change adds a callback that uses Matplotlib to plot the FLOPS achieved during tuning over time (AutoTVM only).

## Usage

### With Python

Create the callback (`multi=False`: one window per task; `multi=True`: all tasks in a single window):

```python
visualize_callback = autotvm.callback.visualize_progress(task_idx, multi=True)
```

Pass the callback to the tuner:

```python
tuner.tune(
    n_trial=100,
    measure_option=measure_option,
    callbacks=[visualize_callback],
)
```

### With TVM command line

Add the `--visualize` flag to the `tvmc tune` command.

## Screenshots

## Additional changes

- Make `si_prefix` in `tvmc/autotuner.py` configurable (prerequisite for a later PR)

## Open Questions

- How should the `matplotlib` Python dependency be handled? Should I change ``?

- The graph window automatically closes when the tuning is finished. Do we need an option to keep it open until closed?

- A similar feature for the AutoScheduler would be nice, too. I can look into it if there is interest.

- Saving the plot to a file would also be possible. We only need to decide how to expose this on the command line (e.g. `--visualize plot.png`).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@tvm.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [tvm] areusch commented on a diff in pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

Posted by GitBox <gi...@apache.org>.

areusch commented on code in PR #12522:

URL: https://github.com/apache/tvm/pull/12522#discussion_r972455828

##########

python/tvm/auto_scheduler/task_scheduler.py:

##########

@@ -659,3 +660,87 @@ def post_tune(self, task_scheduler, task_id):

)

)

filep.flush()

+

+

+class VisualizeProgress(TaskSchedulerCallback):

+ """Callback that generates a plot to visualize the tuning progress.

+

+ Parameters

+ ----------

+ title: str

+ Specify the title of the matplotlib figure.

+ si_prefix: str

+ SI prefix for flops

+ keep_open: bool

+ Wait until the matplotlib window was closed by the user.

+ live: bool

+ If false, the graph is only written to the file specified in out_path.

+ out_path: str

+ Path where the graph image should be written (if defined).

+ """

+

+ def __init__(

+ self,

+ title="AutoScheduler Progress",

+ si_prefix="G",

+ keep_open=False,

+ live=True,

+ out_path=None,

+ ):

+ self.best_flops = {}

+ self.all_cts = {}

+ self.si_prefix = si_prefix

+ self.keep_open = keep_open

+ self.live = live

+ self.out_path = out_path

+ self.init_plot(title)

+

+ def __del__(self):

+ import matplotlib.pyplot as plt

+

+ if self.out_path:

+ print(f"Writing plot to file {self.out_path}...")

+ plt.savefig(self.out_path)

+ if self.live and self.keep_open:

+ print("Close matplotlib window to continue...")

+ plt.show()

+

+ def pre_tune(self, task_scheduler, task_id):

+ for i, task in enumerate(task_scheduler.tasks):

+ cts = task_scheduler.task_cts[i]

+ if cts == 0:

+ self.all_cts[i] = [0]

+ self.best_flops[i] = [0]

+ self.update_plot()

+

+ def post_tune(self, task_scheduler, task_id):

+ for i, task in enumerate(task_scheduler.tasks):

+ if task_scheduler.best_costs[i] < 1e9:

+ flops = task_scheduler.tasks[i].compute_dag.flop_ct / task_scheduler.best_costs[i]

+ flops = format_si_prefix(flops, self.si_prefix)

+ cts = task_scheduler.task_cts[i]

+ if cts not in self.all_cts[i]:

+ self.all_cts[i].append(cts)

+ self.best_flops[i].append(flops)

+ else:

+ flops = None

+ self.update_plot()

+

+ def init_plot(self, title):

+ import matplotlib.pyplot as plt

+

+ plt.figure(title)

+

+ def update_plot(self):

+ import matplotlib.pyplot as plt

+

+ plt.clf()

+ for i in range(len(self.all_cts)):

+ if i in self.all_cts and i in self.best_flops:

+ color = plt.cm.tab10(i)

+ plt.plot(self.all_cts[i], self.best_flops[i], color=color, label=f"Task {i}")

+ plt.legend(loc="upper left")

+ plt.xlabel("Iterations")

+ plt.ylabel(f"{self.si_prefix}FLOPS")

+ if self.live:

+ plt.pause(0.05)

Review Comment:

can this be 0?

##########

python/tvm/auto_scheduler/task_scheduler.py:

##########

@@ -659,3 +660,87 @@ def post_tune(self, task_scheduler, task_id):

+ def __del__(self):

Review Comment:

we shouldn't do all this stuff in `__del__`. Tracebacks aren't reported and it can be unpredictable when this is invoked. Can you change this to use `__enter__` and `__exit__` so it can be used with a `with` statement, and add an explicit part in the `tvmc` tuning infra where we expect this file to be written?
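A minimal sketch of the requested pattern, assuming the finalization logic simply moves from `__del__` into `__exit__`. The class name `ProgressPlot` and its `record`/`finalize` methods are hypothetical, and for brevity it only draws once at the end instead of updating live; matplotlib is imported only when something actually has to be drawn.

```python
class ProgressPlot:
    """Hypothetical context-manager variant of the VisualizeProgress callback.

    Finalization runs in __exit__ instead of __del__, so it happens at a
    well-defined point and any error it raises surfaces normally.
    """

    def __init__(self, title="Tuning Progress", out_path=None, live=False, keep_open=False):
        self.title = title
        self.out_path = out_path
        self.live = live
        self.keep_open = keep_open
        self.history = {}  # task index -> list of (trial count, best FLOPS)
        self.closed = False

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.finalize()
        return False  # never swallow exceptions raised inside the with-block

    def record(self, task_idx, trials, flops):
        self.history.setdefault(task_idx, []).append((trials, flops))

    def finalize(self):
        if self.closed:
            return
        self.closed = True
        if not (self.out_path or (self.live and self.keep_open)):
            return  # nothing to write or show
        import matplotlib.pyplot as plt  # deferred: matplotlib stays optional

        fig = plt.figure(self.title)
        fig.clf()
        for idx, points in sorted(self.history.items()):
            trials, flops = zip(*points)
            plt.plot(trials, flops, label=f"Task {idx}")
        plt.xlabel("Iterations")
        plt.ylabel("FLOPS")
        if self.history:
            plt.legend(loc="upper left")
        if self.out_path:
            fig.savefig(self.out_path)
        if self.live and self.keep_open:
            plt.show()  # blocks until the user closes the window
```

In `tvmc`, the tuning call would then sit inside `with ProgressPlot(out_path=...) as plot:`, with the tuner callback calling `plot.record(...)`. The file is written when the block exits, and an exception from tuning still propagates because `__exit__` returns `False`.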

##########

python/tvm/auto_scheduler/task_scheduler.py:

##########

@@ -659,3 +660,87 @@ def post_tune(self, task_scheduler, task_id):

+ def post_tune(self, task_scheduler, task_id):

+ for i, task in enumerate(task_scheduler.tasks):

+ if task_scheduler.best_costs[i] < 1e9:

+ flops = task_scheduler.tasks[i].compute_dag.flop_ct / task_scheduler.best_costs[i]

+ flops = format_si_prefix(flops, self.si_prefix)

+ cts = task_scheduler.task_cts[i]

+ if cts not in self.all_cts[i]:

+ self.all_cts[i].append(cts)

+ self.best_flops[i].append(flops)

+ else:

+ flops = None

+ self.update_plot()

Review Comment:

should we always update or only every N?
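One way to answer this is a small throttle that the callback consults before redrawing. The sketch below is hypothetical (`UpdateThrottle` is not part of TVM): it redraws every `every_n` calls but no more often than `min_interval_s` seconds, and `force=True` allows a final redraw when tuning ends. The clock is injectable so the behavior can be tested without sleeping.

```python
import time


class UpdateThrottle:
    """Decide whether a (potentially slow) plot redraw should run now."""

    def __init__(self, every_n=1, min_interval_s=0.0, clock=time.monotonic):
        if every_n < 1:
            raise ValueError("every_n must be >= 1")
        self.every_n = every_n
        self.min_interval_s = min_interval_s
        self.clock = clock
        self._calls = 0
        self._last_draw = None  # clock value of the last redraw, if any

    def should_update(self, force=False):
        self._calls += 1
        now = self.clock()
        due = self._calls % self.every_n == 0
        too_soon = self._last_draw is not None and now - self._last_draw < self.min_interval_s
        if force or (due and not too_soon):
            self._last_draw = now
            return True
        return False
```

Inside `post_tune`, the unconditional `self.update_plot()` would become `if self.throttle.should_update(): self.update_plot()`, with a forced update once tuning finishes so the final state is always drawn.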

##########

python/tvm/auto_scheduler/task_scheduler.py:

##########

@@ -659,3 +660,87 @@ def post_tune(self, task_scheduler, task_id):

+ def init_plot(self, title):

+ import matplotlib.pyplot as plt

Review Comment:

can this be moved to the top of the file?

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -139,6 +138,11 @@ def add_tune_parser(subparsers, _, json_params):

help="enable tuning the graph through the AutoScheduler tuner",

action="store_true",

)

+ parser.add_argument(

+ "--visualize",

Review Comment:

this one reads like a boolean option (e.g. action="store_true"). could you better document the behavior?

##########

python/tvm/autotvm/tuner/callback.py:

##########

@@ -178,3 +178,82 @@ def _callback(tuner, inputs, results):

sys.stdout.flush()

return _callback

+

+

+def visualize_progress(

+ idx, title="AutoTVM Progress", si_prefix="G", keep_open=False, live=True, out_path=None

+):

+ """Display tuning progress in graph

+

+ Parameters

+ ----------

+ idx: int

+ Index of the current task.

+ title: str

+ Specify the title of the matplotlib figure.

+ si_prefix: str

+ SI prefix for flops

+ keep_open: bool

+ Wait until the matplotlib window was closed by the user.

+ live: bool

+ If false, the graph is only written to the file specified in out_path.

+ out_path: str

+ Path where the graph image should be written (if defined).

+ """

+ import matplotlib.pyplot as plt

+

+ class _Context(object):

+ """Context to store local variables"""

+

+ def __init__(self):

+ self.keep_open = keep_open

+ self.live = live

+ self.out_path = out_path

+ self.best_flops = [0]

+ self.all_flops = []

+ if idx > 0:

+ plt.figure(title)

+ else:

+ plt.figure(title).clear()

+ self.color = plt.cm.tab10(idx)

+ (self.p,) = plt.plot([0], [0], color=self.color, label=f"Task {idx}")

+ plt.xlabel("Iterations")

+ plt.ylabel(f"{si_prefix}FLOPS")

+ plt.legend(loc="upper left")

+ if self.live:

+ plt.pause(0.05)

+

+ def __del__(self):

Review Comment:

same request here

##########

python/tvm/autotvm/tuner/callback.py:

##########

@@ -178,3 +178,82 @@ def _callback(tuner, inputs, results):

+ class _Context(object):

Review Comment:

possible to reuse any of the MS implementation?

##########

python/tvm/autotvm/tuner/callback.py:

##########

@@ -178,3 +178,82 @@ def _callback(tuner, inputs, results):

+ class _Context(object):

Review Comment:

i'm not sure you need a class here to store the vars. they should be captured into the closure below if you reference them from there
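A sketch of the closure-based alternative, assuming the AutoTVM callback signature `(tuner, inputs, results)` shown in the hunk header and the `task.flop`, `costs` and `error_no` fields that the existing `progress_bar` callback reads. `visualize_progress_sketch` is a hypothetical name and the actual plotting is omitted.

```python
from statistics import mean


def visualize_progress_sketch(si_scale=1e9):
    """Hypothetical closure-based callback: no _Context class needed."""
    history = []  # (total trials, best scaled FLOPS); mutated, never rebound
    trials = 0
    best = 0.0

    def _callback(tuner, inputs, results):
        # Only names that are *rebound* here need `nonlocal`.
        nonlocal trials, best
        for inp, res in zip(inputs, results):
            trials += 1
            if res.error_no == 0:  # successful measurement
                best = max(best, inp.task.flop / mean(res.costs) / si_scale)
        history.append((trials, best))
        # a real version would redraw the matplotlib figure here

    _callback.history = history  # exposed for inspection/testing only
    return _callback
```

Mutated containers such as `history` are captured without any declaration; only rebound names like `trials` and `best` need `nonlocal`.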

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -638,6 +685,9 @@ def tune_tasks(

trials: int,

early_stopping: Optional[int] = None,

tuning_records: Optional[str] = None,

+ si_prefix: str = "G",

+ visualize_mode: str = "none",

Review Comment:

here as well

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -596,6 +636,8 @@ def schedule_tasks(

tuning_options: auto_scheduler.TuningOptions,

prior_records: Optional[str] = None,

log_estimated_latency: bool = False,

+ visualize_mode: str = "none",

Review Comment:

can you update this type to reflect the Enum class?

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -148,20 +152,17 @@ def add_tune_parser(subparsers, _, json_params):

auto_scheduler_group.add_argument(

"--cache-line-bytes",

type=int,

- help="the size of cache line in bytes. "

- "If not specified, it will be autoset for the current machine.",

+ help="the size of cache line in bytes. " "If not specified, it will be autoset for the current machine.",

Review Comment:

can you join the strings here and below?

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -228,6 +226,30 @@ def add_tune_parser(subparsers, _, json_params):

parser.set_defaults(**one_entry)

+def parse_visualize_arg(value):

+ live = False

+ file = None

+ if not value:

+ return "none", None

+ splitted = value.split(",")

+ assert len(splitted) <= 2, "The --visualize argument does not accept more than two arguments"

+ for item in splitted:

+ assert len(item) > 0, "The arguments of --visualize can not be an empty string"

+ if item == "live":

+ live = True

+ else:

+ assert file is None, "Only a single path can be passed to the --visualize argument"

+ file = pathlib.Path(item)

+ mode = "none"

Review Comment:

can you create an Enum class to document mode?
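A possible shape for such an Enum, combined with the comma-separated parsing from the hunk above. `VisualizeMode` and its members are hypothetical names; the sketch also raises `ValueError` instead of using `assert`, since assertions are skipped when Python runs with `-O`.

```python
import enum
import pathlib
from typing import Optional, Tuple


class VisualizeMode(enum.Enum):
    """Hypothetical enum documenting the --visualize modes."""

    NONE = "none"  # no visualization
    LIVE = "live"  # interactive window only
    FILE = "file"  # write the plot to a file only
    BOTH = "both"  # interactive window and file


def parse_visualize_arg(value: Optional[str]) -> Tuple[VisualizeMode, Optional[pathlib.Path]]:
    """Parse e.g. "live", "plot.png" or "live,plot.png" (order-insensitive)."""
    if not value:
        return VisualizeMode.NONE, None
    items = value.split(",")
    if len(items) > 2:
        raise ValueError("--visualize accepts at most two comma-separated values")
    live, path = False, None
    for item in items:
        if not item:
            raise ValueError("--visualize values must not be empty")
        if item == "live":
            live = True
        elif path is None:
            path = pathlib.Path(item)
        else:
            raise ValueError("only a single output path may be passed to --visualize")
    if live and path is not None:
        return VisualizeMode.BOTH, path
    if live:
        return VisualizeMode.LIVE, None
    return VisualizeMode.FILE, path
```

Functions such as `tune_tasks` could then take `visualize_mode: VisualizeMode = VisualizeMode.NONE`. Membership checks like `visualize_mode in VisualizeMode` are reliable for enum members, but testing a plain string that way raises `TypeError` before Python 3.12, so converting early (e.g. `VisualizeMode("live")`) is safer.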

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -691,12 +747,25 @@ def tune_tasks(

tuner_obj.load_history(autotvm.record.load_from_file(tuning_records))

logging.info("loaded history in %.2f sec(s)", time.time() - start_time)

+ callbacks = [

+ autotvm.callback.progress_bar(trials, prefix=prefix, si_prefix=si_prefix),

+ autotvm.callback.log_to_file(log_file),

+ ]

+

+ if visualize_mode != "none":

+ assert visualize_mode in ["both", "live", "none"]

Review Comment:

update this to `assert visualize_mode in VisualizeModes`

##########

tests/python/driver/tvmc/test_autotuner.py:

##########

@@ -182,3 +182,18 @@ def test_tune_rpc_tracker_parsing(mock_load_model, mock_tune_model, mock_auto_sc

assert "10.0.0.1" == kwargs["hostname"]

assert "port" in kwargs

assert 9999 == kwargs["port"]

+

Review Comment:

possible to add a test using e.g. pyplot svg backend and a golden svg file?
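A golden-file test along these lines might look like the sketch below. It assumes matplotlib's non-interactive `svg` backend, a fixed `svg.hashsalt` and an empty `Date` metadata entry to make the SVG reproducible; `render_progress_svg`, `check_against_golden` and the history layout are hypothetical.

```python
import io
import pathlib

import matplotlib

matplotlib.use("svg")  # non-interactive backend, so no display is needed in CI
import matplotlib.pyplot as plt


def render_progress_svg(history, si_prefix="G"):
    """Render {task_idx: (trial_counts, flops)} as an SVG string."""
    plt.rcParams["svg.hashsalt"] = "tune-viz-test"  # deterministic element ids
    fig, ax = plt.subplots()
    for idx, (trials, flops) in sorted(history.items()):
        ax.plot(trials, flops, label=f"Task {idx}")
    ax.set_xlabel("Iterations")
    ax.set_ylabel(f"{si_prefix}FLOPS")
    ax.legend(loc="upper left")
    buf = io.StringIO()
    # Dropping the Date metadata keeps the output identical between runs.
    fig.savefig(buf, format="svg", metadata={"Date": None})
    plt.close(fig)
    return buf.getvalue()


def check_against_golden(history, golden_path):
    """Compare the rendered plot with a stored reference SVG."""
    expected = pathlib.Path(golden_path).read_text(encoding="utf-8")
    assert render_progress_svg(history) == expected
```

A golden file produced this way depends on the matplotlib version and the available fonts, so it would likely need pinning or regeneration when either changes.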

[GitHub] [tvm] PhilippvK commented on a diff in pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on code in PR #12522:

URL: https://github.com/apache/tvm/pull/12522#discussion_r973563571

##########

python/tvm/autotvm/tuner/callback.py:

##########

@@ -178,3 +178,82 @@ def _callback(tuner, inputs, results):

+ class _Context(object):

Review Comment:

I'll try to refactor the code into a common base class `tvm.contrib.tune_viz.TuneVisualizer` and introduce `AutoTVMVisualizer` as well as `AutoSchedulerVisualizer` inheriting from that.

I haven't touched MS (MetaScheduler, I assume) yet. Did you mean the AutoScheduler callback?
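A rough sketch of how the proposed split could look. The three class names come from the comment above; everything else (the `record`/`redraw` methods, the `si_scale` parameter, the field names read from the tuner objects) is an assumption modeled on the diff, the plotting itself is left as a hook, and a real `AutoSchedulerVisualizer` would also subclass `TaskSchedulerCallback`.

```python
from statistics import mean


class TuneVisualizer:
    """Shared bookkeeping for the tuning-progress plots (hypothetical sketch)."""

    def __init__(self, si_scale=1e9):
        self.si_scale = si_scale  # 1e9 -> plot GFLOPS
        self.history = {}  # task index -> [(trial count, best scaled FLOPS), ...]

    def record(self, task_idx, trials, flops):
        points = self.history.setdefault(task_idx, [(0, 0.0)])
        if points[-1][0] == trials:
            return  # this task has not been tuned since the last update
        best = max(points[-1][1], flops / self.si_scale)
        points.append((trials, best))
        self.redraw()

    def redraw(self):
        """Hook where a real implementation would refresh the matplotlib figure."""


class AutoTVMVisualizer(TuneVisualizer):
    """AutoTVM flavor: used as a tuner callback (tuner, inputs, results)."""

    def __init__(self, task_idx, **kwargs):
        super().__init__(**kwargs)
        self.task_idx = task_idx
        self.trials = 0

    def __call__(self, tuner, inputs, results):
        self.trials += len(inputs)
        flops = 0.0
        for inp, res in zip(inputs, results):
            if res.error_no == 0:  # only successful measurements count
                flops = max(flops, inp.task.flop / mean(res.costs))
        self.record(self.task_idx, self.trials, flops)


class AutoSchedulerVisualizer(TuneVisualizer):
    """AutoScheduler flavor: a real version would also subclass TaskSchedulerCallback."""

    def pre_tune(self, task_scheduler, task_id):
        pass  # nothing to do before a round in this sketch

    def post_tune(self, task_scheduler, task_id):
        for i, task in enumerate(task_scheduler.tasks):
            cost = task_scheduler.best_costs[i]
            if cost < 1e9:  # same "no valid measurement yet" threshold as the diff
                self.record(i, task_scheduler.task_cts[i], task.compute_dag.flop_ct / cost)
```

Keeping the history and plotting in the base class means the two subclasses only translate their tuner's callback signature into `record` calls.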

[GitHub] [tvm] PhilippvK commented on a diff in pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on code in PR #12522:

URL: https://github.com/apache/tvm/pull/12522#discussion_r995413239

##########

tests/python/driver/tvmc/test_autotuner.py:

##########

@@ -182,3 +182,18 @@ def test_tune_rpc_tracker_parsing(mock_load_model, mock_tune_model, mock_auto_sc

assert "10.0.0.1" == kwargs["hostname"]

assert "port" in kwargs

assert 9999 == kwargs["port"]

+

Review Comment:

I will look into this.

[GitHub] [tvm] PhilippvK commented on a diff in pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on code in PR #12522:

URL: https://github.com/apache/tvm/pull/12522#discussion_r973540792

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -139,6 +138,11 @@ def add_tune_parser(subparsers, _, json_params):

help="enable tuning the graph through the AutoScheduler tuner",

action="store_true",

)

+ parser.add_argument(

+ "--visualize",

Review Comment:

@areusch I also had the feeling that this is somewhat unintuitive, since I added the option to write the visualizations directly to a file. How about introducing two separate command-line flags, `--visualize[-live]` (type: `bool`) and `--visualize-[file|path]` (type: `str`), to deal with that situation? If you like that approach, please let me know what exactly the two arguments should be called.

[GitHub] [tvm] areusch commented on a diff in pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

areusch commented on code in PR #12522:

URL: https://github.com/apache/tvm/pull/12522#discussion_r975599884

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -139,6 +138,11 @@ def add_tune_parser(subparsers, _, json_params):

help="enable tuning the graph through the AutoScheduler tuner",

action="store_true",

)

+ parser.add_argument(

+ "--visualize",

Review Comment:

i think the existing arg is okay, i agree it's a flag with two meanings, but i think if you add it like this, the --help string will be clear:

```python
NO_VISUALIZE_OPTION = object()

parser.add_argument(
    "--visualize",
    nargs="?",
    type=str,
    metavar="file",
    default=NO_VISUALIZE_OPTION,
    help="Visualize tuning progress. With no parameter specified, display a pyplot window and update the graph as tuning progresses. With a parameter, instead write the graph to the file specified (format determined by the given file extension).",
)
```

(I think the "format determined by the given file extension" is correct, but maybe double check that)

Alternatively open to the two options, especially if you think users want both. you could also consider something like `--plot=live,foo.png`, which might provide a nice set of flexibility without users needing to know two options and how they interact.
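A self-contained sketch of how the suggested `nargs="?"` argument resolves its three cases; `build_parser` and `visualize_target` are hypothetical helpers. When the flag is given without a value, argparse stores `const` (which defaults to `None`), so the sentinel default is what distinguishes "flag absent" from "flag without a file".

```python
import argparse

NO_VISUALIZE_OPTION = object()  # sentinel meaning "flag not given at all"


def build_parser():
    parser = argparse.ArgumentParser(prog="tune-sketch")
    parser.add_argument(
        "--visualize",
        nargs="?",
        type=str,
        metavar="file",
        default=NO_VISUALIZE_OPTION,
        help="Visualize tuning progress. Without a value, show a live pyplot window; "
        "with a value, write the graph to that file instead.",
    )
    return parser


def visualize_target(argv):
    value = build_parser().parse_args(argv).visualize
    if value is NO_VISUALIZE_OPTION:
        return "none"
    if value is None:  # flag given without a value: argparse stores const (None)
        return "live"
    return f"file:{value}"
```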

[GitHub] [tvm] PhilippvK commented on pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on PR #12522:

URL: https://github.com/apache/tvm/pull/12522#issuecomment-1363006473

I will follow up with a new branch next year!

[GitHub] [tvm] PhilippvK commented on pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on PR #12522:

URL: https://github.com/apache/tvm/pull/12522#issuecomment-1223432097

> @PhilippvK this looks very cool! the only thing missing is to declare the dependency on matplotlib so that the user is not surprised by an ImportError when trying to use it :).

>

I already had this in mind (see original post) but was unsure what the best approach is.

> I feel also this should be added to any of the tutorials, because it is too cool for users to miss out.

Yes. I will try to come up with a short section for the tutorials.

What do you think about the other questions?

- The graph window automatically closes when the tuning is finished. Do we need an option to keep it open until closed?

- A similar feature for the AutoScheduler would be nice, too. I can look into it if there is interest.

- Saving the plot to a file would also be possible. We only need to decide how to expose this on the command line (e.g. `--visualize-out plot.png`).

[GitHub] [tvm] PhilippvK closed pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK closed pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

URL: https://github.com/apache/tvm/pull/12522

[GitHub] [tvm] PhilippvK commented on pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on PR #12522:

URL: https://github.com/apache/tvm/pull/12522#issuecomment-1221576263

CC @leandron @giuseros @Mousius @areusch @tqchen

[GitHub] [tvm] PhilippvK commented on pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on PR #12522:

URL: https://github.com/apache/tvm/pull/12522#issuecomment-1242040117

I reworked the PR and updated the description accordingly. Thank you for your input! Please have another look at the changes, as I added several improvements:

- AutoScheduler support

- Mention in the tutorial

- Minimal unit test for command-line parsing of the `--visualize` argument

- Support for file export

[GitHub] [tvm] PhilippvK commented on a diff in pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on code in PR #12522:

URL: https://github.com/apache/tvm/pull/12522#discussion_r973541181

##########

python/tvm/auto_scheduler/task_scheduler.py:

##########

@@ -659,3 +660,87 @@ def post_tune(self, task_scheduler, task_id):

+ def init_plot(self, title):

+ import matplotlib.pyplot as plt

Review Comment:

Since the other callbacks do not need the `matplotlib` package, moving the import to the top of the file would require installing it even when the visualization callback is not used. Splitting the callbacks into separate files would at least make this a bit cleaner.
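A sketch of a middle ground: keep the import deferred, but route it through a helper that turns a missing package into an actionable error instead of a bare `ImportError`. `import_optional` and `_pyplot` are hypothetical helper names, and the install hint is only an example.

```python
import importlib


def import_optional(module_name, feature, hint):
    """Import an optional dependency on first use, with an actionable error."""
    try:
        return importlib.import_module(module_name)
    except ImportError as err:
        raise ImportError(
            f"{feature} requires the optional '{module_name}' package; {hint}"
        ) from err


def _pyplot():
    # Called only inside the visualization callbacks, so importing the module
    # that defines all the callbacks never requires matplotlib.
    return import_optional(
        "matplotlib.pyplot", "Tuning visualization", "install it with `pip install matplotlib`."
    )
```

The other callbacks keep working without matplotlib, and the original `ImportError` stays attached as `__cause__` for debugging.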

[GitHub] [tvm] PhilippvK commented on a diff in pull request #12522: [AutoTVM] [TVMC] Visualize tuning progress

PhilippvK commented on code in PR #12522:

URL: https://github.com/apache/tvm/pull/12522#discussion_r976058955

##########

python/tvm/driver/tvmc/autotuner.py:

##########

@@ -139,6 +138,11 @@ def add_tune_parser(subparsers, _, json_params):

help="enable tuning the graph through the AutoScheduler tuner",

action="store_true",

)

+ parser.add_argument(

+ "--visualize",

Review Comment:

@areusch

> `--plot=live,foo.png`

This is essentially what is implemented right now, just under a different name. The main disadvantage is that the custom format of the argument (e.g. `live,path.png`, `path.png,live`, ...) can be confusing.

> `parser.add_argument("--visualize", nargs="?", ...`

Your proposed solution, which accepts either one argument or none at all, looks nice at first glance, but `nargs="?"` might lead to unexpected behavior if argparse mistakes a model path for the argument of the `--visualize` option. In addition, as you already noted, it only allows:

- No visualizations

- Live only

- File only

That's why I would prefer two separate options, which allow all four combinations:

- No visualizations

- Live only

- File only

- Both
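For comparison, a sketch of the two-flag variant. The names `--visualize-live` and `--visualize-file` are placeholders (the final names were still open), and the positional `model` argument only stands in for the model path. Because neither flag takes an optional value, a following positional is never swallowed by accident.

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser(prog="tune-sketch")
    parser.add_argument("model", help="model path (stand-in for the real positional input)")
    parser.add_argument(
        "--visualize-live", action="store_true", help="show a live matplotlib window"
    )
    parser.add_argument(
        "--visualize-file", metavar="PATH", default=None, help="write the final plot to PATH"
    )
    return parser


def visualize_mode(args):
    # All four combinations are expressible without a custom mini-syntax.
    if args.visualize_live and args.visualize_file:
        return "both"
    if args.visualize_live:
        return "live"
    if args.visualize_file:
        return "file"
    return "none"
```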
