Posted to reviews@spark.apache.org by actuaryzhang <gi...@git.apache.org> on 2017/05/25 23:03:20 UTC

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for d...

GitHub user actuaryzhang opened a pull request:

https://github.com/apache/spark/pull/18114

[SPARK-20889][SparkR] Grouped documentation for datetime column methods

## What changes were proposed in this pull request?

Grouped documentation for datetime column methods.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/actuaryzhang/spark sparkRDocDate

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/18114.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #18114

----

commit 2c2fa800bb0f4c7f2503a08a9565a8b9ac135d69

Author: Wayne Zhang <ac...@uber.com>

Date: 2017-05-25T21:07:20Z

start working on datetime functions

commit 0d2853d0cff6cbd92fcbb68cebaee0729d25eb8f

Author: Wayne Zhang <ac...@uber.com>

Date: 2017-05-25T22:07:57Z

fix issue in generics and example

----

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastructure@apache.org or file a JIRA ticket

with INFRA.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Merged build finished. Test PASSed.

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Merged build finished. Test PASSed.

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on the issue:

https://github.com/apache/spark/pull/18114

merged to master. thanks!

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by asfgit <gi...@git.apache.org>.

Github user asfgit closed the pull request at:

https://github.com/apache/spark/pull/18114

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for d...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r118605244

--- Diff: R/pkg/R/functions.R ---

@@ -2095,26 +2061,28 @@ setMethod("atan2", signature(y = "Column"),

column(jc)

})

-#' datediff

+#' @section Details:

+#' \code{datediff}: Returns the number of days from \code{start} to \code{end}.

#'

-#' Returns the number of days from \code{start} to \code{end}.

-#'

-#' @param x start Column to use.

-#' @param y end Column to use.

-#'

-#' @rdname datediff

-#' @name datediff

-#' @aliases datediff,Column-method

-#' @family date time functions

+#' @rdname column_datetime_functions

+#' @aliases datediff datediff,Column-method

#' @export

-#' @examples \dontrun{datediff(df$c, x)}

+#' @examples

+#'

+#' \dontrun{

+#' set.seed(11)

+#' tmp <- createDataFrame(data.frame(time_string1 = as.POSIXct(dts),

+#' time_string2 = as.POSIXct(dts[order(runif(length(dts)))])))

+#' tmp2 <- mutate(tmp, timediff = datediff(tmp$time_string1, tmp$time_string2),

+#' monthdiff = months_between(tmp$time_string1, tmp$time_string2))

+#' head(tmp2)}

#' @note datediff since 1.5.0

-setMethod("datediff", signature(y = "Column"),

- function(y, x) {

- if (class(x) == "Column") {

- x <- x@jc

+setMethod("datediff", signature(x = "Column"),

+ function(x, y) {

--- End diff --

Here, `x` and `y` are swapped to simplify the documentation. The same applies to the other methods that take two arguments.

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Merged build finished. Test PASSed.

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78271/

Test FAILed.

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #77391 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/77391/testReport)** for PR 18114 at commit [`0d2853d`](https://github.com/apache/spark/commit/0d2853d0cff6cbd92fcbb68cebaee0729d25eb8f).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds no public classes.

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/77452/

Test FAILed.

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #77447 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/77447/testReport)** for PR 18114 at commit [`944aa92`](https://github.com/apache/spark/commit/944aa92e4c35015010ee9cdaf913f5639670acb6).

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123311748

--- Diff: R/pkg/R/functions.R ---

@@ -2048,19 +2041,21 @@ setMethod("atan2", signature(y = "Column"),

column(jc)

})

-#' datediff

-#'

-#' Returns the number of days from \code{start} to \code{end}.

-#'

-#' @param x start Column to use.

-#' @param y end Column to use.

+#' @section Details:

+#' \code{datediff}: Returns the number of days from \code{y} to \code{x}.

#'

-#' @rdname datediff

-#' @name datediff

-#' @aliases datediff,Column-method

-#' @family date time functions

+#' @rdname column_datetime_diff_functions

+#' @aliases datediff datediff,Column-method

#' @export

-#' @examples \dontrun{datediff(df$c, x)}

+#' @examples

+#'

+#' \dontrun{

+#' set.seed(11)

--- End diff --

Why do we need to set the seed?
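
For context on this question, a minimal base-R sketch (not part of the PR, and it does not call SparkR) of what `set.seed(11)` contributes to the quoted example. The full example in this PR builds its second column with `dts[order(runif(length(dts)))]`, which is a random shuffle, so fixing the seed should make that shuffle reproducible for a given R RNG configuration. The `dts` values are copied from the PR's shared example block; `perm_a`, `perm_b`, and `shuffled` are illustrative names only.

```r
# Timestamps copied from the shared example block of the PR.
dts <- c("2005-01-02 18:47:22",
         "2005-12-24 16:30:58",
         "2005-10-28 07:30:05",
         "2005-12-28 07:01:05",
         "2006-01-24 00:01:10")

# Draw a random permutation twice, resetting the seed before each draw.
set.seed(11)
perm_a <- order(runif(length(dts)))
set.seed(11)
perm_b <- order(runif(length(dts)))

# With the same seed, both draws give the same permutation.
print(identical(perm_a, perm_b))

# The shuffled vector holds the same timestamps in a different order.
shuffled <- dts[perm_a]
print(shuffled)
```

Without the `set.seed` call the documented example would still run; only the printed row order would differ between runs.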

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123327948

--- Diff: R/pkg/R/functions.R ---

@@ -2348,26 +2336,18 @@ setMethod("n", signature(x = "Column"),

count(x)

})

-#' date_format

-#'

-#' Converts a date/timestamp/string to a value of string in the format specified by the date

-#' format given by the second argument.

-#'

-#' A pattern could be for instance \preformatted{dd.MM.yyyy} and could return a string like '18.03.1993'. All

+#' @section Details:

+#' \code{date_format}: Converts a date/timestamp/string to a value of string in the format

+#' specified by the date format given by the second argument. A pattern could be for instance

+#' \code{dd.MM.yyyy} and could return a string like '18.03.1993'. All

#' pattern letters of \code{java.text.SimpleDateFormat} can be used.

-#'

#' Note: Use when ever possible specialized functions like \code{year}. These benefit from a

#' specialized implementation.

#'

-#' @param y Column to compute on.

-#' @param x date format specification.

+#' @rdname column_datetime_diff_functions

#'

-#' @family date time functions

-#' @rdname date_format

-#' @name date_format

-#' @aliases date_format,Column,character-method

+#' @aliases date_format date_format,Column,character-method

#' @export

-#' @examples \dontrun{date_format(df$t, 'MM/dd/yyy')}

--- End diff --

Added back.

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123313784

--- Diff: R/pkg/R/functions.R ---

@@ -2348,26 +2336,18 @@ setMethod("n", signature(x = "Column"),

count(x)

})

-#' date_format

-#'

-#' Converts a date/timestamp/string to a value of string in the format specified by the date

-#' format given by the second argument.

-#'

-#' A pattern could be for instance \preformatted{dd.MM.yyyy} and could return a string like '18.03.1993'. All

+#' @section Details:

+#' \code{date_format}: Converts a date/timestamp/string to a value of string in the format

+#' specified by the date format given by the second argument. A pattern could be for instance

+#' \code{dd.MM.yyyy} and could return a string like '18.03.1993'. All

#' pattern letters of \code{java.text.SimpleDateFormat} can be used.

-#'

#' Note: Use when ever possible specialized functions like \code{year}. These benefit from a

#' specialized implementation.

#'

-#' @param y Column to compute on.

-#' @param x date format specification.

+#' @rdname column_datetime_diff_functions

#'

-#' @family date time functions

-#' @rdname date_format

-#' @name date_format

-#' @aliases date_format,Column,character-method

+#' @aliases date_format date_format,Column,character-method

#' @export

-#' @examples \dontrun{date_format(df$t, 'MM/dd/yyy')}

--- End diff --

I think this example is missing from the new addition.
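
To illustrate the pattern description in the diff quoted above (`dd.MM.yyyy` producing a string like '18.03.1993'), here is a rough base-R analogue. It is only a sketch under stated assumptions: it uses R's own `format()` with strptime-style codes (`%d.%m.%Y`) rather than the `java.text.SimpleDateFormat` letters that `date_format` takes, and it does not call SparkR. The names `d` and `formatted` are illustrative.

```r
# A single date matching the value mentioned in the documentation text.
d <- as.Date("1993-03-18")

# Day.month.year with a four-digit year, the base-R counterpart of dd.MM.yyyy.
formatted <- format(d, "%d.%m.%Y")
print(formatted)
```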

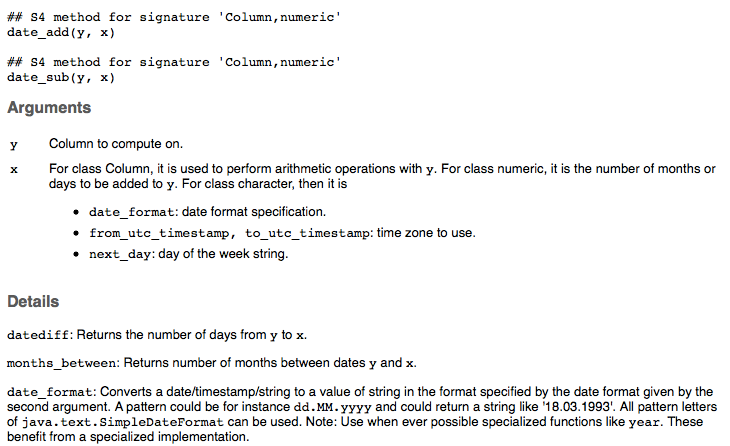

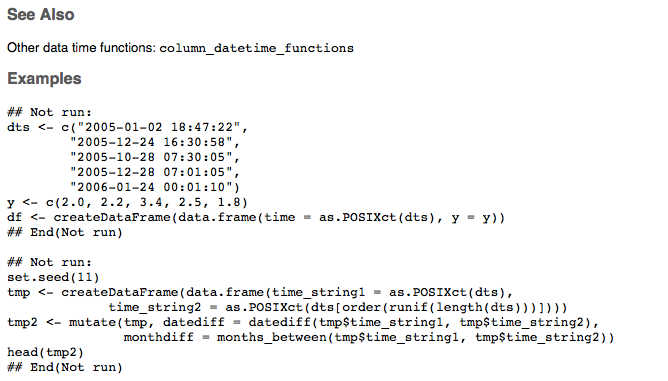

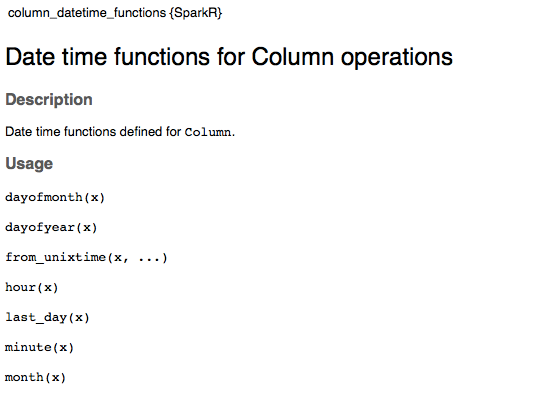

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for datetime...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on the issue:

https://github.com/apache/spark/pull/18114

@felixcheung

Created this PR to update the doc for the date time methods, similar to #18114. About 27 date time methods are documented on one page.

I'm attaching a snapshot of part of the new help page.

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123313942

--- Diff: R/pkg/R/functions.R ---

@@ -1801,29 +1819,18 @@ setMethod("to_json", signature(x = "Column"),

column(jc)

})

-#' to_timestamp

-#'

-#' Converts the column into a TimestampType. You may optionally specify a format

-#' according to the rules in:

+#' @section Details:

+#' \code{to_timestamp}: Converts the column into a TimestampType. You may optionally specify

+#' a format according to the rules in:

#' \url{http://docs.oracle.com/javase/tutorial/i18n/format/simpleDateFormat.html}.

#' If the string cannot be parsed according to the specified format (or default),

#' the value of the column will be null.

#' By default, it follows casting rules to a TimestampType if the format is omitted

#' (equivalent to \code{cast(df$x, "timestamp")}).

#'

-#' @param x Column to parse.

-#' @param format string to use to parse x Column to TimestampType. (optional)

-#'

-#' @rdname to_timestamp

-#' @name to_timestamp

-#' @family date time functions

-#' @aliases to_timestamp,Column,missing-method

+#' @rdname column_datetime_functions

+#' @aliases to_timestamp to_timestamp,Column,missing-method

#' @export

-#' @examples

-#' \dontrun{

-#' to_timestamp(df$c)

-#' to_timestamp(df$c, 'yyyy-MM-dd')

--- End diff --

I think these examples are not added back - could you check?

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123310699

--- Diff: R/pkg/R/functions.R ---

@@ -2458,111 +2441,78 @@ setMethod("instr", signature(y = "Column", x = "character"),

column(jc)

})

-#' next_day

-#'

-#' Given a date column, returns the first date which is later than the value of the date column

-#' that is on the specified day of the week.

-#'

-#' For example, \code{next_day('2015-07-27', "Sunday")} returns 2015-08-02 because that is the first

-#' Sunday after 2015-07-27.

-#'

-#' Day of the week parameter is case insensitive, and accepts first three or two characters:

-#' "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun".

+#' @section Details:

+#' \code{next_day}: Given a date column, returns the first date which is later than the value of

+#' the date column that is on the specified day of the week. For example,

+#' \code{next_day('2015-07-27', "Sunday")} returns 2015-08-02 because that is the first Sunday

+#' after 2015-07-27. Day of the week parameter is case insensitive, and accepts first three or

+#' two characters: "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun".

#'

-#' @param y Column to compute on.

-#' @param x Day of the week string.

-#'

-#' @family date time functions

-#' @rdname next_day

-#' @name next_day

-#' @aliases next_day,Column,character-method

+#' @rdname column_datetime_diff_functions

+#' @aliases next_day next_day,Column,character-method

#' @export

-#' @examples

-#'\dontrun{

-#'next_day(df$d, 'Sun')

-#'next_day(df$d, 'Sunday')

-#'}

#' @note next_day since 1.5.0

setMethod("next_day", signature(y = "Column", x = "character"),

function(y, x) {

jc <- callJStatic("org.apache.spark.sql.functions", "next_day", y@jc, x)

column(jc)

})

-#' to_utc_timestamp

-#'

-#' Given a timestamp, which corresponds to a certain time of day in the given timezone, returns

-#' another timestamp that corresponds to the same time of day in UTC.

+#' @section Details:

+#' \code{to_utc_timestamp}: Given a timestamp, which corresponds to a certain time of day

+#' in the given timezone, returns another timestamp that corresponds to the same time of day in UTC.

#'

-#' @param y Column to compute on

-#' @param x timezone to use

-#'

-#' @family date time functions

-#' @rdname to_utc_timestamp

-#' @name to_utc_timestamp

-#' @aliases to_utc_timestamp,Column,character-method

+#' @rdname column_datetime_diff_functions

+#' @aliases to_utc_timestamp to_utc_timestamp,Column,character-method

#' @export

-#' @examples \dontrun{to_utc_timestamp(df$t, 'PST')}

#' @note to_utc_timestamp since 1.5.0

setMethod("to_utc_timestamp", signature(y = "Column", x = "character"),

function(y, x) {

jc <- callJStatic("org.apache.spark.sql.functions", "to_utc_timestamp", y@jc, x)

column(jc)

})

-#' add_months

+#' @section Details:

+#' \code{add_months}: Returns the date that is numMonths after startDate.

--- End diff --

It might be a bit confusing which is `numMonths` (`x`) and which is `startDate` (`y`).

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123328685

--- Diff: R/pkg/R/functions.R ---

@@ -2458,111 +2441,78 @@ setMethod("instr", signature(y = "Column", x = "character"),

column(jc)

})

-#' next_day

-#'

-#' Given a date column, returns the first date which is later than the value of the date column

-#' that is on the specified day of the week.

-#'

-#' For example, \code{next_day('2015-07-27', "Sunday")} returns 2015-08-02 because that is the first

-#' Sunday after 2015-07-27.

-#'

-#' Day of the week parameter is case insensitive, and accepts first three or two characters:

-#' "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun".

+#' @section Details:

+#' \code{next_day}: Given a date column, returns the first date which is later than the value of

+#' the date column that is on the specified day of the week. For example,

+#' \code{next_day('2015-07-27', "Sunday")} returns 2015-08-02 because that is the first Sunday

+#' after 2015-07-27. Day of the week parameter is case insensitive, and accepts first three or

+#' two characters: "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun".

#'

-#' @param y Column to compute on.

-#' @param x Day of the week string.

-#'

-#' @family date time functions

-#' @rdname next_day

-#' @name next_day

-#' @aliases next_day,Column,character-method

+#' @rdname column_datetime_diff_functions

+#' @aliases next_day next_day,Column,character-method

#' @export

-#' @examples

-#'\dontrun{

-#'next_day(df$d, 'Sun')

-#'next_day(df$d, 'Sunday')

-#'}

#' @note next_day since 1.5.0

setMethod("next_day", signature(y = "Column", x = "character"),

function(y, x) {

jc <- callJStatic("org.apache.spark.sql.functions", "next_day", y@jc, x)

column(jc)

})

-#' to_utc_timestamp

-#'

-#' Given a timestamp, which corresponds to a certain time of day in the given timezone, returns

-#' another timestamp that corresponds to the same time of day in UTC.

+#' @section Details:

+#' \code{to_utc_timestamp}: Given a timestamp, which corresponds to a certain time of day

+#' in the given timezone, returns another timestamp that corresponds to the same time of day in UTC.

#'

-#' @param y Column to compute on

-#' @param x timezone to use

-#'

-#' @family date time functions

-#' @rdname to_utc_timestamp

-#' @name to_utc_timestamp

-#' @aliases to_utc_timestamp,Column,character-method

+#' @rdname column_datetime_diff_functions

+#' @aliases to_utc_timestamp to_utc_timestamp,Column,character-method

#' @export

-#' @examples \dontrun{to_utc_timestamp(df$t, 'PST')}

#' @note to_utc_timestamp since 1.5.0

setMethod("to_utc_timestamp", signature(y = "Column", x = "character"),

function(y, x) {

jc <- callJStatic("org.apache.spark.sql.functions", "to_utc_timestamp", y@jc, x)

column(jc)

})

-#' add_months

+#' @section Details:

+#' \code{add_months}: Returns the date that is numMonths after startDate.

--- End diff --

Yes, this was the original description. Updated to make it clearer. Also, the examples will now help users figure out how to use these methods.

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on the issue:

https://github.com/apache/spark/pull/18114

For the `column_datetime_diff_functions`:

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123417000

--- Diff: R/pkg/R/functions.R ---

@@ -34,6 +34,58 @@ NULL

#' df <- createDataFrame(cbind(model = rownames(mtcars), mtcars))}

NULL

+#' Date time functions for Column operations

+#'

+#' Date time functions defined for \code{Column}.

+#'

+#' @param x Column to compute on.

+#' @param format For \code{to_date} and \code{to_timestamp}, it is the string to use to parse

+#' x Column to DateType or TimestampType. For \code{trunc}, it is the string used

+#' for specifying the truncation method. For example, "year", "yyyy", "yy" for

+#' truncate by year, or "month", "mon", "mm" for truncate by month.

+#' @param ... additional argument(s).

+#' @name column_datetime_functions

+#' @rdname column_datetime_functions

+#' @family data time functions

+#' @examples

+#' \dontrun{

+#' dts <- c("2005-01-02 18:47:22",

+#' "2005-12-24 16:30:58",

+#' "2005-10-28 07:30:05",

+#' "2005-12-28 07:01:05",

+#' "2006-01-24 00:01:10")

+#' y <- c(2.0, 2.2, 3.4, 2.5, 1.8)

+#' df <- createDataFrame(data.frame(time = as.POSIXct(dts), y = y))}

+NULL

+

+#' Date time arithmetic functions for Column operations

+#'

+#' Date time arithmetic functions defined for \code{Column}.

+#'

+#' @param y Column to compute on.

+#' @param x For class \code{Column}, it is the column used to perform arithmetic operations

+#' with column \code{y}.For class \code{numeric}, it is the number of months or

+#' days to be added to or subtracted from \code{y}. For class \code{character}, it is

+#' \itemize{

+#' \item \code{date_format}: date format specification.

+#' \item \code{from_utc_timestamp, to_utc_timestamp}: time zone to use.

--- End diff --

little nit `\code{from_utc_timestamp}, \code{to_utc_timestamp}`

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Merged build finished. Test FAILed.

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123328197

--- Diff: R/pkg/R/functions.R ---

@@ -34,6 +34,58 @@ NULL

#' df <- createDataFrame(cbind(model = rownames(mtcars), mtcars))}

NULL

+#' Date time functions for Column operations

+#'

+#' Date time functions defined for \code{Column}.

+#'

+#' @param x Column to compute on.

+#' @param format For \code{to_date} and \code{to_timestamp}, it is the string to use to parse

+#' x Column to DateType or TimestampType. For \code{trunc}, it is the string used

+#' for specifying the truncation method. For example, "year", "yyyy", "yy" for

+#' truncate by year, or "month", "mon", "mm" for truncate by month.

+#' @param ... additional argument(s).

+#' @name column_datetime_functions

+#' @rdname column_datetime_functions

+#' @family data time functions

+#' @examples

+#' \dontrun{

+#' dts <- c("2005-01-02 18:47:22",

+#' "2005-12-24 16:30:58",

+#' "2005-10-28 07:30:05",

+#' "2005-12-28 07:01:05",

+#' "2006-01-24 00:01:10")

+#' y <- c(2.0, 2.2, 3.4, 2.5, 1.8)

+#' df <- createDataFrame(data.frame(time = as.POSIXct(dts), y = y))}

+NULL

+

+#' Date time arithmetic functions for Column operations

+#'

+#' Date time arithmetic functions defined for \code{Column}.

+#'

+#' @param y Column to compute on.

+#' @param x For class Column, it is used to perform arithmetic operations with \code{y}.

+#' For class numeric, it is the number of months or days to be added to \code{y}.

--- End diff --

updated. thx


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #78272 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78272/testReport)** for PR 18114 at commit [`aab9199`](https://github.com/apache/spark/commit/aab91995a6035b224d5fa7c836787a3a937573a3).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds no public classes.


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Merged build finished. Test PASSed.


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Merged build finished. Test FAILed.


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #78407 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78407/testReport)** for PR 18114 at commit [`1381dd5`](https://github.com/apache/spark/commit/1381dd5d776486f285ed51ed7ec33c12b40a5590).


[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123402476

--- Diff: R/pkg/R/functions.R ---

@@ -2458,111 +2441,78 @@ setMethod("instr", signature(y = "Column", x = "character"),

column(jc)

})

-#' next_day

-#'

-#' Given a date column, returns the first date which is later than the value of the date column

-#' that is on the specified day of the week.

-#'

-#' For example, \code{next_day('2015-07-27', "Sunday")} returns 2015-08-02 because that is the first

-#' Sunday after 2015-07-27.

-#'

-#' Day of the week parameter is case insensitive, and accepts first three or two characters:

-#' "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun".

+#' @section Details:

+#' \code{next_day}: Given a date column, returns the first date which is later than the value of

+#' the date column that is on the specified day of the week. For example,

+#' \code{next_day('2015-07-27', "Sunday")} returns 2015-08-02 because that is the first Sunday

+#' after 2015-07-27. Day of the week parameter is case insensitive, and accepts first three or

+#' two characters: "Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun".

#'

-#' @param y Column to compute on.

-#' @param x Day of the week string.

-#'

-#' @family date time functions

-#' @rdname next_day

-#' @name next_day

-#' @aliases next_day,Column,character-method

+#' @rdname column_datetime_diff_functions

+#' @aliases next_day next_day,Column,character-method

#' @export

-#' @examples

-#'\dontrun{

-#'next_day(df$d, 'Sun')

-#'next_day(df$d, 'Sunday')

-#'}

#' @note next_day since 1.5.0

setMethod("next_day", signature(y = "Column", x = "character"),

function(y, x) {

jc <- callJStatic("org.apache.spark.sql.functions", "next_day", y@jc, x)

column(jc)

})

-#' to_utc_timestamp

-#'

-#' Given a timestamp, which corresponds to a certain time of day in the given timezone, returns

-#' another timestamp that corresponds to the same time of day in UTC.

+#' @section Details:

+#' \code{to_utc_timestamp}: Given a timestamp, which corresponds to a certain time of day

+#' in the given timezone, returns another timestamp that corresponds to the same time of day in UTC.

#'

-#' @param y Column to compute on

-#' @param x timezone to use

-#'

-#' @family date time functions

-#' @rdname to_utc_timestamp

-#' @name to_utc_timestamp

-#' @aliases to_utc_timestamp,Column,character-method

+#' @rdname column_datetime_diff_functions

+#' @aliases to_utc_timestamp to_utc_timestamp,Column,character-method

#' @export

-#' @examples \dontrun{to_utc_timestamp(df$t, 'PST')}

#' @note to_utc_timestamp since 1.5.0

setMethod("to_utc_timestamp", signature(y = "Column", x = "character"),

function(y, x) {

jc <- callJStatic("org.apache.spark.sql.functions", "to_utc_timestamp", y@jc, x)

column(jc)

})

-#' add_months

+#' @section Details:

+#' \code{add_months}: Returns the date that is numMonths after startDate.

--- End diff --

Actually, I wasn't sure whether this is the form you are using in the other documentation, so feel free to massage or change it.

Also, it might be clearer here to say `that is startDate + numMonths`, i.e. reverse the order in which the names are mentioned so that it is consistent with the parameter order.
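To make the suggested "startDate + numMonths" reading concrete, here is a minimal base R sketch. `add_months_sketch` is a hypothetical stand-in and not the SparkR `add_months` method; in particular, its end-of-month behaviour may differ from Spark's, so the dates used avoid that case.

```r
# Hypothetical base R stand-in for illustration only; not SparkR's add_months.
# Arguments follow the parameter order discussed above: start date first,
# then the number of months to add (negative values subtract).
add_months_sketch <- function(start_date, num_months) {
  # seq() on a Date with by = "<n> month" steps by whole calendar months;
  # the second element is start_date + num_months.
  seq(as.Date(start_date), by = paste(num_months, "month"), length.out = 2)[2]
}

three_months_later <- add_months_sketch("2005-10-28", 3)
one_month_earlier <- add_months_sketch("2006-01-24", -1)
```

Reading the call as `add_months_sketch(startDate, numMonths)` matches the order in which the description would then mention the two names.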


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #77441 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/77441/testReport)** for PR 18114 at commit [`944aa92`](https://github.com/apache/spark/commit/944aa92e4c35015010ee9cdaf913f5639670acb6).

* This patch **fails MiMa tests**.

* This patch merges cleanly.

* This patch adds the following public classes _(experimental)_:

* `#' @param x if of class Column, it is used to perform arithmatic operations with


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on the issue:

https://github.com/apache/spark/pull/18114

hmm, waiting for AppVeyor


[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123419080

--- Diff: R/pkg/R/functions.R ---

@@ -2414,20 +2396,23 @@ setMethod("from_json", signature(x = "Column", schema = "structType"),

column(jc)

})

-#' from_utc_timestamp

-#'

-#' Given a timestamp, which corresponds to a certain time of day in UTC, returns another timestamp

-#' that corresponds to the same time of day in the given timezone.

+#' @details

+#' \code{from_utc_timestamp}: Given a timestamp, which corresponds to a certain time of day in UTC,

+#' returns another timestamp that corresponds to the same time of day in the given timezone.

#'

-#' @param y Column to compute on.

-#' @param x time zone to use.

+#' @rdname column_datetime_diff_functions

#'

-#' @family date time functions

-#' @rdname from_utc_timestamp

-#' @name from_utc_timestamp

-#' @aliases from_utc_timestamp,Column,character-method

+#' @aliases from_utc_timestamp from_utc_timestamp,Column,character-method

#' @export

-#' @examples \dontrun{from_utc_timestamp(df$t, 'PST')}

+#' @examples

+#'

+#' \dontrun{

+#' tmp <- mutate(df, from_utc = from_utc_timestamp(df$time, 'PST'),

+#' to_utc = to_utc_timestamp(df$time, 'PST'),

+#' to_unix = unix_timestamp(df$time),

+#' to_unix2 = unix_timestamp(df$time, 'yyyy-MM-dd HH'),

+#' from_unix = from_unixtime(unix_timestamp(df$time)))

--- End diff --

I don't get this: why does `from_unixtime` belong to `column_datetime_diff_functions`?


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/77391/

Test PASSed.


[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123416302

--- Diff: R/pkg/R/functions.R ---

@@ -2414,20 +2396,23 @@ setMethod("from_json", signature(x = "Column", schema = "structType"),

column(jc)

})

-#' from_utc_timestamp

-#'

-#' Given a timestamp, which corresponds to a certain time of day in UTC, returns another timestamp

-#' that corresponds to the same time of day in the given timezone.

+#' @details

+#' \code{from_utc_timestamp}: Given a timestamp, which corresponds to a certain time of day in UTC,

+#' returns another timestamp that corresponds to the same time of day in the given timezone.

#'

-#' @param y Column to compute on.

-#' @param x time zone to use.

+#' @rdname column_datetime_diff_functions

#'

-#' @family date time functions

-#' @rdname from_utc_timestamp

-#' @name from_utc_timestamp

-#' @aliases from_utc_timestamp,Column,character-method

+#' @aliases from_utc_timestamp from_utc_timestamp,Column,character-method

#' @export

-#' @examples \dontrun{from_utc_timestamp(df$t, 'PST')}

+#' @examples

+#'

+#' \dontrun{

+#' tmp <- mutate(df, from_utc = from_utc_timestamp(df$time, 'PST'),

+#' to_utc = to_utc_timestamp(df$time, 'PST'),

+#' to_unix = unix_timestamp(df$time),

+#' to_unix2 = unix_timestamp(df$time, 'yyyy-MM-dd HH'),

+#' from_unix = from_unixtime(unix_timestamp(df$time)))

--- End diff --

It looks like this one should go to `column_datetime_functions`, or `from_unixtime` should be in `column_datetime_diff_functions`.

The same applies to `unix_timestamp`.


[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r118635475

--- Diff: R/pkg/R/functions.R ---

@@ -2095,26 +2061,28 @@ setMethod("atan2", signature(y = "Column"),

column(jc)

})

-#' datediff

+#' @section Details:

+#' \code{datediff}: Returns the number of days from \code{start} to \code{end}.

#'

-#' Returns the number of days from \code{start} to \code{end}.

-#'

-#' @param x start Column to use.

-#' @param y end Column to use.

--- End diff --

Two concerns here:

- The doc description is now fairly generalized. The call is supposed to be `datediff(end, start)`, but it is no longer clear which argument is the end and which is the start.

- Renaming this could break users calling by name, e.g. `datediff(df$c, x = "foo")`.
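Both concerns can be illustrated with a small base R sketch. The two functions below are hypothetical stand-ins working on plain dates, not the SparkR methods; they only show how R matches named arguments when formal parameter names change.

```r
# Hypothetical stand-ins, not the SparkR implementations.
# Current shape: y is the end date, x is the start date.
datediff_old <- function(y, x) as.integer(as.Date(y) - as.Date(x))

# Same body, but with the formal arguments renamed.
datediff_renamed <- function(end, start) as.integer(as.Date(end) - as.Date(start))

# Positional and by-name calls both work against the current names.
days_positional <- datediff_old("2005-12-28", "2005-12-24")
days_named <- datediff_old("2005-12-28", x = "2005-12-24")

# After the rename, a caller that still passes x = ... gets an error,
# which is captured here as a message string instead of stopping the script.
renamed_call <- tryCatch(
  datediff_renamed("2005-12-28", x = "2005-12-24"),
  error = function(e) conditionMessage(e)
)
```

Keeping the `y` and `x` names and stating in the `@param` text which one is the end and which is the start would avoid the breakage while still removing the ambiguity.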


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/78272/

Test PASSed.


[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123328228

--- Diff: R/pkg/R/functions.R ---

@@ -546,18 +598,20 @@ setMethod("hash",

column(jc)

})

-#' dayofmonth

+#' @section Details:

--- End diff --

Done


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for datetime...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #77391 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/77391/testReport)** for PR 18114 at commit [`0d2853d`](https://github.com/apache/spark/commit/0d2853d0cff6cbd92fcbb68cebaee0729d25eb8f).


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #78271 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78271/testReport)** for PR 18114 at commit [`311ccc2`](https://github.com/apache/spark/commit/311ccc2f6cd0dc814c1744b47372c65cbe3b0c88).


[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123416414

--- Diff: R/pkg/R/functions.R ---

@@ -2414,20 +2396,23 @@ setMethod("from_json", signature(x = "Column", schema = "structType"),

column(jc)

})

-#' from_utc_timestamp

-#'

-#' Given a timestamp, which corresponds to a certain time of day in UTC, returns another timestamp

-#' that corresponds to the same time of day in the given timezone.

+#' @details

+#' \code{from_utc_timestamp}: Given a timestamp, which corresponds to a certain time of day in UTC,

+#' returns another timestamp that corresponds to the same time of day in the given timezone.

#'

-#' @param y Column to compute on.

-#' @param x time zone to use.

+#' @rdname column_datetime_diff_functions

#'

-#' @family date time functions

-#' @rdname from_utc_timestamp

-#' @name from_utc_timestamp

-#' @aliases from_utc_timestamp,Column,character-method

+#' @aliases from_utc_timestamp from_utc_timestamp,Column,character-method

#' @export

-#' @examples \dontrun{from_utc_timestamp(df$t, 'PST')}

+#' @examples

+#'

+#' \dontrun{

+#' tmp <- mutate(df, from_utc = from_utc_timestamp(df$time, 'PST'),

+#' to_utc = to_utc_timestamp(df$time, 'PST'),

+#' to_unix = unix_timestamp(df$time),

+#' to_unix2 = unix_timestamp(df$time, 'yyyy-MM-dd HH'),

+#' from_unix = from_unixtime(unix_timestamp(df$time)))

--- End diff --

Also, `from_unixtime(df$t, 'yyyy/MM/dd HH')` looks like it is missing from the new example.
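For reference, the round trip that the example is meant to cover can be sketched in base R. The helpers `to_unix` and `from_unix` are hypothetical stand-ins for `unix_timestamp` and `from_unixtime`. Two assumptions differ from Spark: the sketch pins the time zone to UTC, and it uses strptime-style codes (`%Y/%m/%d %H`) where SparkR takes Java patterns such as `'yyyy/MM/dd HH'`.

```r
# Base R stand-ins for the SparkR calls; the helper names are hypothetical.
# Seconds since the unix epoch for a timestamp string, interpreted as UTC.
to_unix <- function(ts) as.numeric(as.POSIXct(ts, tz = "UTC"))

# Seconds since the epoch back to a formatted string, again in UTC.
from_unix <- function(secs, fmt = "%Y-%m-%d %H:%M:%S") {
  format(as.POSIXct(secs, origin = "1970-01-01", tz = "UTC"), fmt, tz = "UTC")
}

secs <- to_unix("2005-01-02 18:47:22")
default_format <- from_unix(secs)                 # default pattern
custom_format <- from_unix(secs, "%Y/%m/%d %H")   # custom pattern, hour precision
```

The second call is the counterpart of the two-argument `from_unixtime` form mentioned above.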


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/18114

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/77459/

Test PASSed.


[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123328728

--- Diff: R/pkg/R/functions.R ---

@@ -2774,27 +2724,16 @@ setMethod("format_string", signature(format = "character", x = "Column"),

column(jc)

})

-#' from_unixtime

-#'

-#' Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a string

-#' representing the timestamp of that moment in the current system time zone in the given

-#' format.

+#' @section Details:

+#' \code{from_unixtime}: Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a

+#' string representing the timestamp of that moment in the current system time zone in the given format.

--- End diff --

Done.


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #78271 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78271/testReport)** for PR 18114 at commit [`311ccc2`](https://github.com/apache/spark/commit/311ccc2f6cd0dc814c1744b47372c65cbe3b0c88).

* This patch **fails SparkR unit tests**.

* This patch merges cleanly.

* This patch adds the following public classes _(experimental)_:

* `#' For class numeric, it is the number of months or days to be added to


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on the issue:

https://github.com/apache/spark/pull/18114

@felixcheung Any idea what this message means?

`This patch adds the following public classes (experimental):

#' @param x For class`


[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123328753

--- Diff: R/pkg/R/functions.R ---

@@ -2774,27 +2724,16 @@ setMethod("format_string", signature(format = "character", x = "Column"),

column(jc)

})

-#' from_unixtime

-#'

-#' Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a string

-#' representing the timestamp of that moment in the current system time zone in the given

-#' format.

+#' @section Details:

+#' \code{from_unixtime}: Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a

+#' string representing the timestamp of that moment in the current system time zone in the given format.

+#' See \href{http://docs.oracle.com/javase/tutorial/i18n/format/simpleDateFormat.html}{

+#' Customizing Formats} for available options.

#'

-#' @param x a Column of unix timestamp.

-#' @param format the target format. See

-#' \href{http://docs.oracle.com/javase/tutorial/i18n/format/simpleDateFormat.html}{

-#' Customizing Formats} for available options.

-#' @param ... further arguments to be passed to or from other methods.

-#' @family date time functions

-#' @rdname from_unixtime

-#' @name from_unixtime

-#' @aliases from_unixtime,Column-method

+#' @rdname column_datetime_functions

+#

--- End diff --

Fixed.


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by actuaryzhang <gi...@git.apache.org>.

Github user actuaryzhang commented on the issue:

https://github.com/apache/spark/pull/18114

@felixcheung Thanks so much for the review and comments. Super helpful!

I fixed all the issues you have pointed out in the new commit.


[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #78403 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78403/testReport)** for PR 18114 at commit [`a9b1049`](https://github.com/apache/spark/commit/a9b1049f9af1d0d41af784f8433e5343dea754a5).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds the following public classes _(experimental)_:

* `#' For class numeric, it is the number of months or days to be added to or subtracted from

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123310134

--- Diff: R/pkg/R/functions.R ---

@@ -2774,27 +2724,16 @@ setMethod("format_string", signature(format = "character", x = "Column"),

column(jc)

})

-#' from_unixtime

-#'

-#' Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a string

-#' representing the timestamp of that moment in the current system time zone in the given

-#' format.

+#' @section Details:

+#' \code{from_unixtime}: Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a

+#' string representing the timestamp of that moment in the current system time zone in the given format.

--- End diff --

I think we need to call out that the "current system time zone" here is the JVM's, not R's. In R one can set the default TZ independently.
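To illustrate the concern, here is a minimal base R sketch (no Spark session involved) showing that the same instant renders as different strings depending on the time zone used for formatting. The variable names are made up for illustration. The assumption, taken from the comment above, is that `from_unixtime` formats with the JVM's zone, which need not match whatever the R session has set (for example via `Sys.setenv(TZ = ...)`).

```r
# Assumption: plain base R; this does not call SparkR or the JVM.
epoch_secs <- 0  # the Unix epoch itself, 1970-01-01 00:00:00 UTC
instant <- as.POSIXct(epoch_secs, origin = "1970-01-01", tz = "UTC")

fmt <- "%Y-%m-%d %H:%M:%S"

# Same instant, two different zones used only for display.
in_utc <- format(instant, fmt, tz = "UTC")
in_la  <- format(instant, fmt, tz = "America/Los_Angeles")

print(in_utc)  # "1970-01-01 00:00:00"
print(in_la)   # "1969-12-31 16:00:00"
```

If the documentation only says "current system time zone", a reader who has changed the zone in R might expect the first string and get the second, or vice versa.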

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #78407 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/78407/testReport)** for PR 18114 at commit [`1381dd5`](https://github.com/apache/spark/commit/1381dd5d776486f285ed51ed7ec33c12b40a5590).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds the following public classes _(experimental)_:

* `#' @param x For class

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #77459 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/77459/testReport)** for PR 18114 at commit [`016bb47`](https://github.com/apache/spark/commit/016bb47b4a949d1c242bd8ccb2f940576867f2dc).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds the following public classes _(experimental)_:

* `#' @param x For class Column, it is used to perform arithmetic operations with

[GitHub] spark issue #18114: [SPARK-20889][SparkR] Grouped documentation for DATETIME...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/18114

**[Test build #77447 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/77447/testReport)** for PR 18114 at commit [`944aa92`](https://github.com/apache/spark/commit/944aa92e4c35015010ee9cdaf913f5639670acb6).

* This patch **fails SparkR unit tests**.

* This patch merges cleanly.

* This patch adds the following public classes _(experimental)_:

* `#' @param x if of class Column, it is used to perform arithmatic operations with

[GitHub] spark pull request #18114: [SPARK-20889][SparkR] Grouped documentation for D...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/18114#discussion_r123309886

--- Diff: R/pkg/R/functions.R ---

@@ -2774,27 +2724,16 @@ setMethod("format_string", signature(format = "character", x = "Column"),

column(jc)

})

-#' from_unixtime

-#'

-#' Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a string

-#' representing the timestamp of that moment in the current system time zone in the given

-#' format.

+#' @section Details:

+#' \code{from_unixtime}: Converts the number of seconds from unix epoch (1970-01-01 00:00:00 UTC) to a

+#' string representing the timestamp of that moment in the current system time zone in the given format.

+#' See \href{http://docs.oracle.com/javase/tutorial/i18n/format/simpleDateFormat.html}{

+#' Customizing Formats} for available options.

#'

-#' @param x a Column of unix timestamp.

-#' @param format the target format. See

-#' \href{http://docs.oracle.com/javase/tutorial/i18n/format/simpleDateFormat.html}{

-#' Customizing Formats} for available options.

-#' @param ... further arguments to be passed to or from other methods.

-#' @family date time functions

-#' @rdname from_unixtime

-#' @name from_unixtime

-#' @aliases from_unixtime,Column-method

+#' @rdname column_datetime_functions

+#

--- End diff --

why a line with `#` (vs `#'`)?
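For context, roxygen2 only treats lines beginning with `#'` as documentation; a bare `#` is an ordinary R comment. A rough way to spot such lines is sketched below in base R. `find_stray_comments` is a hypothetical helper, not part of roxygen2 or SparkR, and the sample lines are illustrative rather than copied from `functions.R`.

```r
# Hypothetical helper: return the indices of plain `#` comment lines that
# directly follow a roxygen (`#'`) line.
find_stray_comments <- function(lines) {
  is_roxygen <- grepl("^[[:space:]]*#'", lines)
  is_plain <- grepl("^[[:space:]]*#", lines) & !is_roxygen
  # TRUE where the previous line was a roxygen line
  prev_roxygen <- c(FALSE, head(is_roxygen, -1))
  which(is_plain & prev_roxygen)
}

# Illustrative input modelled on the diff above; the last line is a placeholder.
src <- c(
  "#' @rdname column_datetime_functions",
  "#",
  "some_function <- function(x) x"
)

stray <- find_stray_comments(src)
print(stray)  # 2
```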

---

If your project is set up for it, you can reply to this email and have your

reply appear on GitHub as well. If your project does not have this feature

enabled and wishes so, or if the feature is enabled but not working, please

contact infrastructure at infrastructure@apache.org or file a JIRA ticket