You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2022/10/14 08:39:30 UTC

[GitHub] [hudi] idrismike commented on issue #6942: [SUPPORT] Hudi 0.12.0 InsertOverWrite - Getting NoSuchMethodError while writing to table with partitions

idrismike commented on issue #6942:

URL: https://github.com/apache/hudi/issues/6942#issuecomment-1278681326

@yihua I tried with `hoodie.metadata.enable=false`

**PS**: We are using the following set of `hudi` dependencies:

`"org.apache.hudi" %% "hudi-utilities-slim-bundle" % "0.12.0",

"org.apache.hudi" %% "hudi-spark3.2-bundle" % "0.12.0"`

This seems to work.

But, we want the metadata to be enabled because I am querying Hudi using `hive metastore`, and if `metadata` is not enabled (hence not created), hive querying is `super-slow`.

We know that we can create the `metadata indexes` using `HoodieIndexer` asynchronously (Not sure if that is the same `metadata`).

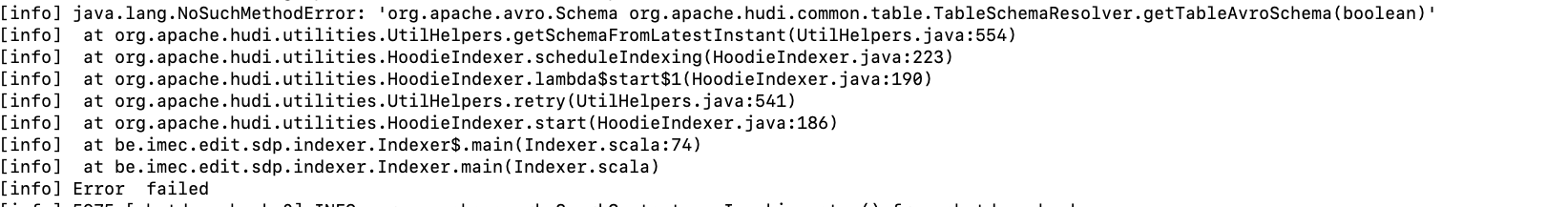

Moreover, using HoodieIndexer from using within `scala code` (i.e. calling HoodieIndexer from within code having spark session intiated) instead of using `spark-submit` - we get the following issue:

**PS**: All setup remains the same as above.

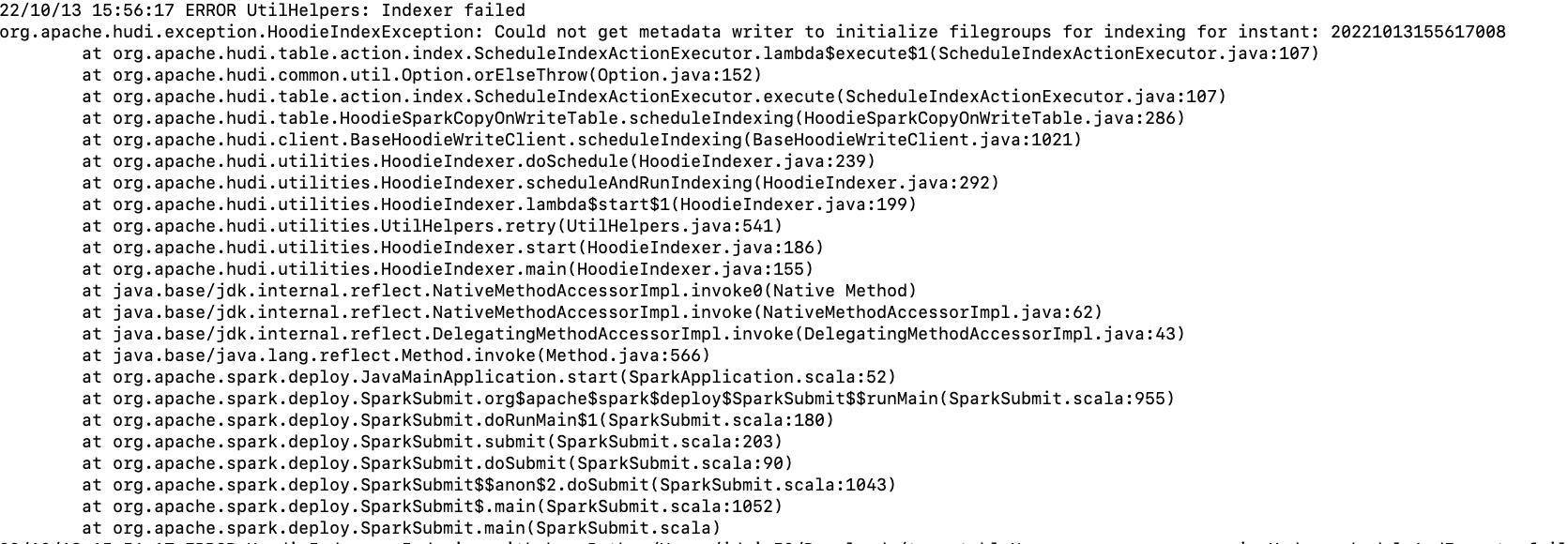

**Aside Question**: Can we generate `indexes` using `HoodieIndexer` for tables written using `hudi 0.9.0`? We tried doing that but get the following issue - hence clarifying that as well.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org