
Posted to gitbox@hive.apache.org by GitBox <gi...@apache.org> on 2020/03/06 22:19:39 UTC

[GitHub] [hive] davidov541 edited a comment on issue #933: HIVE-21218: Adding support for Confluent Kafka Avro message format

URL: https://github.com/apache/hive/pull/933#issuecomment-595988456

OK, I was able to successfully test this build using a Confluent single-node cluster and a Hive pseudo-standalone cluster. I was able to create a topic with a simple Avro schema and a few records, and then read that from Hive successfully.

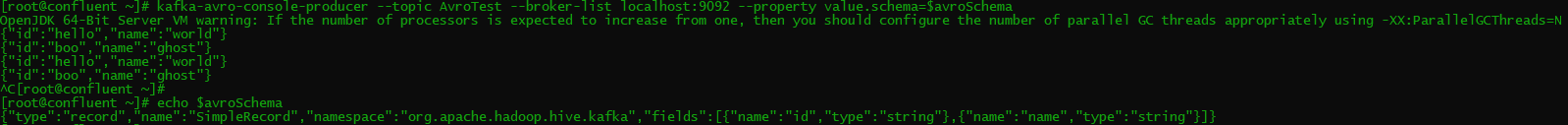

Confluent Cluster Production:

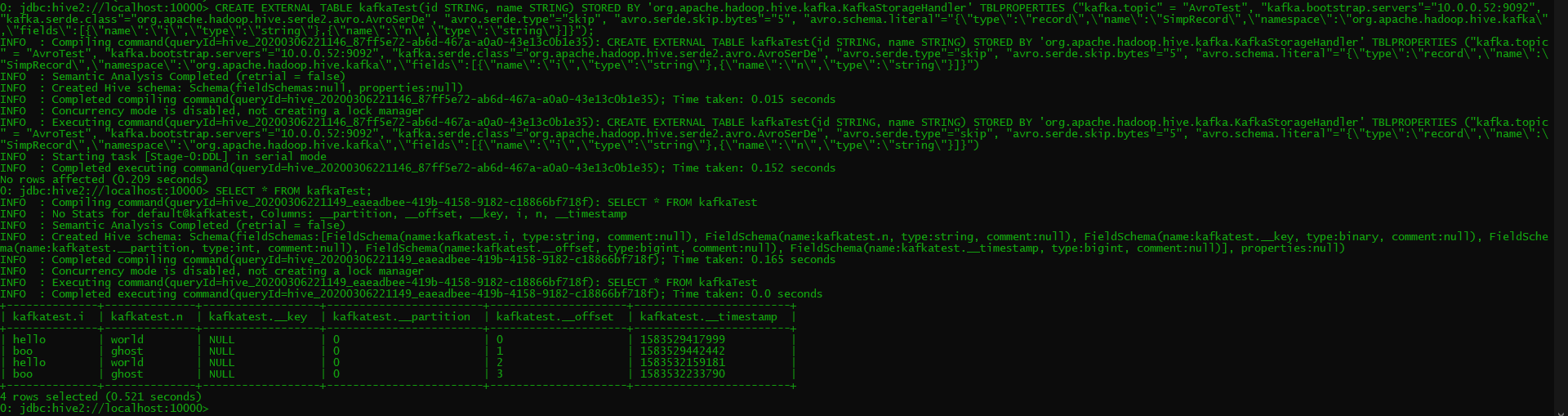

Hive Table Creation and Querying:
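For context on the format being tested: Confluent's Avro serializer prefixes each Kafka message with a magic byte (`0`) and a 4-byte big-endian schema ID, and the Avro-encoded payload follows. Below is a minimal sketch of stripping that header. The class and method names (`ConfluentHeaderSketch`, `schemaId`, `avroPayload`) are hypothetical and are not taken from this PR.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

// Hypothetical illustration of the Confluent framing; not the PR's code.
public class ConfluentHeaderSketch {
    static final byte MAGIC_BYTE = 0x0;
    // 1 magic byte + 4-byte schema ID
    static final int HEADER_LENGTH = 5;

    // Returns the schema ID stored in bytes 1..4 (big-endian).
    public static int schemaId(byte[] message) {
        if (message.length < HEADER_LENGTH || message[0] != MAGIC_BYTE) {
            throw new IllegalArgumentException("Not a Confluent-framed Avro message");
        }
        return ByteBuffer.wrap(message, 1, 4).getInt();
    }

    // Returns the Avro-encoded bytes that follow the 5-byte header.
    public static byte[] avroPayload(byte[] message) {
        schemaId(message); // reuse the header validation
        return Arrays.copyOfRange(message, HEADER_LENGTH, message.length);
    }

    public static void main(String[] args) {
        // Header (magic 0, schema ID 42) followed by 4 payload bytes.
        byte[] message = {0, 0, 0, 0, 42, 6, 102, 111, 111};
        System.out.println(schemaId(message));           // prints 42
        System.out.println(avroPayload(message).length); // prints 4
    }
}
```

A plain Avro decoder that is handed the full message without skipping these five bytes would misread the header as record data, which is why the format needs dedicated handling.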

One thing I noticed on the Hive side: when I used exactly the same schema as the SimpleRecord schema that we use for testing, I got the following error. As the screenshots show, changing the field and schema names avoided the error, so it appears to be caused specifically by Hive pulling in the SimpleRecord class that we use for testing.

```
2020-03-06T22:05:23,739 WARN [HiveServer2-Handler-Pool: Thread-165] thrift.ThriftCLIService: Error fetching results:
org.apache.hive.service.cli.HiveSQLException: java.io.IOException: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String
    at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:481) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.operation.OperationManager.getOperationNextRowSet(OperationManager.java:331) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.session.HiveSessionImpl.fetchResults(HiveSessionImpl.java:946) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.CLIService.fetchResults(CLIService.java:567) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.thrift.ThriftCLIService.FetchResults(ThriftCLIService.java:801) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.rpc.thrift.TCLIService$Processor$FetchResults.getResult(TCLIService.java:1837) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.rpc.thrift.TCLIService$Processor$FetchResults.getResult(TCLIService.java:1822) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_242]
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_242]
    at java.lang.Thread.run(Thread.java:748) [?:1.8.0_242]
Caused by: java.io.IOException: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String
    at org.apache.hadoop.hive.ql.exec.FetchOperator.getNextRow(FetchOperator.java:638) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchOperator.pushRow(FetchOperator.java:545) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchTask.fetch(FetchTask.java:150) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.Driver.getResults(Driver.java:880) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.getResults(ReExecDriver.java:241) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:476) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    ... 13 more
Caused by: java.lang.ClassCastException: org.apache.avro.util.Utf8 cannot be cast to java.lang.String
    at org.apache.hadoop.hive.kafka.SimpleRecord.put(SimpleRecord.java:88) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.avro.generic.GenericData.setField(GenericData.java:690) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.specific.SpecificDatumReader.readField(SpecificDatumReader.java:119) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.generic.GenericDatumReader.readRecord(GenericDatumReader.java:222) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.generic.GenericDatumReader.readWithoutConversion(GenericDatumReader.java:175) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.generic.GenericDatumReader.read(GenericDatumReader.java:153) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.avro.generic.GenericDatumReader.read(GenericDatumReader.java:145) ~[avro-1.8.2.jar:1.8.2]
    at org.apache.hadoop.hive.kafka.KafkaSerDe$AvroBytesConverter.getWritable(KafkaSerDe.java:401) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.kafka.KafkaSerDe$AvroBytesConverter.getWritable(KafkaSerDe.java:367) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.kafka.KafkaSerDe.deserializeKWritable(KafkaSerDe.java:250) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.kafka.KafkaSerDe.deserialize(KafkaSerDe.java:238) ~[kafka-handler-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchOperator.getNextRow(FetchOperator.java:619) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchOperator.pushRow(FetchOperator.java:545) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.exec.FetchTask.fetch(FetchTask.java:150) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.Driver.getResults(Driver.java:880) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.getResults(ReExecDriver.java:241) ~[hive-exec-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    at org.apache.hive.service.cli.operation.SQLOperation.getNextRowSet(SQLOperation.java:476) ~[hive-service-4.0.0-SNAPSHOT.jar:4.0.0-SNAPSHOT]
    ... 13 more
```

It concerns me that an Avro schema we use for testing is included in the final build, and that using it in a Hive table produces this error. I think this is likely a separate issue, but I wanted to run it by you (@b-slim) first before filing a separate JIRA and setting it aside for now.
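The innermost `Caused by` in the trace is a cast inside `SimpleRecord.put`. Avro readers typically return string fields as `org.apache.avro.util.Utf8` unless the schema carries the `avro.java.string` property set to `String`. One plausible reading, not confirmed here, is that the table's schema lacks that property while the generated class casts directly to `String`. The sketch below reproduces that pattern without depending on Avro: `FakeUtf8` is a hypothetical stand-in for `Utf8`, and `strictString` / `tolerantString` are illustrative names, not methods of `SimpleRecord`.

```java
// Hypothetical, dependency-free illustration of the cast failure; not Hive or Avro code.
public class Utf8CastSketch {
    // Stand-in for org.apache.avro.util.Utf8: a CharSequence that is not a String.
    public static final class FakeUtf8 implements CharSequence {
        private final String value;
        public FakeUtf8(String value) { this.value = value; }
        @Override public int length() { return value.length(); }
        @Override public char charAt(int index) { return value.charAt(index); }
        @Override public CharSequence subSequence(int start, int end) {
            return value.subSequence(start, end);
        }
        @Override public String toString() { return value; }
    }

    // Mirrors the failing pattern: assumes the decoded value is already a String.
    public static String strictString(Object decoded) {
        return (String) decoded;
    }

    // Accepts any CharSequence-like value by converting through toString().
    public static String tolerantString(Object decoded) {
        return decoded == null ? null : decoded.toString();
    }

    // Reports whether the direct cast throws for the given value.
    public static boolean strictFails(Object decoded) {
        try {
            strictString(decoded);
            return false;
        } catch (ClassCastException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        Object decoded = new FakeUtf8("hello");
        System.out.println(strictFails(decoded));    // prints true
        System.out.println(tolerantString(decoded)); // prints hello
    }
}
```

Whether the right fix is to keep the test class out of the shipped jar, or to make the record tolerant of `CharSequence` values, is a question for the follow-up JIRA.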
