Posted to commits@pulsar.apache.org by GitBox <gi...@apache.org> on 2022/10/19 03:06:20 UTC

[GitHub] [pulsar-helm-chart] tianshimoyi opened a new issue, #309: When I set metadataPrefix my cluster doesn't start properly

tianshimoyi opened a new issue, #309:

URL: https://github.com/apache/pulsar-helm-chart/issues/309

**Describe the bug**

When I set `metadataPrefix`, my cluster doesn't start properly. When I remove this value, the cluster starts.

**To Reproduce**

This is my value.yaml:

```yaml

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

###
### K8S Settings
###

### Namespace to deploy pulsar
# The namespace to use to deploy the pulsar components, if left empty
# will default to .Release.Namespace (aka helm --namespace).
namespace: "pulsar"
namespaceCreate: false

## clusterDomain as defined for your k8s cluster
clusterDomain: cluster.local

###
### Global Settings
###

## Set to true on install
initialize: false

## Set cluster name
clusterName: tcloud-pulsar

## add custom labels to components of cluster
# labels:
#   environment: dev
#   customer: apache

## Pulsar Metadata Prefix
##
## By default, pulsar stores all the metadata at root path.
## You can configure to have a prefix (e.g. "/my-pulsar-cluster").
## If you do so, all the pulsar and bookkeeper metadata will
## be stored under the provided path
metadataPrefix: "/tcloud"

## Port name prefix
##
## Used for Istio support which depends on a standard naming of ports
## See https://istio.io/latest/docs/ops/configuration/traffic-management/protocol-selection/#explicit-protocol-selection
## Prefixes are disabled by default
tcpPrefix: "" # For Istio this will be "tcp-"
tlsPrefix: "" # For Istio this will be "tls-"

## Persistence
##
## If persistence is enabled, components that have state will
## be deployed with PersistentVolumeClaims, otherwise, for test
## purposes, they will be deployed with emptyDir
##
## This is a global setting that is applied to all components.
## If you need to disable persistence for a component,
## you can set the `volume.persistence` setting to `false` for
## that component.
##
## Deprecated in favor of using `volumes.persistence`
persistence: true

## Volume settings
volumes:
  persistence: true
  # configure the components to use local persistent volume
  # the local provisioner should be installed prior to enable local persistent volume
  local_storage: true

## RBAC
##
## Configure settings related to RBAC such as limiting broker access to single
## namespece or enabling PSP
rbac:
  enabled: false
  psp: false
  limit_to_namespace: false

## AntiAffinity
##
## Flag to enable and disable `AntiAffinity` for all components.
## This is a global setting that is applied to all components.
## If you need to disable AntiAffinity for a component, you can set
## the `affinity.anti_affinity` settings to `false` for that component.
affinity:
  anti_affinity: true
  # Set the anti affinity type. Valid values:
  # requiredDuringSchedulingIgnoredDuringExecution - rules must be met for pod to be scheduled (hard) requires at least one node per replica
  # preferredDuringSchedulingIgnoredDuringExecution - scheduler will try to enforce but not guranentee
  type: requiredDuringSchedulingIgnoredDuringExecution

## Components
##
## Control what components of Apache Pulsar to deploy for the cluster
components:
  # zookeeper
  zookeeper: true
  # bookkeeper
  bookkeeper: true
  # bookkeeper - autorecovery
  autorecovery: true
  # broker
  broker: true
  # functions
  functions: true
  # proxy
  proxy: true
  # toolset
  toolset: true
  # pulsar manager
  pulsar_manager: true

## Monitoring Components
##
## Control what components of the monitoring stack to deploy for the cluster
monitoring:
  # monitoring - prometheus
  prometheus: true
  # monitoring - grafana
  grafana: true
  # monitoring - node_exporter
  node_exporter: true
  # alerting - alert-manager
  alert_manager: true

## which extra components to deploy (Deprecated)
extra:
  # Pulsar proxy
  proxy: false
  # Bookkeeper auto-recovery
  autoRecovery: false
  # Pulsar dashboard
  # Deprecated
  # Replace pulsar-dashboard with pulsar-manager
  dashboard: false
  # pulsar manager
  pulsar_manager: false
  # Monitoring stack (prometheus and grafana)
  monitoring: false
  # Configure Kubernetes runtime for Functions
  functionsAsPods: false

## Images
##
## Control what images to use for each component
images:
  zookeeper:
    repository: harbor.weizhipin.com/tcloud/pulsar-all
    tag: 2.9.3
    pullPolicy: IfNotPresent
  bookie:
    repository: harbor.weizhipin.com/tcloud/pulsar-all
    tag: 2.9.3
    pullPolicy: IfNotPresent
  autorecovery:
    repository: harbor.weizhipin.com/tcloud/pulsar-all
    tag: 2.9.3
    pullPolicy: IfNotPresent
  broker:
    repository: harbor.weizhipin.com/tcloud/pulsar-all
    tag: 2.9.3
    pullPolicy: IfNotPresent
  proxy:
    repository: harbor.weizhipin.com/tcloud/pulsar-all
    tag: 2.9.3
    pullPolicy: IfNotPresent
  functions:
    repository: harbor.weizhipin.com/tcloud/pulsar-all
    tag: 2.9.3
  prometheus:
    repository: prom/prometheus
    tag: v2.17.2
    pullPolicy: IfNotPresent
  grafana:
    repository: harbor.weizhipin.com/tcloud/apache-pulsar-grafana-dashboard-k8s
    tag: 0.0.16
    pullPolicy: IfNotPresent
  pulsar_manager:
    repository: harbor.weizhipin.com/tcloud/pulsar-manager
    tag: v0.3.0
    pullPolicy: IfNotPresent
    hasCommand: false

## TLS
## templates/tls-certs.yaml
##
## The chart is using cert-manager for provisioning TLS certs for
## brokers and proxies.
tls:
  enabled: false
  ca_suffix: ca-tls
  # common settings for generating certs
  common:
    # 90d
    duration: 2160h
    # 15d
    renewBefore: 360h
    organization:
      - pulsar
    keySize: 4096
    keyAlgorithm: rsa
    keyEncoding: pkcs8
  # settings for generating certs for proxy
  proxy:
    enabled: false
    cert_name: tls-proxy
  # settings for generating certs for broker
  broker:
    enabled: false
    cert_name: tls-broker
  # settings for generating certs for bookies
  bookie:
    enabled: false
    cert_name: tls-bookie
  # settings for generating certs for zookeeper
  zookeeper:
    enabled: false
    cert_name: tls-zookeeper
  # settings for generating certs for recovery
  autorecovery:
    cert_name: tls-recovery
  # settings for generating certs for toolset
  toolset:
    cert_name: tls-toolset

# Enable or disable broker authentication and authorization.
auth:
  authentication:
    enabled: false
    provider: "jwt"
    jwt:
      # Enable JWT authentication
      # If the token is generated by a secret key, set the usingSecretKey as true.
      # If the token is generated by a private key, set the usingSecretKey as false.
      usingSecretKey: false
  authorization:
    enabled: false
  superUsers:
    # broker to broker communication
    broker: "broker-admin"
    # proxy to broker communication
    proxy: "proxy-admin"
    # pulsar-admin client to broker/proxy communication
    client: "admin"

######################################################################
# External dependencies
######################################################################

## cert-manager
## templates/tls-cert-issuer.yaml
##
## Cert manager is used for automatically provisioning TLS certificates
## for components within a Pulsar cluster
certs:
  internal_issuer:
    apiVersion: cert-manager.io/v1
    enabled: false
    component: internal-cert-issuer
    type: selfsigning
    # 90d
    duration: 2160h
    # 15d
    renewBefore: 360h
  issuers:
    selfsigning:

######################################################################
# Below are settings for each component
######################################################################

## Pulsar: Zookeeper cluster
## templates/zookeeper-statefulset.yaml
##
zookeeper:
  # use a component name that matches your grafana configuration
  # so the metrics are correctly rendered in grafana dashboard
  component: zookeeper
  # the number of zookeeper servers to run. it should be an odd number larger than or equal to 3.
  replicaCount: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  # If using Prometheus-Operator enable this PodMonitor to discover zookeeper scrape targets
  # Prometheus-Operator does not add scrape targets based on k8s annotations
  podMonitor:
    enabled: false
    interval: 10s
    scrapeTimeout: 10s
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  ports:
    http: 8000
    client: 2181
    clientTls: 2281
    follower: 2888
    leaderElection: 3888
  nodeSelector:
    ##node.weizhipin.com/business: pulsar
  probe:
    liveness:
      enabled: true
      failureThreshold: 10
      initialDelaySeconds: 20
      periodSeconds: 30
      timeoutSeconds: 30
    readiness:
      enabled: true
      failureThreshold: 10
      initialDelaySeconds: 20
      periodSeconds: 30
      timeoutSeconds: 30
    startup:
      enabled: false
      failureThreshold: 30
      initialDelaySeconds: 20
      periodSeconds: 30
      timeoutSeconds: 30
  affinity:
    anti_affinity: true
    # Set the anti affinity type. Valid values:
    # requiredDuringSchedulingIgnoredDuringExecution - rules must be met for pod to be scheduled (hard) requires at least one node per replica
    # preferredDuringSchedulingIgnoredDuringExecution - scheduler will try to enforce but not guranentee
    type: requiredDuringSchedulingIgnoredDuringExecution
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
    prometheus.io/scrape: "true"
    prometheus.io/port: "8000"
  tolerations: []
  gracePeriod: 30
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  # extraVolumes and extraVolumeMounts allows you to mount other volumes
  # Example Use Case: mount ssl certificates
  # extraVolumes:
  #   - name: ca-certs
  #     secret:
  #       defaultMode: 420
  #       secretName: ca-certs
  # extraVolumeMounts:
  #   - name: ca-certs
  #     mountPath: /certs
  #     readOnly: true
  extraVolumes: []
  extraVolumeMounts: []
  # Ensures 2.10.0 non-root docker image works correctly.
  securityContext:
    fsGroup: 0
    fsGroupChangePolicy: "OnRootMismatch"
  volumes:
    # use a persistent volume or emptyDir
    persistence: true
    data:
      name: data
      size: 20Gi
      local_storage: true
      ## If you already have an existent storage class and want to reuse it, you can specify its name with the option below
      ##
      storageClassName: local-hostpath
      #
      ## Instead if you want to create a new storage class define it below
      ## If left undefined no storage class will be defined along with PVC
      ##
      # storageClass:
      #   type: pd-ssd
      #   fsType: xfs
      #   provisioner: kubernetes.io/gce-pd
  ## Zookeeper configmap
  ## templates/zookeeper-configmap.yaml
  ##
  configData:
    PULSAR_MEM: >
      -Xms64m -Xmx128m
    PULSAR_GC: >
      -XX:+UseG1GC
      -XX:MaxGCPauseMillis=10
      -Dcom.sun.management.jmxremote
      -Djute.maxbuffer=10485760
      -XX:+ParallelRefProcEnabled
      -XX:+UnlockExperimentalVMOptions
      -XX:+DoEscapeAnalysis
      -XX:+DisableExplicitGC
      -XX:+ExitOnOutOfMemoryError
      -XX:+PerfDisableSharedMem
  ## Add a custom command to the start up process of the zookeeper pods (e.g. update-ca-certificates, jvm commands, etc)
  additionalCommand:
  ## Zookeeper service
  ## templates/zookeeper-service.yaml
  ##
  service:
    annotations:
      service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
  ## Zookeeper PodDisruptionBudget
  ## templates/zookeeper-pdb.yaml
  ##
  pdb:
    usePolicy: true
    maxUnavailable: 1

## Pulsar: Bookkeeper cluster
## templates/bookkeeper-statefulset.yaml
##
bookkeeper:
  # use a component name that matches your grafana configuration
  # so the metrics are correctly rendered in grafana dashboard
  component: bookie
  ## BookKeeper Cluster Initialize
  ## templates/bookkeeper-cluster-initialize.yaml
  metadata:
    ## Set the resources used for running `bin/bookkeeper shell initnewcluster`
    ##
    resources:
      # requests:
      #   memory: 4Gi
      #   cpu: 2
  replicaCount: 4
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  # If using Prometheus-Operator enable this PodMonitor to discover bookie scrape targets
  # Prometheus-Operator does not add scrape targets based on k8s annotations
  podMonitor:
    enabled: false
    interval: 10s
    scrapeTimeout: 10s
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  ports:
    http: 8000
    bookie: 3181
  nodeSelector:
    ##node.weizhipin.com/business: pulsar
  probe:
    liveness:
      enabled: true
      failureThreshold: 60
      initialDelaySeconds: 10
      periodSeconds: 30
      timeoutSeconds: 5
    readiness:
      enabled: true
      failureThreshold: 60
      initialDelaySeconds: 10
      periodSeconds: 30
      timeoutSeconds: 5
    startup:
      enabled: false
      failureThreshold: 30
      initialDelaySeconds: 60
      periodSeconds: 30
      timeoutSeconds: 5
  affinity:
    anti_affinity: true
    # Set the anti affinity type. Valid values:
    # requiredDuringSchedulingIgnoredDuringExecution - rules must be met for pod to be scheduled (hard) requires at least one node per replica
    # preferredDuringSchedulingIgnoredDuringExecution - scheduler will try to enforce but not guranentee
    type: requiredDuringSchedulingIgnoredDuringExecution
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
  tolerations: []
  gracePeriod: 30
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  # extraVolumes and extraVolumeMounts allows you to mount other volumes
  # Example Use Case: mount ssl certificates
  # extraVolumes:
  #   - name: ca-certs
  #     secret:
  #       defaultMode: 420
  #       secretName: ca-certs
  # extraVolumeMounts:
  #   - name: ca-certs
  #     mountPath: /certs
  #     readOnly: true
  extraVolumes: []
  extraVolumeMounts: []
  # Ensures 2.10.0 non-root docker image works correctly.
  securityContext:
    fsGroup: 0
    fsGroupChangePolicy: "OnRootMismatch"
  volumes:
    # use a persistent volume or emptyDir
    persistence: true
    journal:
      name: journal
      size: 10Gi
      local_storage: true
      ## If you already have an existent storage class and want to reuse it, you can specify its name with the option below
      ##
      storageClassName: local-hostpath
      #
      ## Instead if you want to create a new storage class define it below
      ## If left undefined no storage class will be defined along with PVC
      ##
      # storageClass:
      #   type: pd-ssd
      #   fsType: xfs
      #   provisioner: kubernetes.io/gce-pd
      useMultiVolumes: false
      multiVolumes:
        - name: journal0
          size: 10Gi
          storageClassName: local-hostpath
          mountPath: /pulsar/data/bookkeeper/journal0
        - name: journal1
          size: 10Gi
          # storageClassName: existent-storage-class
          mountPath: /pulsar/data/bookkeeper/journal1
    ledgers:
      name: ledgers
      size: 50Gi
      local_storage: true
      storageClassName: local-hostpath
      # storageClass:
      #   ...
      useMultiVolumes: false
      multiVolumes:
        - name: ledgers0
          size: 10Gi
          storageClassName: local-hostpath
          mountPath: /pulsar/data/bookkeeper/ledgers0
        - name: ledgers1
          size: 10Gi
          storageClassName: local-hostpath
          mountPath: /pulsar/data/bookkeeper/ledgers1
    ## use a single common volume for both journal and ledgers
    useSingleCommonVolume: false
    common:
      name: common
      size: 60Gi
      local_storage: true
      storageClassName: local-hostpath
      # storageClass: ## this is common too
      #   ...
  ## Bookkeeper configmap
  ## templates/bookkeeper-configmap.yaml
  ##
  configData:
    # we use `bin/pulsar` for starting bookie daemons
    PULSAR_MEM: >
      -Xms128m
      -Xmx256m
      -XX:MaxDirectMemorySize=256m
    PULSAR_GC: >
      -XX:+UseG1GC
      -XX:MaxGCPauseMillis=10
      -XX:+ParallelRefProcEnabled
      -XX:+UnlockExperimentalVMOptions
      -XX:+DoEscapeAnalysis
      -XX:ParallelGCThreads=4
      -XX:ConcGCThreads=4
      -XX:G1NewSizePercent=50
      -XX:+DisableExplicitGC
      -XX:-ResizePLAB
      -XX:+ExitOnOutOfMemoryError
      -XX:+PerfDisableSharedMem
      -Xlog:gc*
      -Xlog:gc::utctime
      -Xlog:safepoint
      -Xlog:gc+heap=trace
      -verbosegc
    # configure the memory settings based on jvm memory settings
    dbStorage_writeCacheMaxSizeMb: "32"
    dbStorage_readAheadCacheMaxSizeMb: "32"
    dbStorage_rocksDB_writeBufferSizeMB: "8"
    dbStorage_rocksDB_blockCacheSize: "8388608"
  ## Add a custom command to the start up process of the bookie pods (e.g. update-ca-certificates, jvm commands, etc)
  additionalCommand:
  ## Bookkeeper Service
  ## templates/bookkeeper-service.yaml
  ##
  service:
    spec:
      publishNotReadyAddresses: true
  ## Bookkeeper PodDisruptionBudget
  ## templates/bookkeeper-pdb.yaml
  ##
  pdb:
    usePolicy: true
    maxUnavailable: 1

## Pulsar: Bookkeeper AutoRecovery
## templates/autorecovery-statefulset.yaml
##
autorecovery:
  # use a component name that matches your grafana configuration
  # so the metrics are correctly rendered in grafana dashboard
  component: recovery
  replicaCount: 1
  # If using Prometheus-Operator enable this PodMonitor to discover autorecovery scrape targets
  # # Prometheus-Operator does not add scrape targets based on k8s annotations
  podMonitor:
    enabled: false
    interval: 10s
    scrapeTimeout: 10s
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  ports:
    http: 8000
  nodeSelector:
    #node.weizhipin.com/business: pulsar
  affinity:
    anti_affinity: true
    # Set the anti affinity type. Valid values:
    # requiredDuringSchedulingIgnoredDuringExecution - rules must be met for pod to be scheduled (hard) requires at least one node per replica
    # preferredDuringSchedulingIgnoredDuringExecution - scheduler will try to enforce but not guranentee
    type: requiredDuringSchedulingIgnoredDuringExecution
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
  # tolerations: []
  gracePeriod: 30
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  ## Bookkeeper auto-recovery configmap
  ## templates/autorecovery-configmap.yaml
  ##
  configData:
    BOOKIE_MEM: >
      -Xms64m -Xmx64m
    PULSAR_PREFIX_useV2WireProtocol: "true"

## Pulsar Zookeeper metadata. The metadata will be deployed as
## soon as the last zookeeper node is reachable. The deployment
## of other components that depends on zookeeper, such as the
## bookkeeper nodes, broker nodes, etc will only start to be
## deployed when the zookeeper cluster is ready and with the
## metadata deployed
pulsar_metadata:
  component: pulsar-init
  image:
    # the image used for running `pulsar-cluster-initialize` job
    repository: harbor.weizhipin.com/tcloud/pulsar-all
    tag: 2.9.3
    pullPolicy: IfNotPresent
  ## set an existing configuration store
  # configurationStore:
  configurationStoreMetadataPrefix: ""
  configurationStorePort: 2181
  ## optional, you can provide your own zookeeper metadata store for other components
  # to use this, you should explicit set components.zookeeper to false
  #
  # userProvidedZookeepers: "zk01.example.com:2181,zk02.example.com:2181"
  # Can be used to run extra commands in the initialization jobs e.g. to quit istio sidecars etc.
  extraInitCommand: ""

## Pulsar: Broker cluster
## templates/broker-statefulset.yaml
##
broker:
  # use a component name that matches your grafana configuration
  # so the metrics are correctly rendered in grafana dashboard
  component: broker
  replicaCount: 3
  autoscaling:
    enabled: false
    minReplicas: 1
    maxReplicas: 3
    metrics: ~
  # If using Prometheus-Operator enable this PodMonitor to discover broker scrape targets
  # Prometheus-Operator does not add scrape targets based on k8s annotations
  podMonitor:
    enabled: false
    interval: 10s
    scrapeTimeout: 10s
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  ports:
    http: 8080
    https: 8443
    pulsar: 6650
    pulsarssl: 6651
  nodeSelector:
    #node.weizhipin.com/business: pulsar
  probe:
    liveness:
      enabled: true
      failureThreshold: 10
      initialDelaySeconds: 30
      periodSeconds: 10
      timeoutSeconds: 5
    readiness:
      enabled: true
      failureThreshold: 10
      initialDelaySeconds: 30
      periodSeconds: 10
      timeoutSeconds: 5
    startup:
      enabled: false
      failureThreshold: 30
      initialDelaySeconds: 60
      periodSeconds: 10
      timeoutSeconds: 5
  affinity:
    anti_affinity: true
    # Set the anti affinity type. Valid values:
    # requiredDuringSchedulingIgnoredDuringExecution - rules must be met for pod to be scheduled (hard) requires at least one node per replica
    # preferredDuringSchedulingIgnoredDuringExecution - scheduler will try to enforce but not guranentee
    type: preferredDuringSchedulingIgnoredDuringExecution
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
  tolerations: []
  gracePeriod: 30
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  # extraVolumes and extraVolumeMounts allows you to mount other volumes
  # Example Use Case: mount ssl certificates
  # extraVolumes:
  #   - name: ca-certs
  #     secret:
  #       defaultMode: 420
  #       secretName: ca-certs
  # extraVolumeMounts:
  #   - name: ca-certs
  #     mountPath: /certs
  #     readOnly: true
  extraVolumes: []
  extraVolumeMounts: []
  extreEnvs: []
  # - name: POD_NAME
  #   valueFrom:
  #     fieldRef:
  #       apiVersion: v1
  #       fieldPath: metadata.name
  ## Broker configmap
  ## templates/broker-configmap.yaml
  ##
  configData:
    PULSAR_MEM: >
      -Xms128m -Xmx256m -XX:MaxDirectMemorySize=256m
    PULSAR_GC: >
      -XX:+UseG1GC
      -XX:MaxGCPauseMillis=10
      -Dio.netty.leakDetectionLevel=disabled
      -Dio.netty.recycler.linkCapacity=1024
      -XX:+ParallelRefProcEnabled
      -XX:+UnlockExperimentalVMOptions
      -XX:+DoEscapeAnalysis
      -XX:ParallelGCThreads=4
      -XX:ConcGCThreads=4
      -XX:G1NewSizePercent=50
      -XX:+DisableExplicitGC
      -XX:-ResizePLAB
      -XX:+ExitOnOutOfMemoryError
      -XX:+PerfDisableSharedMem
    managedLedgerDefaultEnsembleSize: "2"
    managedLedgerDefaultWriteQuorum: "2"
    managedLedgerDefaultAckQuorum: "2"
  ## Add a custom command to the start up process of the broker pods (e.g. update-ca-certificates, jvm commands, etc)
  additionalCommand:
  ## Broker service
  ## templates/broker-service.yaml
  ##
  service:
    annotations: {}
  ## Broker PodDisruptionBudget
  ## templates/broker-pdb.yaml
  ##
  pdb:
    usePolicy: true
    maxUnavailable: 1
  ### Broker service account
  ## templates/broker-service-account.yaml
  service_account:
    annotations: {}

## Pulsar: Functions Worker
## templates/function-worker-configmap.yaml
##
functions:
  component: functions-worker

## Pulsar: Proxy Cluster
## templates/proxy-statefulset.yaml
##
proxy:
  # use a component name that matches your grafana configuration
  # so the metrics are correctly rendered in grafana dashboard
  component: proxy
  replicaCount: 3
  autoscaling:
    enabled: false
    minReplicas: 1
    maxReplicas: 3
    metrics: ~
  # If using Prometheus-Operator enable this PodMonitor to discover proxy scrape targets
  # Prometheus-Operator does not add scrape targets based on k8s annotations
  podMonitor:
    enabled: false
    interval: 10s
    scrapeTimeout: 10s
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  nodeSelector:
    #node.weizhipin.com/business: pulsar
  probe:
    liveness:
      enabled: true
      failureThreshold: 10
      initialDelaySeconds: 30
      periodSeconds: 10
      timeoutSeconds: 5
    readiness:
      enabled: true
      failureThreshold: 10
      initialDelaySeconds: 30
      periodSeconds: 10
      timeoutSeconds: 5
    startup:
      enabled: false
      failureThreshold: 30
      initialDelaySeconds: 60
      periodSeconds: 10
      timeoutSeconds: 5
  affinity:
    anti_affinity: true
    # Set the anti affinity type. Valid values:
    # requiredDuringSchedulingIgnoredDuringExecution - rules must be met for pod to be scheduled (hard) requires at least one node per replica
    # preferredDuringSchedulingIgnoredDuringExecution - scheduler will try to enforce but not guranentee
    type: requiredDuringSchedulingIgnoredDuringExecution
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
  tolerations: []
  gracePeriod: 30
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  # extraVolumes and extraVolumeMounts allows you to mount other volumes
  # Example Use Case: mount ssl certificates
  # extraVolumes:
  #   - name: ca-certs
  #     secret:
  #       defaultMode: 420
  #       secretName: ca-certs
  # extraVolumeMounts:
  #   - name: ca-certs
  #     mountPath: /certs
  #     readOnly: true
  extraVolumes: []
  extraVolumeMounts: []
  extreEnvs: []
  # - name: POD_IP
  #   valueFrom:
  #     fieldRef:
  #       apiVersion: v1
  #       fieldPath: status.podIP
  ## Proxy configmap
  ## templates/proxy-configmap.yaml
  ##
  configData:
    PULSAR_MEM: >
      -Xms64m -Xmx64m -XX:MaxDirectMemorySize=64m
    PULSAR_GC: >
      -XX:+UseG1GC
      -XX:MaxGCPauseMillis=10
      -Dio.netty.leakDetectionLevel=disabled
      -Dio.netty.recycler.linkCapacity=1024
      -XX:+ParallelRefProcEnabled
      -XX:+UnlockExperimentalVMOptions
      -XX:+DoEscapeAnalysis
      -XX:ParallelGCThreads=4
      -XX:ConcGCThreads=4
      -XX:G1NewSizePercent=50
      -XX:+DisableExplicitGC
      -XX:-ResizePLAB
      -XX:+ExitOnOutOfMemoryError
      -XX:+PerfDisableSharedMem
    httpNumThreads: "8"
  ## Add a custom command to the start up process of the proxy pods (e.g. update-ca-certificates, jvm commands, etc)
  additionalCommand:
  ## Proxy service
  ## templates/proxy-service.yaml
  ##
  ports:
    http: 80
    https: 443
    pulsar: 6650
    pulsarssl: 6651
  service:
    annotations: {}
    type: LoadBalancer
  ## Proxy ingress
  ## templates/proxy-ingress.yaml
  ##
  ingress:
    enabled: false
    annotations: {}
    tls:
      enabled: false
      ## Optional. Leave it blank if your Ingress Controller can provide a default certificate.
      secretName: ""
    hostname: ""
    path: "/"
  ## Proxy PodDisruptionBudget
  ## templates/proxy-pdb.yaml
  ##
  pdb:
    usePolicy: true
    maxUnavailable: 1

## Pulsar Extra: Dashboard
## templates/dashboard-deployment.yaml
## Deprecated
##
dashboard:
  component: dashboard
  replicaCount: 1
  nodeSelector:
    #node.weizhipin.com/business: pulsar
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
  tolerations: []
  gracePeriod: 0
  image:
    repository: apachepulsar/pulsar-dashboard
    tag: latest
    pullPolicy: IfNotPresent
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  ## Dashboard service
  ## templates/dashboard-service.yaml
  ##
  service:
    annotations: {}
    ports:
      - name: server
        port: 80
  ingress:
    enabled: false
    annotations: {}
    tls:
      enabled: false
      ## Optional. Leave it blank if your Ingress Controller can provide a default certificate.
      secretName: ""
    ## Required if ingress is enabled
    hostname: ""
    path: "/"
    port: 80

## Pulsar ToolSet
## templates/toolset-deployment.yaml
##
toolset:
  component: toolset
  useProxy: true
  replicaCount: 1
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  nodeSelector:
    #node.weizhipin.com/business: pulsar
  annotations: {}
  tolerations: []
  gracePeriod: 30
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  # extraVolumes and extraVolumeMounts allows you to mount other volumes
  # Example Use Case: mount ssl certificates
  # extraVolumes:
  #   - name: ca-certs
  #     secret:
  #       defaultMode: 420
  #       secretName: ca-certs
  # extraVolumeMounts:
  #   - name: ca-certs
  #     mountPath: /certs
  #     readOnly: true
  extraVolumes: []
  extraVolumeMounts: []
  ## Bastion configmap
  ## templates/bastion-configmap.yaml
  ##
  configData:
    PULSAR_MEM: >
      -Xms64M
      -Xmx128M
      -XX:MaxDirectMemorySize=128M
  ## Add a custom command to the start up process of the toolset pods (e.g. update-ca-certificates, jvm commands, etc)
  additionalCommand:

#############################################################
### Monitoring Stack : Prometheus / Grafana
#############################################################

## Monitoring Stack: Prometheus
## templates/prometheus-deployment.yaml
##

## Deprecated in favor of using `prometheus.rbac.enabled`
prometheus_rbac: false

prometheus:
  component: prometheus
  rbac:
    enabled: true
  replicaCount: 1
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  nodeSelector:
    #node.weizhipin.com/business: pulsar
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
  tolerations: []
  gracePeriod: 5
  port: 9090
  enableAdminApi: false
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  volumes:
    # use a persistent volume or emptyDir
    persistence: true
    data:
      name: data
      size: 10Gi
      local_storage: true
      ## If you already have an existent storage class and want to reuse it, you can specify its name with the option below
      ##
      storageClassName: local-hostpath
      #
      ## Instead if you want to create a new storage class define it below
      ## If left undefined no storage class will be defined along with PVC
      ##
      # storageClass:
      #   type: pd-standard
      #   fsType: xfs
      #   provisioner: kubernetes.io/gce-pd
  ## Prometheus service
  ## templates/prometheus-service.yaml
  ##
  service:
    annotations: {}

## Monitoring Stack: Grafana
## templates/grafana-deployment.yaml
##
grafana:
  component: grafana
  replicaCount: 1
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  nodeSelector:
    #node.weizhipin.com/business: pulsar
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
  tolerations: []
  gracePeriod: 30
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  ## Grafana service
  ## templates/grafana-service.yaml
  ##
  service:
    type: LoadBalancer
    port: 3000
    targetPort: 3000
    annotations: {}
  plugins: []
  ## Grafana configMap
  ## templates/grafana-configmap.yaml
  ##
  configData: {}
  ## Grafana ingress
  ## templates/grafana-ingress.yaml
  ##
  ingress:
    enabled: false
    annotations: {}
    labels: {}
    tls: []
    ## Optional. Leave it blank if your Ingress Controller can provide a default certificate.
    ## - secretName: ""
    ## Extra paths to prepend to every host configuration. This is useful when working with annotation based services.
    extraPaths: []
    hostname: ""
    protocol: http
    path: /grafana
    port: 80
  admin:
    user: pulsar
    password: pulsar

## Components Stack: pulsar_manager
## templates/pulsar-manager.yaml
##
pulsar_manager:
  component: pulsar-manager
  replicaCount: 1
  # True includes annotation for statefulset that contains hash of corresponding configmap, which will cause pods to restart on configmap change
  restartPodsOnConfigMapChange: false
  nodeSelector:
    #node.weizhipin.com/business: pulsar
  annotations:
    kubernetes.io/ingress-bandwidth: "500M"
    kubernetes.io/egress-bandwidth: "500M"
  tolerations: []
  gracePeriod: 30
  resources:
    requests:
      memory: 2Gi
      cpu: 1
    limits:
      memory: 4Gi
      cpu: 2
  configData:
    REDIRECT_HOST: "http://127.0.0.1"
    REDIRECT_PORT: "9527"
    DRIVER_CLASS_NAME: org.postgresql.Driver
    URL: jdbc:postgresql://127.0.0.1:5432/pulsar_manager
    LOG_LEVEL: DEBUG
    ## If you enabled authentication support
    ## JWT_TOKEN: <token>
    ## SECRET_KEY: data:base64,<secret key>
  ## Pulsar manager service
  ## templates/pulsar-manager-service.yaml
  ##
  service:
    type: LoadBalancer
    port: 9527
    targetPort: 9527
    annotations: {}
  ## Pulsar manager ingress
  ## templates/pulsar-manager-ingress.yaml
  ##
  ingress:
    enabled: false
    annotations: {}
    tls:
      enabled: false
      ## Optional. Leave it blank if your Ingress Controller can provide a default certificate.
      secretName: ""
    hostname: ""
    path: "/"
  ## If set use existing secret with specified name to set pulsar admin credentials.
  existingSecretName:
  admin:
    user: pulsar
    password: pulsar

# These are jobs where job ttl configuration is used

# pulsar-helm-chart/charts/pulsar/templates/pulsar-cluster-initialize.yaml

# pulsar-helm-chart/charts/pulsar/templates/bookkeeper-cluster-initialize.yaml

job:
  ttl:
    enabled: false
    secondsAfterFinished: 3600

```

The deployment consistently gets stuck here.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@pulsar.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [pulsar-helm-chart] tianshimoyi commented on issue #309: When I set metadataPrefix my cluster doesn't start properly

Posted by GitBox <gi...@apache.org>.

tianshimoyi commented on issue #309:

URL: https://github.com/apache/pulsar-helm-chart/issues/309#issuecomment-1283368121

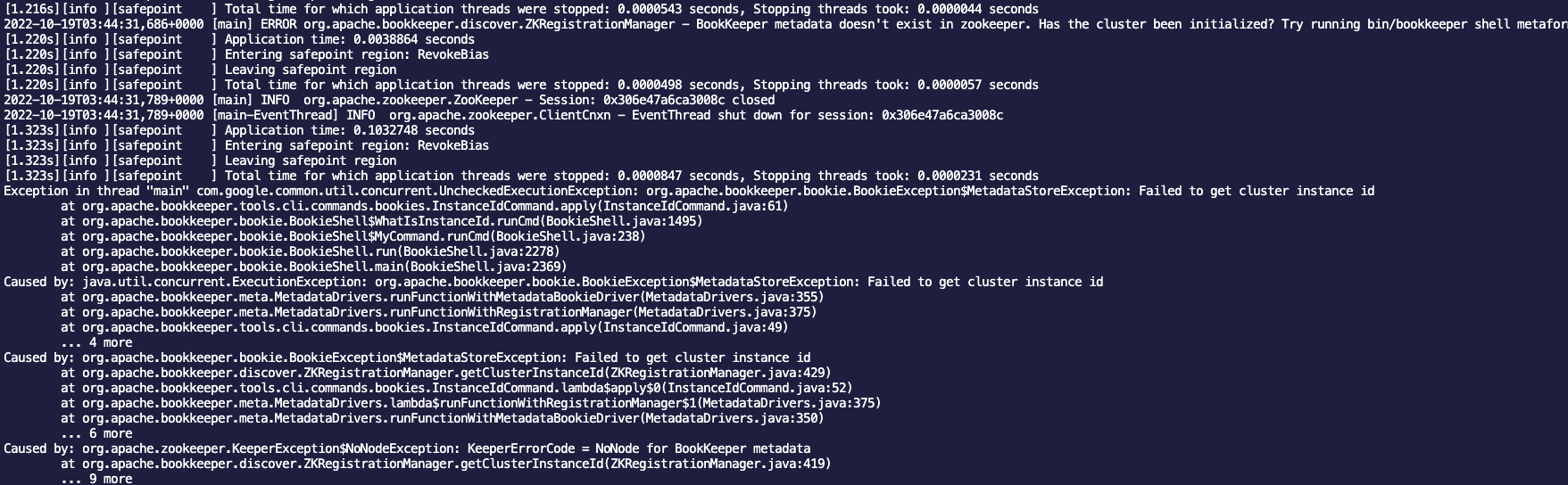

@michaeljmarshall It looks like this is the error:

2022-10-19T03:11:20,988+0000 [main-SendThread(pulsar-zookeeper:2181)] INFO org.apache.zookeeper.ClientCnxn - SASL config status: Will not attempt to authenticate using SASL (unknown error)

2022-10-19T03:11:22,522+0000 [main-SendThread(pulsar-zookeeper:2181)] INFO org.apache.zookeeper.ClientCnxn - SASL config status: Will not attempt to authenticate using SASL (unknown error)

[GitHub] [pulsar-helm-chart] michaeljmarshall closed issue #309: When I set metadataPrefix my cluster doesn't start properly

Posted by GitBox <gi...@apache.org>.

michaeljmarshall closed issue #309: When I set metadataPrefix my cluster doesn't start properly

URL: https://github.com/apache/pulsar-helm-chart/issues/309

[GitHub] [pulsar-helm-chart] tianshimoyi commented on issue #309: When I set metadataPrefix my cluster doesn't start properly

Posted by GitBox <gi...@apache.org>.

tianshimoyi commented on issue #309:

URL: https://github.com/apache/pulsar-helm-chart/issues/309#issuecomment-1283356841

2022-10-19T03:13:39,551+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:zookeeper.version=3.6.3--6401e4ad2087061bc6b9f80dec2d69f2e3c8660a, built on 04/08/2021 16:35 GMT

2022-10-19T03:13:39,555+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:host.name=pulsar-bookie-init-6b4vw

2022-10-19T03:13:39,555+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.version=11.0.15

2022-10-19T03:13:39,555+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.vendor=Private Build

2022-10-19T03:13:39,555+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.home=/usr/lib/jvm/java-11-openjdk-amd64

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.class.path=/pulsar/conf:::/pulsar/lib/com.beust-jcommander-1.78.jar:/pulsar/lib/com.carrotsearch-hppc-0.7.3.jar:/pulsar/lib/com.fasterxml.jackson.core-jackson-annotations-2.13.2.jar:/pulsar/lib/com.fasterxml.jackson.core-jackson-core-2.13.2.jar:/pulsar/lib/com.fasterxml.jackson.core-jackson-databind-2.13.2.1.jar:/pulsar/lib/com.fasterxml.jackson.dataformat-jackson-dataformat-yaml-2.13.2.jar:/pulsar/lib/com.fasterxml.jackson.jaxrs-jackson-jaxrs-base-2.13.2.jar:/pulsar/lib/com.fasterxml.jackson.jaxrs-jackson-jaxrs-json-provider-2.13.2.jar:/pulsar/lib/com.fasterxml.jackson.module-jackson-module-jaxb-annotations-2.13.2.jar:/pulsar/lib/com.fasterxml.jackson.module-jackson-module-jsonSchema-2.13.2.jar:/pulsar/lib/com.github.ben-manes.caffeine-caffeine-2.9.1.jar:/pulsar/lib/com.github.seancfoley-ipaddress-5.3.3.jar:/pulsar/lib/com.github.zafarkhaja-java-semver-0.9.0.jar:/pulsar/lib/com.goog

le.api.grpc-proto-google-common-protos-2.0.1.jar:/pulsar/lib/com.google.auth-google-auth-library-credentials-1.4.0.jar:/pulsar/lib/com.google.auth-google-auth-library-oauth2-http-1.4.0.jar:/pulsar/lib/com.google.auto.value-auto-value-annotations-1.9.jar:/pulsar/lib/com.google.code.findbugs-jsr305-3.0.2.jar:/pulsar/lib/com.google.code.gson-gson-2.8.9.jar:/pulsar/lib/com.google.errorprone-error_prone_annotations-2.5.1.jar:/pulsar/lib/com.google.guava-failureaccess-1.0.1.jar:/pulsar/lib/com.google.guava-guava-30.1-jre.jar:/pulsar/lib/com.google.guava-listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/pulsar/lib/com.google.http-client-google-http-client-1.41.0.jar:/pulsar/lib/com.google.http-client-google-http-client-gson-1.41.0.jar:/pulsar/lib/com.google.http-client-google-http-client-jackson2-1.41.0.jar:/pulsar/lib/com.google.j2objc-j2objc-annotations-1.3.jar:/pulsar/lib/com.google.protobuf-protobuf-java-3.19.2.jar:/pulsar/lib/com.google.protobuf-protobuf-java-util-3.19.2

.jar:/pulsar/lib/com.google.re2j-re2j-1.5.jar:/pulsar/lib/com.squareup.okhttp3-logging-interceptor-4.9.3.jar:/pulsar/lib/com.squareup.okhttp3-okhttp-4.9.3.jar:/pulsar/lib/com.squareup.okio-okio-2.8.0.jar:/pulsar/lib/com.sun.activation-javax.activation-1.2.0.jar:/pulsar/lib/com.typesafe.netty-netty-reactive-streams-2.0.6.jar:/pulsar/lib/com.yahoo.datasketches-memory-0.8.3.jar:/pulsar/lib/com.yahoo.datasketches-sketches-core-0.8.3.jar:/pulsar/lib/commons-cli-commons-cli-1.5.0.jar:/pulsar/lib/commons-codec-commons-codec-1.15.jar:/pulsar/lib/commons-collections-commons-collections-3.2.2.jar:/pulsar/lib/commons-configuration-commons-configuration-1.10.jar:/pulsar/lib/commons-io-commons-io-2.8.0.jar:/pulsar/lib/commons-lang-commons-lang-2.6.jar:/pulsar/lib/commons-logging-commons-logging-1.1.1.jar:/pulsar/lib/io.airlift-aircompressor-0.20.jar:/pulsar/lib/io.dropwizard.metrics-metrics-core-3.2.5.jar:/pulsar/lib/io.dropwizard.metrics-metrics-graphite-3.2.5.jar:/pulsar/lib/io.dropwizard.metr

ics-metrics-jvm-3.2.5.jar:/pulsar/lib/io.grpc-grpc-all-1.45.1.jar:/pulsar/lib/io.grpc-grpc-alts-1.45.1.jar:/pulsar/lib/io.grpc-grpc-api-1.45.1.jar:/pulsar/lib/io.grpc-grpc-auth-1.45.1.jar:/pulsar/lib/io.grpc-grpc-context-1.45.1.jar:/pulsar/lib/io.grpc-grpc-core-1.45.1.jar:/pulsar/lib/io.grpc-grpc-grpclb-1.45.1.jar:/pulsar/lib/io.grpc-grpc-netty-1.45.1.jar:/pulsar/lib/io.grpc-grpc-netty-shaded-1.45.1.jar:/pulsar/lib/io.grpc-grpc-protobuf-1.45.1.jar:/pulsar/lib/io.grpc-grpc-protobuf-lite-1.45.1.jar:/pulsar/lib/io.grpc-grpc-rls-1.45.1.jar:/pulsar/lib/io.grpc-grpc-services-1.45.1.jar:/pulsar/lib/io.grpc-grpc-stub-1.45.1.jar:/pulsar/lib/io.grpc-grpc-xds-1.45.1.jar:/pulsar/lib/io.gsonfire-gson-fire-1.8.5.jar:/pulsar/lib/io.jsonwebtoken-jjwt-api-0.11.1.jar:/pulsar/lib/io.jsonwebtoken-jjwt-impl-0.11.1.jar:/pulsar/lib/io.jsonwebtoken-jjwt-jackson-0.11.1.jar:/pulsar/lib/io.kubernetes-client-java-12.0.1.jar:/pulsar/lib/io.kubernetes-client-java-api-12.0.1.jar:/pulsar/lib/io.kubernetes-client-j

ava-proto-12.0.1.jar:/pulsar/lib/io.netty-netty-buffer-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-codec-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-codec-dns-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-codec-haproxy-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-codec-http-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-codec-http2-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-codec-socks-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-common-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-handler-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-handler-proxy-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-resolver-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-resolver-dns-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-tcnative-boringssl-static-2.0.52.Final-linux-aarch_64.jar:/pulsar/lib/io.netty-netty-tcnative-boringssl-static-2.0.52.Final-linux-x86_64.jar:/pulsar/lib/io.netty-netty-tcnative-boringssl-static-2.0.52.Final-osx-aarch_64.jar:/pulsar/lib/io.netty-netty-tcnative-boringssl-static-2.0.52.Final-osx-x86_

64.jar:/pulsar/lib/io.netty-netty-tcnative-boringssl-static-2.0.52.Final-windows-x86_64.jar:/pulsar/lib/io.netty-netty-tcnative-boringssl-static-2.0.52.Final.jar:/pulsar/lib/io.netty-netty-tcnative-classes-2.0.52.Final.jar:/pulsar/lib/io.netty-netty-transport-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-transport-classes-epoll-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-transport-native-epoll-4.1.77.Final-linux-x86_64.jar:/pulsar/lib/io.netty-netty-transport-native-epoll-4.1.77.Final.jar:/pulsar/lib/io.netty-netty-transport-native-unix-common-4.1.77.Final-linux-x86_64.jar:/pulsar/lib/io.netty-netty-transport-native-unix-common-4.1.77.Final.jar:/pulsar/lib/io.opencensus-opencensus-api-0.28.0.jar:/pulsar/lib/io.opencensus-opencensus-contrib-http-util-0.28.0.jar:/pulsar/lib/io.opencensus-opencensus-proto-0.2.0.jar:/pulsar/lib/io.perfmark-perfmark-api-0.19.0.jar:/pulsar/lib/io.prometheus-simpleclient-0.5.0.jar:/pulsar/lib/io.prometheus-simpleclient_caffeine-0.5.0.jar:/pulsar/lib/io.prome

theus-simpleclient_common-0.5.0.jar:/pulsar/lib/io.prometheus-simpleclient_hotspot-0.5.0.jar:/pulsar/lib/io.prometheus-simpleclient_httpserver-0.5.0.jar:/pulsar/lib/io.prometheus-simpleclient_jetty-0.5.0.jar:/pulsar/lib/io.prometheus-simpleclient_log4j2-0.5.0.jar:/pulsar/lib/io.prometheus-simpleclient_servlet-0.5.0.jar:/pulsar/lib/io.prometheus.jmx-collector-0.14.0.jar:/pulsar/lib/io.swagger-swagger-annotations-1.6.2.jar:/pulsar/lib/io.swagger-swagger-core-1.6.2.jar:/pulsar/lib/io.swagger-swagger-models-1.6.2.jar:/pulsar/lib/io.vertx-vertx-auth-common-3.9.8.jar:/pulsar/lib/io.vertx-vertx-bridge-common-3.9.8.jar:/pulsar/lib/io.vertx-vertx-core-3.9.8.jar:/pulsar/lib/io.vertx-vertx-web-3.9.8.jar:/pulsar/lib/io.vertx-vertx-web-common-3.9.8.jar:/pulsar/lib/jakarta.activation-jakarta.activation-api-1.2.2.jar:/pulsar/lib/jakarta.annotation-jakarta.annotation-api-1.3.5.jar:/pulsar/lib/jakarta.validation-jakarta.validation-api-2.0.2.jar:/pulsar/lib/jakarta.ws.rs-jakarta.ws.rs-api-2.1.6.jar:/

pulsar/lib/jakarta.xml.bind-jakarta.xml.bind-api-2.3.3.jar:/pulsar/lib/javax.annotation-javax.annotation-api-1.3.2.jar:/pulsar/lib/javax.servlet-javax.servlet-api-3.1.0.jar:/pulsar/lib/javax.validation-validation-api-1.1.0.Final.jar:/pulsar/lib/javax.websocket-javax.websocket-client-api-1.0.jar:/pulsar/lib/javax.ws.rs-javax.ws.rs-api-2.1.jar:/pulsar/lib/javax.xml.bind-jaxb-api-2.3.1.jar:/pulsar/lib/jline-jline-2.14.6.jar:/pulsar/lib/net.java.dev.jna-jna-4.2.0.jar:/pulsar/lib/net.jcip-jcip-annotations-1.0.jar:/pulsar/lib/net.jodah-typetools-0.5.0.jar:/pulsar/lib/org.apache.avro-avro-1.10.2.jar:/pulsar/lib/org.apache.avro-avro-protobuf-1.10.2.jar:/pulsar/lib/org.apache.bookkeeper-bookkeeper-common-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-bookkeeper-common-allocator-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-bookkeeper-proto-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-bookkeeper-server-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-bookkeeper-tools-framework-4.14.5.jar:/pulsar/lib

/org.apache.bookkeeper-circe-checksum-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-cpu-affinity-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-statelib-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-stream-storage-api-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-stream-storage-common-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-stream-storage-java-client-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-stream-storage-java-client-base-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-stream-storage-proto-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-stream-storage-server-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-stream-storage-service-api-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper-stream-storage-service-impl-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper.http-http-server-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper.http-vertx-http-server-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper.stats-bookkeeper-stats-api-4.14.5.jar:/pulsar/lib/org.apache.bookkeeper.stats-codahale-metrics-provider-4.14.5.jar

:/pulsar/lib/org.apache.bookkeeper.stats-prometheus-metrics-provider-4.14.5.jar:/pulsar/lib/org.apache.commons-commons-collections4-4.1.jar:/pulsar/lib/org.apache.commons-commons-compress-1.21.jar:/pulsar/lib/org.apache.commons-commons-lang3-3.11.jar:/pulsar/lib/org.apache.curator-curator-client-5.1.0.jar:/pulsar/lib/org.apache.curator-curator-framework-5.1.0.jar:/pulsar/lib/org.apache.curator-curator-recipes-5.1.0.jar:/pulsar/lib/org.apache.distributedlog-distributedlog-common-4.14.5.jar:/pulsar/lib/org.apache.distributedlog-distributedlog-core-4.14.5-tests.jar:/pulsar/lib/org.apache.distributedlog-distributedlog-core-4.14.5.jar:/pulsar/lib/org.apache.distributedlog-distributedlog-protocol-4.14.5.jar:/pulsar/lib/org.apache.httpcomponents-httpclient-4.5.13.jar:/pulsar/lib/org.apache.httpcomponents-httpcore-4.4.13.jar:/pulsar/lib/org.apache.logging.log4j-log4j-api-2.17.1.jar:/pulsar/lib/org.apache.logging.log4j-log4j-core-2.17.1.jar:/pulsar/lib/org.apache.logging.log4j-log4j-slf4j-im

pl-2.17.1.jar:/pulsar/lib/org.apache.logging.log4j-log4j-web-2.17.1.jar:/pulsar/lib/org.apache.pulsar-bouncy-castle-bc-2.9.3-pkg.jar:/pulsar/lib/org.apache.pulsar-managed-ledger-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-broker-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-broker-auth-sasl-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-broker-common-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-client-admin-api-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-client-admin-original-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-client-api-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-client-auth-sasl-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-client-messagecrypto-bc-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-client-original-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-client-tools-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-common-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-config-validation-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-functions-api-2.9.3.jar:/

pulsar/lib/org.apache.pulsar-pulsar-functions-instance-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-functions-local-runner-original-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-functions-proto-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-functions-runtime-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-functions-secrets-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-functions-utils-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-functions-worker-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-io-common-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-io-core-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-metadata-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-package-bookkeeper-storage-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-package-core-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-proxy-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-testclient-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-transaction-common-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-transaction-coord

inator-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-websocket-2.9.3.jar:/pulsar/lib/org.apache.pulsar-pulsar-zookeeper-utils-2.9.3.jar:/pulsar/lib/org.apache.pulsar-testmocks-2.9.3.jar:/pulsar/lib/org.apache.thrift-libthrift-0.14.2.jar:/pulsar/lib/org.apache.yetus-audience-annotations-0.5.0.jar:/pulsar/lib/org.apache.zookeeper-zookeeper-3.6.3.jar:/pulsar/lib/org.apache.zookeeper-zookeeper-jute-3.6.3.jar:/pulsar/lib/org.apache.zookeeper-zookeeper-prometheus-metrics-3.6.3.jar:/pulsar/lib/org.asynchttpclient-async-http-client-2.12.1.jar:/pulsar/lib/org.asynchttpclient-async-http-client-netty-utils-2.12.1.jar:/pulsar/lib/org.bitbucket.b_c-jose4j-0.7.6.jar:/pulsar/lib/org.bouncycastle-bcpkix-jdk15on-1.69.jar:/pulsar/lib/org.bouncycastle-bcprov-ext-jdk15on-1.69.jar:/pulsar/lib/org.bouncycastle-bcprov-jdk15on-1.69.jar:/pulsar/lib/org.bouncycastle-bcutil-jdk15on-1.69.jar:/pulsar/lib/org.checkerframework-checker-qual-3.5.0.jar:/pulsar/lib/org.conscrypt-conscrypt-openjdk-uber-2.5.2.jar:/pul

sar/lib/org.eclipse.jetty-jetty-alpn-conscrypt-server-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-alpn-server-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-client-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-continuation-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-http-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-io-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-proxy-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-security-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-server-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-servlet-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-servlets-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-util-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty-jetty-util-ajax-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty.websocket-javax-websocket-client-impl-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty.websocket-websocket-api-9

.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty.websocket-websocket-client-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty.websocket-websocket-common-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty.websocket-websocket-server-9.4.43.v20210629.jar:/pulsar/lib/org.eclipse.jetty.websocket-websocket-servlet-9.4.43.v20210629.jar:/pulsar/lib/org.glassfish.hk2-hk2-api-2.6.1.jar:/pulsar/lib/org.glassfish.hk2-hk2-locator-2.6.1.jar:/pulsar/lib/org.glassfish.hk2-hk2-utils-2.6.1.jar:/pulsar/lib/org.glassfish.hk2-osgi-resource-locator-1.0.3.jar:/pulsar/lib/org.glassfish.hk2.external-aopalliance-repackaged-2.6.1.jar:/pulsar/lib/org.glassfish.hk2.external-jakarta.inject-2.6.1.jar:/pulsar/lib/org.glassfish.jersey.containers-jersey-container-servlet-2.34.jar:/pulsar/lib/org.glassfish.jersey.containers-jersey-container-servlet-core-2.34.jar:/pulsar/lib/org.glassfish.jersey.core-jersey-client-2.34.jar:/pulsar/lib/org.glassfish.jersey.core-jersey-common-2.34.jar:/pulsar/lib/org.glassfish.jersey.

core-jersey-server-2.34.jar:/pulsar/lib/org.glassfish.jersey.ext-jersey-entity-filtering-2.34.jar:/pulsar/lib/org.glassfish.jersey.inject-jersey-hk2-2.34.jar:/pulsar/lib/org.glassfish.jersey.media-jersey-media-json-jackson-2.34.jar:/pulsar/lib/org.glassfish.jersey.media-jersey-media-multipart-2.34.jar:/pulsar/lib/org.hdrhistogram-HdrHistogram-2.1.9.jar:/pulsar/lib/org.javassist-javassist-3.25.0-GA.jar:/pulsar/lib/org.jctools-jctools-core-2.1.2.jar:/pulsar/lib/org.jetbrains-annotations-13.0.jar:/pulsar/lib/org.jetbrains.kotlin-kotlin-stdlib-1.4.32.jar:/pulsar/lib/org.jetbrains.kotlin-kotlin-stdlib-common-1.4.32.jar:/pulsar/lib/org.jetbrains.kotlin-kotlin-stdlib-jdk7-1.4.32.jar:/pulsar/lib/org.jetbrains.kotlin-kotlin-stdlib-jdk8-1.4.32.jar:/pulsar/lib/org.jvnet.mimepull-mimepull-1.9.13.jar:/pulsar/lib/org.reactivestreams-reactive-streams-1.0.3.jar:/pulsar/lib/org.rocksdb-rocksdbjni-6.10.2.jar:/pulsar/lib/org.slf4j-jcl-over-slf4j-1.7.32.jar:/pulsar/lib/org.slf4j-jul-to-slf4j-1.7.32.jar

:/pulsar/lib/org.slf4j-slf4j-api-1.7.32.jar:/pulsar/lib/org.xerial.snappy-snappy-java-1.1.7.jar:/pulsar/lib/org.yaml-snakeyaml-1.30.jar:

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.library.path=/usr/java/packages/lib:/usr/lib/x86_64-linux-gnu/jni:/lib/x86_64-linux-gnu:/usr/lib/x86_64-linux-gnu:/usr/lib/jni:/lib:/usr/lib

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.io.tmpdir=/tmp

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:java.compiler=<NA>

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:os.name=Linux

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:os.arch=amd64

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:os.version=5.4.108-1.el7.elrepo.x86_64

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:user.name=root

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:user.home=/root

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:user.dir=/pulsar

2022-10-19T03:13:39,556+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:os.memory.free=84MB

2022-10-19T03:13:39,557+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:os.memory.max=256MB

2022-10-19T03:13:39,557+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Client environment:os.memory.total=130MB

2022-10-19T03:13:39,560+0000 [main] INFO org.apache.zookeeper.ZooKeeper - Initiating client connection, connectString=pulsar-zookeeper sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@64ba3208

2022-10-19T03:13:39,564+0000 [main] INFO org.apache.zookeeper.common.X509Util - Setting -D jdk.tls.rejectClientInitiatedRenegotiation=true to disable client-initiated TLS renegotiation

2022-10-19T03:13:39,569+0000 [main] INFO org.apache.zookeeper.ClientCnxnSocket - jute.maxbuffer value is 1048575 Bytes

2022-10-19T03:13:39,576+0000 [main] INFO org.apache.zookeeper.ClientCnxn - zookeeper.request.timeout value is 0. feature enabled=false

[0.980s][info ][safepoint ] Application time: 0.1471041 seconds

[0.980s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.980s][info ][safepoint ] Leaving safepoint region

[0.980s][info ][safepoint ] Total time for which application threads were stopped: 0.0002095 seconds, Stopping threads took: 0.0000731 seconds

[0.980s][info ][safepoint ] Application time: 0.0005651 seconds

[0.980s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.981s][info ][safepoint ] Leaving safepoint region

[0.981s][info ][safepoint ] Total time for which application threads were stopped: 0.0001175 seconds, Stopping threads took: 0.0000560 seconds

[0.981s][info ][safepoint ] Application time: 0.0000288 seconds

[0.981s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.981s][info ][safepoint ] Leaving safepoint region

[0.981s][info ][safepoint ] Total time for which application threads were stopped: 0.0000818 seconds, Stopping threads took: 0.0000317 seconds

[0.981s][info ][safepoint ] Application time: 0.0000250 seconds

[0.981s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.981s][info ][safepoint ] Leaving safepoint region

[0.981s][info ][safepoint ] Total time for which application threads were stopped: 0.0000777 seconds, Stopping threads took: 0.0000289 seconds

[0.982s][info ][safepoint ] Application time: 0.0011262 seconds

[0.982s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.982s][info ][safepoint ] Leaving safepoint region

[0.982s][info ][safepoint ] Total time for which application threads were stopped: 0.0000609 seconds, Stopping threads took: 0.0000191 seconds

[0.982s][info ][safepoint ] Application time: 0.0004809 seconds

[0.982s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.983s][info ][safepoint ] Leaving safepoint region

[0.983s][info ][safepoint ] Total time for which application threads were stopped: 0.0001071 seconds, Stopping threads took: 0.0000459 seconds

[0.983s][info ][safepoint ] Application time: 0.0000284 seconds

[0.983s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.983s][info ][safepoint ] Leaving safepoint region

[0.983s][info ][safepoint ] Total time for which application threads were stopped: 0.0000785 seconds, Stopping threads took: 0.0000296 seconds

[0.983s][info ][safepoint ] Application time: 0.0007375 seconds

[0.983s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.983s][info ][safepoint ] Leaving safepoint region

[0.983s][info ][safepoint ] Total time for which application threads were stopped: 0.0000550 seconds, Stopping threads took: 0.0000202 seconds

[0.984s][info ][safepoint ] Application time: 0.0001611 seconds

[0.984s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.984s][info ][safepoint ] Leaving safepoint region

[0.984s][info ][safepoint ] Total time for which application threads were stopped: 0.0000881 seconds, Stopping threads took: 0.0000406 seconds

[0.984s][info ][safepoint ] Application time: 0.0002319 seconds

[0.984s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.984s][info ][safepoint ] Leaving safepoint region

[0.984s][info ][safepoint ] Total time for which application threads were stopped: 0.0000527 seconds, Stopping threads took: 0.0000235 seconds

[0.984s][info ][safepoint ] Application time: 0.0000232 seconds

[0.984s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.984s][info ][safepoint ] Leaving safepoint region

[0.984s][info ][safepoint ] Total time for which application threads were stopped: 0.0000626 seconds, Stopping threads took: 0.0000156 seconds

[0.984s][info ][safepoint ] Application time: 0.0000218 seconds

[0.984s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.984s][info ][safepoint ] Leaving safepoint region

[0.984s][info ][safepoint ] Total time for which application threads were stopped: 0.0000597 seconds, Stopping threads took: 0.0000077 seconds

[0.985s][info ][safepoint ] Application time: 0.0007656 seconds

[0.985s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.985s][info ][safepoint ] Leaving safepoint region

[0.985s][info ][safepoint ] Total time for which application threads were stopped: 0.0000751 seconds, Stopping threads took: 0.0000293 seconds

for does not have the form scheme:id:perm

[0.987s][info ][safepoint ] Application time: 0.0014715 seconds

[0.987s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.987s][info ][safepoint ] Leaving safepoint region

[0.987s][info ][safepoint ] Total time for which application threads were stopped: 0.0001013 seconds, Stopping threads took: 0.0000402 seconds

[0.988s][info ][safepoint ] Application time: 0.0011486 seconds

[0.988s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.988s][info ][safepoint ] Leaving safepoint region

[0.988s][info ][safepoint ] Total time for which application threads were stopped: 0.0000919 seconds, Stopping threads took: 0.0000263 seconds

[0.988s][info ][safepoint ] Application time: 0.0000236 seconds

[0.988s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.988s][info ][safepoint ] Leaving safepoint region

[0.988s][info ][safepoint ] Total time for which application threads were stopped: 0.0000662 seconds, Stopping threads took: 0.0000197 seconds

[0.988s][info ][safepoint ] Application time: 0.0000352 seconds

[0.988s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.988s][info ][safepoint ] Leaving safepoint region

[0.988s][info ][safepoint ] Total time for which application threads were stopped: 0.0000722 seconds, Stopping threads took: 0.0000292 seconds

[0.989s][info ][safepoint ] Application time: 0.0004601 seconds

[0.989s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.989s][info ][safepoint ] Leaving safepoint region

[0.989s][info ][safepoint ] Total time for which application threads were stopped: 0.0000562 seconds, Stopping threads took: 0.0000169 seconds

[0.989s][info ][safepoint ] Application time: 0.0000314 seconds

[0.989s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.989s][info ][safepoint ] Leaving safepoint region

[0.989s][info ][safepoint ] Total time for which application threads were stopped: 0.0000698 seconds, Stopping threads took: 0.0000314 seconds

[0.990s][info ][safepoint ] Application time: 0.0017314 seconds

[0.990s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.991s][info ][safepoint ] Leaving safepoint region

[0.991s][info ][safepoint ] Total time for which application threads were stopped: 0.0000554 seconds, Stopping threads took: 0.0000064 seconds

[0.991s][info ][safepoint ] Application time: 0.0009456 seconds

[0.992s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.992s][info ][safepoint ] Leaving safepoint region

[0.992s][info ][safepoint ] Total time for which application threads were stopped: 0.0000803 seconds, Stopping threads took: 0.0000241 seconds

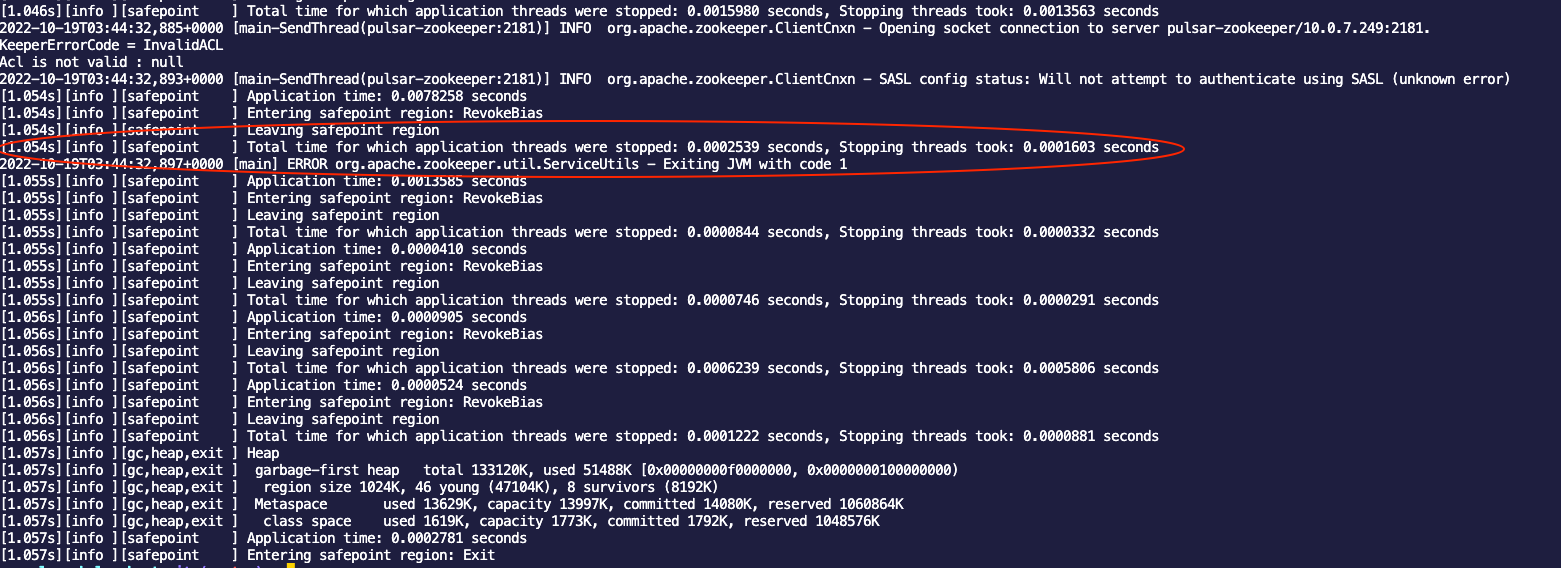

KeeperErrorCode = InvalidACL

Acl is not valid : null

[0.993s][info ][safepoint ] Application time: 0.0016703 seconds

[0.993s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.993s][info ][safepoint ] Leaving safepoint region

[0.993s][info ][safepoint ] Total time for which application threads were stopped: 0.0000910 seconds, Stopping threads took: 0.0000470 seconds

2022-10-19T03:13:39,590+0000 [main-SendThread(pulsar-zookeeper:2181)] INFO org.apache.zookeeper.ClientCnxn - Opening socket connection to server pulsar-zookeeper/10.0.7.238:2181.

2022-10-19T03:13:39,591+0000 [main-SendThread(pulsar-zookeeper:2181)] INFO org.apache.zookeeper.ClientCnxn - SASL config status: Will not attempt to authenticate using SASL (unknown error)

[0.994s][info ][safepoint ] Application time: 0.0005206 seconds

[0.994s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.994s][info ][safepoint ] Leaving safepoint region

[0.994s][info ][safepoint ] Total time for which application threads were stopped: 0.0000548 seconds, Stopping threads took: 0.0000179 seconds

2022-10-19T03:13:39,592+0000 [main] ERROR org.apache.zookeeper.util.ServiceUtils - Exiting JVM with code 1

[0.995s][info ][safepoint ] Application time: 0.0012804 seconds

[0.995s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.995s][info ][safepoint ] Leaving safepoint region

[0.995s][info ][safepoint ] Total time for which application threads were stopped: 0.0000540 seconds, Stopping threads took: 0.0000169 seconds

[0.995s][info ][safepoint ] Application time: 0.0000299 seconds

[0.995s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.995s][info ][safepoint ] Leaving safepoint region

[0.995s][info ][safepoint ] Total time for which application threads were stopped: 0.0000977 seconds, Stopping threads took: 0.0000727 seconds

[0.995s][info ][safepoint ] Application time: 0.0000513 seconds

[0.995s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.996s][info ][safepoint ] Leaving safepoint region

[0.996s][info ][safepoint ] Total time for which application threads were stopped: 0.0000553 seconds, Stopping threads took: 0.0000277 seconds

[0.996s][info ][safepoint ] Application time: 0.0000351 seconds

[0.996s][info ][safepoint ] Entering safepoint region: RevokeBias

[0.996s][info ][safepoint ] Leaving safepoint region

[0.996s][info ][safepoint ] Total time for which application threads were stopped: 0.0000429 seconds, Stopping threads took: 0.0000118 seconds

[0.996s][info ][gc,heap,exit ] Heap

[0.996s][info ][gc,heap,exit ] garbage-first heap total 133120K, used 52499K [0x00000000f0000000, 0x0000000100000000)

[0.996s][info ][gc,heap,exit ] region size 1024K, 47 young (48128K), 8 survivors (8192K)

[0.996s][info ][gc,heap,exit ] Metaspace used 13629K, capacity 14031K, committed 14208K, reserved 1060864K

[0.996s][info ][gc,heap,exit ] class space used 1624K, capacity 1806K, committed 1920K, reserved 1048576K

[0.996s][info ][safepoint ] Application time: 0.0002627 seconds

[0.996s][info ][safepoint ] Entering safepoint region: Exit
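
The line `for does not have the form scheme:id:perm` in the log above is the kind of message the ZooKeeper CLI prints when the token in the ACL position of a `create path [data] [acl]` command is not a valid ACL. One possible cause, offered here as an assumption and not as a confirmed diagnosis of the chart, is that a multi-word description reaches `create` without its quotes, so the second word lands in the ACL slot. The sketch below mimics that positional parsing in Python. The function `parse_create`, the `/my-prefix` path, and the description text are hypothetical and are not taken from the chart or from ZooKeeper's source.

```python
import shlex
from typing import Dict, Optional


def parse_create(command: str) -> Dict[str, Optional[str]]:
    """Mimic how `create path [data] [acl]` assigns positional arguments.

    Illustrative only: this is not ZooKeeper code, and flags such as -s or -e
    are ignored. The ACL check approximates the scheme:id:perm rule by
    requiring at least two distinct colons.
    """
    tokens = shlex.split(command)
    if len(tokens) < 2 or tokens[0] != "create":
        raise ValueError("expected: create path [data] [acl]")

    args = tokens[1:]
    path = args[0]
    data = args[1] if len(args) > 1 else None
    acl = args[2] if len(args) > 2 else None

    error = None
    if acl is not None:
        first, last = acl.find(":"), acl.rfind(":")
        if first == -1 or first == last:
            error = f"{acl} does not have the form scheme:id:perm"

    return {"path": path, "data": data, "acl": acl, "error": error}


# Quoted description: the whole phrase is the node data and no ACL is given.
quoted = parse_create("create /my-prefix 'created for pulsar cluster'")

# Unquoted description (hypothetical): "created" becomes the data
# and "for" is treated as the ACL, which fails validation.
unquoted = parse_create("create /my-prefix created for pulsar cluster")
```

If this is what happens in the init job, the quoting of the `create` arguments in the job's command would be the place to look.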

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@pulsar.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [pulsar-helm-chart] tianshimoyi closed issue #309: When I set metadataPrefix my cluster doesn't start properly

Posted by GitBox <gi...@apache.org>.

tianshimoyi closed issue #309: When I set metadataPrefix my cluster doesn't start properly

URL: https://github.com/apache/pulsar-helm-chart/issues/309

[GitHub] [pulsar-helm-chart] michaeljmarshall commented on issue #309: When I set metadataPrefix my cluster doesn't start properly

Posted by GitBox <gi...@apache.org>.

michaeljmarshall commented on issue #309:

URL: https://github.com/apache/pulsar-helm-chart/issues/309#issuecomment-1283354025

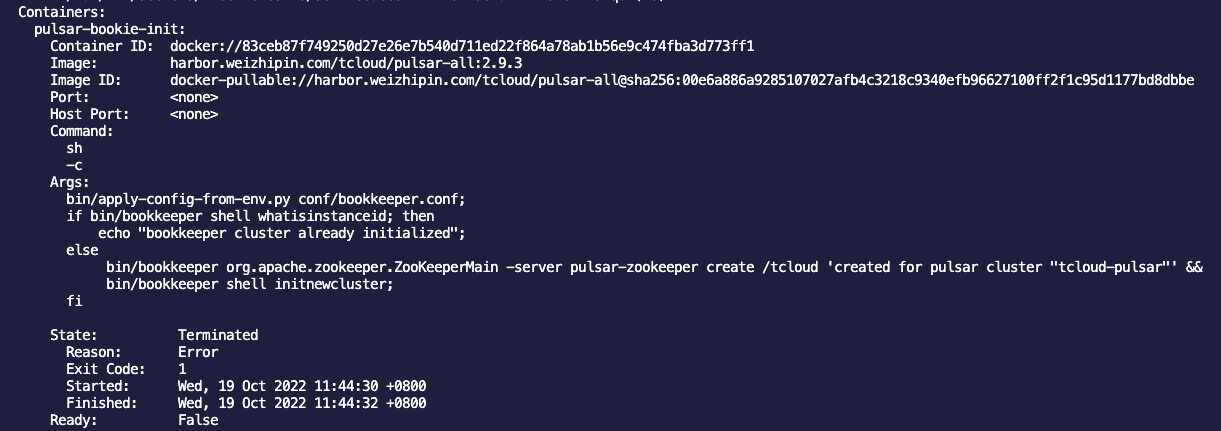

@tianshimoyi - it'd be helpful to see your logs for the `pulsar-bookie-init` and `pulsar-pulsar-init` jobs. Those are `Running` and they have to complete before the other pulsar components can start.
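
A minimal sketch of how those logs could be collected. The job names are the ones mentioned above. The `pulsar` namespace is an assumption taken from the posted values file, and the `init_job_log_cmds` helper is hypothetical. The script only prints the `kubectl logs` commands so they can be reviewed first.

```shell
#!/bin/sh
# Namespace is an assumption; override with NAMESPACE=... if yours differs.
NAMESPACE="${NAMESPACE:-pulsar}"

# Hypothetical helper: print one `kubectl logs` command per init job.
init_job_log_cmds() {
  for job in pulsar-bookie-init pulsar-pulsar-init; do
    # --all-containers=true asks for the logs of every container in the job's pod
    echo "kubectl logs job/${job} --namespace ${NAMESPACE} --all-containers=true"
  done
}

init_job_log_cmds
```

Piping the output to `sh` runs the commands against the current kubectl context.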

[GitHub] [pulsar-helm-chart] tianshimoyi commented on issue #309: When I set metadataPrefix my cluster doesn't start properly

Posted by GitBox <gi...@apache.org>.

tianshimoyi commented on issue #309:

URL: https://github.com/apache/pulsar-helm-chart/issues/309#issuecomment-1283463062

@michaeljmarshall Judging from the results, this step is not executing correctly:

```yaml
#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
#

{{- if or .Release.IsInstall .Values.initialize }}
{{- if .Values.components.bookkeeper }}
apiVersion: batch/v1
kind: Job
metadata:
  name: "{{ template "pulsar.fullname" . }}-{{ .Values.bookkeeper.component }}-init"
  namespace: {{ template "pulsar.namespace" . }}
  labels:
    {{- include "pulsar.standardLabels" . | nindent 4 }}
    component: "{{ .Values.bookkeeper.component }}-init"
spec:
# This feature was previously behind a feature gate for several Kubernetes versions and will default to true in 1.23 and beyond
# https://kubernetes.io/docs/reference/command-line-tools-reference/feature-gates/
{{- if .Values.job.ttl.enabled }}
  ttlSecondsAfterFinished: {{ .Values.job.ttl.secondsAfterFinished }}
{{- end }}
  template:
    spec:
    {{- if and .Values.rbac.enabled .Values.rbac.psp }}
      serviceAccountName: "{{ template "pulsar.fullname" . }}-{{ .Values.bookkeeper.component }}"
    {{- end }}
      initContainers:
      - name: wait-zookeeper-ready
        image: "{{ .Values.images.bookie.repository }}:{{ .Values.images.bookie.tag }}"
        imagePullPolicy: {{ .Values.images.bookie.pullPolicy }}
        command: ["sh", "-c"]
        args:
          - >-
            {{- if $zk:=.Values.pulsar_metadata.userProvidedZookeepers }}
            until bin/pulsar zookeeper-shell -server {{ $zk }} ls {{ or .Values.metadataPrefix "/" }}; do
              echo "user provided zookeepers {{ $zk }} are unreachable... check in 3 seconds ..." && sleep 3;
            done;
            {{ else }}
            until nslookup -ty=a {{ template "pulsar.fullname" . }}-{{ .Values.zookeeper.component }}-{{ add (.Values.zookeeper.replicaCount | int) -1 }}.{{ template "pulsar.fullname" . }}-{{ .Values.zookeeper.component }}.{{ template "pulsar.namespace" . }}; do
              sleep 3;
            done;
            {{- end}}
      containers:
      - name: "{{ template "pulsar.fullname" . }}-{{ .Values.bookkeeper.component }}-init"
        image: "{{ .Values.images.bookie.repository }}:{{ .Values.images.bookie.tag }}"
        imagePullPolicy: {{ .Values.images.bookie.pullPolicy }}
      {{- if .Values.bookkeeper.metadata.resources }}
        resources:
{{ toYaml .Values.bookkeeper.metadata.resources | indent 10 }}
      {{- end }}
        command: ["sh", "-c"]
        args:
          - >
            bin/apply-config-from-env.py conf/bookkeeper.conf;
            {{- include "pulsar.toolset.zookeeper.tls.settings" . | nindent 12 }}
            if bin/bookkeeper shell whatisinstanceid; then
                echo "bookkeeper cluster already initialized";
            else
                {{- if not (eq .Values.metadataPrefix "") }}
                bin/bookkeeper org.apache.zookeeper.ZooKeeperMain -server {{ template "pulsar.fullname" . }}-{{ .Values.zookeeper.component }} create {{ .Values.metadataPrefix }} 'created for pulsar cluster "{{ template "pulsar.cluster.name" . }}"' &&
                {{- end }}
                bin/bookkeeper shell initnewcluster;
            fi
            {{- if .Values.extraInitCommand }}
              {{ .Values.extraInitCommand }}
            {{- end }}
      {{- if and .Values.rbac.enabled .Values.rbac.psp }}
        securityContext:
          readOnlyRootFilesystem: false
      {{- end }}
        envFrom:
        - configMapRef:
            name: "{{ template "pulsar.fullname" . }}-{{ .Values.bookkeeper.component }}"
        volumeMounts:
        {{- include "pulsar.toolset.certs.volumeMounts" . | nindent 8 }}
      volumes:
      {{- include "pulsar.toolset.certs.volumes" . | nindent 6 }}
      restartPolicy: Never
{{- end }}
{{- end }}
```

[GitHub] [pulsar-helm-chart] michaeljmarshall commented on issue #309: When I set metadataPrefix my cluster doesn't start properly

Posted by GitBox <gi...@apache.org>.

michaeljmarshall commented on issue #309:

URL: https://github.com/apache/pulsar-helm-chart/issues/309#issuecomment-1283373605

I've seen that log many times without an issue. Here is one relevant point of divergence for the helm chart when `metadataPrefix` is enabled:

https://github.com/apache/pulsar-helm-chart/blob/58cd43fe8b11f04682ed2111293f6bf9a2fcc899/charts/pulsar/templates/bookkeeper-cluster-initialize.yaml#L72-L75
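
To make that divergence concrete, here is a rough sketch of the shell the bookkeeper init job ends up with, depending on whether `metadataPrefix` is set. It mirrors the `if not (eq .Values.metadataPrefix "")` branch of the template quoted in this thread and is not actual `helm template` output. The `render_bookie_init` helper, the `/pulsar` prefix, and the `pulsar-zookeeper` service name are assumptions for illustration only.

```shell
#!/bin/sh
# Hypothetical helper: print the init script body for a given metadataPrefix.
#   $1 = metadataPrefix value ("" when unset)
#   $2 = ZooKeeper service name (assumed; the chart derives it from the release name)
render_bookie_init() {
  prefix="$1"
  zk="$2"
  echo 'if bin/bookkeeper shell whatisinstanceid; then'
  echo '  echo "bookkeeper cluster already initialized";'
  echo 'else'
  # Only a non-empty prefix adds the extra ZooKeeper "create" step.
  if [ -n "$prefix" ]; then
    echo "  bin/bookkeeper org.apache.zookeeper.ZooKeeperMain -server ${zk} create ${prefix} 'created for pulsar cluster' &&"
  fi
  echo '  bin/bookkeeper shell initnewcluster;'
  echo 'fi'
}

# Without a prefix: initnewcluster runs directly.
render_bookie_init "" pulsar-zookeeper
# With a prefix: the znode is created first, and initnewcluster only runs if that succeeds.
render_bookie_init "/pulsar" pulsar-zookeeper
```

Because the two commands are joined with `&&`, a failure of the `create` step would skip `initnewcluster` altogether, which is one place to look when the job misbehaves only with a prefix set.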

[GitHub] [pulsar-helm-chart] michaeljmarshall commented on issue #309: When I set metadataPrefix my cluster doesn't start properly

Posted by GitBox <gi...@apache.org>.

michaeljmarshall commented on issue #309:

URL: https://github.com/apache/pulsar-helm-chart/issues/309#issuecomment-1285979726

Reopening this as I think this is likely a bug we'll want to fix in the bookkeeper init template.

--

This is an automated message from the Apache Git Service.