
Posted to commits@airflow.apache.org by GitBox <gi...@apache.org> on 2021/01/07 15:42:24 UTC

[GitHub] [airflow] Overbryd opened a new issue #13542: Task stuck in "scheduled" or "queued" state, pool has open slots

Overbryd opened a new issue #13542:

URL: https://github.com/apache/airflow/issues/13542

**Apache Airflow version**: `2.0.0`

**Kubernetes version (if you are using kubernetes)** (use `kubectl version`):

```

Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:50:19Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"17+", GitVersion:"v1.17.14-gke.1600", GitCommit:"7c407f5cc8632f9af5a2657f220963aa7f1c46e7", GitTreeState:"clean", BuildDate:"2020-12-07T09:22:27Z", GoVersion:"go1.13.15b4", Compiler:"gc", Platform:"linux/amd64"}

```

**Environment**:

- **Cloud provider or hardware configuration**: GKE

- **OS** (e.g. from /etc/os-release):

- **Kernel** (e.g. `uname -a`):

- **Install tools**:

- **Others**:

- Airflow metadata database is hooked up to a PostgreSQL instance

**What happened**:

* Airflow 2.0.0 running on the `KubernetesExecutor` has many tasks stuck in "scheduled" or "queued" state which never get resolved.

* The setup has a `default_pool` of 16 slots.

* Currently no slots are used (see Screenshot), but all slots are queued.

* No work is executed any more. The Executor or Scheduler is stuck.

* There are many many tasks stuck in "scheduled" state

* Tasks in "scheduled" state say `('Not scheduling since there are %s open slots in pool %s and require %s pool slots', 0, 'default_pool', 1)`

That is simply not true, because there is nothing running on the cluster and there are always 16 tasks stuck in "queued".

* There are many tasks stuck in "queued" state

* Tasks in "queued" state say `Task is in the 'running' state which is not a valid state for execution. The task must be cleared in order to be run.`

That is also not true. Nothing is running on the cluster and Airflow is likely just lying to itself. It seems the KubernetesExecutor and the scheduler easily go out of sync.
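The "0 open slots" message is consistent with a pool whose slots are all held by queued task instances. A minimal sketch of that arithmetic follows, assuming queued task instances occupy pool slots the same way running ones do. `PoolSnapshot` and `can_queue` are hypothetical names for illustration, not Airflow classes or functions.

```python
from dataclasses import dataclass


@dataclass
class PoolSnapshot:
    """Hypothetical snapshot of one pool; not an Airflow class."""

    total_slots: int
    running: int
    queued: int

    @property
    def open_slots(self) -> int:
        # Assumption: queued task instances hold slots just like running ones.
        return self.total_slots - self.running - self.queued


def can_queue(pool: PoolSnapshot, required_slots: int = 1) -> bool:
    """Return True if a scheduled task needing `required_slots` fits in the pool."""
    return pool.open_slots >= required_slots


# The state reported above: 16 slots, nothing running, 16 stuck in "queued".
stuck = PoolSnapshot(total_slots=16, running=0, queued=16)
print(stuck.open_slots, can_queue(stuck))
```

Under this model, nothing in "scheduled" can advance until at least one queued task instance leaves the queued state, even though nothing is actually running.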

**What you expected to happen**:

* Airflow should resolve scheduled or queued tasks by itself once the pool has available slots

* Airflow should use all available slots in the pool

* It should be possible to clear a couple hundred tasks and expect the system to stay consistent

**How to reproduce it**:

* Vanilla Airflow 2.0.0 with `KubernetesExecutor` on Python `3.7.9`

* `requirements.txt`

```

pyodbc==4.0.30

pycryptodomex==3.9.9

apache-airflow-providers-google==1.0.0

apache-airflow-providers-odbc==1.0.0

apache-airflow-providers-postgres==1.0.0

apache-airflow-providers-cncf-kubernetes==1.0.0

apache-airflow-providers-sftp==1.0.0

apache-airflow-providers-ssh==1.0.0

```

* The only reliable way to trigger that weird bug is to clear the task state of many tasks at once. (> 300 tasks)

**Anything else we need to know**:

Don't know, as always I am happy to help debug this problem.

The scheduler/executor seems to go out of sync with the state of the world and never gets back in sync again.

We actually planned to upscale our Airflow installation with many more simultaneous tasks. With these severe yet basic scheduling/queuing problems we cannot move forward at all.

Another strange, likely unrelated observation: the scheduler always uses 100% of the CPU, burning it. Even with no scheduled or queued tasks, it's always very busy.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] easontm edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

easontm edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-853117451

More data, if it can benefit:

Airflow version: 2.1.0

Kubernetes version: 1.18.9

I'm using Airflow `2.1.0` (upgraded from `1.10.15` in our development environment) and trying to use the `KubernetesExecutor`. I am running _extremely_ basic DAGs that exist solely to test. In `airflow.cfg` I set `delete_worker_pods = False` so that I could try and examine what's going on. As far as I can tell, my worker pods are being created, immediately considering themselves successful (not sure if they actually receive a task to complete), and terminating. I don't think the pods are "successful but not writing the success state to the DB" because one of my tasks is a simple `CREATE TABLE` statement on my data warehouse, and the table does not appear.

Here are the results of various logs:

If I run `kubectl get pods`, I see my `airflow-webserver` and `airflow-scheduler` pods, as well as some "Completed" worker pods.

```

NAME READY STATUS RESTARTS AGE

airflow-scheduler 1/1 Running 0 45m

airflow-webserver-1 2/2 Running 0 56m

airflow-webserver-2 2/2 Running 0 56m

airflow-webserver-3 2/2 Running 0 45m

airflow-webserver-4 2/2 Running 0 45m

airflow-webserver-5 2/2 Running 0 45m

<GENERATED_WORKER_POD_1> 0/1 Completed 0 15m

<GENERATED_WORKER_POD_2> 0/1 Completed 0 56m

<GENERATED_WORKER_POD_3> 0/1 Completed 0 45m

```

`kubectl logs <my_worker_pod>` - I can tell that the pod was briefly alive but did nothing, because the only output is a line that always appears as a side effect of the Docker image config. In contrast, if I get the logs from one of my functional `1.10.15` pods, I can see the task start:

```

[2021-06-02 13:33:01,358] {__init__.py:50} INFO - Using executor LocalExecutor

[2021-06-02 13:33:01,358] {dagbag.py:417} INFO - Filling up the DagBag from /usr/local/airflow/dags/my_dag.py

...

etc

```

`kubectl describe pod <my_worker_pod>` - the event log is quite tame:

```

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m21s default-scheduler Successfully assigned <GENERATED_POD_NAME> to <EC2_INSTANCE>

Normal Pulling 3m19s kubelet Pulling image <MY_AIRFLOW_2.1.0_DOCKER_IMAGE>

Normal Pulled 3m19s kubelet Successfully pulled image <MY_AIRFLOW_2.1.0_DOCKER_IMAGE>

Normal Created 3m19s kubelet Created container base

Normal Started 3m19s kubelet Started container base

```

`kubectl logs airflow-scheduler` - I've trimmed the logs significantly, but here are the statements mentioning a stuck task

```

[2021-06-02 14:49:42,742] {kubernetes_executor.py:369} INFO - Attempting to finish pod; pod_id: <GENERATED_POD_NAME>; state: None; annotations: {'dag_id': '<TEST_DAG>', 'task_id': '<TASK_ID>', 'execution_date': '2021-05-30T00:00:00+00:00', 'try_number': '1'}

[2021-06-02 14:49:42,748] {kubernetes_executor.py:546} INFO - Changing state of (TaskInstanceKey(dag_id='<TEST_DAG>', task_id='<TASK_ID>', execution_date=datetime.datetime(2021, 5, 30, 0, 0, tzinfo=tzlocal()), try_number=1), None, '<GENERATED_POD_NAME>', 'airflow', '1000200742') to None

[2021-06-02 14:49:42,751] {scheduler_job.py:1212} INFO - Executor reports execution of <TASK_ID> execution_date=2021-05-30 00:00:00+00:00 exited with status None for try_number 1

```

--


[GitHub] [airflow] Jorricks commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

Jorricks commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-924363913

> We're continuing to see an issue in `2.1.3` where DAG tasks appear to be running for many hours, even days, but are making no visible progress. This situation persists through scheduler restarts and is not resolved until we clear each stuck task manually twice. The first time we clear the task we see an odd rendering in the UI with a dark blue border that looks like this:

>

> <img alt="Screen Shot 2021-09-21 at 7 40 43 AM" width="637" src="https://user-images.githubusercontent.com/74351/134193377-7f826066-37cc-4f48-bb6f-b2b2224a6be7.png">

>

> The second time we clear the task the border changes to light green and it usually completes as expected.

>

> This is a pretty frustrating situation because the only known path to remediation is manual intervention. As previously stated we're deploying to ECS, with each Airflow process as its own ECS service in an ECS cluster; this generally works well except as noted here.

Hey @maxcountryman,

That sounds very annoying. Sorry to hear that.

I guess you are using the KubernetesExecutor?

Next time that happens could you do the following two things:

1. Send a USR2 kill command to the scheduler and list the output here. Example: `pkill -f -USR2 "airflow scheduler"`

2. Check the TaskInstance that is stuck and especially the `external executor id` attribute of the TaskInstance. You can find this on the detailed view of a task instance.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] jbkc85 commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

jbkc85 commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-896304736

@hafid-d / @trucnguyenlam - have you tried separating DAGs into separate pools? The more pools that are available the less 'lanes' you'll have that are piled up. Just beware that in doing this, you are also somewhat breaking the parallelism - but it does organize your DAGs much better over time!

--


[GitHub] [airflow] tienhung2812 commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

tienhung2812 commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-921858288

Hi, I just hit this issue again on `2.1.3`, but the behavior is quite different: all of the schedulers are stuck in a check loop on one DAG, and all other DAGs are stuck in `scheduled`. Restarting the scheduler fixes it.

```

[scheduled]> since the number of tasks running or queued from DAG abc_dag is >= to the DAG's task concurrency limit of 16

airflow_scheduler.2.bj0t349vgttt@ip-172-30-2-178 | [2021-09-17 14:29:07,511] {scheduler_job.py:410} INFO - DAG abc_dag has 16/16 running and queued tasks

airflow_scheduler.2.bj0t349vgttt@ip-172-30-2-178 | [2021-09-17 14:29:07,512] {scheduler_job.py:417} INFO - Not executing <TaskInstance: abc_dag.aa.aa_20.collector_v2 2021-09-17 11:30:00+00:00 [scheduled]> since the number of tasks running or queued from DAG abc_dag is >= to the DAG's task concurrency limit of 16

airflow_scheduler.2.bj0t349vgttt@ip-172-30-2-178 | [2021-09-17 14:29:07,512] {scheduler_job.py:410} INFO - DAG abc_dag has 16/16 running and queued tasks

airflow_scheduler.2.bj0t349vgttt@ip-172-30-2-178 | [2021-09-17 14:29:07,513] {scheduler_job.py:417} INFO - Not executing <TaskInstance: abc_dag.aa.ff_46.collector_v2 2021-09-17 11:30:00+00:00 [scheduled]> since the number of tasks running or queued from DAG abc_dag is >= to the DAG's task concurrency limit of 16

airflow_scheduler.2.bj0t349vgttt@ip-172-30-2-178 | [2021-09-17 14:29:07,513] {scheduler_job.py:410} INFO - DAG abc_dag has 16/16 running and queued tasks

airflow_scheduler.2.bj0t349vgttt@ip-172-30-2-178 | [2021-09-17 14:29:07,514] {scheduler_job.py:417} INFO - Not executing <TaskInstance: abc_dag.ff.gg_121.collector_v2 2021-09-17 11:30:00+00:00 [scheduled]> since the number of tasks running or queued from DAG abc_dag is >= to the DAG's task concurrency limit of 16

```
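The log lines above describe a per-DAG gate: a scheduled task is skipped while the number of running or queued tasks is at or above the DAG's task concurrency limit. A minimal sketch of that check follows; `pick_tasks_to_queue` is a hypothetical helper, not the scheduler's actual code.

```python
from typing import List


def pick_tasks_to_queue(
    scheduled: List[str], running_or_queued: int, concurrency_limit: int
) -> List[str]:
    """Return the scheduled tasks that still fit under the DAG's concurrency limit."""
    # Mirrors the log wording: nothing is picked once the count of running or
    # queued tasks is >= the DAG's task concurrency limit.
    free = max(concurrency_limit - running_or_queued, 0)
    return scheduled[:free]


scheduled = ["aa_20.collector_v2", "ff_46.collector_v2", "gg_121.collector_v2"]

# 16/16 running and queued, as in the log: no scheduled task can advance.
print(pick_tasks_to_queue(scheduled, running_or_queued=16, concurrency_limit=16))

# With two free places, only the first two scheduled tasks would be picked.
print(pick_tasks_to_queue(scheduled, running_or_queued=14, concurrency_limit=16))
```

If the 16 counted tasks never leave the running or queued state, this gate stays closed on every scheduler loop, which matches the repeating log output.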

The current system has about 150 or more active DAGs, and one of them, `abc_dag`, has more than 500 tasks.

I have already increased the scheduler config to handle more tasks:

- max_tis_per_query: 512

- max_dagruns_to_create_per_loop: 50

- max_dagruns_per_loop_to_schedule: 70

I have also added a scheduler health check, but it cannot detect this issue.

Environment:

- Celery Executor

- MySQL 8.0.23-14

- Airflow: 2.1.3

--


[GitHub] [airflow] pelaprat commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

pelaprat commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-821575562

We are experiencing this bug in our Airflow implementation as well. This is my first time participating in reporting a bug on the Apache Airflow project. Out of curiosity, who is responsible on the Apache Airflow project for prioritizing these issues?

--


[GitHub] [airflow] kaxil edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

kaxil edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-814068888

@lukas-at-harren -- Can you go to Airflow Webserver -> Admin -> Pools, find the row with your pool (`mssql_dwh`), and check the Used slots? Clicking the number in Used slots should take you to the TaskInstance page, which shows the task instances currently "running" in that pool.

It is possible that they are not actually running but somehow got in that state in DB. If you see 3 entries over here, please mark those tasks as success or failed, that should clear your pool.

--


[GitHub] [airflow] lukas-at-harren commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

lukas-at-harren commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-824575868

I can observe the same problem with version 2.0.2:

* Tasks fail, because a DAG/task has gone missing (we are using dynamically created DAGs, and they can go missing)

* The scheduler keeps those queued

* The pool gradually fills up with these queued tasks

* The whole operation stops, because of this behaviour

My current remedy:

* Manually remove those queued tasks

My desired solution:

When a DAG/task goes missing while it is queued, it should end up in a failed state.

--


[GitHub] [airflow] jbkc85 commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

jbkc85 commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-876052790

> > We're only making use of the `default_pool` and tasks are moving from `Queued` to `Running` there but aren't entering the queue despite being in a `Scheduled` state.

>

> We had a similar issue and increased the `default_pool` 10x, from 128 to 1000. This fixed it completely for us; however, we are using the Celery Executor.

> Did you already attempt something similar? Wondering if this helps in your case as well.

We just updated it. We will let you know in the morning - hopefully we see some improvement!

--


[GitHub] [airflow] easontm commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

easontm commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-853693757

I have resolved this issue for my environment! I'm not sure if this is the same "bug" as others, or a different issue with similar symptoms. But here we go

----

In my Airflow Docker image, the entrypoint is just a bootstrap script that accepts `webserver` or `scheduler` as arguments, and executes the appropriate command.

```

# Installing python libs, jars, etc

...

ENTRYPOINT ["/bootstrap.sh"]

```

`bootstrap.sh`:

```

if [ "$1" = "webserver" ]
then
    exec airflow webserver
fi

if [ "$1" = "scheduler" ]
then
    exec airflow scheduler
fi

```

Previous to #12766, the KubernetesExecutor fed the `airflow tasks run` (or `airflow run` in older versions) into the `command` section of pod YAML.

```

"containers": [

{

"args": [],

"command": [

"airflow",

"run",

"my_dag",

"my_task",

"2021-06-03T03:40:00+00:00",

"--local",

"--pool",

"default_pool",

"-sd",

"/usr/local/airflow/dags/my_dag.py"

],

```

This works fine for my setup -- the `command` just overrides my Docker's `ENTRYPOINT`, the pod executes its given command and terminates on completion. However, [this](https://github.com/apache/airflow/pull/12766/files#diff-681de8974a439f70dfa41705f5c1681ecce615fac6c4c715c1978d28d8f0da84L300) change moved the `airflow tasks run` issuance to the `args` section of the YAML.

```

'containers': [{'args': ['airflow',

'tasks',

'run',

'my_dag',

'my_task',

'2021-06-02T00:00:00+00:00',

'--local',

'--pool',

'default_pool',

'--subdir',

'/usr/local/airflow/dags/my_dag.py'],

'command': None,

```

These new args do not match either `webserver` or `scheduler` in `bootstrap.sh`, therefore the script ends cleanly and so does the pod. Here is my solution, added to the bottom of `bootstrap.sh`:

```

if [ "$1" = "airflow" ] && [ "$2" = "tasks" ] && [ "$3" = "run" ]
then
    exec "$@"
fi

```

Rather than just allow the pod to execute _whatever_ it's given in `args` (aka just running `exec "$@"` without a check), I decided to at least make sure the pod is being fed an `airflow tasks run` command.
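To make the failure mode concrete: in a Kubernetes pod spec, `command` replaces the image's `ENTRYPOINT`, while `args` is passed to the entrypoint as its arguments. The sketch below models the dispatch logic of `bootstrap.sh` before and after the fix. `old_dispatch` and `new_dispatch` are hypothetical names used only to illustrate the behaviour described above, and a return value of `None` stands for the script falling through and exiting cleanly.

```python
from typing import List, Optional


def old_dispatch(args: List[str]) -> Optional[List[str]]:
    """Model of the original bootstrap.sh: only two first arguments are handled."""
    if args[:1] == ["webserver"]:
        return ["airflow", "webserver"]
    if args[:1] == ["scheduler"]:
        return ["airflow", "scheduler"]
    return None  # nothing matched: the script ends and the pod shows "Completed"


def new_dispatch(args: List[str]) -> Optional[List[str]]:
    """Model of the fixed script: also passes through `airflow tasks run ...`."""
    handled = old_dispatch(args)
    if handled is not None:
        return handled
    if args[:3] == ["airflow", "tasks", "run"]:
        return list(args)  # equivalent of `exec "$@"`
    return None


# The args the executor now puts in the pod spec (shortened from the comment).
worker_args = ["airflow", "tasks", "run", "my_dag", "my_task",
               "2021-06-02T00:00:00+00:00", "--local"]

print(old_dispatch(worker_args))
print(new_dispatch(worker_args))
```

The first call returns `None`, which corresponds to the worker pods that start, do nothing, and report success; the second returns the full task command.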

--


[GitHub] [airflow] FurcyPin edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

FurcyPin edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-948858685

Hello, I think I ran into the same issue.

Here's all the relevant info I could find, hoping this will help to solve it.

* Stack: Cloud Composer 2

* Image version: composer-2.0.0-preview.3-airflow-2.1.2

* Executor: I'm not sure what Cloud Composer uses, but the airflow.cfg in the bucket says "CeleryExecutor"

- I am 100% sure that my DAG code does not contain any error (other similar tasks work fine).

- I do not use any pool.

- I do not have a trigger date in the future.

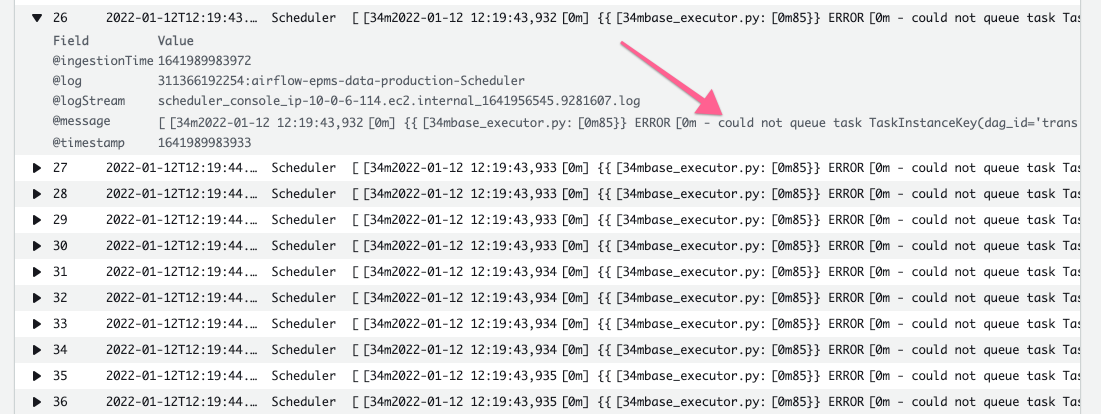

I tried clearing the queued tasks, they immediately appeared as queued again, and the scheduler logged this message (and nothing else useful):

```

could not queue task TaskInstanceKey(dag_id='my_dag', task_id='my_task', execution_date=datetime.datetime(2021, 10, 20, 0, 0, tzinfo=Timezone('UTC')), try_number=2)

```

I tried restarting the scheduler by destroying the pod, it did not change anything.

I tried destroying the Redis pod, but I did not have the necessary permission.

I was running a large number of dags at the same time (more than 20), so it might be linked to `max_dagruns_per_loop_to_schedule`, so I increased it to a number larger than my number of dags,

but the 3 tasks are still stuck in the queued state, even when I clear them.

I'm running out of ideas... luckily it's only a POC, so if someone has some suggestions to what I could try next, I would be happy to try them.

UPDATE : after a little more than 24 hours, the three tasks went into failed state by themselves. I cleared them and they ran with no problem...

--


[GitHub] [airflow] avenkatraman commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

avenkatraman commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-815159787

We're running into a similar problem on Airflow 1.10.14 with the Celery executor. We aren't running anything on k8s infra. We already have reboots of our scheduler every 5m and that didn't help. The problem did eventually seem to go away on its own but we have no idea how long it was like this.

180 slots in the default_pool, 0 used, 180 queued.

--


[GitHub] [airflow] hyungryuk edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

hyungryuk edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-950523692

I solved it!

I think my case is slightly different from this problem.

In my case, tasks were stuck in the scheduled state and never moved to the queued or running state.

I fixed this with the "**AIRFLOW__CORE__PARALLELISM**" env variable.

I tried changing my DAG's CONCURRENCY and MAX_ACTIVE_RUNS config values, but it didn't help.

Changing the **AIRFLOW__CORE__PARALLELISM** env variable helped!
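For readers unfamiliar with the variable name: Airflow reads configuration overrides from environment variables of the form `AIRFLOW__{SECTION}__{KEY}`. The sketch below builds such names and illustrates, as a simplified assumption, why raising a DAG-level limit alone may not help when a global limit is tighter. `airflow_env_var` and `effective_task_cap` are hypothetical helpers, and the numbers are made up for illustration.

```python
def airflow_env_var(section: str, key: str) -> str:
    """Build the environment variable name that overrides [section] key."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


def effective_task_cap(parallelism: int, pool_slots: int, dag_concurrency: int) -> int:
    """Simplified assumption: the tightest of the limits decides how many tasks run."""
    return min(parallelism, pool_slots, dag_concurrency)


print(airflow_env_var("core", "parallelism"))

# Hypothetical values: raising only the DAG-level limit changes nothing
# while the global parallelism stays at 8.
print(effective_task_cap(parallelism=8, pool_slots=128, dag_concurrency=16))
print(effective_task_cap(parallelism=8, pool_slots=128, dag_concurrency=64))
print(effective_task_cap(parallelism=64, pool_slots=128, dag_concurrency=64))
```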

--


[GitHub] [airflow] Jorricks commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

Jorricks commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-945386663

> I got the same issue here. In my case, it happened after I resized the Airflow worker pod's resources (smaller than before). I'm not sure, but it might be related to your worker pod resource size, I guess.

Couple questions to help you further:

- What version are you using?

- What state are your tasks in?

- What is your setup? Celery Redis etc?

- Is this only with TaskInstances that were already present before you scaled down or also with new TaskInstances?

--



[GitHub] [airflow] Overbryd edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

Overbryd edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-945785740

I will unsubscribe from this issue.

I have not encountered this issue again (Airflow 2.1.2).

But the following circumstances made this issue pop up again:

* An Airflow DAG/task is being scheduled (not queued yet)

* The Airflow DAG code is being updated, but it contains an error, so that the scheduler cannot load the code and the task that starts up exits immediately

* Now a rare condition takes place: A task is scheduled, but not yet executed.

* The same task will boot a container

* The same task will exit immediately, because the container loads the faulty code and crashes without bringing up the task at all.

* No failure is recorded on the task.

* Then the scheduler thinks the task is queued, but the task crashed immediately (using KubernetesExecutor)

* This leads to queued slots filling up over time.

* Once all queued slots of a pool (or the default pool) are filled with queued (but never executed, immediately crashing) tasks, the scheduler and the whole system get stuck.

How do I prevent this issue?

I simply make sure the DAG code is 100% clean and loads both in the scheduler and the tasks that start up (using KubernetesExecutor).

How do I recover from this issue?

First, I fix the issue that prevents the DAG code from loading. Then I restart the scheduler.
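One way to catch part of this before deployment is to check that every DAG file at least compiles. The sketch below uses only the standard library and catches syntax errors only; it does not catch import-time failures, for which Airflow's `DagBag` and its `import_errors` attribute would be the more complete check. `find_syntax_errors` is a hypothetical helper, and the two temporary files stand in for real DAG files.

```python
import tempfile
from pathlib import Path
from typing import Dict, Iterable


def find_syntax_errors(paths: Iterable[Path]) -> Dict[str, str]:
    """Compile each file without executing it and collect syntax errors by path."""
    errors: Dict[str, str] = {}
    for path in paths:
        try:
            compile(path.read_text(encoding="utf-8"), str(path), "exec")
        except SyntaxError as exc:
            errors[str(path)] = f"line {exc.lineno}: {exc.msg}"
    return errors


# Demonstration with two throwaway files standing in for DAG definitions.
with tempfile.TemporaryDirectory() as tmp:
    good = Path(tmp) / "good_dag.py"
    bad = Path(tmp) / "bad_dag.py"
    good.write_text("x = 1\n", encoding="utf-8")
    bad.write_text("def broken(:\n    pass\n", encoding="utf-8")
    report = find_syntax_errors([good, bad])

print(sorted(Path(p).name for p in report))
```

Running a check like this in CI, before the DAG code reaches the scheduler and the worker images, would reject the faulty file early instead of letting worker pods crash on it.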

--


[GitHub] [airflow] potiuk commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

potiuk commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-1060718792

> Hello, I found the same issue when I used version 2.2.4 (latest). Is there a workaround for this?

@haninp - this might be (and likely is, because MWAA, which plays a role here, has no 2.2.4 support yet) a completely different issue. It's not helpful to say "I also have a similar problem" without specifying details or logs.

As a "workaround" (or diagnosis), I suggest you follow this FAQ: https://airflow.apache.org/docs/apache-airflow/stable/faq.html?highlight=faq#why-is-task-not-getting-scheduled and double-check whether your problem is one of the configuration problems explained there.

If you find you still have a problem, then I invite you to describe it in detail in a separate issue (if this is something that is easily reproducible) or a GitHub Discussion (if you have a problem but are unsure how to reproduce it). Providing as many details as possible, such as your deployment details, logs, circumstances etc., is **crucial** to be able to help you. Just stating "I also have this problem" helps no-one (including yourself, because you **might** think you delegated the problem and it will be solved, but in fact this might be a completely different problem).

--


[GitHub] [airflow] ddcatgg edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

ddcatgg edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-983261840

I ran into the same issue, the scheduler's log:

```

[2021-12-01 11:45:11,850] {scheduler_job.py:1206} INFO - Executor reports execution of jira_pull_5_min.jira_pull execution_date=2021-12-01 03:40:00+00:00 exited with status success for try_number 1

[2021-12-01 11:46:26,870] {scheduler_job.py:941} INFO - 1 tasks up for execution:

<TaskInstance: data_etl_daily_jobs.dwd.dwd_ti_lgc_project 2021-11-29 21:06:00+00:00 [scheduled]>

[2021-12-01 11:46:26,871] {scheduler_job.py:975} INFO - Figuring out tasks to run in Pool(name=data_etl_daily_jobs_pool) with 10 open slots and 1 task instances ready to be queued

[2021-12-01 11:46:26,871] {scheduler_job.py:1002} INFO - DAG data_etl_daily_jobs has 0/16 running and queued tasks

[2021-12-01 11:46:26,871] {scheduler_job.py:1063} INFO - Setting the following tasks to queued state:

<TaskInstance: data_etl_daily_jobs.dwd.dwd_ti_lgc_project 2021-11-29 21:06:00+00:00 [scheduled]>

[2021-12-01 11:46:26,873] {scheduler_job.py:1105} INFO - Sending TaskInstanceKey(dag_id='data_etl_daily_jobs', task_id='dwd.dwd_ti_lgc_project', execution_date=datetime.datetime(2021, 11, 29, 21, 6, tzinfo=Timezone('UTC')), try_number=1) to executor with priority 2 and queue default

[2021-12-01 11:46:26,873] {base_executor.py:85} ERROR - could not queue task TaskInstanceKey(dag_id='data_etl_daily_jobs', task_id='dwd.dwd_ti_lgc_project', execution_date=datetime.datetime(2021, 11, 29, 21, 6, tzinfo=Timezone('UTC')), try_number=1)

```

Stuck task: dwd.dwd_ti_lgc_project

Restarting the executor does not help.

Version: v2.0.1

Git Version: .release:2.0.1+beb8af5ac6c438c29e2c186145115fb1334a3735
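
For anyone trying to interpret the `could not queue task` line: as far as I can tell from the 2.0.x source, `base_executor.py` logs that error when the task key is already present in the executor's queued or running bookkeeping. The toy class below is a simplified stand-in that reproduces that condition. The names `ToyExecutor` and `queue`, and the tuple used as a key, are hypothetical and are not Airflow's API.

```python
import logging

log = logging.getLogger("toy_executor")


class ToyExecutor:
    """Simplified stand-in for an executor's in-memory bookkeeping (not Airflow code)."""

    def __init__(self):
        self.queued_tasks = {}  # key -> command waiting to be handed to a worker
        self.running = set()    # keys the executor believes are still running

    def queue(self, key, command):
        """Accept a task unless the executor already tracks the same key."""
        if key not in self.queued_tasks and key not in self.running:
            self.queued_tasks[key] = command
            return True
        log.error("could not queue task %s", key)
        return False


executor = ToyExecutor()
key = ("data_etl_daily_jobs", "dwd.dwd_ti_lgc_project", "2021-11-29T21:06:00+00:00", 1)

first_attempt = executor.queue(key, ["airflow", "tasks", "run"])
# Simulate a worker picking the task up and then vanishing without reporting back.
executor.running.add(key)
del executor.queued_tasks[key]
second_attempt = executor.queue(key, ["airflow", "tasks", "run"])

print(first_attempt, second_attempt)
```

Under that assumption, the second send is rejected because the key is still tracked, which is one possible way for a task to stay stuck even though the pool reports open slots. It does not explain every case in this thread.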

[GitHub] [airflow] JavierLopezT edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

JavierLopezT edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-1007235056

[GitHub] [airflow] danmactough commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

danmactough commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-1032153919

> There is also 2.2.2 as of recently. Can you please upgrade and check it there @danmactough ?

Wow @potiuk I totally missed that update! Huge news! I'll check that out and see if it helps.

[GitHub] [airflow] Overbryd commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

Overbryd commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-774772238

@kaxil first of all, sorry for my late reply. I am still active on this issue, just so you know. I have been quite busy unfortunately.

You asked whether this might be git-sync related.

I can state that it is not git-sync related.

I observed this issue with local clusters (Dags loaded using Docker volume mounts) as well as with installations that pack the code into the container.

It's a bit hard to track down precisely, and I could only ever see it when using KubernetesExecutor.

If I ever observe it again, I'll try to reproduce it more precisely (currently that cluster is running a low volume of jobs regularly, therefore all is smooth sailing so far).

The only way I could trigger it manually once (as stated in my original post) was when I was clearing a large number of tasks at once.

[GitHub] [airflow] maxcountryman commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

maxcountryman commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-850527299

I'm on Airflow `2.1.0` deployed to ECS Fargate and am seeing similar issues: there are 128 slots, a small number used, but queued tasks that aren't ever run. Perhaps relatedly, I also see a task in a `none` state which I'm not able to track down from the UI. Cluster resources seem fine, using less than 50% CPU and memory.

<img width="1297" alt="Screen Shot 2021-05-28 at 9 15 32 AM" src="https://user-images.githubusercontent.com/74351/120013160-43980280-bf95-11eb-95ce-5c6196993f7b.png">

<img width="746" alt="Screen Shot 2021-05-28 at 9 14 58 AM" src="https://user-images.githubusercontent.com/74351/120013097-2fec9c00-bf95-11eb-9e1a-bd062b8fba0c.png">

[GitHub] [airflow] danmactough commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

danmactough commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-1030645181

> @val2k I may know why increasing the `try_number` didn't work. The DAG file that @danmactough posted is missing `session.commit()` after `session.merge()`. After the addition, the DAG file worked as intended for me.

@jpkoponen At least in v2.0.2 (which is the only v2.x version available on AWS MWAA), there's no reason to call `session.commit()` when using the `@provide_session` decorator. It [creates the session](https://github.com/apache/airflow/blob/2.0.2/airflow/utils/session.py#L69), and [calls `session.commit()` for you](https://github.com/apache/airflow/blob/2.0.2/airflow/utils/session.py#L32).
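
To make the behaviour described above concrete, here is a minimal sketch of a decorator in the same spirit. It is not the Airflow implementation: `provide_session_sketch`, `FakeSession`, and `bump_try_number` are hypothetical names, the session only records calls, and this version handles the `session` keyword argument only.

```python
import functools


class FakeSession:
    """Hypothetical stand-in for a SQLAlchemy session; it only records calls."""

    def __init__(self):
        self.calls = []

    def merge(self, obj):
        self.calls.append(("merge", obj))

    def commit(self):
        self.calls.append(("commit",))

    def rollback(self):
        self.calls.append(("rollback",))

    def close(self):
        self.calls.append(("close",))


created_sessions = []


def provide_session_sketch(func):
    """Inject a session when the caller did not pass one, then commit or roll back."""

    @functools.wraps(func)
    def wrapper(*args, session=None, **kwargs):
        if session is not None:
            # The caller owns the session, so the caller is responsible for committing.
            return func(*args, session=session, **kwargs)
        session = FakeSession()
        created_sessions.append(session)
        try:
            result = func(*args, session=session, **kwargs)
            session.commit()
            return result
        except Exception:
            session.rollback()
            raise
        finally:
            session.close()

    return wrapper


@provide_session_sketch
def bump_try_number(task, session=None):
    task["try_number"] += 1
    session.merge(task)
    return task["try_number"]


new_try = bump_try_number({"task_id": "example", "try_number": 1})
print(new_try, [call[0] for call in created_sessions[0].calls])
```

In this sketch the commit only happens when the decorator created the session itself. If a session is passed in explicitly, nothing is committed on the caller's behalf, which may be worth checking if an explicit `session.commit()` appears to make a difference.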

[GitHub] [airflow] ashb commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

ashb commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-841375186

Once 2.1 is out/in RC (next week) I will take a look at this issue

[GitHub] [airflow] lukas-at-harren commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

lukas-at-harren commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-824894652

@ashb The setup works like this (I am @overbryd, just a different account.)

* There are dynamic DAGs that are part of the repository, which gets pulled in regularly by a side-car container on the scheduler.

* The worker has an init container that pulls the latest state of the repository.

* Secondly I have DAGs that are built (very quickly) based on database query results.

This scenario opens up the following edge cases/problems:

* A DAG can go missing after it has been queued, when its entry is removed from the database. Rare, but it happens.

* A DAG can go missing after it has been queued, when a git push that changes the DAGs happens before the worker has started.

[GitHub] [airflow] nitinpandey-154 commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

nitinpandey-154 commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-849396016

Any solution or workaround to fix this? This makes the scheduler very unreliable and the tasks are stuck in the queued state.

[GitHub] [airflow] minnieshi edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

minnieshi edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-821356514

@SalmonTimo I have access to the workers, as well as the DAG logs folder (which is saved in the file share PVC and can be viewed via Azure Storage Explorer).

P.S. The environment was set up new, and a few tables, listed below, were migrated from the old environment. While debugging this stuck situation, the tables 'dag', 'task_*', and 'celery_*' were truncated.

```

- celery_taskmeta

- dag

- dag_run

- log

- task_fail

- task_instance

- task_reschedule

- connections

```

How would you like me to fetch the log? Just the DAG run log?

Update: the one I can view via Storage Explorer is empty, since the task was not executed. I will check the worker itself and update this comment.

There are no errors:

```

$ kubectl -n airflow get pods

NAME                                              READY   STATUS    RESTARTS   AGE
airflow-scheduler-55549d985f-dbw7b                1/1     Running   0          134m
airflow-web-6c8467fd74-9zkj5                      1/1     Running   0          134m
airflow-worker-0                                  1/1     Running   0          115m
airflow-worker-1                                  1/1     Running   0          125m
nginx-ingress-nginx-controller-6d5794678d-8zdsl   1/1     Running   0          5h16m
nginx-ingress-nginx-controller-6d5794678d-fzhwb   1/1     Running   0          5h15m
telegraf-54cd7f8578-fgdrn                         1/1     Running   0          5h16m

$ kubectl -n airflow logs airflow-worker-0

*** installing global extra pip packages...

Collecting flask_oauthlib==0.9.6

Downloading Flask_OAuthlib-0.9.6-py3-none-any.whl (40 kB)

Collecting cachelib

Downloading cachelib-0.1.1-py3-none-any.whl (13 kB)

Collecting requests-oauthlib<1.2.0,>=0.6.2

Downloading requests_oauthlib-1.1.0-py2.py3-none-any.whl (21 kB)

Requirement already satisfied: Flask in /home/airflow/.local/lib/python3.7/site-packages (from flask_oauthlib==0.9.6) (1.1.2)

Collecting oauthlib!=2.0.3,!=2.0.4,!=2.0.5,<3.0.0,>=1.1.2

Downloading oauthlib-2.1.0-py2.py3-none-any.whl (121 kB)

Requirement already satisfied: requests>=2.0.0 in /home/airflow/.local/lib/python3.7/site-packages (from requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (2.23.0)

Requirement already satisfied: click>=5.1 in /home/airflow/.local/lib/python3.7/site-packages (from Flask->flask_oauthlib==0.9.6) (6.7)

Requirement already satisfied: Werkzeug>=0.15 in /home/airflow/.local/lib/python3.7/site-packages (from Flask->flask_oauthlib==0.9.6) (0.16.1)

Requirement already satisfied: itsdangerous>=0.24 in /home/airflow/.local/lib/python3.7/site-packages (from Flask->flask_oauthlib==0.9.6) (1.1.0)

Requirement already satisfied: Jinja2>=2.10.1 in /home/airflow/.local/lib/python3.7/site-packages (from Flask->flask_oauthlib==0.9.6) (2.11.2)

Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /home/airflow/.local/lib/python3.7/site-packages (from requests>=2.0.0->requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (1.25.11)

Requirement already satisfied: certifi>=2017.4.17 in /home/airflow/.local/lib/python3.7/site-packages (from requests>=2.0.0->requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (2020.11.8)

Requirement already satisfied: idna<3,>=2.5 in /home/airflow/.local/lib/python3.7/site-packages (from requests>=2.0.0->requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (2.8)

Requirement already satisfied: chardet<4,>=3.0.2 in /home/airflow/.local/lib/python3.7/site-packages (from requests>=2.0.0->requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (3.0.4)

Requirement already satisfied: MarkupSafe>=0.23 in /home/airflow/.local/lib/python3.7/site-packages (from Jinja2>=2.10.1->Flask->flask_oauthlib==0.9.6) (1.1.1)

Installing collected packages: cachelib, oauthlib, requests-oauthlib, flask-oauthlib

Attempting uninstall: oauthlib

Found existing installation: oauthlib 3.1.0

Uninstalling oauthlib-3.1.0:

Successfully uninstalled oauthlib-3.1.0

Attempting uninstall: requests-oauthlib

Found existing installation: requests-oauthlib 1.3.0

Uninstalling requests-oauthlib-1.3.0:

Successfully uninstalled requests-oauthlib-1.3.0

Successfully installed cachelib-0.1.1 flask-oauthlib-0.9.6 oauthlib-2.1.0 requests-oauthlib-1.1.0

WARNING: You are using pip version 20.2.4; however, version 21.0.1 is available.

You should consider upgrading via the '/usr/local/bin/python -m pip install --upgrade pip' command.

*** running worker...

[2021-04-16 16:03:50,914] {settings.py:233} DEBUG - Setting up DB connection pool (PID 7)

[2021-04-16 16:03:50,914] {settings.py:300} DEBUG - settings.prepare_engine_args(): Using pool settings. pool_size=5, max_overflow=10, pool_recycle=1800, pid=7

[2021-04-16 16:03:51,233] {sentry.py:179} DEBUG - Could not configure Sentry: No module named 'blinker', using DummySentry instead.

[2021-04-16 16:03:51,286] {__init__.py:45} DEBUG - Cannot import due to doesn't look like a module path

[2021-04-16 16:03:51,685] {cli_action_loggers.py:42} DEBUG - Adding <function default_action_log at 0x7f7cda6afd40> to pre execution callback

The 'worker' command is deprecated and removed in Airflow 2.0, please use 'celery worker' instead

[2021-04-16 16:03:52,608] {cli_action_loggers.py:68} DEBUG - Calling callbacks: [<function default_action_log at 0x7f7cda6afd40>]

[2021-04-16 16:03:53,288: DEBUG/MainProcess] | Worker: Preparing bootsteps.

[2021-04-16 16:03:53,293: DEBUG/MainProcess] | Worker: Building graph...

[2021-04-16 16:03:53,293: DEBUG/MainProcess] | Worker: New boot order: {Timer, Hub, Pool, Autoscaler, StateDB, Beat, Consumer}

[2021-04-16 16:03:53,316: DEBUG/MainProcess] | Consumer: Preparing bootsteps.

[2021-04-16 16:03:53,317: DEBUG/MainProcess] | Consumer: Building graph...

[2021-04-16 16:03:53,393: DEBUG/MainProcess] | Consumer: New boot order: {Connection, Events, Mingle, Gossip, Agent, Tasks, Control, Heart, event loop}

[2021-04-16 16:03:53,427: DEBUG/MainProcess] | Worker: Starting Hub

[2021-04-16 16:03:53,427: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:53,427: DEBUG/MainProcess] | Worker: Starting Pool

[2021-04-16 16:03:53,749] {settings.py:233} DEBUG - Setting up DB connection pool (PID 17)

[2021-04-16 16:03:53,750] {settings.py:300} DEBUG - settings.prepare_engine_args(): Using pool settings. pool_size=5, max_overflow=10, pool_recycle=1800, pid=17

[2021-04-16 16:03:53,951] {sentry.py:179} DEBUG - Could not configure Sentry: No module named 'blinker', using DummySentry instead.

[2021-04-16 16:03:53,998] {__init__.py:45} DEBUG - Cannot import due to doesn't look like a module path

[2021-04-16 16:03:54,013: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:54,013: DEBUG/MainProcess] | Worker: Starting Autoscaler

[2021-04-16 16:03:54,014: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:54,014: DEBUG/MainProcess] | Worker: Starting Consumer

[2021-04-16 16:03:54,014: DEBUG/MainProcess] | Consumer: Starting Connection

[2021-04-16 16:03:54,081: INFO/MainProcess] Connected to redis://redis:**@redis-airflow-osweu-dev.redis.cache.windows.net:6379//

[2021-04-16 16:03:54,081: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:54,081: DEBUG/MainProcess] | Consumer: Starting Events

[2021-04-16 16:03:54,101: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:54,101: DEBUG/MainProcess] | Consumer: Starting Mingle

[2021-04-16 16:03:54,102: INFO/MainProcess] mingle: searching for neighbors

[2021-04-16 16:03:54,328] {cli_action_loggers.py:42} DEBUG - Adding <function default_action_log at 0x7fb8a5c12290> to pre execution callback

[2021-04-16 16:03:54,603] {cli_action_loggers.py:68} DEBUG - Calling callbacks: [<function default_action_log at 0x7fb8a5c12290>]

Starting flask

* Serving Flask app "airflow.bin.cli" (lazy loading)

* Environment: production

WARNING: This is a development server. Do not use it in a production deployment.

Use a production WSGI server instead.

* Debug mode: off

[2021-04-16 16:03:54,954] {_internal.py:122} INFO - * Running on http://0.0.0.0:8793/ (Press CTRL+C to quit)

[2021-04-16 16:03:55,194: INFO/MainProcess] mingle: sync with 1 nodes

[2021-04-16 16:03:55,195: DEBUG/MainProcess] mingle: processing reply from celery@airflow-worker-1

[2021-04-16 16:03:55,195: INFO/MainProcess] mingle: sync complete

[2021-04-16 16:03:55,195: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:55,195: DEBUG/MainProcess] | Consumer: Starting Gossip

[2021-04-16 16:03:55,229: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:55,230: DEBUG/MainProcess] | Consumer: Starting Tasks

[2021-04-16 16:03:55,240: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:55,240: DEBUG/MainProcess] | Consumer: Starting Control

[2021-04-16 16:03:55,266: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:55,267: DEBUG/MainProcess] | Consumer: Starting Heart

[2021-04-16 16:03:55,280: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 16:03:55,280: DEBUG/MainProcess] | Consumer: Starting event loop

[2021-04-16 16:03:55,281: DEBUG/MainProcess] | Worker: Hub.register Autoscaler...

[2021-04-16 16:03:55,281: DEBUG/MainProcess] | Worker: Hub.register Pool...

[2021-04-16 16:03:55,281: INFO/MainProcess] celery@airflow-worker-0 ready.

[2021-04-16 16:03:55,281: DEBUG/MainProcess] basic.qos: prefetch_count->8

[2021-04-16 16:03:56,928: DEBUG/MainProcess] celery@airflow-worker-1 joined the party

$ kubectl -n airflow logs airflow-worker-1

*** installing global extra pip packages...

Collecting flask_oauthlib==0.9.6

Downloading Flask_OAuthlib-0.9.6-py3-none-any.whl (40 kB)

Collecting requests-oauthlib<1.2.0,>=0.6.2

Downloading requests_oauthlib-1.1.0-py2.py3-none-any.whl (21 kB)

Collecting oauthlib!=2.0.3,!=2.0.4,!=2.0.5,<3.0.0,>=1.1.2

Downloading oauthlib-2.1.0-py2.py3-none-any.whl (121 kB)

Collecting cachelib

Downloading cachelib-0.1.1-py3-none-any.whl (13 kB)

Requirement already satisfied: Flask in /home/airflow/.local/lib/python3.7/site-packages (from flask_oauthlib==0.9.6) (1.1.2)

Requirement already satisfied: requests>=2.0.0 in /home/airflow/.local/lib/python3.7/site-packages (from requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (2.23.0)

Requirement already satisfied: itsdangerous>=0.24 in /home/airflow/.local/lib/python3.7/site-packages (from Flask->flask_oauthlib==0.9.6) (1.1.0)

Requirement already satisfied: Jinja2>=2.10.1 in /home/airflow/.local/lib/python3.7/site-packages (from Flask->flask_oauthlib==0.9.6) (2.11.2)

Requirement already satisfied: Werkzeug>=0.15 in /home/airflow/.local/lib/python3.7/site-packages (from Flask->flask_oauthlib==0.9.6) (0.16.1)

Requirement already satisfied: click>=5.1 in /home/airflow/.local/lib/python3.7/site-packages (from Flask->flask_oauthlib==0.9.6) (6.7)

Requirement already satisfied: certifi>=2017.4.17 in /home/airflow/.local/lib/python3.7/site-packages (from requests>=2.0.0->requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (2020.11.8)

Requirement already satisfied: idna<3,>=2.5 in /home/airflow/.local/lib/python3.7/site-packages (from requests>=2.0.0->requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (2.8)

Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /home/airflow/.local/lib/python3.7/site-packages (from requests>=2.0.0->requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (1.25.11)

Requirement already satisfied: chardet<4,>=3.0.2 in /home/airflow/.local/lib/python3.7/site-packages (from requests>=2.0.0->requests-oauthlib<1.2.0,>=0.6.2->flask_oauthlib==0.9.6) (3.0.4)

Requirement already satisfied: MarkupSafe>=0.23 in /home/airflow/.local/lib/python3.7/site-packages (from Jinja2>=2.10.1->Flask->flask_oauthlib==0.9.6) (1.1.1)

Installing collected packages: oauthlib, requests-oauthlib, cachelib, flask-oauthlib

Attempting uninstall: oauthlib

Found existing installation: oauthlib 3.1.0

Uninstalling oauthlib-3.1.0:

Successfully uninstalled oauthlib-3.1.0

Attempting uninstall: requests-oauthlib

Found existing installation: requests-oauthlib 1.3.0

Uninstalling requests-oauthlib-1.3.0:

Successfully uninstalled requests-oauthlib-1.3.0

Successfully installed cachelib-0.1.1 flask-oauthlib-0.9.6 oauthlib-2.1.0 requests-oauthlib-1.1.0

WARNING: You are using pip version 20.2.4; however, version 21.0.1 is available.

You should consider upgrading via the '/usr/local/bin/python -m pip install --upgrade pip' command.

*** running worker...

[2021-04-16 15:53:39,557] {settings.py:233} DEBUG - Setting up DB connection pool (PID 6)

[2021-04-16 15:53:39,558] {settings.py:300} DEBUG - settings.prepare_engine_args(): Using pool settings. pool_size=5, max_overflow=10, pool_recycle=1800, pid=6

[2021-04-16 15:53:39,926] {sentry.py:179} DEBUG - Could not configure Sentry: No module named 'blinker', using DummySentry instead.

[2021-04-16 15:53:39,982] {__init__.py:45} DEBUG - Cannot import due to doesn't look like a module path

[2021-04-16 15:53:40,446] {cli_action_loggers.py:42} DEBUG - Adding <function default_action_log at 0x7f424221bd40> to pre execution callback

The 'worker' command is deprecated and removed in Airflow 2.0, please use 'celery worker' instead

[2021-04-16 15:53:41,458] {cli_action_loggers.py:68} DEBUG - Calling callbacks: [<function default_action_log at 0x7f424221bd40>]

[2021-04-16 15:53:42,387: DEBUG/MainProcess] | Worker: Preparing bootsteps.

[2021-04-16 15:53:42,393: DEBUG/MainProcess] | Worker: Building graph...

[2021-04-16 15:53:42,393: DEBUG/MainProcess] | Worker: New boot order: {StateDB, Beat, Timer, Hub, Pool, Autoscaler, Consumer}

[2021-04-16 15:53:42,418: DEBUG/MainProcess] | Consumer: Preparing bootsteps.

[2021-04-16 15:53:42,419: DEBUG/MainProcess] | Consumer: Building graph...

[2021-04-16 15:53:42,517: DEBUG/MainProcess] | Consumer: New boot order: {Connection, Events, Mingle, Gossip, Heart, Tasks, Control, Agent, event loop}

[2021-04-16 15:53:42,556: DEBUG/MainProcess] | Worker: Starting Hub

[2021-04-16 15:53:42,556: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:42,556: DEBUG/MainProcess] | Worker: Starting Pool

[2021-04-16 15:53:42,904] {settings.py:233} DEBUG - Setting up DB connection pool (PID 16)

[2021-04-16 15:53:42,905] {settings.py:300} DEBUG - settings.prepare_engine_args(): Using pool settings. pool_size=5, max_overflow=10, pool_recycle=1800, pid=16

[2021-04-16 15:53:43,085] {sentry.py:179} DEBUG - Could not configure Sentry: No module named 'blinker', using DummySentry instead.

[2021-04-16 15:53:43,144] {__init__.py:45} DEBUG - Cannot import due to doesn't look like a module path

[2021-04-16 15:53:43,172: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:43,172: DEBUG/MainProcess] | Worker: Starting Autoscaler

[2021-04-16 15:53:43,172: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:43,172: DEBUG/MainProcess] | Worker: Starting Consumer

[2021-04-16 15:53:43,173: DEBUG/MainProcess] | Consumer: Starting Connection

[2021-04-16 15:53:43,253: INFO/MainProcess] Connected to redis://redis:**@redis-airflow-osweu-dev.redis.cache.windows.net:6379//

[2021-04-16 15:53:43,253: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:43,253: DEBUG/MainProcess] | Consumer: Starting Events

[2021-04-16 15:53:43,300: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:43,300: DEBUG/MainProcess] | Consumer: Starting Mingle

[2021-04-16 15:53:43,300: INFO/MainProcess] mingle: searching for neighbors

[2021-04-16 15:53:43,533] {cli_action_loggers.py:42} DEBUG - Adding <function default_action_log at 0x7fe2c52ec290> to pre execution callback

[2021-04-16 15:53:43,860] {cli_action_loggers.py:68} DEBUG - Calling callbacks: [<function default_action_log at 0x7fe2c52ec290>]

Starting flask

* Serving Flask app "airflow.bin.cli" (lazy loading)

* Environment: production

WARNING: This is a development server. Do not use it in a production deployment.

Use a production WSGI server instead.

* Debug mode: off

[2021-04-16 15:53:44,132] {_internal.py:122} INFO - * Running on http://0.0.0.0:8793/ (Press CTRL+C to quit)

[2021-04-16 15:53:44,382: INFO/MainProcess] mingle: sync with 1 nodes

[2021-04-16 15:53:44,383: DEBUG/MainProcess] mingle: processing reply from celery@airflow-worker-0

[2021-04-16 15:53:44,383: INFO/MainProcess] mingle: sync complete

[2021-04-16 15:53:44,383: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:44,383: DEBUG/MainProcess] | Consumer: Starting Gossip

[2021-04-16 15:53:44,413: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:44,414: DEBUG/MainProcess] | Consumer: Starting Heart

[2021-04-16 15:53:44,431: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:44,431: DEBUG/MainProcess] | Consumer: Starting Tasks

[2021-04-16 15:53:44,439: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:44,440: DEBUG/MainProcess] | Consumer: Starting Control

[2021-04-16 15:53:44,473: DEBUG/MainProcess] ^-- substep ok

[2021-04-16 15:53:44,473: DEBUG/MainProcess] | Consumer: Starting event loop

[2021-04-16 15:53:44,473: DEBUG/MainProcess] | Worker: Hub.register Autoscaler...

[2021-04-16 15:53:44,473: DEBUG/MainProcess] | Worker: Hub.register Pool...

[2021-04-16 15:53:44,474: INFO/MainProcess] celery@airflow-worker-1 ready.

[2021-04-16 15:53:44,474: DEBUG/MainProcess] basic.qos: prefetch_count->8

[2021-04-16 15:53:45,888: DEBUG/MainProcess] celery@airflow-worker-0 joined the party

[2021-04-16 16:03:34,318: DEBUG/MainProcess] celery@airflow-worker-0 left

[2021-04-16 16:03:54,170: DEBUG/MainProcess] pidbox received method hello(from_node='celery@airflow-worker-0', revoked={}) [reply_to:{'exchange': 'reply.celery.pidbox', 'routing_key': '86623118-5415-3478-a9be-f48f1670b61b'} ticket:07d58af6-f3f2-4404-a926-94eab1e2955c]

[2021-04-16 16:03:54,170: INFO/MainProcess] sync with celery@airflow-worker-0

[2021-04-16 16:03:55,281: DEBUG/MainProcess] celery@airflow-worker-0 joined the party

```

[GitHub] [airflow] trucnguyenlam edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

trucnguyenlam edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-883251087

@kaxil we're still experiencing this issue in version 2.1.1 even after tuning `parallelism` and `pool` size to 1024

[GitHub] [airflow] JD-V commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

JD-V commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-997599254

I am facing the same issue in Airflow 2.2.2 with the KubernetesExecutor.

[GitHub] [airflow] Overbryd edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

Overbryd edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-945785740

I will unsubscribe from this issue.

I have not encountered this issue again (Airflow 2.1.2).

But the following circumstances made this issue pop up again:

* An Airflow DAG/task is being scheduled (not queued yet)

* The Airflow DAG code is being updated, but it contains an error, so that the scheduler cannot load the code and the task that starts up exits immediately

* Now a rare condition takes place: A task is scheduled, but not yet executed.

* The same task will boot a container

* The same task will exit immediately, because the container loads the faulty code and crashes without bringing up the task at all.

* No failure is recorded on the task.

* Then the scheduler thinks the task is queued, but the task crashed immediately (using KubernetesExecutor)

* This leads to queued slots filling up over time.

* Once all queued slots of a pool (or the default pool) are filled with queued (but never executed, immediately crashing) tasks, the scheduler and the whole system get stuck.
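
The sequence above can be sketched as a toy model of pool accounting, assuming (as the "0 open slots" message in the original report suggests) that queued tasks count against the pool just like running ones. `ToyPool` and its methods are hypothetical names for illustration, not Airflow classes.

```python
from dataclasses import dataclass


@dataclass
class ToyPool:
    """Toy model of pool accounting: queued and running tasks both occupy slots."""

    slots: int
    queued: int = 0
    running: int = 0

    def open_slots(self):
        return self.slots - self.queued - self.running

    def try_queue(self):
        """Queue one task if a slot is open; return whether it was accepted."""
        if self.open_slots() < 1:
            return False
        self.queued += 1
        return True


pool = ToyPool(slots=16)
accepted = 0
for _ in range(20):
    # Each task is queued, its container crashes on the faulty DAG code, and no
    # failure is recorded, so the queued count is never decremented.
    if pool.try_queue():
        accepted += 1

print(accepted, pool.open_slots(), pool.running)
```

In this model the pool ends up with every slot held by a queued task, nothing running, and every further task refused, which matches the symptom reported at the top of this issue.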

How do I prevent this issue?

I simply make sure the DAG code is 100% clean and loads both in the scheduler and the tasks that start up (using KubernetesExecutor).
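One way to check this before deploying is to import every DAG file in a clean interpreter and fail the deployment if any import raises. The sketch below uses only the standard library; `find_broken_dag_files` is a hypothetical helper, not part of Airflow, and it only catches import-time Python errors, not problems that appear when the scheduler or a worker pod runs the task.

```python
import importlib.util
import traceback
from pathlib import Path


def find_broken_dag_files(dag_folder):
    """Import each .py file under dag_folder.

    Returns a dict mapping file path to the traceback text for every file
    that raised an exception while being imported.
    """
    errors = {}
    for path in sorted(Path(dag_folder).rglob("*.py")):
        # Use a throwaway module name so files do not shadow real modules.
        module_name = f"_dag_check_{path.stem}"
        spec = importlib.util.spec_from_file_location(module_name, path)
        module = importlib.util.module_from_spec(spec)
        try:
            spec.loader.exec_module(module)
        except Exception:
            errors[str(path)] = traceback.format_exc()
    return errors
```

Calling `find_broken_dag_files("dags")` in a CI step, with the same Python environment as the worker image, and aborting when the result is non-empty would keep a faulty DAG file from reaching the cluster. Airflow's own `DagBag` also collects such failures in its `import_errors` attribute, which may be a better fit where Airflow is installed in the CI environment.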

How do I recover from this issue?

First, I fix the issue that prevents the DAG code from loading. I restart the scheduler.


[GitHub] [airflow] trucnguyenlam commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

trucnguyenlam commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-883251087

@kaxil we're still experiencing this issue in version 2.1.1 even after tuning `parallelism` and `pool` size


[GitHub] [airflow] mongakshay edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

mongakshay edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-892630614

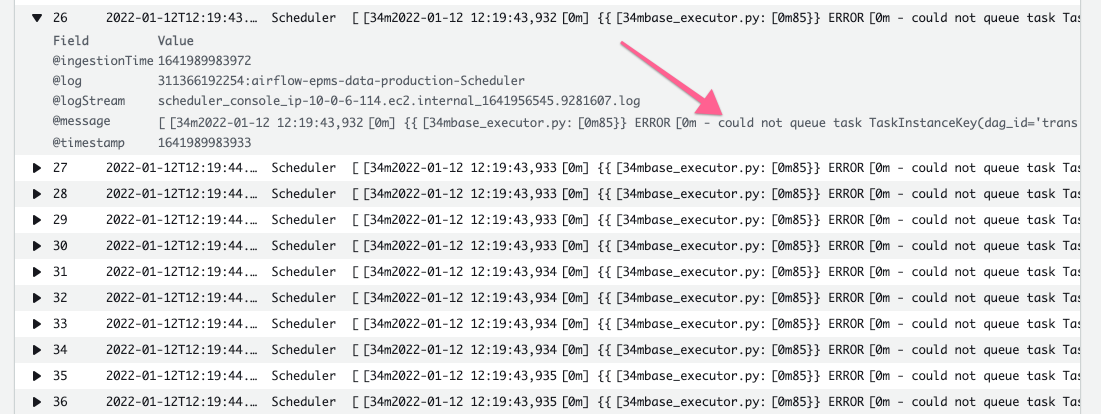

I am also noticing this issue with my Airflow v2.1.2 instance, where the task is forever in queued state, and in the scheduler log I see

```

airflow-scheduler1_1 | [2021-08-04 12:45:23,286] {base_executor.py:85} ERROR - could not queue task TaskInstanceKey(dag_id='example_complex', task_id='create_tag_template_result2', execution_date=datetime.datetime(2021, 8, 3, 22, 39, 34, 577031, tzinfo=Timezone('UTC')), try_number=1)

```

When I sent the SIGUSR2 signal to the scheduler I received:

```

airflow-scheduler1_1 | SIGUSR2 received, printing debug

airflow-scheduler1_1 | --------------------------------------------------------------------------------

airflow-scheduler1_1 | [2021-08-04 13:07:37,230] {base_executor.py:305} INFO - executor.queued (0)

airflow-scheduler1_1 |

airflow-scheduler1_1 | [2021-08-04 13:07:37,231] {base_executor.py:307} INFO - executor.running (1)

airflow-scheduler1_1 | TaskInstanceKey(dag_id='example_complex', task_id='create_tag_template_result2', execution_date=datetime.datetime(2021, 8, 3, 22, 39, 34, 577031, tzinfo=Timezone('UTC')), try_number=1)

airflow-scheduler1_1 | [2021-08-04 13:07:37,233] {base_executor.py:311} INFO - executor.event_buffer (0)

airflow-scheduler1_1 |

airflow-scheduler1_1 | [2021-08-04 13:07:37,234] {celery_executor.py:372} INFO - executor.tasks (1)

airflow-scheduler1_1 | (TaskInstanceKey(dag_id='example_complex', task_id='create_tag_template_result2', execution_date=datetime.datetime(2021, 8, 3, 22, 39, 34, 577031, tzinfo=Timezone('UTC')), try_number=1), <AsyncResult: 1ed20983-dbd3-47c6-8404-345fa0fb75ff>)

airflow-scheduler1_1 | [2021-08-04 13:07:37,235] {celery_executor.py:377} INFO - executor.adopted_task_timeouts (0)

```


[GitHub] [airflow] jbkc85 commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

jbkc85 commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-897078856

> hi @jbkc85 thank you for the suggestion! May I know how to create different pools in Airflow ? many thanks!

Pools are made through the Admin interface. Then you can reference them in the DAGs: https://airflow.apache.org/docs/apache-airflow/stable/concepts/pools.html.

It's a bit of overhead, but once set up you have a little more control over which lanes fill up and which ones don't. Make sure to read about it before implementing!


[GitHub] [airflow] mongakshay removed a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

mongakshay removed a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-892630614

I am also noticing this issue with my Airflow v2.1.2 instance, where the task is forever in queued state, and in the scheduler log I see

```

airflow-scheduler1_1 | [2021-08-04 12:45:23,286] {base_executor.py:85} ERROR - could not queue task TaskInstanceKey(dag_id='example_complex', task_id='create_tag_template_result2', execution_date=datetime.datetime(2021, 8, 3, 22, 39, 34, 577031, tzinfo=Timezone('UTC')), try_number=1)

```

When I sent the SIGUSR2 signal to scheduler I received:

```

airflow-scheduler1_1 | SIGUSR2 received, printing debug

airflow-scheduler1_1 | --------------------------------------------------------------------------------

airflow-scheduler1_1 | [2021-08-04 13:07:37,230] {base_executor.py:305} INFO - executor.queued (0)

airflow-scheduler1_1 |

airflow-scheduler1_1 | [2021-08-04 13:07:37,231] {base_executor.py:307} INFO - executor.running (1)

airflow-scheduler1_1 | TaskInstanceKey(dag_id='example_complex', task_id='create_tag_template_result2', execution_date=datetime.datetime(2021, 8, 3, 22, 39, 34, 577031, tzinfo=Timezone('UTC')), try_number=1)

airflow-scheduler1_1 | [2021-08-04 13:07:37,233] {base_executor.py:311} INFO - executor.event_buffer (0)

airflow-scheduler1_1 |

airflow-scheduler1_1 | [2021-08-04 13:07:37,234] {celery_executor.py:372} INFO - executor.tasks (1)

airflow-scheduler1_1 | (TaskInstanceKey(dag_id='example_complex', task_id='create_tag_template_result2', execution_date=datetime.datetime(2021, 8, 3, 22, 39, 34, 577031, tzinfo=Timezone('UTC')), try_number=1), <AsyncResult: 1ed20983-dbd3-47c6-8404-345fa0fb75ff>)

airflow-scheduler1_1 | [2021-08-04 13:07:37,235] {celery_executor.py:377} INFO - executor.adopted_task_timeouts (0)

```

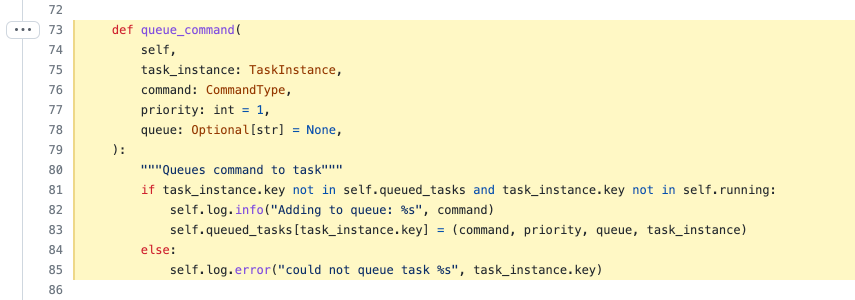

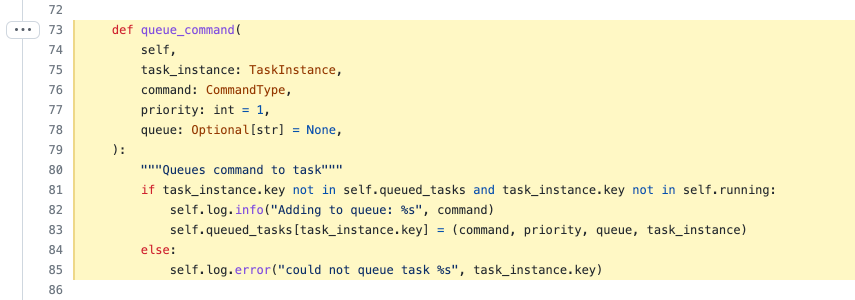

So basically it is trying to queue a task that is already running, and failing this `if` check:

```

if task_instance.key not in self.queued_tasks and task_instance.key not in self.running:
    self.log.info("Adding to queue: %s", command)
    self.queued_tasks[task_instance.key] = (command, priority, queue, task_instance)
else:
    self.log.error("could not queue task %s", task_instance.key)

```
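To make the effect of that check concrete, here is a minimal standalone sketch. `ToyExecutor` is a hypothetical stand-in that copies only the membership test from the snippet above; the real executor tracks more state, and the task key is simplified to a tuple.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("toy_executor")


class ToyExecutor:
    """Hypothetical stand-in that reproduces only the queueing check."""

    def __init__(self):
        self.queued_tasks = {}
        self.running = set()

    def queue_command(self, key, command):
        # Same condition as the quoted snippet: refuse keys that are
        # already queued or already tracked as running.
        if key not in self.queued_tasks and key not in self.running:
            log.info("Adding to queue: %s", command)
            self.queued_tasks[key] = command
            return True
        log.error("could not queue task %s", key)
        return False


executor = ToyExecutor()
key = ("example_complex", "create_tag_template_result2", 1)

# Simulate the state from the SIGUSR2 dump: the key is still in `running`.
executor.running.add(key)
accepted = executor.queue_command(key, ["airflow", "tasks", "run"])
print(accepted)  # False
```

As long as the key stays in `running`, every attempt to queue the same task instance takes the `else` branch, which is consistent with the repeated "could not queue task" error in the scheduler log.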


[GitHub] [airflow] hafid-d commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

hafid-d commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-896144592

Same here, facing the issue. Even restarting the scheduler doesn't fix the problem. My DAGs are still stuck in the running state.


[GitHub] [airflow] minnieshi edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

minnieshi edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-821356514

@SalmonTimo I have access to the workers, as well as the DAG logs folder (which is saved on the file share PVC and can be viewed via Azure Storage Explorer).

P.S. The environment is newly set up, and a few tables (listed below) were migrated from the old environment. While debugging this stuck situation, the tables 'dag', 'task_*', and 'celery_*' were truncated.

```

- celery_taskmeta

- dag

- dag_run

- log

- task_fail

- task_instance

- task_reschedule

- connections

```

How would you like me to fetch the log? Just the DAG run log?

Update: the one I can view via Storage Explorer is empty, since the task was not executed. I will check the worker itself and update this comment.


[GitHub] [airflow] JavierLopezT commented on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

JavierLopezT commented on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-1007235056

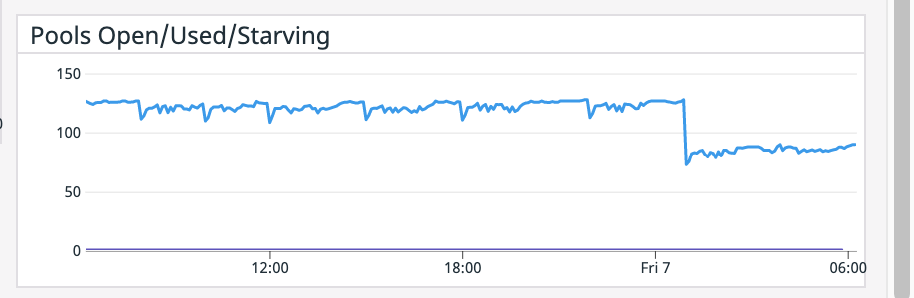

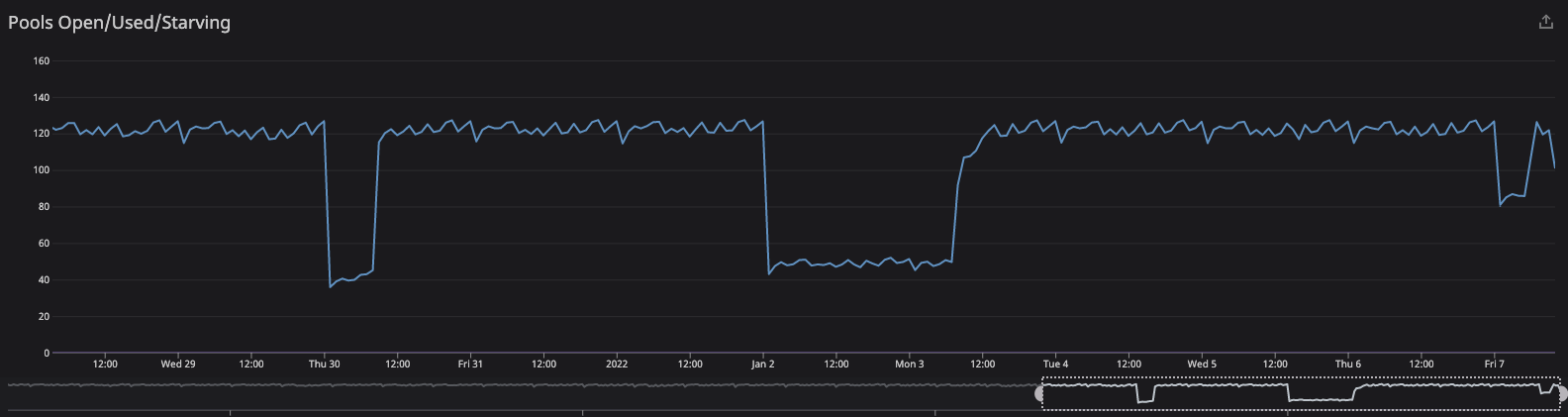

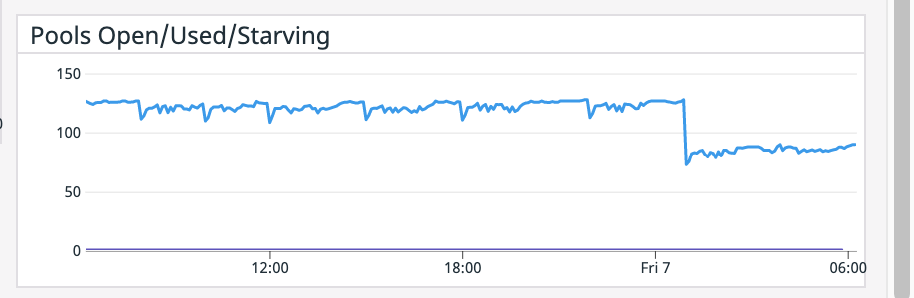

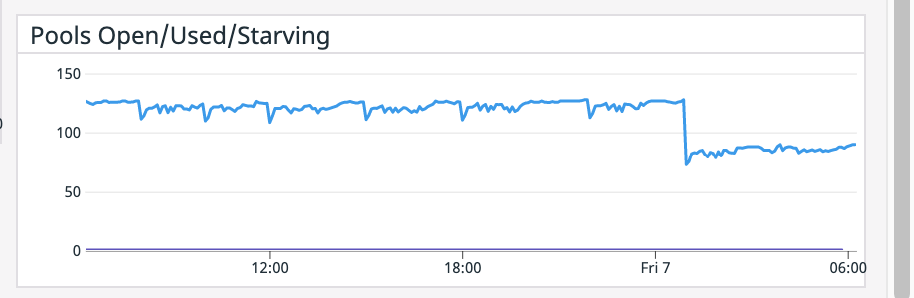

This has started happening to us as well. Randomly (3 nights out of 10 days), all tasks get stuck in the queued state and we have to manually clear the state for it to recover. We have seen that it is related to a decrease of this metric in Datadog:

Airflow 2.1.1 in EC2


[GitHub] [airflow] ddcatgg edited a comment on issue #13542: Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing

Posted by GitBox <gi...@apache.org>.

ddcatgg edited a comment on issue #13542:

URL: https://github.com/apache/airflow/issues/13542#issuecomment-983261840

I ran into the same issue, the scheduler's log:

```