Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2021/06/14 07:12:11 UTC

[GitHub] [hudi] wangfeigithub opened a new issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

wangfeigithub opened a new issue #3065:

URL: https://github.com/apache/hudi/issues/3065

**Describe the problem you faced**

A clear and concise description of the problem.

**To Reproduce**

Steps to reproduce the behavior:

1. A "Not in marker dir" error occurs when I write to HDFS using Spark.

**Expected behavior**

A clear and concise description of what you expected to happen.

**Environment Description**

* Hudi version : 0.8

* Spark version : 2.4

* Hive version : 3.1

* Hadoop version : 3.1

* Storage (HDFS/S3/GCS..) : HDFS

* Running on Docker? (yes/no) : no

**Additional context**

Add any other context about the problem here.

**Stacktrace**

```

Driver stacktrace:

21/06/10 18:04:57 INFO scheduler.DAGScheduler: Job 16 failed: collect at MarkerFiles.java:150, took 0.100981 s

21/06/10 18:04:57 INFO scheduler.JobScheduler: Finished job streaming job 1623319480000 ms.0 from job set of time 1623319480000 ms

21/06/10 18:04:57 INFO scheduler.JobScheduler: Starting job streaming job 1623319480000 ms.1 from job set of time 1623319480000 ms

21/06/10 18:04:57 INFO spark.SparkContext: Starting job: count at DataEtl.scala:105

21/06/10 18:04:57 ERROR scheduler.JobScheduler: Error running job streaming job 1623319480000 ms.0

org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 35.0 failed 1 times, most recent failure: Lost task 0.0 in stage 35.0 (TID 85, localhost, executor driver): java.lang.IllegalArgumentException: Not in marker dir. Marker Path=hdfs://192.168.116.12:8020/hive/warehouse/ods3.db/orders\.hoodie\.temp\20210610180447/month_key=default/ecddd088-5b6e-4efe-82c2-c8c86988e8cb-0_0-28-83_20210610180447.parquet.marker.CREATE, Expected Marker Root=/hive/warehouse/ods3.db/orders/.hoodie/.temp/20210610180447

at org.apache.hudi.table.MarkerFiles.stripMarkerFolderPrefix(MarkerFiles.java:179)

at org.apache.hudi.table.MarkerFiles.translateMarkerToDataPath(MarkerFiles.java:157)

at org.apache.hudi.table.MarkerFiles.lambda$createdAndMergedDataPaths$597e6f5a$1(MarkerFiles.java:146)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$1$1.apply(JavaRDDLike.scala:125)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$1$1.apply(JavaRDDLike.scala:125)

at scala.collection.Iterator$$anon$12.nextCur(Iterator.scala:435)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:441)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.generic.Growable$class.$plus$plus$eq(Growable.scala:59)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:104)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:48)

at scala.collection.TraversableOnce$class.to(TraversableOnce.scala:310)

at scala.collection.AbstractIterator.to(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toBuffer(TraversableOnce.scala:302)

at scala.collection.AbstractIterator.toBuffer(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toArray(TraversableOnce.scala:289)

at scala.collection.AbstractIterator.toArray(Iterator.scala:1334)

at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$13.apply(RDD.scala:945)

at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$13.apply(RDD.scala:945)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1889)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1877)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1876)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1876)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)

at scala.Option.foreach(Option.scala:257)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2110)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2059)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2048)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:737)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2082)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126)

at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.collect(RDD.scala:944)

at org.apache.spark.api.java.JavaRDDLike$class.collect(JavaRDDLike.scala:361)

at org.apache.spark.api.java.AbstractJavaRDDLike.collect(JavaRDDLike.scala:45)

at org.apache.hudi.table.MarkerFiles.createdAndMergedDataPaths(MarkerFiles.java:150)

at org.apache.hudi.table.HoodieTable.reconcileAgainstMarkers(HoodieTable.java:449)

at org.apache.hudi.table.HoodieTable.finalizeWrite(HoodieTable.java:398)

at org.apache.hudi.client.AbstractHoodieWriteClient.finalizeWrite(AbstractHoodieWriteClient.java:178)

at org.apache.hudi.client.AbstractHoodieWriteClient.commitStats(AbstractHoodieWriteClient.java:114)

at org.apache.hudi.client.AbstractHoodieWriteClient.commit(AbstractHoodieWriteClient.java:99)

at org.apache.hudi.client.AbstractHoodieWriteClient.commit(AbstractHoodieWriteClient.java:90)

at org.apache.hudi.HoodieSparkSqlWriter$.commitAndPerformPostOperations(HoodieSparkSqlWriter.scala:395)

at org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:205)

at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:125)

at org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:155)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:676)

at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:285)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:271)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:229)

at com.zt.DataEtl$$anonfun$main$1$$anonfun$apply$2.apply(DataEtl.scala:174)

at com.zt.DataEtl$$anonfun$main$1$$anonfun$apply$2.apply(DataEtl.scala:104)

at org.apache.spark.streaming.dstream.DStream$$anonfun$foreachRDD$1$$anonfun$apply$mcV$sp$3.apply(DStream.scala:628)

at org.apache.spark.streaming.dstream.DStream$$anonfun$foreachRDD$1$$anonfun$apply$mcV$sp$3.apply(DStream.scala:628)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1$$anonfun$apply$mcV$sp$1.apply$mcV$sp(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1$$anonfun$apply$mcV$sp$1.apply(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1$$anonfun$apply$mcV$sp$1.apply(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.DStream.createRDDWithLocalProperties(DStream.scala:416)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1.apply$mcV$sp(ForEachDStream.scala:50)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1.apply(ForEachDStream.scala:50)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1.apply(ForEachDStream.scala:50)

at scala.util.Try$.apply(Try.scala:192)

at org.apache.spark.streaming.scheduler.Job.run(Job.scala:39)

at org.apache.spark.streaming.scheduler.JobScheduler$JobHandler$$anonfun$run$1.apply$mcV$sp(JobScheduler.scala:257)

at org.apache.spark.streaming.scheduler.JobScheduler$JobHandler$$anonfun$run$1.apply(JobScheduler.scala:257)

at org.apache.spark.streaming.scheduler.JobScheduler$JobHandler$$anonfun$run$1.apply(JobScheduler.scala:257)

at scala.util.DynamicVariable.withValue(DynamicVariable.scala:58)

at org.apache.spark.streaming.scheduler.JobScheduler$JobHandler.run(JobScheduler.scala:256)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.IllegalArgumentException: Not in marker dir. Marker Path=hdfs://192.168.116.12:8020/hive/warehouse/ods3.db/orders\.hoodie\.temp\20210610180447/month_key=default/ecddd088-5b6e-4efe-82c2-c8c86988e8cb-0_0-28-83_20210610180447.parquet.marker.CREATE, Expected Marker Root=/hive/warehouse/ods3.db/orders/.hoodie/.temp/20210610180447

at org.apache.hudi.common.util.ValidationUtils.checkArgument(ValidationUtils.java:40)

at org.apache.hudi.table.MarkerFiles.stripMarkerFolderPrefix(MarkerFiles.java:179)

at org.apache.hudi.table.MarkerFiles.translateMarkerToDataPath(MarkerFiles.java:157)

at org.apache.hudi.table.MarkerFiles.lambda$createdAndMergedDataPaths$597e6f5a$1(MarkerFiles.java:146)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$1$1.apply(JavaRDDLike.scala:125)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$1$1.apply(JavaRDDLike.scala:125)

at scala.collection.Iterator$$anon$12.nextCur(Iterator.scala:435)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:441)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.generic.Growable$class.$plus$plus$eq(Growable.scala:59)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:104)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:48)

at scala.collection.TraversableOnce$class.to(TraversableOnce.scala:310)

at scala.collection.AbstractIterator.to(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toBuffer(TraversableOnce.scala:302)

at scala.collection.AbstractIterator.toBuffer(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toArray(TraversableOnce.scala:289)

at scala.collection.AbstractIterator.toArray(Iterator.scala:1334)

at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$13.apply(RDD.scala:945)

at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$13.apply(RDD.scala:945)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

... 3 more

21/06/10 18:04:57 INFO scheduler.DAGScheduler: Got job 17 (count at DataEtl.scala:105) with 2 output partitions

21/06/10 18:04:57 INFO scheduler.DAGScheduler: Final stage: ResultStage 36 (count at DataEtl.scala:105)

21/06/10 18:04:57 INFO scheduler.DAGScheduler: Parents of final stage: List()

Exception in thread "main" org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 35.0 failed 1 times, most recent failure: Lost task 0.0 in stage 35.0 (TID 85, localhost, executor driver): java.lang.IllegalArgumentException: Not in marker dir. Marker Path=hdfs://192.168.116.12:8020/hive/warehouse/ods3.db/orders\.hoodie\.temp\20210610180447/month_key=default/ecddd088-5b6e-4efe-82c2-c8c86988e8cb-0_0-28-83_20210610180447.parquet.marker.CREATE, Expected Marker Root=/hive/warehouse/ods3.db/orders/.hoodie/.temp/20210610180447

at org.apache.hudi.common.util.ValidationUtils.checkArgument(ValidationUtils.java:40)

at org.apache.hudi.table.MarkerFiles.stripMarkerFolderPrefix(MarkerFiles.java:179)

at org.apache.hudi.table.MarkerFiles.translateMarkerToDataPath(MarkerFiles.java:157)

at org.apache.hudi.table.MarkerFiles.lambda$createdAndMergedDataPaths$597e6f5a$1(MarkerFiles.java:146)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$1$1.apply(JavaRDDLike.scala:125)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$1$1.apply(JavaRDDLike.scala:125)

at scala.collection.Iterator$$anon$12.nextCur(Iterator.scala:435)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:441)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.generic.Growable$class.$plus$plus$eq(Growable.scala:59)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:104)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:48)

at scala.collection.TraversableOnce$class.to(TraversableOnce.scala:310)

at scala.collection.AbstractIterator.to(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toBuffer(TraversableOnce.scala:302)

at scala.collection.AbstractIterator.toBuffer(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toArray(TraversableOnce.scala:289)

at scala.collection.AbstractIterator.toArray(Iterator.scala:1334)

at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$13.apply(RDD.scala:945)

at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$13.apply(RDD.scala:945)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1889)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1877)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1876)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1876)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:926)

at scala.Option.foreach(Option.scala:257)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2110)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2059)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2048)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:737)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2082)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2101)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126)

at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.collect(RDD.scala:944)

at org.apache.spark.api.java.JavaRDDLike$class.collect(JavaRDDLike.scala:361)

at org.apache.spark.api.java.AbstractJavaRDDLike.collect(JavaRDDLike.scala:45)

at org.apache.hudi.table.MarkerFiles.createdAndMergedDataPaths(MarkerFiles.java:150)

at org.apache.hudi.table.HoodieTable.reconcileAgainstMarkers(HoodieTable.java:449)

at org.apache.hudi.table.HoodieTable.finalizeWrite(HoodieTable.java:398)

at org.apache.hudi.client.AbstractHoodieWriteClient.finalizeWrite(AbstractHoodieWriteClient.java:178)

at org.apache.hudi.client.AbstractHoodieWriteClient.commitStats(AbstractHoodieWriteClient.java:114)

at org.apache.hudi.client.AbstractHoodieWriteClient.commit(AbstractHoodieWriteClient.java:99)

at org.apache.hudi.client.AbstractHoodieWriteClient.commit(AbstractHoodieWriteClient.java:90)

at org.apache.hudi.HoodieSparkSqlWriter$.commitAndPerformPostOperations(HoodieSparkSqlWriter.scala:395)

at org.apache.hudi.HoodieSparkSqlWriter$.write(HoodieSparkSqlWriter.scala:205)

at org.apache.hudi.DefaultSource.createRelation(DefaultSource.scala:125)

at org.apache.spark.sql.execution.datasources.SaveIntoDataSourceCommand.run(SaveIntoDataSourceCommand.scala:45)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:86)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:131)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:127)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:155)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:152)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:127)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:80)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:80)

at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

at org.apache.spark.sql.DataFrameWriter$$anonfun$runCommand$1.apply(DataFrameWriter.scala:676)

at org.apache.spark.sql.execution.SQLExecution$$anonfun$withNewExecutionId$1.apply(SQLExecution.scala:78)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

at org.apache.spark.sql.DataFrameWriter.runCommand(DataFrameWriter.scala:676)

at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:285)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:271)

at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:229)

at com.zt.DataEtl$$anonfun$main$1$$anonfun$apply$2.apply(DataEtl.scala:174)

at com.zt.DataEtl$$anonfun$main$1$$anonfun$apply$2.apply(DataEtl.scala:104)

at org.apache.spark.streaming.dstream.DStream$$anonfun$foreachRDD$1$$anonfun$apply$mcV$sp$3.apply(DStream.scala:628)

at org.apache.spark.streaming.dstream.DStream$$anonfun$foreachRDD$1$$anonfun$apply$mcV$sp$3.apply(DStream.scala:628)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1$$anonfun$apply$mcV$sp$1.apply$mcV$sp(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1$$anonfun$apply$mcV$sp$1.apply(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1$$anonfun$apply$mcV$sp$1.apply(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.DStream.createRDDWithLocalProperties(DStream.scala:416)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1.apply$mcV$sp(ForEachDStream.scala:50)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1.apply(ForEachDStream.scala:50)

at org.apache.spark.streaming.dstream.ForEachDStream$$anonfun$1.apply(ForEachDStream.scala:50)

at scala.util.Try$.apply(Try.scala:192)

at org.apache.spark.streaming.scheduler.Job.run(Job.scala:39)

at org.apache.spark.streaming.scheduler.JobScheduler$JobHandler$$anonfun$run$1.apply$mcV$sp(JobScheduler.scala:257)

at org.apache.spark.streaming.scheduler.JobScheduler$JobHandler$$anonfun$run$1.apply(JobScheduler.scala:257)

at org.apache.spark.streaming.scheduler.JobScheduler$JobHandler$$anonfun$run$1.apply(JobScheduler.scala:257)

at scala.util.DynamicVariable.withValue(DynamicVariable.scala:58)

at org.apache.spark.streaming.scheduler.JobScheduler$JobHandler.run(JobScheduler.scala:256)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.IllegalArgumentException: Not in marker dir. Marker Path=hdfs://192.168.116.12:8020/hive/warehouse/ods3.db/orders\.hoodie\.temp\20210610180447/month_key=default/ecddd088-5b6e-4efe-82c2-c8c86988e8cb-0_0-28-83_20210610180447.parquet.marker.CREATE, Expected Marker Root=/hive/warehouse/ods3.db/orders/.hoodie/.temp/20210610180447

at org.apache.hudi.common.util.ValidationUtils.checkArgument(ValidationUtils.java:40)

at org.apache.hudi.table.MarkerFiles.stripMarkerFolderPrefix(MarkerFiles.java:179)

at org.apache.hudi.table.MarkerFiles.translateMarkerToDataPath(MarkerFiles.java:157)

at org.apache.hudi.table.MarkerFiles.lambda$createdAndMergedDataPaths$597e6f5a$1(MarkerFiles.java:146)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$1$1.apply(JavaRDDLike.scala:125)

at org.apache.spark.api.java.JavaRDDLike$$anonfun$fn$1$1.apply(JavaRDDLike.scala:125)

at scala.collection.Iterator$$anon$12.nextCur(Iterator.scala:435)

at scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:441)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.generic.Growable$class.$plus$plus$eq(Growable.scala:59)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:104)

at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:48)

at scala.collection.TraversableOnce$class.to(TraversableOnce.scala:310)

at scala.collection.AbstractIterator.to(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toBuffer(TraversableOnce.scala:302)

at scala.collection.AbstractIterator.toBuffer(Iterator.scala:1334)

at scala.collection.TraversableOnce$class.toArray(TraversableOnce.scala:289)

at scala.collection.AbstractIterator.toArray(Iterator.scala:1334)

at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$13.apply(RDD.scala:945)

at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$13.apply(RDD.scala:945)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

... 3 more

21/06/10 18:04:57 INFO scheduler.DAGScheduler: Missing parents: List()

21/06/10 18:04:57 INFO scheduler.DAGScheduler: Submitting ResultStage 36 (MapPartitionsRDD[8] at filter at DataEtl.scala:92), which has no missing parents

21/06/10 18:04:57 INFO memory.MemoryStore: Block broadcast_23 stored as values in memory (estimated size 14.7 KB, free 1964.9 MB)

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 456

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 547

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 567

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 460

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 481

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 475

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 498

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 530

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 532

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 573

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 577

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 478

21/06/10 18:04:57 INFO spark.ContextCleaner: Cleaned accumulator 536

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan closed issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

nsivabalan closed issue #3065:

URL: https://github.com/apache/hudi/issues/3065

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangfeigithub commented on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

wangfeigithub commented on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-862055951

https://github.com/apache/hudi/issues/3065#issuecomment-862009477

I am directly writing new files using 0.8.

[GitHub] [hudi] n3nash commented on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

n3nash commented on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-865422520

@wangfeigithub It's hard to say what the issue really is, but one thing I can see is that marker files are generally under a "." directory. Do you think the Windows file system might not be recognizing that?

[GitHub] [hudi] codope commented on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

codope commented on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-905435084

@wangfeigithub Feel free to create a patch with your fix. Going to close this issue.

[GitHub] [hudi] wangfeigithub edited a comment on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

wangfeigithub edited a comment on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-862055951

https://github.com/apache/hudi/issues/3065#issuecomment-862009477

I am directly writing new files using 0.8.

The problem was discovered while running the code from IDEA on Windows; it does not occur on macOS.

[GitHub] [hudi] wangfeigithub commented on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

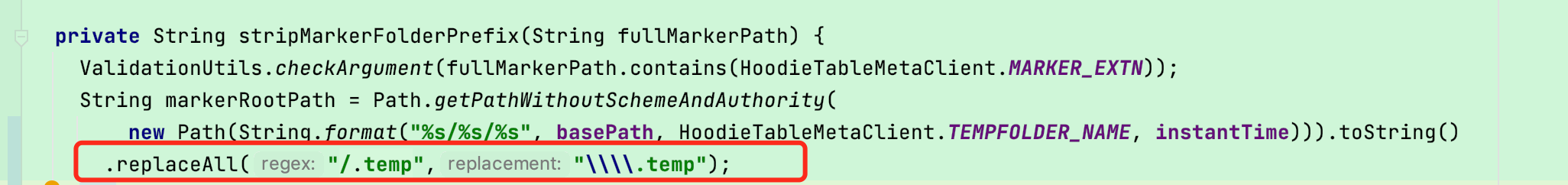

wangfeigithub commented on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-866618158

@n3nash I think it's the window's path conversion problem.

I added this section in the source code to solve the problem
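
The change itself was attached only as a screenshot, so its contents are not available in this text. As a purely hypothetical illustration of the kind of fix being described, the sketch below normalizes path separators before stripping the marker root. The function name `strip_marker_folder_prefix` is a Python stand-in chosen to echo `MarkerFiles.stripMarkerFolderPrefix` from the stack trace; it is not Hudi's code and not necessarily what the screenshot contained.

```python
def strip_marker_folder_prefix(full_marker_path: str, marker_root: str) -> str:
    """Return the marker path relative to marker_root.

    Hypothetical sketch: backslashes are treated as path separators and
    converted to forward slashes before the root is searched for.
    """
    normalized_path = full_marker_path.replace("\\", "/")
    normalized_root = marker_root.replace("\\", "/")
    begin = normalized_path.find(normalized_root)
    if begin < 0:
        raise ValueError(
            f"Not in marker dir. Marker Path={full_marker_path}, "
            f"Expected Marker Root={marker_root}"
        )
    # Skip the root itself plus the separator that follows it.
    return normalized_path[begin + len(normalized_root) + 1:]


# Paths taken from the stack trace reported in this issue.
marker_path = (
    "hdfs://192.168.116.12:8020/hive/warehouse/ods3.db/orders"
    "\\.hoodie\\.temp\\20210610180447/month_key=default/"
    "ecddd088-5b6e-4efe-82c2-c8c86988e8cb-0_0-28-83_20210610180447.parquet.marker.CREATE"
)
marker_root = "/hive/warehouse/ods3.db/orders/.hoodie/.temp/20210610180447"

relative_marker = strip_marker_folder_prefix(marker_path, marker_root)
print(relative_marker)
```

Blindly replacing every backslash is a simplification: it assumes no legitimate file or directory name contains a backslash. A real patch would more likely avoid producing backslash-separated paths in the first place.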

[GitHub] [hudi] codope edited a comment on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

codope edited a comment on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-905435084

@wangfeigithub Feel free to grab [this JIRA](https://issues.apache.org/jira/browse/HUDI-2360) and create a patch with your fix. Going to close this issue.

[GitHub] [hudi] n3nash commented on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

n3nash commented on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-862009477

@wangfeigithub Are you upgrading from an older version of Hudi, or are you directly writing new files using 0.8?

[GitHub] [hudi] wangfeigithub commented on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

wangfeigithub commented on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-859311343

The marker path in the error is:

/hive/warehouse/ods3.db/orders\.hoodie\.temp\20210610180447/month_key=default/ecddd088-5b6e-4efe-82c2-c8c86988e8cb-0_0-28-83_20210610180447.parquet.marker.CREATE

When I run from IDEA on Windows, the generated path contains orders\ (backslash separators).

There are no errors when I run on macOS, where the log shows orders/.

Is the difference between \ and / causing the problem?
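
To make the question concrete, here is a small sketch using the two strings from the error message. It assumes, based only on the wording of the error, that the check looks for the expected marker root as a plain substring of the full marker path. The helper `find_marker_root` is hypothetical and is not Hudi's API.

```python
# Both strings are copied from the "Not in marker dir" message in the stack trace.
marker_path = (
    "hdfs://192.168.116.12:8020/hive/warehouse/ods3.db/orders"
    "\\.hoodie\\.temp\\20210610180447/month_key=default/"
    "ecddd088-5b6e-4efe-82c2-c8c86988e8cb-0_0-28-83_20210610180447.parquet.marker.CREATE"
)
expected_root = "/hive/warehouse/ods3.db/orders/.hoodie/.temp/20210610180447"


def find_marker_root(full_marker_path: str, marker_root: str) -> int:
    """Hypothetical stand-in for the check: index of the root in the path, or -1."""
    return full_marker_path.find(marker_root)


# Path as generated on Windows: the root is joined with backslashes.
windows_result = find_marker_root(marker_path, expected_root)

# Same path with forward slashes, as it appears in the macOS log.
macos_result = find_marker_root(marker_path.replace("\\", "/"), expected_root)

print("backslash path found root:", windows_result >= 0)
print("forward-slash path found root:", macos_result >= 0)
```

Under that assumption, the backslash-separated path never contains the forward-slash root, which would explain why the same job fails on Windows and succeeds on macOS.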

[GitHub] [hudi] wangfeigithub edited a comment on issue #3065: Not in Marker Dir occurs when I write to HDFS using Spark

Posted by GitBox <gi...@apache.org>.

wangfeigithub edited a comment on issue #3065:

URL: https://github.com/apache/hudi/issues/3065#issuecomment-859311343
