Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2021/01/15 07:24:00 UTC

[GitHub] [hudi] so-lazy opened a new issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

so-lazy opened a new issue #2338:

URL: https://github.com/apache/hudi/issues/2338

I want to build a pipeline that consumes incremental data from Kafka. First I did a full import into a Hudi MOR table, which came to around 23 GB; everything was fine at that point. But when I started consuming incremental data from Kafka, ingestion was very slow, and when I checked the data I found many duplicate records. After the incremental ingestion the table size had grown to 93.9 GB. This puzzled me, because I use GLOBAL_BLOOM, and from the doc comment below I understood that records with the same record key would be updated, so I should not need to worry about duplicates.

> /**

> * Only applies if index type is GLOBAL_BLOOM.

> * <p>

> * When set to true, an update to a record with a different partition from its existing one

> * will insert the record to the new partition and delete it from the old partition.

> * <p>

> * When set to false, a record will be updated to the old partition.

> */

As you can see, it is only a small amount of data, yet it is processed very slowly. I need your help, @bvaradar. Thanks so much.

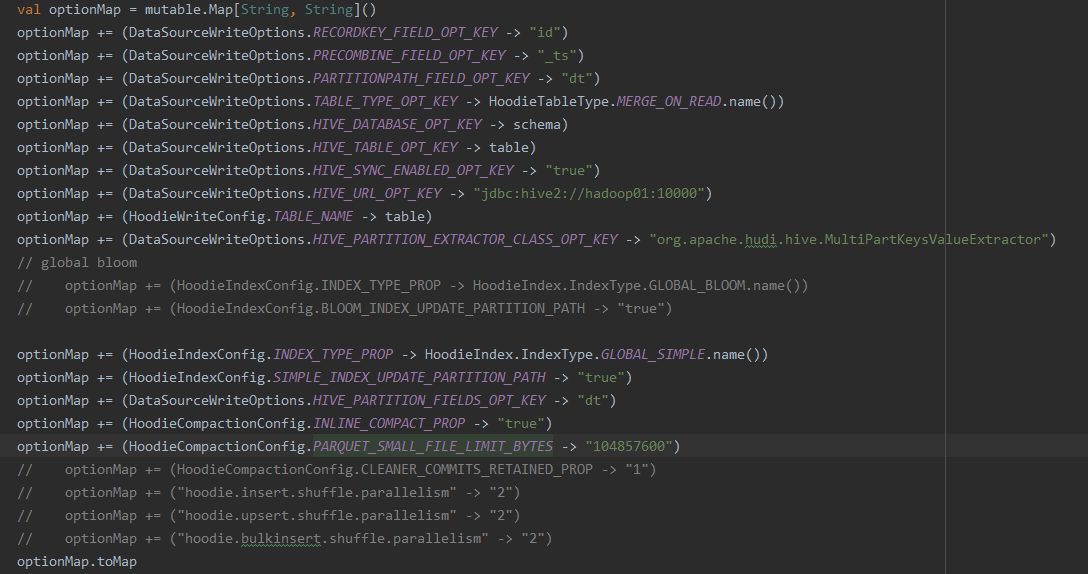

Here are my options:

```

hoodie.datasource.write.recordkey.field -> "id"

hoodie.datasource.write.precombine.field -> "_ts"

hoodie.datasource.write.partitionpath.field -> "dt"

hoodie.datasource.write.table.type -> "MERGE_ON_READ"

hoodie.datasource.hive_sync.database -> "schema"

hoodie.datasource.hive_sync.table -> "table"

hoodie.datasource.hive_sync.enable -> "true"

hoodie.datasource.hive_sync.jdbcurl -> "jdbc:hive2://xxxxxxx"

hoodie.table.name -> "table"

hoodie.datasource.hive_sync.partition_extractor_class -> "org.apache.hudi.hive.MultiPartKeysValueExtractor"

hoodie.index.type -> "GLOBAL_BLOOM"

hoodie.datasource.hive_sync.partition_fields -> "dt"

hoodie.compact.inline -> "true"

hoodie.parquet.small.file.limit -> "104857600"

```
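For reference, in a Spark job these settings would usually be handed to the DataFrame writer as a map of string options. A minimal sketch, with keys and values copied from the list above; the object name `HudiWriteOptions` is made up for illustration, and the writer call in the trailing comment is the commonly documented Hudi datasource pattern, not necessarily the reporter's actual code.

```scala
object HudiWriteOptions {
  // Keys and values copied verbatim from the option list in the issue.
  val opts: Map[String, String] = Map(
    "hoodie.datasource.write.recordkey.field" -> "id",
    "hoodie.datasource.write.precombine.field" -> "_ts",
    "hoodie.datasource.write.partitionpath.field" -> "dt",
    "hoodie.datasource.write.table.type" -> "MERGE_ON_READ",
    "hoodie.datasource.hive_sync.database" -> "schema",
    "hoodie.datasource.hive_sync.table" -> "table",
    "hoodie.datasource.hive_sync.enable" -> "true",
    "hoodie.datasource.hive_sync.jdbcurl" -> "jdbc:hive2://xxxxxxx",
    "hoodie.table.name" -> "table",
    "hoodie.datasource.hive_sync.partition_extractor_class" ->
      "org.apache.hudi.hive.MultiPartKeysValueExtractor",
    "hoodie.index.type" -> "GLOBAL_BLOOM",
    "hoodie.datasource.hive_sync.partition_fields" -> "dt",
    "hoodie.compact.inline" -> "true",
    "hoodie.parquet.small.file.limit" -> "104857600"
  )
}

// Assumed usage inside a Spark job, where `df` and `basePath` already exist:
//   df.write.format("hudi").options(HudiWriteOptions.opts).mode("append").save(basePath)
```

Keeping the options in one map makes it easier to compare the configuration used for the initial load with the one used for incremental ingestion.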

* Hudi version :

0.6

* Spark version :

2.4.6

* Hive version :

3.1.2

* Hadoop version :

3.1.2

* Storage (HDFS/S3/GCS..) :

HDFS

* Running on Docker? (yes/no) :

no

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] bvaradar commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-757125096

@so-lazy :

When you query through the Spark datasource (not just a single file), are you able to see unique records?

val df = spark.read.format("hudi").load("hdfs://hadoop01:9000/hudi/cars/carsdata/inf_car_bin/*")

....

Also, are you passing the config (spark.sql.hive.convertMetastoreParquet=false) when you launch Spark? https://hudi.apache.org/docs/querying_data.html#spark-sql.

Also, I see you have spaces around the "=" sign (set spark.sql.hive.convertMetastoreParquet = false;). Try removing them. Please also enable INFO logging, run the select group by query, and attach the logs if the problem persists.

[GitHub] [hudi] so-lazy commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

so-lazy commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-751638099

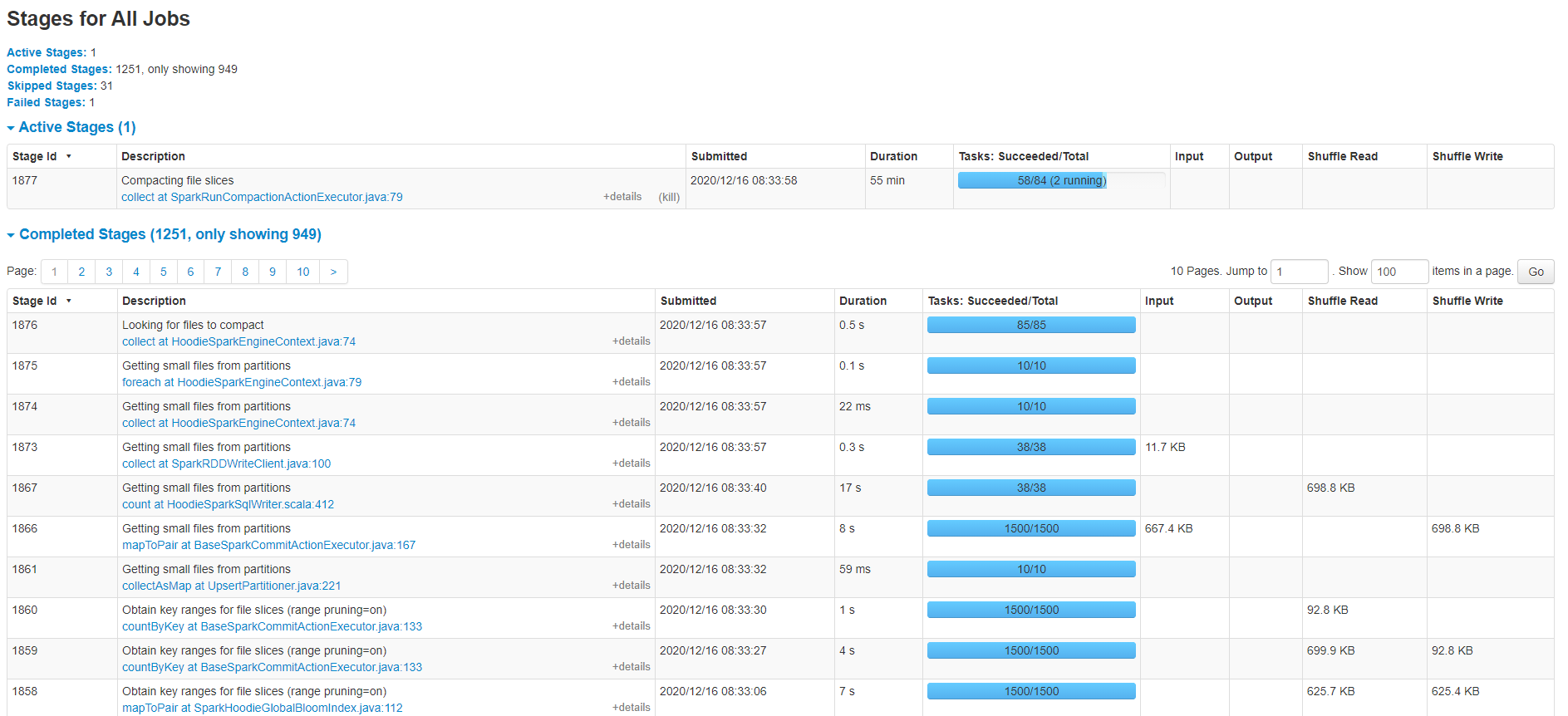

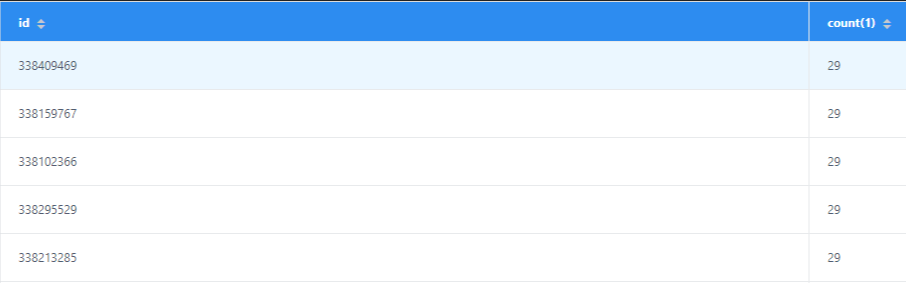

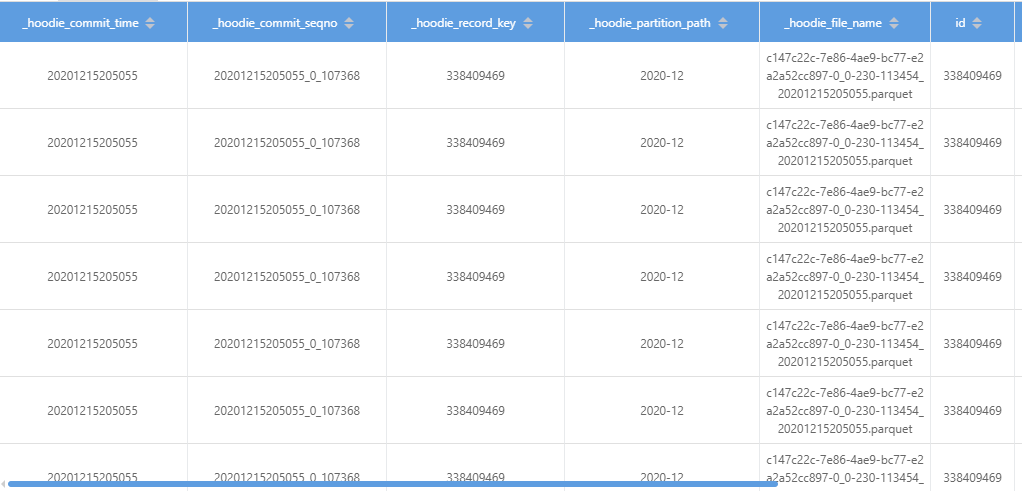

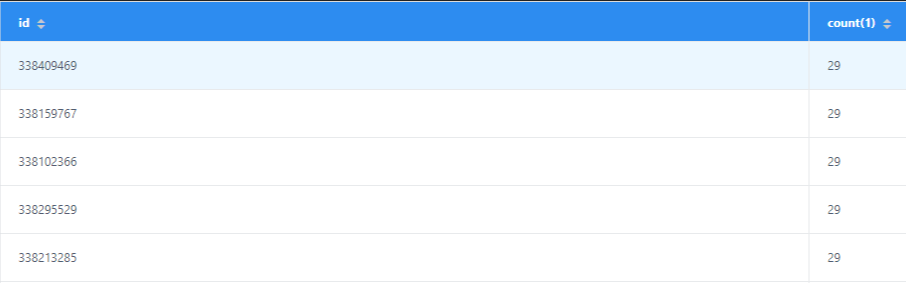

I run `select id,count(1) from table_ro group by id having count(1) > 1` got this

then i query data by id got this

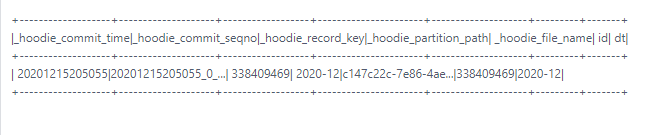

then i go to check the parquet file

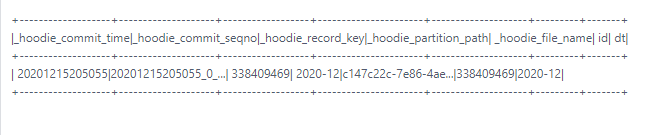

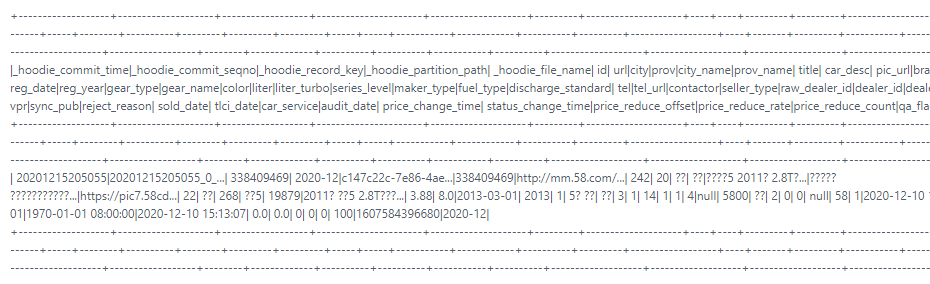

`val df = spark.read.parquet("/hudi/2020-12/c147c22c-7e86-4ae9-bc77-e2a2a52cc897-0_0-230-113454_20201215205055.parquet")

df.createOrReplaceTempView("table")

spark.sql("select _hoodie_commit_time,_hoodie_commit_seqno,_hoodie_record_key,_hoodie_partition_path,_hoodie_file_name,id from table WHERE id = 338409469").show`

[GitHub] [hudi] bvaradar commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-754448424

@so-lazy : I think the Hive table may not be a Hudi table. Can you show the output of the following Hive command?

desc formatted table <table>

Also, can you please attach the listing of .hoodie folder ?

Thanks,

Balaji.V

[GitHub] [hudi] nsivabalan edited a comment on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

nsivabalan edited a comment on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-753746016

@so-lazy : I am looping in @bvaradar to help you out here. In the meantime, here is some context around GLOBAL_BLOOM. Hudi has two kinds of indexes, regular and global. With a regular bloom index, record keys are unique within a partition, but the same record key can exist in different partitions. Within a partition, Hudi takes care of updating records based on their record keys and serves you only the latest snapshot for every record key of interest.

With the global versions, record keys are unique across the entire dataset; in other words, the same record key cannot exist in different partitions. So if you insert a record rec_1 into partition1 and later try to insert the same record (rec_1) into a different partition, say partition2, Hudi by default updates the record in partition1. There is a config you can set, in which case Hudi deletes rec_1 from partition1 and inserts it into partition2.

This is the major difference between the regular and global versions of the index. Since the global version has to look up all partitions for all records, it is known to be less performant than the regular index. So unless you have this requirement, I would suggest using a regular index (BLOOM, for example).
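The behaviour described above can be modelled in a few lines. The following is a toy sketch only: it treats a table as an in-memory map and is not how Hudi implements its indexes. The names `IndexSemantics`, `upsertRegular`, `upsertGlobal` and the `updatePartitionPath` flag are made up for illustration; the flag stands in for the config mentioned above, which is presumably `hoodie.bloom.index.update.partition.path`, the setting whose Javadoc is quoted in the issue description.

```scala
object IndexSemantics {
  // Toy table: (partitionPath, recordKey) -> record value.
  type Table = Map[(String, String), String]

  /** Regular index: a key is matched only inside the incoming partition,
    * so the same key may end up in several partitions. */
  def upsertRegular(table: Table, partition: String, key: String, value: String): Table =
    table.updated((partition, key), value)

  /** Global index: a key is matched across all partitions.
    * If it already lives in another partition, either update it in place
    * (flag off) or move it to the incoming partition (flag on). */
  def upsertGlobal(table: Table, partition: String, key: String, value: String,
                   updatePartitionPath: Boolean): Table =
    table.keys.find(_._2 == key) match {
      case Some((oldPartition, _)) if oldPartition != partition =>
        if (updatePartitionPath)
          (table - ((oldPartition, key))).updated((partition, key), value)
        else
          table.updated((oldPartition, key), value)
      case _ =>
        table.updated((partition, key), value)
    }
}
```

With a table holding key `1` in partition `2020-12-26`, an upsert of key `1` arriving for partition `2020-12-27` yields two rows under the regular model and a single row under the global model, located in the old or the new partition depending on the flag.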

[GitHub] [hudi] so-lazy commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

so-lazy commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-758656180

> @so-lazy :

> Also, I see you have spaces around the "=" sign (set spark.sql.hive.convertMetastoreParquet = false;). Try removing them. Please also enable INFO logging, run the select group by query, and attach the logs if the problem persists.

@bvaradar Sorry, I have been busy these days and did not reply in time. Today I followed your suggestions and attached screenshots.

> val df = spark.read.format("hudi").load("hdfs://hadoop01:9000/hudi/cars/carsdata/inf_car_bin/*")

Running this, I got unique records.

> run sql on hive ro table

> Also, are you passing the config (spark.sql.hive.convertMetastoreParquet=false) when you launch Spark? https://hudi.apache.org/docs/querying_data.html#spark-sql.

Yes, after passing this config I can see unique records. Thanks so much. Now I am going to try another index type; GLOBAL_BLOOM is so slow that there may be some problem in my program. Hudi is good software. Thanks for your time and effort.
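A likely reason this config matters, offered as a hedged sketch and not as something stated in the thread: with `spark.sql.hive.convertMetastoreParquet` left at its default, Spark reads the table's parquet files with its own reader and can pick up every version of a file group, whereas reading through Hudi exposes only the latest base file per file group. The toy model below assumes base files are named `<fileId>_<writeToken>_<instantTime>.parquet`, which matches the file name quoted earlier in the thread; the object and function names, and the file names in the usage note, are hypothetical.

```scala
object LatestFileSlices {
  // One parsed base file name: <fileId>_<writeToken>_<instantTime>.parquet
  final case class BaseFile(fileId: String, writeToken: String,
                            instantTime: String, name: String)

  /** Parse a base file name; returns None if it does not have three '_' parts. */
  def parse(name: String): Option[BaseFile] =
    if (!name.endsWith(".parquet")) None
    else name.stripSuffix(".parquet").split("_") match {
      case Array(fileId, token, instant) => Some(BaseFile(fileId, token, instant, name))
      case _                             => None
    }

  /** Keep only the newest base file of each file group.
    * Instant times are fixed-width timestamps, so string order is time order. */
  def latestPerFileGroup(names: Seq[String]): Seq[String] =
    names.flatMap(parse)
      .groupBy(_.fileId)
      .values
      .map(_.maxBy(_.instantTime).name)
      .toSeq
      .sorted
}
```

Given two versions of file group `f1-0` (instants `20201215205055` and `20201216101010`) and one version of `f2-0`, `latestPerFileGroup` keeps two files, while a plain parquet scan of the directory would read all three and return the rows of `f1-0` twice. The setting can also be passed at launch, for example `spark-shell --conf spark.sql.hive.convertMetastoreParquet=false`.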

[GitHub] [hudi] bvaradar commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-761532496

cc @nsivabalan

Not sure if you saw this blog about index usage: https://hudi.apache.org/blog/hudi-indexing-mechanisms/

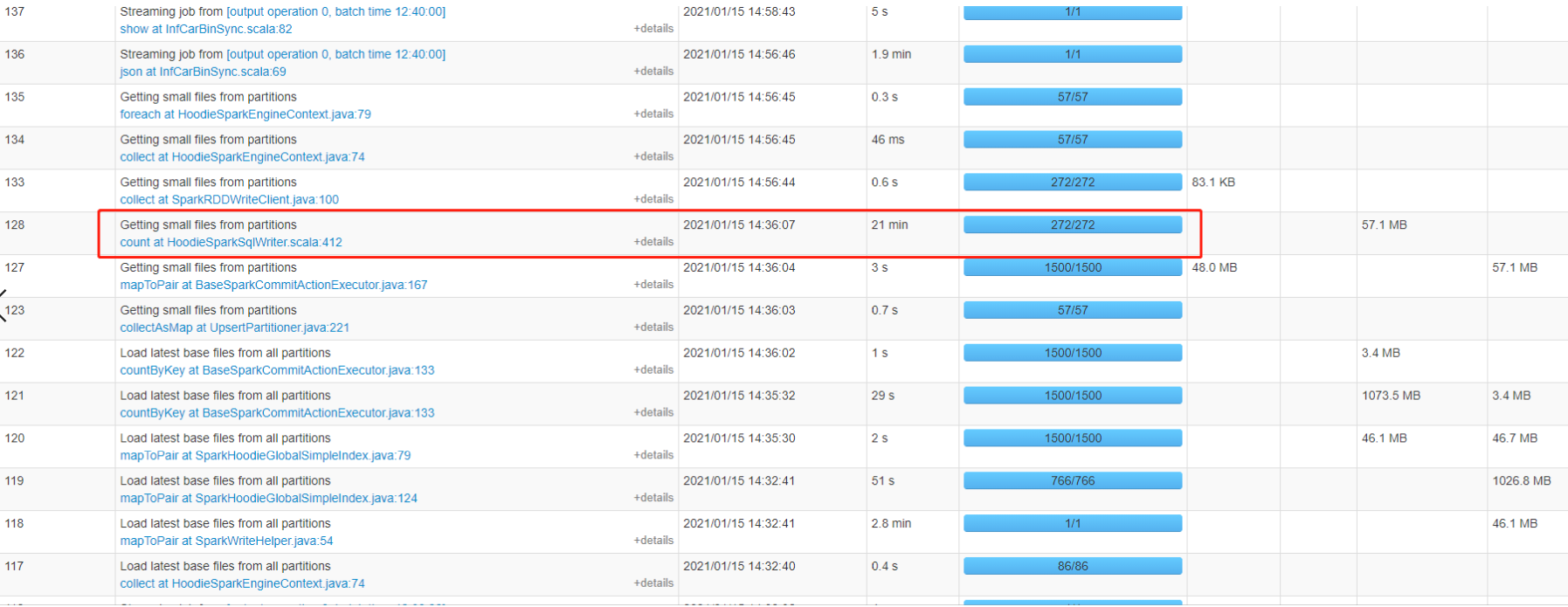

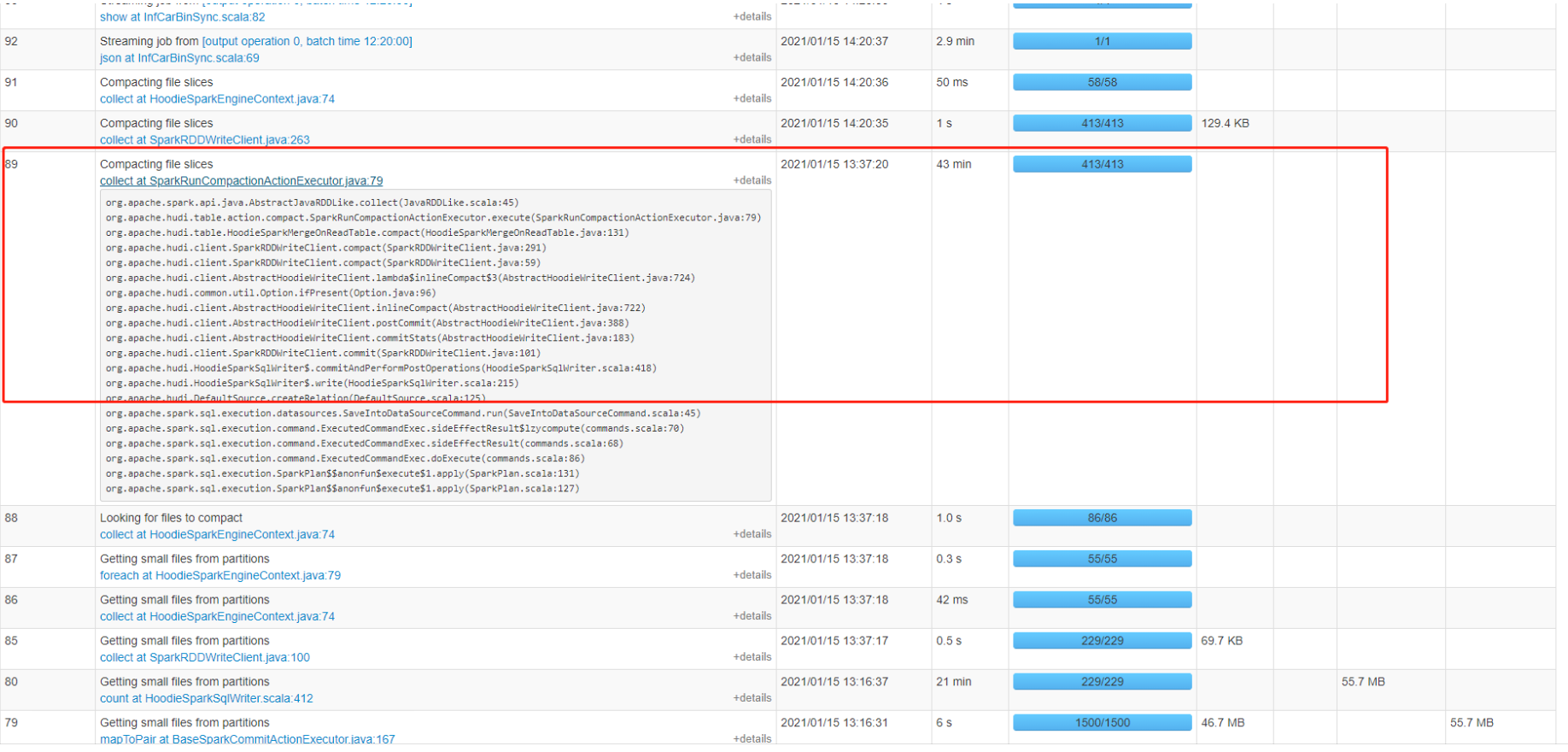

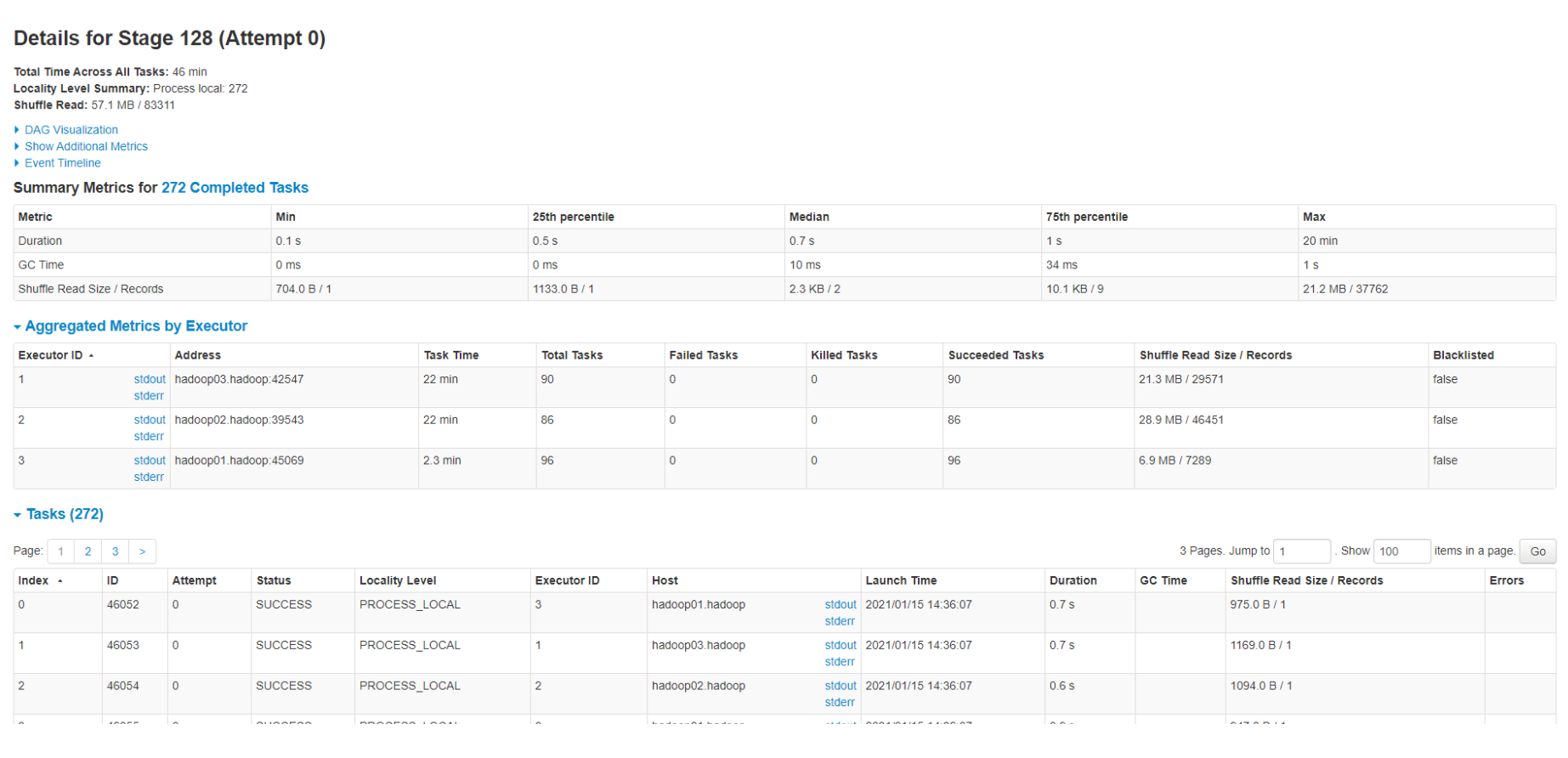

The stage names could be misleading. It is likely that the index lookup is what is running, not the search for small files. Regarding compaction, I see that there are 413 tasks. Do you have enough executors to run them in parallel? Each task compacts the delta files and creates a new parquet file.

[GitHub] [hudi] so-lazy commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

so-lazy commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-753710467

@nsivabalan Sir, can you help me out with this? I am very curious. Thanks so much.

[GitHub] [hudi] so-lazy closed issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

so-lazy closed issue #2338:

URL: https://github.com/apache/hudi/issues/2338

[GitHub] [hudi] nsivabalan closed issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

nsivabalan closed issue #2338:

URL: https://github.com/apache/hudi/issues/2338

[GitHub] [hudi] nsivabalan commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-750427679

If you wish to understand the different indexing schemes, please refer to this [blog](https://hudi.apache.org/blog/hudi-indexing-mechanisms/).

[GitHub] [hudi] nsivabalan commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-750427001

@so-lazy : Would you mind elaborating more on your use case? Did you choose GLOBAL_BLOOM intentionally?

And by this statement of yours, "i found much duplicate records,..", did you mean that compaction hasn't happened and hence you found duplicates, or that your dataset has duplicates in general?

Do you want to dedup for your use case in general?

[GitHub] [hudi] bvaradar commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-745713009

@nsivabalan : Can you take a look at this.

Thanks,

Balaji.V

[GitHub] [hudi] peng-xin commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

peng-xin commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-751994816

> I ran `select id,count(1) from table_ro group by id having count(1) > 1` and got this.

>

>

> Then I queried the data by id and got this:

>

>

> Then I went to check the parquet file:

> `val df = spark.read.parquet("/hudi/2020-12/c147c22c-7e86-4ae9-bc77-e2a2a52cc897-0_0-230-113454_20201215205055.parquet") df.createOrReplaceTempView("table") spark.sql("select _hoodie_commit_time,_hoodie_commit_seqno,_hoodie_record_key,_hoodie_partition_path,_hoodie_file_name,id from table WHERE id = 338409469").show`

>

When you use spark-sql, you can try `set spark.sql.hive.convertMetastoreParquet = false`;

I ran into the same problem the day before yesterday.

[GitHub] [hudi] so-lazy commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

so-lazy commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-760708042

@bvaradar Sir, I have now switched to the global simple index, but these stages take many minutes:

**Getting small files from partitions**

**Compacting file slices**

I have attached my options; have I misconfigured something?

By the way, I have searched a lot about GLOBAL_BLOOM and GLOBAL_SIMPLE and I am still not clear: what is the difference between them?

[GitHub] [hudi] so-lazy commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

so-lazy commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-745774960

> @nsivabalan : Can you take a look at this.

>

> Thanks,

> Balaji.V

Thanks for your quick reply

[GitHub] [hudi] nsivabalan commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-774512527

@so-lazy : Can you please respond to Balaji's comment when you get a chance.

A few more questions as we triage the issue.

When you loaded the data into Hudi for the first time, did you use the bulk-insert or the insert operation? Were the configs you used for that first load the same as the ones you gave in the description of this issue? If not, would you mind posting those configs as well?

[GitHub] [hudi] so-lazy commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

so-lazy commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-756485939

> @so-lazy : I think the hive table may not be a hudi table. can you show the output of the following hive command ?

>

> desc formatted table

>

> Also, can you please attach the listing of .hoodie folder ?

>

> Thanks,

> Balaji.V

@bvaradar Here are my table desc and .hoodie folder, thanks so much.

[desc table.txt](https://github.com/apache/hudi/files/5784674/desc.table.txt)

[hoodie.tar.gz](https://github.com/apache/hudi/files/5784676/hoodie.tar.gz)

[GitHub] [hudi] so-lazy commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

so-lazy commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-751363149

> @so-lazy : Would you mind elaborating more on your use case? Did you choose GLOBAL_BLOOM intentionally?

> And by this statement of yours, "i found much duplicate records,..", did you mean that compaction hasn't happened and hence you found duplicates, or that your dataset has duplicates in general?

> Do you want to dedup for your use case in general?

This is my mysql table

**id** | name | add_time

1 | "so-lazy" | 2020-12-26

Yes. For my use case, I consume binlog delta data from Kafka, and the data has a primary key "id". I set dt based on the column add_time, formatted as yyyy-MM-dd. What I want is this: if a row with id 1 is first upserted by Hudi into table partition 2020-12-26, then the next time id 1 arrives, it is updated.

But what I found is that when I use spark-sql to run "select * from table where id = 1" on the Hive table synced by Hudi, I see many identical records: all column values are the same, and they are in the same parquet file. Yet when I read that parquet file with Spark, I find only one row with id = 1 in it.

Sir, do you understand what I mean? Thanks so much.

[GitHub] [hudi] nsivabalan commented on issue #2338: [SUPPORT] MOR table found duplicate and process so slowly

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #2338:

URL: https://github.com/apache/hudi/issues/2338#issuecomment-812713376

Closing due to inactivity, but feel free to reopen or create a new ticket. We would be happy to assist you.
