You are viewing a plain text version of this content. The canonical link for it is here.

Posted to github@arrow.apache.org by GitBox <gi...@apache.org> on 2022/01/14 01:55:06 UTC

[GitHub] [arrow-datafusion] houqp opened a new pull request #1556: Arrow2 merge

houqp opened a new pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556

# Which issue does this PR close?

Close arrow2 milestone https://github.com/apache/arrow-datafusion/milestone/3

# Rationale for this change

Provide a complete arrow2 based datafusion implementation for full evaluation of the migration. This should give us a good feeling of the arrow2 API UX as well as a starting point for performance benchmarks within datafusion and downstream projects.

The goal is to merge the code into an official arrow2 branch in the short run, until we are comfortable doing the switch in master.

# What changes are included in this PR?

* Switched to arrow2

* Enabled miri test

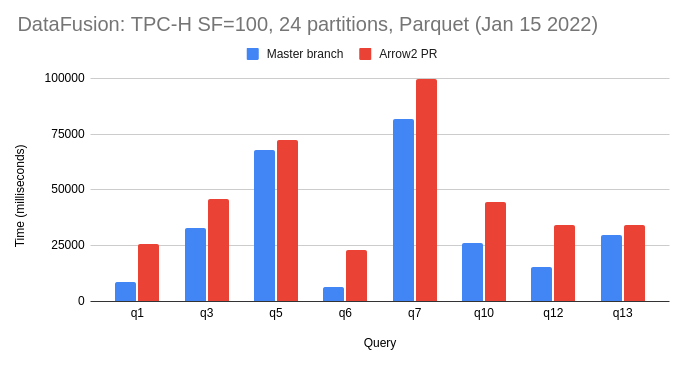

Here is a TPCH benchmark I ran on my Linux laptop:

On avg, we are getting around 5% speed up across the board, with q5 at 11% gain and q12 at only 1%. If this performance gain can also be replicated in downstream projects, then I think it would be a strong case for us to do the arrow2 swtich.

# Are there any user-facing changes?

Yes, downstream consumer of datafusion will need to switch to arrow2 as well.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on a change in pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on a change in pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#discussion_r785402462

##########

File path: datafusion/Cargo.toml

##########

@@ -39,25 +39,27 @@ path = "src/lib.rs"

[features]

default = ["crypto_expressions", "regex_expressions", "unicode_expressions"]

-simd = ["arrow/simd"]

+# FIXME: https://github.com/jorgecarleitao/arrow2/issues/580

+#simd = ["arrow/simd"]

+simd = []

crypto_expressions = ["md-5", "sha2", "blake2", "blake3"]

regex_expressions = ["regex"]

unicode_expressions = ["unicode-segmentation"]

-pyarrow = ["pyo3", "arrow/pyarrow"]

+# FIXME: add pyarrow support to arrow2 pyarrow = ["pyo3", "arrow/pyarrow"]

+pyarrow = ["pyo3"]

# Used for testing ONLY: causes all values to hash to the same value (test for collisions)

force_hash_collisions = []

# Used to enable the avro format

-avro = ["avro-rs", "num-traits"]

+avro = ["arrow/io_avro", "arrow/io_avro_async", "arrow/io_avro_compression", "num-traits", "avro-schema"]

[dependencies]

ahash = { version = "0.7", default-features = false }

hashbrown = { version = "0.11", features = ["raw"] }

-arrow = { version = "6.4.0", features = ["prettyprint"] }

-parquet = { version = "6.4.0", features = ["arrow"] }

+parquet = { package = "parquet2", version = "0.8", default_features = false, features = ["stream"] }

sqlparser = "0.13"

paste = "^1.0"

num_cpus = "1.13.0"

-chrono = { version = "0.4", default-features = false }

+chrono = { version = "0.4", default-features = false, features = ["clock"] }

Review comment:

It looks like datafusion is only depending on this to get the current system time in various places. I will file https://github.com/apache/arrow-datafusion/issues/1584 as a follow up

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp edited a comment on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp edited a comment on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1017175870

All integration and unit tests are passing now, the MIRI check is failing due to an upstream tokio issue I believe. I will file some follow up issues tomorrow to track the remaining work needed for us to make the final call on master merge.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1017175870

All tests are passing now, the MIRI check is failing due to an upstream tokio issue I believe. I will file some follow up issues tomorrow to track the remaining work needed for us to make the final call on master merge.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp merged pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp merged pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] xudong963 commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

xudong963 commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1017537586

Thanks @houqp , epic work !

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] ic4y commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

ic4y commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1012809108

I found that the peak memory usage of this branch increases by 80% compared to the master branch。

sql : select avg(user_id) from parquet_event_1 group by user_name limit 10

test dataset : total 450 million, 50 million users

| branch | peak memory |

| ---- | ---- |

| master(d7e465 and 35d65fc) | 10G |

| arrow2_merge | 6G |

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] andygrove edited a comment on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

andygrove edited a comment on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013730047

Metrics and CPU activity charts for query 1 from master & arrow2.

## Master

```

=== Physical plan with metrics ===

SortExec: [l_returnflag@0 ASC NULLS LAST,l_linestatus@1 ASC NULLS LAST], metrics=[output_rows=4, elapsed_compute=148.532µs]

CoalescePartitionsExec, metrics=[output_rows=4, elapsed_compute=26.889µs]

ProjectionExec: expr=[l_returnflag@0 as l_returnflag, l_linestatus@1 as l_linestatus, SUM(lineitem.l_quantity)@2 as sum_qty, SUM(lineitem.l_extendedprice)@3 as sum_base_price, SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount)@4 as sum_disc_price, SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount * Int64(1) + lineitem.l_tax)@5 as sum_charge, AVG(lineitem.l_quantity)@6 as avg_qty, AVG(lineitem.l_extendedprice)@7 as avg_price, AVG(lineitem.l_discount)@8 as avg_disc, COUNT(UInt8(1))@9 as count_order], metrics=[output_rows=4, elapsed_compute=162.216µs]

HashAggregateExec: mode=FinalPartitioned, gby=[l_returnflag@0 as l_returnflag, l_linestatus@1 as l_linestatus], aggr=[SUM(lineitem.l_quantity), SUM(lineitem.l_extendedprice), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount Multiply Int64(1) Plus lineitem.l_tax), AVG(lineitem.l_quantity), AVG(lineitem.l_extendedprice), AVG(lineitem.l_discount), COUNT(UInt8(1))], metrics=[output_rows=4, elapsed_compute=836.092µs]

CoalesceBatchesExec: target_batch_size=2048, metrics=[output_rows=96, elapsed_compute=2.467991ms]

RepartitionExec: partitioning=Hash([Column { name: "l_returnflag", index: 0 }, Column { name: "l_linestatus", index: 1 }], 24), metrics=[fetch_time{inputPartition=0}=121.646159259s, repart_time{inputPartition=0}=1.068665ms, send_time{inputPartition=0}=181.052µs]

HashAggregateExec: mode=Partial, gby=[l_returnflag@5 as l_returnflag, l_linestatus@6 as l_linestatus], aggr=[SUM(lineitem.l_quantity), SUM(lineitem.l_extendedprice), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount Multiply Int64(1) Plus lineitem.l_tax), AVG(lineitem.l_quantity), AVG(lineitem.l_extendedprice), AVG(lineitem.l_discount), COUNT(UInt8(1))], metrics=[output_rows=96, elapsed_compute=62.022452324s]

ProjectionExec: expr=[l_extendedprice@1 * CAST(1 AS Float64) - l_discount@2 as BinaryExpr-*BinaryExpr--Column-lineitem.l_discountLiteral1Column-lineitem.l_extendedprice, l_quantity@0 as l_quantity, l_extendedprice@1 as l_extendedprice, l_discount@2 as l_discount, l_tax@3 as l_tax, l_returnflag@4 as l_returnflag, l_linestatus@5 as l_linestatus], metrics=[output_rows=591599326, elapsed_compute=2.333647686s]

CoalesceBatchesExec: target_batch_size=2048, metrics=[output_rows=591599326, elapsed_compute=18.07630248s]

FilterExec: l_shipdate@6 <= 10471, metrics=[output_rows=591599326, elapsed_compute=14.438278954s]

ParquetExec: batch_size=4096, limit=None, partitions=[/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet, /mnt/bigdata/tp

ch/sf100-24part-parquet/lineitem/part-4.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet], metrics=[predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24p

art-parquet/lineitem/part-18.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/

lineitem/part-9.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/l

ineitem/part-15.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-4.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/pa

rt-0.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet}=0, num_predicate_creation_errors=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet}=0, predicate_evaluation_errors{filename=

/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-4.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet}=0]

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+----------------------+-------------+

| l_returnflag | l_linestatus | sum_qty | sum_base_price | sum_disc_price | sum_charge | avg_qty | avg_price | avg_disc | count_order |

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+----------------------+-------------+

| A | F | 3775127569 | 5660775782243.051 | 5377736101986.644 | 5592847119226.674 | 25.49937018008533 | 38236.11640655464 | 0.05000224292310849 | 148047875 |

| N | F | 98553062 | 147771098385.98004 | 140384965965.03497 | 145999793032.7758 | 25.501556956882876 | 38237.19938880452 | 0.04998528433805398 | 3864590 |

| N | O | 7436302680 | 11150725250531.352 | 10593194904399.148 | 11016931834528.229 | 25.500009351223113 | 38237.22761127162 | 0.049997917972634566 | 291619606 |

| R | F | 3775724821 | 5661602800938.198 | 5378513347920.04 | 5593662028982.476 | 25.500066311082758 | 38236.6972423322 | 0.05000130406955949 | 148067255 |

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+----------------------+-------------+

Query 1 iteration 0 took 8165.9 ms

Query 1 avg time: 8165.94 ms

```

## Arrow2 PR

```

=== Physical plan with metrics ===

SortExec: [l_returnflag@0 ASC NULLS LAST,l_linestatus@1 ASC NULLS LAST], metrics=[output_rows=4, elapsed_compute=90.895µs]

CoalescePartitionsExec, metrics=[output_rows=4, elapsed_compute=16.752µs]

ProjectionExec: expr=[l_returnflag@0 as l_returnflag, l_linestatus@1 as l_linestatus, SUM(lineitem.l_quantity)@2 as sum_qty, SUM(lineitem.l_extendedprice)@3 as sum_base_price, SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount)@4 as sum_disc_price, SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount * Int64(1) + lineitem.l_tax)@5 as sum_charge, AVG(lineitem.l_quantity)@6 as avg_qty, AVG(lineitem.l_extendedprice)@7 as avg_price, AVG(lineitem.l_discount)@8 as avg_disc, COUNT(UInt8(1))@9 as count_order], metrics=[output_rows=4, elapsed_compute=269.589µs]

HashAggregateExec: mode=FinalPartitioned, gby=[l_returnflag@0 as l_returnflag, l_linestatus@1 as l_linestatus], aggr=[SUM(lineitem.l_quantity), SUM(lineitem.l_extendedprice), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount Multiply Int64(1) Plus lineitem.l_tax), AVG(lineitem.l_quantity), AVG(lineitem.l_extendedprice), AVG(lineitem.l_discount), COUNT(UInt8(1))], metrics=[output_rows=4, elapsed_compute=966.04µs]

CoalesceBatchesExec: target_batch_size=2048, metrics=[output_rows=96, elapsed_compute=3.118624ms]

RepartitionExec: partitioning=Hash([Column { name: "l_returnflag", index: 0 }, Column { name: "l_linestatus", index: 1 }], 24), metrics=[send_time{inputPartition=0}=202.529µs, fetch_time{inputPartition=0}=167.702662304s, repart_time{inputPartition=0}=881.476µs]

HashAggregateExec: mode=Partial, gby=[l_returnflag@5 as l_returnflag, l_linestatus@6 as l_linestatus], aggr=[SUM(lineitem.l_quantity), SUM(lineitem.l_extendedprice), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount Multiply Int64(1) Plus lineitem.l_tax), AVG(lineitem.l_quantity), AVG(lineitem.l_extendedprice), AVG(lineitem.l_discount), COUNT(UInt8(1))], metrics=[output_rows=96, elapsed_compute=69.277706429s]

ProjectionExec: expr=[l_extendedprice@1 * CAST(1 AS Float64) - l_discount@2 as BinaryExpr-*BinaryExpr--Column-lineitem.l_discountLiteral1Column-lineitem.l_extendedprice, l_quantity@0 as l_quantity, l_extendedprice@1 as l_extendedprice, l_discount@2 as l_discount, l_tax@3 as l_tax, l_returnflag@4 as l_returnflag, l_linestatus@5 as l_linestatus], metrics=[output_rows=591599326, elapsed_compute=1.948225184s]

CoalesceBatchesExec: target_batch_size=2048, metrics=[output_rows=591599326, elapsed_compute=19.900026641s]

FilterExec: l_shipdate@6 <= 10471, metrics=[output_rows=591599326, elapsed_compute=17.25811968s]

ParquetExec: batch_size=4096, limit=None, partitions=[/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet, /mnt/bigdata/tp

ch/sf100-24part-parquet/lineitem/part-4.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet], metrics=[row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24p

art-parquet/lineitem/part-11.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet}=0, num_predicate_creation_errors=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-4.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet}=0, predicate_evaluation_errors{filenam

e=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-4.parquet}=0, predicate_evaluation_errors{filename=/m

nt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet}=0, predicate_

evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet}=0, predicate_evaluation_errors{

filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet}=0]

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+---------------------+-------------+

| l_returnflag | l_linestatus | sum_qty | sum_base_price | sum_disc_price | sum_charge | avg_qty | avg_price | avg_disc | count_order |

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+---------------------+-------------+

| A | F | 3775127569 | 5660775782243.05 | 5377736101986.642 | 5592847119226.676 | 25.49937018008533 | 38236.11640655463 | 0.05000224292310846 | 148047875 |

| N | F | 98553062 | 147771098385.98004 | 140384965965.0348 | 145999793032.77588 | 25.501556956882876 | 38237.19938880452 | 0.04998528433805397 | 3864590 |

| N | O | 7436302680 | 11150725250531.35 | 10593194904399.143 | 11016931834528.23 | 25.500009351223113 | 38237.22761127161 | 0.04999791797263452 | 291619606 |

| R | F | 3775724821 | 5661602800938.199 | 5378513347920.042 | 5593662028982.476 | 25.500066311082758 | 38236.6972423322 | 0.05000130406955945 | 148067255 |

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+---------------------+-------------+

Query 1 iteration 0 took 27109.6 ms

Query 1 avg time: 27109.65 ms

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on a change in pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on a change in pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#discussion_r785407092

##########

File path: .github/workflows/rust.yml

##########

@@ -116,6 +116,7 @@ jobs:

cargo test --no-default-features

cargo run --example csv_sql

cargo run --example parquet_sql

+ #nopass

Review comment:

not entirely sure, @Igosuki do you remember why you added these #nopass lines?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp edited a comment on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp edited a comment on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1015128595

update: all datafusion unit and integration tests are passing now, down to a single test failure in datafusion-cli related to json display format.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1015128595

all datafusion unit and integration tests are passing now, down to a single test failure in datafusion-cli related to json display format.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] andygrove commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

andygrove commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013730047

Metrics for query 1 from master & arrow2.

## Master

```

=== Physical plan with metrics ===

SortExec: [l_returnflag@0 ASC NULLS LAST,l_linestatus@1 ASC NULLS LAST], metrics=[output_rows=4, elapsed_compute=148.532µs]

CoalescePartitionsExec, metrics=[output_rows=4, elapsed_compute=26.889µs]

ProjectionExec: expr=[l_returnflag@0 as l_returnflag, l_linestatus@1 as l_linestatus, SUM(lineitem.l_quantity)@2 as sum_qty, SUM(lineitem.l_extendedprice)@3 as sum_base_price, SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount)@4 as sum_disc_price, SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount * Int64(1) + lineitem.l_tax)@5 as sum_charge, AVG(lineitem.l_quantity)@6 as avg_qty, AVG(lineitem.l_extendedprice)@7 as avg_price, AVG(lineitem.l_discount)@8 as avg_disc, COUNT(UInt8(1))@9 as count_order], metrics=[output_rows=4, elapsed_compute=162.216µs]

HashAggregateExec: mode=FinalPartitioned, gby=[l_returnflag@0 as l_returnflag, l_linestatus@1 as l_linestatus], aggr=[SUM(lineitem.l_quantity), SUM(lineitem.l_extendedprice), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount Multiply Int64(1) Plus lineitem.l_tax), AVG(lineitem.l_quantity), AVG(lineitem.l_extendedprice), AVG(lineitem.l_discount), COUNT(UInt8(1))], metrics=[output_rows=4, elapsed_compute=836.092µs]

CoalesceBatchesExec: target_batch_size=2048, metrics=[output_rows=96, elapsed_compute=2.467991ms]

RepartitionExec: partitioning=Hash([Column { name: "l_returnflag", index: 0 }, Column { name: "l_linestatus", index: 1 }], 24), metrics=[fetch_time{inputPartition=0}=121.646159259s, repart_time{inputPartition=0}=1.068665ms, send_time{inputPartition=0}=181.052µs]

HashAggregateExec: mode=Partial, gby=[l_returnflag@5 as l_returnflag, l_linestatus@6 as l_linestatus], aggr=[SUM(lineitem.l_quantity), SUM(lineitem.l_extendedprice), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount Multiply Int64(1) Plus lineitem.l_tax), AVG(lineitem.l_quantity), AVG(lineitem.l_extendedprice), AVG(lineitem.l_discount), COUNT(UInt8(1))], metrics=[output_rows=96, elapsed_compute=62.022452324s]

ProjectionExec: expr=[l_extendedprice@1 * CAST(1 AS Float64) - l_discount@2 as BinaryExpr-*BinaryExpr--Column-lineitem.l_discountLiteral1Column-lineitem.l_extendedprice, l_quantity@0 as l_quantity, l_extendedprice@1 as l_extendedprice, l_discount@2 as l_discount, l_tax@3 as l_tax, l_returnflag@4 as l_returnflag, l_linestatus@5 as l_linestatus], metrics=[output_rows=591599326, elapsed_compute=2.333647686s]

CoalesceBatchesExec: target_batch_size=2048, metrics=[output_rows=591599326, elapsed_compute=18.07630248s]

FilterExec: l_shipdate@6 <= 10471, metrics=[output_rows=591599326, elapsed_compute=14.438278954s]

ParquetExec: batch_size=4096, limit=None, partitions=[/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet, /mnt/bigdata/tp

ch/sf100-24part-parquet/lineitem/part-4.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet], metrics=[predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24p

art-parquet/lineitem/part-18.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/

lineitem/part-9.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/l

ineitem/part-15.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-4.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/pa

rt-0.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet}=0, num_predicate_creation_errors=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet}=0, predicate_evaluation_errors{filename=

/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-4.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet}=0]

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+----------------------+-------------+

| l_returnflag | l_linestatus | sum_qty | sum_base_price | sum_disc_price | sum_charge | avg_qty | avg_price | avg_disc | count_order |

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+----------------------+-------------+

| A | F | 3775127569 | 5660775782243.051 | 5377736101986.644 | 5592847119226.674 | 25.49937018008533 | 38236.11640655464 | 0.05000224292310849 | 148047875 |

| N | F | 98553062 | 147771098385.98004 | 140384965965.03497 | 145999793032.7758 | 25.501556956882876 | 38237.19938880452 | 0.04998528433805398 | 3864590 |

| N | O | 7436302680 | 11150725250531.352 | 10593194904399.148 | 11016931834528.229 | 25.500009351223113 | 38237.22761127162 | 0.049997917972634566 | 291619606 |

| R | F | 3775724821 | 5661602800938.198 | 5378513347920.04 | 5593662028982.476 | 25.500066311082758 | 38236.6972423322 | 0.05000130406955949 | 148067255 |

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+----------------------+-------------+

Query 1 iteration 0 took 8165.9 ms

Query 1 avg time: 8165.94 ms

```

## Arrow2 PR

```

=== Physical plan with metrics ===

SortExec: [l_returnflag@0 ASC NULLS LAST,l_linestatus@1 ASC NULLS LAST], metrics=[output_rows=4, elapsed_compute=90.895µs]

CoalescePartitionsExec, metrics=[output_rows=4, elapsed_compute=16.752µs]

ProjectionExec: expr=[l_returnflag@0 as l_returnflag, l_linestatus@1 as l_linestatus, SUM(lineitem.l_quantity)@2 as sum_qty, SUM(lineitem.l_extendedprice)@3 as sum_base_price, SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount)@4 as sum_disc_price, SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount * Int64(1) + lineitem.l_tax)@5 as sum_charge, AVG(lineitem.l_quantity)@6 as avg_qty, AVG(lineitem.l_extendedprice)@7 as avg_price, AVG(lineitem.l_discount)@8 as avg_disc, COUNT(UInt8(1))@9 as count_order], metrics=[output_rows=4, elapsed_compute=269.589µs]

HashAggregateExec: mode=FinalPartitioned, gby=[l_returnflag@0 as l_returnflag, l_linestatus@1 as l_linestatus], aggr=[SUM(lineitem.l_quantity), SUM(lineitem.l_extendedprice), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount Multiply Int64(1) Plus lineitem.l_tax), AVG(lineitem.l_quantity), AVG(lineitem.l_extendedprice), AVG(lineitem.l_discount), COUNT(UInt8(1))], metrics=[output_rows=4, elapsed_compute=966.04µs]

CoalesceBatchesExec: target_batch_size=2048, metrics=[output_rows=96, elapsed_compute=3.118624ms]

RepartitionExec: partitioning=Hash([Column { name: "l_returnflag", index: 0 }, Column { name: "l_linestatus", index: 1 }], 24), metrics=[send_time{inputPartition=0}=202.529µs, fetch_time{inputPartition=0}=167.702662304s, repart_time{inputPartition=0}=881.476µs]

HashAggregateExec: mode=Partial, gby=[l_returnflag@5 as l_returnflag, l_linestatus@6 as l_linestatus], aggr=[SUM(lineitem.l_quantity), SUM(lineitem.l_extendedprice), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount), SUM(lineitem.l_extendedprice * Int64(1) - lineitem.l_discount Multiply Int64(1) Plus lineitem.l_tax), AVG(lineitem.l_quantity), AVG(lineitem.l_extendedprice), AVG(lineitem.l_discount), COUNT(UInt8(1))], metrics=[output_rows=96, elapsed_compute=69.277706429s]

ProjectionExec: expr=[l_extendedprice@1 * CAST(1 AS Float64) - l_discount@2 as BinaryExpr-*BinaryExpr--Column-lineitem.l_discountLiteral1Column-lineitem.l_extendedprice, l_quantity@0 as l_quantity, l_extendedprice@1 as l_extendedprice, l_discount@2 as l_discount, l_tax@3 as l_tax, l_returnflag@4 as l_returnflag, l_linestatus@5 as l_linestatus], metrics=[output_rows=591599326, elapsed_compute=1.948225184s]

CoalesceBatchesExec: target_batch_size=2048, metrics=[output_rows=591599326, elapsed_compute=19.900026641s]

FilterExec: l_shipdate@6 <= 10471, metrics=[output_rows=591599326, elapsed_compute=17.25811968s]

ParquetExec: batch_size=4096, limit=None, partitions=[/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet, /mnt/bigdata/tp

ch/sf100-24part-parquet/lineitem/part-4.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet, /mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet], metrics=[row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24p

art-parquet/lineitem/part-11.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-0.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-3.parquet}=0, num_predicate_creation_errors=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-4.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-2.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-23.parquet}=0, predicate_evaluation_errors{filenam

e=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-1.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-4.parquet}=0, predicate_evaluation_errors{filename=/m

nt/bigdata/tpch/sf100-24part-parquet/lineitem/part-5.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-9.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-21.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-7.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-12.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-8.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-18.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet}=0, predicate_

evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-10.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-16.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-17.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-11.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-15.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-6.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-13.parquet}=0, predicate_evaluation_errors{

filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-20.parquet}=0, predicate_evaluation_errors{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-14.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-19.parquet}=0, row_groups_pruned{filename=/mnt/bigdata/tpch/sf100-24part-parquet/lineitem/part-22.parquet}=0]

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+---------------------+-------------+

| l_returnflag | l_linestatus | sum_qty | sum_base_price | sum_disc_price | sum_charge | avg_qty | avg_price | avg_disc | count_order |

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+---------------------+-------------+

| A | F | 3775127569 | 5660775782243.05 | 5377736101986.642 | 5592847119226.676 | 25.49937018008533 | 38236.11640655463 | 0.05000224292310846 | 148047875 |

| N | F | 98553062 | 147771098385.98004 | 140384965965.0348 | 145999793032.77588 | 25.501556956882876 | 38237.19938880452 | 0.04998528433805397 | 3864590 |

| N | O | 7436302680 | 11150725250531.35 | 10593194904399.143 | 11016931834528.23 | 25.500009351223113 | 38237.22761127161 | 0.04999791797263452 | 291619606 |

| R | F | 3775724821 | 5661602800938.199 | 5378513347920.042 | 5593662028982.476 | 25.500066311082758 | 38236.6972423322 | 0.05000130406955945 | 148067255 |

+--------------+--------------+------------+--------------------+--------------------+--------------------+--------------------+-------------------+---------------------+-------------+

Query 1 iteration 0 took 27109.6 ms

Query 1 avg time: 27109.65 ms

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

alamb commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013079492

Thank you @houqp and @Igosuki -- I'll try and take a look at this later today or tomorrow. I will also start the discussion of "what does this mean for arrow-rs", which I expect may take some time to come to consensus on today

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] ic4y edited a comment on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

ic4y edited a comment on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1012809108

I found that the peak memory usage of this branch increases by 80% compared to the master branch。

sql : select avg(user_id) from parquet_event_1 group by user_name limit 10

test dataset : total 450 million, 50 million users

| branch | peak memory |

| ---- | ---- |

| master(d7e465 and 35d65fc) | 6G |

| arrow2_merge | 10G |

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp edited a comment on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp edited a comment on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013835311

Thank you everyone for all the reviews and comments so far. @Igosuki and I have addressed most of them. Here are the two remaining todo items:

- [x] Get the parquet row group filter test to pass

- [x] Restore sql integration test migration. All those sql tests were migrated and passing previously, but those changes got lost when we merged the sql test refactoring from master.

I will keep working on this tomorrow. In the mean time, feel free to send PRs to my fork if you are interested in helping. After these two items are fixed, I will run another round of benchmark to double check the performance fix. It's quite interesting that I got the opposite performance test result initial even without that file buf fix :P I will dig into what's causing that as well.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] yjshen commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

yjshen commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1017190215

Wow! Milestone reached! Thanks for driving on this and making it happen @houqp 👍

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1012678951

Thanks to @Igosuki and @yjshen , we are now down to a single parquet row group pruning related test failure. All other 855 tests are passing without error.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

alamb commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013246630

@ic4y @tustvold mentioned to me in passing that one of the differences in memory usage might be related to parquet2 decoding entire pages at a time rather than reading them out in smaller batches. I am not sure if that matches your observations

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1017176758

Thank you @jorgecarleitao @yjshen and @Igosuki for your hard work on the migration thus far :)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on a change in pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

alamb commented on a change in pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#discussion_r785303506

##########

File path: .github/workflows/rust.yml

##########

@@ -116,6 +116,7 @@ jobs:

cargo test --no-default-features

cargo run --example csv_sql

cargo run --example parquet_sql

+ #nopass

Review comment:

What does `#nopass` mean?

##########

File path: datafusion/tests/mod.rs

##########

@@ -1,18 +0,0 @@

-// Licensed to the Apache Software Foundation (ASF) under one

Review comment:

By deleting this file (non obviously) prevents the sql integration tests from running

When I restored it

And then tried to run the sql integration tests:

```

cd /Users/alamb/Software/arrow-datafusion && CARGO_TARGET_DIR=/Users/alamb/Software/df-target cargo test -p datafusion --test mod

```

It doesn't compile.

```

cd /Users/alamb/Software/arrow-datafusion && CARGO_TARGET_DIR=/Users/alamb/Software/df-target cargo test -p datafusion --test mod

Compiling datafusion v6.0.0 (/Users/alamb/Software/arrow-datafusion/datafusion)

error[E0432]: unresolved import `arrow::util::display`

--> datafusion/tests/sql/mod.rs:23:11

|

23 | util::display::array_value_to_string,

| ^^^^^^^ could not find `display` in `util`

error[E0433]: failed to resolve: could not find `pretty` in `util`

--> datafusion/tests/sql/explain_analyze.rs:45:34

|

45 | let formatted = arrow::util::pretty::pretty_format_batches(&results).unwrap();

| ^^^^^^ could not find `pretty` in `util`

error[E0433]: failed to resolve: could not find `pretty` in `util`

--> datafusion/tests/sql/explain_analyze.rs:551:31

|

551 | let actual = arrow::util::pretty::pretty_format_batches(&actual).unwrap();

| ^^^^^^ could not find `pretty` in `util`

error[E0433]: failed to resolve: could not find `pretty` in `util`

--> datafusion/tests/sql/explain_analyze.rs:557:31

|

557 | let actual = arrow::util::pretty::pretty_format_batches(&actual).unwrap();

| ^^^^^^ could not find `pretty` in `util`

error[E0433]: failed to resolve: could not find `pretty` in `util`

--> datafusion/tests/sql/explain_analyze.rs:763:34

|

763 | let formatted = arrow::util::pretty::pretty_format_batches(&actual).unwrap();

| ^^^^^^ could not find `pretty` in `util`

error[E0433]: failed to resolve: could not find `pretty` in `util`

--> datafusion/tests/sql/explain_analyze.rs:783:34

|

783 | let formatted = arrow::util::pretty::pretty_format_batches(&actual).unwrap();

| ^^^^^^ could not find `pretty` in `util`

error[E0433]: failed to resolve: use of undeclared type `StringArray`

--> datafusion/tests/sql/functions.rs:89:22

|

89 | Arc::new(StringArray::from(vec!["", "a", "aa", "aaa"])),

| ^^^^^^^^^^^ use of undeclared type `StringArray`

....

```

This naming is very confusing -- I'll open a PR / issue to fix it shortly

##########

File path: .github/workflows/rust.yml

##########

@@ -318,8 +320,7 @@ jobs:

run: |

cargo miri setup

cargo clean

- # Ignore MIRI errors until we can get a clean run

- cargo miri test || true

+ cargo miri test

Review comment:

👍

##########

File path: ballista/rust/core/Cargo.toml

##########

@@ -35,23 +35,21 @@ async-trait = "0.1.36"

futures = "0.3"

hashbrown = "0.11"

log = "0.4"

-prost = "0.8"

+prost = "0.9"

serde = {version = "1", features = ["derive"]}

sqlparser = "0.13"

tokio = "1.0"

-tonic = "0.5"

+tonic = "0.6"

uuid = { version = "0.8", features = ["v4"] }

chrono = { version = "0.4", default-features = false }

-# workaround for https://github.com/apache/arrow-datafusion/issues/1498

-# should be able to remove when we update arrow-flight

-quote = "=1.0.10"

-arrow-flight = { version = "6.4.0" }

+arrow-format = { version = "0.3", features = ["flight-data", "flight-service"] }

Review comment:

https://crates.io/crates/arrow-format for anyone else following along

Perhaps that is something else we could consider putting into the official apache repo over time (to reduce maintenance costs / allow others to help do so)

##########

File path: datafusion/src/physical_plan/expressions/binary.rs

##########

@@ -981,249 +859,125 @@ mod tests {

// 4. verify that the resulting expression is of type C

// 5. verify that the results of evaluation are $VEC

macro_rules! test_coercion {

- ($A_ARRAY:ident, $A_TYPE:expr, $A_VEC:expr, $B_ARRAY:ident, $B_TYPE:expr, $B_VEC:expr, $OP:expr, $C_ARRAY:ident, $C_TYPE:expr, $VEC:expr) => {{

+ ($A_ARRAY:ident, $B_ARRAY:ident, $OP:expr, $C_ARRAY:ident) => {{

let schema = Schema::new(vec![

- Field::new("a", $A_TYPE, false),

- Field::new("b", $B_TYPE, false),

+ Field::new("a", $A_ARRAY.data_type().clone(), false),

+ Field::new("b", $B_ARRAY.data_type().clone(), false),

]);

- let a = $A_ARRAY::from($A_VEC);

- let b = $B_ARRAY::from($B_VEC);

-

// verify that we can construct the expression

let expression =

binary(col("a", &schema)?, $OP, col("b", &schema)?, &schema)?;

let batch = RecordBatch::try_new(

Arc::new(schema.clone()),

- vec![Arc::new(a), Arc::new(b)],

+ vec![Arc::new($A_ARRAY), Arc::new($B_ARRAY)],

)?;

// verify that the expression's type is correct

- assert_eq!(expression.data_type(&schema)?, $C_TYPE);

+ assert_eq!(&expression.data_type(&schema)?, $C_ARRAY.data_type());

// compute

let result = expression.evaluate(&batch)?.into_array(batch.num_rows());

// verify that the array's data_type is correct

Review comment:

```suggestion

// verify that the array is equal

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on a change in pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on a change in pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#discussion_r785398875

##########

File path: datafusion/Cargo.toml

##########

@@ -74,14 +76,24 @@ regex = { version = "^1.4.3", optional = true }

lazy_static = { version = "^1.4.0" }

smallvec = { version = "1.6", features = ["union"] }

rand = "0.8"

-avro-rs = { version = "0.13", features = ["snappy"], optional = true }

num-traits = { version = "0.2", optional = true }

pyo3 = { version = "0.14", optional = true }

+avro-schema = { version = "0.2", optional = true }

Review comment:

yes, looks like this is required in order to create an arrow2 avro reader.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] jorgecarleitao commented on a change in pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

jorgecarleitao commented on a change in pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#discussion_r785394934

##########

File path: ballista/rust/core/Cargo.toml

##########

@@ -35,23 +35,21 @@ async-trait = "0.1.36"

futures = "0.3"

hashbrown = "0.11"

log = "0.4"

-prost = "0.8"

+prost = "0.9"

serde = {version = "1", features = ["derive"]}

sqlparser = "0.13"

tokio = "1.0"

-tonic = "0.5"

+tonic = "0.6"

uuid = { version = "0.8", features = ["v4"] }

chrono = { version = "0.4", default-features = false }

-# workaround for https://github.com/apache/arrow-datafusion/issues/1498

-# should be able to remove when we update arrow-flight

-quote = "=1.0.10"

-arrow-flight = { version = "6.4.0" }

+arrow-format = { version = "0.3", features = ["flight-data", "flight-service"] }

Review comment:

I agree.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] Igosuki commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

Igosuki commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013898492

Yes sorry about that, these were simply comments to Indicate that these

particular feature tests were not passing.

Le dim. 16 janv. 2022 à 09:52, QP Hou ***@***.***> a écrit :

> Thank you everyone for all the reviews and comments so far. @Igosuki

> <https://github.com/Igosuki> and I have addressed most of them. Here are

> the two remaining todo items:

>

> - Get the parquet row group filter test to pass

> - Restore sql integration test migration. All those sql tests were

> migrated and passing previously, but those changes got lost when we merged

> the sql test refactoring from master.

>

> I will keep working on this tomorrow. In the mean time, feel free to send

> PRs to my fork if you are interested in helping. After these two items are

> fixed, I will run another round of benchmark to double check the

> performance fix. It's quite interesting that I got the opposite performance

> test result initial even without that file buf fix :P I will dig into

> what's causing that as well.

>

> —

> Reply to this email directly, view it on GitHub

> <https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013835311>,

> or unsubscribe

> <https://github.com/notifications/unsubscribe-auth/AADDFBSPYHZOIG4O2XOGWZDUWKBLXANCNFSM5L5P5AVQ>

> .

> Triage notifications on the go with GitHub Mobile for iOS

> <https://apps.apple.com/app/apple-store/id1477376905?ct=notification-email&mt=8&pt=524675>

> or Android

> <https://play.google.com/store/apps/details?id=com.github.android&referrer=utm_campaign%3Dnotification-email%26utm_medium%3Demail%26utm_source%3Dgithub>.

>

> You are receiving this because you were mentioned.Message ID:

> ***@***.***>

>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1019727038

Quick update on this, I have cleaned up the issues in the arrow2 milestone: https://github.com/apache/arrow-datafusion/milestone/3. The main remaining items are:

* https://github.com/apache/arrow-datafusion/issues/1652

* https://github.com/apache/arrow-datafusion/issues/1656

* https://github.com/apache/arrow-datafusion/issues/1657

I will keep work on issues in the arrow2 milestone whenever I have capacity. If anyone of you are interested in helping, please feel free to comment on those issues or send PRs to the official arrow2 branch.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] alamb commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

alamb commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013674164

Discussion of arrow-rs / arrow2 is here in case anyone missed it: https://github.com/apache/arrow-rs/issues/1176

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] Igosuki commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

Igosuki commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013010833

Just use massif or heaptrack

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] Igosuki commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

Igosuki commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1013080406

Ok adding BufReader gave 50% perf on parquet. https://github.com/houqp/arrow-datafusion/pull/19/commits/d8a184969bd2a88292158cbc704e0cb959b28ea6

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] houqp commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

houqp commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1014163126

The parquet row group test failure turned out to be a red herring. The asserted expected result is actually not correct. I have filed a follow up issue at https://github.com/apache/arrow-datafusion/issues/1591. I changed the expected result in this branch to fix the test failure for now. What the predicate pruning logic returns in this branch is more correct than what we have in master, but still wrong. The proper fix is out of scope of arrow2 migration and tracked in #1591.

We are now passing all 856 unit tests. 2 more integration tests to fix, which are caused by difference in how arrow2 formats binary array.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] yjshen commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

yjshen commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1014180045

> The parquet row group test failure turned out to be a red herring. The asserted expected result is actually not correct. I have filed a follow up issue at #1591.

I shared the same observation in https://github.com/houqp/arrow-datafusion/pull/16, but ignored the test at the time.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow-datafusion] yjshen commented on pull request #1556: Officially maintained Arrow2 branch

Posted by GitBox <gi...@apache.org>.

yjshen commented on pull request #1556:

URL: https://github.com/apache/arrow-datafusion/pull/1556#issuecomment-1012813388

@ic4y I'm confused with the numbers and "increases by 80% compared to the master branch"

--

This is an automated message from the Apache Git Service.