
Posted to commits@pulsar.apache.org by GitBox <gi...@apache.org> on 2021/05/13 06:33:42 UTC

[GitHub] [pulsar] devinbost opened a new issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

devinbost opened a new issue #6054:

URL: https://github.com/apache/pulsar/issues/6054

**Describe the bug**

Topics randomly freeze, causing catastrophic topic outages on a weekly (or more frequent) basis. This has been an issue for as long as my team has used Pulsar, and it has been communicated to a number of people on the Pulsar PMC.

(I thought an issue was already created for this bug, but I couldn't find it anywhere.)

**To Reproduce**

We have not figured out how to reproduce the issue. It's random (it seems to be non-deterministic), and the broker logs don't seem to contain any clues.

**Expected behavior**

Topics should never randomly stop working in a way that can only be resolved by restarting the affected broker.

**Steps to Diagnose and Temporarily Resolve**

**Step 2**: Check the rate out on the topic (click on the topic in the dashboard, or run `pulsar-admin topics stats` on the topic and look at `msgRateOut`).

If the rate out is 0, this is likely a frozen topic. To verify:

In the Pulsar dashboard, click on the broker that the topic lives on. If multiple topics on that broker have a rate out of 0, proceed to the next step. If not, it could be a different issue that needs further investigation.

**Step 3**: Stop the broker on the server that the topic lives on (`pulsar-broker stop`).

**Step 4**: Wait for the backlog to be consumed and for all the functions to be rescheduled (typically about 5-10 minutes).
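The triage in Steps 2 and 3 boils down to one rule: several topics on the same broker with a rate out of 0. Below is a minimal, hypothetical Java sketch of that rule (`FrozenTopicTriage`, `zeroRateTopics` and `looksLikeFrozenBroker` are invented names). It assumes you have already collected `msgRateOut` for each topic served by one broker (for example from the dashboard or the topic stats) into a map; it only flags a candidate broker and does not prove a freeze.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical helper mirroring the manual triage in Steps 2-3.
// Input: msgRateOut per topic for the topics served by ONE broker.
class FrozenTopicTriage {

    // Topics whose outbound message rate is exactly zero, sorted by name.
    static List<String> zeroRateTopics(Map<String, Double> msgRateOutByTopic) {
        return msgRateOutByTopic.entrySet().stream()
                .filter(e -> e.getValue() == 0.0)
                .map(Map.Entry::getKey)
                .sorted()
                .collect(Collectors.toList());
    }

    // "Multiple topics with a rate out of 0" on the same broker -> candidate for Step 3.
    static boolean looksLikeFrozenBroker(Map<String, Double> msgRateOutByTopic) {
        return zeroRateTopics(msgRateOutByTopic).size() > 1;
    }

    public static void main(String[] args) {
        // Example topic names and rates, for illustration only.
        Map<String, Double> stats = new TreeMap<>();
        stats.put("persistent://tenant/ns/orders", 0.0);
        stats.put("persistent://tenant/ns/payments", 0.0);
        stats.put("persistent://tenant/ns/audit", 152.3);
        System.out.println(zeroRateTopics(stats));       // [persistent://tenant/ns/orders, persistent://tenant/ns/payments]
        System.out.println(looksLikeFrozenBroker(stats)); // true
    }
}
```

A single zero-rate topic is not flagged, matching the advice above that one idle topic may be a different issue.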

**Environment:**

```
Docker on bare metal running apachepulsar/pulsar-all:2.4.0 on CentOS.
Brokers are the function workers.
```

This has been an issue with previous versions of Pulsar as well.

**Additional context**

The problem was MUCH worse with Pulsar 2.4.2, so our team had to roll back to 2.4.0 (which still has the problem, but less frequently).

This is preventing the team from progressing in the use of Pulsar, and it's causing SLA problems with those who use our service.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [pulsar] devinbost edited a comment on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost edited a comment on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-839495126

I traced the call chain through which acks should be sent, and I filled in some additional steps:

When `ProducerImpl` sends the messages, it builds `newSend` command instances,

which get picked up by `ServerCnx.handleSend(..)`,

which goes to `Producer.publishMessage(..)`,

then `PersistentTopic.publishMessage(..)`,

which calls `asyncAddEntry(headersAndPayload, publishContext)`,

which calls `[PersistentTopic].ledger.asyncAddEntry(headersAndPayload, (int) publishContext.getNumberOfMessages(), this, publishContext)`,

which creates the `OpAddEntry` and calls `internalAsyncAddEntry(addOperation)` on a different thread,

which adds `OpAddEntry` to `[ManagedLedgerImpl].pendingAddEntries`.

From somewhere (it's not clear to me exactly where yet) we call `OpAddEntry.safeRun()`,

which polls `pendingAddEntries`,

gets the callback on `OpAddEntry` (which is the `PersistentTopic` instance),

and calls `[PersistentTopic].addComplete(lastEntry, data.asReadOnly(), ctx)`,

which calls `publishContext.completed(..)` on `Producer.MessagePublishContext`,

which calls `Producer.ServerCnx.execute(this)` on the `MessagePublishContext`,

which calls `MessagePublishContext.run()`,

which triggers `Producer.ServerCnx.getCommandSender().sendSendReceiptResponse(..)` [SEND_RECEIPT],

which writes a `newSendReceiptCommand` to the channel.

So, I added a lot of logging, and to my great surprise, I'm getting an NPE after adding these debug lines to `OpAddEntry.createOpAddEntry(..)`:

```java
private static OpAddEntry createOpAddEntry(ManagedLedgerImpl ml, ByteBuf data, AddEntryCallback callback, Object ctx) {
    log.debug("Running OpAddEntry.createOpAddEntry(..)"); // added debug line
    OpAddEntry op = RECYCLER.get();
    // Added debug line: logs the instance before any of its fields are assigned below.
    // Note: op.toString() is evaluated even when DEBUG logging is disabled.
    log.debug("1. In OpAddEntry.createOpAddEntry, OpAddEntry is {}", op != null ? op.toString() : null);
    op.ml = ml;
    op.ledger = null;
    op.data = data.retain();
    op.dataLength = data.readableBytes();
    op.callback = callback;
    op.ctx = ctx;
    op.addOpCount = ManagedLedgerImpl.ADD_OP_COUNT_UPDATER.incrementAndGet(ml);
    op.closeWhenDone = false;
    op.entryId = -1;
    op.startTime = System.nanoTime();
    op.state = State.OPEN;
    ml.mbean.addAddEntrySample(op.dataLength);
    // Added debug line: logs the same instance after initialization.
    log.debug("2. In OpAddEntry.createOpAddEntry, OpAddEntry is {}", op != null ? op.toString() : null);
    return op;
}
```

Here's the stack trace:

```
2021-05-12T06:14:19,569 [pulsar-io-28-32] DEBUG org.apache.bookkeeper.mledger.impl.OpAddEntry - Running OpAddEntry.createOpAddEntry(..)
2021-05-12T06:14:19,569 [pulsar-io-28-32] WARN org.apache.pulsar.broker.service.ServerCnx - [/10.20.20.160:37696] Got exception java.lang.NullPointerException
	at org.apache.bookkeeper.mledger.impl.OpAddEntry.toString(OpAddEntry.java:354)
	at org.apache.bookkeeper.mledger.impl.OpAddEntry.createOpAddEntry(OpAddEntry.java:100)
	at org.apache.bookkeeper.mledger.impl.OpAddEntry.create(OpAddEntry.java:81)
	at org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl.asyncAddEntry(ManagedLedgerImpl.java:689)
	at org.apache.pulsar.broker.service.persistent.PersistentTopic.asyncAddEntry(PersistentTopic.java:428)
	at org.apache.pulsar.broker.service.persistent.PersistentTopic.publishMessage(PersistentTopic.java:404)
	at org.apache.pulsar.broker.service.Producer.publishMessageToTopic(Producer.java:224)
	at org.apache.pulsar.broker.service.Producer.publishMessage(Producer.java:161)
	at org.apache.pulsar.broker.service.ServerCnx.handleSend(ServerCnx.java:1372)
	at org.apache.pulsar.common.protocol.PulsarDecoder.channelRead(PulsarDecoder.java:207)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
	at io.netty.handler.flow.FlowControlHandler.dequeue(FlowControlHandler.java:200)
	at io.netty.handler.flow.FlowControlHandler.channelRead(FlowControlHandler.java:162)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
	at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:324)
	at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:311)
	at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:432)
	at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
	at io.netty.handler.ssl.SslHandler.unwrap(SslHandler.java:1376)
	at io.netty.handler.ssl.SslHandler.decodeNonJdkCompatible(SslHandler.java:1265)
	at io.netty.handler.ssl.SslHandler.decode(SslHandler.java:1302)
	at io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:508)
	at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:447)
	at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
	at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)
	at io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)
	at io.netty.channel.epoll.AbstractEpollChannel$AbstractEpollUnsafe$1.run(AbstractEpollChannel.java:425)
	at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:164)
	at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:472)
	at io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:384)
	at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)
	at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)
	at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)
	at java.lang.Thread.run(Thread.java:748)
```

The only place we could be throwing an NPE is here:

`log.debug("1. In OpAddEntry.createOpAddEntry, OpAddEntry is {}", op != null ? op.toString() : null);`

So does `op` somehow become null right after it passes the null check?
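The top frame of the stack trace is `OpAddEntry.toString(OpAddEntry.java:354)`, so the exception is raised inside `toString()`, not by the ternary itself. The `op != null` guard only decides whether `toString()` is called; it cannot prevent a null dereference inside that method (and the thread later attributes this to a `toString()` bug). The self-contained sketch below reproduces that pattern with hypothetical classes (`PooledOp`, `Owner`, `unsafeToString` and `safeToString` are invented; this is not the real `OpAddEntry` code): a non-null object whose field is still unset passes the guard and then throws.

```java
// Hypothetical stand-ins; NOT the real OpAddEntry / ManagedLedgerImpl code.
class Owner {
    private final String name;
    Owner(String name) { this.name = name; }
    String getName() { return name; }
}

class PooledOp {
    Owner owner; // stays null until the op is initialized after being taken from a pool

    // Unsafe: assumes owner was already set, so it throws NPE on an uninitialized op.
    String unsafeToString() {
        return "PooledOp{owner=" + owner.getName() + "}";
    }

    // Safe: reads the field once and null-checks it. The parentheses matter:
    // without them, "..." + o != null would compare the concatenated String to null.
    String safeToString() {
        Owner o = this.owner;
        return "PooledOp{owner=" + (o != null ? o.getName() : "null") + "}";
    }

    public static void main(String[] args) {
        PooledOp op = new PooledOp(); // non-null object, fields not yet assigned
        try {
            // The null check passes; the NPE comes from inside the method it guards.
            String s = op != null ? op.unsafeToString() : null;
            System.out.println(s);
        } catch (NullPointerException e) {
            System.out.println("NPE thrown inside toString, op was non-null");
        }
        System.out.println(op.safeToString()); // PooledOp{owner=null}
    }
}
```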


[GitHub] [pulsar] hpvd commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

hpvd commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-741618223

@kemburi which version are you using?


[GitHub] [pulsar] lhotari commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

lhotari commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-797031711

@devinbost Would you be able to test with a 2.8.0-SNAPSHOT version? #9787 might fix some of the lockup issues.


[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-817014223

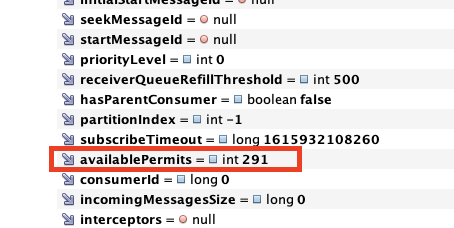

The negative permits value matches between the dispatcher and the broker Consumer for the corresponding topic:


[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-839540327

That `toString()` bug is addressed by this PR: https://github.com/apache/pulsar/pull/10548


[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-840891270

@lhotari I've attached thread dumps from two of the brokers that were exhibiting symptoms.

[thread_dump_broker08.log](https://github.com/apache/pulsar/files/6475517/thread_dump_broker08.log)

[thread_dump_broker10.log](https://github.com/apache/pulsar/files/6475518/thread_dump_broker10.log)


[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-844626492

@rdhabalia This happened after I applied the default broker.conf settings (reverting our non-default ManagedLedger configs and a few other settings) and restarted the brokers.


[GitHub] [pulsar] rdhabalia closed issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

rdhabalia closed issue #6054:

URL: https://github.com/apache/pulsar/issues/6054


[GitHub] [pulsar] devinbost edited a comment on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost edited a comment on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-840894974

I noticed that in almost every case where the broker receives a SEND command, it produces a SEND_RECEIPT command:

```
$ cat messages_broker10.log | grep 'Received cmd SEND' | wc -l
20806
$ cat messages_broker10.log | grep 'PulsarCommandSenderImpl.sendSendReceiptResponse' | wc -l
20806
$ cat messages_broker8.log | grep 'Received cmd SEND' | wc -l
44654
$ cat messages_broker8.log | grep 'PulsarCommandSenderImpl.sendSendReceiptResponse' | wc -l
44651
```

I expected to see a gap between the two counts, but unless some kind of logical deadlock stops the broker from emitting logs between those commands, the problem seems to be either before the SEND command or after the SEND_RECEIPT command.
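For repeated checks, the two `grep | wc -l` counts can be folded into one pass that also reports the gap. This is a hypothetical sketch (`ReceiptGap` and `count` are invented names) using the same two substrings as the commands above; like `grep`, a line containing a substring counts once for that pattern. Equal totals only mean the counts agree, not that each SEND got its own receipt. With the numbers above, broker10 has a gap of 0 and broker8 a gap of 44654 - 44651 = 3.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.stream.Stream;

// Hypothetical one-pass equivalent of the two `grep ... | wc -l` commands above.
class ReceiptGap {
    static final String SEND = "Received cmd SEND";
    static final String RECEIPT = "PulsarCommandSenderImpl.sendSendReceiptResponse";

    // Returns {sendCount, receiptCount}; each line counts at most once per pattern.
    static long[] count(Stream<String> lines) {
        long[] counts = new long[2];
        lines.forEach(line -> {
            if (line.contains(SEND)) counts[0]++;
            if (line.contains(RECEIPT)) counts[1]++;
        });
        return counts;
    }

    public static void main(String[] args) throws IOException {
        // The default file name is only an example; pass the real log path as the first argument.
        Path log = Paths.get(args.length > 0 ? args[0] : "messages_broker8.log");
        // ISO-8859-1 maps every byte to a char, so stray non-UTF-8 bytes cannot break decoding.
        try (Stream<String> lines = Files.lines(log, StandardCharsets.ISO_8859_1)) {
            long[] c = count(lines);
            System.out.printf("SEND=%d SEND_RECEIPT=%d gap=%d%n", c[0], c[1], c[0] - c[1]);
        }
    }
}
```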

With that said, I noticed that the `lastConfirmedEntry` isn't progressing on one of the threads after the freeze occurred:

```
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10636 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10672 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10696 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10747 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10775 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10783 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10815 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10840 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10886 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10915 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10916 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10917 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10918 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10919 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10920 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10944 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10945 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10948 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10952 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10954 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10957 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10959 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10963 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10974 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10977 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10982 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:10984 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11029 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11036 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11039 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11051 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11053 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11065 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11072 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11077 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11086 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11179 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11190 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11199 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11239 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11252 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11258 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11264 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11358 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11359 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11385 -- last: 3215336:11436
DEBUG org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl - IsValid position: 3215336:11437 -- last: 3215336:11436
```
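The last line of that log is the interesting one: position `3215336:11437` is one entry past `last: 3215336:11436`. Positions are `ledgerId:entryId` pairs, so a simplified version of that check compares the ledger id first and then the entry id. The sketch below is hypothetical and deliberately simpler than `ManagedLedgerImpl`'s own validity check (which also has to consider which ledgers exist); `Pos`, `parse` and `isAtOrBefore` are invented names.

```java
// Hypothetical, simplified position type: ledgerId:entryId, ordered by ledger, then entry.
final class Pos implements Comparable<Pos> {
    final long ledgerId;
    final long entryId;

    Pos(long ledgerId, long entryId) {
        this.ledgerId = ledgerId;
        this.entryId = entryId;
    }

    // Parses the "ledgerId:entryId" form used in the log lines.
    static Pos parse(String s) {
        String[] parts = s.split(":");
        return new Pos(Long.parseLong(parts[0]), Long.parseLong(parts[1]));
    }

    @Override
    public int compareTo(Pos other) {
        int byLedger = Long.compare(ledgerId, other.ledgerId);
        return byLedger != 0 ? byLedger : Long.compare(entryId, other.entryId);
    }

    // Simplified rule: a position is readable only if it is not past the last confirmed entry.
    static boolean isAtOrBefore(Pos position, Pos lastConfirmed) {
        return position.compareTo(lastConfirmed) <= 0;
    }

    public static void main(String[] args) {
        Pos last = Pos.parse("3215336:11436");
        System.out.println(isAtOrBefore(Pos.parse("3215336:11385"), last)); // true
        System.out.println(isAtOrBefore(Pos.parse("3215336:11437"), last)); // false
    }
}
```

Under this simplified rule, while `last` stays at `3215336:11436`, every position from `3215336:11437` onward fails the check until the last confirmed entry advances.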

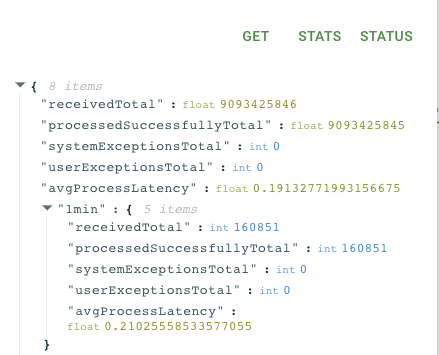

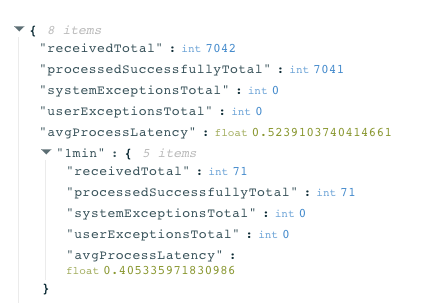

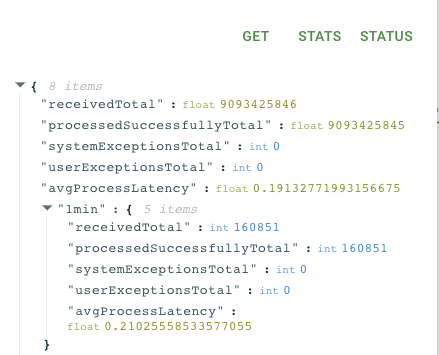

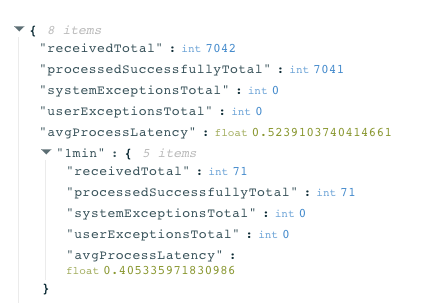

When I say "freeze," that may be misleading, because it's not a complete lockup. Messages are still being produced, but so slowly that it's almost equivalent to being frozen. For comparison, here's one of the functions when it's healthy:

Here's that same function when it's "frozen":

So, over 1 minute, it's processing 71 messages instead of 160,851 messages.
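For scale, the two per-minute counts above work out as follows (plain arithmetic on the reported numbers):

```latex
\[
\frac{160{,}851}{71} \approx 2266
\qquad
\frac{71}{160{,}851} \approx 0.044\,\%
\]
\[
\frac{160{,}851\ \text{messages}}{60\ \text{s}} \approx 2681\ \text{msg/s}
\quad\text{vs.}\quad
\frac{71\ \text{messages}}{60\ \text{s}} \approx 1.2\ \text{msg/s}
\]
```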

I also noticed this warning in the logs after it had been stuck for a while, but I'm not sure if it's related:

```
May 12 21:51:16 server10 3ed25e05b45a: 2021-05-13T03:51:16,634 [main-SendThread(server10.example.com:2181)] WARN org.apache.zookeeper.ClientCnxn - Session 0x1028ed903dd038f for sever server10.example.com/10.20.69.29:2181, Closing socket connection. Attempting reconnect except it is a SessionExpiredException.
May 12 21:51:16 server10 3ed25e05b45a: org.apache.zookeeper.ClientCnxn$SessionTimeoutException: Client session timed out, have not heard from server in 10302ms for session id 0x1028ed903dd038f
May 12 21:51:16 server10 3ed25e05b45a: #011at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1243) [org.apache.pulsar-pulsar-zookeeper-2.8.0-SNAPSHOT.jar:2.8.0-SNAPSHOT]
May 12 21:51:16 server10 3ed25e05b45a: 2021-05-13T03:51:16,634 [main-SendThread(server10.example.com:2181)] WARN org.apache.zookeeper.ClientCnxn - Session 0x1028ed903dd0390 for sever server10.example.com/10.20.69.29:2181, Closing socket connection. Attempting reconnect except it is a SessionExpiredException.
May 12 21:51:16 server10 3ed25e05b45a: org.apache.zookeeper.ClientCnxn$SessionTimeoutException: Client session timed out, have not heard from server in 12554ms for session id 0x1028ed903dd0390
May 12 21:51:16 server10 3ed25e05b45a: #011at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1243) [org.apache.pulsar-pulsar-zookeeper-2.8.0-SNAPSHOT.jar:2.8.0-SNAPSHOT]
May 12 21:51:16 server10 cb3b837bde84: 2021-05-13T03:51:16,635 [NIOWorkerThread-72] WARN org.apache.zookeeper.server.NIOServerCnxn - Unable to read additional data from client sessionid 0x1028ed903dd038f, likely client has closed socket
May 12 21:51:16 server10 3ed25e05b45a: 2021-05-13T03:51:16,637 [pulsar-io-28-20] INFO org.apache.pulsar.broker.service.ServerCnx - Closed connection from /10.20.17.40:44725
May 12 21:51:16 server10 3ed25e05b45a: 2021-05-13T03:51:16,637 [pulsar-io-28-21] INFO org.apache.pulsar.broker.service.ServerCnx - Closed connection from /10.20.17.41:58126
May 12 21:51:16 server10 3ed25e05b45a: 2021-05-13T03:51:16,639 [pulsar-io-28-19] INFO org.apache.pulsar.broker.service.ServerCnx - Closed connection from /10.20.17.41:45541
May 12 21:51:16 server10 3ed25e05b45a: 2021-05-13T03:51:16,780 [pulsar-io-28-15] DEBUG org.apache.pulsar.common.protocol.PulsarDecoder - [/10.20.69.28:36462] Received cmd PING
May 12 21:51:16 server10 3ed25e05b45a: 2021-05-13T03:51:16,780 [pulsar-io-28-15] DEBUG org.apache.pulsar.common.protocol.PulsarHandler - [[id: 0x1f7c0cfe, L:/10.20.69.29:6650 - R:/10.20.69.28:36462]] Replying back to ping message
May 12 21:51:16 server10 3ed25e05b45a: 2021-05-13T03:51:16,780 [pulsar-io-28-15] DEBUG org.apache.pulsar.common.protocol.PulsarDecoder - [/10.20.69.28:36462] Received cmd PONG
May 12 21:51:17 server10 3ed25e05b45a: 2021-05-13T03:51:17,036 [pulsar-io-28-19] DEBUG org.apache.pulsar.common.protocol.PulsarHandler - [[id: 0xb055a726, L:/10.20.69.29:6651 - R:/10.20.69.28:36414]] Sending ping message
May 12 21:51:17 server10 3ed25e05b45a: 2021-05-13T03:51:17,551 [main-SendThread(server09.example.com:2181)] WARN org.apache.zookeeper.ClientCnxn - Unable to reconnect to ZooKeeper service, session 0x1028ed903dd0390 has expired
May 12 21:51:17 server10 3ed25e05b45a: 2021-05-13T03:51:17,551 [main-EventThread] ERROR org.apache.bookkeeper.zookeeper.ZooKeeperWatcherBase - ZooKeeper client connection to the ZooKeeper server has expired!
May 12 21:51:17 server10 3ed25e05b45a: 2021-05-13T03:51:17,551 [main-SendThread(server09.example.com:2181)] WARN org.apache.zookeeper.ClientCnxn - Session 0x1028ed903dd0390 for sever server09.example.com/10.20.69.28:2181, Closing socket connection. Attempting reconnect except it is a SessionExpiredException.
May 12 21:51:17 server10 3ed25e05b45a: org.apache.zookeeper.ClientCnxn$SessionExpiredException: Unable to reconnect to ZooKeeper service, session 0x1028ed903dd0390 has expired
May 12 21:51:17 server10 3ed25e05b45a: #011at org.apache.zookeeper.ClientCnxn$SendThread.onConnected(ClientCnxn.java:1419) ~[org.apache.pulsar-pulsar-zookeeper-2.8.0-SNAPSHOT.jar:2.8.0-SNAPSHOT]
May 12 21:51:17 server10 3ed25e05b45a: #011at org.apache.zookeeper.ClientCnxnSocket.readConnectResult(ClientCnxnSocket.java:154) ~[org.apache.pulsar-pulsar-zookeeper-2.8.0-SNAPSHOT.jar:2.8.0-SNAPSHOT]
May 12 21:51:17 server10 3ed25e05b45a: #011at org.apache.zookeeper.ClientCnxnSocketNIO.doIO(ClientCnxnSocketNIO.java:86) ~[org.apache.pulsar-pulsar-zookeeper-2.8.0-SNAPSHOT.jar:2.8.0-SNAPSHOT]
May 12 21:51:17 server10 3ed25e05b45a: #011at org.apache.zookeeper.ClientCnxnSocketNIO.doTransport(ClientCnxnSocketNIO.java:350) ~[org.apache.pulsar-pulsar-zookeeper-2.8.0-SNAPSHOT.jar:2.8.0-SNAPSHOT]
May 12 21:51:17 server10 3ed25e05b45a: #011at org.apache.zookeeper.ClientCnxn$SendThread.run(ClientCnxn.java:1275) [org.apache.pulsar-pulsar-zookeeper-2.8.0-SNAPSHOT.jar:2.8.0-SNAPSHOT]
```


[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-873367594

I mapped out more of the ack flow, so I will add it to what I documented [here](https://github.com/apache/pulsar/issues/6054#issuecomment-839495126) and make it more readable.

The bracket notation below denotes an instance of the class (to distinguish it from a static method call).

When `ProducerImpl` sends the messages, it builds `newSend` command instances,

which get picked up by `ServerCnx.handleSend(..)`,

which goes to `Producer.publishMessage(..)`,

then `PersistentTopic.publishMessage(..)`

which calls `asyncAddEntry(headersAndPayload, publishContext)`,

which calls `[PersistentTopic].ledger.asyncAddEntry(headersAndPayload, (int) publishContext.getNumberOfMessages(), this, publishContext)`

which creates the `OpAddEntry` and calls `internalAsyncAddEntry(addOperation)` on a different thread,

which adds `OpAddEntry` to `[ManagedLedgerImpl].pendingAddEntries`

From somewhere (it's not clear to me exactly where yet) we call `OpAddEntry.safeRun()`,

which polls `pendingAddEntries`,

gets the callback on `OpAddEntry` (which is the `PersistentTopic` instance)

and calls `[PersistentTopic].addComplete(lastEntry, data.asReadOnly(), ctx)`,

which calls `publishContext.completed(..)` on `Producer.MessagePublishContext`,

which calls `Producer.ServerCnx.execute(this)` on the `MessagePublishContext`,

which calls `MessagePublishContext.run()`,

which triggers `Producer.ServerCnx.getCommandSender().sendSendReceiptResponse(..)` [SEND_RECEIPT],

which writes a `newSendReceiptCommand` to the channel.

From there, the client gets the `SEND_RECEIPT` command from `PulsarDecoder`,

which calls `[ClientCnx].handleSendReceipt(..)`,

which calls `[ProducerImpl].ackReceived(..)`,

which calls `releaseSemaphoreForSendOp(..)` to release the semaphore.

The semaphore doesn't block until `maxPendingMessages` is reached, which is 1000 by default.
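Why missing receipts eventually stall a producer can be modeled with a plain `java.util.concurrent.Semaphore`. This is a hypothetical model, not `ProducerImpl`: `PendingSendLimiter`, `trySend`, `onReceipt` and `available` are invented names, and it assumes one permit per pending message with `maxPendingMessages = 1000` as described above. Each send takes a permit and each SEND_RECEIPT returns one, so when receipts stop arriving, sends keep succeeding only until the 1000 permits are used up.

```java
import java.util.concurrent.Semaphore;

// Hypothetical model of a client-side pending-message limit (not the real ProducerImpl).
class PendingSendLimiter {
    private final Semaphore permits;

    PendingSendLimiter(int maxPendingMessages) {
        this.permits = new Semaphore(maxPendingMessages);
    }

    // A send reserves one permit; returns false once maxPendingMessages sends are unacknowledged.
    boolean trySend() {
        return permits.tryAcquire();
    }

    // A SEND_RECEIPT from the broker releases the permit held by that message.
    void onReceipt() {
        permits.release();
    }

    int available() {
        return permits.availablePermits();
    }

    public static void main(String[] args) {
        PendingSendLimiter limiter = new PendingSendLimiter(1000);

        // Healthy: every send is followed by its receipt, so permits never run out.
        for (int i = 0; i < 5000; i++) {
            if (limiter.trySend()) {
                limiter.onReceipt();
            }
        }
        System.out.println(limiter.available()); // 1000

        // Receipts stop: the first 1000 sends succeed, every send after that is refused.
        int accepted = 0;
        for (int i = 0; i < 5000; i++) {
            if (limiter.trySend()) accepted++;
        }
        System.out.println(accepted); // 1000
    }
}
```

The model uses `tryAcquire()` so the stall shows up as refused sends; a blocking `acquire()` would instead park the sending thread at the same point.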

After a rollover, `pendingAddEntries` is also polled. (Could this access race with the other path that's polling `pendingAddEntries`?) Here's how this happens:

Something triggers `[ManagedLedgerImpl].createComplete(..)`,

which calls `[ManagedLedgerImpl].updateLedgersListAfterRollover(MetaStoreCallback<Void>)`,

which calls `[MetaStoreImpl].asyncUpdateLedgerIds(..)`,

which calls `callback.operationComplete(..)` on the `MetaStoreCallback<Void>`,

which calls `[ManagedLedgerImpl].updateLedgersIdsComplete(..)`,

which polls for `pendingAddEntries`

which calls `op.initiate()` on each `OpAddEntry` in `pendingAddEntries`,

which calls `ledger.asyncAddEntry(..)`,

which calls `[LedgerHandle].asyncAddEntry(..)`,

which calls `[LedgerHandle].doAsyncAddEntry(op)`,

which adds the `op` to `pendingAddOps` on the `LedgerHandle` instance,

which calls `[PendingAddOp].cb.addCompleteWithLatency(..)`

which calls the callback on `[PendingAddOp]`, which is `[OpAddEntry]`

which calls `addCompleteWithLatency` on the `AddCallback` interface,

which calls `[OpAddEntry].addComplete(..)`

which, if it succeeded:

changes its state and recycles it if completed or if there was a failure, it triggers `[OpAddEntry].safeRun()`

which removes itself from `[ManagedLedgerImpl].pendingAddEntries`

and triggers `cb.addComplete(..)` on `[PersistentTopic]`,

which triggers `publishContext.completed(..)` on `[Producer.MessagePublishContext]`

which triggers `producer.publishOperationCompleted()`

which decrements `pendingPublishAcks`
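On the race question raised above: assuming `pendingAddEntries` is a `java.util.concurrent.ConcurrentLinkedQueue` (worth confirming in the version you run), the queue itself will not hand the same element to two pollers or lose one. The hypothetical sketch below (`TwoPollers` and `drainConcurrently` are invented names; the thread names are just labels for the two paths) drains one queue from two threads and checks that each element is taken exactly once. It does not address the harder question of whether the path that polls a given op is the one that expected to complete it; that depends on ordering, not on the queue's thread safety.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical demo: two code paths polling one shared queue.
class TwoPollers {

    // Drains the queue from two threads at once and returns every element either thread received.
    static List<Integer> drainConcurrently(ConcurrentLinkedQueue<Integer> queue) {
        List<Integer> taken = Collections.synchronizedList(new ArrayList<>());
        Runnable poller = () -> {
            Integer item;
            // poll() removes the head atomically, so each element is returned to exactly one caller.
            while ((item = queue.poll()) != null) {
                taken.add(item);
            }
        };
        Thread a = new Thread(poller, "add-complete-path");
        Thread b = new Thread(poller, "rollover-path");
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while draining", e);
        }
        return taken;
    }

    public static void main(String[] args) {
        ConcurrentLinkedQueue<Integer> pending = new ConcurrentLinkedQueue<>();
        for (int i = 0; i < 100_000; i++) {
            pending.add(i);
        }
        List<Integer> taken = drainConcurrently(pending);
        // Same count and no duplicates: nothing lost, nothing handed out twice.
        System.out.println(taken.size() + " " + new HashSet<>(taken).size()); // 100000 100000
    }
}
```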


[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-658404472

@sijie @codelipenghui We have confirmed that this is actually still an issue in Pulsar 2.5.2.


[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-832454134

It turns out that the client producer isn't getting acks from the broker when the subscription has frozen. The acks just mysteriously stop.

I walked through the code flow all the way from when the producer starts the process of sending a message to when the ack is sent back to the client.

Simplified, that flow looks like this:

Client builds `newSend` command and drops into executor -> `PulsarDecoder.handleSend(..)` -> `ServerCnx.handleSend(..)` -> `Producer.publishMessage(..)` -> `PersistentTopic.publishMessage(..)`, which writes to the ledger and triggers a callback on the `Producer.MessagePublishContext` instance, which triggers `MessagePublishContext.run()` -> `Producer.ServerCnx.getCommandSender().sendSendReceiptResponse(..)`, which writes a new `CommandSendReceipt` to the Netty channel.

Then, that gets picked up by `PulsarDecoder.handleSendReceipt(..)` -> `ClientCnx.handleSendReceipt(..)`, which writes the log message:

> Got receipt for producer . . .

But, that log line is never reached when the subscription has frozen.

So, the question is: Where in that flow did it stop?

My plan is to add a bunch of debug statements to each method in that flow in a custom build to try to pinpoint where the flow is stopping. It very much seems to be another concurrency issue taking place.
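The debug-statement plan could be organized around a small helper that records, for each message, the furthest stage of the flow above that it reached, so the stage where messages pile up stands out. `StageTracer` and its methods are hypothetical, not Pulsar APIs. The stage names come from the flow described above, and keying by sequence id alone is a simplifying assumption, since sequence ids are only unique per producer.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical debugging aid, not part of Pulsar: remembers the furthest
// stage of the send/receipt flow that each message reached.
final class StageTracer {
    // Stage names taken from the flow described above, in order.
    static final List<String> STAGES = Arrays.asList(
            "ServerCnx.handleSend",
            "Producer.publishMessage",
            "PersistentTopic.publishMessage",
            "MessagePublishContext.run",
            "sendSendReceiptResponse",
            "ClientCnx.handleSendReceipt");

    // Keyed by sequence id only, for brevity; a real tracer would also key by producer.
    private final Map<Long, Integer> furthest = new ConcurrentHashMap<>();

    /** Call from each debug statement; keeps the furthest stage seen per message. */
    void reached(long sequenceId, String stage) {
        int index = STAGES.indexOf(stage);
        if (index < 0) {
            throw new IllegalArgumentException("unknown stage: " + stage);
        }
        furthest.merge(sequenceId, index, Math::max);
    }

    /** Furthest stage reached by a message, or null if it was never seen. */
    String lastStageOf(long sequenceId) {
        Integer index = furthest.get(sequenceId);
        return index == null ? null : STAGES.get(index);
    }

    /** How many messages stopped at each stage short of the final one. */
    Map<String, Integer> stuckCounts() {
        Map<String, Integer> counts = new TreeMap<>();
        int finalStage = STAGES.size() - 1;
        for (int index : furthest.values()) {
            if (index < finalStage) {
                counts.merge(STAGES.get(index), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        StageTracer tracer = new StageTracer();
        tracer.reached(1L, "ServerCnx.handleSend");
        tracer.reached(1L, "ClientCnx.handleSendReceipt");
        tracer.reached(2L, "ServerCnx.handleSend");
        tracer.reached(2L, "PersistentTopic.publishMessage");
        System.out.println(tracer.stuckCounts());
    }
}
```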


[GitHub] [pulsar] devinbost edited a comment on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost edited a comment on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-875106224


[GitHub] [pulsar] devinbost edited a comment on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost edited a comment on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-800868920

I created a test cluster (on fast hardware) specifically for reproducing this issue. In our very simple function flow, using simple Java functions without external dependencies, on Pulsar **2.6.3**, as soon as we started flowing data (around 4k msg/sec at 140KB/msg average), the bug appeared within seconds (as expected), blocking the flow and causing a backlog to accumulate.

I looked up the broker the frozen topic was running on and got heap dumps and thread dumps of the broker and functions running on that broker.

There was nothing abnormal in the thread dumps that I could find. However, the topic stats and internal stats seem to have some clues.

I've attached the topic stats and internal topic stats of the topic upstream from the frozen topic, the frozen topic, and the topic immediately downstream from the frozen topic.

The flow looks like this:

-> `first-function` -> `first-topic` -> `second-function` -> `second-topic` -> `third-function` -> `third-topic` -> `fourth-function` ->

The `second-topic` is the one that froze and started accumulating backlog.

- The `second-topic` reports -45 available permits for its consumer, `third-function`.
- All three topics report 0 `pendingReadOps`.
- `first-topic` and `third-topic` have `waitingReadOp = true`, indicating the subscriptions are waiting for messages.
- `second-topic` has `waitingReadOp = false`, indicating its subscription hasn't caught up or isn't waiting for messages.
- `second-topic` reports `waitingCursorsCount = 0`, so it has no cursors waiting for messages.
- `third-topic` has `pendingAddEntriesCount = 81`, indicating it's waiting for write requests to complete.
- `first-topic` and `second-topic` have `pendingAddEntriesCount = 0`.
- `third-topic` is in the state `ClosingLedger`.
- `first-topic` and `second-topic` are in the state `LedgerOpened`.
- `second-topic`'s cursor has `markDeletePosition = 17525:0` and `readPosition = 17594:9`.
- `third-topic`'s cursor has `markDeletePosition = 17551:9` and `readPosition = 17551:10`.
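A negative permit count is easier to interpret with a model of how it can drop below zero. One plausible mechanism, assumed here rather than confirmed against the dispatcher source, is that permits are counted in messages while the broker dispatches whole entries, and a single entry can be a batch of many messages. `PermitSketch` is a hypothetical class, not Pulsar's dispatcher:

```java
// Hypothetical model of consumer flow-control permits, not Pulsar's dispatcher.
// Assumption: permits are counted in messages, while dispatch happens per entry,
// and one entry may be a batch holding many messages.
final class PermitSketch {
    private int availablePermits;

    /** The consumer grants more permits (a FLOW request). */
    void flow(int permits) {
        availablePermits += permits;
    }

    /**
     * Dispatches whole entries while any permits remain. Each entry's full
     * batch size is charged at once, so the last entry sent can overshoot
     * and leave the counter negative. Returns the number of entries sent.
     */
    int dispatch(int[] messagesPerEntry) {
        int entriesSent = 0;
        for (int batchSize : messagesPerEntry) {
            if (availablePermits <= 0) {
                break; // nothing more is sent until flow() lifts the count above zero
            }
            availablePermits -= batchSize;
            entriesSent++;
        }
        return entriesSent;
    }

    int availablePermits() {
        return availablePermits;
    }

    public static void main(String[] args) {
        PermitSketch consumer = new PermitSketch();
        consumer.flow(100);
        consumer.dispatch(new int[] {60, 85});
        System.out.println("permits: " + consumer.availablePermits());
    }
}
```

In this model a negative count is not an error on its own; it only means nothing further is dispatched until the consumer grants enough permits to bring the count back above zero.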

So, the `third-topic`'s cursor's `readPosition` is adjacent to its markDeletePosition.

However, `second-topic`'s cursor's `readPosition` is farther ahead than `third-topic`'s `readPosition`.

Is it unusual for a downstream topic's cursor to have a `readPosition` farther ahead (larger number) than the `readPosition` of the topic immediately upstream from it, when the downstream topic's only source of messages is that upstream topic and no more than a few hundred thousand messages have been sent through the pipe?
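The positions above are `ledgerId:entryId` pairs, and comparing them means comparing the ledger id first and the entry id second. `PositionSketch` is a hypothetical helper for reasoning about the stats output, not Pulsar's own position class:

```java
// Hypothetical helper for reasoning about "ledgerId:entryId" positions from
// internal stats; it is not Pulsar's position implementation.
final class PositionSketch implements Comparable<PositionSketch> {
    final long ledgerId;
    final long entryId;

    PositionSketch(long ledgerId, long entryId) {
        this.ledgerId = ledgerId;
        this.entryId = entryId;
    }

    /** Parses strings such as "17594:9". */
    static PositionSketch parse(String text) {
        String[] parts = text.trim().split(":");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected ledgerId:entryId, got " + text);
        }
        return new PositionSketch(Long.parseLong(parts[0]), Long.parseLong(parts[1]));
    }

    /** Orders by ledger id first, then by entry id within the same ledger. */
    @Override
    public int compareTo(PositionSketch other) {
        int byLedger = Long.compare(ledgerId, other.ledgerId);
        return byLedger != 0 ? byLedger : Long.compare(entryId, other.entryId);
    }

    /** True when this position is the entry right after {@code other} in the same ledger. */
    boolean immediatelyFollows(PositionSketch other) {
        return ledgerId == other.ledgerId && entryId == other.entryId + 1;
    }

    @Override
    public String toString() {
        return ledgerId + ":" + entryId;
    }

    public static void main(String[] args) {
        PositionSketch secondRead = parse("17594:9");
        PositionSketch thirdRead = parse("17551:10");
        PositionSketch thirdMarkDelete = parse("17551:9");
        System.out.println(secondRead + " ahead of " + thirdRead + ": " + (secondRead.compareTo(thirdRead) > 0));
        System.out.println(thirdRead + " follows " + thirdMarkDelete + ": " + thirdRead.immediatelyFollows(thirdMarkDelete));
    }
}
```

One caveat when comparing positions across topics: to our understanding, ledger ids are allocated by BookKeeper across the whole cluster rather than per topic, so a larger ledger id on one topic mainly indicates that its current ledger was created later, not that the topic has carried more messages.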

GitHub won't let me attach .json files, so I had to make them .txt files. In the attached zip, they have the .json extension for convenience when viewing.

[first-topic-internal-stats.json.txt](https://github.com/apache/pulsar/files/6154489/first-topic-internal-stats.json.txt)

[first-topic-stats.json.txt](https://github.com/apache/pulsar/files/6154490/first-topic-stats.json.txt)

[second-topic-internal-stats.json.txt](https://github.com/apache/pulsar/files/6154491/second-topic-internal-stats.json.txt)

[second-topic-stats.json.txt](https://github.com/apache/pulsar/files/6154492/second-topic-stats.json.txt)

[third-topic-internal-stats.json.txt](https://github.com/apache/pulsar/files/6154493/third-topic-internal-stats.json.txt)

[third-topic-stats.json.txt](https://github.com/apache/pulsar/files/6154494/third-topic-stats.json.txt)

[stats.zip](https://github.com/apache/pulsar/files/6154471/stats.zip)

I've also attached the broker thread dump: [thread_dump_3-16.txt](https://github.com/apache/pulsar/files/6154506/thread_dump_3-16.txt)

Regarding the heap dump, I can't attach that until I'm able to reproduce this bug with synthetic data, but in the meantime, if anyone wants me to look up specific things in the heap dump, I'll be happy to do that.


[GitHub] [pulsar] lhotari commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

lhotari commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-1012085314

> Which version of apache pulsar has this fix ?

@skyrocknroll @marcioapm All required fixes are included in Apache Pulsar 2.7.4 and 2.8.2 versions.


[GitHub] [pulsar] sijie commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

sijie commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-658537755

@devinbost did you happen to have a heap dump?



[GitHub] [pulsar] hpvd commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

hpvd commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-765712086

Another chance of having this solved in the upcoming v2.8, without having to find the specific cause, may come from all the work going on to enable SpotBugs throughout Pulsar's code (including Functions); see https://github.com/apache/pulsar/issues?q=enable+spotbugs+

Sometimes there is luck :-)


[GitHub] [pulsar] sbourkeostk commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

sbourkeostk commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-803295399

> After taking a closer look at #7266, it's not clear if it will resolve the issue because we're seeing this issue with functions running with exclusive subscriptions, not shared subscriptions.

Are you sure? I didn't know it was possible to have a function on an exclusive subscription.


[GitHub] [pulsar] devinbost removed a comment on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost removed a comment on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-843692903

To throw another curveball, I reproduced the freeze, and this time the backlog is _completely_ frozen. It's not moving at all. I took a set of heap dumps and thread dumps spaced out several seconds apart from each other, and I captured all processes on the broker involved.


[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-839401880

This issue needs to be reopened since that PR was only a partial fix and doesn't address all the failure scenarios.


[GitHub] [pulsar] devinbost edited a comment on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost edited a comment on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-839495126

I traced the call chain for where acks should be getting sent, and I filled in some additional steps:

When `ProducerImpl` sends the messages, it builds `newSend` command instances, which get picked up by `ServerCnx.handleSend(..)`, which goes to `Producer.publishMessage(..)`, then `PersistentTopic.publishMessage(..)`

which calls `asyncAddEntry(headersAndPayload, publishContext)`, which calls `[PersistentTopic].ledger.asyncAddEntry(headersAndPayload, (int) publishContext.getNumberOfMessages(), this, publishContext)`

which creates the `OpAddEntry` and calls `internalAsyncAddEntry(addOperation)` on a different thread, which adds `OpAddEntry` to `[ManagedLedgerImpl].pendingAddEntries`

From somewhere (it's not clear to me exactly where yet) we call `OpAddEntry.safeRun()`, which polls `pendingAddEntries`, gets the callback on `OpAddEntry` (which is the `PersistentTopic` instance) and calls `[PersistentTopic].addComplete(lastEntry, data.asReadOnly(), ctx)`, which calls `publishContext.completed(..)` on `Producer.MessagePublishContext`, which calls `Producer.ServerCnx.execute(this)` on the `MessagePublishContext`, which calls `MessagePublishContext.run()`, which triggers `Producer.ServerCnx.getCommandSender().sendSendReceiptResponse(..)` [SEND_RECEIPT], which writes a `newSendReceiptCommand` to the channel.

From there, the client gets the `SEND_RECEIPT` command from `PulsarDecoder`, which calls `[ClientCnx].handleSendReceipt(..)`, which calls `[ProducerImpl].ackReceived(..)`, which calls `releaseSemaphoreForSendOp(..)` to release the semaphore.

The semaphore doesn't block sends until `maxPendingMessages` is reached, which is 1000 by default.
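The back-pressure described here can be sketched with `java.util.concurrent.Semaphore`: take a permit per send, return it when the ack arrives. `PendingSendSketch` is a hypothetical class, not `ProducerImpl`. It uses a non-blocking `tryAcquire()` so the limit is easy to observe, whereas a real producer either blocks or rejects the send at that point, depending on its `blockIfQueueFull` setting.

```java
import java.util.concurrent.Semaphore;

// Hypothetical model of producer-side back-pressure, not ProducerImpl itself:
// a permit is taken per send and returned when the SEND_RECEIPT (ack) arrives.
final class PendingSendSketch {
    private final int maxPendingMessages;
    private final Semaphore semaphore;

    PendingSendSketch(int maxPendingMessages) {
        this.maxPendingMessages = maxPendingMessages;
        this.semaphore = new Semaphore(maxPendingMessages);
    }

    /** Non-blocking send attempt; false means the pending-message limit is reached. */
    boolean trySend() {
        return semaphore.tryAcquire();
    }

    /** Plays the role of releaseSemaphoreForSendOp(..) when an ack arrives. */
    void ackReceived() {
        semaphore.release();
    }

    /** Sends that are still waiting for an ack. */
    int pending() {
        return maxPendingMessages - semaphore.availablePermits();
    }

    public static void main(String[] args) {
        PendingSendSketch producer = new PendingSendSketch(1000); // default per the text above
        int sent = 0;
        while (producer.trySend()) {
            sent++; // no acks arrive, so this stops at the limit
        }
        System.out.println("sent " + sent + ", pending " + producer.pending());
        producer.ackReceived();
        System.out.println("after one ack, can send again: " + producer.trySend());
    }
}
```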

So, I added a lot of logging, and to my great surprise, I'm getting an NPE after adding these debug lines to `OpAddEntry.createOpAddEntry(..)`:

```java
private static OpAddEntry createOpAddEntry(ManagedLedgerImpl ml, ByteBuf data, AddEntryCallback callback, Object ctx) {
    log.debug("Running OpAddEntry.createOpAddEntry(..)");
    OpAddEntry op = RECYCLER.get();
    log.debug("1. In OpAddEntry.createOpAddEntry, OpAddEntry is {}", op != null ? op.toString() : null);
    op.ml = ml;
    op.ledger = null;
    op.data = data.retain();
    op.dataLength = data.readableBytes();
    op.callback = callback;
    op.ctx = ctx;
    op.addOpCount = ManagedLedgerImpl.ADD_OP_COUNT_UPDATER.incrementAndGet(ml);
    op.closeWhenDone = false;
    op.entryId = -1;
    op.startTime = System.nanoTime();
    op.state = State.OPEN;
    ml.mbean.addAddEntrySample(op.dataLength);
    log.debug("2. In OpAddEntry.createOpAddEntry, OpAddEntry is {}", op != null ? op.toString() : null);
    return op;
}
```

Here's the stack trace:

```
2021-05-12T06:14:19,569 [pulsar-io-28-32] DEBUG org.apache.bookkeeper.mledger.impl.OpAddEntry - Running OpAddEntry.createOpAddEntry(..)
2021-05-12T06:14:19,569 [pulsar-io-28-32] WARN org.apache.pulsar.broker.service.ServerCnx - [/10.20.20.160:37696] Got exception java.lang.NullPointerException
	at org.apache.bookkeeper.mledger.impl.OpAddEntry.toString(OpAddEntry.java:354)
	at org.apache.bookkeeper.mledger.impl.OpAddEntry.createOpAddEntry(OpAddEntry.java:100)
	at org.apache.bookkeeper.mledger.impl.OpAddEntry.create(OpAddEntry.java:81)
	at org.apache.bookkeeper.mledger.impl.ManagedLedgerImpl.asyncAddEntry(ManagedLedgerImpl.java:689)
	at org.apache.pulsar.broker.service.persistent.PersistentTopic.asyncAddEntry(PersistentTopic.java:428)
	at org.apache.pulsar.broker.service.persistent.PersistentTopic.publishMessage(PersistentTopic.java:404)
	at org.apache.pulsar.broker.service.Producer.publishMessageToTopic(Producer.java:224)
	at org.apache.pulsar.broker.service.Producer.publishMessage(Producer.java:161)
	at org.apache.pulsar.broker.service.ServerCnx.handleSend(ServerCnx.java:1372)
	at org.apache.pulsar.common.protocol.PulsarDecoder.channelRead(PulsarDecoder.java:207)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
	at io.netty.handler.flow.FlowControlHandler.dequeue(FlowControlHandler.java:200)
	at io.netty.handler.flow.FlowControlHandler.channelRead(FlowControlHandler.java:162)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
	at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:324)
	at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:311)
	at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:432)
	at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
	at io.netty.handler.ssl.SslHandler.unwrap(SslHandler.java:1376)
	at io.netty.handler.ssl.SslHandler.decodeNonJdkCompatible(SslHandler.java:1265)
	at io.netty.handler.ssl.SslHandler.decode(SslHandler.java:1302)
	at io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:508)
	at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:447)
	at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)
	at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)
	at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)
	at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)
	at io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)
	at io.netty.channel.epoll.AbstractEpollChannel$AbstractEpollUnsafe$1.run(AbstractEpollChannel.java:425)
	at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:164)
	at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:472)
	at io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:384)
	at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)
	at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)
	at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)
	at java.lang.Thread.run(Thread.java:748)
```

The only place we could be throwing an NPE is here:

`log.debug("1. In OpAddEntry.createOpAddEntry, OpAddEntry is {}", op != null ? op.toString() : null);`

So, `op` isn't null until the null check passes?
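The top frame of the trace is `OpAddEntry.toString(OpAddEntry.java:354)`, called from `createOpAddEntry(OpAddEntry.java:100)`. That means the exception is raised inside `toString()` itself; the `op != null` guard only protects the call, not the fields that `toString()` reads. The "1." debug line also runs before `op.ml = ml` is assigned. The sketch below reproduces that pattern under one assumption we have not checked: that line 354 dereferences a field such as `ml` that is still null on an instance fresh out of the recycler. `PooledOpSketch` and `LedgerStub` are hypothetical classes.

```java
// Hypothetical stand-in for a pooled op; it is not OpAddEntry. The assumption
// (not checked against OpAddEntry.java:354) is that toString() dereferences a
// field that is still null on an instance fresh out of the recycler.
final class PooledOpSketch {
    static final class LedgerStub {
        String getName() {
            return "my-ledger";
        }
    }

    LedgerStub ml; // still null right after RECYCLER.get() in this model

    @Override
    public String toString() {
        return "PooledOpSketch{ml=" + ml.getName() + "}"; // throws NPE while ml is null
    }

    /** Guards the field itself, not just the object reference. */
    String safeToString() {
        LedgerStub current = ml;
        return "PooledOpSketch{ml=" + (current != null ? current.getName() : "null") + "}";
    }

    public static void main(String[] args) {
        PooledOpSketch op = new PooledOpSketch();
        try {
            // Same shape as the debug line: op is non-null, so toString() runs and throws.
            String text = op != null ? op.toString() : null;
            System.out.println(text);
        } catch (NullPointerException e) {
            System.out.println("NPE from inside toString(), although op != null");
        }
        System.out.println(op.safeToString());
    }
}
```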



[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

Posted by GitBox <gi...@apache.org>.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-844625376

To throw a curveball: I was able to reproduce this issue, with the subscription completely frozen, while using the function from the code shared above.

Interestingly, the consumer has negative permits on all four partitions, but the function isn't doing anything.

So that leads me to believe (again) that pendingAcks have maxed out the semaphore, causing the function to block while it waits for acks from the broker.
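A minimal, simplified model may help show how a consumer's permits can end up negative. It assumes that the dispatcher decides whether to send an entry based on the consumer's remaining permits but charges one permit per message, so an entry that is a batch of several messages can overshoot zero. The class and method names are hypothetical; this is not Pulsar's dispatcher code.

```java
import java.util.List;

// Simplified, hypothetical model of consumer flow-control permits; this is
// NOT Pulsar's dispatcher code. Each stored entry may be a batch of messages.
class PermitModel {
    // Dispatches whole entries while the consumer has permits left and
    // returns the consumer's remaining permits.
    static int dispatch(int permits, List<Integer> messagesPerEntry) {
        for (int batchSize : messagesPerEntry) {
            if (permits <= 0) {
                break; // wait for the consumer to grant more permits
            }
            // The whole batch is charged at once, so this can overshoot zero.
            permits -= batchSize;
        }
        return permits;
    }

    public static void main(String[] args) {
        // 10 permits, entries that are batches of 4 messages: 10 -> 6 -> 2 -> -2
        System.out.println(dispatch(10, List.of(4, 4, 4, 4)));
        // Unbatched entries (1 message each) never push permits below zero.
        System.out.println(dispatch(3, List.of(1, 1, 1, 1, 1)));
    }
}
```

In this model, negative permits by themselves only mean the consumer was sent more messages than it asked for; the consumer still has to process them, which is why a function sitting idle with negative permits points at something blocking it on the client side.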

[GitHub] [pulsar] hpvd commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

hpvd commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-765702818

Since it's hard to reproduce, doesn't happen often, and has persisted across many versions...

Just to rule it out: does this happen on different servers/hardware (i.e., not the same component involved every time the problem appears)? Elsewhere, freezes have also been caused by things like memory bit flips...

[GitHub] [pulsar] lhotari commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

lhotari commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-842595662

> @lhotari I've been creating fresh builds on master (or branches of master with extra logging) every couple of days to test changes on this issue.

>

> I was pulling the thread dumps out of my heap dumps using VisualVM. I can use jstack instead if that will give a better picture.

OK, I see. That method is fine; I just thought it was a thread dump of a process with a debugger attached (which isn't ideal, since debugging can change the execution).

It would be useful to have 3 thread dumps taken a few seconds apart. That will help determine whether any threads are blocked.
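For an external broker process, running `jstack <pid>` three times is the straightforward way to follow this suggestion. If the dumps need to be compared automatically, a sketch using the JDK's `ThreadMXBean` might look like the following. It assumes the code runs inside the JVM under investigation, and the class `StuckThreadSampler` is hypothetical. It only flags candidates: idle pool threads also park in WAITING, so the output still needs human review.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Sketch: take several in-process thread dumps a few seconds apart and report
// threads that are parked or blocked at the same top frame in every sample.
class StuckThreadSampler {
    // Maps thread id -> "name @ top frame" for BLOCKED/WAITING/TIMED_WAITING threads.
    static Map<Long, String> snapshot(ThreadMXBean mx) {
        Map<Long, String> waiting = new HashMap<>();
        for (ThreadInfo info : mx.dumpAllThreads(false, false)) {
            StackTraceElement[] stack = info.getStackTrace();
            Thread.State state = info.getThreadState();
            if (stack.length > 0
                    && (state == Thread.State.BLOCKED
                        || state == Thread.State.WAITING
                        || state == Thread.State.TIMED_WAITING)) {
                waiting.put(info.getThreadId(), info.getThreadName() + " @ " + stack[0]);
            }
        }
        return waiting;
    }

    // Threads whose name and top frame are identical across all samples.
    static Set<String> stuckAcrossSamples(int samples, long intervalMillis)
            throws InterruptedException {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        Map<Long, String> common = snapshot(mx);
        for (int i = 1; i < samples; i++) {
            Thread.sleep(intervalMillis);
            Map<Long, String> next = snapshot(mx);
            // Keep only threads that are still waiting at the same frame.
            common.entrySet().removeIf(e -> !e.getValue().equals(next.get(e.getKey())));
        }
        return new HashSet<>(common.values());
    }

    public static void main(String[] args) throws InterruptedException {
        // Three samples, a few seconds apart.
        for (String t : stuckAcrossSamples(3, 3_000)) {
            System.out.println(t);
        }
    }
}
```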

[GitHub] [pulsar] devinbost commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

devinbost commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-830477787

Update on findings:

We discovered that this issue is multi-faceted.

1. The first issue was that the subscription would get stuck with permits > 0, pendingReadOps = 0, and an existing backlog, yet the cursor's read position was not advancing. That issue was mitigated by https://github.com/apache/pulsar/pull/9789, though the root cause of the stalled cursor is still unknown. It's possible that some code branch that should be calling `PersistentDispatcherMultipleConsumers.readMoreEntries()` isn't doing so. We also discovered that `readMoreEntries()` wasn't synchronized, which could have caused concurrency issues that prevented reads or caused permit-accounting errors. Any such concurrency issues should now be resolved by https://github.com/apache/pulsar/pull/10413. After those changes, I wasn't able to reproduce the frozen subscription except when consuming from a partitioned topic using a Pulsar function with several parallel function instances.

2. The second issue is that when consuming from a partitioned, persistent topic using a Pulsar function (on a shared subscription) with parallelism > 1, messages were not being dispatched properly. There were two parts to that issue:

2.1. The first part prevented messages from being dispatched to function instances that had permits > 0. The dispatcher was failing to dispatch to consumers that were consuming faster than the others (e.g., when one consumer had permits > 0 and the others had permits <= 0), so some consumers sat idle, not processing messages, until the other consumers caught up. That issue was fixed by https://github.com/apache/pulsar/pull/10417

2.2. The second part of the issue is that due to current message batching behavior, too many messages were being dispatched, which resulted in negative permits on the dispatcher. That issue will be fixed by #7266.

3. After applying #10417, #10413, and #9789 in a custom build of Pulsar, the dispatching issues were resolved, but a new problem appeared: when consuming from a partitioned, persistent topic using a Pulsar function (on a shared subscription) with parallelism > 1, function instances with permits <= 0 sit idle even though they have received messages from the broker into the `incomingMessages` queue on `ConsumerBase`.

A thread dump shows that the functions appear to be stuck waiting for a semaphore permit in `ProducerImpl.sendAsync()`:

```
"myTenant/myNamespace/function-filter-0" prio=5 tid=32 WAITING
    at sun.misc.Unsafe.park(Native Method)
    at java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)
    at java.util.concurrent.locks.AbstractQueuedSynchronizer.parkAndCheckInterrupt(AbstractQueuedSynchronizer.java:836)
    at java.util.concurrent.locks.AbstractQueuedSynchronizer.doAcquireSharedInterruptibly(AbstractQueuedSynchronizer.java:997)
        local variable: java.util.concurrent.locks.AbstractQueuedSynchronizer$Node#40
        local variable: java.util.concurrent.locks.AbstractQueuedSynchronizer$Node#41
    at java.util.concurrent.locks.AbstractQueuedSynchronizer.acquireSharedInterruptibly(AbstractQueuedSynchronizer.java:1304)
        local variable: java.util.concurrent.Semaphore$FairSync#1
    at java.util.concurrent.Semaphore.acquire(Semaphore.java:312)
    at org.apache.pulsar.client.impl.ProducerImpl.canEnqueueRequest(ProducerImpl.java:748)
    at org.apache.pulsar.client.impl.ProducerImpl.sendAsync(ProducerImpl.java:391)
        local variable: org.apache.pulsar.client.impl.ProducerImpl#1
        local variable: org.apache.pulsar.client.impl.ProducerImpl$1#1
        local variable: io.netty.buffer.UnpooledHeapByteBuf#17
        local variable: org.apache.pulsar.common.api.proto.MessageMetadata#83
        local variable: org.apache.pulsar.client.impl.MessageImpl#82
    at org.apache.pulsar.client.impl.ProducerImpl.internalSendAsync(ProducerImpl.java:290)
        local variable: java.util.concurrent.CompletableFuture#33
    at org.apache.pulsar.client.impl.TypedMessageBuilderImpl.sendAsync(TypedMessageBuilderImpl.java:103)
    at org.apache.pulsar.functions.sink.PulsarSink$PulsarSinkAtLeastOnceProcessor.sendOutputMessage(PulsarSink.java:274)
        local variable: org.apache.pulsar.functions.sink.PulsarSink$PulsarSinkAtLeastOnceProcessor#1
        local variable: org.apache.pulsar.functions.instance.SinkRecord#2
    at org.apache.pulsar.functions.sink.PulsarSink.write(PulsarSink.java:393)
    at org.apache.pulsar.functions.instance.JavaInstanceRunnable.sendOutputMessage(JavaInstanceRunnable.java:349)
    at org.apache.pulsar.functions.instance.JavaInstanceRunnable.lambda$processResult$0(JavaInstanceRunnable.java:331)
    at org.apache.pulsar.functions.instance.JavaInstanceRunnable$$Lambda$193.accept(<unknown string>)
    at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:774)
        local variable: org.apache.pulsar.functions.instance.JavaExecutionResult#1
    at java.util.concurrent.CompletableFuture.uniWhenCompleteStage(CompletableFuture.java:792)
        local variable: org.apache.pulsar.functions.instance.JavaInstanceRunnable$$Lambda$193#1
        local variable: java.util.concurrent.CompletableFuture#35
    at java.util.concurrent.CompletableFuture.whenComplete(CompletableFuture.java:2153)
    at org.apache.pulsar.functions.instance.JavaInstanceRunnable.processResult(JavaInstanceRunnable.java:322)
    at org.apache.pulsar.functions.instance.JavaInstanceRunnable.run(JavaInstanceRunnable.java:275)
        local variable: org.apache.pulsar.functions.instance.JavaInstanceRunnable#1
        local variable: java.util.concurrent.CompletableFuture#34
    at java.lang.Thread.run(Thread.java:748)
```

After disabling batching on functions in a custom build, the issue disappeared. However, performance is severely degraded when batching is disabled, so it's not a viable workaround.

The current behavior expects the semaphore to block the thread, since `blockIfQueueFull = true` by default in functions. So that implies the producer's pending-message queue is full.
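The blocking in the thread dump can be reproduced in miniature. The sketch below is a simplified model, assuming that each in-flight message holds one permit of a fair semaphore (the role `maxPendingMessages` plays in the Pulsar client) and that the permit is released only when the broker's ack completes the send. The class `BoundedProducer` is hypothetical and is not Pulsar's `ProducerImpl`.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Semaphore;

// Hypothetical, simplified model of a producer whose in-flight messages are
// bounded by a semaphore; NOT Pulsar's ProducerImpl.
class BoundedProducer {
    private final Semaphore pending;

    BoundedProducer(int maxPendingMessages) {
        // Fair semaphore, matching the Semaphore$FairSync seen in the dump.
        this.pending = new Semaphore(maxPendingMessages, true);
    }

    // Blocks the calling thread while maxPendingMessages sends are unacknowledged.
    CompletableFuture<Void> sendAsync(String message) throws InterruptedException {
        pending.acquire();
        CompletableFuture<Void> ack = new CompletableFuture<>();
        // The permit comes back only when the ack (or a failure) completes the future.
        ack.whenComplete((ignored, error) -> pending.release());
        return ack;
    }

    int availablePermits() {
        return pending.availablePermits();
    }

    public static void main(String[] args) throws InterruptedException {
        BoundedProducer producer = new BoundedProducer(2);
        CompletableFuture<Void> first = producer.sendAsync("m1");
        producer.sendAsync("m2");

        // A third send has no permit left, so its thread parks in acquire().
        Thread third = new Thread(() -> {
            try {
                producer.sendAsync("m3");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        third.start();
        third.join(200);
        System.out.println("third send blocked: " + third.isAlive()); // true: no ack yet

        first.complete(null); // simulate the broker acknowledging m1
        third.join();
        System.out.println("third send enqueued after the ack");
    }
}
```

In this model, if acks stop arriving, every later `sendAsync()` call parks in `Semaphore.acquire()`, and since the function runs its processing on the same thread, it would stop pulling from `incomingMessages` as well.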

So, the remaining mysteries are:

Why is the producer queue full? (Why isn't the function receiving acks from the broker?)

Or, if the function is receiving acks from the broker, why isn't the Pulsar client batching and sending?

We also still need to confirm whether the consuming thread is actually being blocked by the producer.

(@codelipenghui or @jerrypeng , do either of you know? )

Many thanks to @rdhabalia for helping to deep dive on this issue.

Also thanks to @lhotari for helping with the concurrency side of things.

[GitHub] [pulsar] jerrypeng commented on issue #6054: Catastrophic frequent random topic freezes, especially on high-traffic topics.

jerrypeng commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-801529366

> Do they batch by default?

Yes. Batching at the Pulsar client level is enabled on the producer a Pulsar function uses to write messages to its "output" topic.
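Client-side batching groups messages before they are sent. The Pulsar producer exposes this through settings such as `batchingMaxMessages` and `batchingMaxPublishDelay`. The sketch below is a hypothetical, simplified accumulator (not the client's batch container) that shows the two flush triggers: a full batch, or a partial batch whose oldest message has waited long enough. In a model like this, messages sitting in an unsent batch have not been acked yet, so they would still count against a pending-message limit.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified batch accumulator; NOT Pulsar's batch container.
// A batch is released when it is full or when its oldest message has waited
// at least maxDelayMillis; until then, its messages remain pending.
class BatchAccumulator {
    private final int maxMessages;
    private final long maxDelayMillis;
    private final List<String> current = new ArrayList<>();
    private long oldestAddedAt;

    BatchAccumulator(int maxMessages, long maxDelayMillis) {
        this.maxMessages = maxMessages;
        this.maxDelayMillis = maxDelayMillis;
    }

    // Adds a message; returns the batch to send if it is now full, else null.
    List<String> add(String message, long nowMillis) {
        if (current.isEmpty()) {
            oldestAddedAt = nowMillis;
        }
        current.add(message);
        return current.size() >= maxMessages ? drain() : null;
    }

    // Called periodically (e.g. by a timer); flushes a partial batch that is due.
    List<String> flushIfDue(long nowMillis) {
        if (!current.isEmpty() && nowMillis - oldestAddedAt >= maxDelayMillis) {
            return drain();
        }
        return null;
    }

    private List<String> drain() {
        List<String> batch = new ArrayList<>(current);
        current.clear();
        return batch;
    }

    public static void main(String[] args) {
        BatchAccumulator acc = new BatchAccumulator(3, 10);
        acc.add("a", 0);
        acc.add("b", 1);
        System.out.println(acc.add("c", 2));    // full batch: [a, b, c]
        acc.add("d", 5);
        System.out.println(acc.flushIfDue(10)); // not due yet: null
        System.out.println(acc.flushIfDue(15)); // delay elapsed: [d]
    }
}
```

The time-based path only helps if something keeps calling the flush; in this model, a partial batch that is never flushed would hold its messages (and their pending slots) indefinitely.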

[GitHub] [pulsar] lhotari commented on issue #6054: Catastrophic frequent random subscription freezes, especially on high-traffic topics.

lhotari commented on issue #6054:

URL: https://github.com/apache/pulsar/issues/6054#issuecomment-842595923

> Are there JVM parameters that I should or shouldn't be including when starting the brokers?

I guess the defaults are fine.
