Posted to issues@iceberg.apache.org by GitBox <gi...@apache.org> on 2020/12/17 07:08:45 UTC

[GitHub] [iceberg] zhangjun0x01 opened a new issue #1951: Flink : Add support for flink 1.12

zhangjun0x01 opened a new issue #1951:

URL: https://github.com/apache/iceberg/issues/1951

Flink 1.12 has now been released, so I think we should add support for it.

https://flink.apache.org/news/2020/12/10/release-1.12.0.html

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] zhangjun0x01 commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

zhangjun0x01 commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-773754797

> The main method caused an error: Detected an UNBOUNDED source with the 'execution.runtime-mode' set to 'BATCH'. This combination is not allowed, please set the 'execution.runtime-mode' to STREAMING or AUTOMATIC

Your Flink source is a streaming (UNBOUNDED) source, but you set the execution mode to BATCH.
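The rule behind this error can be sketched in plain Java. This is a simplified model for illustration only, not Flink's actual implementation: the class `RuntimeModeSketch` and its enums are hypothetical names, and the only behavior assumed is what the error message and the Flink 1.12 execution-mode documentation describe (BATCH requires every source to be bounded, and AUTOMATIC picks batch execution only when all sources are bounded).

```java
import java.util.Arrays;
import java.util.List;

/** Simplified, hypothetical model of the runtime-mode check; not Flink's real code. */
final class RuntimeModeSketch {
    enum Mode { STREAMING, BATCH, AUTOMATIC }
    enum Boundedness { BOUNDED, UNBOUNDED }

    /**
     * Returns true if the job would run in batch mode.
     * Throws when BATCH is requested but at least one source is unbounded.
     */
    static boolean executesInBatchMode(Mode configured, List<Boundedness> sources) {
        boolean allBounded = sources.stream().allMatch(b -> b == Boundedness.BOUNDED);
        if (configured == Mode.BATCH && !allBounded) {
            throw new IllegalStateException(
                "Detected an UNBOUNDED source with the 'execution.runtime-mode' set to 'BATCH'.");
        }
        // STREAMING never runs as batch; AUTOMATIC and BATCH do so only if all sources are bounded.
        return configured != Mode.STREAMING && allBounded;
    }

    public static void main(String[] args) {
        List<Boundedness> streamingRead = Arrays.asList(Boundedness.UNBOUNDED);
        List<Boundedness> snapshotRead = Arrays.asList(Boundedness.BOUNDED);

        System.out.println(executesInBatchMode(Mode.AUTOMATIC, streamingRead)); // false
        System.out.println(executesInBatchMode(Mode.BATCH, snapshotRead));      // true
        try {
            executesInBatchMode(Mode.BATCH, streamingRead);
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

In a real job this suggests two ways out: make the table read bounded, or pass `RuntimeExecutionMode.AUTOMATIC` (or `STREAMING`) to `setRuntimeMode` instead of `BATCH`.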

----------------------------------------------------------------


[GitHub] [iceberg] pan3793 edited a comment on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

pan3793 edited a comment on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-748210178

@stevenzwu I think we have different understandings of `Shim`. What I mean is something like:

https://github.com/apache/spark/blob/master/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveShim.scala , so there is no need to maintain each version in a separate module.

And I think the situation of `flink-kafka-connector` is quite different. Kafka provides a lightweight `kafka-clients` library that communicates with the broker over an RPC protocol, and since 0.10 Kafka has guaranteed that the client is compatible with older broker versions. So after support for Kafka 0.9 and earlier was dropped, there is only one universal Kafka connector, built on the latest `kafka-clients`.

> Since the Flink Iceberg connector lives in the Iceberg project.

The module uses some Flink `@Internal` APIs, which are not guaranteed to stay compatible across minor releases. For example, `RowDataTypeInfo` was renamed to `InternalTypeInfo` between Flink 1.11 and Flink 1.12. So I think the most lightweight approach is to introduce a `FlinkShim` and use reflection to invoke the right method for the specific Flink version.

----------------------------------------------------------------


[GitHub] [iceberg] txdong-sz commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

txdong-sz commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-773271127

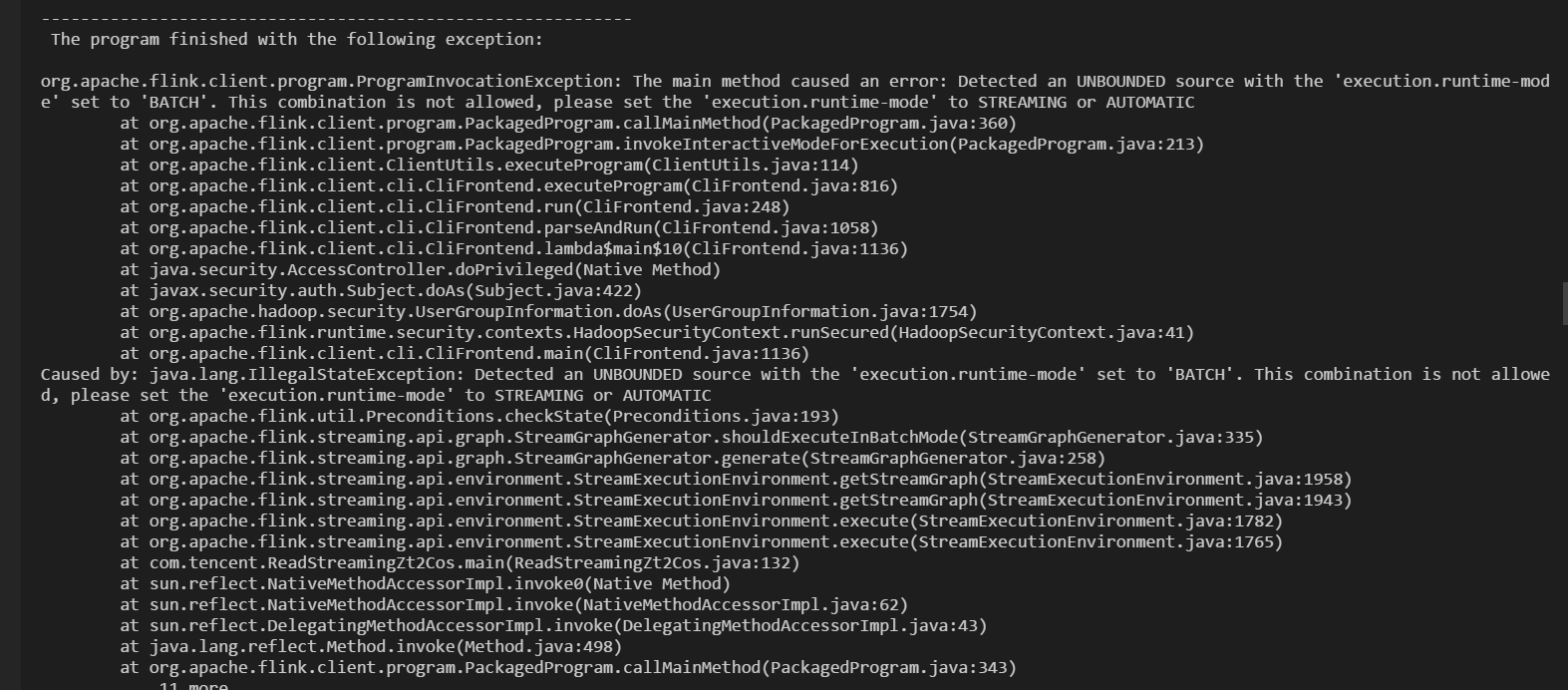

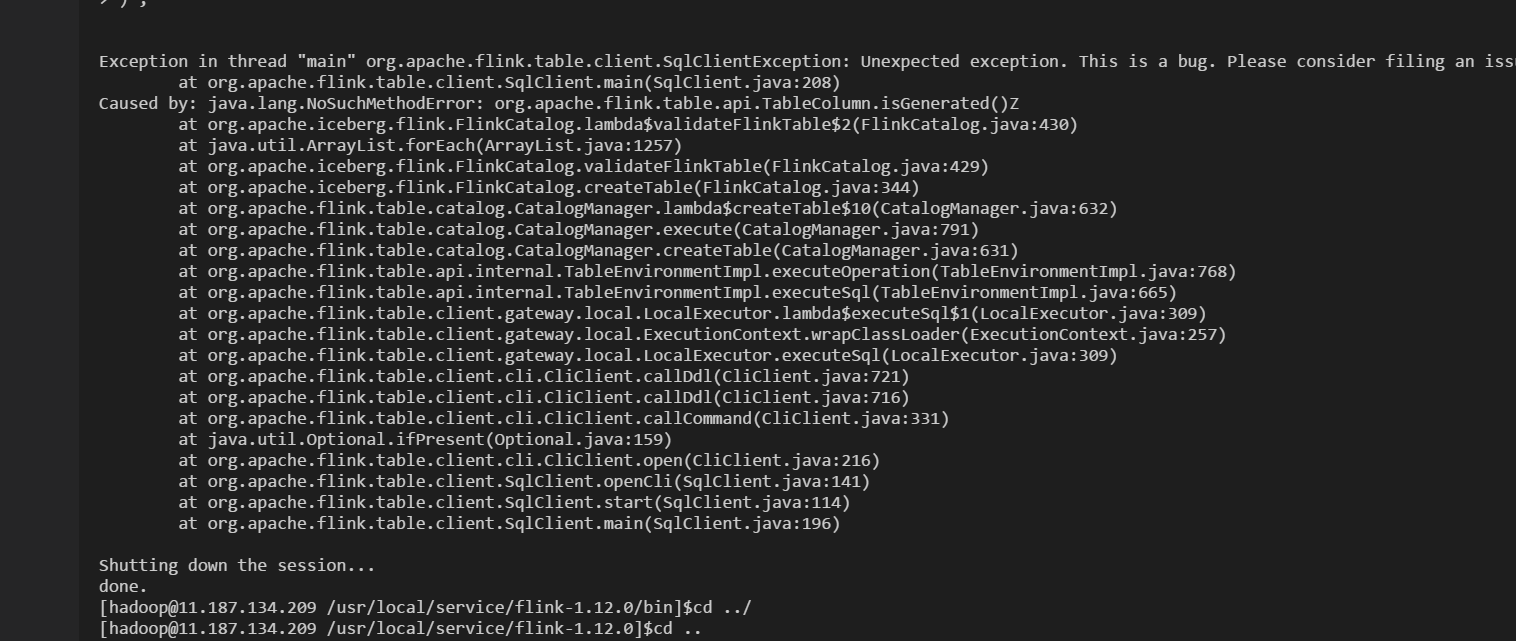

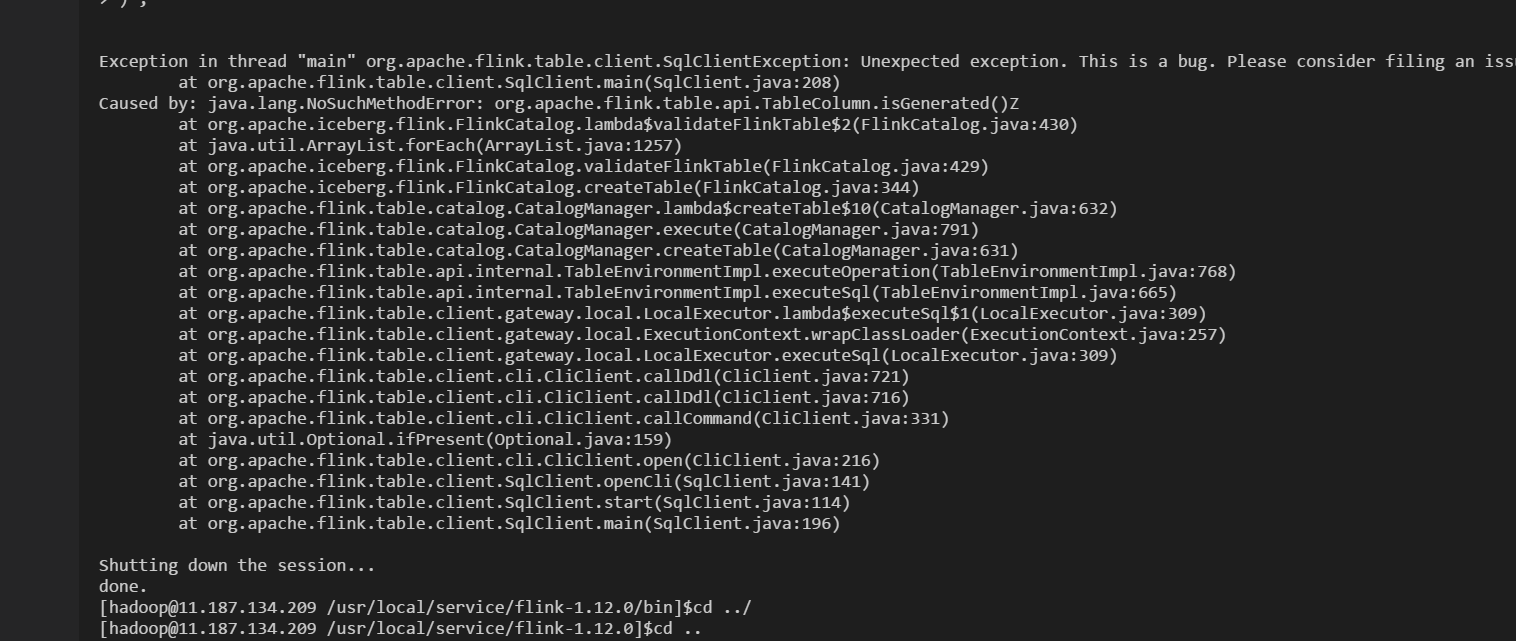

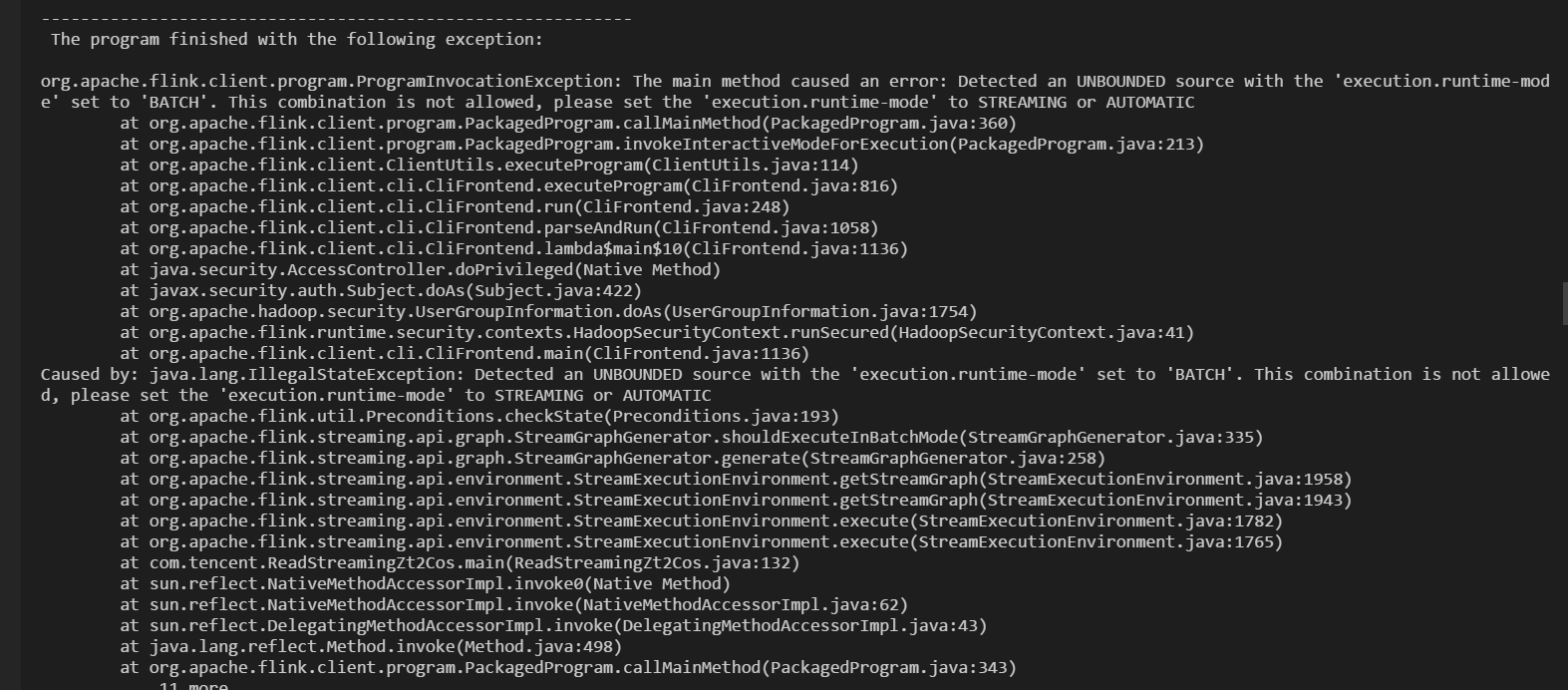

I don't know how to run a daily report with Flink 1.12 on an Iceberg SQL table.

I wrote code like this, but I get an error.

Could you please help me? Thanks.

bsEnv.setRuntimeMode(RuntimeExecutionMode.BATCH);

String querySql = "SELECT ftime,extinfo,country,province,operator,apn,gw,src_ip_head,info_str,product_id,app_version,sdk_id,sdk_version,hardware_os,qua,upload_ip,client_ip,upload_apn,event_code,event_result,package_size,consume_time,event_value,event_time,upload_time,boundle_id,uin,platform,os_version,channel,brand,model from bfzt3 ";

Table table = tEnv.sqlQuery(querySql);

DataStream<AttaInfo> sinkStream = tEnv.toAppendStream(table, Types.POJO(AttaInfo.class, map));

sinkStream.map(x->1).returns(Types.INT).keyBy(new NullByteKeySelector<Integer>()).reduce((x,y) -> {

return x+y;

}).print();

sinkStream.addSink(new RichSinkFunction<AttaInfo>() {

@Override

public void invoke(AttaInfo value, Context context) throws Exception {

Map<String, String> map2 = value.getEvent_value();

map2.entrySet().stream().forEach(x->{

System.out.println(x.getKey()+":" + x.getValue());

});

}

});

org.apache.flink.client.program.ProgramInvocationException: The main method caused an error: Detected an UNBOUNDED source with the 'execution.runtime-mode' set to 'BATCH'. This combination is not allowed, please set the 'execution.runtime-mode' to STREAMING or AUTOMATIC

at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:360)

at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:213)

at org.apache.flink.client.ClientUtils.executeProgram(ClientUtils.java:114)

at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:816)

at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:248)

at org.apache.flink.client.cli.CliFrontend.parseAndRun(CliFrontend.java:1058)

at org.apache.flink.client.cli.CliFrontend.lambda$main$10(CliFrontend.java:1136)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1754)

at org.apache.flink.runtime.security.contexts.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)

at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1136)

Caused by: java.lang.IllegalStateException: Detected an UNBOUNDED source with the 'execution.runtime-mode' set to 'BATCH'. This combination is not allowed, please set the 'execution.runtime-mode' to STREAMING or AUTOMATIC

at org.apache.flink.util.Preconditions.checkState(Preconditions.java:193)

at org.apache.flink.streaming.api.graph.StreamGraphGenerator.shouldExecuteInBatchMode(StreamGraphGenerator.java:335)

at org.apache.flink.streaming.api.graph.StreamGraphGenerator.generate(StreamGraphGenerator.java:258)

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.getStreamGraph(StreamExecutionEnvironment.java:1958)

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.getStreamGraph(StreamExecutionEnvironment.java:1943)

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1782)

at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1765)

at com.tencent.ReadStreamingZt2Cos.main(ReadStreamingZt2Cos.java:132)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:343)

... 11 more

----------------------------------------------------------------


[GitHub] [iceberg] pan3793 commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

pan3793 commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-762714531

@txdong-sz Currently, this patch cannot work with both Flink 1.11 and 1.12. As discussed previously, the community will work on it after Iceberg 0.11 is released. So for now, you need to merge it manually.

----------------------------------------------------------------


[GitHub] [iceberg] zhangjun0x01 closed issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

zhangjun0x01 closed issue #1951:

URL: https://github.com/apache/iceberg/issues/1951

----------------------------------------------------------------


[GitHub] [iceberg] stevenzwu edited a comment on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

stevenzwu edited a comment on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-748250068

Definitely see the benefit of supporting multiple Flink versions via a shim layer. I am just concerned about the added complexity and maintenance overhead. Wondering how the spark2/3 modules handle the minor version upgrade in Spark.

Another question is whether we want to keep supporting all Flink minor versions. Flink's policy is to support the two most recent minor releases; older Flink versions don't get bug-fix backports.

@rdblue @openinx @JingsongLi

----------------------------------------------------------------


[GitHub] [iceberg] pan3793 edited a comment on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

pan3793 edited a comment on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-748158267

Both Iceberg and Flink are changing rapidly. Is it necessary to introduce a `FlinkShim`, similar to `HiveShim`, to support multiple Flink versions?

----------------------------------------------------------------




[GitHub] [iceberg] zhangjun0x01 commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

zhangjun0x01 commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-747887621

> But we probably should discuss when to merge though. maybe after Flink 1.12.1 released?

I think the changes between minor versions have little impact. Such releases are usually optimizations or bug fixes, with no major changes or API modifications.

----------------------------------------------------------------


[GitHub] [iceberg] pan3793 edited a comment on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

pan3793 edited a comment on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-748210178

@stevenzwu

> Or are you suggesting that the Flink connector code can handle all the 1.x Flink versions?

Yes, what I mean by `Shim` is something like:

https://github.com/apache/spark/blob/master/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveShim.scala , so there is no need to maintain each version in a separate module.

And I think the situation of `flink-kafka-connector` is quite different. Kafka provides a lightweight `kafka-clients` library that communicates with the broker over an RPC protocol, and since 0.10 Kafka has guaranteed that the client is compatible with older broker versions. So after support for Kafka 0.9 and earlier was dropped, there is only one universal Kafka connector, built on the latest `kafka-clients`.

> Since the Flink Iceberg connector lives in the Iceberg project.

The module uses some Flink `@Internal` APIs, which are not guaranteed to stay compatible across minor releases. For example, `RowDataTypeInfo` was renamed to `InternalTypeInfo` between Flink 1.11 and Flink 1.12. So I think the most lightweight approach is to introduce a `FlinkShim` and use reflection to invoke the right method for the specific Flink version.
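A minimal sketch of the reflection idea, assuming nothing beyond the JDK: the shim probes the classpath for the first class name that resolves. `FlinkShimSketch` and `firstAvailable` are hypothetical names, and the two fully qualified Flink class names are recalled from the Flink 1.11 and 1.12 sources, so they should be verified before being relied on. A real shim would go on to look up and invoke the version-specific factory method on the resolved class.

```java
import java.util.Optional;

/** Hypothetical shim helper: resolves whichever candidate class exists on the classpath. */
final class FlinkShimSketch {
    // Assumed locations of the renamed class; verify against the Flink release in use.
    static final String FLINK_1_12_TYPE_INFO =
        "org.apache.flink.table.runtime.typeutils.InternalTypeInfo";
    static final String FLINK_1_11_TYPE_INFO =
        "org.apache.flink.table.runtime.typeutils.RowDataTypeInfo";

    /** Returns the first candidate that can be loaded, or empty if none can. */
    static Optional<Class<?>> firstAvailable(String... candidates) {
        for (String name : candidates) {
            try {
                return Optional.of(Class.forName(name));
            } catch (ClassNotFoundException | LinkageError e) {
                // Not present (or not loadable) in this environment; try the next name.
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Optional<Class<?>> typeInfo = firstAvailable(FLINK_1_12_TYPE_INFO, FLINK_1_11_TYPE_INFO);
        System.out.println(
            typeInfo.map(Class::getName).orElse("no Flink row type info class on the classpath"));
    }
}
```

The trade-off raised elsewhere in this thread still applies: each additional internal API touched by the connector needs its own probe and reflective call, which is the maintenance overhead a shim layer brings.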

----------------------------------------------------------------


[GitHub] [iceberg] txdong-sz commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

txdong-sz commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-772315140

@zhangjun0x01 Thanks very much.

I merged it into my code and it runs very smoothly. I also found a bug in Flink 1.12:

https://issues.apache.org/jira/browse/FLINK-21247

https://github.com/apache/flink/pull/14845/files

----------------------------------------------------------------


[GitHub] [iceberg] stevenzwu commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

stevenzwu commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-747862370

As part of working on the FLIP-27 Flink source, I updated the code to Flink 1.12.0. Here is the PR.

https://github.com/apache/iceberg/pull/1956

----------------------------------------------------------------


[GitHub] [iceberg] stevenzwu commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

stevenzwu commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-748250068

Definitely see the benefit of supporting multiple Flink versions via a shim layer, which does add some complexity and maintenance overhead. Wondering how the spark2/3 modules handle the minor version upgrade in Spark.

@rdblue @openinx @JingsongLi

----------------------------------------------------------------





[GitHub] [iceberg] txdong-sz commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

txdong-sz commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-762675949

We use Flink 1.12 now, but Iceberg does not support Flink 1.12 yet.

Flink 1.12 is a major release (stream and batch jobs can be written with the same code), so Iceberg support for Flink 1.12 needs to be merged soon.

----------------------------------------------------------------



[GitHub] [iceberg] openinx commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

openinx commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-748746287

I took a look at https://github.com/apache/iceberg/pull/1956/files. I don't think Spark's `Shim` approach will resolve all of Flink's incompatibility problems, because not all of the incompatibilities occur in a single interface (or a separate service). Currently, the Iceberg Flink module depends on a few `internal` interfaces (such as `RowDataTypeInfo`, which was removed in Flink 1.12.0) for which Apache Flink does not guarantee compatibility. The correct way is to decouple from Flink's internal API interfaces: for the `TableColumn`, `RowDataTypeInfo`, and `TableResult` issues, we may need to change the code design so that we don't depend on those internal interfaces. The ideal outcome is upgrading the Flink version from 1.11.x to 1.12.x (passing all checks) without changing a single line of code.

As the Iceberg 0.11.0 release is coming, I'd rather upgrade the Flink version after the release is finished, because all current development and testing work is based on Flink 1.11.x. I'm afraid that upgrading to 1.12.x will introduce extra instability, which may delay the 0.11.0 release.

----------------------------------------------------------------


[GitHub] [iceberg] zhangjun0x01 commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

zhangjun0x01 commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-762695163

> We use Flink 1.12 now, but Iceberg does not support Flink 1.12 yet.

> Flink 1.12 is a major release (stream and batch jobs can be written with the same code), so Iceberg support for Flink 1.12 needs to be merged soon.

>

You can merge [PR 1956](https://github.com/apache/iceberg/pull/1956) into master.

----------------------------------------------------------------


[GitHub] [iceberg] txdong-sz commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

txdong-sz commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-773271127

I don't know how to run a daily report with Flink 1.12 on an Iceberg SQL table.

I wrote code like this, but I get an error.

Could you please help me? Thanks.

bsEnv.setRuntimeMode(RuntimeExecutionMode.BATCH);

String querySql = "SELECT ftime,extinfo,country,province,operator,apn,gw,src_ip_head,info_str,product_id,app_version,sdk_id,sdk_version,hardware_os,qua,upload_ip,client_ip,upload_apn,event_code,event_result,package_size,consume_time,event_value,event_time,upload_time,boundle_id,uin,platform,os_version,channel,brand,model from bfzt3 ";

Table table = tEnv.sqlQuery(querySql);

DataStream<AttaInfo> sinkStream = tEnv.toAppendStream(table, Types.POJO(AttaInfo.class, map));

sinkStream.map(x->1).returns(Types.INT).keyBy(new NullByteKeySelector<Integer>()).reduce((x,y) -> {

return x+y;

}).print();

sinkStream.addSink(new RichSinkFunction<AttaInfo>() {

@Override

public void invoke(AttaInfo value, Context context) throws Exception {

Map<String, String> map2 = value.getEvent_value();

map2.entrySet().stream().forEach(x->{

System.out.println(x.getKey()+":" + x.getValue());

});

}

});

org.apache.flink.client.program.ProgramInvocationException: The main method caused an error: Detected an UNBOUNDED source with the 'execution.runtime-mode' set to 'BATCH'. This combination is not allowed, please set the 'execution.runtime-mode' to STREAMING or AUTOMATIC
    at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:360)
    at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:213)
    at org.apache.flink.client.ClientUtils.executeProgram(ClientUtils.java:114)
    at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:816)
    at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:248)
    at org.apache.flink.client.cli.CliFrontend.parseAndRun(CliFrontend.java:1058)
    at org.apache.flink.client.cli.CliFrontend.lambda$main$10(CliFrontend.java:1136)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1754)
    at org.apache.flink.runtime.security.contexts.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)
    at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:1136)
Caused by: java.lang.IllegalStateException: Detected an UNBOUNDED source with the 'execution.runtime-mode' set to 'BATCH'. This combination is not allowed, please set the 'execution.runtime-mode' to STREAMING or AUTOMATIC
    at org.apache.flink.util.Preconditions.checkState(Preconditions.java:193)
    at org.apache.flink.streaming.api.graph.StreamGraphGenerator.shouldExecuteInBatchMode(StreamGraphGenerator.java:335)
    at org.apache.flink.streaming.api.graph.StreamGraphGenerator.generate(StreamGraphGenerator.java:258)
    at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.getStreamGraph(StreamExecutionEnvironment.java:1958)
    at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.getStreamGraph(StreamExecutionEnvironment.java:1943)
    at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1782)
    at org.apache.flink.streaming.api.environment.StreamExecutionEnvironment.execute(StreamExecutionEnvironment.java:1765)
    at com.tencent.ReadStreamingZt2Cos.main(ReadStreamingZt2Cos.java:132)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:343)
    ... 11 more
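
For readers who hit the same exception: the trace shows the check failing in StreamGraphGenerator.shouldExecuteInBatchMode, which rejects a job graph containing an unbounded source when the mode is forced to BATCH. The rule can be sketched as a stand-alone model. This is only an illustration under assumptions, not Flink's implementation: the class RuntimeModeSketch, its enums, and the method signature are hypothetical, and only the three mode names and the message text are taken from the trace. The AUTOMATIC branch assumes the documented behaviour that Flink picks batch execution only when every source is bounded.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical, simplified model of the runtime-mode check. Not Flink code.
final class RuntimeModeSketch {
    enum Mode { STREAMING, BATCH, AUTOMATIC }
    enum Boundedness { BOUNDED, UNBOUNDED }

    // Returns true when the job would run with batch execution.
    static boolean shouldExecuteInBatchMode(Mode mode, List<Boundedness> sources) {
        boolean allBounded = sources.stream().allMatch(b -> b == Boundedness.BOUNDED);
        if (mode == Mode.BATCH && !allBounded) {
            // Mirrors the situation reported in the stack trace above.
            throw new IllegalStateException(
                "Detected an UNBOUNDED source with the 'execution.runtime-mode' set to 'BATCH'.");
        }
        if (mode == Mode.AUTOMATIC) {
            // Assumed rule: batch only if every source is bounded.
            return allBounded;
        }
        return mode == Mode.BATCH;
    }

    public static void main(String[] args) {
        List<Boundedness> unbounded = Arrays.asList(Boundedness.UNBOUNDED);
        // AUTOMATIC falls back to streaming instead of failing.
        System.out.println(shouldExecuteInBatchMode(Mode.AUTOMATIC, unbounded));
        try {
            shouldExecuteInBatchMode(Mode.BATCH, unbounded);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Under this model, the job above fails because at least one source in its graph reports itself as unbounded while bsEnv is set to BATCH. As the error message suggests, switching to STREAMING or AUTOMATIC avoids the exception.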

[GitHub] [iceberg] stevenzwu commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

stevenzwu commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-747862652

But we should probably discuss when to merge, though. Maybe after Flink 1.12.1 is released?

[GitHub] [iceberg] stevenzwu commented on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

stevenzwu commented on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-748185363

Pasting my comment from the PR here so we can consolidate the discussion in this issue. I guess both @zhangjun0x01 and @pan3793 were suggesting the shim layer approach. It definitely adds significant complexity.

------------------------

Are you suggesting that we have a module for each Flink minor version release (like 1.11 and 1.12)? I am worried that it can quickly grow out of control, as Flink typically does a minor version release every 4 months. The Flink Kafka connector used to follow this model (kafka08, kafka09, kafka10, ...). It has since moved to the universal connector, which just tracks the latest release of kafka-clients.

Or are you suggesting that the Flink connector code can handle all the 1.x Flink versions? I am not sure how the Flink Hive connector handles the small API changes across all those versions.

As for spark2 and spark3, that is a major version upgrade. For upgrading Spark from 2.3 to 2.4, I assume Iceberg just upgrades the version in the spark2 module. If Flink introduces a major breaking API change in the future and goes up to 2.x, we should probably have a flink2 module in Iceberg.

Since the Flink Iceberg connector lives in the Iceberg project, I was thinking that the latest connector can just pick one Flink minor version as the paved path. That is why I was asking whether we should wait for the Flink 1.12.1 patch release to pick up some bug fixes.
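
To make the trade-off concrete, the shim layer approach discussed above can be sketched roughly as follows. Everything here is hypothetical and exists only for illustration: ShimLayerSketch, FlinkShim, Flink111Shim, Flink112Shim, and the describe() method are not types or APIs in Iceberg or Flink, and describe() merely stands in for whatever API differs between minor versions.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical sketch of a per-minor-version shim layer. Not Iceberg or Flink code.
final class ShimLayerSketch {
    // The version-specific surface that connector code would call through.
    interface FlinkShim {
        String supportedMinorVersion();
        String describe(); // placeholder for an API that changed between versions
    }

    static final class Flink111Shim implements FlinkShim {
        public String supportedMinorVersion() { return "1.11"; }
        public String describe() { return "calls written against the 1.11 API"; }
    }

    static final class Flink112Shim implements FlinkShim {
        public String supportedMinorVersion() { return "1.12"; }
        public String describe() { return "calls written against the 1.12 API"; }
    }

    // One registry entry per supported minor version.
    private static final Map<String, Supplier<FlinkShim>> SHIMS = new LinkedHashMap<>();
    static {
        SHIMS.put("1.11", Flink111Shim::new);
        SHIMS.put("1.12", Flink112Shim::new);
    }

    // Reduces a full version such as "1.12.0" to its minor version "1.12".
    static String minorVersionOf(String fullVersion) {
        String[] parts = fullVersion.split("\\.");
        if (parts.length < 2) {
            throw new IllegalArgumentException("Not a Flink version: " + fullVersion);
        }
        return parts[0] + "." + parts[1];
    }

    // Picks the shim matching the Flink version found at runtime.
    static FlinkShim load(String fullVersion) {
        Supplier<FlinkShim> factory = SHIMS.get(minorVersionOf(fullVersion));
        if (factory == null) {
            throw new UnsupportedOperationException("No shim for Flink " + fullVersion);
        }
        return factory.get();
    }

    public static void main(String[] args) {
        FlinkShim shim = load("1.12.0");
        System.out.println(shim.supportedMinorVersion() + ": " + shim.describe());
    }
}
```

The sketch also shows where the maintenance cost comes from: every new Flink minor release needs another FlinkShim implementation and registry entry, which is the growth the comment above is worried about.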

[GitHub] [iceberg] stevenzwu edited a comment on issue #1951: Flink : Add support for flink 1.12

Posted by GitBox <gi...@apache.org>.

stevenzwu edited a comment on issue #1951:

URL: https://github.com/apache/iceberg/issues/1951#issuecomment-748250068

I definitely see the benefit of supporting multiple Flink versions via a shim layer. I am just concerned about the added complexity and maintenance overhead. I am wondering how the spark2/3 modules handle minor version upgrades in Spark.

@rdblue @openinx @JingsongLi
