Posted to commits@airflow.apache.org by GitBox <gi...@apache.org> on 2021/01/12 02:34:49 UTC

[GitHub] [airflow] totalhack opened a new issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

totalhack opened a new issue #13625:

URL: https://github.com/apache/airflow/issues/13625

**Apache Airflow version**: 2.0.0

**Environment**: apache/airflow:2.0.0 docker image, Docker Desktop for Mac 3.0.4

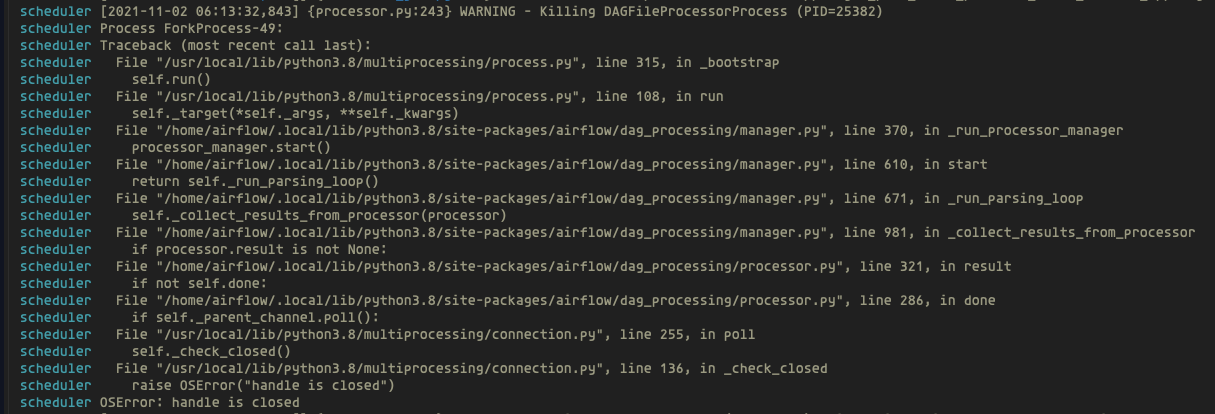

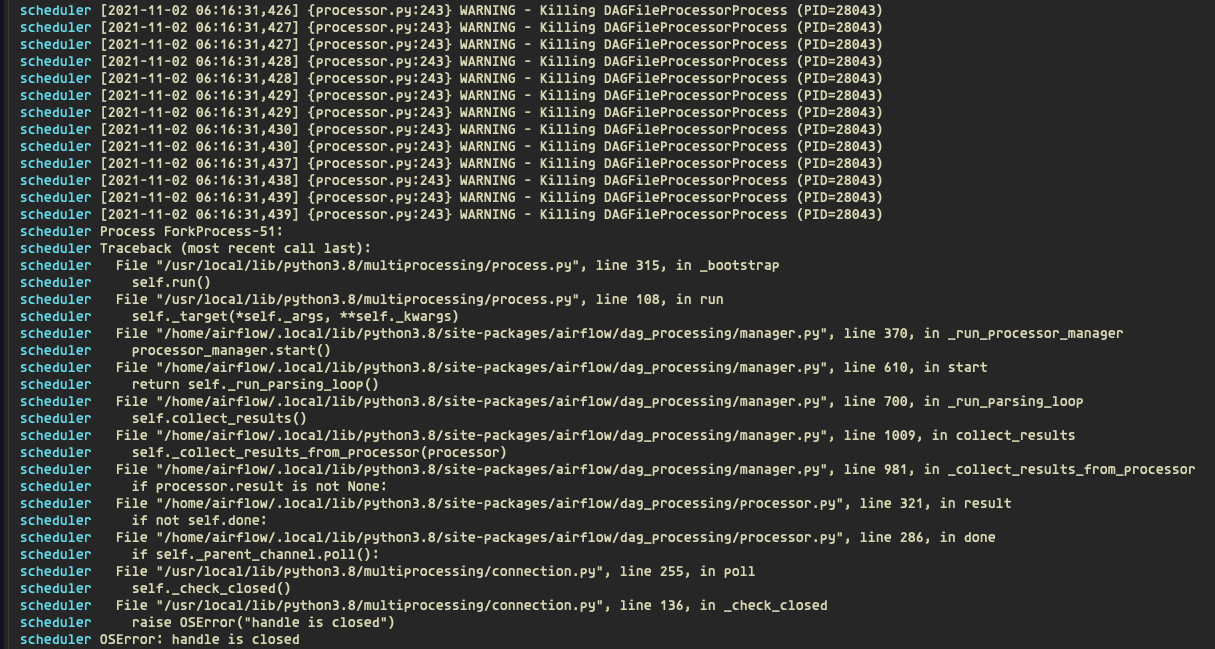

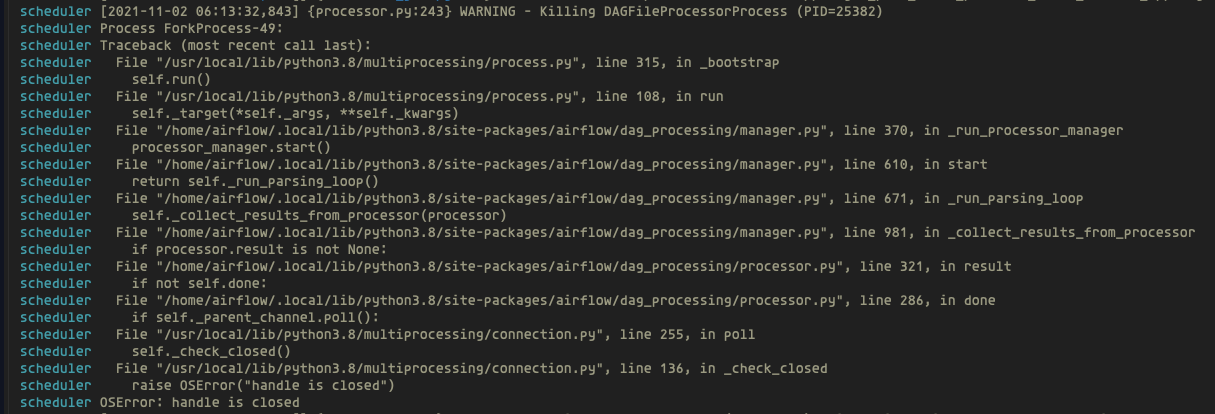

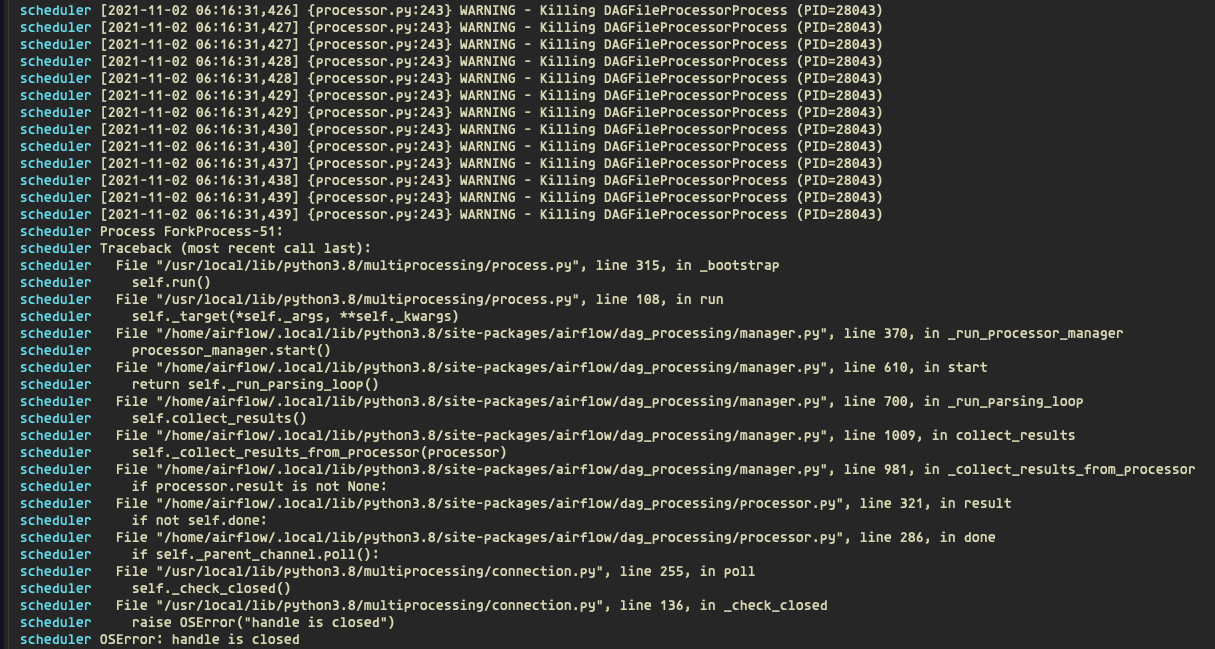

**What happened**: The scheduler runs fine for a bit, then after a few minutes it starts spitting the following out every second (and the container appears to be stuck as it needs to be force killed):

```
scheduler_1 | [2021-01-12 01:45:48,149] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)
scheduler_1 | [2021-01-12 01:45:49,153] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)
scheduler_1 | [2021-01-12 01:45:49,159] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)
scheduler_1 | [2021-01-12 01:45:50,163] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)
scheduler_1 | [2021-01-12 01:45:50,165] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)
scheduler_1 | [2021-01-12 01:45:51,169] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)
scheduler_1 | [2021-01-12 01:45:51,172] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)
```
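The notable thing in the log above is that the same PID is reported as being killed over and over. As a rough way to spot that pattern in a longer log, here is a minimal sketch that counts the warnings per PID. The regular expression is based only on the line format shown above, and `count_kill_warnings` is a hypothetical helper name, not part of Airflow.

```python
import re
from collections import Counter

# Pattern derived from the log lines in this report; other Airflow
# versions may word the warning differently.
KILL_RE = re.compile(r"WARNING - Killing DAGFileProcessorProcess \(PID=(\d+)\)")


def count_kill_warnings(lines):
    """Return a Counter mapping PID -> number of 'Killing' warnings seen."""
    counts = Counter()
    for line in lines:
        match = KILL_RE.search(line)
        if match:
            counts[int(match.group(1))] += 1
    return counts


sample = [
    "scheduler_1 | [2021-01-12 01:45:48,149] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)",
    "scheduler_1 | [2021-01-12 01:45:49,153] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)",
    "scheduler_1 | [2021-01-12 01:45:49,159] {scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess (PID=1112)",
]

# A PID that appears more than once suggests the kill is not taking effect.
repeated = {pid: n for pid, n in count_kill_warnings(sample).items() if n > 1}
print(repeated)
```

In practice the lines would come from the scheduler container's output rather than a hard-coded list.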

Note that there is only a single dag enabled with a single task as I'm just trying to get this off the ground. That dag is scheduled to run daily so it's almost never running aside from when I'm manually testing it.

**What you expected to happen**: The scheduler to stay idle without issues.

**How to reproduce it**: Unclear as it seems to happen almost randomly after a few minutes. Below is the scheduler section of my `airflow.cfg`. The scheduler is using `LocalExecutor`. I have the `scheduler` and `webserver` running in separate containers, which may or may not be related? Let me know what other information might be helpful.

```
[scheduler]
# Task instances listen for external kill signal (when you clear tasks
# from the CLI or the UI), this defines the frequency at which they should
# listen (in seconds).
job_heartbeat_sec = 10

# How often (in seconds) to check and tidy up 'running' TaskInstancess
# that no longer have a matching DagRun
clean_tis_without_dagrun_interval = 15.0

# The scheduler constantly tries to trigger new tasks (look at the
# scheduler section in the docs for more information). This defines
# how often the scheduler should run (in seconds).
scheduler_heartbeat_sec = 10

# The number of times to try to schedule each DAG file
# -1 indicates unlimited number
num_runs = -1

# The number of seconds to wait between consecutive DAG file processing
processor_poll_interval = 10

# after how much time (seconds) a new DAGs should be picked up from the filesystem
min_file_process_interval = 30

# How often (in seconds) to scan the DAGs directory for new files. Default to 5 minutes.
dag_dir_list_interval = 300

# How often should stats be printed to the logs. Setting to 0 will disable printing stats
print_stats_interval = 30

# How often (in seconds) should pool usage stats be sent to statsd (if statsd_on is enabled)
pool_metrics_interval = 5.0

# If the last scheduler heartbeat happened more than scheduler_health_check_threshold
# ago (in seconds), scheduler is considered unhealthy.
# This is used by the health check in the "/health" endpoint
scheduler_health_check_threshold = 30

# How often (in seconds) should the scheduler check for orphaned tasks and SchedulerJobs
orphaned_tasks_check_interval = 300.0

child_process_log_directory = /opt/airflow/logs/scheduler

# Local task jobs periodically heartbeat to the DB. If the job has
# not heartbeat in this many seconds, the scheduler will mark the
# associated task instance as failed and will re-schedule the task.
scheduler_zombie_task_threshold = 300

# Turn off scheduler catchup by setting this to ``False``.
# Default behavior is unchanged and
# Command Line Backfills still work, but the scheduler
# will not do scheduler catchup if this is ``False``,
# however it can be set on a per DAG basis in the
# DAG definition (catchup)
catchup_by_default = False

# This changes the batch size of queries in the scheduling main loop.
# If this is too high, SQL query performance may be impacted by one
# or more of the following:
# - reversion to full table scan
# - complexity of query predicate
# - excessive locking
# Additionally, you may hit the maximum allowable query length for your db.
# Set this to 0 for no limit (not advised)
max_tis_per_query = 512

# Should the scheduler issue ``SELECT ... FOR UPDATE`` in relevant queries.
# If this is set to False then you should not run more than a single
# scheduler at once
use_row_level_locking = True

# Max number of DAGs to create DagRuns for per scheduler loop
#
# Default: 10
# max_dagruns_to_create_per_loop =

# How many DagRuns should a scheduler examine (and lock) when scheduling
# and queuing tasks.
#
# Default: 20
# max_dagruns_per_loop_to_schedule =

# Should the Task supervisor process perform a "mini scheduler" to attempt to schedule more tasks of the
# same DAG. Leaving this on will mean tasks in the same DAG execute quicker, but might starve out other
# dags in some circumstances
#
# Default: True
# schedule_after_task_execution =

# The scheduler can run multiple processes in parallel to parse dags.
# This defines how many processes will run.
parsing_processes = 1

# Turn off scheduler use of cron intervals by setting this to False.
# DAGs submitted manually in the web UI or with trigger_dag will still run.
use_job_schedule = True

# Allow externally triggered DagRuns for Execution Dates in the future
# Only has effect if schedule_interval is set to None in DAG
allow_trigger_in_future = False

max_threads = 1
```
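For reference, the `scheduler_health_check_threshold` comment in the config above describes a simple age check on the last heartbeat. The following is only a sketch of that rule as worded in the comment, not Airflow's actual implementation; `is_scheduler_healthy` and its parameters are hypothetical names used for illustration.

```python
from datetime import datetime, timedelta, timezone


def is_scheduler_healthy(last_heartbeat, now, threshold_sec=30):
    """Apply the rule from the config comment: the scheduler counts as
    unhealthy if its last heartbeat is more than threshold_sec old."""
    return (now - last_heartbeat) <= timedelta(seconds=threshold_sec)


# Fixed timestamps so the example is deterministic.
now = datetime(2021, 1, 12, 1, 45, 48, tzinfo=timezone.utc)
recent = now - timedelta(seconds=10)  # within the 30 s threshold
stale = now - timedelta(seconds=45)   # older than the 30 s threshold

print(is_scheduler_healthy(recent, now))
print(is_scheduler_healthy(stale, now))
```

With `scheduler_heartbeat_sec = 10` and a threshold of 30, a scheduler that hangs would be expected to show up as unhealthy after roughly three missed heartbeats.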

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] imamdigmi commented on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

imamdigmi commented on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-957131016

I have the same problem, using Airflow 2.1.3 with KubernetesExecutor (kube 1.20)

cc: @totalhack @kaxil

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] steveipkis commented on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

steveipkis commented on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-1018780201

I'm also facing the exact same issue: Airflow 2.1.4

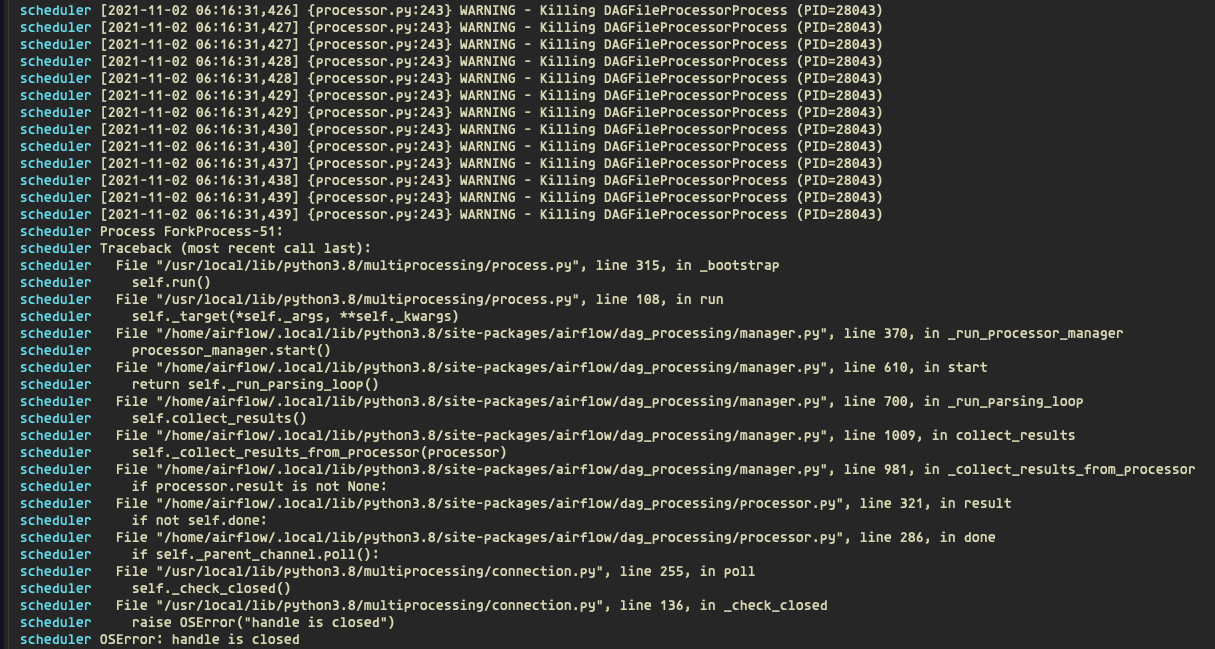

<img width="1138" alt="Screen Shot 2022-01-21 at 12 38 51 PM" src="https://user-images.githubusercontent.com/14340902/150584927-c5873818-de35-43ba-8e15-b89aefcffbb8.png">

Any luck on resolving this?

[GitHub] [airflow] imamdigmi edited a comment on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

imamdigmi edited a comment on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-957131016

I have the same problem, in my cluster there are 6 scheduler replicas, and all replicas are experiencing the same thing, using Airflow 2.1.3 with KubernetesExecutor (kube 1.20)

cc: @totalhack @kaxil

[GitHub] [airflow] steveipkis edited a comment on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

steveipkis edited a comment on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-1018780201

I'm also facing the exact same issue: Airflow 2.1.4

Number of Schedulers: 1

<img width="1138" alt="Screen Shot 2022-01-21 at 12 38 51 PM" src="https://user-images.githubusercontent.com/14340902/150584927-c5873818-de35-43ba-8e15-b89aefcffbb8.png">

Any luck on resolving this?

[GitHub] [airflow] imamdigmi commented on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

imamdigmi commented on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-977584334

Not yet @DuyHV20150601 , I still got the same error

[GitHub] [airflow] tg295 commented on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

tg295 commented on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-998952171

I am also experiencing this issue. Airflow 2.2.1 - Celery Executor.

[GitHub] [airflow] totalhack edited a comment on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

totalhack edited a comment on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-758968595

Below is my dag with some placeholders substituted. Note that this isn't even running (or attempting to run) when the problem occurs, as it's scheduled to run once a day. I was able to run this dag successfully.

I also have several other disabled maintenance dags from [here](https://github.com/teamclairvoyant/airflow-maintenance-dags/tree/airflow2.0). They were not run or enabled around the time that the issue occurred but had run fine in previous testing.

I have moved on to getting another scheduling solution in place as this effort is time-sensitive, but I will see if I can reproduce this quickly with debug logging and get back to you. I assume you just mean setting logging->logging_level to `DEBUG` in my cfg; if you had other specific debug flags you wanted enabled, let me know.

```python
import os

import airflow
from airflow import DAG
from airflow.providers.docker.operators.docker import DockerOperator

IMAGE = os.getenv("IMAGE")
assert IMAGE, "No IMAGE defined"

default_args = {
    "owner": "[redacted]",
    "start_date": airflow.utils.dates.days_ago(1),
    "email": ["redacted"],
    "email_on_failure": True,
    "email_on_retry": False,
    "depends_on_past": False,
    "retries": 0,
}

dag = DAG(
    "some_dag_id",
    default_args=default_args,
    schedule_interval="15 7 * * *",
    catchup=False,
)

t1 = DockerOperator(
    task_id="some_task_id",
    image=IMAGE,
    command="python /app/some_python_script.py",
    api_version="auto",
    auto_remove=True,
    dag=dag,
)
```

[GitHub] [airflow] totalhack commented on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

totalhack commented on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-759018262

So I've been running it with debug logging for 20 minutes now without issues. It previously happened within a matter of minutes every time. The only thing I changed since yesterday was to downgrade Docker Desktop for Mac to 2.5.0.1, as 3.0.4 was giving me issues in another project. But perhaps that was causing some issues here too.

Since I can't reproduce this I will close this for now, and reopen if it pops up again when I revisit Airflow.

Thanks.

[GitHub] [airflow] boring-cyborg[bot] commented on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

boring-cyborg[bot] commented on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-758354307

Thanks for opening your first issue here! Be sure to follow the issue template!

[GitHub] [airflow] imamdigmi edited a comment on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

imamdigmi edited a comment on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-977584334

Not yet @DuyHV20150601, I still get the same error. Maybe this https://github.com/apache/airflow/issues/17507#issuecomment-973177410 can solve the issue, but I haven't tested it yet.

[GitHub] [airflow] totalhack closed issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

totalhack closed issue #13625:

URL: https://github.com/apache/airflow/issues/13625

[GitHub] [airflow] DuyHV20150601 commented on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

DuyHV20150601 commented on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-974832422

> I have the same problem, in my cluster there are 6 scheduler replicas, and all replicas are experiencing the same thing, using Airflow 2.1.3 with KubernetesExecutor (kube 1.20)

>

> cc: @totalhack @kaxil

Hi, did you resolve this issue? I'm facing exactly the same issue but have no idea how to tackle it :(

[GitHub] [airflow] kaxil commented on issue #13625: Scheduler Dying/Hanging v2.0.0: WARNING - Killing DAGFileProcessorProcess

Posted by GitBox <gi...@apache.org>.

kaxil commented on issue #13625:

URL: https://github.com/apache/airflow/issues/13625#issuecomment-758879899

Can you add more details to this please?

Post your DAG, and enable debug logging and add the log lines immediately before the `{scheduler_job.py:262} WARNING - Killing DAGFileProcessorProcess`.
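For anyone following along, one way to turn on debug logging in a containerized setup is through Airflow's `AIRFLOW__{SECTION}__{KEY}` environment variable convention, which overrides the matching `airflow.cfg` entry (here `[logging] logging_level`). The sketch below only builds the variable name and sets it for the current process; `airflow_env_var` is a hypothetical helper, not an Airflow function.

```python
import os


def airflow_env_var(section, key):
    """Build the environment variable name that overrides an airflow.cfg
    option, following the AIRFLOW__{SECTION}__{KEY} convention."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


name = airflow_env_var("logging", "logging_level")

# Setting it here only affects this process and its children; in a
# docker-compose setup the same name/value pair would go into the
# scheduler service's environment instead.
os.environ[name] = "DEBUG"
print(f"{name}={os.environ[name]}")
```

The equivalent change in `airflow.cfg` is `logging_level = DEBUG` under the `[logging]` section.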
