
Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2022/06/07 03:01:11 UTC

[GitHub] [hudi] jjtjiang opened a new issue, #5777: [SUPPORT] Hudi table has duplicate data.

jjtjiang opened a new issue, #5777:

URL: https://github.com/apache/hudi/issues/5777

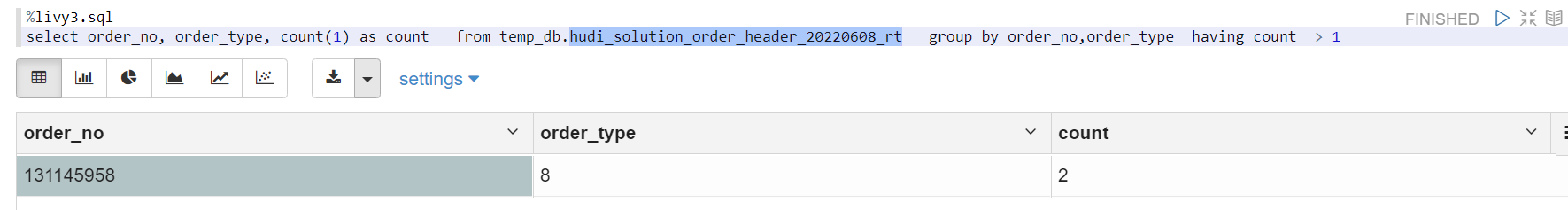

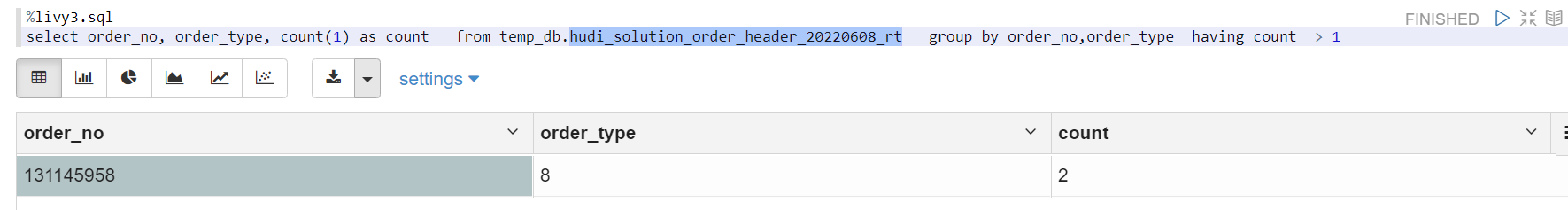

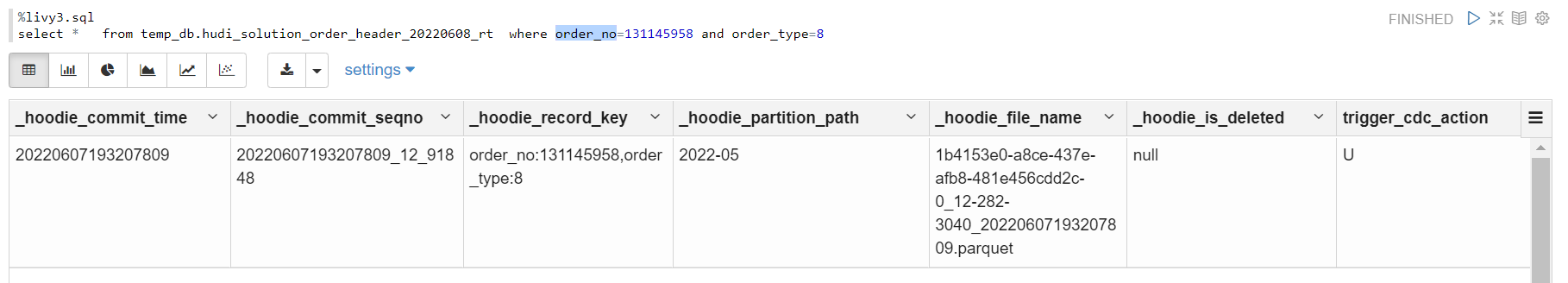

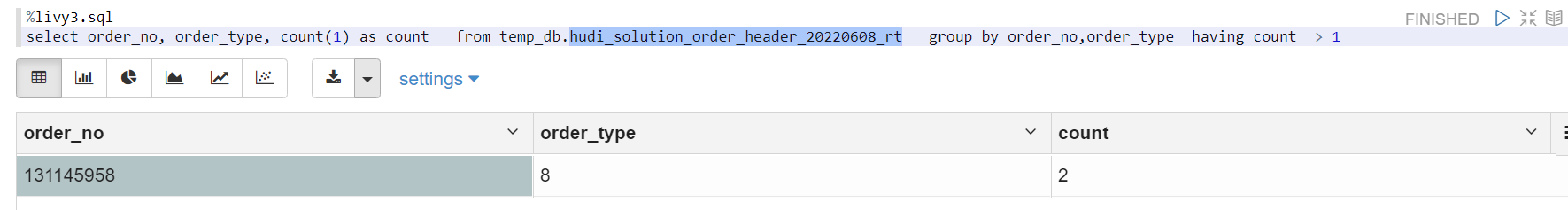

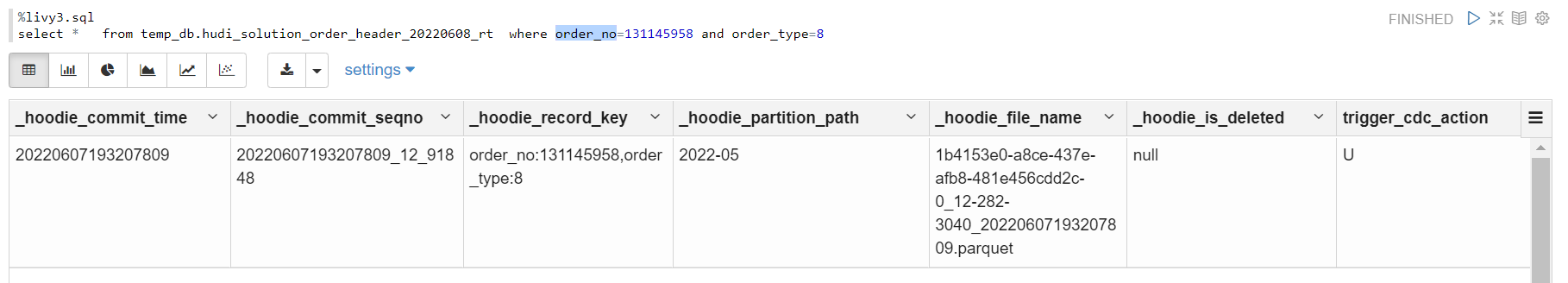

Our Hudi table has duplicate data. All of the rows below share the same primary key, and as I understand it there should be only one. Can you help me see what went wrong?

The duplicate rows are as follows:

<img width="882" alt="2022-06-07_110034" src="https://user-images.githubusercontent.com/48897688/172286544-edcbbbb6-ba55-40b0-97c6-b35dc9c2d6b2.png">

<html xmlns:o="urn:schemas-microsoft-com:office:office"

xmlns:x="urn:schemas-microsoft-com:office:excel"

xmlns="http://www.w3.org/TR/REC-html40">


<body link="#0563C1" vlink="#954F72">

_hoodie_commit_time,_hoodie_commit_seqno,_hoodie_record_key,_hoodie_partition_path,_hoodie_file_name,_hoodie_is_deleted,trigger_cdc_action,order_type,order_no,u_version,from_acct_no,from_loc_no,from_contact_no,from_dept_no,from_inv_type,to_acct_no,to_loc_no,to_contact_no,to_dept_no,to_inv_type,ship_to_name,ship_to_addr,ship_to_po_box,ship_to_city,ship_to_state,ship_to_country,ship_to_zip,account_rep,mt_expense_code,int_ref_no,int_ref_type,ext_ref,issue_date,credit_rel_date,pick_date,manifest_date,ship_date,invoice_date,posting_date,expected_date,receiving_date,closed_date,printed_date,delete_date,terms_no,carrier_no,ship_method,freight,resale,sales_terr,credit_rel_code,it_cost_code,sales_tax,entry_datetime,entry_id,total_order,total_cost,sales_total,head_exp_total,sales_rel_date,delete_id,detail_exp_total,rma_disp_type,repick_id,repick_counter,invoice_id,invoice_counter,total_weight,hold_date,hold_id,drop_ship,detail_price_total,ship_to_loc,ship_to_loc_change,q_userid,label_printed,label_date,dist_exp_date,prod_exp_date,bol_date,bol_printed,qc_date,schedule_date,approval,fx_total_order,fx_total_cost,fx_sales_total,fx_head_exp_total,fx_detail_exp_total,fx_detail_price_total,h_version,profile_special_handle,fx_currency,company_no,trigger_entry_datetime,cdc_system_time_long,kafka_time_long,process_time_long,date_flag

--

20220522195124598,20220522195124598_15_72084,"order_no:128920931,order_type:1",2022-05,aff347c3-4aca-4ea6-9a3d-77701fa02a50-0_15-1208-76812_20220522195124598.parquet,null,U,1,128920931,>,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-23 00:38:26.49,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653266468878,1653266471000,1653274311465,2022-05

20220525062849999,20220525062849999_37_35812,"order_no:128920931,order_type:1",2022-05,dfb96435-4a83-4346-97c8-c0e3b40f44df-0_37-3690-222861_20220525062849999.parquet,null,U,1,128920931,E,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-25 11:52:28.79,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653479708171,1653479711000,1653485345674,2022-05

20220525114545803,20220525114545803_10_69828,"order_no:128920931,order_type:1",2022-05,b38e7425-b49b-46d8-8d70-adc6444d369b-0_10-11994-786824_20220525114545803.parquet,null,U,1,128920931,F,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-25 18:42:59.437,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653504308578,1653504312000,1653504349920,2022-05

20220520034330656,20220520034330656_54_1564586,"order_no:128920931,order_type:1",2022-02,bf3af60a-6405-4792-a8ff-d1c824011fa4-0_54-134-2854_20220520034330656.parquet,null,,1,128920931,n,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,NL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,20988.00000000,50544.00000000,50332.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-29344.00000000,0,null,11458,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,0,1653043461263,0,2022-02

20220524115142629,20220524115142629_14_30201,"order_no:128920931,order_type:1",2022-05,3904d669-229f-45f5-8a79-7bcda320406d-0_14-11869-823623_20220524115142629.parquet,null,U,1,128920931,C,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-24 18:44:09.5,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653417908230,1653417912000,1653418304341,2022-05

20220524044927672,20220524044927672_16_158057,"order_no:128920931,order_type:1",2022-05,d2faa7c0-9a9f-4faa-b0ac-466a4bb21104-0_16-46454-3436140_20220524044927672.parquet,null,U,1,128920931,B,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-24 11:40:19.907,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653392708055,1653392709000,1653392968513,2022-05

20220524174827145,20220524174827145_15_55171,"order_no:128920931,order_type:1",2022-05,d15aea03-89cd-4263-a626-a416891998e2-0_15-22714-1584773_20220524174827145.parquet,null,U,1,128920931,D,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-25 00:42:33.05,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653439508357,1653439510000,1653439707814,2022-05

20220523115526440,20220523115526440_23_115931,"order_no:128920931,order_type:1",2022-05,5c70f601-24ca-4127-a78d-eabc751c157e-0_23-22412-1675712_20220523115526440.parquet,null,U,1,128920931,@,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-23 18:42:54.14,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653331868795,1653331872000,1653332128186,2022-05

20220523175302219,20220523175302219_12_131223,"order_no:128920931,order_type:1",2022-05,be4cd338-ed7f-4f77-a68a-ec5827b2f284-0_12-30844-2300078_20220523175302219.parquet,null,U,1,128920931,A,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-24 00:47:08.583,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653353468804,1653353472000,1653353582933,2022-05

20220526054635082,20220526054635082_14_53437,"order_no:128920931,order_type:1",2022-02,e6486128-d1bd-4502-93bf-a2a62487b57a-0_14-3242-194309_20220526054635082.parquet,null,U,1,128920931,H,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-26 11:40:43.31,1653565508332,1653565510000,1653569205882,2022-02

20220525175440741,20220525175440741_18_112555,"order_no:128920931,order_type:1",2022-05,8b66c4b2-41d3-411b-b47a-365d66c30545-0_18-20905-1394340_20220525175440741.parquet,null,U,1,128920931,G,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-26 00:46:51.087,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653526208426,1653526211000,1653526481205,2022-05

20220526115135082,20220526115135082_20_45687,"order_no:128920931,order_type:1",2022-02,8fa2c068-5e63-492c-936e-44784384514d-0_20-14278-1055005_20220526115135082.parquet,null,U,1,128920931,I,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-26 18:49:47.27,1653591008450,1653591012000,1653591097211,2022-02

20220526175131339,20220526175131339_62_76091,"order_no:128920931,order_type:1",2022-02,81653f9b-e473-49cb-b741-3f5e5b6149c9-0_62-24975-1905648_20220526175131339.parquet,null,U,1,128920931,J,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-27 00:46:49,1653612608702,1653612611000,1653612692463,2022-02

20220527114624539,20220527114624539_3_104377,"order_no:128920931,order_type:1",2022-02,98e64140-374e-4d6b-939a-c0479174402d-0_3-54741-4245047_20220527114624539.parquet,null,U,1,128920931,L,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-27 18:44:53.437,1653677108696,1653677113000,1653677187264,2022-02

20220527045306746,20220527045306746_129_93388,"order_no:128920931,order_type:1",2022-02,c4723a41-d3fd-405d-9f09-ac97d77f5f9c-0_129-42406-3257055_20220527045306746.parquet,null,U,1,128920931,K,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-27 11:45:37.757,1653652208382,1653652211000,1653652387720,2022-02

20220527175133889,20220527175133889_76_110514,"order_no:128920931,order_type:1",2022-02,6557dd29-8e3f-476e-ad2c-048cebb2de67-0_76-65473-5129641_20220527175133889.parquet,null,U,1,128920931,r,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-28 00:48:26.95,1653699008185,1653699010000,1653699094483,2022-02

20220523044612964,20220523044612964_12_89601,"order_no:128920931,order_type:1",2022-05,95b63044-9b39-49ad-bc82-c557545c7590-0_12-13434-1013256_20220523044612964.parquet,null,U,1,128920931,?,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-05-23 11:37:14.83,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,null,1653306068754,1653306071000,1653306373612,2022-05

20220529115029730,20220529115029730_2_136006,"order_no:128920931,order_type:1",2022-02,c724dbb4-823e-4744-963a-e77d5993f0e3-0_2-123894-10301249_20220529115029730.parquet,null,U,1,128920931,w,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-29 18:48:28.79,1653850207907,1653850209000,1653850230521,2022-02

20220527155628105,20220527155628105_17_113704,"order_no:128920931,order_type:1",2022-02,e8345f22-da0e-4b5e-b99e-a32bdd939089-0_17-62157-4858727_20220527155628105.parquet,null,U,1,128920931,p,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,2022-05-27 22:45:31.877,null,null,null,null,null,null,null,null,null,null,null,EE,1,NL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-27 22:51:14.907,1653692108116,1653692110000,1653692188618,2022-02

20220530175142512,20220530175142512_42_142891,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142892,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142893,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142894,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142895,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142896,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142897,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142898,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142899,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530175142512,20220530175142512_42_142900,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet,null,U,1,128920931,{,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-31 00:48:37.117,1653958208152,1653958210000,1653958302844,2022-02

20220530120029394,20220530120029394_1_110476,"order_no:128920931,order_type:1",2022-02,3dcbebeb-0f0f-4909-a6be-99f818158dfd-0_1-159061-13509658_20220530120029394.parquet,null,U,1,128920931,z,null,98,null,null,200,121823,1,1,null,null,"CLARK CONSTRUCTION GROUP, LLC | 15144668",7500 OLD GEORGETOWN RD,,BETHESDA,MD,US,20814,3361,null,115596825,8,15144668,null,null,null,null,null,null,null,null,null,null,null,null,EE,1,EDEL,P,Y,4500,B,null,0E-8,2022-02-23 22:57:04.19,705753,1386.00000000,16692.00000000,16678.00000000,0E-8,null,null,0E-8,null,null,null,null,null,0E-8,null,null,D,-15292.00000000,-5,null,611485,null,null,null,null,null,null,null,null,null,0E-8,0E-8,0E-8,0E-8,0E-8,0E-8,0,null,null,1,2022-05-30 18:56:04.623,1653937208440,1653937210000,1653937230279,2022-02

</body>

</html>
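The exported rows can be grouped by `_hoodie_record_key` to show at a glance how the copies of a key are spread out. The Python sketch below assumes the query result is available as CSV with Hudi's metadata columns. `summarize_duplicates` is a hypothetical helper, and `SAMPLE` is four of the rows above, trimmed to those columns.

```python
import csv
import io
from collections import defaultdict

# Four rows from the table above, trimmed to Hudi's metadata columns.
SAMPLE = """_hoodie_commit_time,_hoodie_commit_seqno,_hoodie_record_key,_hoodie_partition_path,_hoodie_file_name
20220522195124598,20220522195124598_15_72084,"order_no:128920931,order_type:1",2022-05,aff347c3-4aca-4ea6-9a3d-77701fa02a50-0_15-1208-76812_20220522195124598.parquet
20220520034330656,20220520034330656_54_1564586,"order_no:128920931,order_type:1",2022-02,bf3af60a-6405-4792-a8ff-d1c824011fa4-0_54-134-2854_20220520034330656.parquet
20220530175142512,20220530175142512_42_142891,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet
20220530175142512,20220530175142512_42_142892,"order_no:128920931,order_type:1",2022-02,1a02bc31-cb63-4d7d-af81-b34eae295eef-0_42-168602-14384290_20220530175142512.parquet
"""


def summarize_duplicates(csv_text):
    """Group rows by record key and describe every key seen more than once."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[row["_hoodie_record_key"]].append(row)
    summary = {}
    for key, rows in groups.items():
        if len(rows) > 1:
            summary[key] = {
                "copies": len(rows),
                "partitions": sorted({r["_hoodie_partition_path"] for r in rows}),
                "commits": sorted({r["_hoodie_commit_time"] for r in rows}),
                "files": len({r["_hoodie_file_name"] for r in rows}),
            }
    return summary


if __name__ == "__main__":
    for key, info in summarize_duplicates(SAMPLE).items():
        print(key, info)
```

In the full table above, the same key appears in both the `2022-05` and `2022-02` partitions. Ten of its copies were written by commit `20220530175142512` into a single file.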

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] jjtjiang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jjtjiang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1158452178

> And those records will be merged in the compaction process, which could justify the result you see, i.e., no duplication after a while (after the compaction).

We have not enabled deduplication, so `hoodie.datasource.write.insert.drop.duplicates` uses its default value, false.

When we read a MOR table and the log files contain duplicate records, are they not deduplicated by default? My understanding was that they are deduplicated at read time, but maybe I have misunderstood. I will change this parameter and test again.

Thanks very much.
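Whether a MOR read removes duplicates depends on where the copies live. The Python toy model below is a simplified sketch, not Hudi's reader. It assumes records are `(record_key, ordering_value, value)` tuples, and that log records for the same key are combined by keeping the largest ordering (precombine) value. It also assumes merging is done separately for each file group (a base file plus its log files). In Hudi, the exact merge rule depends on the configured payload class. All names here are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical record shape: (record_key, ordering_value, value)
Record = Tuple[str, int, str]


def combine_logs(log_records: List[Record]) -> Dict[str, Record]:
    """Keep one log record per key: the one with the largest ordering value.
    On a tie, the record that appears later in the logs wins."""
    latest: Dict[str, Record] = {}
    for rec in log_records:
        key, ordering, _ = rec
        if key not in latest or ordering >= latest[key][1]:
            latest[key] = rec
    return latest


def read_file_slice(base: List[Record], logs: List[Record]) -> List[Record]:
    """Snapshot read of ONE file group: base records overlaid with the combined
    log records. Simplifications: the log version always replaces the base
    version, and the base file is assumed to hold at most one row per key."""
    latest = combine_logs(logs)
    out = [latest.pop(rec[0], rec) for rec in base]
    out.extend(latest.values())  # keys that only exist in the logs
    return out


def snapshot_read(file_groups: Dict[str, Tuple[List[Record], List[Record]]]) -> List[Record]:
    """Each file group is read on its own, so the same key stored in two
    file groups (e.g. in two partitions) comes back twice."""
    rows: List[Record] = []
    for base, logs in file_groups.values():
        rows.extend(read_file_slice(base, logs))
    return rows


if __name__ == "__main__":
    groups = {
        "fg-in-2022-05": ([("k1", 1, "old")], [("k1", 3, "v3"), ("k1", 2, "v2")]),
        "fg-in-2022-02": ([("k1", 5, "moved")], []),
    }
    print(snapshot_read(groups))  # [('k1', 3, 'v3'), ('k1', 5, 'moved')]
```

In this model, the log records of one file group are combined per key. Copies of a key that sit in different file groups, however, are returned side by side.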


[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1251682905

@jiangjiguang: can you respond to the requests above? We cannot proceed without the further logs and info requested. Whenever you get a chance, please respond.


[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1263111919

Sorry to have dropped the ball on this. Picking it up again.

By the way, I see the config `hoodie.datasource.write.insert.drop.duplicates` was proposed earlier. Do not set it to true: if it is enabled, any record in the incoming batch that already exists in storage will be dropped.
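A minimal Python model of the behaviour described above shows why enabling the flag is risky for an update workload. This is not Hudi's implementation. The `(record_key, payload)` shape and the `drop_existing` name are hypothetical, and here the stored keys are just an in-memory set.

```python
from typing import Iterable, List, Set, Tuple

# Hypothetical record shape: (record_key, payload)
Record = Tuple[str, str]


def drop_existing(incoming: Iterable[Record], existing_keys: Set[str]) -> List[Record]:
    """Model of the behaviour described above: an incoming record whose key
    is already stored is dropped rather than written as an update."""
    return [rec for rec in incoming if rec[0] not in existing_keys]


if __name__ == "__main__":
    stored = {"order_no:1"}
    batch = [("order_no:1", "updated total"), ("order_no:2", "new order")]
    print(drop_existing(batch, stored))  # [('order_no:2', 'new order')]
```

The update to `order_no:1` is silently lost, which is why the flag should stay off when existing records need to be updated.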


[GitHub] [hudi] codope commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by "codope (via GitHub)" <gi...@apache.org>.

codope commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1529562670

Not reproducible with the above details. As pointed out above, none of the common cases in which duplicates may arise seem to apply to this issue. Please reopen if you still see duplicates.


[GitHub] [hudi] codope closed issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by "codope (via GitHub)" <gi...@apache.org>.

codope closed issue #5777: [SUPPORT] Hudi table has duplicate data.

URL: https://github.com/apache/hudi/issues/5777


[GitHub] [hudi] YuweiXiao commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1230421004

@nsivabalan I will take a deep look at it this week!


[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by "nsivabalan (via GitHub)" <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1524667071

Here are the reasons why we might see duplicates. So far, I could not pinpoint any of them for your use case, but if you find anything resembling your use case, let us know.

1. If you are using "insert" or "bulk_insert" as the operation type.

2. If you are using one of the global indices (global_bloom or global_simple) and records migrated from one partition to another, you may see duplicates with a read-optimized query until compaction kicks in. Once compaction completes for the older file group of interest, the duplicates should go away.

3. Also, with a global index, if records migrate from one partition to another and then again to a third partition before compaction can kick in, you may see duplicates even with an RT query. These may not go away even after compaction.

4. Multi-writer scenarios, where two writers ingest the same records concurrently for the first time.

5. If writing via Spark Structured Streaming with "insert" or "bulk_insert", there are chances of duplicates.

I will try to update this comment if I can think of any more reasons.
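As a rough diagnostic for the causes listed above, the duplicated rows can be grouped by `_hoodie_record_key` and their copies compared by partition path and file group. The Scala sketch below works over an in-memory list instead of a Spark DataFrame. `MetaRow`, `DuplicateCheck`, and the rule that the file group ID is the part of `_hoodie_file_name` before the first underscore are assumptions made for illustration, not Hudi APIs. Copies spread across partitions would be consistent with the global-index migration cases (2 and 3). Copies in different file groups of the same partition would point more toward the insert/bulk_insert or multi-writer cases.

```scala
// Hypothetical row shape holding the Hudi meta columns used in this thread.
final case class MetaRow(recordKey: String, partitionPath: String, fileName: String)

object DuplicateCheck {
  // Assumption: the file group ID is the prefix of _hoodie_file_name before the
  // first '_' (base file names look like "<fileId>_<writeToken>_<instant>.parquet";
  // other rows may carry just "<fileId>").
  def fileGroupId(fileName: String): String = fileName.takeWhile(_ != '_')

  // Keeps only record keys that occur more than once.
  def duplicates(rows: Seq[MetaRow]): Map[String, Seq[MetaRow]] =
    rows.groupBy(_.recordKey).filter { case (_, copies) => copies.size > 1 }

  // Rough classification of where the copies of one duplicated key live.
  def classify(copies: Seq[MetaRow]): String =
    if (copies.map(_.partitionPath).distinct.size > 1) "cross-partition"
    else if (copies.map(r => fileGroupId(r.fileName)).distinct.size > 1) "same partition, multiple file groups"
    else "same file group"
}
```

In practice the input would come from a `select _hoodie_record_key, _hoodie_partition_path, _hoodie_file_name ...` query like the one later in this thread, collected for a handful of suspicious keys.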

Re: [I] [SUPPORT] Hudi table has duplicate data. [hudi]

Posted by "chenbodeng719 (via GitHub)" <gi...@apache.org>.

chenbodeng719 commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1970368618

@nsivabalan I have the same issue. Below is my Flink Hudi config.

```
CREATE TABLE hudi_sink(
  new_uid STRING PRIMARY KEY NOT ENFORCED,
  uid STRING,
  oridata STRING,
  part INT,
  user_update_date STRING,
  update_time TIMESTAMP_LTZ(3)
) PARTITIONED BY (
  `part`
) WITH (
  'table.type' = 'MERGE_ON_READ',
  'connector' = 'hudi',
  'path' = '%s',
  'write.operation' = 'upsert',
  'precombine.field' = 'update_time',
  'write.tasks' = '%s',
  'index.type' = 'BUCKET',
  'hoodie.bucket.index.hash.field' = 'new_uid',
  'hoodie.bucket.index.num.buckets' = '%s',
  'clean.retain_commits' = '0',
  'compaction.async.enabled' = 'false'
)
```
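With `'index.type' = 'BUCKET'`, each record is routed to a bucket (file group) within its partition based on the value of `hoodie.bucket.index.hash.field`. The sketch below is a simplified model of that routing. It assumes a non-negative `String.hashCode` modulo `hoodie.bucket.index.num.buckets`, which is not necessarily Hudi's own hash function, and `BucketSketch` is a hypothetical name. The property it illustrates is that the mapping only stays stable while both the hash field and the bucket count stay the same across all writes to the table. A job that passes a different value into the `'%s'` placeholder for the bucket count would therefore be one thing to rule out.

```scala
object BucketSketch {
  // Simplified bucket routing: non-negative String.hashCode of the hash-field
  // value, modulo the bucket count. Hudi's real hash function may differ.
  def bucketId(hashFieldValue: String, numBuckets: Int): Int = {
    require(numBuckets > 0, "numBuckets must be positive")
    (hashFieldValue.hashCode & Int.MaxValue) % numBuckets
  }
}
```

For a fixed bucket count the same `new_uid` always maps to the same bucket. With a different count, the same key can land in a different bucket.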

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1263114829

I see you have given test data. Is everything ingested in a single commit, or across different commits? Your reproduction script is not very clear on this.

[GitHub] [hudi] jjtjiang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jjtjiang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1149382055

At the beginning, I used GLOBAL_BLOOM to initialize the historical data, and then switched to BLOOM for the incremental data. I haven't changed the record key field or the partition path field. In the test environment I have been using Hudi for a while, and many tables have duplicate data. These tables are all handled the same way, so the cause is probably the same. Can changing the index type cause this kind of problem? If so, the index change may be the cause here.
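The difference between the two index types can be sketched in a few lines. A global index such as GLOBAL_BLOOM looks a key up across all partitions, while a non-global one such as BLOOM only looks inside the partition the incoming record maps to. The Scala model below ignores bloom filters and file key ranges entirely and uses hypothetical names (`IndexLookupSketch`, an in-memory partition-to-keys map). Under this model, switching from GLOBAL_BLOOM to BLOOM only matters for duplicates if a key's partition path value can change between writes. In that case the BLOOM lookup misses the old copy, and the upsert lands as a new record in the new partition.

```scala
object IndexLookupSketch {
  // Hypothetical in-memory view of existing records: partition path -> record keys.
  type Existing = Map[String, Set[String]]

  // Global-style lookup: every partition is searched for the key.
  def globalLookup(existing: Existing, key: String): Option[String] =
    existing.collectFirst { case (partition, keys) if keys.contains(key) => partition }

  // Non-global lookup: only the incoming record's partition is searched.
  def partitionLookup(existing: Existing, key: String, incomingPartition: String): Option[String] =
    existing.get(incomingPartition).filter(_.contains(key)).map(_ => incomingPartition)
}
```

A `None` from `partitionLookup` for a key that `globalLookup` does find corresponds to the case where a non-global index would write a second copy.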

Re: [I] [SUPPORT] Hudi table has duplicate data. [hudi]

Posted by "chenbodeng719 (via GitHub)" <gi...@apache.org>.

chenbodeng719 commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1970657108

> @chenbodeng719 Can you please create a new issue with all the details about hudi/spark versions and steps to reproduce. Thanks.

ok

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1289935566

@jiangjiguang: can you please respond? I need more information so that we can reproduce this on our end.

[GitHub] [hudi] YuweiXiao commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1233701226

@jjtjiang Hey, could you post the contents of `hoodie.properties` under the `.hoodie` folder? And which version of Hudi are you using?

Could you try testing with the latest master code? I tested in my local environment and the log merging logic works properly.
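For reference, `hoodie.properties` is a plain Java properties file under `<base path>/.hoodie/`, so it can be read with `java.util.Properties`. The sketch below assumes the table sits on a local filesystem. A table on HDFS or S3 would need a Hadoop `FileSystem` to open the file, which is not shown. `TableConfigSketch` is a hypothetical helper. Keys such as `hoodie.table.type` and `hoodie.table.version` are typically the ones of interest here, though the exact set of keys varies by Hudi version.

```scala
import java.io.{File, FileInputStream}
import java.util.Properties

object TableConfigSketch {
  // Loads <basePath>/.hoodie/hoodie.properties from a local path.
  def load(basePath: String): Properties = {
    val file = new File(new File(basePath, ".hoodie"), "hoodie.properties")
    val props = new Properties()
    val in = new FileInputStream(file)
    try props.load(in) finally in.close()
    props
  }
}
```

Usage (hypothetical path): `TableConfigSketch.load("/tmp/my_table").getProperty("hoodie.table.type")`.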

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1149123645

@YuweiXiao: I will let you take it from here. Once we have answers to the questions above, it should be easier to narrow this down.

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1302883337

@jiangjiguang: oops, sorry.

@jjtjiang: can you respond to my comments above when you get a chance?

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1263114477

@jiangjiguang: I did not realize you had given us a reproducible code snippet. So, from what you have given above, you see duplicate data with the MOR RT query?

[GitHub] [hudi] jjtjiang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jjtjiang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1158711388

> Yes, it should do dedup for log files. I'll test the default behavior when reading, to see if there is any potential bug.

This is my code and some test data.

Code:

import org.apache.hudi.DataSourceWriteOptions
import org.apache.hudi.common.config.HoodieMetadataConfig
import org.apache.hudi.config.{HoodieCompactionConfig, HoodieIndexConfig, HoodieMemoryConfig, HoodieWriteConfig}
import org.apache.hudi.hive.{MultiPartKeysValueExtractor, NonPartitionedExtractor}
import org.apache.hudi.index.HoodieIndex
import org.apache.hudi.keygen.{ComplexKeyGenerator, NonpartitionedKeyGenerator}
import org.apache.spark.sql.{DataFrameWriter, Dataset, Row, SaveMode}

// Call site: spark, rdd, schemaNew and the two property beans are created elsewhere in the job.
val dsNew = spark.createDataFrame(rdd, schemaNew)
// dsNew.show(10,false)
HudiForeachBatchDeleteFunction.updateDsw(dsNew, hivePropertiesBean, hudiPropertiesBean)

case class HivePropertiesBean(
  var hiveUrl: String,
  var hiveUsername: String,
  var hivePassword: String)

case class HudiPropertiesBean(
  var hudiTableType: String,
  var hudiHiveDatabase: String,
  var hudiTableName: String,
  var hudiLoadPath: String,
  var hudiIndexId: String,
  var hudiTimestamp: String,
  var hasPartition: String,
  var hudiPartition: String,
  var partitionDateField: String,
  var partitionDateType: String,
  var initHdfsPath: String,
  var payload: String
)

object HudiForeachBatchDeleteFunction {

  val hudiOrgPro = "hudi"

  def writeOptions(dfRow: DataFrameWriter[Row], hivePropertiesBean: HivePropertiesBean, hudiPropertiesBean: HudiPropertiesBean): Unit = {
    val newDfRow: DataFrameWriter[Row] = partitionType(dfRow, hudiPropertiesBean)
    newDfRow
      .option(DataSourceWriteOptions.PAYLOAD_CLASS_NAME.key(), hudiPropertiesBean.payload)
      .option(DataSourceWriteOptions.STREAMING_IGNORE_FAILED_BATCH.key(), "false")
      .option(DataSourceWriteOptions.TABLE_TYPE.key(), hudiPropertiesBean.hudiTableType)
      .option(HoodieWriteConfig.TBL_NAME.key(), hudiPropertiesBean.hudiTableName)
      .option(DataSourceWriteOptions.RECORDKEY_FIELD.key(), hudiPropertiesBean.hudiIndexId)
      .option(DataSourceWriteOptions.PRECOMBINE_FIELD.key(), hudiPropertiesBean.hudiTimestamp)
      .option(DataSourceWriteOptions.HIVE_SYNC_ENABLED.key(), "true")
      .option(DataSourceWriteOptions.HIVE_DATABASE.key(), hudiPropertiesBean.hudiHiveDatabase)
      .option(DataSourceWriteOptions.HIVE_TABLE.key(), hudiPropertiesBean.hudiTableName)
      .option(DataSourceWriteOptions.HIVE_URL.key(), hivePropertiesBean.hiveUrl)
      .option(DataSourceWriteOptions.HIVE_USER.key(), hivePropertiesBean.hiveUsername)
      .option(DataSourceWriteOptions.HIVE_PASS.key(), hivePropertiesBean.hivePassword)
      .option(HoodieWriteConfig.INSERT_PARALLELISM_VALUE.key, "40")
      .option(HoodieWriteConfig.BULKINSERT_PARALLELISM_VALUE.key, "40")
      .option(HoodieWriteConfig.UPSERT_PARALLELISM_VALUE.key, "40")
      .option(HoodieWriteConfig.DELETE_PARALLELISM_VALUE.key, "40")
      .option(DataSourceWriteOptions.INSERT_DROP_DUPS.key, "true")
      // .option("hoodie.sql.insert.mode","strict")
      // index options
      .option(HoodieIndexConfig.INDEX_TYPE.key(), HoodieIndex.IndexType.BLOOM.name())
      // Per the Hudi configuration docs, this flag only applies when the index type is GLOBAL_BLOOM.
      .option(HoodieIndexConfig.BLOOM_INDEX_UPDATE_PARTITION_PATH_ENABLE.key(), "true")
      // .option(HoodieStorageConfig.PARQUET_COMPRESSION_CODEC_NAME.key(), "snappy")
      // others
      // .option(DataSourceWriteOptions.RECONCILE_SCHEMA.key(), value = true)
      .option(DataSourceWriteOptions.HIVE_SKIP_RO_SUFFIX_FOR_READ_OPTIMIZED_TABLE.key(), value = true)
      // clustering
      // .option(DataSourceWriteOptions.ASYNC_CLUSTERING_ENABLE.key, value = true)
      // .option(DataSourceWriteOptions.INLINE_CLUSTERING_ENABLE.key(), value = true)
      // .option(HoodieClusteringConfig.ASYNC_CLUSTERING_MAX_COMMITS.key, "4")
      // .option(HoodieClusteringConfig.INLINE_CLUSTERING_MAX_COMMITS.key(), "4")
      // .option(HoodieClusteringConfig.PLAN_STRATEGY_TARGET_FILE_MAX_BYTES.key(), String.valueOf(120 * 1024 * 1024L))
      // .option(HoodieClusteringConfig.PLAN_STRATEGY_SMALL_FILE_LIMIT.key(), String.valueOf(80 * 1024 * 1024L))
      // .option(HoodieClusteringConfig.PLAN_STRATEGY_SORT_COLUMNS.key(), hudiPropertiesBean.hudiTimestamp)
      // .option(HoodieClusteringConfig.UPDATES_STRATEGY.key(), "org.apache.hudi.client.clustering.update.strategy.SparkAllowUpdateStrategy")
      // .option(HoodieClusteringConfig.PRESERVE_COMMIT_METADATA.key(), value = true)
      // merge / compaction memory
      .option(HoodieMemoryConfig.MAX_MEMORY_FRACTION_FOR_MERGE.key(), "0.8")
      .option(HoodieMemoryConfig.MAX_MEMORY_FRACTION_FOR_COMPACTION.key(), "0.8")
      // metadata config
      .option(HoodieMetadataConfig.COMPACT_NUM_DELTA_COMMITS.key(), "240")
      // compaction
      .option(HoodieCompactionConfig.INLINE_COMPACT_NUM_DELTA_COMMITS.key(), "5")
      .option(HoodieCompactionConfig.ASYNC_CLEAN.key(), "true")
      // .option(HoodieCompactionConfig.COMPACTION_LAZY_BLOCK_READ_ENABLE.key(), "true")
      .option(HoodieMetadataConfig.ENABLE.key(), "true")
      .mode(SaveMode.Append)
      .save(hudiPropertiesBean.hudiLoadPath)
  }

  // DataFrameWriter.option mutates the writer in place and returns it, so the
  // options set in either branch are still present on the dfRow returned below.
  def partitionType(dfRow: DataFrameWriter[Row], hudiPropertiesBean: HudiPropertiesBean): DataFrameWriter[Row] = {
    if (hudiPropertiesBean.hasPartition == "true") {
      dfRow
        .option(DataSourceWriteOptions.PARTITIONPATH_FIELD.key(), hudiPropertiesBean.hudiPartition)
        .option(DataSourceWriteOptions.KEYGENERATOR_CLASS_NAME.key(), classOf[ComplexKeyGenerator].getName)
        .option(DataSourceWriteOptions.HIVE_PARTITION_FIELDS.key(), hudiPropertiesBean.hudiPartition)
        .option(DataSourceWriteOptions.HIVE_PARTITION_EXTRACTOR_CLASS.key(), classOf[MultiPartKeysValueExtractor].getName)
    } else {
      dfRow
        .option(DataSourceWriteOptions.PARTITIONPATH_FIELD.key(), "")
        .option(DataSourceWriteOptions.KEYGENERATOR_CLASS_NAME.key(), classOf[NonpartitionedKeyGenerator].getName)
        .option(DataSourceWriteOptions.HIVE_PARTITION_FIELDS.key(), "")
        .option(DataSourceWriteOptions.HIVE_PARTITION_EXTRACTOR_CLASS.key(), classOf[NonPartitionedExtractor].getName)
    }
    dfRow
  }

  def updateDsw(upsertDs: Dataset[Row], hivePropertiesBean: HivePropertiesBean, hudiPropertiesBean: HudiPropertiesBean): Unit = {
    val upsertDsw = upsertDs.toDF().write.format(hudiOrgPro)
      .option(DataSourceWriteOptions.OPERATION.key(), DataSourceWriteOptions.UPSERT_OPERATION_OPT_VAL)
    HudiForeachBatchDeleteFunction.writeOptions(upsertDsw, hivePropertiesBean, hudiPropertiesBean)
  }

  // def deleteDsw(deleteDs: Dataset[Row], hivePropertiesBean: HivePropertiesBean, hudiPropertiesBean: HudiPropertiesBean): Unit = {
  //   val deleteDsDsw = deleteDs.toDF().write.format(hudiOrgPro)
  //     .option(DataSourceWriteOptions.OPERATION.key(), DataSourceWriteOptions.DELETE_OPERATION_OPT_VAL)
  //     .option(HoodieWriteConfig.WRITE_PAYLOAD_CLASS_NAME.key(), classOf[EmptyHoodieRecordPayload].getName)
  //   HudiForeachBatchDeleteFunction.writeOptions(deleteDsDsw, hivePropertiesBean, hudiPropertiesBean)
  // }

}

Test data:

{"cdc_trans_id":"0c72a1a3-9c22-4e2f-972f-5a26b75c3e7d","src_db_type":"sybase","cdc_system_time":"2022-06-17 02:55:07.927","src_timezone":"America/Los_Angeles","src_table_schema":"CIS","src_table_alias":"corp","src_table_name":"order_header","cdc_system_time_long":1655459707927,"src_table_jndi":"CORPSB","json_data":{"prod_exp_date":null,"entry_datetime":"2022-06-15 12:03:01.353","closed_date":"2022-06-16 18:05:31.447","printed_date":"2022-06-17 02:55:06.040","total_cost":221.6400,"from_inv_type":1,"ship_to_po_box":"C/O VDC CORP.","hold_id":null,"label_printed":null,"fx_detail_price_total":0.0000,"ship_to_loc":null,"fx_detail_exp_total":0.0000,"ship_to_zip":"78852","bol_date":null,"ship_method":"FG","invoice_id":null,"to_dept_no":null,"bol_printed":null,"order_type":1,"to_contact_no":1,"credit_rel_code":"B","trigger_order_no":131610413,"trigger_cdc_content":null,"from_acct_no":null,"head_exp_total":0.0000,"trigger_sid":955534244,"sales_terr":4592,"trigger_order_type":1,"it_cost_code":null,"ship_to_country":"US","schedule_date":null,"from_contact_no":null,"ship_to_state":"TX","account_rep":9396,"receiving_date":null,"from_loc_no":5,"hold_date":null,"fx_total_cost":0.0000,"delete_id":null,"repick_counter":null,"expected_date":null,"sales_total":229.4400,"fx_total_order":0.0000,"from_dept_no":null,"dist_exp_date":null,"fx_head_exp_total":0.0000,"resale":"Y","detail_price_total":0.0000,"repick_id":null,"company_no":1,"entry_id":696828,"source_table":"order_header","label_date":null,"order_no":131610413,"sales_rel_date":"2022-06-15 12:04:15.960","qc_date":"2022-06-16 08:40:34.790","trigger_cdc_action":"U","u_version":"N","rma_disp_type":null,"freight":"P","ship_to_addr":"1025 ADAMS CIRCLE","q_userid":696828,"ship_to_name":"LITTELFUSE MEX. MFG. B.V. 80,2747152/ISMAEL JULIAN","to_loc_no":1,"total_weight":1.0000,"invoice_date":"2022-06-16 23:00:00.000","total_order":229.4400,"h_version":1,"issue_date":"2022-06-15 12:03:03.797","ship_to_city":"EAGLE PASS","delete_date":null,"pick_date":"2022-06-15 12:57:59.000","posting_date":"2022-06-16 23:00:00.000","manifest_date":null,"int_ref_type":8,"ext_ref":"P22574301","terms_no":"JJ","ship_to_loc_change":null,"fx_currency":null,"credit_rel_date":"2022-06-15 12:08:25.230","profile_special_handle":null,"approval":"Autocred","drop_ship":"D","trigger_entry_datetime":"2022-06-17 02:55:06.580","mt_expense_code":null,"to_acct_no":107685,"sales_tax":null,"detail_exp_total":0.0000,"to_inv_type":null,"date_flag":"2022-06-15 00:00:00.000","carrier_no":1,"int_ref_no":118365916,"invoice_counter":-100,"ship_date":"2022-06-16 08:45:31.940","fx_sales_total":0.0000}}

{"cdc_trans_id":"0c72a1a3-9c22-4e2f-972f-5a26b75c3e7d","src_db_type":"sybase","cdc_system_time":"2022-06-17 02:55:07.927","src_timezone":"America/Los_Angeles","src_table_schema":"CIS","src_table_alias":"corp","src_table_name":"order_header","cdc_system_time_long":1655459707927,"src_table_jndi":"CORPSB","json_data":{"prod_exp_date":null,"entry_datetime":"2022-06-15 12:42:05.427","closed_date":"2022-06-16 18:05:20.860","printed_date":"2022-06-17 02:55:06.663","total_cost":85.6100,"from_inv_type":1,"ship_to_po_box":"S56109749/Scott Stockton","hold_id":null,"label_printed":null,"fx_detail_price_total":0.0000,"ship_to_loc":null,"fx_detail_exp_total":0.0000,"ship_to_zip":"37914","bol_date":null,"ship_method":"FG","invoice_id":null,"to_dept_no":null,"bol_printed":null,"order_type":1,"to_contact_no":null,"credit_rel_code":"B","trigger_order_no":131611668,"trigger_cdc_content":null,"from_acct_no":null,"head_exp_total":0.0000,"trigger_sid":955534245,"sales_terr":4502,"trigger_order_type":1,"it_cost_code":null,"ship_to_country":"US","schedule_date":null,"from_contact_no":null,"ship_to_state":"TN","account_rep":9396,"receiving_date":null,"from_loc_no":7,"hold_date":null,"fx_total_cost":0.0000,"delete_id":null,"repick_counter":null,"expected_date":null,"sales_total":91.0800,"fx_total_order":0.0000,"from_dept_no":null,"dist_exp_date":null,"fx_head_exp_total":0.0000,"resale":"Y","detail_price_total":-22.3500,"repick_id":null,"company_no":1,"entry_id":-10706,"source_table":"order_header","label_date":null,"order_no":131611668,"sales_rel_date":"2022-06-15 12:43:20.837","qc_date":"2022-06-16 07:35:22.810","trigger_cdc_action":"U","u_version":"+","rma_disp_type":null,"freight":"P","ship_to_addr":"3015 E Governor John Sevier Hwy","q_userid":null,"ship_to_name":"ATC Drivetrain","to_loc_no":1,"total_weight":2.1000,"invoice_date":"2022-06-16 23:00:00.000","total_order":68.7300,"h_version":1,"issue_date":"2022-06-15 12:42:08.913","ship_to_city":"KNOXVILLE","delete_date":null,"pick_date":"2022-06-15 12:48:40.787","posting_date":"2022-06-16 23:00:00.000","manifest_date":null,"int_ref_type":8,"ext_ref":"P22575455","terms_no":"JJ","ship_to_loc_change":null,"fx_currency":null,"credit_rel_date":"2022-06-15 12:48:14.787","profile_special_handle":null,"approval":"Autocred","drop_ship":"D","trigger_entry_datetime":"2022-06-17 02:55:07.127","mt_expense_code":null,"to_acct_no":107685,"sales_tax":null,"detail_exp_total":0.0000,"to_inv_type":null,"date_flag":"2022-06-15 00:00:00.000","carrier_no":1,"int_ref_no":118368455,"invoice_counter":-100,"ship_date":"2022-06-16 07:40:32.010","fx_sales_total":0.0000}}

{"cdc_trans_id":"0c72a1a3-9c22-4e2f-972f-5a26b75c3e7d","src_db_type":"sybase","cdc_system_time":"2022-06-17 02:55:07.927","src_timezone":"America/Los_Angeles","src_table_schema":"CIS","src_table_alias":"corp","src_table_name":"order_header","cdc_system_time_long":1655459707927,"src_table_jndi":"CORPSB","json_data":{"prod_exp_date":null,"entry_datetime":"2022-06-15 12:58:38.647","closed_date":"2022-06-16 18:05:36.467","printed_date":"2022-06-17 02:55:07.297","total_cost":117.1200,"from_inv_type":1,"ship_to_po_box":"06152022/","hold_id":null,"label_printed":null,"fx_detail_price_total":0.0000,"ship_to_loc":null,"fx_detail_exp_total":0.0000,"ship_to_zip":"80214","bol_date":null,"ship_method":"FG","invoice_id":null,"to_dept_no":null,"bol_printed":null,"order_type":1,"to_contact_no":null,"credit_rel_code":"B","trigger_order_no":131612158,"trigger_cdc_content":null,"from_acct_no":null,"head_exp_total":0.0000,"trigger_sid":955534246,"sales_terr":4502,"trigger_order_type":1,"it_cost_code":null,"ship_to_country":"US","schedule_date":null,"from_contact_no":null,"ship_to_state":"CO","account_rep":9396,"receiving_date":null,"from_loc_no":7,"hold_date":null,"fx_total_cost":0.0000,"delete_id":null,"repick_counter":null,"expected_date":null,"sales_total":117.7000,"fx_total_order":0.0000,"from_dept_no":null,"dist_exp_date":null,"fx_head_exp_total":0.0000,"resale":"Y","detail_price_total":0.0000,"repick_id":null,"company_no":1,"entry_id":-10706,"source_table":"order_header","label_date":null,"order_no":131612158,"sales_rel_date":"2022-06-15 12:58:47.410","qc_date":"2022-06-16 07:54:02.423","trigger_cdc_action":"U","u_version":"+","rma_disp_type":null,"freight":"P","ship_to_addr":"10811 W Collins Ave","q_userid":null,"ship_to_name":"Terumo Bct","to_loc_no":1,"total_weight":0.7500,"invoice_date":"2022-06-16 23:00:00.000","total_order":117.7000,"h_version":1,"issue_date":"2022-06-15 12:58:42.460","ship_to_city":"LAKEWOOD","delete_date":null,"pick_date":"2022-06-15 13:04:41.063","posting_date":"2022-06-16 23:00:00.000","manifest_date":null,"int_ref_type":8,"ext_ref":"P22575671","terms_no":"JJ","ship_to_loc_change":null,"fx_currency":null,"credit_rel_date":"2022-06-15 13:03:17.197","profile_special_handle":null,"approval":"Autocred","drop_ship":"D","trigger_entry_datetime":"2022-06-17 02:55:07.607","mt_expense_code":null,"to_acct_no":107685,"sales_tax":null,"detail_exp_total":0.0000,"to_inv_type":null,"date_flag":"2022-06-15 00:00:00.000","carrier_no":1,"int_ref_no":118369157,"invoice_counter":-100,"ship_date":"2022-06-16 07:55:06.710","fx_sales_total":0.0000}}

[GitHub] [hudi] YuweiXiao commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1157542841

Hi @jjtjiang, looking at the data you posted, I am wondering whether you enabled the de-duplication option during writing, because there are records with the same key in a single commit (written to log files).

Those records will be merged in the compaction process, which could explain the result you see, i.e., no duplicates after a while (after compaction).

For de-dup options, check out https://hudi.incubator.apache.org/docs/configurations#hoodiedatasourcewriteinsertdropduplicates
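The idea behind de-duplicating within a commit can be sketched as follows. Records in one incoming batch that share a record key are collapsed to a single record, keeping the one with the largest precombine (ordering) value. The Scala model below rests on several assumptions. `Incoming` and `PrecombineSketch` are hypothetical names. The tie-breaking rule (the later record in the batch wins) is chosen only for illustration. In Hudi itself the merge is delegated to the configured payload class. For upserts, this pre-write combining is, to our understanding, governed by `hoodie.combine.before.upsert`, so please check the configuration docs for your version.

```scala
// Hypothetical incoming record: record key, precombine (ordering) value, payload.
final case class Incoming(key: String, ts: Long, value: String)

object PrecombineSketch {
  // Collapses records sharing a key, keeping the largest ordering value.
  // On a tie the later record in the batch wins (an assumption for this sketch).
  def dedupBatch(batch: Seq[Incoming]): Seq[Incoming] =
    batch
      .foldLeft(Map.empty[String, Incoming]) { (acc, r) =>
        acc.get(r.key) match {
          case Some(prev) if prev.ts > r.ts => acc
          case _                            => acc.updated(r.key, r)
        }
      }
      .values
      .toSeq
      .sortBy(_.key)
}
```

Without such a step, all copies of a key from one batch can end up in the same log file and stay visible until compaction merges them, which matches the behavior described above.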

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by "nsivabalan (via GitHub)" <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1524637082

Hey @jjtjiang: for the sample data you have provided, what are the record key field and the partition path field? We are taking a detailed look at all data consistency issues, so we want to get to the bottom of this.

[GitHub] [hudi] jjtjiang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jjtjiang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1152188149

> what

SQL: select _hoodie_commit_time,_hoodie_commit_seqno,_hoodie_record_key,_hoodie_partition_path,_hoodie_file_name from hudi_trips_snapshot where order_no=128920931 and order_type=1

+-------------------+---------------------------+-------------------------------+----------------------+---------------------------------------------------------------------------------+
|_hoodie_commit_time| _hoodie_commit_seqno| _hoodie_record_key|_hoodie_partition_path| _hoodie_file_name|
+-------------------+---------------------------+-------------------------------+----------------------+---------------------------------------------------------------------------------+
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_81_327042|order_no:128920931,order_type:1| 2022-02| 1a02bc31-cb63-4d7d-af81-b34eae295eef-0|
| 20220602081230746|20220602081230746_73_326908|order_no:128920931,order_type:1| 2022-02| 81653f9b-e473-49cb-b741-3f5e5b6149c9-0|
| 20220602081230746|20220602081230746_79_727509|order_no:128920931,order_type:1| 2022-02|9a4700d8-1f27-47dc-98f9-f26bc8259ece-0_79-33673-2654041_20220602081230746.parquet|
| 20220602081230746|20220602081230746_61_326739|order_no:128920931,order_type:1| 2022-02| 98e64140-374e-4d6b-939a-c0479174402d-0|
| 20220602081230746|20220602081230746_74_326931|order_no:128920931,order_type:1| 2022-02| c724dbb4-823e-4744-963a-e77d5993f0e3-0|
| 20220602081230746|20220602081230746_87_327208|order_no:128920931,order_type:1| 2022-02| e6486128-d1bd-4502-93bf-a2a62487b57a-0|
| 20220602081230746|20220602081230746_69_326841|order_no:128920931,order_type:1| 2022-02| 6557dd29-8e3f-476e-ad2c-048cebb2de67-0|
| 20220602081230746|20220602081230746_80_327039|order_no:128920931,order_type:1| 2022-02| 3dcbebeb-0f0f-4909-a6be-99f818158dfd-0|
| 20220602081230746|20220602081230746_75_326962|order_no:128920931,order_type:1| 2022-02| bf3af60a-6405-4792-a8ff-d1c824011fa4-0|
| 20220602081230746|20220602081230746_77_327009|order_no:128920931,order_type:1| 2022-02| e8345f22-da0e-4b5e-b99e-a32bdd939089-0|
| 20220602081230746|20220602081230746_84_327110|order_no:128920931,order_type:1| 2022-02| c4723a41-d3fd-405d-9f09-ac97d77f5f9c-0|
| 20220602081230746|20220602081230746_86_327167|order_no:128920931,order_type:1| 2022-02| 8fa2c068-5e63-492c-936e-44784384514d-0|
+-------------------+---------------------------+-------------------------------+----------------------+---------------------------------------------------------------------------------+

I'll try to reproduce it later.

DDL:

CREATE TABLE `temp_db`.`hudi_solution_order_header_20220520` (
  `_hoodie_commit_time` STRING,
  `_hoodie_commit_seqno` STRING,
  `_hoodie_record_key` STRING,
  `_hoodie_partition_path` STRING,
  `_hoodie_file_name` STRING,
  `_hoodie_is_deleted` BOOLEAN,
  `trigger_cdc_action` STRING,
  `order_type` INT,
  `order_no` INT,
  `u_version` STRING,
  `from_acct_no` INT,
  `from_loc_no` INT,
  `from_contact_no` INT,
  `from_dept_no` INT,
  `from_inv_type` INT,
  `to_acct_no` INT,
  `to_loc_no` INT,
  `to_contact_no` INT,
  `to_dept_no` INT,
  `to_inv_type` INT,
  `ship_to_name` STRING,
  `ship_to_addr` STRING,
  `ship_to_po_box` STRING,
  `ship_to_city` STRING,
  `ship_to_state` STRING,
  `ship_to_country` STRING,
  `ship_to_zip` STRING,
  `account_rep` INT,
  `mt_expense_code` STRING,
  `int_ref_no` INT,
  `int_ref_type` INT,
  `ext_ref` STRING,
  `issue_date` TIMESTAMP,
  `credit_rel_date` TIMESTAMP,
  `pick_date` TIMESTAMP,
  `manifest_date` TIMESTAMP,
  `ship_date` TIMESTAMP,
  `invoice_date` TIMESTAMP,
  `posting_date` TIMESTAMP,
  `expected_date` TIMESTAMP,
  `receiving_date` TIMESTAMP,
  `closed_date` TIMESTAMP,
  `printed_date` TIMESTAMP,
  `delete_date` TIMESTAMP,
  `terms_no` STRING,
  `carrier_no` INT,
  `ship_method` STRING,
  `freight` STRING,
  `resale` STRING,
  `sales_terr` INT,
  `credit_rel_code` STRING,
  `it_cost_code` INT,
  `sales_tax` DECIMAL(20,8),
  `entry_datetime` TIMESTAMP,
  `entry_id` INT,
  `total_order` DECIMAL(20,8),
  `total_cost` DECIMAL(20,8),
  `sales_total` DECIMAL(20,8),
  `head_exp_total` DECIMAL(20,8),
  `sales_rel_date` TIMESTAMP,
  `delete_id` INT,
  `detail_exp_total` DECIMAL(20,8),
  `rma_disp_type` STRING,
  `repick_id` INT,
  `repick_counter` INT,
  `invoice_id` INT,
  `invoice_counter` INT,
  `total_weight` DECIMAL(20,8),
  `hold_date` TIMESTAMP,
  `hold_id` INT,
  `drop_ship` STRING,
  `detail_price_total` DECIMAL(20,8),
  `ship_to_loc` INT,
  `ship_to_loc_change` INT,
  `q_userid` INT,
  `label_printed` STRING,
  `label_date` TIMESTAMP,
  `dist_exp_date` TIMESTAMP,
  `prod_exp_date` TIMESTAMP,
  `bol_date` TIMESTAMP,
  `bol_printed` STRING,
  `qc_date` TIMESTAMP,
  `schedule_date` TIMESTAMP,
  `approval` STRING,
  `fx_total_order` DECIMAL(20,8),
  `fx_total_cost` DECIMAL(20,8),
  `fx_sales_total` DECIMAL(20,8),
  `fx_head_exp_total` DECIMAL(20,8),
  `fx_detail_exp_total` DECIMAL(20,8),
  `fx_detail_price_total` DECIMAL(20,8),
  `h_version` INT,
  `profile_special_handle` STRING,
  `fx_currency` STRING,
  `company_no` INT,
  `trigger_entry_datetime` TIMESTAMP,
  `cdc_system_time_long` BIGINT,
  `kafka_time_long` BIGINT,
  `process_time_long` BIGINT,
  `date_flag` STRING)
USING hudi
OPTIONS (
  `hoodie.query.as.ro.table` 'true')
PARTITIONED BY (date_flag)
LOCATION '/warehouse/tablespace/managed/hive/temp_db.db/hudi_solution_order_header_20220520'
TBLPROPERTIES (
  'bucketing_version' = '2',
  'last_modified_time' = '1653531205',
  'last_modified_by' = 'itjulianj',
  'last_commit_time_sync' = '20220605080937159')

thanks

[GitHub] [hudi] YuweiXiao commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1148174762

Could you share your write config, e.g., the operation type and index type?
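As an illustration of what such a write config usually contains, here is a sketch of the Hudi options passed to a Spark write, collected in one Python dict. The specifics are assumptions, not the reporter's actual setup: the composite record key `order_no,order_type`, the precombine field `process_time_long`, the `MERGE_ON_READ` table type, the `upsert` operation and the `GLOBAL_BLOOM` index. Only the partition column `date_flag` and the table name come from the DDL posted above.

```python
# Hypothetical write options for this table; values marked "assumed" are guesses.
HUDI_WRITE_OPTIONS = {
    "hoodie.table.name": "hudi_solution_order_header_20220520",
    "hoodie.datasource.write.table.type": "MERGE_ON_READ",             # assumed
    "hoodie.datasource.write.operation": "upsert",                     # assumed
    "hoodie.datasource.write.recordkey.field": "order_no,order_type",  # assumed
    "hoodie.datasource.write.partitionpath.field": "date_flag",        # from the DDL
    "hoodie.datasource.write.precombine.field": "process_time_long",   # assumed
    # A multi-field record key needs a complex key generator.
    "hoodie.datasource.write.keygenerator.class": "org.apache.hudi.keygen.ComplexKeyGenerator",
    "hoodie.index.type": "GLOBAL_BLOOM",                               # assumed
}

# With PySpark (not executed here), every writer would reuse the same dict, e.g.:
#   df.write.format("hudi").options(**HUDI_WRITE_OPTIONS).mode("append").save(base_path)
```

Reusing one dict for both the initial load and the incremental job is a simple way to keep settings such as `hoodie.index.type` from drifting between writers.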

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1229354613

@alexeykudinkin @YuweiXiao: can you folks follow up on this?

[GitHub] [hudi] jiangjiguang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jiangjiguang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1290166539

@nsivabalan I don't know anything about this; maybe you tagged the wrong person?

[GitHub] [hudi] jjtjiang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jjtjiang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1148293257

GLOBAL_BLOOM was used when the historical data was initialized, but BLOOM was configured for the incremental writes, so the duplicates may be caused by the BLOOM index not finding the existing records during the incremental writes. I'm going to try again. Thanks. I think it would be nice if there were an error message when the index type does not match.
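One way a BLOOM/GLOBAL_BLOOM mismatch can produce duplicates is sketched below as a toy Python model (not Hudi code). It assumes that a record's partition value (here `date_flag`) can change between writes: a partition-scoped lookup (BLOOM) only checks the incoming record's partition, so the old copy in another partition is never found and the key ends up in two partitions, while a table-wide lookup (GLOBAL_BLOOM) finds it. The model keeps a single copy in the global case by moving the record; depending on configuration, Hudi may instead update it in its original partition.

```python
from typing import Dict

# partition value -> {record_key: row}
Table = Dict[str, Dict[str, dict]]


def upsert(table: Table, key: str, partition: str, row: dict, global_index: bool) -> None:
    """Toy upsert: the index decides where an existing copy of `key` is looked up."""
    if global_index:
        # GLOBAL_BLOOM-like: search every partition and drop the old copy,
        # so the record is effectively moved to its new partition.
        for records in table.values():
            if key in records:
                del records[key]
                break
    # BLOOM-like (and the final write in both cases): only the incoming record's
    # partition is touched, so a copy living in another partition is not found.
    table.setdefault(partition, {})[key] = row


def count_key(table: Table, key: str) -> int:
    """Number of partitions that hold a copy of `key`."""
    return sum(key in records for records in table.values())


bloom: Table = {}
upsert(bloom, "order-1", "2022-06-01", {"v": 1}, global_index=False)
upsert(bloom, "order-1", "2022-06-05", {"v": 2}, global_index=False)
print(count_key(bloom, "order-1"))  # 2: the key now lives in two partitions

global_bloom: Table = {}
upsert(global_bloom, "order-1", "2022-06-01", {"v": 1}, global_index=True)
upsert(global_bloom, "order-1", "2022-06-05", {"v": 2}, global_index=True)
print(count_key(global_bloom, "order-1"))  # 1
```

If the partition value never changes for a given key, both lookups behave the same, so this is only one candidate explanation.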

[GitHub] [hudi] jjtjiang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jjtjiang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1149496653

During testing I saw a strange phenomenon: at first the query returned duplicate rows, but when I ran the same query again a few minutes to several hours later, the duplicates were gone. This has happened three times in the past two days.

[GitHub] [hudi] YuweiXiao commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1151009189

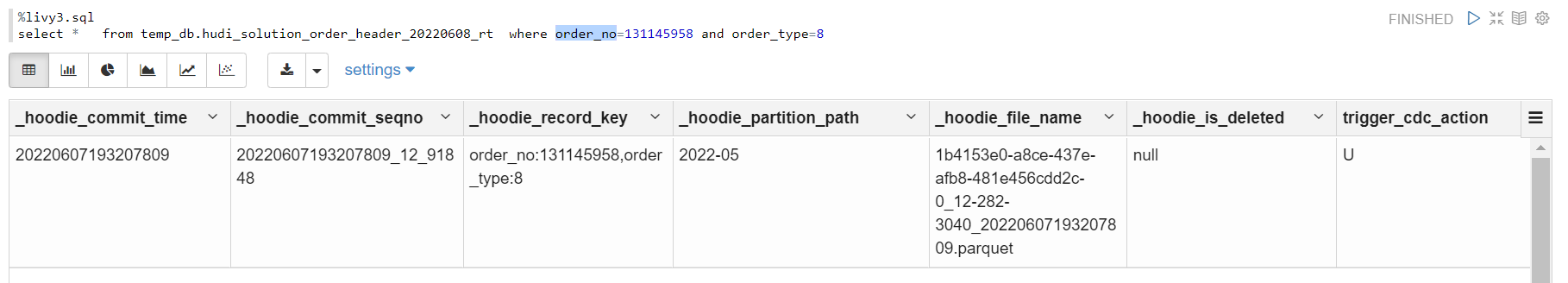

@jjtjiang Hey, another guess is that merging of the base files and log files is turned off. In your latest test, could you paste the full query result for the duplicate case, e.g., `select * from xxx where order_no = xxx and order_type = xxx`?
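When the full rows are available, Hudi's meta columns (`_hoodie_record_key`, `_hoodie_partition_path`, `_hoodie_file_name`, `_hoodie_commit_time`) can help narrow things down. The helper below is a hypothetical sketch that groups query results (as plain dicts) by record key and reports where each duplicated key lives. As a rough heuristic, copies spread over several partitions point at the index, while copies inside a single partition point at the read-side merge or at the reader itself.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def summarize_duplicates(rows: Iterable[dict]) -> Dict[str, Dict[str, Set[str]]]:
    """Report partitions, files and commits for every record key seen more than once."""
    by_key: Dict[str, List[dict]] = defaultdict(list)
    for row in rows:
        by_key[row["_hoodie_record_key"]].append(row)

    report: Dict[str, Dict[str, Set[str]]] = {}
    for key, group in by_key.items():
        if len(group) > 1:
            report[key] = {
                "partitions": {r["_hoodie_partition_path"] for r in group},
                "files": {r["_hoodie_file_name"] for r in group},
                "commits": {r["_hoodie_commit_time"] for r in group},
            }
    return report
```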

By the way, could you provide a runnable demo of the issue, so that we can reproduce it and see what is happening behind the scenes?

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1243948501

@jiangjiguang: gentle ping.

[GitHub] [hudi] YuweiXiao commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1158536648

Yes, it should deduplicate records from the log files. I'll test the default read behavior to see if there is a bug.

[GitHub] [hudi] jjtjiang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jjtjiang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1150822255

>

> > In this test, we did not change the index; we only used the bloom index. Through the test, I saw a strange phenomenon. At the beginning, the data was repeated, and after a few minutes to several hours, the query was repeated and there was no repeated data. This happened three times in the past two days.

>

> How do you sync your hudi table? I guess your query engine may treat the table as normal parquet files rather than a hudi table.

>

> To verify, could you use spark to read and check out the data? (i.e. `spark.read().format("hudi")`)

When I use `spark.read().format("hudi")`, the table still has duplicate data. I use Structured Streaming to sync the data.

[GitHub] [hudi] YuweiXiao commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1149634055

> In this test, we did not change the index; we only used the bloom index. Through the test, I saw a strange phenomenon. At the beginning, the data was repeated, and after a few minutes to several hours, the query was repeated and there was no repeated data. This happened three times in the past two days.

How do you sync your Hudi table? My guess is that your query engine may be treating the table as plain Parquet files rather than as a Hudi table.
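To illustrate that hypothesis: each rewrite of a file group (an update on a copy-on-write table, or a compaction on a merge-on-read table) produces a new base file version, and older versions stay on storage until the cleaner removes them. A Hudi-aware reader picks only the newest version per file group, whereas an engine that reads the directory as plain Parquet reads every version, so rows could look duplicated until cleaning runs. The Python sketch below assumes the base file naming pattern `<fileId>_<writeToken>_<instantTime>.parquet` and fixed-width instant times that compare correctly as strings; it is a simplification, not Hudi's file-system view, and the file names are made up.

```python
from typing import Dict, List, Tuple


def parse_base_file(name: str) -> Tuple[str, str]:
    """Split an assumed '<fileId>_<writeToken>_<instantTime>.parquet' name into (fileId, instantTime)."""
    stem = name[: -len(".parquet")]
    file_id, _write_token, instant_time = stem.split("_")
    return file_id, instant_time


def latest_base_files(names: List[str]) -> List[str]:
    """Keep only the newest base file per file group, like a Hudi-aware reader."""
    latest: Dict[str, Tuple[str, str]] = {}  # fileId -> (instantTime, file name)
    for name in names:
        file_id, instant_time = parse_base_file(name)
        # Instant times are fixed-width digit strings here, so string order is time order.
        if file_id not in latest or instant_time > latest[file_id][0]:
            latest[file_id] = (instant_time, name)
    return sorted(name for _, name in latest.values())


files = [
    "f1-0_1-0-1_20220605080937159.parquet",  # older version of file group f1-0
    "f1-0_1-0-1_20220606090000000.parquet",  # newer version of f1-0, not yet cleaned
    "f2-0_2-0-1_20220605080937159.parquet",
]
# A plain-Parquet reader would scan all three files (f1-0's rows twice);
# a Hudi-aware reader scans only the newest version of each file group.
print(latest_base_files(files))
```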

[GitHub] [hudi] nsivabalan commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1149123244

Did you change the index type at any point in your table?

Did you happen to change the record key field or the partition path field at any point?

Also, can you disable the metadata table and query your table again to see whether you still spot duplicates?
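Disabling the metadata table for this check is a reader-side option. A minimal sketch, assuming the `hoodie.metadata.enable` key governs whether the reader lists files through the metadata table in this Hudi version; `base_path` is a placeholder.

```python
# Read options for re-running the duplicate check without the metadata table.
HUDI_READ_OPTIONS = {"hoodie.metadata.enable": "false"}

# With PySpark (not executed here), e.g.:
#   df = spark.read.format("hudi").options(**HUDI_READ_OPTIONS).load(base_path)
#   df.groupBy("_hoodie_record_key").count().filter("count > 1").show()
```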

And is this the first time you are trying out Hudi, or have you been using Hudi for some time and are seeing this problem in one of your tables while the others are fine?

[GitHub] [hudi] jjtjiang commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

jjtjiang commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1158535091

> hoodie.datasource.merge.type

My engine is Spark, and I use the default payload class, `org.apache.hudi.common.model.OverwriteWithLatestAvroPayload`. The Spark version is 3.0 and the Hudi version is 0.10.1.

So on read, it should deduplicate the records in the log files.
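A toy Python model of the semantics being relied on here, written from my understanding of `OverwriteWithLatestAvroPayload` rather than from Hudi's code: when two log records share a key they are pre-combined, and the one with the larger precombine (ordering) value wins, with the later record winning on a tie; when the result is merged onto the base file record, the incoming record simply replaces the stored one without comparing ordering values. Either way, a snapshot read should yield one row per key. `Rec`, `pre_combine`, `combine_with_stored` and `snapshot` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Rec:
    key: str
    ordering: int  # value of the precombine field
    value: str


def pre_combine(older: Rec, newer: Rec) -> Rec:
    # Larger ordering value wins; on a tie the later record (newer) wins.
    return older if older.ordering > newer.ordering else newer


def combine_with_stored(stored: Rec, incoming: Rec) -> Rec:
    # Overwrite-with-latest: the incoming record replaces the stored one;
    # its ordering value is not compared with the stored record's.
    return incoming


def snapshot(base: Dict[str, Rec], log: List[Rec]) -> Dict[str, Rec]:
    """Merge log records (in write order) onto base records; one record per key."""
    merged_log: Dict[str, Rec] = {}
    for rec in log:
        prev = merged_log.get(rec.key)
        merged_log[rec.key] = rec if prev is None else pre_combine(prev, rec)

    result = dict(base)
    for key, rec in merged_log.items():
        result[key] = combine_with_stored(base[key], rec) if key in base else rec
    return result


base = {"A": Rec("A", 9, "base")}
log = [Rec("A", 7, "log-7"), Rec("A", 6, "log-6"), Rec("B", 1, "b-1")]
print(sorted(r.value for r in snapshot(base, log).values()))  # ['b-1', 'log-7']: one row per key
```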

[GitHub] [hudi] YuweiXiao commented on issue #5777: [SUPPORT] Hudi table has duplicate data.

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1158514099

There is an option, `hoodie.datasource.merge.type`, that controls how records are combined during reads; the default is to merge records. The option applies to Spark reads, and I'm not sure how your query engine behaves. Which engine are you using?

The merge also relies on the payload class, which specifies how records with duplicate keys are merged.
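The difference between the two merge types can be sketched as follows. This is a simplified Python model, not Hudi's reader; it assumes the option values `payload_combine` (the default) and `skip_merge`, and lets later log entries overwrite earlier ones instead of applying a payload class. With `skip_merge`, an updated key comes back once from the base file and once from the log, which is the kind of duplicate described in this issue.

```python
from typing import Dict, List, Tuple

Row = Tuple[str, str]  # (record_key, value)


def read_snapshot(base_rows: List[Row], log_rows: List[Row], merge_type: str) -> List[Row]:
    """Toy merge-on-read snapshot read for two hoodie.datasource.merge.type values."""
    if merge_type == "skip_merge":
        # No merging: base and log rows are returned side by side.
        return base_rows + log_rows
    if merge_type == "payload_combine":
        merged: Dict[str, str] = dict(base_rows)
        for key, value in log_rows:  # later log entries overwrite earlier values
            merged[key] = value
        return sorted(merged.items())
    raise ValueError(f"unknown merge type: {merge_type!r}")


# With PySpark (not executed here), the option would be set on the reader, e.g.:
#   spark.read.format("hudi").option("hoodie.datasource.merge.type", "payload_combine").load(base_path)
```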

Re: [I] [SUPPORT] Hudi table has duplicate data. [hudi]

Posted by "ad1happy2go (via GitHub)" <gi...@apache.org>.

ad1happy2go commented on issue #5777:

URL: https://github.com/apache/hudi/issues/5777#issuecomment-1970644646

@chenbodeng719 Can you please create a new issue with all the details about hudi/spark versions and steps to reproduce. Thanks.
