Posted to commits@airflow.apache.org by GitBox <gi...@apache.org> on 2022/08/09 06:51:05 UTC

[GitHub] [airflow] Jorricks opened a new issue, #25615: Random DagRuns set to running during large catch up

Jorricks opened a new issue, #25615:

URL: https://github.com/apache/airflow/issues/25615

### Apache Airflow version

Other Airflow 2 version

### What happened

Airflow version: 2.2.3

We cleared a task for a DAG containing around 10 tasks. The cleared range ran from a recent date back to almost a year earlier.

This marked all the DagRuns as queued again, which was good.

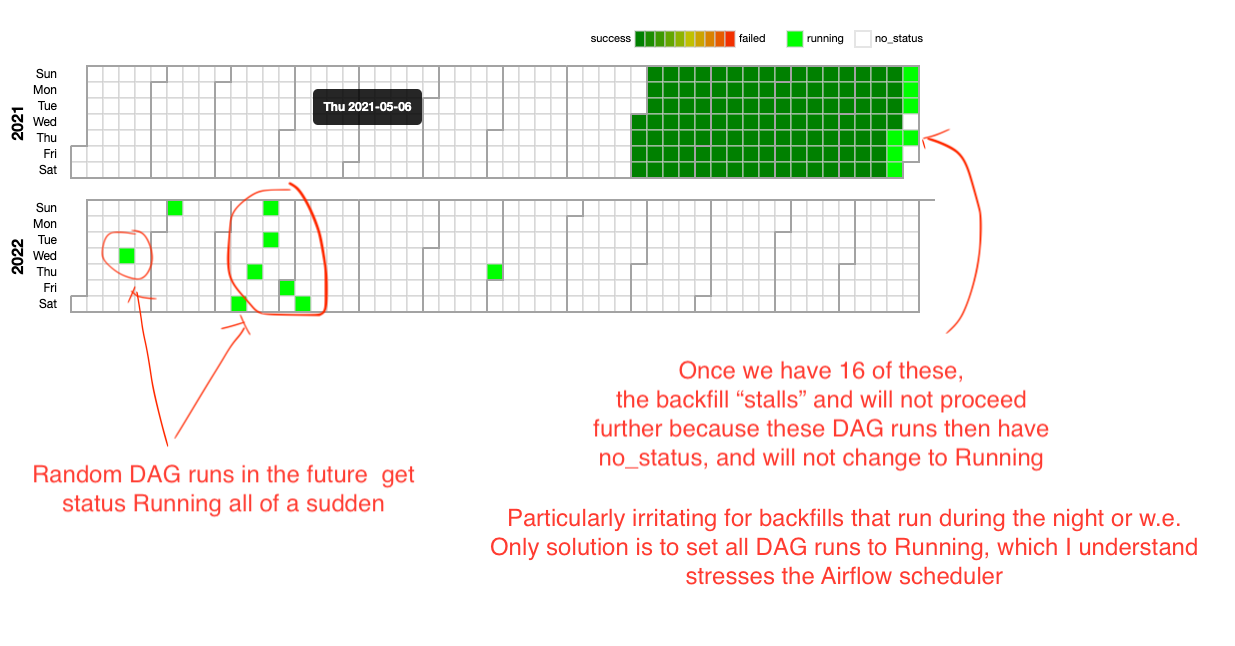

However, at a certain point the DagRuns were no longer started in order and were set to running all over the date range, as shown in the image.

Because of `depends_on_past=True`, the DAG gets stuck as soon as `x` out-of-order DagRuns are running, where `x` equals `max_active_runs`.
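The stuck condition can be sketched as a small check. This is only a toy model, not Airflow code: `is_stuck` is a hypothetical helper, DagRuns are represented by their position in date order, and `depends_on_past` is simplified to "a run can progress only if the run directly before it succeeded" (in Airflow the setting applies per task).

```python
def is_stuck(running, succeeded, max_active_runs):
    """Return True if no running DagRun can progress and no new one can start.

    running / succeeded are sets of run positions in date order (0 = oldest).
    This is a simplified, run-level view of depends_on_past=True.
    """
    # A run can progress only if it is the oldest run or its predecessor succeeded.
    can_progress = any(i == 0 or (i - 1) in succeeded for i in running)
    # A new run can only be started while there is a free slot.
    slots_free = len(running) < max_active_runs
    return not can_progress and not slots_free


# Runs 0-2 finished; three out-of-order runs occupy all three slots.
print(is_stuck({10, 25, 40}, {0, 1, 2}, max_active_runs=3))
# Same slots, but the runs were started in order: run 3 can progress.
print(is_stuck({3, 4, 5}, {0, 1, 2}, max_active_runs=3))
```

In the first call none of the running DagRuns has a finished predecessor and there is no free slot for the run that could actually make progress, which matches the behavior described above.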

### What you think should happen instead

It should have set the DagRuns to running in sequential order.

### How to reproduce

Run a large Airflow instance with a lot of DAGs and 4 schedulers.

Then clear a lot of TaskInstances of the same task.

### Operating System

CentOS Linux 7 (Core)

### Versions of Apache Airflow Providers

None

### Deployment

Virtualenv installation

### Deployment details

- 4 Schedulers

- 4 Webservers

- 4 Celery workers

- Postgres database

- We have a custom plugin

### Anything else

It seems to happen when we clear a lot of (old?) TaskInstances of the same tasks.

### Are you willing to submit PR?

- [X] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] potiuk commented on issue #25615: Random DagRuns set to running during large catch up

Posted by GitBox <gi...@apache.org>.

potiuk commented on issue #25615:

URL: https://github.com/apache/airflow/issues/25615#issuecomment-1221561982

That one is a really interesting race @ashb @ephraimbuddy - I think we might want to take a closer look at that one :)

[GitHub] [airflow] argibbs commented on issue #25615: Random DagRuns set to running during large catch up

Posted by "argibbs (via GitHub)" <gi...@apache.org>.

argibbs commented on issue #25615:

URL: https://github.com/apache/airflow/issues/25615#issuecomment-1480781305

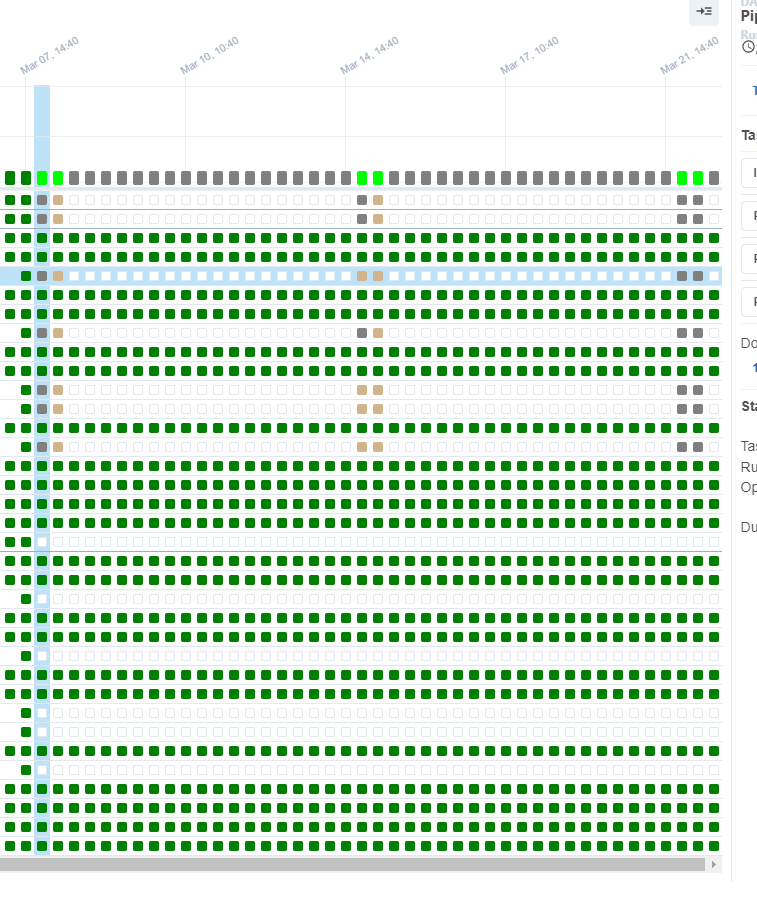

I happened across this bug a while back, and today I saw a really clear example of it, so I thought I'd share. I added some tasks to a DAG, then went back and cleared the recent history to trigger a rebuild of the missing history. I run three schedulers, and you can clearly see how the first locked 20 runs and started two of them, then the second locked the next 20 and picked up two of them, and finally the third locked the rest and picked up two of them...

Re: [I] Random DagRuns set to running during large catch up [airflow]

Posted by "github-actions[bot] (via GitHub)" <gi...@apache.org>.

github-actions[bot] commented on issue #25615:

URL: https://github.com/apache/airflow/issues/25615#issuecomment-2055719557

This issue has been automatically marked as stale because it has been open for 365 days without any activity. There have been several Airflow releases since the last activity on this issue. Please recheck the report against the latest Airflow version and let us know if the issue is still reproducible. The issue will be closed in the next 30 days if no further activity occurs from the issue author.

[GitHub] [airflow] Jorricks commented on issue #25615: Random DagRuns set to running during large catch up

Posted by GitBox <gi...@apache.org>.

Jorricks commented on issue #25615:

URL: https://github.com/apache/airflow/issues/25615#issuecomment-1208992911

I did some initial exploration of what could be causing this issue.

I suppose the issue is in `_start_queued_dagruns` shown [here](https://github.com/apache/airflow/blob/2.2.3/airflow/jobs/scheduler_job.py#L935).

I checked the ordering and limit of the query behind `dag_runs = self._get_next_dagruns_to_examine(State.QUEUED, session)` quite thoroughly.

I think this is set up correctly, as `last_scheduling_decision` should be None. Unfortunately, we are still planning our upgrade to Airflow 2.2.3, so I have not been able to verify whether `last_scheduling_decision` is in fact None.

The only explanation I have been able to come up with so far is that multiple schedulers being in this loop at the same time could cause issues. Let me reason about this:

Imagine we have a DAG called `my_dag` that has a max of 16 running DagRuns.

1. Scheduler A enters this loop and tries to move queued DagRuns to running for `my_dag`. At this point in time (T), the number of active runs is 15, which is one below the limit. Scheduler A will start one extra run.

2. While scheduler A is still in its loop, at time (T+1) a DagRun has been marked Success by Scheduler B.

3. Scheduler C enters this loop and tries to move queued DagRuns to running for `my_dag`. It is now time (T+2), and Scheduler C is also allowed to start a DagRun. However, Scheduler A has locked all the earliest DagRuns, so Scheduler C falls back to much newer DagRuns. This could lead to starting a DagRun that is far later than the one that was up next, after the one Scheduler A was starting.

4. Scheduler A completes its loop and unlocks the rows.

5. Scheduler C completes its loop and unlocks the rows.
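The steps above can be sketched with a small simulation. This is a toy model and not the scheduler's code: `QueuedRuns`, `lock_batch` and `simulate` are hypothetical names, DagRuns are integers in date order, and the model assumes each scheduler locks a fixed-size batch of the oldest unlocked queued runs while skipping rows another scheduler already holds. The batch size of 20 and two starts per scheduler are taken from the three-scheduler example reported earlier in this thread.

```python
from dataclasses import dataclass, field


@dataclass
class QueuedRuns:
    """Toy stand-in for the queued DagRuns of one DAG, indexed in date order."""

    total: int
    locked: set = field(default_factory=set)
    running: list = field(default_factory=list)

    def lock_batch(self, batch_size):
        """Lock the oldest runs that are not locked yet, skipping locked rows."""
        batch = []
        for run in range(self.total):
            if run in self.locked:
                continue  # another scheduler holds this row, so skip it
            batch.append(run)
            if len(batch) == batch_size:
                break
        self.locked.update(batch)
        return batch


def simulate(total_runs, schedulers, batch_size, starts_per_scheduler):
    """All schedulers lock a batch before any of them releases its rows."""
    table = QueuedRuns(total_runs)
    batches = [table.lock_batch(batch_size) for _ in range(schedulers)]
    for batch in batches:
        # Each scheduler starts only as many runs as it believes fit the limit.
        table.running.extend(batch[:starts_per_scheduler])
    return table.running


print(simulate(total_runs=60, schedulers=3, batch_size=20, starts_per_scheduler=2))
# → [0, 1, 20, 21, 40, 41]
```

Under these assumptions each scheduler sees a different "oldest" window of the queue, so the runs that end up running are spread across the date range instead of being the six oldest.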
