
Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2022/09/20 03:44:53 UTC

[GitHub] [hudi] IsisPolei opened a new issue, #6720: [SUPPORT]Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)

IsisPolei opened a new issue, #6720:

URL: https://github.com/apache/hudi/issues/6720

hudi:0.10.1

spark:3.1.3_scala2.12

Background:

I use SparkRDDWriteClient to process Hudi data, and both the app and the Spark standalone cluster run in Docker. When the app and Spark cluster containers run on the same local machine, my app works well. But when I deploy the Spark cluster on a different machine, I get a series of connection problems.

Machine A (192.168.64.107): Spark driver (the SparkRDDWriteClient app)

Machine B (192.168.64.121): Spark standalone cluster (master and worker running in two containers)

Because of how Spark networking works, I have set the connection parameters below:

spark.master.url: spark://192.168.64.121:7077

spark.driver.bindAddress: 0.0.0.0

spark.driver.host: 192.168.64.107

spark.driver.port: 10000
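The sketch below shows how the four settings above fit together. It only assembles them into a plain map and does not start Spark. spark.driver.bindAddress is the address the driver listens on inside its container. spark.driver.host is the address it advertises to the master and executors, so machine A's host IP goes there. The snippet uses spark.master, which is Spark's own key for the master URL. The issue's spark.master.url looks like an application-level setting. The class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class DriverNetworkConf {

    /**
     * Hypothetical helper: collects the driver-side networking settings from the issue.
     * bindAddress = where the driver listens inside its container;
     * host        = address advertised to the master and executors on machine B.
     */
    static Map<String, String> driverNetworkConf(String masterUrl, String advertisedHost, int driverPort) {
        Map<String, String> conf = new LinkedHashMap<>();
        conf.put("spark.master", masterUrl);
        conf.put("spark.driver.bindAddress", "0.0.0.0");              // listen on all container interfaces
        conf.put("spark.driver.host", advertisedHost);                // machine A's IP, reachable from machine B
        conf.put("spark.driver.port", Integer.toString(driverPort)); // fixed so it can be published
        return conf;
    }

    public static void main(String[] args) {
        Map<String, String> conf =
            driverNetworkConf("spark://192.168.64.121:7077", "192.168.64.107", 10000);
        conf.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

In a real driver, you could apply each entry with SparkConf.set(key, value) before creating the context. Only spark.driver.port is pinned here. Any other driver-side port keeps its default.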

The HoodieSparkEngineContext initializes correctly and I can see the Spark job running in the Spark web UI. But when the code reaches sparkRDDWriteClient.upsert(), this exception occurs:

    Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)
        at org.apache.hudi.common.table.view.RemoteHoodieTableFileSystemView.refresh(RemoteHoodieTableFileSystemView.java:420)
        at org.apache.hudi.common.table.view.RemoteHoodieTableFileSystemView.sync(RemoteHoodieTableFileSystemView.java:484)
        at org.apache.hudi.common.table.view.PriorityBasedFileSystemView.sync(PriorityBasedFileSystemView.java:257)
        at org.apache.hudi.client.SparkRDDWriteClient.getTableAndInitCtx(SparkRDDWriteClient.java:493)
        at org.apache.hudi.client.SparkRDDWriteClient.getTableAndInitCtx(SparkRDDWriteClient.java:448)
        at org.apache.hudi.client.SparkRDDWriteClient.upsert(SparkRDDWriteClient.java:157)
    Caused by: org.apache.http.conn.HttpHostConnectException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)
        at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:156)
        at org.apache.http.impl.conn.PoolingHttpClientConnectionManager.connect(PoolingHttpClientConnectionManager.java:376)
        at org.apache.http.impl.execchain.MainClientExec.establishRoute(MainClientExec.java:393)
        at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:236)
        at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:186)
        at org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:89)
        at org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110)
        at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:185)
        at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:83)
        at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:56)
        at org.apache.http.client.fluent.Request.internalExecute(Request.java:173)
        at org.apache.http.client.fluent.Request.execute(Request.java:177)
        at org.apache.hudi.common.table.view

It seems that these two containers can't connect to each other through hoodie.filesystem.view.remote.port. So I exposed this port on my app container, but it doesn't work. Please tell me what I did wrong.
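You can narrow down a "Connection refused" like this by testing plain TCP reachability without Spark or Hudi involved. Run the test from machine B, for example inside the worker container, against the driver host and port. The minimal Java sketch below assumes a JDK is available where you run it. The class name and the default host and port are illustrative only.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortProbe {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMs milliseconds. */
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // "Connection refused", timeouts and unresolvable hosts all end up here
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "192.168.64.107";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 10001;
        System.out.println(host + ":" + port + " reachable = " + isReachable(host, port, 2000));
    }
}
```

On JDK 11 or later, you can run it as `java PortProbe.java 192.168.64.107 10001` from the worker side. A result of false would point to networking (port publishing or a firewall) rather than to Hudi configuration.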

These are my docker-compose.yml files:

app:

    app:
      image: xxx
      container_name: app
      ports:
        - "5008:5005"
        - "10000:10000"
        - "10001:10001"

spark:

    version: '3'
    services:
      master:
        image: bitnami/spark:3.1
        container_name: master
        hostname: master
        environment:
          MASTER: spark://master:7077
        restart: always
        ports:
          - "7077:7077"
          - "9080:8080"
      worker:
        image: bitnami/spark:3.1
        container_name: worker
        restart: always
        environment:
          SPARK_WORKER_CORES: 5
          SPARK_WORKER_MEMORY: 2g
          SPARK_WORKER_PORT: 8881
        depends_on:
          - master
        links:
          - master
        ports:
          - "8081:8081"
        expose:
          - "8881"
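Comparing the exception with the app container's `ports:` list shows the gap. Ports 10000 and 10001 are published, but the failing connection targets 34446, which is not. The hypothetical Java helper below reports which required container ports have no mapping. It handles the "HOST:CONTAINER", "IP:HOST:CONTAINER" and "PORT/proto" forms. As a simplifying assumption, it does not handle port ranges.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class ComposePorts {

    /**
     * Returns the ports in `required` that no compose port mapping exposes on the container side.
     * Port ranges such as "8000-8010:8000-8010" are not supported in this sketch.
     */
    static List<Integer> unpublished(List<String> mappings, List<Integer> required) {
        Set<Integer> published = new HashSet<>();
        for (String m : mappings) {
            String[] parts = m.split(":");
            // The container port is the last colon-separated field; drop any "/tcp" or "/udp" suffix.
            String containerPart = parts[parts.length - 1].split("/")[0];
            published.add(Integer.parseInt(containerPart.trim()));
        }
        List<Integer> missing = new ArrayList<>();
        for (int port : required) {
            if (!published.contains(port)) {
                missing.add(port);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // The app container's mappings from the compose file
        List<String> appPorts = List.of("5008:5005", "10000:10000", "10001:10001");
        // 10000 = spark.driver.port, 34446 = the port in the exception
        System.out.println(unpublished(appPorts, List.of(10000, 34446))); // prints [34446]
    }
}
```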

I hope I have described the situation clearly. Please help.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #6720: [SUPPORT]Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)

nsivabalan commented on issue #6720:

URL: https://github.com/apache/hudi/issues/6720#issuecomment-1287909176

Thanks for the confirmation.

We do plan to enhance the Java write client in a few months. Let us know if you can't use Spark and need the Java write client.

I will go ahead and close the issue for now.

Feel free to open a new issue if you need further assistance.

[GitHub] [hudi] IsisPolei commented on issue #6720: [SUPPORT]Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)

IsisPolei commented on issue #6720:

URL: https://github.com/apache/hudi/issues/6720#issuecomment-1251944154

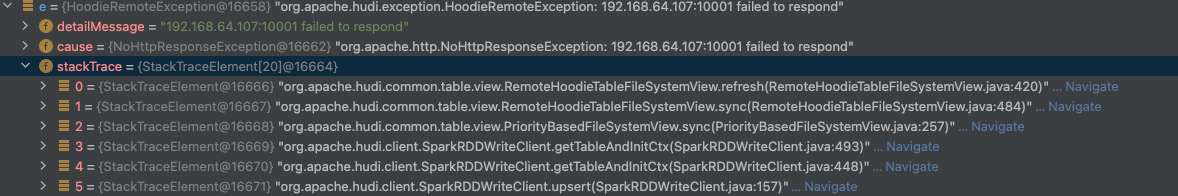

The hoodie.filesystem.view.remote.port configuration doesn't work. In IntelliJ IDEA debug mode, I forced this parameter to the port that my app container already exposes:

viewConf.setValue("hoodie.filesystem.view.remote.port","10001");

It still doesn't work. I got this exception:

[GitHub] [hudi] IsisPolei commented on issue #6720: [SUPPORT]Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)

IsisPolei commented on issue #6720:

URL: https://github.com/apache/hudi/issues/6720#issuecomment-1254475342

The original problem is offline compaction. HoodieJavaWriteClient doesn't support inline compaction:

    @Override
    protected List<WriteStatus> compact(String compactionInstantTime,
                                        boolean shouldComplete) {
      throw new HoodieNotSupportedException("Compact is not supported in HoodieJavaClient");
    }

So I switched my Hudi client to SparkRDDWriteClient. This client works well with Spark in local mode and in standalone mode (when everything runs on the same host machine).

[GitHub] [hudi] nsivabalan closed issue #6720: [SUPPORT]Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)

URL: https://github.com/apache/hudi/issues/6720

[GitHub] [hudi] yihua commented on issue #6720: [SUPPORT]Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)

yihua commented on issue #6720:

URL: https://github.com/apache/hudi/issues/6720#issuecomment-1254135813

@IsisPolei The latest error you saw comes from the Spark networking client, so it should not be related to Hudi. We also provide a [docker demo](https://hudi.apache.org/docs/docker_demo) using Spark, and the source code is [here](https://github.com/apache/hudi/tree/master/docker). We don't encounter the timeline server connection issue in the docker demo setup. Could you check whether you can run the docker demo setup and see what the difference is?

[GitHub] [hudi] IsisPolei commented on issue #6720: [SUPPORT]Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)

IsisPolei commented on issue #6720:

URL: https://github.com/apache/hudi/issues/6720#issuecomment-1253129024

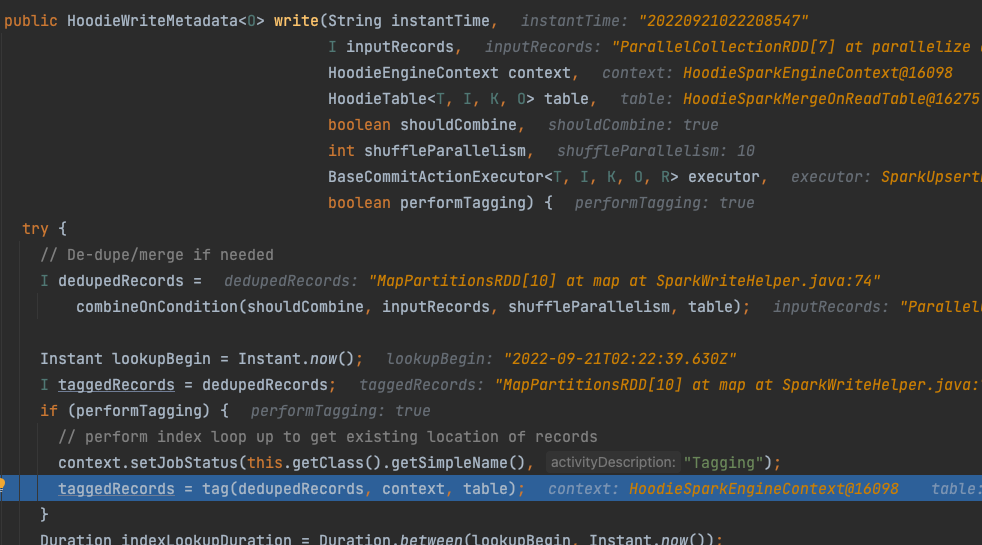

I managed to bypass these two problems by setting:

hoodie.embed.timeline.server.port: 10001

hoodie.filesystem.view.remote.port: 10002

I also exposed these two ports on my app container.
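This workaround pins the two Hudi port settings to fixed values so that they can be published from the app container. The minimal sketch below collects them into java.util.Properties, using the values from this comment. The helper name is hypothetical.

```java
import java.util.Properties;

public class TimelineServerPorts {

    /** Hypothetical helper: pins the two Hudi ports quoted in this comment to fixed values. */
    static Properties pinnedHudiPorts(int timelineServerPort, int remoteViewPort) {
        Properties props = new Properties();
        // Port of the timeline server embedded in the driver (the writer)
        props.setProperty("hoodie.embed.timeline.server.port", Integer.toString(timelineServerPort));
        // Port used by the remote file system view client
        props.setProperty("hoodie.filesystem.view.remote.port", Integer.toString(remoteViewPort));
        return props;
    }

    public static void main(String[] args) {
        Properties props = pinnedHudiPorts(10001, 10002);
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

How these properties reach the write client depends on the application. One option is to pass them to the write-config builder, for example through HoodieWriteConfig.Builder#withProps if your Hudi version provides it. Either way, both ports must also appear in the container's `ports:` list.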

When the code reaches this line, something goes wrong:

<img width="1367" alt="image" src="https://user-images.githubusercontent.com/41672192/191400573-0f79813e-2d5a-4322-a97a-a31385184f9f.png">

I don't know how this port is used. It seems to be random, so I can't expose it on my app container.
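The thread does not establish which component opened the random port in the screenshot. yihua attributes the later error to Spark's networking client. One candidate, and this is an assumption, is the driver's block manager. Its port is spark.driver.blockManager.port, which falls back to spark.blockManager.port and is random by default. spark.driver.port is also random by default. Hudi's embedded timeline server port behaves the same way when left at 0, which this sketch assumes means "pick a free port". The hypothetical Java check below lists which of these driver-side port settings are still unpinned in a configuration map.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

public class RandomPortCheck {

    // Assumption: an illustrative, not exhaustive, list of driver-side ports
    // that fall back to a random port when unset or set to "0".
    static final List<String> DRIVER_SIDE_PORT_KEYS = List.of(
        "spark.driver.port",
        "spark.driver.blockManager.port",
        "hoodie.embed.timeline.server.port");

    /** Returns the keys from DRIVER_SIDE_PORT_KEYS that are missing or "0", i.e. not pinned. */
    static List<String> unpinned(Map<String, String> conf) {
        List<String> result = new ArrayList<>();
        for (String key : DRIVER_SIDE_PORT_KEYS) {
            String value = conf.get(key);
            if (value == null && key.equals("spark.driver.blockManager.port")) {
                // Spark falls back to spark.blockManager.port for the driver's block manager
                value = conf.get("spark.blockManager.port");
            }
            if (value == null || value.trim().equals("0")) {
                result.add(key);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> conf = Map.of(
            "spark.driver.port", "10000",
            "hoodie.embed.timeline.server.port", "10001");
        System.out.println(unpinned(conf)); // prints [spark.driver.blockManager.port]
    }
}
```

Executors on machine B can connect back to the driver only after every key it reports has a fixed value and that port is published on the app container.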

[GitHub] [hudi] IsisPolei commented on issue #6720: [SUPPORT]Caused by: org.apache.hudi.exception.HoodieRemoteException: Connect to 192.168.64.107:34446 [/192.168.64.107] failed: Connection refused (Connection refused)

IsisPolei commented on issue #6720:

URL: https://github.com/apache/hudi/issues/6720#issuecomment-1254466665

I think the main cause of this problem is that my app (where SparkRDDWriteClient processes Hudi data) and the Spark cluster that SparkRDDWriteClient connects to are deployed on different machines. When both Docker containers run on the same host machine, everything works well because the containers can reach each other over the Docker bridge network (the Hudi docker demo is also this scenario). So I'm trying to find out exactly how Hudi and Spark connect to each other during this process. At first I thought that once HoodieSparkEngineContext initialized successfully, the connection part was done. Apparently there is more to it. For example, the timeline server and the remote file system view must also be reachable, because the application's tasks run on the Spark worker nodes.
