
Posted to gitbox@hive.apache.org by "mdayakar (via GitHub)" <gi...@apache.org> on 2023/03/13 17:29:26 UTC

[GitHub] [hive] mdayakar opened a new pull request, #4114: HIVE-27135: Cleaner fails with FileNotFoundException

mdayakar opened a new pull request, #4114:

URL: https://github.com/apache/hive/pull/4114

### What changes were proposed in this pull request?

This change fixes HIVE-27135, which was not fixed properly as part of [HIVE-26481](https://issues.apache.org/jira/browse/HIVE-26481). With this change, FileNotFoundException is no longer thrown even if a staging directory is removed while the files are being listed.
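The general idea can be sketched as an iterative walk that applies the filter to every entry, directories included, before descending, so a rejected directory (such as a staging directory) is never listed at all. This is a minimal sketch, not the PR's code: it assumes `java.nio.file` as a stand-in for Hadoop's `FileSystem` API, a `Predicate<Path>` in place of `acidHiddenFileFilter`, and a stack of directories rather than the stack of `RemoteIterator`s used in the PR's diff.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Predicate;

final class FilteredLister {
  /**
   * Lists regular files under root one directory level at a time (sketch only).
   * The filter is checked for every entry, directories included, so a rejected
   * directory is never opened and its removal cannot break the walk.
   */
  static List<Path> listFiles(Path root, Predicate<Path> accept) throws IOException {
    List<Path> files = new ArrayList<>();
    Deque<Path> pending = new ArrayDeque<>();
    pending.push(root);
    while (!pending.isEmpty()) {
      Path dir = pending.pop();
      try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir)) {
        for (Path entry : entries) {
          if (!accept.test(entry)) {
            continue; // rejected before any listing call is made on it
          }
          if (Files.isDirectory(entry)) {
            pending.push(entry); // list its children in a later iteration
          } else {
            files.add(entry);
          }
        }
      }
    }
    return files;
  }
}
```

Note that an accepted directory deleted mid-walk would still raise `NoSuchFileException` in this sketch; it only illustrates why directories rejected by the filter are safe to remove.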

### Why are the changes needed?

If FileNotFoundException is thrown, the Cleaner cannot clean up the orphan files that have already been compacted.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

The patch was tested by remote debugging: while the FileSystem was listing the files, one of the staging directories was removed, and no FNFE was thrown because staging directories are excluded from the listing.
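Hive's staging directories (by default named with a `.hive-staging` prefix) are treated as hidden, which is why the filter excludes them. A hedged sketch of a name filter in the spirit of `acidHiddenFileFilter`: it rejects names starting with `.` or `_` but keeps the ACID metadata files that the old code deliberately retained. The constant values `_metadata_acid` and `_orc_acid_version` are assumed to match `MetaDataFile.METADATA_FILE` and `OrcAcidVersion.ACID_FORMAT`; the class name is hypothetical.

```java
final class AcidHiddenFilterSketch {
  // Assumed values, mirroring MetaDataFile.METADATA_FILE and OrcAcidVersion.ACID_FORMAT.
  static final String METADATA_FILE = "_metadata_acid";
  static final String ACID_FORMAT = "_orc_acid_version";

  /** Accepts a file or directory name unless it is hidden, keeping the ACID metadata files. */
  static boolean accept(String name) {
    if (name.equals(METADATA_FILE) || name.equals(ACID_FORMAT)) {
      return true; // part of a valid snapshot even though it starts with '_'
    }
    return !(name.startsWith(".") || name.startsWith("_"));
  }
}
```

Because the check runs on directory names too, a directory such as `.hive-staging_hive_...` is rejected before the walk ever tries to list it.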

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: gitbox-unsubscribe@hive.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: gitbox-unsubscribe@hive.apache.org

For additional commands, e-mail: gitbox-help@hive.apache.org

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1471866715

Kudos, [SonarCloud Quality Gate](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114) passed!

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[0 Code Smells](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] deniskuzZ commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1145917303

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna

public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)

throws IOException {

Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();

- RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);

- while (itr.hasNext()) {

- FileStatus fStatus = itr.next();

- Path fPath = fStatus.getPath();

- if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {

- addToSnapshot(dirToSnapshots, fPath);

- } else {

- Path parentDirPath = fPath.getParent();

- if (acidTempDirFilter.accept(parentDirPath)) {

- while (isChildOfDelta(parentDirPath, path)) {

- // Some cases there are other directory layers between the delta and the datafiles

- // (export-import mm table, insert with union all to mm table, skewed tables).

- // But it does not matter for the AcidState, we just need the deltas and the data files

- // So build the snapshot with the files inside the delta directory

- parentDirPath = parentDirPath.getParent();

- }

- HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);

- // We're not filtering out the metadata file and acid format file,

- // as they represent parts of a valid snapshot

- // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task

- if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {

- dirSnapshot.addMetadataFile(fStatus);

- } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {

- dirSnapshot.addOrcAcidFormatFile(fStatus);

- } else {

- dirSnapshot.addFile(fStatus);

+ Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

Review Comment:

👍

@mdayakar, could we document the side effects of using the `listFiles` methods (add a Javadoc with a link to [HADOOP-18662](https://issues.apache.org/jira/browse/HADOOP-18662))?

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1494943052

Kudos, [SonarCloud Quality Gate](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114) passed!

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[0 Code Smells](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] deniskuzZ commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1145991206

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna

public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)

throws IOException {

Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();

- RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);

- while (itr.hasNext()) {

- FileStatus fStatus = itr.next();

- Path fPath = fStatus.getPath();

- if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {

- addToSnapshot(dirToSnapshots, fPath);

- } else {

- Path parentDirPath = fPath.getParent();

- if (acidTempDirFilter.accept(parentDirPath)) {

- while (isChildOfDelta(parentDirPath, path)) {

- // Some cases there are other directory layers between the delta and the datafiles

- // (export-import mm table, insert with union all to mm table, skewed tables).

- // But it does not matter for the AcidState, we just need the deltas and the data files

- // So build the snapshot with the files inside the delta directory

- parentDirPath = parentDirPath.getParent();

- }

- HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);

- // We're not filtering out the metadata file and acid format file,

- // as they represent parts of a valid snapshot

- // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task

- if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {

- dirSnapshot.addMetadataFile(fStatus);

- } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {

- dirSnapshot.addOrcAcidFormatFile(fStatus);

- } else {

- dirSnapshot.addFile(fStatus);

+ Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

Review Comment:

Unless there is another affected place, -1 from me.

[GitHub] [hive] deniskuzZ commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1145917303

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna

public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)

throws IOException {

Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();

- RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);

- while (itr.hasNext()) {

- FileStatus fStatus = itr.next();

- Path fPath = fStatus.getPath();

- if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {

- addToSnapshot(dirToSnapshots, fPath);

- } else {

- Path parentDirPath = fPath.getParent();

- if (acidTempDirFilter.accept(parentDirPath)) {

- while (isChildOfDelta(parentDirPath, path)) {

- // Some cases there are other directory layers between the delta and the datafiles

- // (export-import mm table, insert with union all to mm table, skewed tables).

- // But it does not matter for the AcidState, we just need the deltas and the data files

- // So build the snapshot with the files inside the delta directory

- parentDirPath = parentDirPath.getParent();

- }

- HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);

- // We're not filtering out the metadata file and acid format file,

- // as they represent parts of a valid snapshot

- // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task

- if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {

- dirSnapshot.addMetadataFile(fStatus);

- } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {

- dirSnapshot.addOrcAcidFormatFile(fStatus);

- } else {

- dirSnapshot.addFile(fStatus);

+ Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

Review Comment:

👍

@mdayakar, could we document the side effects of using the `listStatusIterator` & `listFiles` methods (add a Javadoc with a link to [HADOOP-18662](https://issues.apache.org/jira/browse/HADOOP-18662))?

[GitHub] [hive] mdayakar commented on a diff in pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "mdayakar (via GitHub)" <gi...@apache.org>.

mdayakar commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1152241772

##########

common/src/java/org/apache/hadoop/hive/common/FileUtils.java:

##########

@@ -1376,6 +1376,12 @@ public static RemoteIterator<FileStatus> listStatusIterator(FileSystem fs, Path

status -> filter.accept(status.getPath()));

}

+ public static RemoteIterator<LocatedFileStatus> listLocatedStatusIterator(FileSystem fs, Path path, PathFilter filter)

Review Comment:

Changed the code to use FileStatus objects and the existing _org.apache.hadoop.hive.common.FileUtils#listStatusIterator()_ API, which is already used in _org.apache.hadoop.hive.ql.io.AcidUtils#getHdfsDirSnapshotsForCleaner()_.
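The diff context above (`status -> filter.accept(status.getPath())`) suggests that `FileUtils#listStatusIterator()` wraps the underlying iterator and yields only entries whose path passes the `PathFilter`. A minimal sketch of such a filtering wrapper, assuming a hypothetical `RemoteIter` interface that mirrors the shape of Hadoop's `RemoteIterator` (`hasNext()`/`next()` may throw `IOException`); this is not Hive's actual implementation.

```java
import java.io.IOException;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.function.Predicate;

/** Hypothetical stand-in for Hadoop's RemoteIterator. */
interface RemoteIter<T> {
  boolean hasNext() throws IOException;
  T next() throws IOException;
}

/** Yields only the delegate's elements accepted by the predicate (assumes no null elements). */
final class FilteringRemoteIter<T> implements RemoteIter<T> {
  private final RemoteIter<T> delegate;
  private final Predicate<T> accept;
  private T lookahead; // next accepted element, or null if not fetched yet

  FilteringRemoteIter(RemoteIter<T> delegate, Predicate<T> accept) {
    this.delegate = delegate;
    this.accept = accept;
  }

  @Override
  public boolean hasNext() throws IOException {
    // Advance the delegate until an accepted element is found or it is exhausted.
    while (lookahead == null && delegate.hasNext()) {
      T candidate = delegate.next();
      if (accept.test(candidate)) {
        lookahead = candidate;
      }
    }
    return lookahead != null;
  }

  @Override
  public T next() throws IOException {
    if (!hasNext()) {
      throw new NoSuchElementException();
    }
    T result = lookahead;
    lookahead = null;
    return result;
  }

  /** Adapts an in-memory list, for demonstration only. */
  static <E> RemoteIter<E> of(List<E> items) {
    Iterator<E> it = items.iterator();
    return new RemoteIter<E>() {
      public boolean hasNext() { return it.hasNext(); }
      public E next() { return it.next(); }
    };
  }
}
```

The wrapper is lazy: the delegate is advanced only when the caller asks for the next element, so any listing error surfaces from `hasNext()` rather than up front.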

[GitHub] [hive] deniskuzZ commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1145958007

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna

public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)

throws IOException {

Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();

- RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);

- while (itr.hasNext()) {

- FileStatus fStatus = itr.next();

- Path fPath = fStatus.getPath();

- if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {

- addToSnapshot(dirToSnapshots, fPath);

- } else {

- Path parentDirPath = fPath.getParent();

- if (acidTempDirFilter.accept(parentDirPath)) {

- while (isChildOfDelta(parentDirPath, path)) {

- // Some cases there are other directory layers between the delta and the datafiles

- // (export-import mm table, insert with union all to mm table, skewed tables).

- // But it does not matter for the AcidState, we just need the deltas and the data files

- // So build the snapshot with the files inside the delta directory

- parentDirPath = parentDirPath.getParent();

- }

- HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);

- // We're not filtering out the metadata file and acid format file,

- // as they represent parts of a valid snapshot

- // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task

- if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {

- dirSnapshot.addMetadataFile(fStatus);

- } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {

- dirSnapshot.addOrcAcidFormatFile(fStatus);

- } else {

- dirSnapshot.addFile(fStatus);

+ Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

+ stack.push(FileUtils.listLocatedStatusIterator(fs, path, acidHiddenFileFilter));

+ while (!stack.isEmpty()) {

+ RemoteIterator<LocatedFileStatus> itr = stack.pop();

+ while (itr.hasNext()) {

+ FileStatus fStatus = itr.next();

+ Path fPath = fStatus.getPath();

+ if (fStatus.isDirectory()) {

+ stack.push(FileUtils.listLocatedStatusIterator(fs, fPath, acidHiddenFileFilter));

Review Comment:

are you missing `addToSnapshot(dirToSnapshots, fPath)` here?
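The question points at a difference between the two loops: the old code recorded a directory via `addToSnapshot(dirToSnapshots, fPath)`, while the new loop only pushes the directory's listing. A hedged sketch of a stack-based walk that does both, assuming a hypothetical in-memory tree (`Map<String, List<String>>`) instead of a Hadoop `FileSystem` and a single `visible` predicate in place of Hive's filters; it also omits the old code's walk up through nested layers to the enclosing delta.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

final class SnapshotSketch {
  /**
   * tree: hypothetical listing where each key is a directory and its value the child paths;
   * any path that is not a key is treated as a file.
   */
  static Map<String, List<String>> snapshots(
      Map<String, List<String>> tree, String root, Predicate<String> visible) {
    Map<String, List<String>> dirToFiles = new LinkedHashMap<>();
    Deque<String> pending = new ArrayDeque<>();
    pending.push(root);
    while (!pending.isEmpty()) {
      String dir = pending.pop();
      for (String child : tree.getOrDefault(dir, List.of())) {
        if (!visible.test(child)) {
          continue; // hidden/staging entries are neither recorded nor descended into
        }
        if (tree.containsKey(child)) {
          // Record the directory itself (the addToSnapshot step), so a directory
          // without files still gets an entry, then descend into it.
          dirToFiles.computeIfAbsent(child, k -> new ArrayList<>());
          pending.push(child);
        } else {
          dirToFiles.computeIfAbsent(dir, k -> new ArrayList<>()).add(child);
        }
      }
    }
    return dirToFiles;
  }
}
```

Without the `computeIfAbsent(child, ...)` call for directories, an empty directory such as `delta_2` in the test below would be missing from the result.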

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1494153045

Kudos, [SonarCloud Quality Gate](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114) passed!

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[0 Code Smells](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1466675024

Kudos, [SonarCloud Quality Gate](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114) passed!

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[1 Code Smell](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1467611628

Kudos, [SonarCloud Quality Gate](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114) passed!

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[0 Code Smells](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] deniskuzZ commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1145984918

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna
   public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)
       throws IOException {
     Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();
-    RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);
-    while (itr.hasNext()) {
-      FileStatus fStatus = itr.next();
-      Path fPath = fStatus.getPath();
-      if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {
-        addToSnapshot(dirToSnapshots, fPath);
-      } else {
-        Path parentDirPath = fPath.getParent();
-        if (acidTempDirFilter.accept(parentDirPath)) {
-          while (isChildOfDelta(parentDirPath, path)) {
-            // Some cases there are other directory layers between the delta and the datafiles
-            // (export-import mm table, insert with union all to mm table, skewed tables).
-            // But it does not matter for the AcidState, we just need the deltas and the data files
-            // So build the snapshot with the files inside the delta directory
-            parentDirPath = parentDirPath.getParent();
-          }
-          HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);
-          // We're not filtering out the metadata file and acid format file,
-          // as they represent parts of a valid snapshot
-          // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task
-          if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {
-            dirSnapshot.addMetadataFile(fStatus);
-          } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {
-            dirSnapshot.addOrcAcidFormatFile(fStatus);
-          } else {
-            dirSnapshot.addFile(fStatus);
+    Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

Review Comment:

Back to Sourabh's concern: what are we fixing here if this method is not used by the Cleaner? cc @SourabhBadhya, @veghlaci05

[GitHub] [hive] mdayakar commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "mdayakar (via GitHub)" <gi...@apache.org>.

mdayakar commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1143305701

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna
   public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)
       throws IOException {
     Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();
-    RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);
-    while (itr.hasNext()) {
-      FileStatus fStatus = itr.next();
-      Path fPath = fStatus.getPath();
-      if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {
-        addToSnapshot(dirToSnapshots, fPath);
-      } else {
-        Path parentDirPath = fPath.getParent();
-        if (acidTempDirFilter.accept(parentDirPath)) {
-          while (isChildOfDelta(parentDirPath, path)) {
-            // Some cases there are other directory layers between the delta and the datafiles
-            // (export-import mm table, insert with union all to mm table, skewed tables).
-            // But it does not matter for the AcidState, we just need the deltas and the data files
-            // So build the snapshot with the files inside the delta directory
-            parentDirPath = parentDirPath.getParent();
-          }
-          HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);
-          // We're not filtering out the metadata file and acid format file,
-          // as they represent parts of a valid snapshot
-          // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task
-          if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {
-            dirSnapshot.addMetadataFile(fStatus);
-          } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {
-            dirSnapshot.addOrcAcidFormatFile(fStatus);
-          } else {
-            dirSnapshot.addFile(fStatus);
+    Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

Review Comment:

@deniskuzZ I just followed the same approach already implemented in the _getHdfsDirSnapshotsForCleaner()_ API. The underlying problem is in the Hadoop API itself: @ayushtkn has already raised [HADOOP-18662](https://issues.apache.org/jira/browse/HADOOP-18662), but the fix has not been merged yet.
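The per-level approach referred to here can be illustrated outside Hadoop. The sketch below is a stdlib-only analogue, not Hive code: `java.nio.file.Files.list` stands in for the Hadoop/Hive listing helpers, and `PrunedWalk`, `listLevel` and the `Predicate<Path>` filter are hypothetical names. Directories are listed one level at a time from an explicit stack of iterators, and the filter is applied before descending, so a rejected directory (for example a hidden staging directory) is never listed at all; a filter applied only to the output of a recursive listing cannot prune a subtree that way. Catching `NoSuchFileException` for a directory that disappears before it is listed is this sketch's own choice, not something the thread attributes to Hive.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.NoSuchFileException;
import java.nio.file.NotDirectoryException;
import java.nio.file.Path;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;
import java.util.function.Predicate;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class PrunedWalk {

  /** Lists a single directory level, keeping only entries accepted by the filter. */
  static Iterator<Path> listLevel(Path dir, Predicate<Path> filter) throws IOException {
    try (Stream<Path> entries = Files.list(dir)) {
      return entries.filter(filter).collect(Collectors.toList()).iterator();
    } catch (NoSuchFileException | NotDirectoryException e) {
      // Sketch-only choice: a directory removed (or replaced) after discovery is skipped.
      return Collections.emptyIterator();
    }
  }

  /** Depth-first walk driven by an explicit stack of per-directory iterators. */
  static List<Path> listFiles(Path root, Predicate<Path> filter) throws IOException {
    List<Path> files = new ArrayList<>();
    Deque<Iterator<Path>> stack = new ArrayDeque<>();
    stack.push(listLevel(root, filter));
    while (!stack.isEmpty()) {
      Iterator<Path> itr = stack.pop();
      while (itr.hasNext()) {
        Path p = itr.next();
        if (Files.isDirectory(p)) {
          // Only directories that passed the filter are ever listed.
          stack.push(listLevel(p, filter));
        } else if (Files.isRegularFile(p)) {
          files.add(p);
        }
      }
    }
    return files;
  }
}
```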

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1485028689

[Kudos, SonarCloud Quality Gate passed!](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114)

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[1 Code Smell](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] mdayakar commented on a diff in pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "mdayakar (via GitHub)" <gi...@apache.org>.

mdayakar commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1152239566

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna
   public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)
       throws IOException {
     Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();
-    RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);
-    while (itr.hasNext()) {
-      FileStatus fStatus = itr.next();
-      Path fPath = fStatus.getPath();
-      if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {
-        addToSnapshot(dirToSnapshots, fPath);
-      } else {
-        Path parentDirPath = fPath.getParent();
-        if (acidTempDirFilter.accept(parentDirPath)) {
-          while (isChildOfDelta(parentDirPath, path)) {
-            // Some cases there are other directory layers between the delta and the datafiles
-            // (export-import mm table, insert with union all to mm table, skewed tables).
-            // But it does not matter for the AcidState, we just need the deltas and the data files
-            // So build the snapshot with the files inside the delta directory
-            parentDirPath = parentDirPath.getParent();
-          }
-          HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);
-          // We're not filtering out the metadata file and acid format file,
-          // as they represent parts of a valid snapshot
-          // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task
-          if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {
-            dirSnapshot.addMetadataFile(fStatus);
-          } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {
-            dirSnapshot.addOrcAcidFormatFile(fStatus);
-          } else {
-            dirSnapshot.addFile(fStatus);
+    Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

Review Comment:

Updated accordingly.

[GitHub] [hive] SourabhBadhya commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "SourabhBadhya (via GitHub)" <gi...@apache.org>.

SourabhBadhya commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1135279796

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,9 +1537,9 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna

public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)

Review Comment:

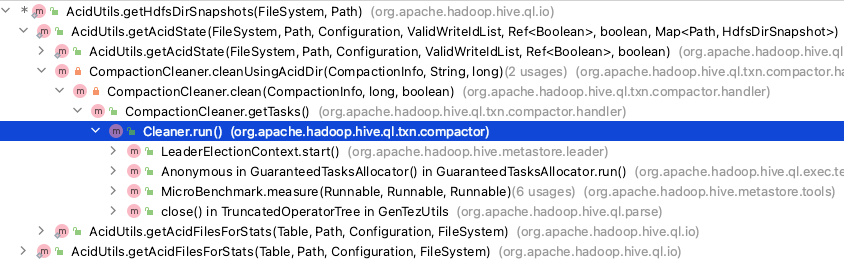

I am not sure this function is used by the Cleaner in the current upstream code. AFAIK the Cleaner uses `getHdfsDirSnapshotsForCleaner` to get the map of `HdfsDirSnapshot` snapshots.

Link to `getHdfsDirSnapshotsForCleaner`: https://github.com/apache/hive/blob/master/ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java#L1501

On the Cleaner side, it is used here:

https://github.com/apache/hive/blob/master/ql/src/java/org/apache/hadoop/hive/ql/txn/compactor/handler/CompactionCleaner.java#L269

So, from the Cleaner's perspective, changing `getHdfsDirSnapshots` might not be very useful.

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1476271801

[Kudos, SonarCloud Quality Gate passed!](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114)

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[0 Code Smells](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1489482725

[Kudos, SonarCloud Quality Gate passed!](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114)

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[0 Code Smells](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] deniskuzZ commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1145944880

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna
   public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)
       throws IOException {
     Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();
-    RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);
-    while (itr.hasNext()) {
-      FileStatus fStatus = itr.next();
-      Path fPath = fStatus.getPath();
-      if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {
-        addToSnapshot(dirToSnapshots, fPath);
-      } else {
-        Path parentDirPath = fPath.getParent();
-        if (acidTempDirFilter.accept(parentDirPath)) {
-          while (isChildOfDelta(parentDirPath, path)) {
-            // Some cases there are other directory layers between the delta and the datafiles
-            // (export-import mm table, insert with union all to mm table, skewed tables).
-            // But it does not matter for the AcidState, we just need the deltas and the data files
-            // So build the snapshot with the files inside the delta directory
-            parentDirPath = parentDirPath.getParent();
-          }
-          HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);
-          // We're not filtering out the metadata file and acid format file,
-          // as they represent parts of a valid snapshot
-          // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task
-          if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {
-            dirSnapshot.addMetadataFile(fStatus);
-          } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {
-            dirSnapshot.addOrcAcidFormatFile(fStatus);
-          } else {
-            dirSnapshot.addFile(fStatus);
+    Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();
+    stack.push(FileUtils.listLocatedStatusIterator(fs, path, acidHiddenFileFilter));
+    while (!stack.isEmpty()) {
+      RemoteIterator<LocatedFileStatus> itr = stack.pop();
+      while (itr.hasNext()) {
+        FileStatus fStatus = itr.next();
+        Path fPath = fStatus.getPath();
+        if (fStatus.isDirectory()) {
+          stack.push(FileUtils.listLocatedStatusIterator(fs, fPath, acidHiddenFileFilter));
+        } else {
+          Path parentDirPath = fPath.getParent();
+          if (acidTempDirFilter.accept(parentDirPath)) {

Review Comment:

Why do you need the `acidTempDirFilter` check if `listStatusIterator` should already pre-filter the results?

[GitHub] [hive] deniskuzZ commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1145932988

##########

common/src/java/org/apache/hadoop/hive/common/FileUtils.java:

##########

@@ -1376,6 +1376,12 @@ public static RemoteIterator<FileStatus> listStatusIterator(FileSystem fs, Path
         status -> filter.accept(status.getPath()));
   }
+  public static RemoteIterator<LocatedFileStatus> listLocatedStatusIterator(FileSystem fs, Path path, PathFilter filter)

Review Comment:

Why do you need this? You could simply use `listStatusIterator`, as in `getHdfsDirSnapshotsForCleaner`.

[GitHub] [hive] mdayakar commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "mdayakar (via GitHub)" <gi...@apache.org>.

mdayakar commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1149137172

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna
   public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)
       throws IOException {
     Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();
-    RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);
-    while (itr.hasNext()) {
-      FileStatus fStatus = itr.next();
-      Path fPath = fStatus.getPath();
-      if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {
-        addToSnapshot(dirToSnapshots, fPath);
-      } else {
-        Path parentDirPath = fPath.getParent();
-        if (acidTempDirFilter.accept(parentDirPath)) {
-          while (isChildOfDelta(parentDirPath, path)) {
-            // Some cases there are other directory layers between the delta and the datafiles
-            // (export-import mm table, insert with union all to mm table, skewed tables).
-            // But it does not matter for the AcidState, we just need the deltas and the data files
-            // So build the snapshot with the files inside the delta directory
-            parentDirPath = parentDirPath.getParent();
-          }
-          HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);
-          // We're not filtering out the metadata file and acid format file,
-          // as they represent parts of a valid snapshot
-          // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task
-          if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {
-            dirSnapshot.addMetadataFile(fStatus);
-          } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {
-            dirSnapshot.addOrcAcidFormatFile(fStatus);
-          } else {
-            dirSnapshot.addFile(fStatus);
+    Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();
+    stack.push(FileUtils.listLocatedStatusIterator(fs, path, acidHiddenFileFilter));
+    while (!stack.isEmpty()) {
+      RemoteIterator<LocatedFileStatus> itr = stack.pop();
+      while (itr.hasNext()) {
+        FileStatus fStatus = itr.next();
+        Path fPath = fStatus.getPath();
+        if (fStatus.isDirectory()) {
+          stack.push(FileUtils.listLocatedStatusIterator(fs, fPath, acidHiddenFileFilter));

Review Comment:

No, `addToSnapshot(dirToSnapshots, fPath)` only needs to be called when a folder contains a file, and that case is handled in the `else` branch. The existing code has the same logic.
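Why a folder only gets an entry when it holds a file follows from how the map is filled: in the quoted diff, the entry comes from `addToSnapshot(dirToSnapshots, parentDirPath)`, called while handling a file, and its return value then receives that file. A minimal stdlib sketch of that get-or-create pattern, assuming a flat listing of string paths in which directory entries end with `/` (`LazySnapshots`, `DirSnapshot` and the `delta_*` paths are hypothetical stand-ins, not Hive's `HdfsDirSnapshot`), shows that a directory with no files never becomes a key:

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LazySnapshots {

  /** Hypothetical stand-in for a per-directory snapshot: the files seen under it. */
  static final class DirSnapshot {
    final List<String> files = new ArrayList<>();
  }

  /** Get-or-create: an entry exists only once something asks for it. */
  static DirSnapshot addToSnapshot(Map<String, DirSnapshot> snapshots, String dir) {
    return snapshots.computeIfAbsent(dir, d -> new DirSnapshot());
  }

  static String parentOf(String path) {
    int slash = path.lastIndexOf('/');
    return slash <= 0 ? "/" : path.substring(0, slash);
  }

  /** Builds snapshots from a flat listing; directory entries end with '/'. */
  static Map<String, DirSnapshot> build(List<String> listing) {
    Map<String, DirSnapshot> snapshots = new LinkedHashMap<>();
    for (String entry : listing) {
      if (entry.endsWith("/")) {
        continue; // a directory by itself never creates an entry
      }
      // Only a file triggers creation of its parent directory's entry.
      addToSnapshot(snapshots, parentOf(entry)).files.add(entry);
    }
    return snapshots;
  }
}
```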

[GitHub] [hive] deniskuzZ merged pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ merged PR #4114:

URL: https://github.com/apache/hive/pull/4114

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1485490382

[Kudos, SonarCloud Quality Gate passed!](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114)

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[0 Code Smells](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] mdayakar commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "mdayakar (via GitHub)" <gi...@apache.org>.

mdayakar commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1146445992

##########

common/src/java/org/apache/hadoop/hive/common/FileUtils.java:

##########

@@ -1376,6 +1376,12 @@ public static RemoteIterator<FileStatus> listStatusIterator(FileSystem fs, Path
         status -> filter.accept(status.getPath()));
   }
+  public static RemoteIterator<LocatedFileStatus> listLocatedStatusIterator(FileSystem fs, Path path, PathFilter filter)

Review Comment:

The existing code in `org.apache.hadoop.hive.ql.io.AcidUtils#getHdfsDirSnapshots()` works with a `RemoteIterator` of `LocatedFileStatus` objects, so I kept that type by adding a new API.
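For comparison, the `listStatusIterator` context in the quoted hunk wraps the filesystem iterator with `status -> filter.accept(status.getPath())`. That wrapper shape does not depend on the element type, and the element type is the visible difference between the two helpers' signatures (`FileStatus` vs `LocatedFileStatus`). The sketch below writes the wrapper once, generically, over plain `java.util.Iterator` rather than Hadoop's `RemoteIterator` (whose `hasNext()` and `next()` declare `IOException`); `FilteringIterator` is a hypothetical name, not the Hadoop or Hive helper.

```java
import java.util.Iterator;
import java.util.NoSuchElementException;
import java.util.function.Function;
import java.util.function.Predicate;

/** Lazily yields only the source elements whose extracted key passes the filter. */
final class FilteringIterator<T, K> implements Iterator<T> {
  private final Iterator<T> source;
  private final Function<? super T, ? extends K> key; // e.g. status -> status.getPath()
  private final Predicate<? super K> filter;          // e.g. a path filter
  private T pending;
  private boolean hasPending;

  FilteringIterator(Iterator<T> source, Function<? super T, ? extends K> key, Predicate<? super K> filter) {
    this.source = source;
    this.key = key;
    this.filter = filter;
  }

  @Override
  public boolean hasNext() {
    // Pull from the source until an accepted element is buffered or the source is exhausted.
    while (!hasPending && source.hasNext()) {
      T candidate = source.next();
      if (filter.test(key.apply(candidate))) {
        pending = candidate;
        hasPending = true;
      }
    }
    return hasPending;
  }

  @Override
  public T next() {
    if (!hasNext()) {
      throw new NoSuchElementException();
    }
    hasPending = false;
    T result = pending;
    pending = null;
    return result;
  }
}
```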

[GitHub] [hive] mdayakar commented on a diff in pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "mdayakar (via GitHub)" <gi...@apache.org>.

mdayakar commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1152246387

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna
   public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)
       throws IOException {
     Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();
-    RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);
-    while (itr.hasNext()) {
-      FileStatus fStatus = itr.next();
-      Path fPath = fStatus.getPath();
-      if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {
-        addToSnapshot(dirToSnapshots, fPath);
-      } else {
-        Path parentDirPath = fPath.getParent();
-        if (acidTempDirFilter.accept(parentDirPath)) {
-          while (isChildOfDelta(parentDirPath, path)) {
-            // Some cases there are other directory layers between the delta and the datafiles
-            // (export-import mm table, insert with union all to mm table, skewed tables).
-            // But it does not matter for the AcidState, we just need the deltas and the data files
-            // So build the snapshot with the files inside the delta directory
-            parentDirPath = parentDirPath.getParent();
-          }
-          HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);
-          // We're not filtering out the metadata file and acid format file,
-          // as they represent parts of a valid snapshot
-          // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task
-          if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {
-            dirSnapshot.addMetadataFile(fStatus);
-          } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {
-            dirSnapshot.addOrcAcidFormatFile(fStatus);
-          } else {
-            dirSnapshot.addFile(fStatus);
+    Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();
+    stack.push(FileUtils.listLocatedStatusIterator(fs, path, acidHiddenFileFilter));
+    while (!stack.isEmpty()) {
+      RemoteIterator<LocatedFileStatus> itr = stack.pop();
+      while (itr.hasNext()) {
+        FileStatus fStatus = itr.next();
+        Path fPath = fStatus.getPath();
+        if (fStatus.isDirectory()) {
+          stack.push(FileUtils.listLocatedStatusIterator(fs, fPath, acidHiddenFileFilter));

Review Comment:

Empty directories are not added here. In fact, the old `if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath))` condition was already obsolete in the old code. I tested both the old and the modified code, and neither adds empty directories.

[GitHub] [hive] sonarcloud[bot] commented on pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "sonarcloud[bot] (via GitHub)" <gi...@apache.org>.

sonarcloud[bot] commented on PR #4114:

URL: https://github.com/apache/hive/pull/4114#issuecomment-1490200834

[Kudos, SonarCloud Quality Gate passed!](https://sonarcloud.io/dashboard?id=apache_hive&pullRequest=4114)

[0 Bugs](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=BUG)

[0 Vulnerabilities](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=VULNERABILITY)

[0 Security Hotspots](https://sonarcloud.io/project/security_hotspots?id=apache_hive&pullRequest=4114&resolved=false&types=SECURITY_HOTSPOT)

[0 Code Smells](https://sonarcloud.io/project/issues?id=apache_hive&pullRequest=4114&resolved=false&types=CODE_SMELL)

[No Coverage information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=coverage&view=list)

[No Duplication information](https://sonarcloud.io/component_measures?id=apache_hive&pullRequest=4114&metric=duplicated_lines_density&view=list)

[GitHub] [hive] mdayakar commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "mdayakar (via GitHub)" <gi...@apache.org>.

mdayakar commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1149137172

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna

public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)

throws IOException {

Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();

- RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);

- while (itr.hasNext()) {

- FileStatus fStatus = itr.next();

- Path fPath = fStatus.getPath();

- if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {

- addToSnapshot(dirToSnapshots, fPath);

- } else {

- Path parentDirPath = fPath.getParent();

- if (acidTempDirFilter.accept(parentDirPath)) {

- while (isChildOfDelta(parentDirPath, path)) {

- // Some cases there are other directory layers between the delta and the datafiles

- // (export-import mm table, insert with union all to mm table, skewed tables).

- // But it does not matter for the AcidState, we just need the deltas and the data files

- // So build the snapshot with the files inside the delta directory

- parentDirPath = parentDirPath.getParent();

- }

- HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);

- // We're not filtering out the metadata file and acid format file,

- // as they represent parts of a valid snapshot

- // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task

- if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {

- dirSnapshot.addMetadataFile(fStatus);

- } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {

- dirSnapshot.addOrcAcidFormatFile(fStatus);

- } else {

- dirSnapshot.addFile(fStatus);

+ Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

+ stack.push(FileUtils.listLocatedStatusIterator(fs, path, acidHiddenFileFilter));

+ while (!stack.isEmpty()) {

+ RemoteIterator<LocatedFileStatus> itr = stack.pop();

+ while (itr.hasNext()) {

+ FileStatus fStatus = itr.next();

+ Path fPath = fStatus.getPath();

+ if (fStatus.isDirectory()) {

+ stack.push(FileUtils.listLocatedStatusIterator(fs, fPath, acidHiddenFileFilter));

Review Comment:

No, `addToSnapshot(dirToSnapshots, fPath)` only needs to be called when a folder contains a file, and that case is handled in the `else` branch. The existing code follows the same logic.
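The `else` branch starts from a file's parent and walks upward while `isChildOfDelta` holds. This records files in intermediate layers (union-all subdirectories, skewed-table directories) under the enclosing delta, and because it runs only for files, a directory on its own never triggers a snapshot entry. Below is a sketch with string paths. Its `isChildOfDelta` is a simplified, assumed stand-in meaning "more than one level below the root, under a `base_`, `delta_` or `delete_delta_` directory", not Hive's exact implementation.

```java
/** Hypothetical sketch of resolving the directory a data file is recorded under. */
final class SnapshotDirSketch {
  private SnapshotDirSketch() {}

  // Assumed ACID directory prefixes, used only for this illustration.
  private static final String[] ACID_PREFIXES = {"base_", "delta_", "delete_delta_"};

  /** Simplified stand-in: true if dir lies inside (not at) an ACID directory directly under root. */
  static boolean isChildOfDelta(String dir, String root) {
    if (!dir.startsWith(root + "/")) {
      return false; // never look outside the table root
    }
    String rel = dir.substring(root.length() + 1);
    int slash = rel.indexOf('/');
    if (slash < 0) {
      return false; // dir is a direct child of root, i.e. the delta itself
    }
    String top = rel.substring(0, slash);
    for (String prefix : ACID_PREFIXES) {
      if (top.startsWith(prefix)) {
        return true;
      }
    }
    return false;
  }

  /** Starts at the file's parent and lifts it to the enclosing delta, as in the old else branch. */
  static String snapshotDirFor(String file, String root) {
    String dir = file.substring(0, file.lastIndexOf('/'));
    while (isChildOfDelta(dir, root)) {
      dir = dir.substring(0, dir.lastIndexOf('/'));
    }
    return dir;
  }
}
```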

[GitHub] [hive] veghlaci05 commented on a diff in pull request #4114: HIVE-27135: Cleaner fails with FileNotFoundException

Posted by "veghlaci05 (via GitHub)" <gi...@apache.org>.

veghlaci05 commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1143382486

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna

public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)

throws IOException {

Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();

- RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);

- while (itr.hasNext()) {

- FileStatus fStatus = itr.next();

- Path fPath = fStatus.getPath();

- if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {

- addToSnapshot(dirToSnapshots, fPath);

- } else {

- Path parentDirPath = fPath.getParent();

- if (acidTempDirFilter.accept(parentDirPath)) {

- while (isChildOfDelta(parentDirPath, path)) {

- // Some cases there are other directory layers between the delta and the datafiles

- // (export-import mm table, insert with union all to mm table, skewed tables).

- // But it does not matter for the AcidState, we just need the deltas and the data files

- // So build the snapshot with the files inside the delta directory

- parentDirPath = parentDirPath.getParent();

- }

- HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);

- // We're not filtering out the metadata file and acid format file,

- // as they represent parts of a valid snapshot

- // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task

- if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {

- dirSnapshot.addMetadataFile(fStatus);

- } else if (fStatus.getPath().toString().contains(OrcAcidVersion.ACID_FORMAT)) {

- dirSnapshot.addOrcAcidFormatFile(fStatus);

- } else {

- dirSnapshot.addFile(fStatus);

+ Deque<RemoteIterator<LocatedFileStatus>> stack = new ArrayDeque<>();

Review Comment:

@deniskuzZ

I think this approach is fine for now; as @mdayakar mentioned, `getHdfsDirSnapshotsForCleaner()` does the same. However, I would create a follow-up task to remove the workaround once HADOOP-18662 is merged.
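The workaround replaces one recursive listing with per-directory listings. With that structure, a directory deleted between being seen and being listed can be skipped instead of aborting the whole walk. Below is a minimal sketch of that idea, assuming a hypothetical `Lister` interface that throws `FileNotFoundException` for a missing directory. The excerpt does not show whether the patch catches the exception at this exact point. Also, a real HDFS `RemoteIterator` may fetch entries lazily, so the exception could surface from `hasNext()` as well, which this sketch does not cover.

```java
import java.io.FileNotFoundException;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;

/** Hypothetical listing abstraction standing in for FileSystem/FileUtils calls. */
interface Lister {
  /** Lists the children of dir, or throws FileNotFoundException if dir no longer exists. */
  Iterator<String> list(String dir) throws FileNotFoundException;

  /** Whether the listed entry is a directory (HDFS carries this in the FileStatus). */
  boolean isDirectory(String path);
}

final class TolerantWalkSketch {
  private TolerantWalkSketch() {}

  /** Collects every file under root, skipping subdirectories removed before they were listed. */
  static List<String> listFilesTolerant(Lister fs, String root) throws FileNotFoundException {
    List<String> files = new ArrayList<>();
    Deque<Iterator<String>> stack = new ArrayDeque<>();
    stack.push(fs.list(root)); // a missing root is still reported to the caller
    while (!stack.isEmpty()) {
      Iterator<String> itr = stack.pop();
      while (itr.hasNext()) {
        String p = itr.next();
        if (!fs.isDirectory(p)) {
          files.add(p);
          continue;
        }
        try {
          stack.push(fs.list(p));
        } catch (FileNotFoundException e) {
          // Deleted concurrently (e.g. a cleaned-up staging dir): skip it instead of failing the walk.
        }
      }
    }
    return files;
  }
}
```

Only the root listing propagates the exception, so a caller can still tell a missing table directory apart from a subdirectory that vanished mid-walk.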

[GitHub] [hive] deniskuzZ commented on a diff in pull request #4114: HIVE-27135: AcidUtils#getHdfsDirSnapshots() throws FNFE when a directory is removed in HDFS

Posted by "deniskuzZ (via GitHub)" <gi...@apache.org>.

deniskuzZ commented on code in PR #4114:

URL: https://github.com/apache/hive/pull/4114#discussion_r1151683610

##########

ql/src/java/org/apache/hadoop/hive/ql/io/AcidUtils.java:

##########

@@ -1538,32 +1538,36 @@ private static HdfsDirSnapshot addToSnapshot(Map<Path, HdfsDirSnapshot> dirToSna

public static Map<Path, HdfsDirSnapshot> getHdfsDirSnapshots(final FileSystem fs, final Path path)

throws IOException {

Map<Path, HdfsDirSnapshot> dirToSnapshots = new HashMap<>();

- RemoteIterator<LocatedFileStatus> itr = FileUtils.listFiles(fs, path, true, acidHiddenFileFilter);

- while (itr.hasNext()) {

- FileStatus fStatus = itr.next();

- Path fPath = fStatus.getPath();

- if (fStatus.isDirectory() && acidTempDirFilter.accept(fPath)) {

- addToSnapshot(dirToSnapshots, fPath);

- } else {

- Path parentDirPath = fPath.getParent();

- if (acidTempDirFilter.accept(parentDirPath)) {

- while (isChildOfDelta(parentDirPath, path)) {

- // Some cases there are other directory layers between the delta and the datafiles

- // (export-import mm table, insert with union all to mm table, skewed tables).

- // But it does not matter for the AcidState, we just need the deltas and the data files

- // So build the snapshot with the files inside the delta directory

- parentDirPath = parentDirPath.getParent();

- }

- HdfsDirSnapshot dirSnapshot = addToSnapshot(dirToSnapshots, parentDirPath);

- // We're not filtering out the metadata file and acid format file,

- // as they represent parts of a valid snapshot

- // We're not using the cached values downstream, but we can potentially optimize more in a follow-up task

- if (fStatus.getPath().toString().contains(MetaDataFile.METADATA_FILE)) {

- dirSnapshot.addMetadataFile(fStatus);