You are viewing a plain text version of this content. The canonical link for it is here.

Posted to github@arrow.apache.org by GitBox <gi...@apache.org> on 2020/06/16 13:58:56 UTC

[GitHub] [arrow] maartenbreddels opened a new pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

maartenbreddels opened a new pull request #7449:

URL: https://github.com/apache/arrow/pull/7449

This is the initial working version, which is *very* slow though (>100x slower than the ascii versions). It also required libutf8proc to be present.

Please let me know if the general code style etc is ok. I'm using CRTP here, judging from the metaprogramming seen in the rest of the code base, I guess that's fine.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] maartenbreddels commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

maartenbreddels commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-647572424

Validating the utf8 string made the results slightly slower, but still much better then the initial results.

Invalid utf8 characters are now replaced by a '?', as commented in the code. The unicode \U+FFFD would be more appropriate, but can lead to string length growth (3x). I think we can discuss this separately from this PR.

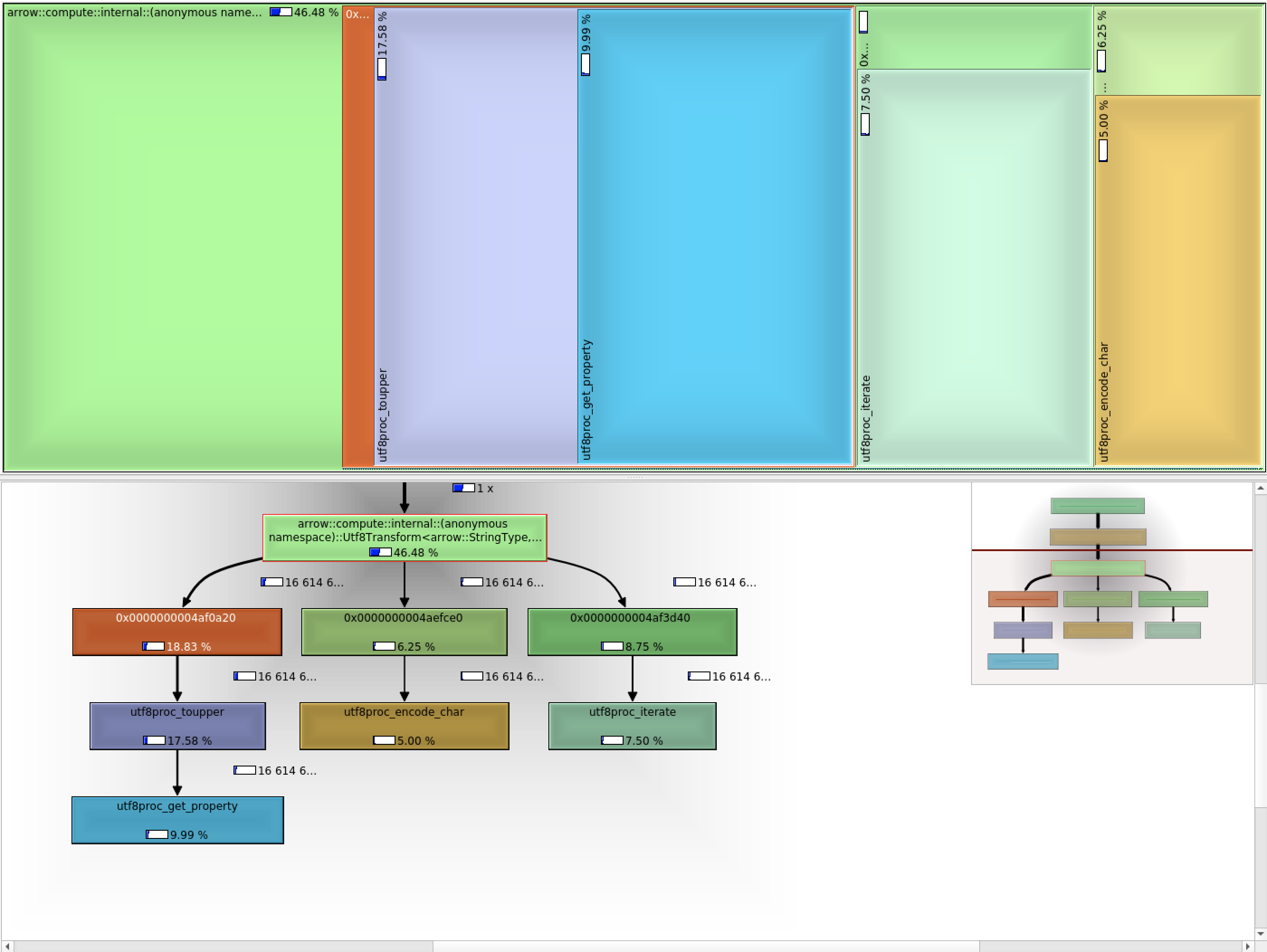

Recap:

Because we cannot use unlib (license issue), and utf8proc gives worse performance (even when inlined), we now have our own utf8 encode/decode. Also, calling upper and lower case functions in utf8proc is quite slow, and is now implemented with a lookup table (also suggested in https://github.com/JuliaStrings/utf8proc/issues/12#issuecomment-645563386) for codepoints up to `0xFFFF`.

Initial performance:

```

Utf8Lower 193873803 ns 193823124 ns 3 bytes_per_second=102.387M/s items_per_second=5.40996M/s

Utf8Upper 197154929 ns 197093083 ns 4 bytes_per_second=100.688M/s items_per_second=5.32021M/s

```

Current performance:

```

Utf8Lower 19677038 ns 19672550 ns 35 bytes_per_second=1008.76M/s items_per_second=53.3015M/s

Utf8Upper 20362432 ns 20360109 ns 34 bytes_per_second=974.698M/s items_per_second=51.5015M/s

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] maartenbreddels commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

maartenbreddels commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-646605458

Note that the unitests should fail, the PR isn't done, but the tests seem to run 👍

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] nealrichardson commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

nealrichardson commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-651369436

> > The version installed is compiled with gcc 8. RTools 35 uses gcc 4.9

>

> What difference does it make? This is plain C.

:shrug: then I'll leave it to you to sort out as this is beyond my knowledge. In the past, undefined symbols error + only compiled for rtools-packages (gcc8) = you need to get it built with rtools-backports too. Maybe something's off with the lib that was built, IDK if anyone has verified that it works.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] kou commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

kou commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-648518023

I've added a workaround we already used: https://github.com/apache/arrow/pull/7449/commits/782499f8641da4a23d86125bcc812546107f2ce5

But it doesn't solve this yet.

I'm trying reproducing this on my local environment.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] pitrou commented on a change in pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

pitrou commented on a change in pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#discussion_r447161391

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

+

+static inline void utf8_encode(uint8_t*& str, uint32_t codepoint) {

+ if (codepoint < 0x80) {

+ *str++ = codepoint;

+ } else if (codepoint < 0x800) {

+ *str++ = 0xC0 + (codepoint >> 6);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else if (codepoint < 0x10000) {

+ *str++ = 0xE0 + (codepoint >> 12);

+ *str++ = 0x80 + ((codepoint >> 6) & 0x3F);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else if (codepoint < 0x200000) {

+ *str++ = 0xF0 + (codepoint >> 18);

+ *str++ = 0x80 + ((codepoint >> 12) & 0x3F);

+ *str++ = 0x80 + ((codepoint >> 6) & 0x3F);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else {

+ *str++ = codepoint;

+ }

+}

+

+static inline bool utf8_is_continuation(const uint8_t codeunit) {

+ return (codeunit & 0xC0) == 0x80; // upper two bits should be 10

+}

+

+static inline uint32_t utf8_decode(const uint8_t*& str, int64_t& length) {

+ if (*str < 0x80) { //

+ length -= 1;

+ return *str++;

+ } else if (*str < 0xC0) { // invalid non-ascii char

+ length -= 1;

+ str++;

+ return REPLACEMENT_CHAR;

Review comment:

There's no reason a priori to be forgiving on invalid data. It there's a use case to be tolerant, we may add an option. But by default we should error out on invalid input, IMHO.

cc @wesm for opinions

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] pitrou commented on a change in pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

pitrou commented on a change in pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#discussion_r445417901

##########

File path: cpp/src/arrow/compute/kernels/scalar_string_benchmark.cc

##########

@@ -52,8 +55,18 @@ static void AsciiUpper(benchmark::State& state) {

UnaryStringBenchmark(state, "ascii_upper");

}

+static void Utf8Upper(benchmark::State& state) {

+ UnaryStringBenchmark(state, "utf8_upper", true);

Review comment:

Note that `RandomArrayGenerator::String` generates an array of pure Ascii characters between `A` and `z`.

Perhaps we need two sets of benchmarks:

* one with pure Ascii values

* one with partly non-Ascii values

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

+

+static inline void utf8_encode(uint8_t*& str, uint32_t codepoint) {

+ if (codepoint < 0x80) {

+ *str++ = codepoint;

+ } else if (codepoint < 0x800) {

+ *str++ = 0xC0 + (codepoint >> 6);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else if (codepoint < 0x10000) {

+ *str++ = 0xE0 + (codepoint >> 12);

+ *str++ = 0x80 + ((codepoint >> 6) & 0x3F);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else if (codepoint < 0x200000) {

+ *str++ = 0xF0 + (codepoint >> 18);

+ *str++ = 0x80 + ((codepoint >> 12) & 0x3F);

+ *str++ = 0x80 + ((codepoint >> 6) & 0x3F);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else {

+ *str++ = codepoint;

+ }

+}

+

+static inline bool utf8_is_continuation(const uint8_t codeunit) {

+ return (codeunit & 0xC0) == 0x80; // upper two bits should be 10

+}

+

+static inline uint32_t utf8_decode(const uint8_t*& str, int64_t& length) {

+ if (*str < 0x80) { //

+ length -= 1;

+ return *str++;

+ } else if (*str < 0xC0) { // invalid non-ascii char

+ length -= 1;

+ str++;

+ return REPLACEMENT_CHAR;

Review comment:

Hmm, I don't really agree with this... If there's some invalid input, we should bail out with `Status::Invalid`, IMO.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string_benchmark.cc

##########

@@ -41,7 +42,9 @@ static void UnaryStringBenchmark(benchmark::State& state, const std::string& fun

ABORT_NOT_OK(CallFunction(func_name, {values}));

}

state.SetItemsProcessed(state.iterations() * array_length);

- state.SetBytesProcessed(state.iterations() * values->data()->buffers[2]->size());

+ state.SetBytesProcessed(state.iterations() *

+ ((touches_offsets ? values->data()->buffers[1]->size() : 0) +

Review comment:

Hmm, this looks a bit pedantic and counter-productive to me. We want Ascii and UTF8 numbers to be directly comparable, so let's keep the original calculation.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

+

+static inline void utf8_encode(uint8_t*& str, uint32_t codepoint) {

Review comment:

By the way, all those helper functions (encoding/decoding) may also go into `arrow/util/utf8.h`.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string_test.cc

##########

@@ -68,6 +76,64 @@ TYPED_TEST(TestStringKernels, AsciiLower) {

this->string_type(), "[\"aaazzæÆ&\", null, \"\", \"bbb\"]");

}

+TEST(TestStringKernels, Utf8Upper32bitGrowth) {

Review comment:

I'm not convinced it's a good idea to consume more than 2GB RAM in a test. We have something called `LARGE_MEMORY_TEST` for such tests (you can grep for it), though I'm not sure they get exercised on a regular basis.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string_test.cc

##########

@@ -81,5 +147,40 @@ TYPED_TEST(TestStringKernels, StrptimeDoesNotProvideDefaultOptions) {

ASSERT_RAISES(Invalid, CallFunction("strptime", {input}));

}

+TEST(TestStringKernels, UnicodeLibraryAssumptions) {

+ uint8_t output[4];

+ for (utf8proc_int32_t codepoint = 0x100; codepoint < 0x110000; codepoint++) {

+ utf8proc_ssize_t encoded_nbytes = utf8proc_encode_char(codepoint, output);

+ utf8proc_int32_t codepoint_upper = utf8proc_toupper(codepoint);

+ utf8proc_ssize_t encoded_nbytes_upper = utf8proc_encode_char(codepoint_upper, output);

+ if (encoded_nbytes == 2) {

+ EXPECT_LE(encoded_nbytes_upper, 3)

+ << "Expected the upper case codepoint for a 2 byte encoded codepoint to be "

+ "encoded in maximum 3 bytes, not "

+ << encoded_nbytes_upper;

+ }

+ if (encoded_nbytes == 3) {

Review comment:

And what about `encoded_nbytes == 4`?

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

Review comment:

Could make this a separate helper function, for clarity.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

+

+ // Section 5.18 of the Unicode spec claim that the number of codepoints for case

+ // mapping can grow by a factor of 3. This means grow by a factor of 3 in bytes

+ // However, since we don't support all casings (SpecialCasing.txt) the growth

+ // is actually only at max 3/2 (as covered by the unittest).

+ // Note that rounding down the 3/2 is ok, since only codepoints encoded by

+ // two code units (even) can grow to 3 code units.

+

+ int64_t output_ncodeunits_max = ((int64_t)input_ncodeunits) * 3 / 2;

+ if (output_ncodeunits_max > std::numeric_limits<offset_type>::max()) {

+ ctx->SetStatus(Status::CapacityError(

+ "Result might not fit in a 32bit utf8 array, convert to large_utf8"));

+ return;

+ }

+

+ KERNEL_RETURN_IF_ERROR(

+ ctx, ctx->Allocate(output_ncodeunits_max).Value(&output->buffers[2]));

+ // We could reuse the buffer if it is all ascii, benchmarking showed this not to

+ // matter

+ // output->buffers[1] = input.buffers[1];

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate((input_nstrings + 1) * sizeof(offset_type))

+ .Value(&output->buffers[1]));

+ utf8proc_uint8_t* output_str = output->buffers[2]->mutable_data();

+ offset_type* output_string_offsets = output->GetMutableValues<offset_type>(1);

+ offset_type output_ncodeunits = 0;

+

+ offset_type output_string_offset = 0;

+ *output_string_offsets = output_string_offset;

+ offset_type input_string_first_offset = input_string_offsets[0];

+ for (int64_t i = 0; i < input_nstrings; i++) {

+ offset_type input_string_offset =

+ input_string_offsets[i] - input_string_first_offset;

+ offset_type input_string_end =

+ input_string_offsets[i + 1] - input_string_first_offset;

+ offset_type input_string_ncodeunits = input_string_end - input_string_offset;

+ offset_type encoded_nbytes = DerivedClass::Transform(

+ input_str + input_string_offset, input_string_ncodeunits,

+ output_str + output_ncodeunits);

+ output_ncodeunits += encoded_nbytes;

+ output_string_offsets[i + 1] = output_ncodeunits;

+ }

+

+ // trim the codepoint buffer, since we allocated too much

+ KERNEL_RETURN_IF_ERROR(

+ ctx,

+ output->buffers[2]->CopySlice(0, output_ncodeunits).Value(&output->buffers[2]));

Review comment:

Hmm, we should be able to resize the buffer instead... except that `KernelContext::Allocate` doesn't return a `ResizableBuffer`. @wesm Do you think we can change that?

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

+

+ // Section 5.18 of the Unicode spec claim that the number of codepoints for case

+ // mapping can grow by a factor of 3. This means grow by a factor of 3 in bytes

+ // However, since we don't support all casings (SpecialCasing.txt) the growth

+ // is actually only at max 3/2 (as covered by the unittest).

+ // Note that rounding down the 3/2 is ok, since only codepoints encoded by

+ // two code units (even) can grow to 3 code units.

+

+ int64_t output_ncodeunits_max = ((int64_t)input_ncodeunits) * 3 / 2;

+ if (output_ncodeunits_max > std::numeric_limits<offset_type>::max()) {

+ ctx->SetStatus(Status::CapacityError(

+ "Result might not fit in a 32bit utf8 array, convert to large_utf8"));

+ return;

+ }

+

+ KERNEL_RETURN_IF_ERROR(

+ ctx, ctx->Allocate(output_ncodeunits_max).Value(&output->buffers[2]));

+ // We could reuse the buffer if it is all ascii, benchmarking showed this not to

+ // matter

+ // output->buffers[1] = input.buffers[1];

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate((input_nstrings + 1) * sizeof(offset_type))

+ .Value(&output->buffers[1]));

+ utf8proc_uint8_t* output_str = output->buffers[2]->mutable_data();

+ offset_type* output_string_offsets = output->GetMutableValues<offset_type>(1);

+ offset_type output_ncodeunits = 0;

+

+ offset_type output_string_offset = 0;

+ *output_string_offsets = output_string_offset;

+ offset_type input_string_first_offset = input_string_offsets[0];

+ for (int64_t i = 0; i < input_nstrings; i++) {

+ offset_type input_string_offset =

+ input_string_offsets[i] - input_string_first_offset;

+ offset_type input_string_end =

+ input_string_offsets[i + 1] - input_string_first_offset;

+ offset_type input_string_ncodeunits = input_string_end - input_string_offset;

+ offset_type encoded_nbytes = DerivedClass::Transform(

+ input_str + input_string_offset, input_string_ncodeunits,

+ output_str + output_ncodeunits);

+ output_ncodeunits += encoded_nbytes;

+ output_string_offsets[i + 1] = output_ncodeunits;

+ }

+

+ // trim the codepoint buffer, since we allocated too much

+ KERNEL_RETURN_IF_ERROR(

+ ctx,

+ output->buffers[2]->CopySlice(0, output_ncodeunits).Value(&output->buffers[2]));

+ } else {

+ const auto& input = checked_cast<const BaseBinaryScalar&>(*batch[0].scalar());

+ auto result = checked_pointer_cast<BaseBinaryScalar>(MakeNullScalar(out->type()));

+ if (input.is_valid) {

+ result->is_valid = true;

+ offset_type data_nbytes = (offset_type)input.value->size();

+ // See note above in the Array version explaining the 3 / 2

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate(data_nbytes * 3 / 2).Value(&result->value));

+ offset_type encoded_nbytes = DerivedClass::Transform(

+ input.value->data(), data_nbytes, result->value->mutable_data());

+ KERNEL_RETURN_IF_ERROR(

+ ctx, result->value->CopySlice(0, encoded_nbytes).Value(&result->value));

+ }

+ out->value = result;

+ }

+ }

+};

+

+template <typename Type>

+struct Utf8Upper : Utf8Transform<Type, Utf8Upper> {

+ inline static uint32_t TransformCodepoint(char32_t codepoint) {

Review comment:

Why `char32_t`? We're using `uint32_t` elsewhere.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

+

+static inline void utf8_encode(uint8_t*& str, uint32_t codepoint) {

+ if (codepoint < 0x80) {

+ *str++ = codepoint;

+ } else if (codepoint < 0x800) {

+ *str++ = 0xC0 + (codepoint >> 6);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else if (codepoint < 0x10000) {

+ *str++ = 0xE0 + (codepoint >> 12);

+ *str++ = 0x80 + ((codepoint >> 6) & 0x3F);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else if (codepoint < 0x200000) {

+ *str++ = 0xF0 + (codepoint >> 18);

+ *str++ = 0x80 + ((codepoint >> 12) & 0x3F);

+ *str++ = 0x80 + ((codepoint >> 6) & 0x3F);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else {

+ *str++ = codepoint;

Review comment:

Invalid characters should not be emitted silently.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

Review comment:

For constants, the recommended spelling would be e.g. `constexpr uint32_t kMaxCodepointLookup`.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

+

+ // Section 5.18 of the Unicode spec claim that the number of codepoints for case

+ // mapping can grow by a factor of 3. This means grow by a factor of 3 in bytes

+ // However, since we don't support all casings (SpecialCasing.txt) the growth

+ // is actually only at max 3/2 (as covered by the unittest).

+ // Note that rounding down the 3/2 is ok, since only codepoints encoded by

+ // two code units (even) can grow to 3 code units.

+

+ int64_t output_ncodeunits_max = ((int64_t)input_ncodeunits) * 3 / 2;

+ if (output_ncodeunits_max > std::numeric_limits<offset_type>::max()) {

+ ctx->SetStatus(Status::CapacityError(

+ "Result might not fit in a 32bit utf8 array, convert to large_utf8"));

+ return;

+ }

+

+ KERNEL_RETURN_IF_ERROR(

+ ctx, ctx->Allocate(output_ncodeunits_max).Value(&output->buffers[2]));

+ // We could reuse the buffer if it is all ascii, benchmarking showed this not to

+ // matter

+ // output->buffers[1] = input.buffers[1];

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate((input_nstrings + 1) * sizeof(offset_type))

+ .Value(&output->buffers[1]));

+ utf8proc_uint8_t* output_str = output->buffers[2]->mutable_data();

+ offset_type* output_string_offsets = output->GetMutableValues<offset_type>(1);

+ offset_type output_ncodeunits = 0;

+

+ offset_type output_string_offset = 0;

+ *output_string_offsets = output_string_offset;

+ offset_type input_string_first_offset = input_string_offsets[0];

+ for (int64_t i = 0; i < input_nstrings; i++) {

+ offset_type input_string_offset =

+ input_string_offsets[i] - input_string_first_offset;

+ offset_type input_string_end =

+ input_string_offsets[i + 1] - input_string_first_offset;

+ offset_type input_string_ncodeunits = input_string_end - input_string_offset;

+ offset_type encoded_nbytes = DerivedClass::Transform(

+ input_str + input_string_offset, input_string_ncodeunits,

+ output_str + output_ncodeunits);

+ output_ncodeunits += encoded_nbytes;

+ output_string_offsets[i + 1] = output_ncodeunits;

+ }

+

+ // trim the codepoint buffer, since we allocated too much

+ KERNEL_RETURN_IF_ERROR(

+ ctx,

+ output->buffers[2]->CopySlice(0, output_ncodeunits).Value(&output->buffers[2]));

+ } else {

+ const auto& input = checked_cast<const BaseBinaryScalar&>(*batch[0].scalar());

+ auto result = checked_pointer_cast<BaseBinaryScalar>(MakeNullScalar(out->type()));

+ if (input.is_valid) {

+ result->is_valid = true;

+ offset_type data_nbytes = (offset_type)input.value->size();

+ // See note above in the Array version explaining the 3 / 2

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate(data_nbytes * 3 / 2).Value(&result->value));

+ offset_type encoded_nbytes = DerivedClass::Transform(

+ input.value->data(), data_nbytes, result->value->mutable_data());

+ KERNEL_RETURN_IF_ERROR(

+ ctx, result->value->CopySlice(0, encoded_nbytes).Value(&result->value));

+ }

+ out->value = result;

+ }

+ }

+};

+

+template <typename Type>

+struct Utf8Upper : Utf8Transform<Type, Utf8Upper> {

Review comment:

Why is this templated on Type?

##########

File path: cpp/src/arrow/compute/kernels/scalar_string_test.cc

##########

@@ -68,6 +76,64 @@ TYPED_TEST(TestStringKernels, AsciiLower) {

this->string_type(), "[\"aaazzæÆ&\", null, \"\", \"bbb\"]");

}

+TEST(TestStringKernels, Utf8Upper32bitGrowth) {

+ std::string str(0xffff, 'a');

+ arrow::StringBuilder builder;

+ // 0x7fff * 0xffff is the max a 32 bit string array can hold

+ // since the utf8_upper kernel can grow it by 3/2, the max we should accept is is

+ // 0x7fff * 0xffff * 2/3 = 0x5555 * 0xffff, so this should give us a CapacityError

+ for (int64_t i = 0; i < 0x5556; i++) {

+ ASSERT_OK(builder.Append(str));

+ }

+ std::shared_ptr<arrow::Array> array;

+ arrow::Status st = builder.Finish(&array);

+ const FunctionOptions* options = nullptr;

+ EXPECT_RAISES_WITH_MESSAGE_THAT(CapacityError,

+ testing::HasSubstr("Result might not fit"),

+ CallFunction("utf8_upper", {array}, options));

+}

+

+TYPED_TEST(TestStringKernels, Utf8Upper) {

+ this->CheckUnary("utf8_upper", "[\"aAazZæÆ&\", null, \"\", \"b\"]", this->string_type(),

+ "[\"AAAZZÆÆ&\", null, \"\", \"B\"]");

+

+ // test varying encoding lenghts and thus changing indices/offsets

+ this->CheckUnary("utf8_upper", "[\"ɑɽⱤoW\", null, \"ıI\", \"b\"]", this->string_type(),

+ "[\"ⱭⱤⱤOW\", null, \"II\", \"B\"]");

+

+ // ῦ to Υ͂ not supported

+ // this->CheckUnary("utf8_upper", "[\"ῦɐɜʞȿ\"]", this->string_type(),

+ // "[\"Υ͂ⱯꞫꞰⱾ\"]");

+

+ // test maximum buffer growth

+ this->CheckUnary("utf8_upper", "[\"ɑɑɑɑ\"]", this->string_type(), "[\"ⱭⱭⱭⱭ\"]");

+

+ // Test replacing invalid data by ? (ὖ == \xe1\xbd\x96)

Review comment:

Why do you mention `ὖ` in this comment?

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

+

+static inline void utf8_encode(uint8_t*& str, uint32_t codepoint) {

Review comment:

Also, it disallows non-const references. Instead, you may take a pointer argument.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

+

+static inline void utf8_encode(uint8_t*& str, uint32_t codepoint) {

Review comment:

Our coding style mandates CamelCase except for trivial accessors.

##########

File path: cpp/src/arrow/compute/kernels/scalar_string_test.cc

##########

@@ -68,6 +76,64 @@ TYPED_TEST(TestStringKernels, AsciiLower) {

this->string_type(), "[\"aaazzæÆ&\", null, \"\", \"bbb\"]");

}

+TEST(TestStringKernels, Utf8Upper32bitGrowth) {

+ std::string str(0xffff, 'a');

+ arrow::StringBuilder builder;

+ // 0x7fff * 0xffff is the max a 32 bit string array can hold

+ // since the utf8_upper kernel can grow it by 3/2, the max we should accept is is

+ // 0x7fff * 0xffff * 2/3 = 0x5555 * 0xffff, so this should give us a CapacityError

+ for (int64_t i = 0; i < 0x5556; i++) {

+ ASSERT_OK(builder.Append(str));

+ }

+ std::shared_ptr<arrow::Array> array;

+ arrow::Status st = builder.Finish(&array);

+ const FunctionOptions* options = nullptr;

+ EXPECT_RAISES_WITH_MESSAGE_THAT(CapacityError,

+ testing::HasSubstr("Result might not fit"),

+ CallFunction("utf8_upper", {array}, options));

+}

+

+TYPED_TEST(TestStringKernels, Utf8Upper) {

+ this->CheckUnary("utf8_upper", "[\"aAazZæÆ&\", null, \"\", \"b\"]", this->string_type(),

+ "[\"AAAZZÆÆ&\", null, \"\", \"B\"]");

+

+ // test varying encoding lenghts and thus changing indices/offsets

+ this->CheckUnary("utf8_upper", "[\"ɑɽⱤoW\", null, \"ıI\", \"b\"]", this->string_type(),

+ "[\"ⱭⱤⱤOW\", null, \"II\", \"B\"]");

+

+ // ῦ to Υ͂ not supported

+ // this->CheckUnary("utf8_upper", "[\"ῦɐɜʞȿ\"]", this->string_type(),

+ // "[\"Υ͂ⱯꞫꞰⱾ\"]");

+

+ // test maximum buffer growth

+ this->CheckUnary("utf8_upper", "[\"ɑɑɑɑ\"]", this->string_type(), "[\"ⱭⱭⱭⱭ\"]");

+

+ // Test replacing invalid data by ? (ὖ == \xe1\xbd\x96)

+ this->CheckUnary("utf8_upper", "[\"ɑa\xFFɑ\", \"ɽ\xe1\xbdɽaa\"]", this->string_type(),

+ "[\"ⱭA?Ɑ\", \"Ɽ?ⱤAA\"]");

+}

+

+TYPED_TEST(TestStringKernels, Utf8Lower) {

+ this->CheckUnary("utf8_lower", "[\"aAazZæÆ&\", null, \"\", \"b\"]", this->string_type(),

+ "[\"aaazzææ&\", null, \"\", \"b\"]");

+

+ // test varying encoding lenghts and thus changing indices/offsets

+ this->CheckUnary("utf8_lower", "[\"ⱭɽⱤoW\", null, \"ıI\", \"B\"]", this->string_type(),

+ "[\"ɑɽɽow\", null, \"ıi\", \"b\"]");

+

+ // ῦ to Υ͂ is not supported, but in principle the reverse is, but it would need

+ // normalization

+ // this->CheckUnary("utf8_lower", "[\"Υ͂ⱯꞫꞰⱾ\"]", this->string_type(),

+ // "[\"ῦɐɜʞȿ\"]");

+

+ // test maximum buffer growth

+ this->CheckUnary("utf8_lower", "[\"ȺȺȺȺ\"]", this->string_type(), "[\"ⱥⱥⱥⱥ\"]");

+

+ // Test replacing invalid data by ? (ὖ == \xe1\xbd\x96)

Review comment:

Why do you mention `ὖ` in this comment?

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

Review comment:

`static_cast<offset_type>(input.length)`

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

+

+ // Section 5.18 of the Unicode spec claim that the number of codepoints for case

+ // mapping can grow by a factor of 3. This means grow by a factor of 3 in bytes

+ // However, since we don't support all casings (SpecialCasing.txt) the growth

+ // is actually only at max 3/2 (as covered by the unittest).

+ // Note that rounding down the 3/2 is ok, since only codepoints encoded by

+ // two code units (even) can grow to 3 code units.

+

+ int64_t output_ncodeunits_max = ((int64_t)input_ncodeunits) * 3 / 2;

+ if (output_ncodeunits_max > std::numeric_limits<offset_type>::max()) {

+ ctx->SetStatus(Status::CapacityError(

+ "Result might not fit in a 32bit utf8 array, convert to large_utf8"));

+ return;

+ }

+

+ KERNEL_RETURN_IF_ERROR(

+ ctx, ctx->Allocate(output_ncodeunits_max).Value(&output->buffers[2]));

+ // We could reuse the buffer if it is all ascii, benchmarking showed this not to

+ // matter

+ // output->buffers[1] = input.buffers[1];

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate((input_nstrings + 1) * sizeof(offset_type))

+ .Value(&output->buffers[1]));

+ utf8proc_uint8_t* output_str = output->buffers[2]->mutable_data();

+ offset_type* output_string_offsets = output->GetMutableValues<offset_type>(1);

+ offset_type output_ncodeunits = 0;

+

+ offset_type output_string_offset = 0;

+ *output_string_offsets = output_string_offset;

+ offset_type input_string_first_offset = input_string_offsets[0];

+ for (int64_t i = 0; i < input_nstrings; i++) {

+ offset_type input_string_offset =

+ input_string_offsets[i] - input_string_first_offset;

+ offset_type input_string_end =

+ input_string_offsets[i + 1] - input_string_first_offset;

+ offset_type input_string_ncodeunits = input_string_end - input_string_offset;

+ offset_type encoded_nbytes = DerivedClass::Transform(

+ input_str + input_string_offset, input_string_ncodeunits,

+ output_str + output_ncodeunits);

+ output_ncodeunits += encoded_nbytes;

+ output_string_offsets[i + 1] = output_ncodeunits;

+ }

+

+ // trim the codepoint buffer, since we allocated too much

+ KERNEL_RETURN_IF_ERROR(

+ ctx,

+ output->buffers[2]->CopySlice(0, output_ncodeunits).Value(&output->buffers[2]));

+ } else {

+ const auto& input = checked_cast<const BaseBinaryScalar&>(*batch[0].scalar());

+ auto result = checked_pointer_cast<BaseBinaryScalar>(MakeNullScalar(out->type()));

+ if (input.is_valid) {

+ result->is_valid = true;

+ offset_type data_nbytes = (offset_type)input.value->size();

Review comment:

`static_cast<offset_type>(...)`

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

+

+ // Section 5.18 of the Unicode spec claim that the number of codepoints for case

+ // mapping can grow by a factor of 3. This means grow by a factor of 3 in bytes

+ // However, since we don't support all casings (SpecialCasing.txt) the growth

+ // is actually only at max 3/2 (as covered by the unittest).

+ // Note that rounding down the 3/2 is ok, since only codepoints encoded by

+ // two code units (even) can grow to 3 code units.

+

+ int64_t output_ncodeunits_max = ((int64_t)input_ncodeunits) * 3 / 2;

+ if (output_ncodeunits_max > std::numeric_limits<offset_type>::max()) {

+ ctx->SetStatus(Status::CapacityError(

+ "Result might not fit in a 32bit utf8 array, convert to large_utf8"));

+ return;

+ }

+

+ KERNEL_RETURN_IF_ERROR(

+ ctx, ctx->Allocate(output_ncodeunits_max).Value(&output->buffers[2]));

+ // We could reuse the buffer if it is all ascii, benchmarking showed this not to

+ // matter

+ // output->buffers[1] = input.buffers[1];

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate((input_nstrings + 1) * sizeof(offset_type))

+ .Value(&output->buffers[1]));

+ utf8proc_uint8_t* output_str = output->buffers[2]->mutable_data();

+ offset_type* output_string_offsets = output->GetMutableValues<offset_type>(1);

+ offset_type output_ncodeunits = 0;

+

+ offset_type output_string_offset = 0;

+ *output_string_offsets = output_string_offset;

+ offset_type input_string_first_offset = input_string_offsets[0];

+ for (int64_t i = 0; i < input_nstrings; i++) {

+ offset_type input_string_offset =

+ input_string_offsets[i] - input_string_first_offset;

+ offset_type input_string_end =

+ input_string_offsets[i + 1] - input_string_first_offset;

+ offset_type input_string_ncodeunits = input_string_end - input_string_offset;

Review comment:

Why not simply call `GetView` instead?

```c++

const auto input = boxed_input.GetView(i);

```

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

Review comment:

Just use `uint8_t`.

Also, you should be able to write:

```c++

const uint8_t* input_str = input_boxed->raw_value_offsets();

```

##########

File path: cpp/src/arrow/compute/kernels/scalar_string_test.cc

##########

@@ -81,5 +147,40 @@ TYPED_TEST(TestStringKernels, StrptimeDoesNotProvideDefaultOptions) {

ASSERT_RAISES(Invalid, CallFunction("strptime", {input}));

}

+TEST(TestStringKernels, UnicodeLibraryAssumptions) {

+ uint8_t output[4];

+ for (utf8proc_int32_t codepoint = 0x100; codepoint < 0x110000; codepoint++) {

+ utf8proc_ssize_t encoded_nbytes = utf8proc_encode_char(codepoint, output);

+ utf8proc_int32_t codepoint_upper = utf8proc_toupper(codepoint);

+ utf8proc_ssize_t encoded_nbytes_upper = utf8proc_encode_char(codepoint_upper, output);

+ if (encoded_nbytes == 2) {

+ EXPECT_LE(encoded_nbytes_upper, 3)

+ << "Expected the upper case codepoint for a 2 byte encoded codepoint to be "

+ "encoded in maximum 3 bytes, not "

+ << encoded_nbytes_upper;

+ }

+ if (encoded_nbytes == 3) {

Review comment:

(also, what's the point of two different conditionals if you're doing the same thing inside?)

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

+

+ // Section 5.18 of the Unicode spec claim that the number of codepoints for case

+ // mapping can grow by a factor of 3. This means grow by a factor of 3 in bytes

+ // However, since we don't support all casings (SpecialCasing.txt) the growth

+ // is actually only at max 3/2 (as covered by the unittest).

+ // Note that rounding down the 3/2 is ok, since only codepoints encoded by

+ // two code units (even) can grow to 3 code units.

+

+ int64_t output_ncodeunits_max = ((int64_t)input_ncodeunits) * 3 / 2;

+ if (output_ncodeunits_max > std::numeric_limits<offset_type>::max()) {

+ ctx->SetStatus(Status::CapacityError(

+ "Result might not fit in a 32bit utf8 array, convert to large_utf8"));

+ return;

+ }

+

+ KERNEL_RETURN_IF_ERROR(

+ ctx, ctx->Allocate(output_ncodeunits_max).Value(&output->buffers[2]));

+ // We could reuse the buffer if it is all ascii, benchmarking showed this not to

+ // matter

+ // output->buffers[1] = input.buffers[1];

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate((input_nstrings + 1) * sizeof(offset_type))

+ .Value(&output->buffers[1]));

+ utf8proc_uint8_t* output_str = output->buffers[2]->mutable_data();

Review comment:

Please use `uint8_t`

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] wesm commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

wesm commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-647860226

@kou utf8proc should only be used in a small number of compilation units, so what do you think about just using `set_target_properties(... PROPERTIES COMPILE_DEFINITIONS UTF8PROC_STATIC)` in those files?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] xhochy commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

xhochy commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-645401816

> Would a lookup table in the order of 256kb (generated at runtime, not in the binary) per case mapping be acceptable for Arrow?

I would find that acceptable if the mapping is only generated if needed (thus you will have a one-off payment when using a UTF8-kernel). I would though prefer if `utf8proc` could implement it just like this on their side. Can you open an issue there?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] wesm commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

wesm commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-645413587

I also agree with inlining the utf8proc functions until utf8proc can be patched to have better performance. I doubt that these optimizations will meaningfully impact the macroperformance of applications

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] maartenbreddels commented on a change in pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

maartenbreddels commented on a change in pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#discussion_r447154836

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -15,13 +15,15 @@

// specific language governing permissions and limitations

// under the License.

+#include <utf8proc.h>

Review comment:

Np, I'd rather do it correct.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] wesm commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

wesm commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-647519089

> The downside is that users of the Arrow library are exposed to the implementation details of how each kernel can grow the resulting array.

I'm not saying that. I'm proposing instead a layered implementation approach. You will still write "utf8_lower(x)" in Python but the execution layer will decide when it's appropriate to split inputs or do type promotion. So Vaex shouldn't have to deal with these details.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] kou commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

kou commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-647834815

The change doesn't add `UTF8PROC_STATIC` definition...

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] pitrou commented on a change in pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

pitrou commented on a change in pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#discussion_r446946562

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

+

+static inline void utf8_encode(uint8_t*& str, uint32_t codepoint) {

+ if (codepoint < 0x80) {

+ *str++ = codepoint;

+ } else if (codepoint < 0x800) {

+ *str++ = 0xC0 + (codepoint >> 6);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else if (codepoint < 0x10000) {

+ *str++ = 0xE0 + (codepoint >> 12);

+ *str++ = 0x80 + ((codepoint >> 6) & 0x3F);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else if (codepoint < 0x200000) {

+ *str++ = 0xF0 + (codepoint >> 18);

+ *str++ = 0x80 + ((codepoint >> 12) & 0x3F);

+ *str++ = 0x80 + ((codepoint >> 6) & 0x3F);

+ *str++ = 0x80 + (codepoint & 0x3F);

+ } else {

+ *str++ = codepoint;

+ }

+}

+

+static inline bool utf8_is_continuation(const uint8_t codeunit) {

+ return (codeunit & 0xC0) == 0x80; // upper two bits should be 10

+}

+

+static inline uint32_t utf8_decode(const uint8_t*& str, int64_t& length) {

+ if (*str < 0x80) { //

+ length -= 1;

+ return *str++;

+ } else if (*str < 0xC0) { // invalid non-ascii char

+ length -= 1;

+ str++;

+ return REPLACEMENT_CHAR;

Review comment:

Well, I'm not sure why it would make sense to ignore invalid input data here, unless not ignoring it has a significant cost (which sounds unlikely).

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] kszucs commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

kszucs commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-645539612

Added `libutf8proc` dependency to the ursabot builders, same could be done for the docker-compose images. The tests are failing though.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] pitrou closed pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

pitrou closed pull request #7449:

URL: https://github.com/apache/arrow/pull/7449

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] maartenbreddels commented on a change in pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

maartenbreddels commented on a change in pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#discussion_r446934815

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

+

+ // Section 5.18 of the Unicode spec claim that the number of codepoints for case

+ // mapping can grow by a factor of 3. This means grow by a factor of 3 in bytes

+ // However, since we don't support all casings (SpecialCasing.txt) the growth

+ // is actually only at max 3/2 (as covered by the unittest).

+ // Note that rounding down the 3/2 is ok, since only codepoints encoded by

+ // two code units (even) can grow to 3 code units.

+

+ int64_t output_ncodeunits_max = ((int64_t)input_ncodeunits) * 3 / 2;

+ if (output_ncodeunits_max > std::numeric_limits<offset_type>::max()) {

+ ctx->SetStatus(Status::CapacityError(

+ "Result might not fit in a 32bit utf8 array, convert to large_utf8"));

+ return;

+ }

+

+ KERNEL_RETURN_IF_ERROR(

+ ctx, ctx->Allocate(output_ncodeunits_max).Value(&output->buffers[2]));

+ // We could reuse the buffer if it is all ascii, benchmarking showed this not to

+ // matter

+ // output->buffers[1] = input.buffers[1];

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate((input_nstrings + 1) * sizeof(offset_type))

+ .Value(&output->buffers[1]));

+ utf8proc_uint8_t* output_str = output->buffers[2]->mutable_data();

+ offset_type* output_string_offsets = output->GetMutableValues<offset_type>(1);

+ offset_type output_ncodeunits = 0;

+

+ offset_type output_string_offset = 0;

+ *output_string_offsets = output_string_offset;

+ offset_type input_string_first_offset = input_string_offsets[0];

+ for (int64_t i = 0; i < input_nstrings; i++) {

+ offset_type input_string_offset =

+ input_string_offsets[i] - input_string_first_offset;

+ offset_type input_string_end =

+ input_string_offsets[i + 1] - input_string_first_offset;

+ offset_type input_string_ncodeunits = input_string_end - input_string_offset;

+ offset_type encoded_nbytes = DerivedClass::Transform(

+ input_str + input_string_offset, input_string_ncodeunits,

+ output_str + output_ncodeunits);

+ output_ncodeunits += encoded_nbytes;

+ output_string_offsets[i + 1] = output_ncodeunits;

+ }

+

+ // trim the codepoint buffer, since we allocated too much

+ KERNEL_RETURN_IF_ERROR(

+ ctx,

+ output->buffers[2]->CopySlice(0, output_ncodeunits).Value(&output->buffers[2]));

+ } else {

+ const auto& input = checked_cast<const BaseBinaryScalar&>(*batch[0].scalar());

+ auto result = checked_pointer_cast<BaseBinaryScalar>(MakeNullScalar(out->type()));

+ if (input.is_valid) {

+ result->is_valid = true;

+ offset_type data_nbytes = (offset_type)input.value->size();

+ // See note above in the Array version explaining the 3 / 2

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate(data_nbytes * 3 / 2).Value(&result->value));

+ offset_type encoded_nbytes = DerivedClass::Transform(

+ input.value->data(), data_nbytes, result->value->mutable_data());

+ KERNEL_RETURN_IF_ERROR(

+ ctx, result->value->CopySlice(0, encoded_nbytes).Value(&result->value));

+ }

+ out->value = result;

+ }

+ }

+};

+

+template <typename Type>

+struct Utf8Upper : Utf8Transform<Type, Utf8Upper> {

Review comment:

Good catch, that was preparing for Upcasting, which would need this, since we agreed not to upcast, we can remove it.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] maartenbreddels commented on a change in pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

maartenbreddels commented on a change in pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#discussion_r446988586

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

+ input.buffers[2]->data() + input_boxed.value_offset(0);

+ offset_type input_ncodeunits = input_boxed.total_values_length();

+ offset_type input_nstrings = (offset_type)input.length;

+

+ // Section 5.18 of the Unicode spec claim that the number of codepoints for case

+ // mapping can grow by a factor of 3. This means grow by a factor of 3 in bytes

+ // However, since we don't support all casings (SpecialCasing.txt) the growth

+ // is actually only at max 3/2 (as covered by the unittest).

+ // Note that rounding down the 3/2 is ok, since only codepoints encoded by

+ // two code units (even) can grow to 3 code units.

+

+ int64_t output_ncodeunits_max = ((int64_t)input_ncodeunits) * 3 / 2;

+ if (output_ncodeunits_max > std::numeric_limits<offset_type>::max()) {

+ ctx->SetStatus(Status::CapacityError(

+ "Result might not fit in a 32bit utf8 array, convert to large_utf8"));

+ return;

+ }

+

+ KERNEL_RETURN_IF_ERROR(

+ ctx, ctx->Allocate(output_ncodeunits_max).Value(&output->buffers[2]));

+ // We could reuse the buffer if it is all ascii, benchmarking showed this not to

+ // matter

+ // output->buffers[1] = input.buffers[1];

+ KERNEL_RETURN_IF_ERROR(ctx,

+ ctx->Allocate((input_nstrings + 1) * sizeof(offset_type))

+ .Value(&output->buffers[1]));

+ utf8proc_uint8_t* output_str = output->buffers[2]->mutable_data();

+ offset_type* output_string_offsets = output->GetMutableValues<offset_type>(1);

+ offset_type output_ncodeunits = 0;

+

+ offset_type output_string_offset = 0;

+ *output_string_offsets = output_string_offset;

+ offset_type input_string_first_offset = input_string_offsets[0];

+ for (int64_t i = 0; i < input_nstrings; i++) {

+ offset_type input_string_offset =

+ input_string_offsets[i] - input_string_first_offset;

+ offset_type input_string_end =

+ input_string_offsets[i + 1] - input_string_first_offset;

+ offset_type input_string_ncodeunits = input_string_end - input_string_offset;

Review comment:

Using GetValue now, much cleaner 👍

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] xhochy commented on pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

xhochy commented on pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#issuecomment-645253060

The major difference between `unilib` and `utf8proc` in uppercasing a character seems to be that [unilib looks up the uppercase value directly](https://github.com/ufal/unilib/blob/d8276e70b7c11c677897f71030de7258cbb1f99e/unilib/unicode.h#L81) wheras [utf8proc first gets a struct with all properties](https://github.com/JuliaStrings/utf8proc/blob/08f9999a0698639f15d07b12c0065a4494f2d504/utf8proc.c#L377) from which it extracts the uppercase value. Pre-computing the uppercase dictionary first could bring `utf8proc` en par with the performance.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] maartenbreddels commented on a change in pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

maartenbreddels commented on a change in pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#discussion_r446964243

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -39,6 +158,121 @@ struct AsciiLength {

}

};

+template <typename Type, template <typename> class Derived>

+struct Utf8Transform {

+ using offset_type = typename Type::offset_type;

+ using DerivedClass = Derived<Type>;

+ using ArrayType = typename TypeTraits<Type>::ArrayType;

+

+ static offset_type Transform(const uint8_t* input, offset_type input_string_ncodeunits,

+ uint8_t* output) {

+ uint8_t* dest = output;

+ utf8_transform(input, input + input_string_ncodeunits, dest,

+ DerivedClass::TransformCodepoint);

+ return (offset_type)(dest - output);

+ }

+

+ static void Exec(KernelContext* ctx, const ExecBatch& batch, Datum* out) {

+ if (batch[0].kind() == Datum::ARRAY) {

+ std::call_once(flag_case_luts, []() {

+ lut_upper_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ lut_lower_codepoint.reserve(MAX_CODEPOINT_LUT + 1);

+ for (int i = 0; i <= MAX_CODEPOINT_LUT; i++) {

+ lut_upper_codepoint.push_back(utf8proc_toupper(i));

+ lut_lower_codepoint.push_back(utf8proc_tolower(i));

+ }

+ });

+ const ArrayData& input = *batch[0].array();

+ ArrayType input_boxed(batch[0].array());

+ ArrayData* output = out->mutable_array();

+

+ offset_type const* input_string_offsets = input.GetValues<offset_type>(1);

+ utf8proc_uint8_t const* input_str =

Review comment:

That's the offset, I don't see a similar method for the data, let me know if I'm wrong.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] maartenbreddels commented on a change in pull request #7449: ARROW-9133: [C++] Add utf8_upper and utf8_lower

Posted by GitBox <gi...@apache.org>.

maartenbreddels commented on a change in pull request #7449:

URL: https://github.com/apache/arrow/pull/7449#discussion_r446934057

##########

File path: cpp/src/arrow/compute/kernels/scalar_string.cc

##########

@@ -30,6 +31,124 @@ namespace internal {

namespace {

+// lookup tables

+std::vector<uint32_t> lut_upper_codepoint;

+std::vector<uint32_t> lut_lower_codepoint;

+std::once_flag flag_case_luts;

+

+constexpr uint32_t REPLACEMENT_CHAR =

+ '?'; // the proper replacement char would be the 0xFFFD codepoint, but that can

+ // increase string length by a factor of 3

+constexpr int MAX_CODEPOINT_LUT = 0xffff; // up to this codepoint is in a lookup table

+

+static inline void utf8_encode(uint8_t*& str, uint32_t codepoint) {

+ if (codepoint < 0x80) {

+ *str++ = codepoint;

+ } else if (codepoint < 0x800) {