You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2020/08/29 01:45:09 UTC

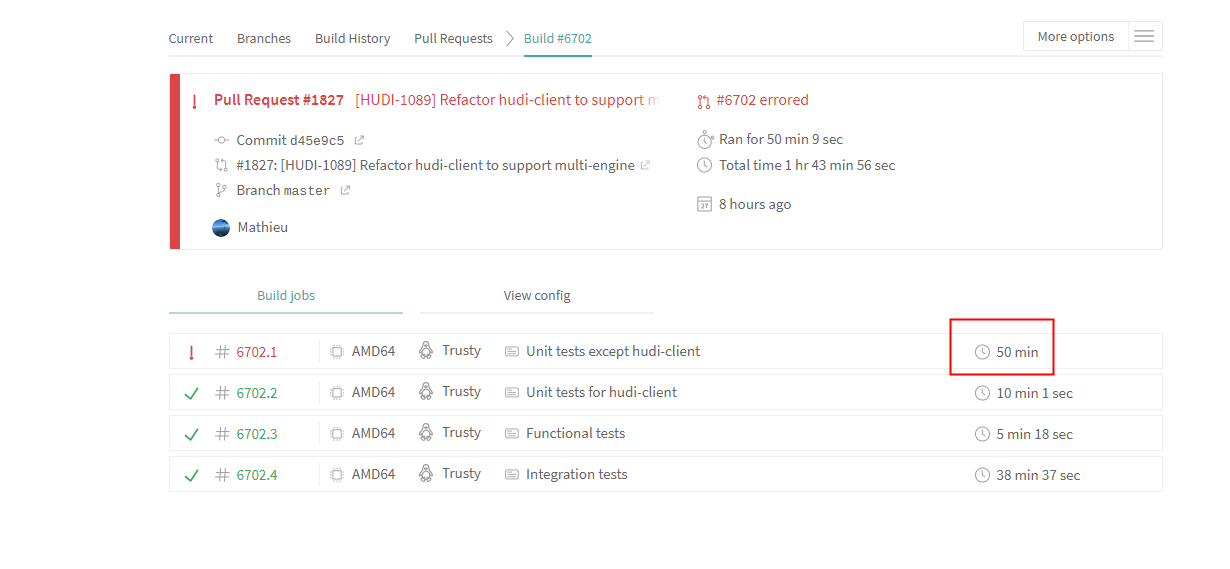

[GitHub] [hudi] wangxianghu opened a new pull request #1827: [HUDI-1089] [WIP]Refactor hudi-client to support multi-engine

wangxianghu opened a new pull request #1827:

URL: https://github.com/apache/hudi/pull/1827

## *Tips*

- *Thank you very much for contributing to Apache Hudi.*

- *Please review https://hudi.apache.org/contributing.html before opening a pull request.*

## What is the purpose of the pull request

*Refactor hudi-client to support multi-engine*

## Brief change log

- *Refactor hudi-client to support multi-engine*

## Verify this pull request

*This pull request is already covered by existing tests*.

## Committer checklist

- [ ] Has a corresponding JIRA in PR title & commit

- [ ] Commit message is descriptive of the change

- [ ] CI is green

- [ ] Necessary doc changes done or have another open PR

- [ ] For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] Mathieu1124 commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

Mathieu1124 commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r457127004

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,48 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

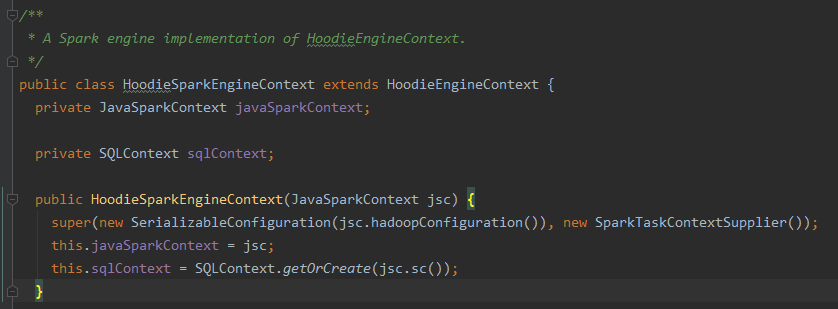

Review comment:

>

>

> Just bump into this... Since this is a generic engine context, will it be better to use a generic name like `engineConfig`?

Hi, henry thanks for your review. This class holds more than config stuff(your can see its child class `HoodieSparkEngineContext`), maybe context is better, WDYT?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-702174407

@leesf do you see the following exception? could not understand how you ll get the other one even.

```

LOG.info("Starting Timeline service !!");

Option<String> hostAddr = context.getProperty(EngineProperty.EMBEDDED_SERVER_HOST);

if (!hostAddr.isPresent()) {

throw new HoodieException("Unable to find host address to bind timeline server to.");

}

timelineServer = Option.of(new EmbeddedTimelineService(context, hostAddr.get(),

config.getClientSpecifiedViewStorageConfig()));

```

Either way, good pointer. the behavior has changed around this a bit actually. So will try and tweak and push a fix

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-693113074

> @wangxianghu on the checkstyle change to bump up the line count to 500, I think we should revert to 200 as it is now.

> I checked out a few of the issues. they can be brought within limit, by folding like below.

> if not, we can turn off checkstyle selectively in that block?

>

> ```

>

> public class HoodieSparkMergeHandle<T extends HoodieRecordPayload> extends

> HoodieWriteHandle<T, JavaRDD<HoodieRecord<T>>, JavaRDD<HoodieKey>, JavaRDD<WriteStatus>, JavaPairRDD<HoodieKey, Option<Pair<String, String>>>> {

> ```

Good idea. I'll give a try.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r485027583

##########

File path: hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/io/HoodieSparkMergeHandle.java

##########

@@ -71,34 +77,25 @@

protected boolean useWriterSchema;

private HoodieBaseFile baseFileToMerge;

- public HoodieMergeHandle(HoodieWriteConfig config, String instantTime, HoodieTable<T> hoodieTable,

- Iterator<HoodieRecord<T>> recordItr, String partitionPath, String fileId, SparkTaskContextSupplier sparkTaskContextSupplier) {

- super(config, instantTime, partitionPath, fileId, hoodieTable, sparkTaskContextSupplier);

+ public HoodieSparkMergeHandle(HoodieWriteConfig config, String instantTime, HoodieTable hoodieTable,

+ Iterator<HoodieRecord<T>> recordItr, String partitionPath, String fileId, TaskContextSupplier taskContextSupplier) {

+ super(config, instantTime, partitionPath, fileId, hoodieTable, taskContextSupplier);

init(fileId, recordItr);

init(fileId, partitionPath, hoodieTable.getBaseFileOnlyView().getLatestBaseFile(partitionPath, fileId).get());

}

/**

* Called by compactor code path.

*/

- public HoodieMergeHandle(HoodieWriteConfig config, String instantTime, HoodieTable<T> hoodieTable,

- Map<String, HoodieRecord<T>> keyToNewRecords, String partitionPath, String fileId,

- HoodieBaseFile dataFileToBeMerged, SparkTaskContextSupplier sparkTaskContextSupplier) {

- super(config, instantTime, partitionPath, fileId, hoodieTable, sparkTaskContextSupplier);

+ public HoodieSparkMergeHandle(HoodieWriteConfig config, String instantTime, HoodieTable hoodieTable,

+ Map<String, HoodieRecord<T>> keyToNewRecords, String partitionPath, String fileId,

+ HoodieBaseFile dataFileToBeMerged, TaskContextSupplier taskContextSupplier) {

+ super(config, instantTime, partitionPath, fileId, hoodieTable, taskContextSupplier);

this.keyToNewRecords = keyToNewRecords;

this.useWriterSchema = true;

init(fileId, this.partitionPath, dataFileToBeMerged);

}

- @Override

Review comment:

> please refrain from moving methods around within the file. it makes life hard during review :(

sorry for the inconvenient, let me see what I can do to avoid this :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] Mathieu1124 commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

Mathieu1124 commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-659898064

> @Mathieu1124 @leesf can you please share any tests you may have done in your own environment to ensure existing functionality is in tact.. This is a major signal we may not completely get with a PR review

My test is limited, just all the unit tests in source code, and all the demos in the Quick-Start Guide. I am planning to test it in docker env.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r484832824

##########

File path: hudi-client/pom.xml

##########

@@ -68,6 +107,12 @@

</build>

<dependencies>

+ <!-- Scala -->

+ <dependency>

+ <groupId>org.scala-lang</groupId>

Review comment:

> should we limit scala to just the spark module?

Yes, it is better. can we do it in another PR?

because although some classes have nothing to do with spark, it used `scala.Tuple2`, so scala is still needed.

we can replace it with 'org.apache.hudi.common.util.collection.Pair'

WDYT?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-695785338

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r493999114

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/table/action/commit/BaseMergeHelper.java

##########

@@ -161,11 +108,11 @@ private static GenericRecord transformRecordBasedOnNewSchema(GenericDatumReader<

/**

* Consumer that dequeues records from queue and sends to Merge Handle.

*/

- private static class UpdateHandler extends BoundedInMemoryQueueConsumer<GenericRecord, Void> {

+ static class UpdateHandler extends BoundedInMemoryQueueConsumer<GenericRecord, Void> {

- private final HoodieMergeHandle upsertHandle;

+ private final HoodieWriteHandle upsertHandle;

Review comment:

> why is this no longer a mergeHandle?

With `parallelDo` method introduced in, this change is no longer needed. rollback already

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] Mathieu1124 edited a comment on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

Mathieu1124 edited a comment on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-662474645

> @Mathieu1124 , @leesf : @n3nash said he is half way through reviewing. I took another pass and this seems low risk enough for us to merge for 0.6.0.

>

> We have some large PRs pending though #1702 #1678 #1834 . I would like to merge those and then rework this a bit on top of this. How painful do you think the rebase would be? (I can help as much as I can as well). Does this sound like a good plan to you

@vinothchandar, I have taken a quick pass about that three PRs above, can't say that'll be little work, but I am ok with this plan because these three PRs are based on the same base, so leaving this PR at the last could greatly reduce their workload on rebasing and gives more time for us to test this PR.

we'll do our best to push this PR, and merge this for 0.6.0

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r484933755

##########

File path: hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/io/SparkCreateHandleFactory.java

##########

@@ -0,0 +1,46 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.io;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.client.WriteStatus;

+import org.apache.hudi.common.model.HoodieKey;

+import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.model.HoodieRecordPayload;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.collection.Pair;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.table.HoodieTable;

+import org.apache.spark.api.java.JavaPairRDD;

+import org.apache.spark.api.java.JavaRDD;

+

+public class SparkCreateHandleFactory<T extends HoodieRecordPayload> extends WriteHandleFactory<T, JavaRDD<HoodieRecord<T>>, JavaRDD<HoodieKey>, JavaRDD<WriteStatus>, JavaPairRDD<HoodieKey, Option<Pair<String, String>>>> {

+

+ @Override

+ public HoodieSparkCreateHandle create(final HoodieWriteConfig hoodieConfig,

Review comment:

> same. is there a way to not make these spark specific

I'll give a try

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-691505048

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r484828652

##########

File path: style/checkstyle.xml

##########

@@ -62,7 +62,7 @@

<property name="allowNonPrintableEscapes" value="true"/>

</module>

<module name="LineLength">

- <property name="max" value="200"/>

+ <property name="max" value="500"/>

Review comment:

> let's discuss this in a separate PR? 500 is a really large threshold

very sorry to say this ...

According to the current abstraction,some declaration of the method is longer than 200.

such as `org.apache.hudi.table.action.compact.HoodieSparkMergeOnReadTableCompactor#generateCompactionPlan`

It is 357 characters long

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r484928117

##########

File path: hudi-spark/src/main/java/org/apache/hudi/bootstrap/SparkParquetBootstrapDataProvider.java

##########

@@ -43,18 +43,18 @@

/**

* Spark Data frame based bootstrap input provider.

*/

-public class SparkParquetBootstrapDataProvider extends FullRecordBootstrapDataProvider {

+public class SparkParquetBootstrapDataProvider extends FullRecordBootstrapDataProvider<JavaRDD<HoodieRecord>> {

private final transient SparkSession sparkSession;

public SparkParquetBootstrapDataProvider(TypedProperties props,

- JavaSparkContext jsc) {

- super(props, jsc);

- this.sparkSession = SparkSession.builder().config(jsc.getConf()).getOrCreate();

+ HoodieSparkEngineContext context) {

+ super(props, context);

+ this.sparkSession = SparkSession.builder().config(context.getJavaSparkContext().getConf()).getOrCreate();

}

@Override

- public JavaRDD<HoodieRecord> generateInputRecordRDD(String tableName, String sourceBasePath,

+ public JavaRDD<HoodieRecord> generateInputRecord(String tableName, String sourceBasePath,

Review comment:

> rename: generateInputRecords

done

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r484921994

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/index/hbase/BaseHoodieHBaseIndex.java

##########

@@ -0,0 +1,295 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.index.hbase;

+

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.model.HoodieRecordPayload;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.util.ReflectionUtils;

+import org.apache.hudi.config.HoodieHBaseIndexConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.HoodieDependentSystemUnavailableException;

+import org.apache.hudi.index.HoodieIndex;

+import org.apache.hudi.table.HoodieTable;

+

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.hbase.HBaseConfiguration;

+import org.apache.hadoop.hbase.HRegionLocation;

+import org.apache.hadoop.hbase.TableName;

+import org.apache.hadoop.hbase.client.BufferedMutator;

+import org.apache.hadoop.hbase.client.Connection;

+import org.apache.hadoop.hbase.client.ConnectionFactory;

+import org.apache.hadoop.hbase.client.Get;

+import org.apache.hadoop.hbase.client.HTable;

+import org.apache.hadoop.hbase.client.Mutation;

+import org.apache.hadoop.hbase.client.RegionLocator;

+import org.apache.hadoop.hbase.client.Result;

+import org.apache.hadoop.hbase.util.Bytes;

+import org.apache.log4j.LogManager;

+import org.apache.log4j.Logger;

+

+import java.io.IOException;

+import java.io.Serializable;

+import java.util.List;

+

+/**

+ * Hoodie Index implementation backed by HBase.

+ */

+public abstract class BaseHoodieHBaseIndex<T extends HoodieRecordPayload, I, K, O, P> extends HoodieIndex<T, I, K, O, P> {

Review comment:

> are there any code changes here, i.e logic changes?

nothing changed

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-698619431

Alright, taking over the wheel :) thanks @wangxianghu . This is true champion effort!

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-692257956

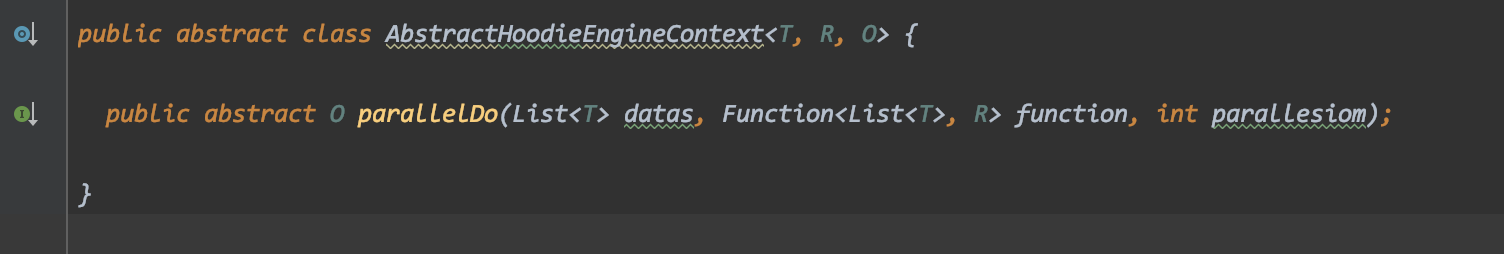

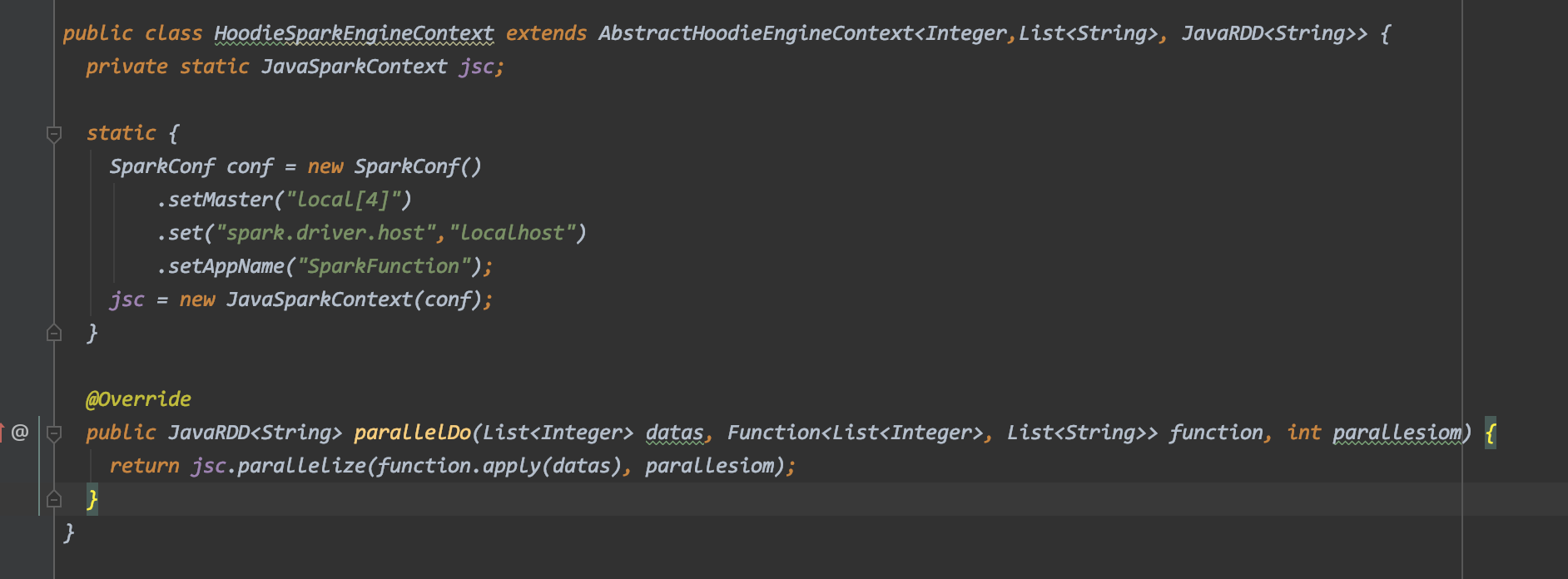

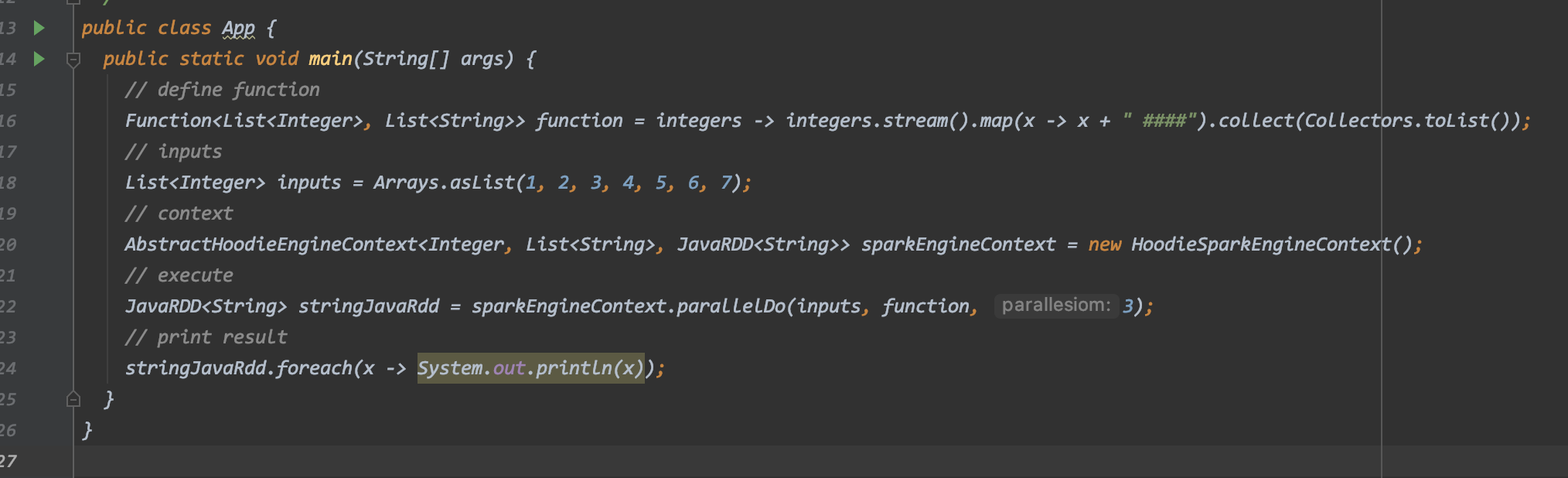

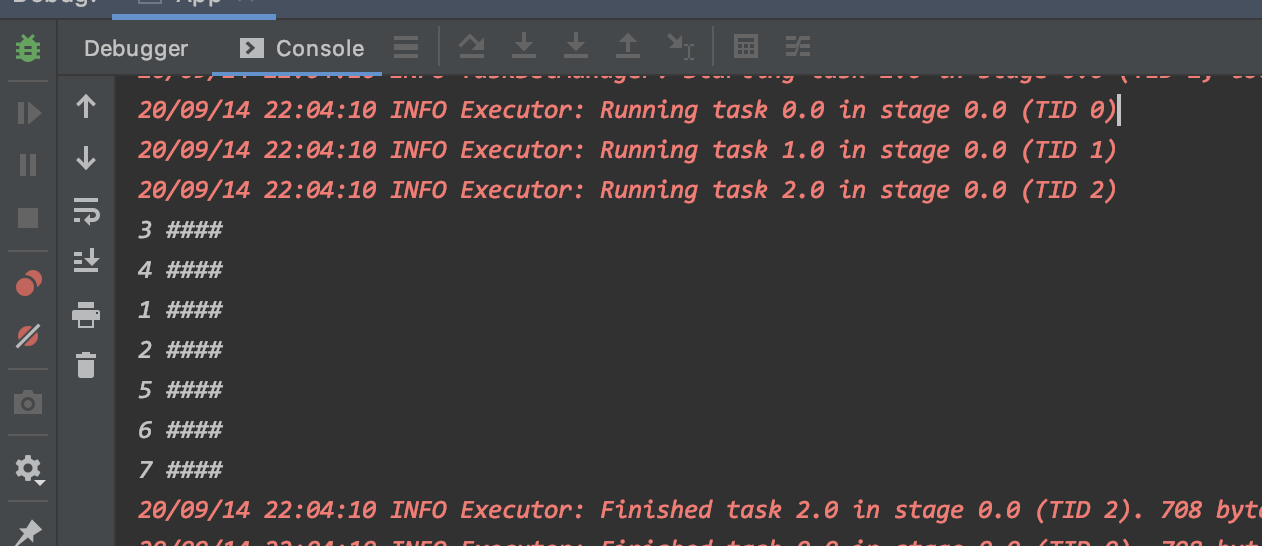

That sounds promising. We cannot easily fix `T,R,O` though right at the class level. it will be different in each setting, right?

We can use generic methods though I think and what I had in mind was something little different

if the method signature can be the following

```

public abstract <I,O> List<O> parallelDo(List<I> data, Function<I, O> func, int parallelism);

```

and for the Spark the implementation

```

public <I,O> List<O> parallelDo(List<I> data, Function<I, O> func, int parallelism) {

return jsc.parallelize(data, parallelism).map(func).collectAsList();

}

```

and the invocation

```

engineContext.parallelDo(Arrays.asList(1,2,3), (a) -> {

return 0;

}, 3);

```

Can you a small Flink implementation as well and confirm this approach will work for Flink as well. Thats another key thing to answer, before we finalize the approach.

Note that

a) some of the code that uses `rdd.mapToPair()` etc before collecting, have to be rewritten using Java Streams. i.e

`engineContext.parallelDo().map()` instead of `jsc.parallelize().map().mapToPair()` currently.

b) Also we should limit the use of parallelDo() to only cases where there are no grouping/aggregations involved. for e.g if we are doing `jsc.parallelize(files, ..).map(record).reduceByKey(..)`, collecting without reducing will lead to OOMs. We can preserve what you are doing currently, for such cases.

But the parallelDo should help up reduce the amount of code broken up (and thus reduce the effort for the flink engine).

@wangxianghu I am happy to shepherd this PR through from this point as well. lmk

cc @yanghua @leesf

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-702318528

@vinothchandar The warn log issue is fixed

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-702222008

@vinothchandar @yanghua @leesf The demo runs well in my local, except the warning `WARN embedded.EmbeddedTimelineService: Unable to find driver bind address from spark config`

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r492449543

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

@wangxianghu functionality wise, you are correct. it can be implemented just using Java. but, we do parallelization of different pieces of code e.g deletion of files in parallel using spark for a reason. It significantly speeds these up, for large tables.

All I am saying is to implement the `HoodieSparkEngineContext#map` like below

```

public <I, O> List<O> map(List<I> data, Function<I, O> func, int parallelism) {

return javaSparkContext.parallelize(data, parallelism).map(func).collect();

}

```

similarly for the other two methods. I don't see any issues with this. do you?

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

Is it possible to take a `java.util.function.Function` and then within `HoodieSparkEngineContext#map` wrap that into a `org.apache.spark.api.java.function.Function` ?

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

In Spark, there is a functional interface defined like this

```

package org.apache.spark.api.java.function;

import java.io.Serializable;

/**

* Base interface for functions whose return types do not create special RDDs. PairFunction and

* DoubleFunction are handled separately, to allow PairRDDs and DoubleRDDs to be constructed

* when mapping RDDs of other types.

*/

@FunctionalInterface

public interface Function<T1, R> extends Serializable {

R call(T1 v1) throws Exception;

}

```

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

when the use passes in a regular lambda, into `rdd.map()`, this is what it gets converted into

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

@wangxianghu This is awesome. Hopefully this can reduce the amount of code you need to write for Flink significantly. `TestMarkerFiles` seems to pass, so guess the serialization etc is working as expected.

We can go ahead with doing more files in this approach and remerge the base/child classes back as much as possible. cc @leesf @yanghua as well in case they have more things to add.

cc @bvaradar as well as FYI

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r492493739

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

> when the use passes in a regular lambda, into `rdd.map()`, this is what it gets converted into

The serializable issue can be solved by introducing a seriableFuncition to replace `java.util.function.Function`

```

public interface SerializableFunction<I, O> extends Serializable {

O call(I v1) throws Exception;

}

```

`HoodieEngineContext` can be

```

public abstract class HoodieEngineContext {

public abstract <I, O> List<O> map(List<I> data, SerializableFunction<I, O> func, int parallelism) ;

}

```

`HoodieSparkEngineContext` can be

```

public class HoodieSparkEngineContext extends HoodieEngineContext {

private static JavaSparkContext jsc;

// tmp

static {

SparkConf conf = new SparkConf()

.setMaster("local[4]")

.set("spark.driver.host","localhost")

.setAppName("HoodieSparkEngineContext");

jsc = new JavaSparkContext(conf);

}

@Override

public <I, O> List<O> map(List<I> data, SerializableFunction<I, O> func, int parallelism) {

return jsc.parallelize(data, parallelism).map(func::call).collect();

}

}

```

this works :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-702217863

> @leesf do you see the following exception? could not understand how you ll get the other one even.

>

> ```

> LOG.info("Starting Timeline service !!");

> Option<String> hostAddr = context.getProperty(EngineProperty.EMBEDDED_SERVER_HOST);

> if (!hostAddr.isPresent()) {

> throw new HoodieException("Unable to find host address to bind timeline server to.");

> }

> timelineServer = Option.of(new EmbeddedTimelineService(context, hostAddr.get(),

> config.getClientSpecifiedViewStorageConfig()));

> ```

>

> Either way, good pointer. the behavior has changed around this a bit actually. So will try and tweak and push a fix

I got this warning too. The code here seems not the same.

```

// Run Embedded Timeline Server

LOG.info("Starting Timeline service !!");

Option<String> hostAddr = context.getProperty(EngineProperty.EMBEDDED_SERVER_HOST);

timelineServer = Option.of(new EmbeddedTimelineService(context, hostAddr.orElse(null),

config.getClientSpecifiedViewStorageConfig()));

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] yanghua commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

yanghua commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-690922454

@leesf Thanks for your awesome work. Can you squash these commits for the subsequent review?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu edited a comment on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu edited a comment on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-692604118

> That sounds promising. We cannot easily fix `T,R,O` though right at the class level. it will be different in each setting, right?

>

> We can use generic methods though I think and what I had in mind was something little different

> if the method signature can be the following

>

> ```

> public abstract <I,O> List<O> parallelDo(List<I> data, Function<I, O> func, int parallelism);

> ```

>

> and for the Spark the implementation

>

> ```

> public <I,O> List<O> parallelDo(List<I> data, Function<I, O> func, int parallelism) {

> return jsc.parallelize(data, parallelism).map(func).collectAsList();

> }

> ```

>

> and the invocation

>

> ```

> engineContext.parallelDo(Arrays.asList(1,2,3), (a) -> {

> return 0;

> }, 3);

> ```

>

> Can you a small Flink implementation as well and confirm this approach will work for Flink as well. Thats another key thing to answer, before we finalize the approach.

>

> Note that

>

> a) some of the code that uses `rdd.mapToPair()` etc before collecting, have to be rewritten using Java Streams. i.e

>

> `engineContext.parallelDo().map()` instead of `jsc.parallelize().map().mapToPair()` currently.

>

> b) Also we should limit the use of parallelDo() to only cases where there are no grouping/aggregations involved. for e.g if we are doing `jsc.parallelize(files, ..).map(record).reduceByKey(..)`, collecting without reducing will lead to OOMs. We can preserve what you are doing currently, for such cases.

>

> But the parallelDo should help up reduce the amount of code broken up (and thus reduce the effort for the flink engine).

>

> @wangxianghu I am happy to shepherd this PR through from this point as well. lmk

>

> cc @yanghua @leesf

Yes, I noticed that problem lately. we should use generic methods. I also agree with the a) and b) you mentioned.

In the `parallelDo` method flink engine can operate the list directly using Java Stream, I have verified that. but there is a problem:

The function used in spark map operator is `org.apache.spark.api.java.function.Function` while what flink can use is `java.util.function.Function` we should align it.

maybe we can use `java.util.function.Function` only, If this way, there is no need to distinguish spark and flink, there both use java stream to implement those kind operations. the generic method could be like this:

```public class HoodieEngineContext {

public <I, O> List<O> parallelDo(List<I> data, Function<I, O> func) {

return data.stream().map(func).collect(Collectors.toList());

}

}```

WDYT?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r492434405

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

> I think we should leave this abstract and let the engines implement this? even for Java. Its better to have a `HoodieJavaEngineContext`. From what I can see, this is not overridden in `HoodieSparkEngineContext` and thus we lose the parallel execution that we currently have with Spark with this change.

as we discussed before, parallelDo model need a function as input parameter, Unfortunately, different engines need different type function, its hard to align them in an abstract parallelDo method. so we agree to use ` java.util.function.Function` as the unified input function. in this way, there is no need to distinguish spark and flink, and the parallelism is not needed too.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-698161234

> @wangxianghu Looks like we have much less class splitting now. I want to try and reduce this further if possible.

> If its alright with you, I can take over from here, make some changes and push another commit on top of yours, to try and get this across the finish line. Want to coordinate so that we are not making parallel changes,

@vinothchandar, Yeah, Of course, you can take over from here, this will greatly facilitate the process

thanks 👍

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-659789500

@Mathieu1124 @leesf can you please share any tests you may have done in your own environment to ensure existing functionality is in tact.. This is a major signal we may not completely get with a PR review

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-691745384

I can help address the remaining feedback. I will push a small diff today/tmrw.

Overall, looks like a reasonable start.

The major feedback I still have is the following

>would a parallelDo(func, parallelism) method in HoodieEngineContext help us avoid a lot of base/child class duplication of logic like this?

Lot of usages are like `jsc.parallelize(list, parallelism).map(func)` , which all require a base-child class now. I am wondering if its easier to take those usages alone and implement as `engineContext.parallelDo(list, func, parallelism)`. This can be the lowest common denominator across Spark/Flink etc. We can avoid splitting a good chunk of classes if we do this IMO. If this is interesting, and we agree, I can try to quantify.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r492262964

##########

File path: hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/common/HoodieSparkEngineContext.java

##########

@@ -0,0 +1,56 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.SparkTaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+import org.apache.spark.api.java.JavaSparkContext;

+import org.apache.spark.sql.SQLContext;

+

+/**

+ * A Spark engine implementation of HoodieEngineContext.

+ */

+public class HoodieSparkEngineContext extends HoodieEngineContext {

Review comment:

can we implement versiosn of `map`, `flatMap`, `forEach` here which use `javaSparkContext.parallelize()` ? It would be good to keep this PR free of any changes in terms of whether we are executing the deletes/lists in parallel or in serial.

##########

File path: hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/table/SparkMarkerFiles.java

##########

@@ -0,0 +1,124 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table;

+

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.LocatedFileStatus;

+import org.apache.hadoop.fs.Path;

+import org.apache.hadoop.fs.RemoteIterator;

+import org.apache.hudi.bifunction.wrapper.ThrowingFunction;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.config.SerializableConfiguration;

+import org.apache.hudi.common.model.IOType;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.exception.HoodieIOException;

+import org.apache.log4j.LogManager;

+import org.apache.log4j.Logger;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Set;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+import static org.apache.hudi.bifunction.wrapper.BiFunctionWrapper.throwingConsumerWrapper;

+import static org.apache.hudi.bifunction.wrapper.BiFunctionWrapper.throwingFlatMapWrapper;

+

+public class SparkMarkerFiles extends BaseMarkerFiles {

+

+ private static final Logger LOG = LogManager.getLogger(SparkMarkerFiles.class);

+

+ public SparkMarkerFiles(HoodieTable table, String instantTime) {

+ super(table, instantTime);

+ }

+

+ public SparkMarkerFiles(FileSystem fs, String basePath, String markerFolderPath, String instantTime) {

+ super(fs, basePath, markerFolderPath, instantTime);

+ }

+

+ @Override

+ public boolean deleteMarkerDir(HoodieEngineContext context, int parallelism) {

+ try {

+ if (fs.exists(markerDirPath)) {

+ FileStatus[] fileStatuses = fs.listStatus(markerDirPath);

+ List<String> markerDirSubPaths = Arrays.stream(fileStatuses)

+ .map(fileStatus -> fileStatus.getPath().toString())

+ .collect(Collectors.toList());

+

+ if (markerDirSubPaths.size() > 0) {

+ SerializableConfiguration conf = new SerializableConfiguration(fs.getConf());

+ context.foreach(markerDirSubPaths, throwingConsumerWrapper(subPathStr -> {

+ Path subPath = new Path(subPathStr);

+ FileSystem fileSystem = subPath.getFileSystem(conf.get());

+ fileSystem.delete(subPath, true);

+ }));

+ }

+

+ boolean result = fs.delete(markerDirPath, true);

+ LOG.info("Removing marker directory at " + markerDirPath);

+ return result;

+ }

+ } catch (IOException ioe) {

+ throw new HoodieIOException(ioe.getMessage(), ioe);

+ }

+ return false;

+ }

+

+ @Override

+ public Set<String> createdAndMergedDataPaths(HoodieEngineContext context, int parallelism) throws IOException {

Review comment:

we are not using parallelism here. This will lead to a perf regression w.r.t master.

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

Also these APIs should take in a `parallelism` parameter, no?

##########

File path: hudi-client/hudi-spark-client/src/main/java/org/apache/hudi/table/SparkMarkerFiles.java

##########

@@ -0,0 +1,124 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table;

+

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.LocatedFileStatus;

+import org.apache.hadoop.fs.Path;

+import org.apache.hadoop.fs.RemoteIterator;

+import org.apache.hudi.bifunction.wrapper.ThrowingFunction;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.config.SerializableConfiguration;

+import org.apache.hudi.common.model.IOType;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.exception.HoodieIOException;

+import org.apache.log4j.LogManager;

+import org.apache.log4j.Logger;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.Arrays;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Set;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+import static org.apache.hudi.bifunction.wrapper.BiFunctionWrapper.throwingConsumerWrapper;

+import static org.apache.hudi.bifunction.wrapper.BiFunctionWrapper.throwingFlatMapWrapper;

+

+public class SparkMarkerFiles extends BaseMarkerFiles {

Review comment:

Given this file is now free of Spark, we dont have the need of breaking these into base and child classes right.

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.common;

+

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.common.config.SerializableConfiguration;

+

+import java.util.List;

+import java.util.function.Consumer;

+import java.util.function.Function;

+import java.util.stream.Collectors;

+import java.util.stream.Stream;

+

+/**

+ * Base class contains the context information needed by the engine at runtime. It will be extended by different

+ * engine implementation if needed.

+ */

+public class HoodieEngineContext {

+ /**

+ * A wrapped hadoop configuration which can be serialized.

+ */

+ private SerializableConfiguration hadoopConf;

+

+ private TaskContextSupplier taskContextSupplier;

+

+ public HoodieEngineContext(SerializableConfiguration hadoopConf, TaskContextSupplier taskContextSupplier) {

+ this.hadoopConf = hadoopConf;

+ this.taskContextSupplier = taskContextSupplier;

+ }

+

+ public SerializableConfiguration getHadoopConf() {

+ return hadoopConf;

+ }

+

+ public TaskContextSupplier getTaskContextSupplier() {

+ return taskContextSupplier;

+ }

+

+ public <I, O> List<O> map(List<I> data, Function<I, O> func) {

Review comment:

I think we should leave this abstract and let the engines implement this? even for Java. Its better to have a `HoodieJavaEngineContext`. From what I can see, this is not overridden in `HoodieSparkEngineContext` and thus we lose the parallel execution that we currently have with Spark with this change.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#issuecomment-701414946

rebasing against master now, its a pretty tricky one, given #2048 has introduced a new action and made changes on top of WriteClient

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on a change in pull request #1827: [HUDI-1089] Refactor hudi-client to support multi-engine

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on a change in pull request #1827:

URL: https://github.com/apache/hudi/pull/1827#discussion_r492872504

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/common/HoodieEngineContext.java

##########

@@ -0,0 +1,66 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.