Posted to notifications@couchdb.apache.org by GitBox <gi...@apache.org> on 2020/01/09 14:10:29 UTC

[GitHub] [couchdb] fne opened a new issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

fne opened a new issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430

We run CouchDB as a cluster of three pods in an AKS (Azure Kubernetes Service) environment.

## Description

Once CouchDB starts consuming RAM, it does not release it.

## Steps to Reproduce

## Expected Behaviour

CouchDB should release RAM when possible.

## Your Environment

AKS

* CouchDB version used: 2.3.1

* Browser name and version:

* Operating system and version: Linux

## Additional Context

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: users@infra.apache.org

With regards,

Apache Git Services

[GitHub] [couchdb] wohali commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

Posted by GitBox <gi...@apache.org>.

wohali commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430#issuecomment-598967954

This doesn't sound like a CouchDB issue, but an intra-node networking issue, which is known to cause problems with CouchDB clustering in 2.x and 3.x. We'd recommend you retry your setup with the 3.0.0 image as well, if you can.

Going to close for now, but if an issue that's specific to CouchDB can be isolated, we're always happy to reopen.


[GitHub] [couchdb] fne commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

Posted by GitBox <gi...@apache.org>.

fne commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430#issuecomment-586178919

Hello,

We have these results:

`/_node/_local/_stats/fabric`:

```
{
  "worker": {
    "timeouts": {
      "value": 2,
      "type": "counter",
      "desc": "number of worker timeouts"
    }
  },
  "open_shard": {
    "timeouts": {
      "value": 5,
      "type": "counter",
      "desc": "number of open shard timeouts"
    }
  },
  "read_repairs": {
    "success": {
      "value": 403,
      "type": "counter",
      "desc": "number of successful read repair operations"
    },
    "failure": {
      "value": 0,
      "type": "counter",
      "desc": "number of failed read repair operations"
    }
  },
  "doc_update": {
    "errors": {
      "value": 2039,
      "type": "counter",
      "desc": "number of document update errors"
    },
    "mismatched_errors": {
      "value": 0,
      "type": "counter",
      "desc": "number of document update errors with multiple error types"
    },
    "write_quorum_errors": {
      "value": 82,
      "type": "counter",
      "desc": "number of write quorum errors"
    }
  }
}
```

`/_membership`:

```
{
  "all_nodes": [
    "couchdb@couchdb-prod-couchdb-0.couchdb-prod-couchdb.prod.svc.cluster.local",
    "couchdb@couchdb-prod-couchdb-1.couchdb-prod-couchdb.prod.svc.cluster.local",
    "couchdb@couchdb-prod-couchdb-2.couchdb-prod-couchdb.prod.svc.cluster.local"
  ],
  "cluster_nodes": [
    "couchdb@couchdb-prod-couchdb-0.couchdb-prod-couchdb.prod.svc.cluster.local",
    "couchdb@couchdb-prod-couchdb-1.couchdb-prod-couchdb.prod.svc.cluster.local",
    "couchdb@couchdb-prod-couchdb-2.couchdb-prod-couchdb.prod.svc.cluster.local"
  ]
}
```

`/_node/_local/_stats/rexi`:

```
{
  "buffered": {
    "value": 897,
    "type": "counter",
    "desc": "number of rexi messages buffered"
  },
  "down": {
    "value": 4207,
    "type": "counter",
    "desc": "number of rexi_DOWN messages handled"
  },
  "dropped": {
    "value": 0,
    "type": "counter",
    "desc": "number of rexi messages dropped from buffers"
  },
  "streams": {
    "timeout": {
      "init_stream": {
        "value": 0,
        "type": "counter",
        "desc": "number of rexi stream initialization timeouts"
      },
      "stream": {
        "value": 0,
        "type": "counter",
        "desc": "number of rexi stream timeouts"
      },
      "wait_for_ack": {
        "value": 0,
        "type": "counter",
        "desc": "number of rexi stream timeouts while waiting for acks"
      }
    }
  }
}
```

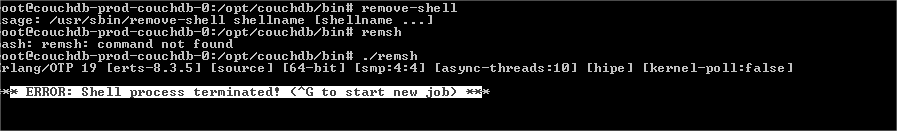

But when we run `remsh`, we get this error message:


[GitHub] [couchdb] fne commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

Posted by GitBox <gi...@apache.org>.

fne commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430#issuecomment-584017826

I also see these errors, and CouchDB restarts every day:

```
stderr,"[notice] 2020-02-10T05:38:21.730591Z couchdb@couchdb-prod-couchdb-0.couchdb-prod-couchdb.prod.svc.cluster.local <0.356.0> -------- couch_replicator_clustering : cluster unstable ","2020-02-10T05:38:21.819Z","aks-nodepool1-33780697-2",,,54f99bab651526d6b30c03971db90dc9f445713a34320a6592213271cc655077
stderr,"[notice] 2020-02-10T05:38:21.803308Z couchdb@couchdb-prod-couchdb-0.couchdb-prod-couchdb.prod.svc.cluster.local <0.277.0> -------- rexi_buffer : cluster unstable ","2020-02-10T05:38:21.819Z","aks-nodepool1-33780697-2",,,54f99bab651526d6b30c03971db90dc9f445713a34320a6592213271cc655077
stderr,"[notice] 2020-02-10T05:38:21.814623Z couchdb@couchdb-prod-couchdb-0.couchdb-prod-couchdb.prod.svc.cluster.local <0.274.0> -------- rexi_server_mon : cluster unstable ","2020-02-10T05:38:21.819Z","aks-nodepool1-33780697-2",,,54f99bab651526d6b30c03971db90dc9f445713a34320a6592213271cc655077
stderr,"[notice] 2020-02-10T05:38:21.803005Z couchdb@couchdb-prod-couchdb-0.couchdb-prod-couchdb.prod.svc.cluster.local <0.278.0> -------- rexi_server_mon : cluster unstable ","2020-02-10T05:38:21.819Z","aks-nodepool1-33780697-2",,,54f99bab651526d6b30c03971db90dc9f445713a34320a6592213271cc655077
stderr,"[notice] 2020-02-10T05:38:21.815623Z couchdb@couchdb-prod-couchdb-0.couchdb-prod-couchdb.prod.svc.cluster.local <0.273.0> -------- rexi_server : cluster unstable ","2020-02-10T05:38:21.819Z","aks-nodepool1-33780697-2",,,54f99bab651526d6b30c03971db90dc9f445713a34320a6592213271cc655077
stderr,"[notice] 2020-02-10T05:38:21.811468Z couchdb@couchdb-prod-couchdb-0.couchdb-prod-couchdb.prod.svc.cluster.local <0.428.0> -------- Stopping replicator db changes listener <0.7246.0> ","2020-02-10T05:38:21.819Z","aks-nodepool1-33780697-2",,,54f99bab651526d6b30c03971db90dc9f445713a34320a6592213271cc655077
```

Is this a bad configuration? We have a cluster of 3 nodes with 4 GB of RAM dedicated to each CouchDB node.


[GitHub] [couchdb] fne commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

Posted by GitBox <gi...@apache.org>.

fne commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430#issuecomment-586569507

Our configuration:

```
## clusterSize is the initial size of the CouchDB cluster.
clusterSize: 3

## If allowAdminParty is enabled the cluster will start up without any database
## administrator account; i.e., all users will be granted administrative
## access. Otherwise, the system will look for a Secret called
## <ReleaseName>-couchdb containing `adminUsername`, `adminPassword` and
## `cookieAuthSecret` keys. See the `createAdminSecret` flag.
## ref: https://kubernetes.io/docs/concepts/configuration/secret/
allowAdminParty: false

## If createAdminSecret is enabled a Secret called <ReleaseName>-couchdb will
## be created containing auto-generated credentials. Users who prefer to set
## these values themselves have a couple of options:
##
## 1) The `adminUsername`, `adminPassword`, and `cookieAuthSecret` can be
## defined directly in the chart's values. Note that all of a chart's values
## are currently stored in plaintext in a ConfigMap in the tiller namespace.
##
## 2) This flag can be disabled and a Secret with the required keys can be
## created ahead of time.
createAdminSecret: true

adminUsername:
adminPassword:
# cookieAuthSecret: neither_is_this

## The storage volume used by each Pod in the StatefulSet. If a
## persistentVolume is not enabled, the Pods will use `emptyDir` ephemeral
## local storage. Setting the storageClass attribute to "-" disables dynamic
## provisioning of Persistent Volumes; leaving it unset will invoke the default
## provisioner.
persistentVolume:
  enabled: true
  accessModes:
    - ReadWriteOnce
  size: 500Gi
  storageClass: managed-premium

## The CouchDB image
image:
  repository: couchdb
  tag: 2.3.0
  pullPolicy: IfNotPresent

## Sidecar that connects the individual Pods into a cluster
helperImage:
  repository: kocolosk/couchdb-statefulset-assembler
  tag: 1.1.0
  pullPolicy: IfNotPresent

## CouchDB is happy to spin up cluster nodes in parallel, but if you encounter
## problems you can try setting podManagementPolicy to the StatefulSet default
## `OrderedReady`
podManagementPolicy: Parallel

## To better tolerate Node failures, we can prevent Kubernetes scheduler from
## assigning more than one Pod of CouchDB StatefulSet per Node using podAntiAffinity.
affinity:
  # podAntiAffinity:
  #   requiredDuringSchedulingIgnoredDuringExecution:
  #     - labelSelector:
  #         matchExpressions:
  #           - key: "app"
  #             operator: In
  #             values:
  #               - couchdb
  #       topologyKey: "kubernetes.io/hostname"

## A StatefulSet requires a headless Service to establish the stable network
## identities of the Pods, and that Service is created automatically by this
## chart without any additional configuration. The Service block below refers
## to a second Service that governs how clients connect to the CouchDB cluster.
service:
  enabled: true
  type: ClusterIP
  externalPort: 5984

## An Ingress resource can provide name-based virtual hosting and TLS
## termination among other things for CouchDB deployments which are accessed
## from outside the Kubernetes cluster.
## ref: https://kubernetes.io/docs/concepts/services-networking/ingress/
ingress:
  enabled: false
  hosts:
    - chart-example.local
  annotations:
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  tls:
    # Secrets must be manually created in the namespace.
    # - secretName: chart-example-tls
    #   hosts:
    #     - chart-example.local

## Optional resource requests and limits for the CouchDB container
## ref: http://kubernetes.io/docs/user-guide/compute-resources/
resources:
  # requests:
  #   cpu: 100m
  #   memory: 128Mi
  limits:
    cpu: 2000m
    memory: 4096Mi

## erlangFlags is a map that is passed to the Erlang VM as flags using the
## ERL_FLAGS env. `name` and `setcookie` flags are minimally required to
## establish connectivity between cluster nodes.
## ref: http://erlang.org/doc/man/erl.html#init_flags
erlangFlags:
  name: couchdb
  setcookie: monster

## couchdbConfig will override default CouchDB configuration settings.
## The contents of this map are reformatted into a .ini file laid down
## by a ConfigMap object.
## ref: http://docs.couchdb.org/en/latest/config/index.html
couchdbConfig:
  # cluster:
  #   q: 8 # Create 8 shards for each database
  chttpd:
    bind_address: any
    # chttpd.require_valid_user disables all the anonymous requests to the port
    # 5984 when set to true.
    require_valid_user: true
  httpd:
    WWW-Authenticate: Basic realm="administrator"
  # compaction
  compactions:
    _default: [{db_fragmentation, "50%"}, {view_fragmentation, "50%"}]
    licensees: [{db_fragmentation, "50%"}, {view_fragmentation, "50%"}]
    licensees_qualities: [{db_fragmentation, "50%"}, {view_fragmentation, "50%"}]
    licensees_diplomas: [{db_fragmentation, "50%"}, {view_fragmentation, "50%"}]
    structures: [{db_fragmentation, "50%"}, {view_fragmentation, "50%"}]
```


[GitHub] [couchdb] nickva commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

Posted by GitBox <gi...@apache.org>.

nickva commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430#issuecomment-584196840

Those messages might indicate that the connections between nodes are getting interrupted.

Make sure you have a stable, relatively low-latency connection between your cluster nodes.

For additional debugging, you could check these areas:

1) Does `http $DB/_membership` return the expected set of nodes in both the `all_nodes` and `cluster_nodes` fields?

An example on my machine:

```
$ http $DB/_membership
{
    "all_nodes": [
        "node1@127.0.0.1",
        "node2@127.0.0.1",
        "node3@127.0.0.1"
    ],
    "cluster_nodes": [
        "node1@127.0.0.1",
        "node2@127.0.0.1",
        "node3@127.0.0.1"
    ]
}
```
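The comparison in step 1 can also be scripted when checking several nodes. Below is a minimal Python sketch, assuming you have already saved the response body of `_membership` (for example from the `http` call above); the helper name `check_membership` is hypothetical and not part of CouchDB. It treats `cluster_nodes` as the configured members and `all_nodes` as the nodes this node currently knows about, which is our reading of the CouchDB docs, and reports any mismatch:

```python
import json


def check_membership(body: str) -> dict:
    """Compare the two node lists in a /_membership response body.

    Assumption: cluster_nodes = configured cluster members,
    all_nodes = nodes this node currently knows about / is connected to.
    """
    data = json.loads(body)
    all_nodes = set(data.get("all_nodes", []))
    cluster_nodes = set(data.get("cluster_nodes", []))
    return {
        # Configured members that this node does not currently see.
        "missing": sorted(cluster_nodes - all_nodes),
        # Nodes this node sees that are not configured members.
        "unexpected": sorted(all_nodes - cluster_nodes),
    }
```

On a healthy cluster, such as the example output above, both lists should come back empty; a node showing up under `missing` would point at the kind of inter-node disconnect discussed in this thread.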

2) If it does, inspect `_stats/rexi` and `_stats/fabric` on some of the nodes:

```
http $DB/_node/_local/_stats/fabric
{
    "doc_update": {
        "errors": {
            "desc": "number of document update errors",
            "type": "counter",
            "value": 0
        },
        "mismatched_errors": {
            "desc": "number of document update errors with multiple error types",
            "type": "counter",
            "value": 0
        },
        "write_quorum_errors": {
            "desc": "number of write quorum errors",
            "type": "counter",
            "value": 0
        }
    },
    "open_shard": {
        "timeouts": {
            "desc": "number of open shard timeouts",
            "type": "counter",
            "value": 0
        }
    },
    "read_repairs": {
        "failure": {
            "desc": "number of failed read repair operations",
            "type": "counter",
            "value": 0
        },
        "success": {
            "desc": "number of successful read repair operations",
            "type": "counter",
            "value": 0
        }
    },
    "worker": {
        "timeouts": {
            "desc": "number of worker timeouts",
            "type": "counter",
            "value": 0
        }
    }
}
```

```
http $DB/_node/_local/_stats/rexi
{
    "buffered": {
        "desc": "number of rexi messages buffered",
        "type": "counter",
        "value": 0
    },
    "down": {
        "desc": "number of rexi_DOWN messages handled",
        "type": "counter",
        "value": 0
    },
    "dropped": {
        "desc": "number of rexi messages dropped from buffers",
        "type": "counter",
        "value": 0
    },
    "streams": {
        "timeout": {
            "init_stream": {
                "desc": "number of rexi stream initialization timeouts",
                "type": "counter",
                "value": 0
            },
            "stream": {
                "desc": "number of rexi stream timeouts",
                "type": "counter",
                "value": 0
            },
            "wait_for_ack": {
                "desc": "number of rexi stream timeouts while waiting for acks",
                "type": "counter",
                "value": 0
            }
        }
    }
}
```
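When comparing stats like these across nodes, it can help to list only the counters that are non-zero. Here is a rough Python sketch, assuming you pass in the parsed JSON of a `_stats/fabric` or `_stats/rexi` response; `nonzero_counters` is a hypothetical helper, not part of CouchDB. A leaf is recognised by having both a `type` and a `value` key, as in the outputs above:

```python
def nonzero_counters(stats, prefix=()):
    """Yield (dotted_path, value) for every non-zero counter in a _stats subtree."""
    if not isinstance(stats, dict):
        return
    if "type" in stats and "value" in stats:
        # Leaf metric: report plain counters whose value is non-zero.
        if stats["type"] == "counter" and stats["value"]:
            yield ".".join(prefix), stats["value"]
        return
    # Inner node: walk children in a stable (sorted) order.
    for key, child in sorted(stats.items()):
        yield from nonzero_counters(child, prefix + (key,))
```

For example, `list(nonzero_counters(json.loads(body)))` on the fabric stats @fne posted would surface `doc_update.errors`, `doc_update.write_quorum_errors`, `open_shard.timeouts`, `read_repairs.success` and `worker.timeouts`, while on the healthy example above it returns an empty list.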

3) If you have `remsh` installed (in later releases it is included in the release's `bin/` folder), you can use it to connect to the Erlang VM on one of the nodes and run `mem3:compare_nodelists()`:

```
$ remsh
Erlang/OTP 21 [erts-10.3.5.3] [source] [64-bit] [smp:12:12] [ds:12:12:10] [async-threads:1] [hipe]

Eshell V10.3.5.3  (abort with ^G)
(node1@127.0.0.1)1> mem3:compare_nodelists().
[{non_member_nodes,[]},
 {bad_nodes,[]},
 {{cluster_nodes,['node1@127.0.0.1','node2@127.0.0.1',
                  'node3@127.0.0.1']},
  ['node1@127.0.0.1','node2@127.0.0.1','node3@127.0.0.1']}]
```


[GitHub] [couchdb] nickva commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

Posted by GitBox <gi...@apache.org>.

nickva commented on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430#issuecomment-586653684

Thanks for the stats and the additional info, @fne.

Based on the `fabric` and `rexi` stats, it looks like there are intermittent connection errors between nodes in the cluster: some nodes seem to get disconnected from the others. I was looking specifically at the `rexi_DOWN` messages and the write quorum errors.

`rexi` is an internal component that performs inter-node RPC.

These disconnects also show up in the original logs as `rexi_buffer : cluster unstable` lines. Those are expected during the initial cluster startup and shutdown.
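To see how often these notices occur outside startup and shutdown, one could count them per reporting component in exported log lines. A minimal Python sketch, assuming lines shaped like the ones quoted earlier in this thread (`<pid> -------- <component> : cluster unstable`); the regex and the `count_unstable` name are our own assumptions, not a CouchDB tool:

```python
import re
from collections import Counter

# Assumed shape: "... <pid> -------- <component> : cluster unstable".
UNSTABLE = re.compile(r"-{8} (\w+) : cluster unstable")


def count_unstable(lines):
    """Count 'cluster unstable' notices per reporting component."""
    counts = Counter()
    for line in lines:
        match = UNSTABLE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts
```

Bucketing the same lines by timestamp would then show whether the bursts line up only with restarts, or also appear while the cluster is supposed to be running normally.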

Monitor when those error counts start going up and how that relates to RAM usage. In other words, check whether increases in connection errors coincide with increases in RAM usage.
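One way to do this is to snapshot a `_stats` endpoint periodically and record which counters grew between samples, next to the pod's memory usage (for example from `kubectl top pod`, if metrics-server is installed). A minimal Python sketch of the diffing step, assuming two parsed snapshots from the same endpoint; `counter_deltas` is a hypothetical helper. Stats are held in memory, so they likely reset when a node restarts; this sketch simply ignores decreases:

```python
def counter_deltas(before, after, prefix=""):
    """Return {dotted_path: increase} for counters that grew between two _stats snapshots."""
    deltas = {}
    for key, new in after.items():
        if not isinstance(new, dict):
            continue
        old = before.get(key)
        if not isinstance(old, dict):
            old = {}  # metric absent from the earlier snapshot
        path = f"{prefix}.{key}" if prefix else key
        if "type" in new and "value" in new:
            # Leaf metric: only plain counters are diffed; decreases (e.g. after a restart) are ignored.
            if new["type"] == "counter":
                increase = new["value"] - old.get("value", 0)
                if increase > 0:
                    deltas[path] = increase
        else:
            deltas.update(counter_deltas(old, new, path))
    return deltas
```

Logging the result of each comparison with a timestamp makes it straightforward to line up, say, a jump in `down` (the `rexi_DOWN` counter) with a step up in memory.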

Also, there was a bug in the 2.3.1 release where workers for requests such as `_changes` feeds were sometimes not cleaned up after the client connection ended. Is your RAM usage increase correlated with specific types of requests?

Another experiment, for debugging purposes, would be to start a single-node cluster and see whether it experiences the same issue.


[GitHub] [couchdb] wohali closed issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

Posted by GitBox <gi...@apache.org>.

wohali closed issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430


[GitHub] [couchdb] fne edited a comment on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

Posted by GitBox <gi...@apache.org>.

fne edited a comment on issue #2430: Running CouchDB in container and then the couchdb pod doesn't release RAM consumed

URL: https://github.com/apache/couchdb/issues/2430#issuecomment-586569507

