You are viewing a plain text version of this content. The canonical link for it is here.

Posted to jira@kafka.apache.org by GitBox <gi...@apache.org> on 2020/10/09 05:58:17 UTC

[GitHub] [kafka] chia7712 opened a new pull request #9401: KAFKA-9628 Replace Produce request with automated protocol

chia7712 opened a new pull request #9401:

URL: https://github.com/apache/kafka/pull/9401

issue: https://issues.apache.org/jira/browse/KAFKA-9628

this PR is a part of KAFKA-9628.

### Committer Checklist (excluded from commit message)

- [ ] Verify design and implementation

- [ ] Verify test coverage and CI build status

- [ ] Verify documentation (including upgrade notes)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r514903001

##########

File path: clients/src/main/resources/common/message/ProduceRequest.json

##########

@@ -33,21 +33,21 @@

"validVersions": "0-8",

"flexibleVersions": "none",

"fields": [

- { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "0+", "entityType": "transactionalId",

+ { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "3+", "ignorable": true, "entityType": "transactionalId",

Review comment:

you are right. will Roger that.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-729870471

Great work @chia7712 ! With this and #9547, we have converted all of the protocols, which was a huge community effort!

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

dajac commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r526178616

##########

File path: clients/src/test/java/org/apache/kafka/common/requests/RequestResponseTest.java

##########

@@ -1730,7 +1768,6 @@ private DeleteTopicsResponse createDeleteTopicsResponse() {

private InitProducerIdRequest createInitPidRequest() {

InitProducerIdRequestData requestData = new InitProducerIdRequestData()

- .setTransactionalId(null)

Review comment:

We should keep this one.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r519081195

##########

File path: clients/src/main/resources/common/message/ProduceRequest.json

##########

@@ -33,21 +33,21 @@

"validVersions": "0-8",

"flexibleVersions": "none",

"fields": [

- { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "0+", "entityType": "transactionalId",

+ { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "3+", "ignorable": true, "entityType": "transactionalId",

"about": "The transactional ID, or null if the producer is not transactional." },

{ "name": "Acks", "type": "int16", "versions": "0+",

"about": "The number of acknowledgments the producer requires the leader to have received before considering a request complete. Allowed values: 0 for no acknowledgments, 1 for only the leader and -1 for the full ISR." },

- { "name": "TimeoutMs", "type": "int32", "versions": "0+",

+ { "name": "Timeout", "type": "int32", "versions": "0+",

Review comment:

oh, I trace the code again. You are right. The name is not serialized.

I will revise the field names as you suggested.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-718828042

@chia7712 One thing that would be useful is running the producer-performance test, just to make sure the the performance is inline. Might be worth checking flame graphs as well.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r514467652

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java

##########

@@ -210,65 +142,42 @@ public String toString() {

}

}

+ /**

+ * We have to copy acks, timeout, transactionalId and partitionSizes from data since data maybe reset to eliminate

+ * the reference to ByteBuffer but those metadata are still useful.

+ */

private final short acks;

private final int timeout;

private final String transactionalId;

-

- private final Map<TopicPartition, Integer> partitionSizes;

-

+ // visible for testing

+ final Map<TopicPartition, Integer> partitionSizes;

+ private boolean hasTransactionalRecords = false;

+ private boolean hasIdempotentRecords = false;

// This is set to null by `clearPartitionRecords` to prevent unnecessary memory retention when a produce request is

// put in the purgatory (due to client throttling, it can take a while before the response is sent).

// Care should be taken in methods that use this field.

- private volatile Map<TopicPartition, MemoryRecords> partitionRecords;

- private boolean hasTransactionalRecords = false;

- private boolean hasIdempotentRecords = false;

-

- private ProduceRequest(short version, short acks, int timeout, Map<TopicPartition, MemoryRecords> partitionRecords, String transactionalId) {

- super(ApiKeys.PRODUCE, version);

- this.acks = acks;

- this.timeout = timeout;

-

- this.transactionalId = transactionalId;

- this.partitionRecords = partitionRecords;

- this.partitionSizes = createPartitionSizes(partitionRecords);

+ private volatile ProduceRequestData data;

- for (MemoryRecords records : partitionRecords.values()) {

- setFlags(records);

- }

- }

-

- private static Map<TopicPartition, Integer> createPartitionSizes(Map<TopicPartition, MemoryRecords> partitionRecords) {

- Map<TopicPartition, Integer> result = new HashMap<>(partitionRecords.size());

- for (Map.Entry<TopicPartition, MemoryRecords> entry : partitionRecords.entrySet())

- result.put(entry.getKey(), entry.getValue().sizeInBytes());

- return result;

- }

-

- public ProduceRequest(Struct struct, short version) {

+ public ProduceRequest(ProduceRequestData produceRequestData, short version) {

super(ApiKeys.PRODUCE, version);

- partitionRecords = new HashMap<>();

- for (Object topicDataObj : struct.getArray(TOPIC_DATA_KEY_NAME)) {

- Struct topicData = (Struct) topicDataObj;

- String topic = topicData.get(TOPIC_NAME);

- for (Object partitionResponseObj : topicData.getArray(PARTITION_DATA_KEY_NAME)) {

- Struct partitionResponse = (Struct) partitionResponseObj;

- int partition = partitionResponse.get(PARTITION_ID);

- MemoryRecords records = (MemoryRecords) partitionResponse.getRecords(RECORD_SET_KEY_NAME);

- setFlags(records);

- partitionRecords.put(new TopicPartition(topic, partition), records);

- }

- }

- partitionSizes = createPartitionSizes(partitionRecords);

- acks = struct.getShort(ACKS_KEY_NAME);

- timeout = struct.getInt(TIMEOUT_KEY_NAME);

- transactionalId = struct.getOrElse(NULLABLE_TRANSACTIONAL_ID, null);

- }

-

- private void setFlags(MemoryRecords records) {

- Iterator<MutableRecordBatch> iterator = records.batches().iterator();

- MutableRecordBatch entry = iterator.next();

- hasIdempotentRecords = hasIdempotentRecords || entry.hasProducerId();

- hasTransactionalRecords = hasTransactionalRecords || entry.isTransactional();

+ this.data = produceRequestData;

+ this.data.topicData().forEach(topicProduceData -> topicProduceData.partitions()

+ .forEach(partitionProduceData -> {

+ MemoryRecords records = MemoryRecords.readableRecords(partitionProduceData.records());

+ Iterator<MutableRecordBatch> iterator = records.batches().iterator();

+ MutableRecordBatch entry = iterator.next();

+ hasIdempotentRecords = hasIdempotentRecords || entry.hasProducerId();

Review comment:

Nevermind, I guess we have to do it here because the server needs to validate the request received from the client.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

dajac commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r521902936

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java

##########

@@ -194,7 +106,27 @@ private ProduceRequest build(short version, boolean validate) {

ProduceRequest.validateRecords(version, records);

}

}

- return new ProduceRequest(version, acks, timeout, partitionRecords, transactionalId);

+

+ List<ProduceRequestData.TopicProduceData> tpd = partitionRecords

Review comment:

I wonder if we could avoid all of this by requesting the `Sender` to create `TopicProduceData` directly. It seems that the `Sender` creates `partitionRecords` right before calling the builder: https://github.com/apache/kafka/blob/trunk/clients/src/main/java/org/apache/kafka/clients/producer/internals/Sender.java#L734. So we may be able to directly construct the expect data structure there.

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java

##########

@@ -323,27 +222,30 @@ public String toString(boolean verbose) {

@Override

public ProduceResponse getErrorResponse(int throttleTimeMs, Throwable e) {

/* In case the producer doesn't actually want any response */

- if (acks == 0)

- return null;

-

+ if (acks == 0) return null;

Errors error = Errors.forException(e);

- Map<TopicPartition, ProduceResponse.PartitionResponse> responseMap = new HashMap<>();

- ProduceResponse.PartitionResponse partitionResponse = new ProduceResponse.PartitionResponse(error);

-

- for (TopicPartition tp : partitions())

- responseMap.put(tp, partitionResponse);

-

- return new ProduceResponse(responseMap, throttleTimeMs);

+ return new ProduceResponse(new ProduceResponseData()

+ .setResponses(partitionSizes().keySet().stream().collect(Collectors.groupingBy(TopicPartition::topic)).entrySet()

+ .stream()

+ .map(entry -> new ProduceResponseData.TopicProduceResponse()

+ .setPartitionResponses(entry.getValue().stream().map(p -> new ProduceResponseData.PartitionProduceResponse()

+ .setIndex(p.partition())

+ .setRecordErrors(Collections.emptyList())

+ .setBaseOffset(INVALID_OFFSET)

+ .setLogAppendTimeMs(RecordBatch.NO_TIMESTAMP)

+ .setLogStartOffset(INVALID_OFFSET)

+ .setErrorMessage(e.getMessage())

+ .setErrorCode(error.code()))

+ .collect(Collectors.toList()))

+ .setName(entry.getKey()))

+ .collect(Collectors.toList()))

+ .setThrottleTimeMs(throttleTimeMs));

Review comment:

It seems that we could create `ProduceResponseData` based on `data`. This avoids the cost of the group-by operation and the cost of constructing `partitionSizes`. That should bring the benchmark inline with what we had before. Would this work?

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceResponse.java

##########

@@ -204,118 +75,79 @@ public ProduceResponse(Map<TopicPartition, PartitionResponse> responses) {

* @param throttleTimeMs Time in milliseconds the response was throttled

*/

public ProduceResponse(Map<TopicPartition, PartitionResponse> responses, int throttleTimeMs) {

- this.responses = responses;

- this.throttleTimeMs = throttleTimeMs;

+ this(new ProduceResponseData()

+ .setResponses(responses.entrySet()

Review comment:

As we care of performances here, I wonder if we should try not using the stream api here.

Another trick would be to turn `TopicProduceResponse` in the `ProduceResponse` schema into a map by setting `"mapKey": true` for the topic name. This would allow to iterate over `responses`, get or create `TopicProduceResponse` for the topic, and add the `PartitionProduceResponse` into it.

It may be worth trying different implementation to compare their performances.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r526187166

##########

File path: clients/src/main/java/org/apache/kafka/clients/producer/internals/TransactionManager.java

##########

@@ -580,7 +580,6 @@ synchronized void bumpIdempotentEpochAndResetIdIfNeeded() {

if (currentState != State.INITIALIZING && !hasProducerId()) {

transitionTo(State.INITIALIZING);

InitProducerIdRequestData requestData = new InitProducerIdRequestData()

- .setTransactionalId(null)

Review comment:

Sorry that I did not test them on my local before pushing :(

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

dajac commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r526368983

##########

File path: clients/src/main/resources/common/message/ProduceRequest.json

##########

@@ -33,21 +33,21 @@

"validVersions": "0-8",

"flexibleVersions": "none",

"fields": [

- { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "0+", "entityType": "transactionalId",

+ { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "3+", "default": "null", "ignorable": true, "entityType": "transactionalId",

Review comment:

Indeed, that makes sense. My bad!

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r526314648

##########

File path: clients/src/main/resources/common/message/ProduceRequest.json

##########

@@ -33,21 +33,21 @@

"validVersions": "0-8",

"flexibleVersions": "none",

"fields": [

- { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "0+", "entityType": "transactionalId",

+ { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "3+", "default": "null", "ignorable": true, "entityType": "transactionalId",

Review comment:

> So we may as well fail fast.

That make sense. will revert this change.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r519251131

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceResponse.java

##########

@@ -204,118 +75,78 @@ public ProduceResponse(Map<TopicPartition, PartitionResponse> responses) {

* @param throttleTimeMs Time in milliseconds the response was throttled

*/

public ProduceResponse(Map<TopicPartition, PartitionResponse> responses, int throttleTimeMs) {

- this.responses = responses;

- this.throttleTimeMs = throttleTimeMs;

+ this(new ProduceResponseData()

+ .setResponses(responses.entrySet()

+ .stream()

+ .collect(Collectors.groupingBy(e -> e.getKey().topic()))

+ .entrySet()

+ .stream()

+ .map(topicData -> new ProduceResponseData.TopicProduceResponse()

+ .setTopic(topicData.getKey())

+ .setPartitionResponses(topicData.getValue()

+ .stream()

+ .map(p -> new ProduceResponseData.PartitionProduceResponse()

+ .setPartition(p.getKey().partition())

+ .setBaseOffset(p.getValue().baseOffset)

+ .setLogStartOffset(p.getValue().logStartOffset)

+ .setLogAppendTime(p.getValue().logAppendTime)

+ .setErrorMessage(p.getValue().errorMessage)

+ .setErrorCode(p.getValue().error.code())

+ .setRecordErrors(p.getValue().recordErrors

+ .stream()

+ .map(e -> new ProduceResponseData.BatchIndexAndErrorMessage()

+ .setBatchIndex(e.batchIndex)

+ .setBatchIndexErrorMessage(e.message))

+ .collect(Collectors.toList())))

+ .collect(Collectors.toList())))

+ .collect(Collectors.toList()))

+ .setThrottleTimeMs(throttleTimeMs));

}

/**

- * Constructor from a {@link Struct}.

+ * Visible for testing.

*/

- public ProduceResponse(Struct struct) {

- responses = new HashMap<>();

- for (Object topicResponse : struct.getArray(RESPONSES_KEY_NAME)) {

- Struct topicRespStruct = (Struct) topicResponse;

- String topic = topicRespStruct.get(TOPIC_NAME);

-

- for (Object partResponse : topicRespStruct.getArray(PARTITION_RESPONSES_KEY_NAME)) {

- Struct partRespStruct = (Struct) partResponse;

- int partition = partRespStruct.get(PARTITION_ID);

- Errors error = Errors.forCode(partRespStruct.get(ERROR_CODE));

- long offset = partRespStruct.getLong(BASE_OFFSET_KEY_NAME);

- long logAppendTime = partRespStruct.hasField(LOG_APPEND_TIME_KEY_NAME) ?

- partRespStruct.getLong(LOG_APPEND_TIME_KEY_NAME) : RecordBatch.NO_TIMESTAMP;

- long logStartOffset = partRespStruct.getOrElse(LOG_START_OFFSET_FIELD, INVALID_OFFSET);

-

- List<RecordError> recordErrors = Collections.emptyList();

- if (partRespStruct.hasField(RECORD_ERRORS_KEY_NAME)) {

- Object[] recordErrorsArray = partRespStruct.getArray(RECORD_ERRORS_KEY_NAME);

- if (recordErrorsArray.length > 0) {

- recordErrors = new ArrayList<>(recordErrorsArray.length);

- for (Object indexAndMessage : recordErrorsArray) {

- Struct indexAndMessageStruct = (Struct) indexAndMessage;

- recordErrors.add(new RecordError(

- indexAndMessageStruct.getInt(BATCH_INDEX_KEY_NAME),

- indexAndMessageStruct.get(BATCH_INDEX_ERROR_MESSAGE_FIELD)

- ));

- }

- }

- }

-

- String errorMessage = partRespStruct.getOrElse(ERROR_MESSAGE_FIELD, null);

- TopicPartition tp = new TopicPartition(topic, partition);

- responses.put(tp, new PartitionResponse(error, offset, logAppendTime, logStartOffset, recordErrors, errorMessage));

- }

- }

- this.throttleTimeMs = struct.getOrElse(THROTTLE_TIME_MS, DEFAULT_THROTTLE_TIME);

- }

-

@Override

- protected Struct toStruct(short version) {

- Struct struct = new Struct(ApiKeys.PRODUCE.responseSchema(version));

-

- Map<String, Map<Integer, PartitionResponse>> responseByTopic = CollectionUtils.groupPartitionDataByTopic(responses);

- List<Struct> topicDatas = new ArrayList<>(responseByTopic.size());

- for (Map.Entry<String, Map<Integer, PartitionResponse>> entry : responseByTopic.entrySet()) {

- Struct topicData = struct.instance(RESPONSES_KEY_NAME);

- topicData.set(TOPIC_NAME, entry.getKey());

- List<Struct> partitionArray = new ArrayList<>();

- for (Map.Entry<Integer, PartitionResponse> partitionEntry : entry.getValue().entrySet()) {

- PartitionResponse part = partitionEntry.getValue();

- short errorCode = part.error.code();

- // If producer sends ProduceRequest V3 or earlier, the client library is not guaranteed to recognize the error code

- // for KafkaStorageException. In this case the client library will translate KafkaStorageException to

- // UnknownServerException which is not retriable. We can ensure that producer will update metadata and retry

- // by converting the KafkaStorageException to NotLeaderOrFollowerException in the response if ProduceRequest version <= 3

- if (errorCode == Errors.KAFKA_STORAGE_ERROR.code() && version <= 3)

- errorCode = Errors.NOT_LEADER_OR_FOLLOWER.code();

- Struct partStruct = topicData.instance(PARTITION_RESPONSES_KEY_NAME)

- .set(PARTITION_ID, partitionEntry.getKey())

- .set(ERROR_CODE, errorCode)

- .set(BASE_OFFSET_KEY_NAME, part.baseOffset);

- partStruct.setIfExists(LOG_APPEND_TIME_KEY_NAME, part.logAppendTime);

- partStruct.setIfExists(LOG_START_OFFSET_FIELD, part.logStartOffset);

-

- if (partStruct.hasField(RECORD_ERRORS_KEY_NAME)) {

- List<Struct> recordErrors = Collections.emptyList();

- if (!part.recordErrors.isEmpty()) {

- recordErrors = new ArrayList<>();

- for (RecordError indexAndMessage : part.recordErrors) {

- Struct indexAndMessageStruct = partStruct.instance(RECORD_ERRORS_KEY_NAME)

- .set(BATCH_INDEX_KEY_NAME, indexAndMessage.batchIndex)

- .set(BATCH_INDEX_ERROR_MESSAGE_FIELD, indexAndMessage.message);

- recordErrors.add(indexAndMessageStruct);

- }

- }

- partStruct.set(RECORD_ERRORS_KEY_NAME, recordErrors.toArray());

- }

-

- partStruct.setIfExists(ERROR_MESSAGE_FIELD, part.errorMessage);

- partitionArray.add(partStruct);

- }

- topicData.set(PARTITION_RESPONSES_KEY_NAME, partitionArray.toArray());

- topicDatas.add(topicData);

- }

- struct.set(RESPONSES_KEY_NAME, topicDatas.toArray());

- struct.setIfExists(THROTTLE_TIME_MS, throttleTimeMs);

+ public Struct toStruct(short version) {

+ return data.toStruct(version);

+ }

- return struct;

+ public ProduceResponseData data() {

+ return this.data;

}

+ /**

+ * this method is used by testing only.

+ * TODO: refactor the tests which are using this method and then remove this method from production code.

Review comment:

https://issues.apache.org/jira/browse/KAFKA-10697

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r525683594

##########

File path: clients/src/main/java/org/apache/kafka/clients/producer/internals/Sender.java

##########

@@ -560,13 +562,24 @@ private void handleProduceResponse(ClientResponse response, Map<TopicPartition,

log.trace("Received produce response from node {} with correlation id {}", response.destination(), correlationId);

// if we have a response, parse it

if (response.hasResponse()) {

+ // TODO: Sender should exercise PartitionProduceResponse rather than ProduceResponse.PartitionResponse

+ // https://issues.apache.org/jira/browse/KAFKA-10696

Review comment:

copy that

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-728812062

@hachikuji @ijuma It seems to me the root cause of regression is caused by the conversion between old data struct and auto-generated protocol. As we all care for performance, I address more improvements (they were viewed as follow-up before) in this PR.

There are 5 possible improvements.

1. - [x] git rid of struct allocation

1. - [x] rewrite RequestUtils.hasTransactionalRecords & RequestUtils.hasIdempotentRecords to avoid duplicate loop and record instantiation (thanks to @hachikuji)

1. - [x] Sender#sendProduceRequest should use auto-generated protocol directly (thanks to @dajac)

1. - [ ] Replace ProduceResponse.PartitionResponse by auto-generated PartitionProduceResponse (https://issues.apache.org/jira/browse/KAFKA-10709)

1. - [ ] KafkaApis#handleProduceRequest should use auto-generated protocol (https://issues.apache.org/jira/browse/KAFKA-10730)

The recent commits include only 1, 2, and 3 since they touch fewer code. I also re-run all perf tests and all tests cases show better throughput with current PR even if 4. and 5. are not included.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-724862848

For what it's worth, I think we'll get back whatever we lose here by taking `Struct` out of the serialization path.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r514819326

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java

##########

@@ -210,65 +142,42 @@ public String toString() {

}

}

+ /**

+ * We have to copy acks, timeout, transactionalId and partitionSizes from data since data maybe reset to eliminate

+ * the reference to ByteBuffer but those metadata are still useful.

+ */

private final short acks;

private final int timeout;

private final String transactionalId;

-

- private final Map<TopicPartition, Integer> partitionSizes;

-

+ // visible for testing

+ final Map<TopicPartition, Integer> partitionSizes;

+ private boolean hasTransactionalRecords = false;

+ private boolean hasIdempotentRecords = false;

// This is set to null by `clearPartitionRecords` to prevent unnecessary memory retention when a produce request is

// put in the purgatory (due to client throttling, it can take a while before the response is sent).

// Care should be taken in methods that use this field.

- private volatile Map<TopicPartition, MemoryRecords> partitionRecords;

- private boolean hasTransactionalRecords = false;

- private boolean hasIdempotentRecords = false;

-

- private ProduceRequest(short version, short acks, int timeout, Map<TopicPartition, MemoryRecords> partitionRecords, String transactionalId) {

- super(ApiKeys.PRODUCE, version);

- this.acks = acks;

- this.timeout = timeout;

-

- this.transactionalId = transactionalId;

- this.partitionRecords = partitionRecords;

- this.partitionSizes = createPartitionSizes(partitionRecords);

+ private volatile ProduceRequestData data;

- for (MemoryRecords records : partitionRecords.values()) {

- setFlags(records);

- }

- }

-

- private static Map<TopicPartition, Integer> createPartitionSizes(Map<TopicPartition, MemoryRecords> partitionRecords) {

- Map<TopicPartition, Integer> result = new HashMap<>(partitionRecords.size());

- for (Map.Entry<TopicPartition, MemoryRecords> entry : partitionRecords.entrySet())

- result.put(entry.getKey(), entry.getValue().sizeInBytes());

- return result;

- }

-

- public ProduceRequest(Struct struct, short version) {

+ public ProduceRequest(ProduceRequestData produceRequestData, short version) {

super(ApiKeys.PRODUCE, version);

- partitionRecords = new HashMap<>();

- for (Object topicDataObj : struct.getArray(TOPIC_DATA_KEY_NAME)) {

- Struct topicData = (Struct) topicDataObj;

- String topic = topicData.get(TOPIC_NAME);

- for (Object partitionResponseObj : topicData.getArray(PARTITION_DATA_KEY_NAME)) {

- Struct partitionResponse = (Struct) partitionResponseObj;

- int partition = partitionResponse.get(PARTITION_ID);

- MemoryRecords records = (MemoryRecords) partitionResponse.getRecords(RECORD_SET_KEY_NAME);

- setFlags(records);

- partitionRecords.put(new TopicPartition(topic, partition), records);

- }

- }

- partitionSizes = createPartitionSizes(partitionRecords);

- acks = struct.getShort(ACKS_KEY_NAME);

- timeout = struct.getInt(TIMEOUT_KEY_NAME);

- transactionalId = struct.getOrElse(NULLABLE_TRANSACTIONAL_ID, null);

- }

-

- private void setFlags(MemoryRecords records) {

- Iterator<MutableRecordBatch> iterator = records.batches().iterator();

- MutableRecordBatch entry = iterator.next();

- hasIdempotentRecords = hasIdempotentRecords || entry.hasProducerId();

- hasTransactionalRecords = hasTransactionalRecords || entry.isTransactional();

+ this.data = produceRequestData;

+ this.data.topicData().forEach(topicProduceData -> topicProduceData.partitions()

+ .forEach(partitionProduceData -> {

+ MemoryRecords records = MemoryRecords.readableRecords(partitionProduceData.records());

+ Iterator<MutableRecordBatch> iterator = records.batches().iterator();

+ MutableRecordBatch entry = iterator.next();

+ hasIdempotentRecords = hasIdempotentRecords || entry.hasProducerId();

Review comment:

clients module has some tests which depends on it so I moves the helper to ```RequestUtils```.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-728545356

It would be helpful if someone can reproduce the tests I did to make sure it is not something funky in my environment.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r526032716

##########

File path: clients/src/test/java/org/apache/kafka/clients/NetworkClientTest.java

##########

@@ -154,8 +155,11 @@ public void testClose() {

client.poll(1, time.milliseconds());

assertTrue("The client should be ready", client.isReady(node, time.milliseconds()));

- ProduceRequest.Builder builder = ProduceRequest.Builder.forCurrentMagic((short) 1, 1000,

- Collections.emptyMap());

+ ProduceRequest.Builder builder = ProduceRequest.forCurrentMagic(new ProduceRequestData()

+ .setTopicData(new ProduceRequestData.TopicProduceDataCollection())

+ .setAcks((short) 1)

+ .setTimeoutMs(1000)

+ .setTransactionalId(null));

Review comment:

make sense to me. will remove this redundant setter

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] ijuma commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

ijuma commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-728974716

Thanks @chia7712. Can you check that the @hachikuji's perf test doesn't result in significantly increased latency?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r526039872

##########

File path: clients/src/main/resources/common/message/ProduceRequest.json

##########

@@ -33,21 +33,21 @@

"validVersions": "0-8",

"flexibleVersions": "none",

"fields": [

- { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "0+", "entityType": "transactionalId",

+ { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "3+", "default": "null", "entityType": "transactionalId",

Review comment:

you are right.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r526280042

##########

File path: clients/src/main/resources/common/message/ProduceRequest.json

##########

@@ -33,21 +33,21 @@

"validVersions": "0-8",

"flexibleVersions": "none",

"fields": [

- { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "0+", "entityType": "transactionalId",

+ { "name": "TransactionalId", "type": "string", "versions": "3+", "nullableVersions": "3+", "default": "null", "ignorable": true, "entityType": "transactionalId",

Review comment:

It seems to me ignorable should be true in order to keep behavior consistency. With "ignore=false", setting value to ```TransactionalId``` can cause ```UnsupportedVersionException``` if the version is small than 3. The previous code (https://github.com/apache/kafka/blob/trunk/clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java#L286) does not cause such exception.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 edited a comment on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 edited a comment on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-728812062

@hachikuji @ijuma It seems to me the root cause of regression is caused by the conversion between old data struct and auto-generated protocol. As we all care for performance, I address more improvements (they were viewed as follow-up before) in this PR.

There are 5 possible improvements.

1. - [x] git rid of struct allocation (thanks to @hachikuji)

1. - [x] rewrite RequestUtils.hasTransactionalRecords & RequestUtils.hasIdempotentRecords to avoid duplicate loop and record instantiation (thanks to @hachikuji)

1. - [x] Sender#sendProduceRequest should use auto-generated protocol directly (thanks to @dajac)

1. - [ ] [client side] Replace ProduceResponse.PartitionResponse by auto-generated PartitionProduceResponse (https://issues.apache.org/jira/browse/KAFKA-10709)

1. - [ ] [server side] KafkaApis#handleProduceRequest should use auto-generated protocol (https://issues.apache.org/jira/browse/KAFKA-10730)

The recent commits include only 1, 2, and 3 since they touch fewer code. I also re-run all perf tests ~and all tests cases show better throughput with current PR even though 4. and 5. are not included.~

Most of test cases show good improvements. By the contrast, the other tests (single partition + single producer) get either insignificant improvement or small regression. However, it seems to me they can get good improvement by remaining tasks (4 and 5)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r522670294

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceResponse.java

##########

@@ -204,118 +75,79 @@ public ProduceResponse(Map<TopicPartition, PartitionResponse> responses) {

* @param throttleTimeMs Time in milliseconds the response was throttled

*/

public ProduceResponse(Map<TopicPartition, PartitionResponse> responses, int throttleTimeMs) {

- this.responses = responses;

- this.throttleTimeMs = throttleTimeMs;

+ this(new ProduceResponseData()

+ .setResponses(responses.entrySet()

Review comment:

I have addressed your suggestion and it does improve the performance.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji edited a comment on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji edited a comment on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-728383115

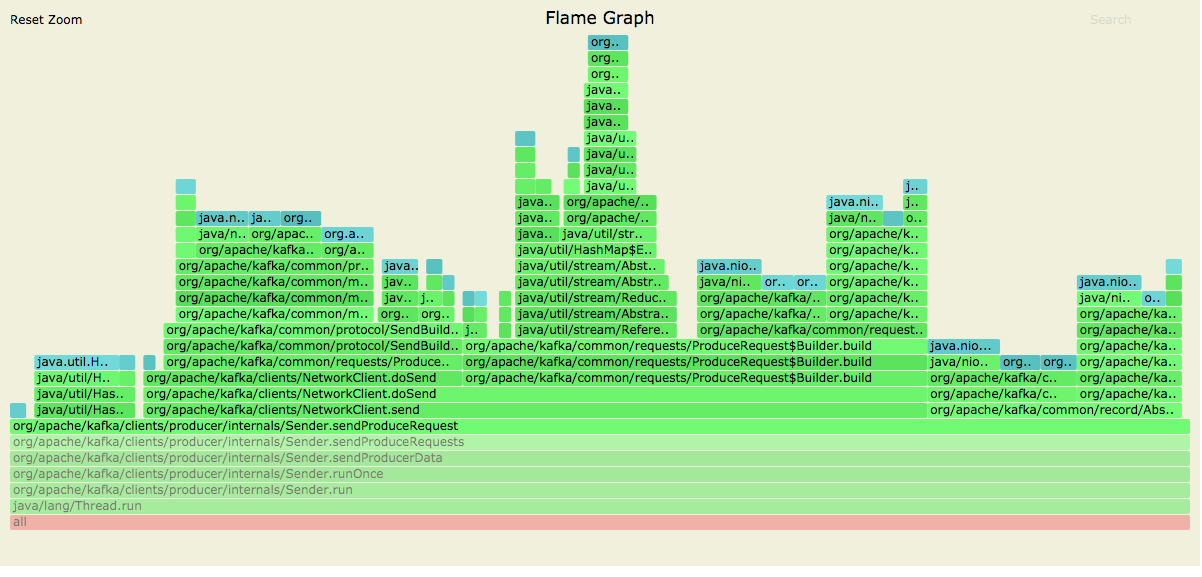

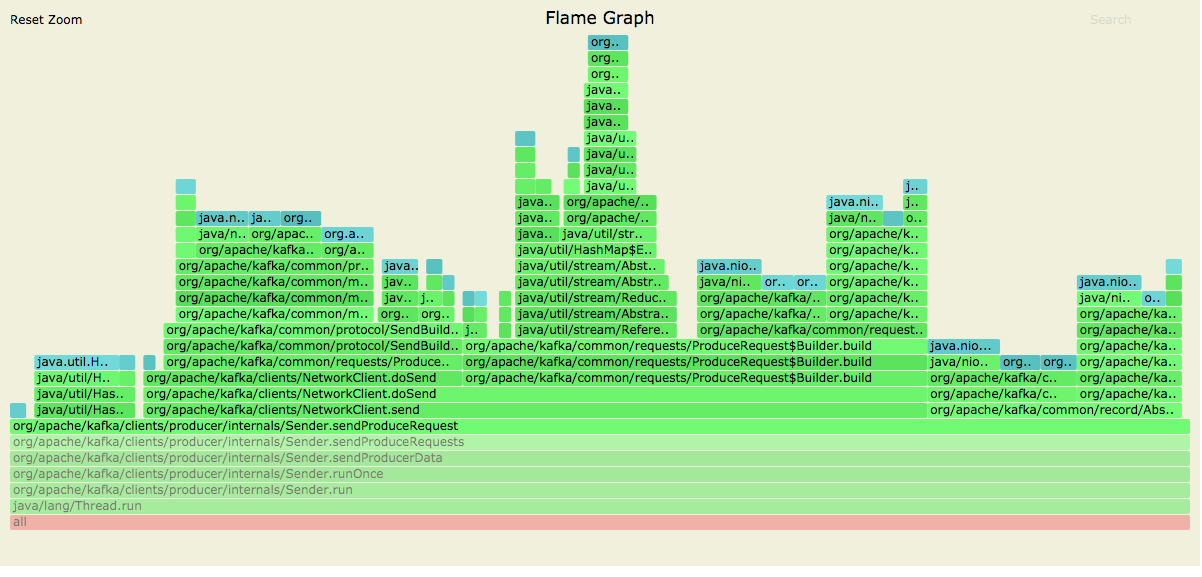

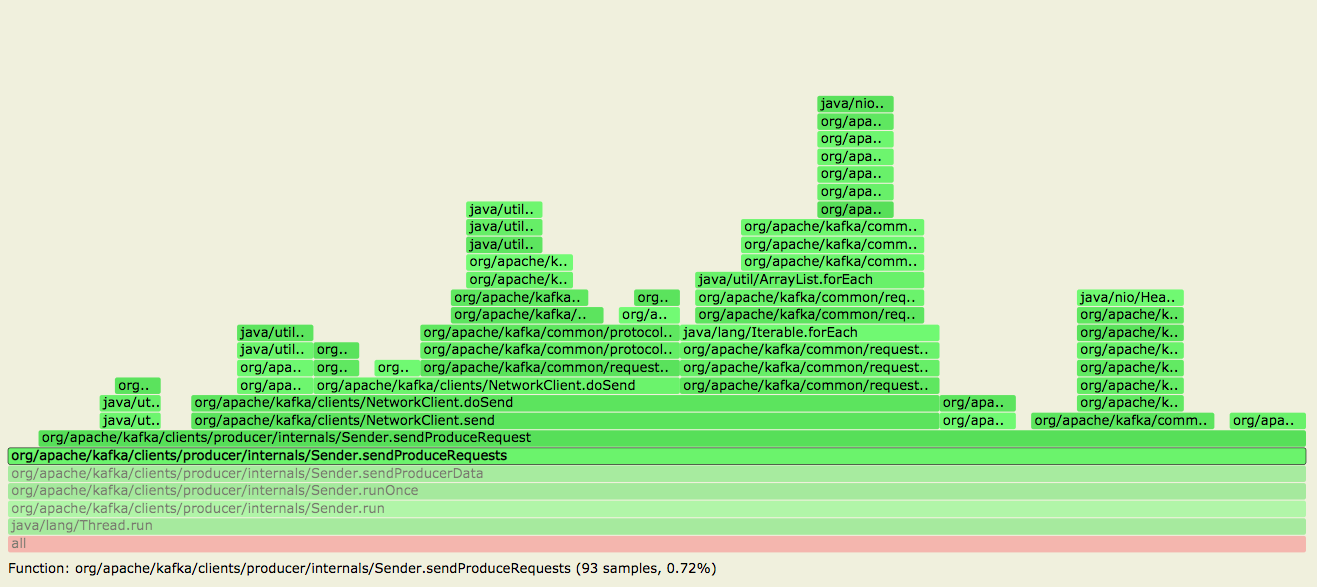

Posting allocation flame graphs from the producer before and after this patch:

So we succeeded in getting rid of the extra allocations in the network layer!

I generated these graphs using the producer performance test writing to a topic with 10 partitions on a cluster with a single broker.

```

> bin/kafka-producer-perf-test.sh --topic foo --num-records 250000000 --throughput -1 --record-size 256 --producer-props bootstrap.servers=localhost:9092

```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r521927723

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java

##########

@@ -194,7 +106,27 @@ private ProduceRequest build(short version, boolean validate) {

ProduceRequest.validateRecords(version, records);

}

}

- return new ProduceRequest(version, acks, timeout, partitionRecords, transactionalId);

+

+ List<ProduceRequestData.TopicProduceData> tpd = partitionRecords

Review comment:

nice suggestion.

Could I address this in follow-up? I had filed jira (KAFKA-10696 ~ KAFKA-10698) to have ```Sender``` use auto-generated protocol directly.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r521957221

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java

##########

@@ -194,7 +106,27 @@ private ProduceRequest build(short version, boolean validate) {

ProduceRequest.validateRecords(version, records);

}

}

- return new ProduceRequest(version, acks, timeout, partitionRecords, transactionalId);

+

+ List<ProduceRequestData.TopicProduceData> tpd = partitionRecords

Review comment:

oh. The jira I created does not cover this issue. open a new ticket (https://issues.apache.org/jira/browse/KAFKA-10709)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 edited a comment on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 edited a comment on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-728812062

@hachikuji @ijuma It seems to me the root cause of regression is caused by the conversion between old data struct and auto-generated protocol. As we all care for performance, I address more improvements (they were viewed as follow-up before) in this PR.

There are 5 possible improvements.

1. - [x] git rid of struct allocation (thanks to @hachikuji)

1. - [x] rewrite RequestUtils.hasTransactionalRecords & RequestUtils.hasIdempotentRecords to avoid duplicate loop and record instantiation (thanks to @hachikuji)

1. - [x] Sender#sendProduceRequest should use auto-generated protocol directly (thanks to @dajac)

1. - [ ] Replace ProduceResponse.PartitionResponse by auto-generated PartitionProduceResponse (https://issues.apache.org/jira/browse/KAFKA-10709)

1. - [ ] KafkaApis#handleProduceRequest should use auto-generated protocol (https://issues.apache.org/jira/browse/KAFKA-10730)

The recent commits include only 1, 2, and 3 since they touch fewer code. I also re-run all perf tests ~and all tests cases show better throughput with current PR even though 4. and 5. are not included.~

Most of test cases show good improvements. By the contrast, the other tests (single partition + single producer) get insignificant improvement. However, it seems to me they can get good benefited by remaining improvement (4 and 5)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r521931887

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java

##########

@@ -323,27 +222,30 @@ public String toString(boolean verbose) {

@Override

public ProduceResponse getErrorResponse(int throttleTimeMs, Throwable e) {

/* In case the producer doesn't actually want any response */

- if (acks == 0)

- return null;

-

+ if (acks == 0) return null;

Errors error = Errors.forException(e);

- Map<TopicPartition, ProduceResponse.PartitionResponse> responseMap = new HashMap<>();

- ProduceResponse.PartitionResponse partitionResponse = new ProduceResponse.PartitionResponse(error);

-

- for (TopicPartition tp : partitions())

- responseMap.put(tp, partitionResponse);

-

- return new ProduceResponse(responseMap, throttleTimeMs);

+ return new ProduceResponse(new ProduceResponseData()

+ .setResponses(partitionSizes().keySet().stream().collect(Collectors.groupingBy(TopicPartition::topic)).entrySet()

+ .stream()

+ .map(entry -> new ProduceResponseData.TopicProduceResponse()

+ .setPartitionResponses(entry.getValue().stream().map(p -> new ProduceResponseData.PartitionProduceResponse()

+ .setIndex(p.partition())

+ .setRecordErrors(Collections.emptyList())

+ .setBaseOffset(INVALID_OFFSET)

+ .setLogAppendTimeMs(RecordBatch.NO_TIMESTAMP)

+ .setLogStartOffset(INVALID_OFFSET)

+ .setErrorMessage(e.getMessage())

+ .setErrorCode(error.code()))

+ .collect(Collectors.toList()))

+ .setName(entry.getKey()))

+ .collect(Collectors.toList()))

+ .setThrottleTimeMs(throttleTimeMs));

Review comment:

I used ```data``` to generate ProduceResponseData. However, the ```data``` may be null when create ProduceResponseData. That is to say, it require if-else to handle null ```data``` in ```getErrorResponse```. It seems to me that is a bit ugly so not sure whether it is worth doing that.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-723875285

> Can we summarize the regression here for a real world workload?

@ijuma I have attached benchmark result to description. I will loop more benchmark later.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r525586697

##########

File path: clients/src/main/java/org/apache/kafka/clients/producer/internals/Sender.java

##########

@@ -560,13 +562,24 @@ private void handleProduceResponse(ClientResponse response, Map<TopicPartition,

log.trace("Received produce response from node {} with correlation id {}", response.destination(), correlationId);

// if we have a response, parse it

if (response.hasResponse()) {

+ // TODO: Sender should exercise PartitionProduceResponse rather than ProduceResponse.PartitionResponse

+ // https://issues.apache.org/jira/browse/KAFKA-10696

Review comment:

nit: since we have the jira for tracking, can we remove the TODO? A few more of these in the PR.

##########

File path: clients/src/test/java/org/apache/kafka/common/requests/ProduceResponseTest.java

##########

@@ -100,16 +113,92 @@ public void produceResponseRecordErrorsTest() {

ProduceResponse response = new ProduceResponse(responseData);

Struct struct = response.toStruct(ver);

assertEquals("Should use schema version " + ver, ApiKeys.PRODUCE.responseSchema(ver), struct.schema());

- ProduceResponse.PartitionResponse deserialized = new ProduceResponse(struct).responses().get(tp);

+ ProduceResponse.PartitionResponse deserialized = new ProduceResponse(new ProduceResponseData(struct, ver)).responses().get(tp);

if (ver >= 8) {

assertEquals(1, deserialized.recordErrors.size());

assertEquals(3, deserialized.recordErrors.get(0).batchIndex);

assertEquals("Record error", deserialized.recordErrors.get(0).message);

assertEquals("Produce failed", deserialized.errorMessage);

} else {

assertEquals(0, deserialized.recordErrors.size());

- assertEquals(null, deserialized.errorMessage);

+ assertNull(deserialized.errorMessage);

}

}

}

+

+ /**

+ * the schema in this test is from previous code and the automatic protocol should be compatible to previous schema.

+ */

+ @Test

+ public void testCompatibility() {

Review comment:

I think this test might be overkill. We haven't done anything like this for the other converted APIs. It's a little similar to `MessageTest.testRequestSchemas`, which was useful verifying the generated schemas when the message generator was being written. Soon `testRequestSchemas` will be redundant, so I guess we have to decide if we just trust the generator and our compatibility system tests or if we want some other canonical representation. Thoughts?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r526029843

##########

File path: clients/src/main/java/org/apache/kafka/clients/producer/internals/Sender.java

##########

@@ -560,13 +562,24 @@ private void handleProduceResponse(ClientResponse response, Map<TopicPartition,

log.trace("Received produce response from node {} with correlation id {}", response.destination(), correlationId);

// if we have a response, parse it

if (response.hasResponse()) {

+ // Sender should exercise PartitionProduceResponse rather than ProduceResponse.PartitionResponse

+ // https://issues.apache.org/jira/browse/KAFKA-10696

ProduceResponse produceResponse = (ProduceResponse) response.responseBody();

- for (Map.Entry<TopicPartition, ProduceResponse.PartitionResponse> entry : produceResponse.responses().entrySet()) {

- TopicPartition tp = entry.getKey();

- ProduceResponse.PartitionResponse partResp = entry.getValue();

+ produceResponse.data().responses().forEach(r -> r.partitionResponses().forEach(p -> {

+ TopicPartition tp = new TopicPartition(r.name(), p.index());

+ ProduceResponse.PartitionResponse partResp = new ProduceResponse.PartitionResponse(

+ Errors.forCode(p.errorCode()),

+ p.baseOffset(),

+ p.logAppendTimeMs(),

+ p.logStartOffset(),

+ p.recordErrors()

+ .stream()

+ .map(e -> new ProduceResponse.RecordError(e.batchIndex(), e.batchIndexErrorMessage()))

+ .collect(Collectors.toList()),

Review comment:

The reason we got rid of streaming APIs is because it produces extra collection (groupBy). However, in this case we have to create a new collection to carry non-auto-generated data (and https://issues.apache.org/jira/browse/KAFKA-10696 will eliminate such conversion) even if we get rid of stream APIs. Hence, it should be fine to keep stream APIs here.

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceResponse.java

##########

@@ -203,119 +77,88 @@ public ProduceResponse(Map<TopicPartition, PartitionResponse> responses) {

* @param responses Produced data grouped by topic-partition

* @param throttleTimeMs Time in milliseconds the response was throttled

*/

+ @Deprecated

public ProduceResponse(Map<TopicPartition, PartitionResponse> responses, int throttleTimeMs) {

- this.responses = responses;

- this.throttleTimeMs = throttleTimeMs;

+ this(toData(responses, throttleTimeMs));

}

- /**

- * Constructor from a {@link Struct}.

- */

- public ProduceResponse(Struct struct) {

- responses = new HashMap<>();

- for (Object topicResponse : struct.getArray(RESPONSES_KEY_NAME)) {

- Struct topicRespStruct = (Struct) topicResponse;

- String topic = topicRespStruct.get(TOPIC_NAME);

-

- for (Object partResponse : topicRespStruct.getArray(PARTITION_RESPONSES_KEY_NAME)) {

- Struct partRespStruct = (Struct) partResponse;

- int partition = partRespStruct.get(PARTITION_ID);

- Errors error = Errors.forCode(partRespStruct.get(ERROR_CODE));

- long offset = partRespStruct.getLong(BASE_OFFSET_KEY_NAME);

- long logAppendTime = partRespStruct.hasField(LOG_APPEND_TIME_KEY_NAME) ?

- partRespStruct.getLong(LOG_APPEND_TIME_KEY_NAME) : RecordBatch.NO_TIMESTAMP;

- long logStartOffset = partRespStruct.getOrElse(LOG_START_OFFSET_FIELD, INVALID_OFFSET);

-

- List<RecordError> recordErrors = Collections.emptyList();

- if (partRespStruct.hasField(RECORD_ERRORS_KEY_NAME)) {

- Object[] recordErrorsArray = partRespStruct.getArray(RECORD_ERRORS_KEY_NAME);

- if (recordErrorsArray.length > 0) {

- recordErrors = new ArrayList<>(recordErrorsArray.length);

- for (Object indexAndMessage : recordErrorsArray) {

- Struct indexAndMessageStruct = (Struct) indexAndMessage;

- recordErrors.add(new RecordError(

- indexAndMessageStruct.getInt(BATCH_INDEX_KEY_NAME),

- indexAndMessageStruct.get(BATCH_INDEX_ERROR_MESSAGE_FIELD)

- ));

- }

- }

- }

+ @Override

+ protected Send toSend(String destination, ResponseHeader header, short apiVersion) {

+ return SendBuilder.buildResponseSend(destination, header, this.data, apiVersion);

+ }

- String errorMessage = partRespStruct.getOrElse(ERROR_MESSAGE_FIELD, null);

- TopicPartition tp = new TopicPartition(topic, partition);

- responses.put(tp, new PartitionResponse(error, offset, logAppendTime, logStartOffset, recordErrors, errorMessage));

+ private static ProduceResponseData toData(Map<TopicPartition, PartitionResponse> responses, int throttleTimeMs) {

+ ProduceResponseData data = new ProduceResponseData().setThrottleTimeMs(throttleTimeMs);

+ responses.forEach((tp, response) -> {

+ ProduceResponseData.TopicProduceResponse tpr = data.responses().find(tp.topic());

+ if (tpr == null) {

+ tpr = new ProduceResponseData.TopicProduceResponse().setName(tp.topic());

+ data.responses().add(tpr);

}

- }

- this.throttleTimeMs = struct.getOrElse(THROTTLE_TIME_MS, DEFAULT_THROTTLE_TIME);

+ tpr.partitionResponses()

+ .add(new ProduceResponseData.PartitionProduceResponse()

+ .setIndex(tp.partition())

+ .setBaseOffset(response.baseOffset)

+ .setLogStartOffset(response.logStartOffset)

+ .setLogAppendTimeMs(response.logAppendTime)

+ .setErrorMessage(response.errorMessage)

+ .setErrorCode(response.error.code())

+ .setRecordErrors(response.recordErrors

+ .stream()

+ .map(e -> new ProduceResponseData.BatchIndexAndErrorMessage()

+ .setBatchIndex(e.batchIndex)

+ .setBatchIndexErrorMessage(e.message))

+ .collect(Collectors.toList())));

Review comment:

ditto

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r521927723

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceRequest.java

##########

@@ -194,7 +106,27 @@ private ProduceRequest build(short version, boolean validate) {

ProduceRequest.validateRecords(version, records);

}

}

- return new ProduceRequest(version, acks, timeout, partitionRecords, transactionalId);

+

+ List<ProduceRequestData.TopicProduceData> tpd = partitionRecords

Review comment:

nice suggestion.

Could I address this in follow-up? I had filed jira (KAFKA-10696 ~ KAFKA-10698) to have ```Sender`` use auto-generated protocol directly.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r520740954

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceResponse.java

##########

@@ -204,118 +75,78 @@ public ProduceResponse(Map<TopicPartition, PartitionResponse> responses) {

* @param throttleTimeMs Time in milliseconds the response was throttled

*/

public ProduceResponse(Map<TopicPartition, PartitionResponse> responses, int throttleTimeMs) {

- this.responses = responses;

- this.throttleTimeMs = throttleTimeMs;

+ this(new ProduceResponseData()

+ .setResponses(responses.entrySet()

+ .stream()

+ .collect(Collectors.groupingBy(e -> e.getKey().topic()))

+ .entrySet()

+ .stream()

+ .map(topicData -> new ProduceResponseData.TopicProduceResponse()

Review comment:

Oh, I was just emphasizing that it is a matter of taste. It's up to you if you agree or not.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-728999480

> Can you check that the @hachikuji's perf test doesn't result in significantly increased latency?

I don't observer significantly increased latency on my local. The env is shown below.

- INTEL 10900K 10C20T 3.70 GHz ~ 5.30 GHz

- DDR4-2933 128 GB

- SSD

- Ubuntu 20.04

- OpenJDK 11.0.9.1

**trunk** (53026b799c609373696382c685b9080630128af2)

> 250000000 records sent, 1143578.577571 records/sec (279.19 MB/sec), 109.01 ms avg latency, 422.00 ms max latency, 107 ms 50th, 132 ms 95th, 146 ms 99th, 263 ms 99.9th.

**patch**

> 250000000 records sent, 1202958.315088 records/sec (293.69 MB/sec), 103.60 ms avg latency, 432.00 ms max latency, 101 ms 50th, 122 ms 95th, 146 ms 99th, 278 ms 99.9th.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] chia7712 commented on pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

chia7712 commented on pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#issuecomment-721487169

@lbradstreet Thanks for your response.

> is this the overall broker side regression since you need both the construction and toStruct?

you are right and it seems to me the solution to fix regression is that server should use automatic protocol response rather than wrapped response. However, it may make a big patch so it would be better to address in another PR. (BTW, fetch protocol has similar issue https://github.com/apache/kafka/blob/trunk/clients/src/main/java/org/apache/kafka/common/requests/FetchResponse.java#L281)

> Could you please also provide an analysis of the garbage generation using gc.alloc.rate.norm?

construction regression:

- 3.293 -> 580.099 ns/op

- 24.000 -> 2776.000 B/op

toStruct improvement:

- 825.889 -> 318.530 ns/op

- 2208.000 -> 896.000 B/op

We can reduce the regression (in construction) by replacing steam APIs by for-loop. However, I prefer stream Apis since it is more readable and the true solution is to use auto-generated protocols on server-side.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] hachikuji commented on a change in pull request #9401: KAFKA-9628 Replace Produce request/response with automated protocol

Posted by GitBox <gi...@apache.org>.

hachikuji commented on a change in pull request #9401:

URL: https://github.com/apache/kafka/pull/9401#discussion_r518984064

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceResponse.java

##########

@@ -204,118 +75,78 @@ public ProduceResponse(Map<TopicPartition, PartitionResponse> responses) {

* @param throttleTimeMs Time in milliseconds the response was throttled

*/

public ProduceResponse(Map<TopicPartition, PartitionResponse> responses, int throttleTimeMs) {

- this.responses = responses;

- this.throttleTimeMs = throttleTimeMs;

+ this(new ProduceResponseData()

+ .setResponses(responses.entrySet()

+ .stream()

+ .collect(Collectors.groupingBy(e -> e.getKey().topic()))

+ .entrySet()

+ .stream()

+ .map(topicData -> new ProduceResponseData.TopicProduceResponse()

Review comment:

Not required, but this would be easier to follow up if we had some helpers.

##########

File path: clients/src/main/java/org/apache/kafka/clients/producer/internals/Sender.java

##########

@@ -560,13 +561,23 @@ private void handleProduceResponse(ClientResponse response, Map<TopicPartition,

log.trace("Received produce response from node {} with correlation id {}", response.destination(), correlationId);

// if we have a response, parse it

if (response.hasResponse()) {

+ // TODO: Sender should exercise PartitionProduceResponse rather than ProduceResponse.PartitionResponse

Review comment:

Is the plan to save this for a follow-up? It looks like it will be a bit of effort to trace down all the uses, but seems doable.

##########

File path: clients/src/main/java/org/apache/kafka/common/requests/ProduceResponse.java

##########

@@ -204,118 +75,78 @@ public ProduceResponse(Map<TopicPartition, PartitionResponse> responses) {

* @param throttleTimeMs Time in milliseconds the response was throttled

*/

public ProduceResponse(Map<TopicPartition, PartitionResponse> responses, int throttleTimeMs) {

- this.responses = responses;

- this.throttleTimeMs = throttleTimeMs;

+ this(new ProduceResponseData()

+ .setResponses(responses.entrySet()

+ .stream()

+ .collect(Collectors.groupingBy(e -> e.getKey().topic()))

+ .entrySet()

+ .stream()

+ .map(topicData -> new ProduceResponseData.TopicProduceResponse()

+ .setTopic(topicData.getKey())

+ .setPartitionResponses(topicData.getValue()

+ .stream()

+ .map(p -> new ProduceResponseData.PartitionProduceResponse()

+ .setPartition(p.getKey().partition())

+ .setBaseOffset(p.getValue().baseOffset)

+ .setLogStartOffset(p.getValue().logStartOffset)

+ .setLogAppendTime(p.getValue().logAppendTime)

+ .setErrorMessage(p.getValue().errorMessage)

+ .setErrorCode(p.getValue().error.code())

+ .setRecordErrors(p.getValue().recordErrors

+ .stream()

+ .map(e -> new ProduceResponseData.BatchIndexAndErrorMessage()

+ .setBatchIndex(e.batchIndex)

+ .setBatchIndexErrorMessage(e.message))

+ .collect(Collectors.toList())))

+ .collect(Collectors.toList())))

+ .collect(Collectors.toList()))

+ .setThrottleTimeMs(throttleTimeMs));

}

/**

- * Constructor from a {@link Struct}.

+ * Visible for testing.

*/

- public ProduceResponse(Struct struct) {

- responses = new HashMap<>();

- for (Object topicResponse : struct.getArray(RESPONSES_KEY_NAME)) {

- Struct topicRespStruct = (Struct) topicResponse;

- String topic = topicRespStruct.get(TOPIC_NAME);

-

- for (Object partResponse : topicRespStruct.getArray(PARTITION_RESPONSES_KEY_NAME)) {

- Struct partRespStruct = (Struct) partResponse;

- int partition = partRespStruct.get(PARTITION_ID);

- Errors error = Errors.forCode(partRespStruct.get(ERROR_CODE));

- long offset = partRespStruct.getLong(BASE_OFFSET_KEY_NAME);

- long logAppendTime = partRespStruct.hasField(LOG_APPEND_TIME_KEY_NAME) ?

- partRespStruct.getLong(LOG_APPEND_TIME_KEY_NAME) : RecordBatch.NO_TIMESTAMP;

- long logStartOffset = partRespStruct.getOrElse(LOG_START_OFFSET_FIELD, INVALID_OFFSET);

-

- List<RecordError> recordErrors = Collections.emptyList();

- if (partRespStruct.hasField(RECORD_ERRORS_KEY_NAME)) {

- Object[] recordErrorsArray = partRespStruct.getArray(RECORD_ERRORS_KEY_NAME);

- if (recordErrorsArray.length > 0) {

- recordErrors = new ArrayList<>(recordErrorsArray.length);

- for (Object indexAndMessage : recordErrorsArray) {

- Struct indexAndMessageStruct = (Struct) indexAndMessage;

- recordErrors.add(new RecordError(

- indexAndMessageStruct.getInt(BATCH_INDEX_KEY_NAME),

- indexAndMessageStruct.get(BATCH_INDEX_ERROR_MESSAGE_FIELD)

- ));

- }

- }

- }

-

- String errorMessage = partRespStruct.getOrElse(ERROR_MESSAGE_FIELD, null);

- TopicPartition tp = new TopicPartition(topic, partition);

- responses.put(tp, new PartitionResponse(error, offset, logAppendTime, logStartOffset, recordErrors, errorMessage));

- }

- }

- this.throttleTimeMs = struct.getOrElse(THROTTLE_TIME_MS, DEFAULT_THROTTLE_TIME);

- }

-

@Override

- protected Struct toStruct(short version) {

- Struct struct = new Struct(ApiKeys.PRODUCE.responseSchema(version));

-

- Map<String, Map<Integer, PartitionResponse>> responseByTopic = CollectionUtils.groupPartitionDataByTopic(responses);

- List<Struct> topicDatas = new ArrayList<>(responseByTopic.size());

- for (Map.Entry<String, Map<Integer, PartitionResponse>> entry : responseByTopic.entrySet()) {

- Struct topicData = struct.instance(RESPONSES_KEY_NAME);

- topicData.set(TOPIC_NAME, entry.getKey());

- List<Struct> partitionArray = new ArrayList<>();

- for (Map.Entry<Integer, PartitionResponse> partitionEntry : entry.getValue().entrySet()) {

- PartitionResponse part = partitionEntry.getValue();

- short errorCode = part.error.code();

- // If producer sends ProduceRequest V3 or earlier, the client library is not guaranteed to recognize the error code

- // for KafkaStorageException. In this case the client library will translate KafkaStorageException to

- // UnknownServerException which is not retriable. We can ensure that producer will update metadata and retry

- // by converting the KafkaStorageException to NotLeaderOrFollowerException in the response if ProduceRequest version <= 3

- if (errorCode == Errors.KAFKA_STORAGE_ERROR.code() && version <= 3)

- errorCode = Errors.NOT_LEADER_OR_FOLLOWER.code();

- Struct partStruct = topicData.instance(PARTITION_RESPONSES_KEY_NAME)

- .set(PARTITION_ID, partitionEntry.getKey())

- .set(ERROR_CODE, errorCode)

- .set(BASE_OFFSET_KEY_NAME, part.baseOffset);

- partStruct.setIfExists(LOG_APPEND_TIME_KEY_NAME, part.logAppendTime);

- partStruct.setIfExists(LOG_START_OFFSET_FIELD, part.logStartOffset);

-

- if (partStruct.hasField(RECORD_ERRORS_KEY_NAME)) {

- List<Struct> recordErrors = Collections.emptyList();

- if (!part.recordErrors.isEmpty()) {

- recordErrors = new ArrayList<>();

- for (RecordError indexAndMessage : part.recordErrors) {

- Struct indexAndMessageStruct = partStruct.instance(RECORD_ERRORS_KEY_NAME)

- .set(BATCH_INDEX_KEY_NAME, indexAndMessage.batchIndex)

- .set(BATCH_INDEX_ERROR_MESSAGE_FIELD, indexAndMessage.message);

- recordErrors.add(indexAndMessageStruct);

- }

- }

- partStruct.set(RECORD_ERRORS_KEY_NAME, recordErrors.toArray());

- }

-

- partStruct.setIfExists(ERROR_MESSAGE_FIELD, part.errorMessage);

- partitionArray.add(partStruct);

- }

- topicData.set(PARTITION_RESPONSES_KEY_NAME, partitionArray.toArray());

- topicDatas.add(topicData);

- }

- struct.set(RESPONSES_KEY_NAME, topicDatas.toArray());