Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2022/09/05 06:03:13 UTC

[GitHub] [hudi] FredMkl opened a new issue, #6591: [SUPPORT]Duplicate records in MOR

FredMkl opened a new issue, #6591:

URL: https://github.com/apache/hudi/issues/6591

**Describe the problem you faced**

We use a MOR table. We found that updating an existing set of rows so that they move to another partition results in both a) a new parquet file being generated and b) an update being written to a log file. This produces duplicate records.

**To Reproduce**

```

//action1: spark-dataframe write

import org.apache.spark.sql.SaveMode._

import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceWriteOptions._

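import org.apache.spark.sql.{DataFrame, Row, SparkSession}

// lit(...) is used in steps 2 and 3; spark-shell imports it implicitly, other entry points do not
import org.apache.spark.sql.functions.lit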

import scala.collection.mutable

val tableName = "f_schedule_test"

val basePath = "oss://nbadatalake-poc/fred/warehouse/dw/f_schedule_test"

val spark = SparkSession.builder.enableHiveSupport.getOrCreate

import spark.implicits._

// spark-shell

val df = Seq(

("1", "10001", "2022-08-30","2022-08-30 12:00:00.000","2022-08-30"),

("2", "10002", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("3", "10003", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("4", "10004", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("5", "10005", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("6", "10006", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30")

).toDF("game_schedule_id", "game_id", "game_date_cn", "insert_date", "dt")

// df.show()

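val hudiOptions = mutable.Map(

// Hudi needs a table name for writes; tableName is defined above
"hoodie.table.name" -> tableName,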

"hoodie.datasource.write.table.type" -> "MERGE_ON_READ",

"hoodie.datasource.write.operation" -> "upsert",

"hoodie.datasource.write.recordkey.field" -> "game_schedule_id",

"hoodie.datasource.write.precombine.field" -> "insert_date",

"hoodie.datasource.write.partitionpath.field" -> "dt",

"hoodie.index.type" -> "GLOBAL_BLOOM",

"hoodie.compact.inline" -> "true",

"hoodie.datasource.write.keygenerator.class" -> "org.apache.hudi.keygen.ComplexKeyGenerator"

)

//step1: insert --no issue

df.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

//step2: move part data to another partition --no issue

val df1 = spark.sql("select * from dw.f_schedule_test where dt = '2022-08-30'").withColumn("dt",lit("2022-08-31")).limit(3)

df1.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

//step3: move back --duplicate occurs

//Updating an existing set of rows will result in either a) a companion log/delta file for an existing base parquet file generated from a previous compaction or b) an update written to a log/delta file in case no compaction ever happened for it.

val df2 = spark.sql("select * from dw.f_schedule_test where dt = '2022-08-31'").withColumn("dt",lit("2022-08-30")).limit(3)

df2.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

```

**Checking scripts:**

```

select * from dw.f_schedule_test where game_schedule_id = 1;

select _hoodie_file_name,count(*) as co from dw.f_schedule_test group by _hoodie_file_name;

```
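The same duplicate check can also be sketched with the DataFrame API, which is handy in spark-shell when the table is not registered in Hive. This is a minimal sketch, not part of the original report: the helper name `duplicateRecordKeys` and the output columns `copies` and `partitions` are illustrative, while `_hoodie_record_key` and `_hoodie_partition_path` are the Hudi meta columns returned by a snapshot read.

```scala
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.functions.{col, collect_set, count, lit}

// Returns one row per record key that appears more than once in the snapshot,
// together with the set of partitions in which that key was found.
// (Helper name and output column names are illustrative assumptions.)
def duplicateRecordKeys(snapshot: DataFrame): DataFrame =
  snapshot
    .groupBy(col("_hoodie_record_key"))
    .agg(
      count(lit(1)).as("copies"),
      collect_set(col("_hoodie_partition_path")).as("partitions"))
    .filter(col("copies") > 1)
```

Assuming `basePath` is defined as in the script above, `duplicateRecordKeys(spark.read.format("hudi").load(basePath)).show(false)` should print an empty result when every record key exists exactly once.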

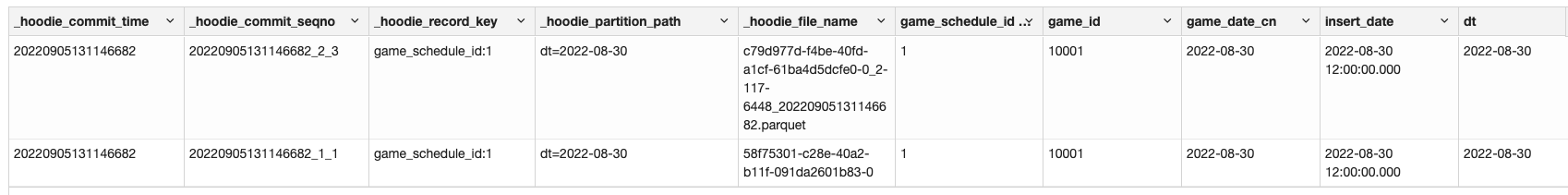

**results:**

**Expected behavior**

Duplicate records should not occur

**Environment Description**

* Hudi version: 0.10.1

* Spark version: 3.2.1

* Hive version: 3.1.2

* Hadoop version: 3.2.1

* Storage (HDFS/S3/GCS..): OSS

* Running on Docker? (yes/no): no

[hoodie.zip](https://github.com/apache/hudi/files/9487065/hoodie.zip)

**Stacktrace**

Please check the attached logs:

[hoodie.zip](https://github.com/apache/hudi/files/9487078/hoodie.zip)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #6591: [SUPPORT]Duplicate records in MOR

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #6591:

URL: https://github.com/apache/hudi/issues/6591#issuecomment-1246366467

At least I will file a follow-up JIRA so that we don't miss this. Thanks for filing the issue.

[GitHub] [hudi] ad1happy2go commented on issue #6591: [SUPPORT]Duplicate records in MOR

Posted by "ad1happy2go (via GitHub)" <gi...@apache.org>.

ad1happy2go commented on issue #6591:

URL: https://github.com/apache/hudi/issues/6591#issuecomment-1525265398

JIRA created to fix the issue - https://issues.apache.org/jira/browse/HUDI-6146

[GitHub] [hudi] FredMkl closed issue #6591: [SUPPORT]Duplicate records in MOR

Posted by GitBox <gi...@apache.org>.

FredMkl closed issue #6591: [SUPPORT]Duplicate records in MOR

URL: https://github.com/apache/hudi/issues/6591

[GitHub] [hudi] ad1happy2go commented on issue #6591: [SUPPORT]Duplicate records in MOR

Posted by "ad1happy2go (via GitHub)" <gi...@apache.org>.

ad1happy2go commented on issue #6591:

URL: https://github.com/apache/hudi/issues/6591#issuecomment-1525255395

The issue still exists in master.

Reproduction script:

```

//action1: spark-dataframe write

import org.apache.spark.sql.SaveMode._

import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceWriteOptions._

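import org.apache.spark.sql.{DataFrame, Row, SparkSession}

// lit(...) is used in steps 2 and 3; spark-shell imports it implicitly, other entry points do not
import org.apache.spark.sql.functions.lit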

import scala.collection.mutable

val tableName = "issue_6591_try6"

val basePath = "file:///tmp/issue_6591_try6"

val spark = SparkSession.builder.enableHiveSupport.getOrCreate

import spark.implicits._

// spark-shell

val df = Seq(

("1", "10001", "2022-08-30","2022-08-30 12:00:00.000","2022-08-30"),

("2", "10002", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("3", "10003", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("4", "10004", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("5", "10005", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30"),

("6", "10006", "2022-08-31","2022-08-30 12:00:00.000","2022-08-30")

).toDF("game_schedule_id", "game_id", "game_date_cn", "insert_date", "dt")

df.createOrReplaceTempView("f_schedule_test")

// df.show()

val hudiOptions = mutable.Map(

"hoodie.table.name" -> tableName,

"hoodie.datasource.write.table.type" -> "MERGE_ON_READ",

"hoodie.datasource.write.operation" -> "upsert",

"hoodie.datasource.write.recordkey.field" -> "game_schedule_id",

"hoodie.datasource.write.precombine.field" -> "insert_date",

"hoodie.datasource.write.partitionpath.field" -> "dt",

"hoodie.index.type" -> "GLOBAL_BLOOM",

"hoodie.compact.inline" -> "true",

"hoodie.datasource.write.keygenerator.class" -> "org.apache.hudi.keygen.ComplexKeyGenerator"

)

//step1: insert --no issue

df.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

spark.read.format("hudi").load(basePath).show(false)

spark.read.format("hudi").load(basePath).createOrReplaceTempView("f_schedule_test")

//step2: move part data to another partition --no issue

val df1 = spark.sql("select * from f_schedule_test where dt = '2022-08-30'").withColumn("dt",lit("2022-08-31")).limit(3)

df1.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

spark.read.format("hudi").load(basePath).show(false)

//step3: move back --duplicate occurs

//Updating an existing set of rows will result in either a) a companion log/delta file for an existing base parquet file generated from a previous compaction or b) an update written to a log/delta file in case no compaction ever happened for it.

spark.read.format("hudi").load(basePath).createOrReplaceTempView("f_schedule_test")

val df2 = spark.sql("select * from f_schedule_test where dt = '2022-08-31'").withColumn("dt",lit("2022-08-30")).limit(3)

df2.write.format("hudi").

options(hudiOptions).

mode(Append).

save(basePath)

spark.read.format("hudi").load(basePath).show(false)

```

[GitHub] [hudi] codope commented on issue #6591: [SUPPORT]Duplicate records in MOR

Posted by "codope (via GitHub)" <gi...@apache.org>.

codope commented on issue #6591:

URL: https://github.com/apache/hudi/issues/6591#issuecomment-1524756517

Reopening to validate against master.

[GitHub] [hudi] nsivabalan closed issue #6591: [SUPPORT]Duplicate records in MOR

Posted by "nsivabalan (via GitHub)" <gi...@apache.org>.

nsivabalan closed issue #6591: [SUPPORT]Duplicate records in MOR

URL: https://github.com/apache/hudi/issues/6591

[GitHub] [hudi] FredMkl commented on issue #6591: [SUPPORT]Duplicate records in MOR

Posted by GitBox <gi...@apache.org>.

FredMkl commented on issue #6591:

URL: https://github.com/apache/hudi/issues/6591#issuecomment-1246449719

Thank you for the reply. Actually, we found that even after a compaction kicks in, the duplicate data is still there.

We tried the settings below, and the duplicates disappeared; unfortunately, this is not what we want.

hoodie.compact.inline.trigger.strategy = 'NUM_COMMITS',

hoodie.compact.inline.max.delta.commits = '1'
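
For reference, a minimal sketch of how these two settings could be merged into the `hudiOptions` map from the reproduction script. The name `compactEveryCommit` is illustrative, and `hudiOptions` starts empty here only so the snippet runs on its own; in the real script it would already hold the write options.

```scala
import scala.collection.mutable

// Stand-in for the options map built in the reproduction script
// (empty here so the snippet is self-contained).
val hudiOptions = mutable.Map[String, String]()

// Run inline compaction after every delta commit.
// These are the settings quoted above, plus the inline flag from the original script.
val compactEveryCommit = Map(
  "hoodie.compact.inline" -> "true",
  "hoodie.compact.inline.trigger.strategy" -> "NUM_COMMITS",
  "hoodie.compact.inline.max.delta.commits" -> "1"
)

hudiOptions ++= compactEveryCommit
```

Compacting on every commit hides the duplicates at the cost of rewriting base files on each write, which gives up much of the write-side benefit of a MOR table.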

[GitHub] [hudi] nsivabalan commented on issue #6591: [SUPPORT]Duplicate records in MOR

Posted by "nsivabalan (via GitHub)" <gi...@apache.org>.

nsivabalan commented on issue #6591:

URL: https://github.com/apache/hudi/issues/6591#issuecomment-1526991688

It's already fixed with this patch: https://github.com/apache/hudi/pull/8490

```

scala> spark.read.format("hudi").load(basePath).show(false)

+-------------------+---------------------+------------------+----------------------+---------------------------------------------------------------------------+----------------+-------+------------+-----------------------+----------+

|_hoodie_commit_time|_hoodie_commit_seqno |_hoodie_record_key|_hoodie_partition_path|_hoodie_file_name |game_schedule_id|game_id|game_date_cn|insert_date |dt |

+-------------------+---------------------+------------------+----------------------+---------------------------------------------------------------------------+----------------+-------+------------+-----------------------+----------+

|20230427215728276 |20230427215728276_0_3|game_schedule_id:5|2022-08-30 |bd4d1121-57bc-4103-91a0-5541a474ef9e-0_0-28-369_20230427215728276.parquet |5 |10005 |2022-08-31 |2022-08-30 12:00:00.000|2022-08-30|

|20230427215728276 |20230427215728276_0_4|game_schedule_id:6|2022-08-30 |bd4d1121-57bc-4103-91a0-5541a474ef9e-0_0-28-369_20230427215728276.parquet |6 |10006 |2022-08-31 |2022-08-30 12:00:00.000|2022-08-30|

|20230427215728276 |20230427215728276_0_5|game_schedule_id:1|2022-08-30 |bd4d1121-57bc-4103-91a0-5541a474ef9e-0_0-28-369_20230427215728276.parquet |1 |10001 |2022-08-30 |2022-08-30 12:00:00.000|2022-08-30|

|20230427215753406 |20230427215753406_1_0|game_schedule_id:2|2022-08-30 |484347af-e681-4b1e-ad99-e7c1cd9adeea-0_1-150-1051_20230427215753406.parquet|2 |10002 |2022-08-31 |2022-08-30 12:00:00.000|2022-08-30|

|20230427215753406 |20230427215753406_1_1|game_schedule_id:3|2022-08-30 |484347af-e681-4b1e-ad99-e7c1cd9adeea-0_1-150-1051_20230427215753406.parquet|3 |10003 |2022-08-31 |2022-08-30 12:00:00.000|2022-08-30|

|20230427215753406 |20230427215753406_1_2|game_schedule_id:4|2022-08-30 |484347af-e681-4b1e-ad99-e7c1cd9adeea-0_1-150-1051_20230427215753406.parquet|4 |10004 |2022-08-31 |2022-08-30 12:00:00.000|2022-08-30|

+-------------------+---------------------+------------------+----------------------+---------------------------------------------------------------------------+----------------+-------+------------+-----------------------+----------+

scala> spark.read.format("hudi").load(basePath).count

res9: Long = 6

```

[GitHub] [hudi] nsivabalan commented on issue #6591: [SUPPORT]Duplicate records in MOR

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #6591:

URL: https://github.com/apache/hudi/issues/6591#issuecomment-1247367471

Yes, I get it.

[GitHub] [hudi] nsivabalan commented on issue #6591: [SUPPORT]Duplicate records in MOR

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #6591:

URL: https://github.com/apache/hudi/issues/6591#issuecomment-1246261284

Yes, this is a known limitation we have with MOR tables. Once a compaction kicks in, you may no longer see the record in the older partition where it was deleted. Until then, it will be an issue.
