You are viewing a plain text version of this content. The canonical link for it is here.

Posted to dev@mxnet.apache.org by Przemyslaw Tredak <no...@github.com> on 2020/08/17 22:00:49 UTC

[apache/incubator-mxnet] [RFC] GPU performance improvements in MXNet

engine (#18951)

# Introduction

MXNet's dependency engine's design is very elegant. It provides an easy way to track any kind of dependencies (data dependencies, resource dependencies etc.) on any kind of device (CPU, GPU) using a single mechanism.

However, as the speed of GPUs increased, it becomes increasingly clear that its implementation in MXNet has overheads. They are especially visible when doing imperative computation (non-engine related overheads of which prompted another [RFC](https://github.com/apache/incubator-mxnet/issues/17097)), but they exist also for the hybridized models.

This RFC explores the changes to the MXNet's engine needed to maximise the utilization of GPUs.

# The problem

In order to understand the problem that this RFC tries to solve, let us look at a simple script:

```python

import mxnet as mx

sizes = [int(x) for x in [1e7, 1e5, 1e3, 1e1]]

N = 100

ctx=mx.gpu()

for size in sizes:

a = mx.random.uniform(shape=(size,), ctx=ctx)

b = mx.random.uniform(shape=(size,), ctx=ctx)

mx.nd.waitall()

for _ in range(N):

c = a + b

mx.nd.waitall()

```

It imperatively launches an elementwise addition of 2 tensors of different sizes (from 40MB down to 40 B). For clarity of the pictures I launched this script setting `MNET_GPU_WORKER_NTHREADS=1`, but the findings are the same when using multiple worker threads.

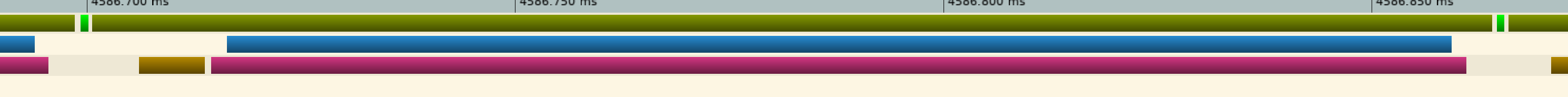

Here is the profile of one of the 40MB addition:

3 rows shown in that picture are, from top to bottom:

- executed operators (dark green is the addition operator, bright green is the `DeleteVariable`

used for memory tracking)

- GPU kernels (with only 1 bar, blue, representing the actual addition kernel)

- CPU worker thread activity (only CUDA APIs are shown - gold bar is `cudaLaunchKernel` and pink bar is `cudaStreamSynchronize`)

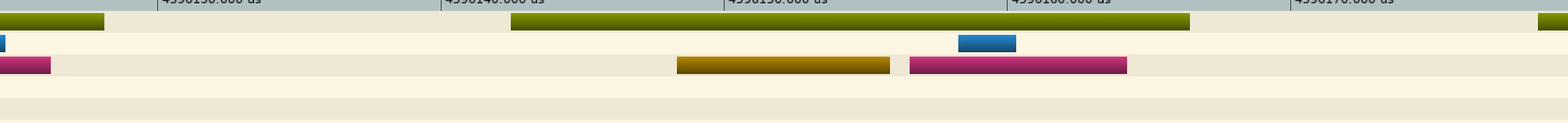

As you can see, even for tensors of the size of 40 MB, there is a significant portion of the time when the GPU stays idle (no kernel is running). When we look at the addition of 0.4 MB tensors, that becomes even more apparent:

The white regions visible here with no operator running are mostly due to the Python-C++ interface (which is handled by the already mentioned [RFC](https://github.com/apache/incubator-mxnet/issues/17097)). But even if we disregard this, the time spent for the entire operator (dark green) is much longer than the time needed for the kernel (blue).

Just for comparison - when performing ResNet inference on ImageNet (with batch size 1 and `float16` as datatype) the typical size of the activation tensor is < 0.4 MB (for training it is few tens of MB).

# The life of an engine op

In order to understand those overheads, we need to understand what are the different stages of executing an op in MXNet. Let us look again at the profile of the 40MB addition, this time annotated:

There are 3 phases in the op execution:

- Preparation and launching of the GPU kernel (i.e. calling `FCompute`)

- Synchronization with the GPU

- Updating dependencies in the engine

As you can see, the bulk of time is spent on the synchronization, as the GPU worker waits for the GPU kernel to finish, in order to update the dependencies. During that time no useful work happens on the CPU side. This is because the MXNet's engine gives a guarantee that the dependency update is called only when the results are available and ready to be consumed by any consumer.

# The workaround

The hybridization mechanism in MXNet offers a "cheat" - bulking of multiple operations into a single function pushed to the engine. This enables launching those bulked operations without synchronization. The speedup of bulking is significant - so significant in fact, that the default for inference is to bulk all operations (during training the default bulk size is 15 operations).

This approach has a few issues however:

- it is unavailable to the fully imperative execution

- it eliminates the biggest advantage of the MXNet's engine - ability to launch work from multiple threads (since the entire bulk is launched from a single worker thread)

- there are still overheads on the bulk boundaries

- dependencies are updated only after the entire bulk finishes (which can e.g. reduce the overlap of communication and computation as communication can start only after a bulk finishes)

# Proposed solution

In this RFC I would like to propose to weaken the guarantees of the MXNet engine in order to harness this additional asynchronicity of execution (so not only Python thread - worker threads) of GPU kernels with respect to CPU. In this proposal dependency update would happen not when the kernel is finished, but when it is scheduled to GPU. This removes the need for the sync after the kernel is launched, but instead requires ops to sync on their inputs to become ready.

This change on its own does not really give much improvement (besides eliminating the overhead of dependency update) as there still is a sync, but it enables an important optimization. Let us consider a chain of 2 ops: `A -> B`. In the current scheme, op `A` does not know anything about `B`. More specifically, it does not know which GPU worker will execute `B` and which CUDA stream will be used for that. Therefore, it needs to sync fully (via `cudaStreamSynchronize()`) to be sure that however `B` is launched, it will be able to see the data. In the new scheme it is `B` that does synchronization. The difference here is that `B` knows everything, including the streams that were used for both `A` and `B`. When both `A` and `B` are GPU operations (CPU operations are largely unaffected by this proposal, since they are already synchronous with respect to the worker), then there are 2 possibilities:

- `A` and `B` use the same CUDA stream: then the synchronization can be omitted completely, as the CUDA stream semantics prevent `B` to start before `A` is finished -> the worker thread on CPU *is not blocked*

- `A` and `B` use different CUDA streams: then `B` can use CUDA events and `cudaStreamWaitEvent` API to perform synchronization again *without blocking the CPU thread*

The advantage of this approach is that the GPU worker threads can start launching new operations while the previous ones are not yet finished, removing the overheads of launch and dependency update. It is especially important for networks with a lot of small operators, where the CPU thread will be able to "get ahead" launching small kernels while some longer running GPU kernel is running.

If `B` is CPU operator waiting on a GPU operator, it would still need to perform `cudaStreamSynchronize()`, so the performance would be the same.

## Impact

To assess the impact, I used inference with RN50_v2 from GluonCV on ImageNet, with batch size 32 and float32 precision on V100. I ran it in imperative mode and then hybridized with both bulk size 15 (default) and 1. The time to perform 100 iterations was 4s with imperative mode, 3.8s with bulk size equal to 1 and 3s with bulk size equal to 15. This shows, that out of 1s difference between the imperative mode and fully hybridized, 0.8s was actually due to the overheads described in this RFC. Implementing the changes proposed could make imperative usage of MXNet much closer in speed to the hybridized models (while improving the speed of hybridized models too), making it much easier to get good performance.

## Challenges

The biggest challenge is that this change requires changes to memory management. Currently, memory is returned to the cache once all the operations using it are finished. This means that it is free to be taken by any new operator. However, with the proposal described in this RFC, memory would be returned potentially before all the operations are done executing. This means that in order to reuse this memory, the subsequent operations would need to be able to synchronize on it. That is why I propose moving the engine variable from NDArray to the actual memory used by that NDArray. This has a few benefits:

- enables synchronizing on the memory returned to the cache

- enables earlier returning of the memory (so e.g. it can be done at the time of the NDArray destructor called in Python instead of at the unspecified time in the future - this could help solve issues like https://github.com/apache/incubator-mxnet/issues/17335)

# Call for help

Thank you @eric-haibin-lin @DickJC123 @sandeep-krishnamurthy @Kh4L for discussions and offering help. I will not be able to implement this RFC in the near future, so help will be greatly appreciated.

--

You are receiving this because you are subscribed to this thread.

Reply to this email directly or view it on GitHub:

https://github.com/apache/incubator-mxnet/issues/18951

Re: [apache/incubator-mxnet] [RFC] GPU performance improvements in

MXNet engine (#18951)

Posted by Przemyslaw Tredak <no...@github.com>.

@KellenSunderland The problem is that it is impossible to predict which stream will be chosen for the next operator, and issuing waits on all streams would mean that you never get the parallel execution. To choose the right stream to wait one needs to do it from the second op, not the first (and then you basically end up with this proposal).

--

You are receiving this because you are subscribed to this thread.

Reply to this email directly or view it on GitHub:

https://github.com/apache/incubator-mxnet/issues/18951#issuecomment-675186927

Re: [apache/incubator-mxnet] [RFC] GPU performance improvements in

MXNet engine (#18951)

Posted by Kellen Sunderland <no...@github.com>.

I really like this proposal, thanks for the great write-up Przemyslaw.

I haven't totally thought through pros/cons, but would it be possible to return a cudaStreamWaitEvent by default after every block of operators is called, and use that as a reference for any dependent block of ops? Would this unblock our GPU worker threads because we're not calling a cudaStreamSync?

If I'm understanding correctly that would be the equivalent of what you're proposing in your second scenario (when we have two cuda streams)? Would it have a lot of overhead in scenario 1 where we use same stream?

--

You are receiving this because you are subscribed to this thread.

Reply to this email directly or view it on GitHub:

https://github.com/apache/incubator-mxnet/issues/18951#issuecomment-675180856

Re: [apache/incubator-mxnet] [RFC] GPU performance improvements in

MXNet engine (#18951)

Posted by Przemyslaw Tredak <no...@github.com>.

Definitely - it should not be the responsibility of the operator to do this synchronization - it should be handled automatically by the framework.

--

You are receiving this because you commented.

Reply to this email directly or view it on GitHub:

https://github.com/apache/incubator-mxnet/issues/18951#issuecomment-675734328

Re: [apache/incubator-mxnet] [RFC] GPU performance improvements in

MXNet engine (#18951)

Posted by Sheng Zha <no...@github.com>.

sounds like a good idea. maybe we can extend the lambda a bit to implement the synchronization as decorator?

--

You are receiving this because you are subscribed to this thread.

Reply to this email directly or view it on GitHub:

https://github.com/apache/incubator-mxnet/issues/18951#issuecomment-675713957