You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2020/06/23 03:08:08 UTC

[GitHub] [hudi] somebol opened a new issue #1757: Slow Bulk Insert Performance [SUPPORT]

somebol opened a new issue #1757:

URL: https://github.com/apache/hudi/issues/1757

Hi Team,

We are trying to load a very large dataset into hudi. The bulk insert job took ~16.5 hours to complete. The job was run with vanilla settings without any optimisations.

How can we tune the job to make it run faster?

**Dataset**

Data stored in HDFS / parquet

size: 5.5 TB

number of files: 27000

number of records: ~300 billion

**hudi options**

.option(RECORDKEY_FIELD_OPT_KEY(), "id")

.option(PARTITIONPATH_FIELD_OPT_KEY(), "<partition>")

.option(PRECOMBINE_FIELD_OPT_KEY(), "<ts>")

.option(HIVE_STYLE_PARTITIONING_OPT_KEY(), "true")

.option(TABLE_TYPE_OPT_KEY(), MOR_TABLE_TYPE_OPT_VAL())

.option(OPERATION_OPT_KEY(), BULK_INSERT_OPERATION_OPT_VAL())

.option(TABLE_NAME, "<name>")

**spark conf**

conf.set("spark.debug.maxToStringFields", "100");

conf.set("spark.sql.shuffle.partitions", "2001");

conf.set("spark.sql.warehouse.dir", "/user/hive/warehouse");

conf.set("spark.sql.autoBroadcastJoinThreshold", "31457280");

conf.set("spark.sql.hive.filesourcePartitionFileCacheSize", "2000000000");

conf.set("spark.sql.sources.partitionOverwriteMode", "dynamic");

conf.set("mapreduce.input.fileinputformat.input.dir.recursive", "true");

conf.set("spark.storage.replication.proactive", "true");

**spark submit**

SPARK_CMD="spark2-submit \

--files log4j.properties

--conf "spark.driver.extraJavaOptions=${log4j_setting}" \

--conf "spark.executor.extraJavaOptions=${log4j_setting}" \

--conf spark.kryoserializer.buffer.max=2040M \

--num-executors 25 \

--executor-cores 5 \

--driver-memory 8G \

--executor-memory 21G \

--master yarn \

--deploy-mode client

**Environment Description**

* Hudi version : 0.5.3

* Spark version : 2.40

* Cloudera version : 6.33

* Hadoop version : 3.0.0

* Storage (HDFS/S3/GCS..) : HDFS

* Running on Docker? (yes/no) : No

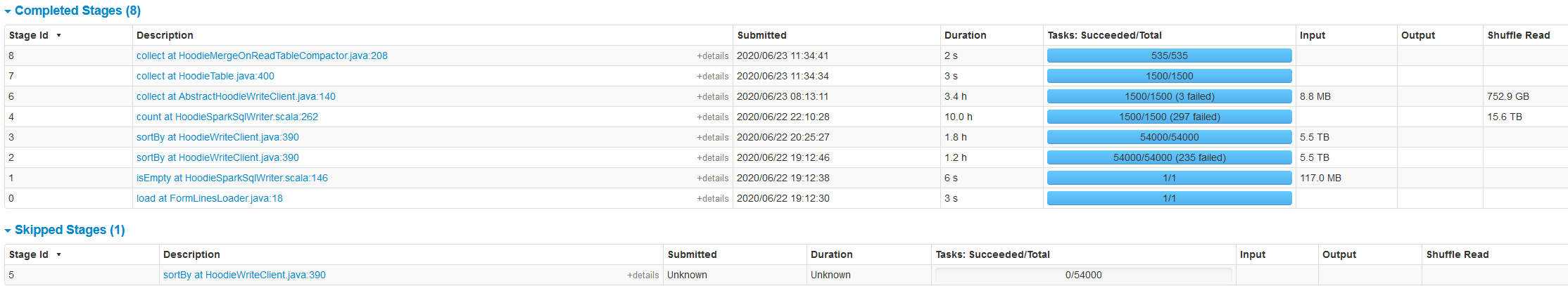

**screenshot of spark stages**

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan edited a comment on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

nsivabalan edited a comment on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-813112202

Hudi also has an optimized version of bulk insert with row writing which is ~30 to 40% faster than regular bulk_insert. You can enable this by setting this config "hoodie.datasource.write.row.writer.enable" to true.

Also, we have 3 different sort modes in bulk_inserts. https://hudi.apache.org/docs/configurations.html#withBulkInsertSortMode

Feel free to play around these config knobs for better perf.

Closing this due to no activity. But feel free to reach out to us if you need more help. Happy to assist you.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan closed issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

nsivabalan closed issue #1757:

URL: https://github.com/apache/hudi/issues/1757

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] somebol commented on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

somebol commented on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-648435649

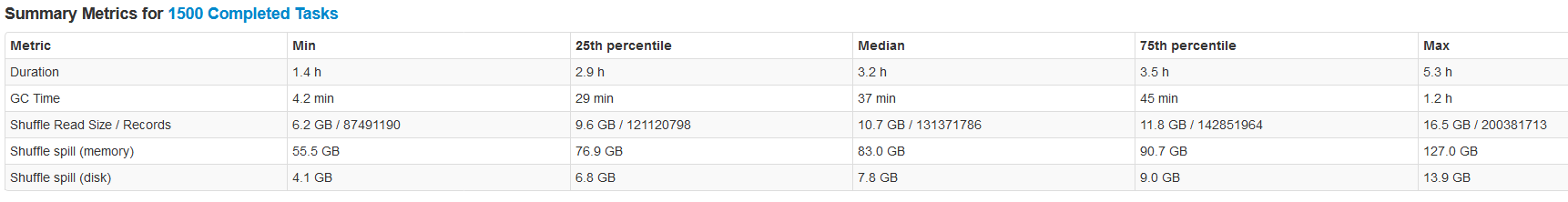

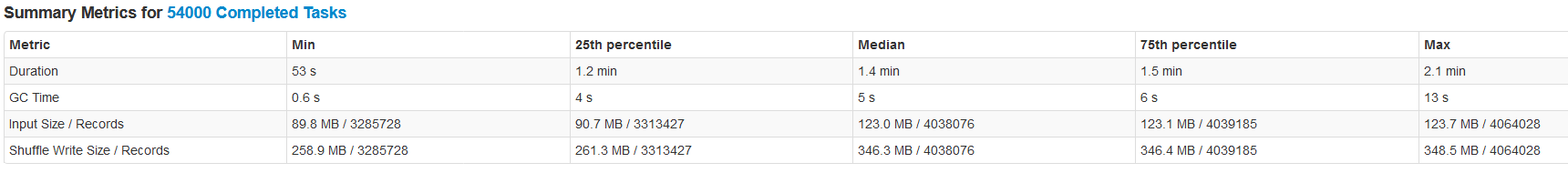

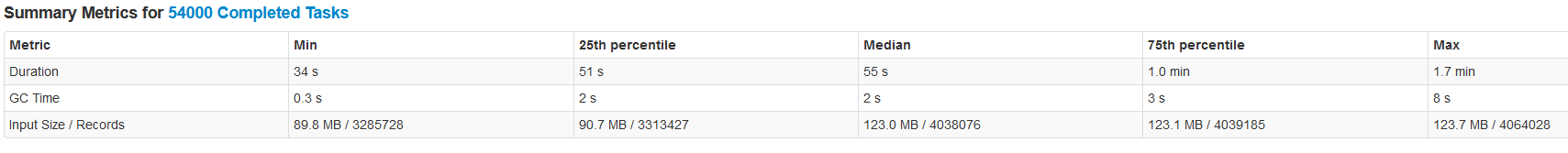

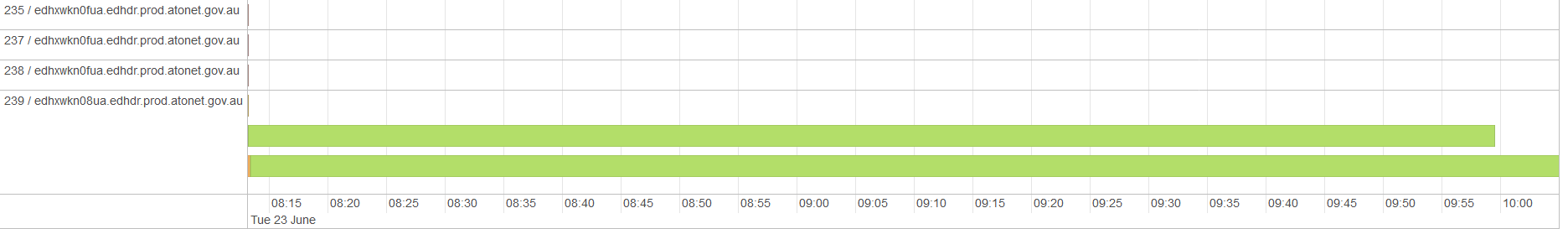

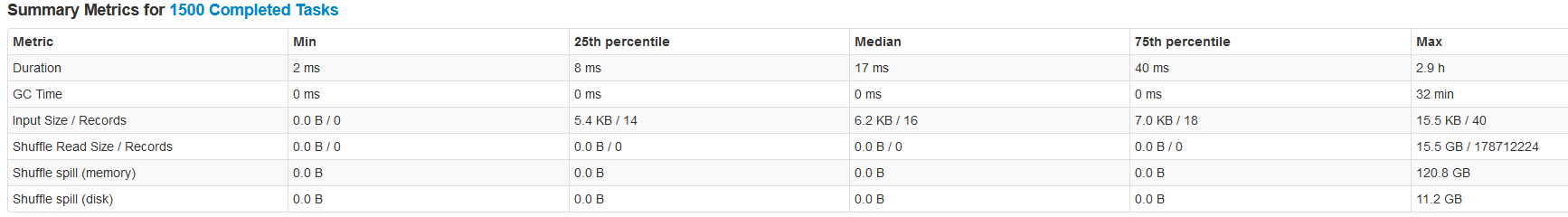

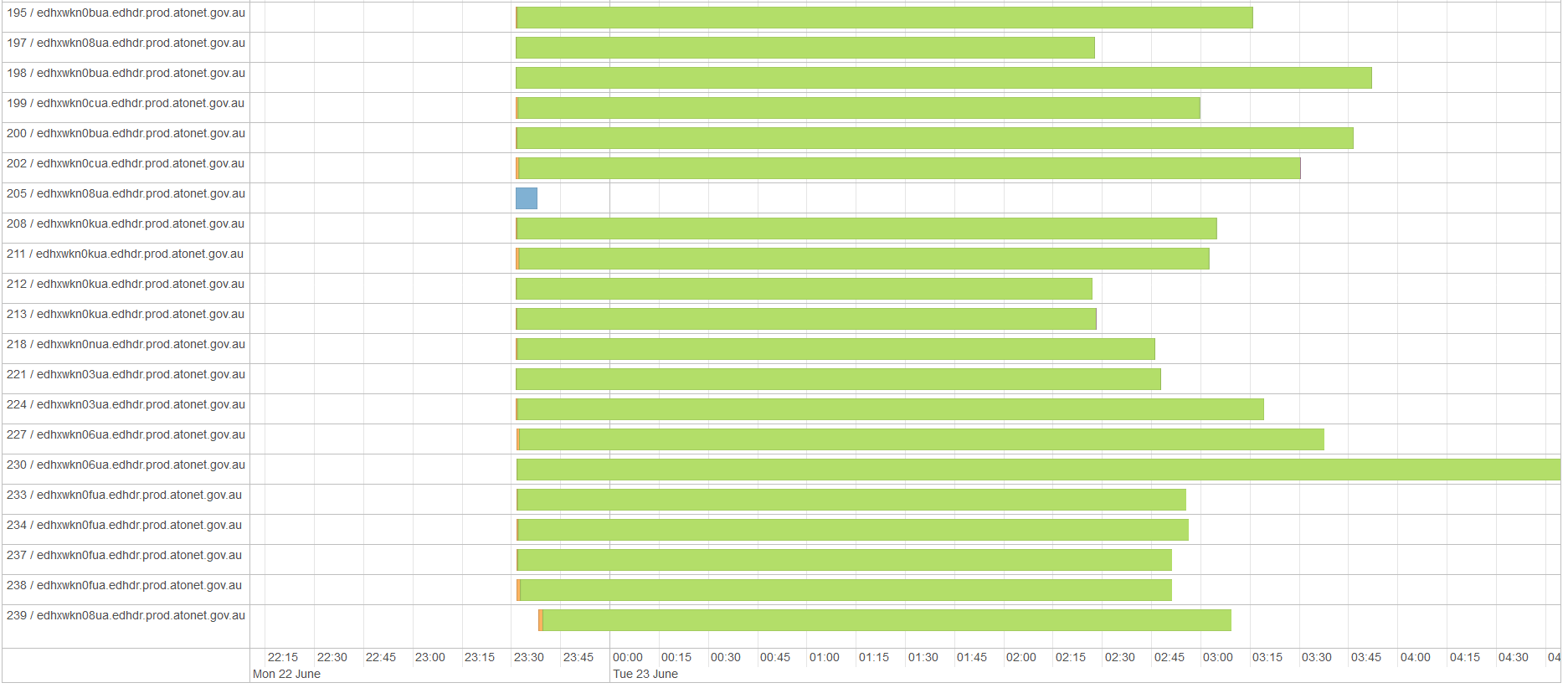

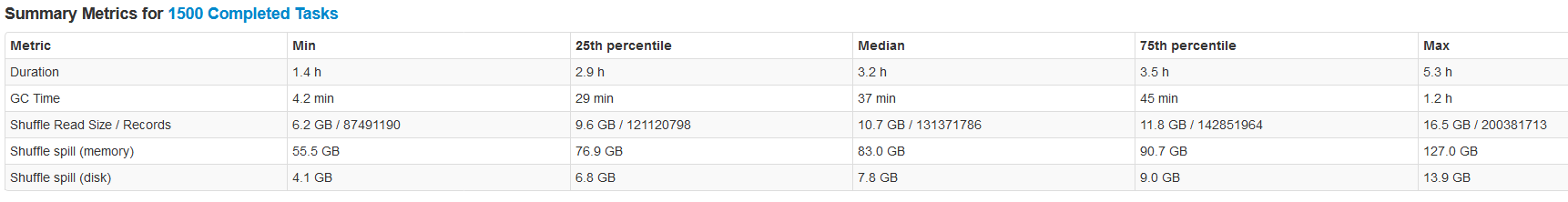

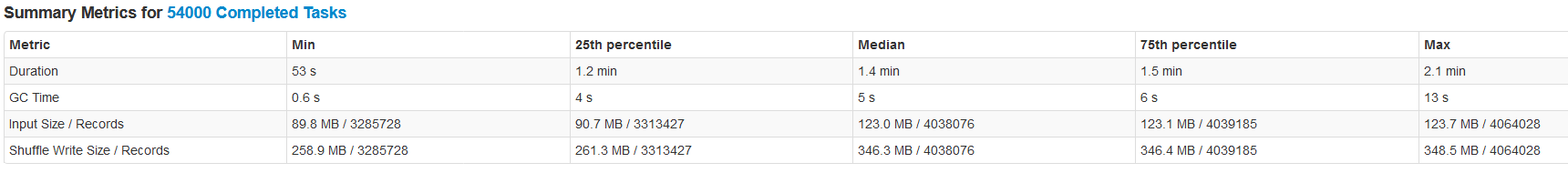

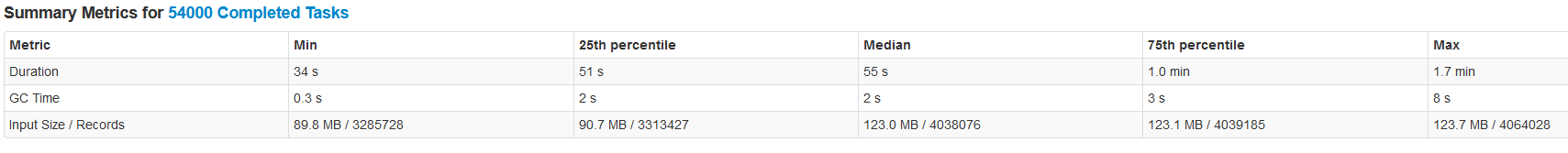

stages 4 & 6 seem to have the most skew.

**screenshots of stage details**

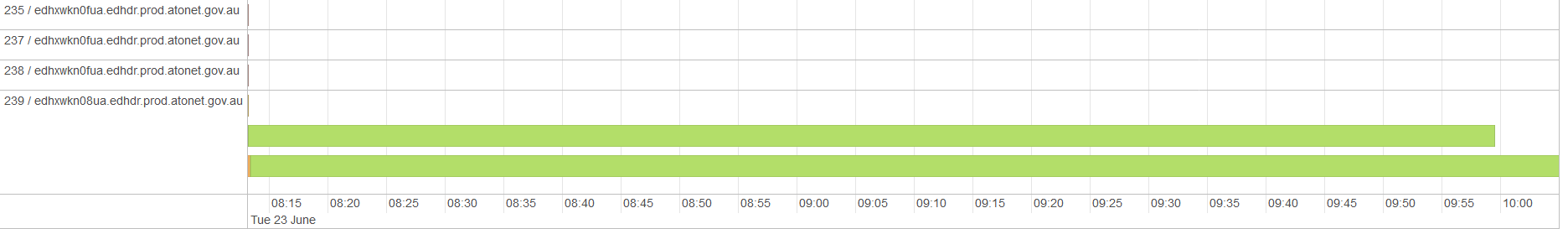

*stage 6

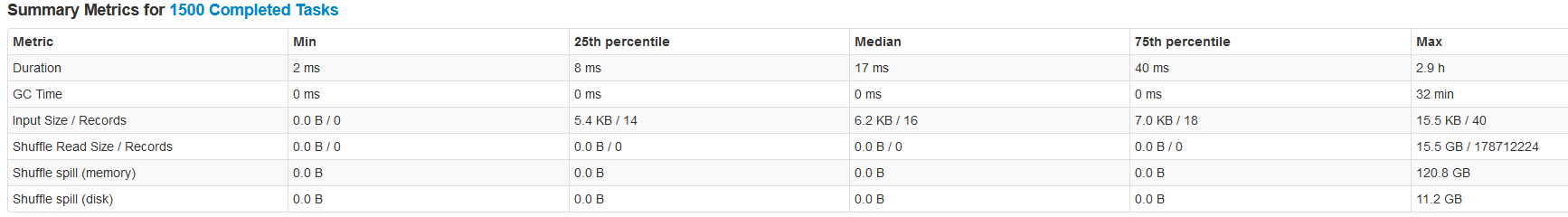

*stage 4

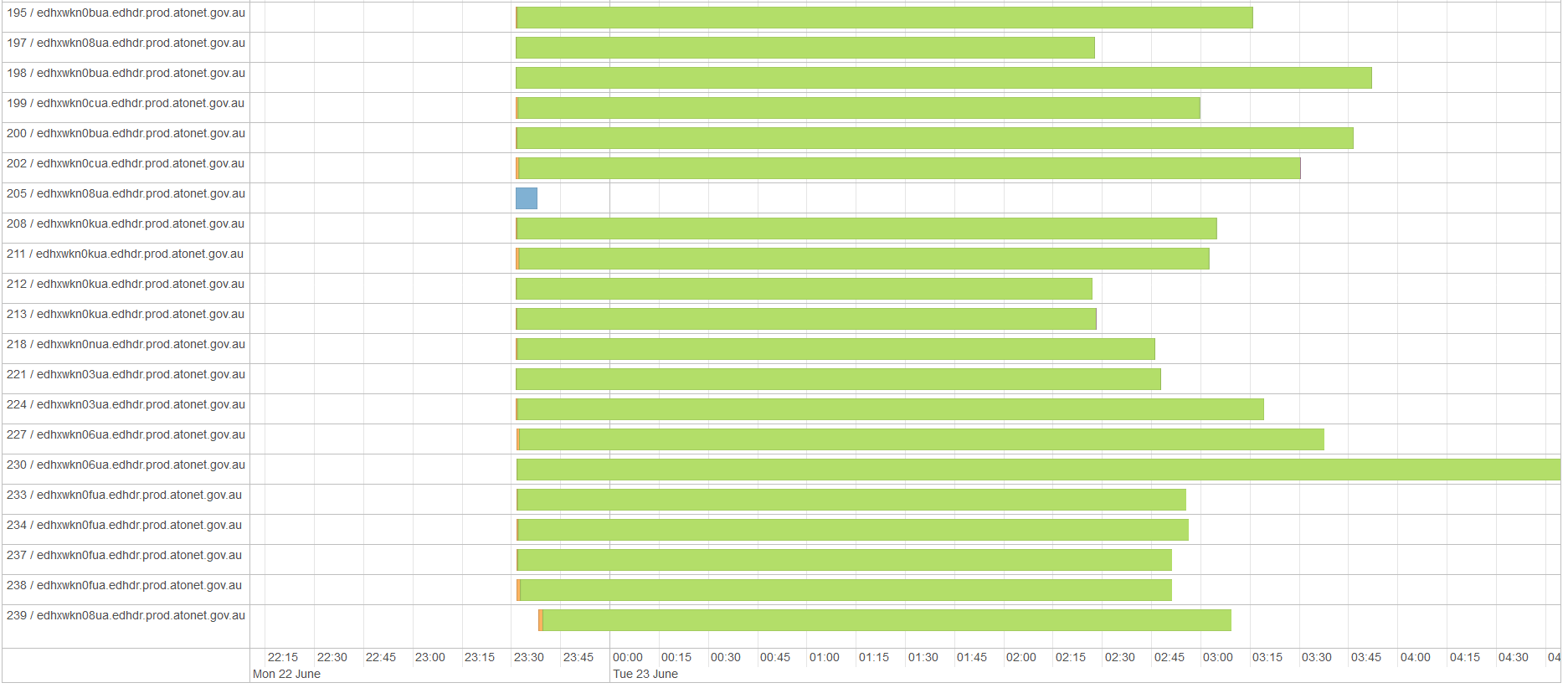

*stage 3

*stage 2

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-767492497

@somebol : can you please check Vinoth's response above and try it out. Let us know if you need more help. Hudi has some perf improvements specific to file listing in latest release that could help you.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] somebol commented on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

somebol commented on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-648381931

@vinothchandar are you able suggest some tweaks?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] somebol edited a comment on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

somebol edited a comment on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-648435649

stages 4 & 6 seem to have the most skew.

***screenshots of stage details***

**stage 6**

**stage 4**

**stage 3**

**stage 2**

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-813112202

Hudi also has an optimized version of bulk insert with row writing which is ~30 to 40% faster than regular bulk_insert. You can enable this by setting this config "hoodie.datasource.write.row.writer.enable" to true. Closing this due to no activity. But feel free to reach out to us if you need more help. Happy to assist you.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar edited a comment on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

vinothchandar edited a comment on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-648478687

> " hoodie.[insert|upsert|bulkinsert].shuffle.parallelism such that its atleast input_data_size/500MB

The reason for this was the 2GB limitation in Spark shuffle.. I see you are on Spark 2.4, which should work with larger than 2GB partitions.

Still worth trying to increase the parallelism to 10K , may be.. it will ensure that the memory needed for each partition is lower (spark's own datastructures).. Also notice how much data it's shuffle spilling.. In all your runs, GC time is very high.. stage 4 75th percentile, for e.g 45 mins out of 3.5h. Consider giving large heap and tune gc bit? https://cwiki.apache.org/confluence/display/HUDI/Tuning+Guide has a sample to try..

Looking at stage 4:

it's the actual write.. line [here](https://github.com/apache/hudi/blob/release-0.5.3/hudi-spark/src/main/scala/org/apache/hudi/HoodieSparkSqlWriter.scala#L262) triggers the DAG and counts the errors during write.. The workload distribution there is based on Spark sort's range partitioner.. seems like it does a reasonable job (2.5x swing between min and max records).. Guess it is what it is.. the sorting here is useful down the line as you perform upserts.. e.g if you have ordered keys , this pre-sort gives you very good index performance.

Looking at stage 6:

Seems like Spark lost a partition in the mean time and thus recomputed the RDD from scratch.. You can see one max task taking up 3hrs there.. My guess is its recomputing. if we make stage 4 more scalable, then I think this will also go away IMO.. Since shuffle uses local disk, I will also ensure cluster is big enough to hold the data needed for shuffle..

Btw all these are spark shuffle tuning, not Hudi specific per se.. and a 5.5TB shuffle is a good size :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-648396783

@somebol assuming this is an initial load and after this, you would do insert/upsert operations incrementally?

High level, `bulk_insert` does a sort and writes out the data. From what I can tell, you have sufficient parallelism. But a bunch of tasks are failing and retrying probably adds a bunch of time to the runs? (stage 2, 4). Could you look into how skewed task runtimes within those stages are?

P.S: We do incur the cost of Row -> GenericRecord -> Parquet (@nsivabalan has a branch with a fix, that will make it to 0.6.0)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-648478687

> " hoodie.[insert|upsert|bulkinsert].shuffle.parallelism such that its atleast input_data_size/500MB

The reason for this was the 2GB limitation in Spark shuffle.. I see you are on Spark 2.4, which should work get rid of this anyway.

Still worth trying to increase the parallelism to 10K , may be.. it will ensure that the memory needed for each partition is lower (spark's own datastructures).. Also notice how much data it's shuffle spilling.. In all your runs, GC time is very high.. stage 4 75th percentile, for e.g 45 mins out of 3.5h. Consider giving large heap and tune gc bit? https://cwiki.apache.org/confluence/display/HUDI/Tuning+Guide has a sample to try..

Looking at stage 4:

it's the actual write.. line [here](https://github.com/apache/hudi/blob/release-0.5.3/hudi-spark/src/main/scala/org/apache/hudi/HoodieSparkSqlWriter.scala#L262) triggers the DAG and counts the errors during write.. The workload distribution there is based on Spark sort's range partitioner.. seems like it does a reasonable job (2.5x swing between min and max records).. Guess it is what it is.. the sorting here is useful down the line as you perform upserts.. e.g if you have ordered keys , this pre-sort gives you very good index performance.

Looking at stage 6:

Seems like Spark lost a partition in the mean time and thus recomputed the RDD from scratch.. You can see one max task taking up 3hrs there.. My guess is its recomputing. if we make stage 4 more scalable, then I think this will also go away IMO.. Since shuffle uses local disk, I will also ensure cluster is big enough to hold the data needed for shuffle..

Btw all these are spark shuffle tuning, not Hudi specific per se.. and a 5.5TB shuffle is a good size :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] somebol commented on issue #1757: Slow Bulk Insert Performance [SUPPORT]

Posted by GitBox <gi...@apache.org>.

somebol commented on issue #1757:

URL: https://github.com/apache/hudi/issues/1757#issuecomment-648432948

@vinothchandar yes, this is an initial load and we plan to use upsert for incrementals. The task failures are mainly due to preemption.

would there be any benefit say increasing bulkimport parallelism to say ~10000?

The tuning guide mentions " `hoodie.[insert|upsert|bulkinsert].shuffle.parallelism` such that its atleast input_data_size/500MB" in our case the input data size is 5.5 TB, so by that calculation our parallelism should be around 11000.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org