Posted to dev@madlib.apache.org by GitBox <gi...@apache.org> on 2020/07/20 21:13:34 UTC

[GitHub] [madlib] Advitya17 opened a new pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Advitya17 opened a new pull request #506:

URL: https://github.com/apache/madlib/pull/506

JIRA: MADLIB-1439

The `load_model_selection_table` function requires the user to manually specify the grid of compile and fit params. Hence, we implement a function called `generate_model_selection_configs` (in the same module) to perform grid/random search.

The user declares the compile and fit param grids separately, as strings containing Python dictionaries, along with the name of the search algorithm and any corresponding arguments. The output format of the new function is the same as that of `load_model_selection_table`, so that it integrates with the other MADlib functions for model training etc.

This pull request includes the implementation, unit tests (in python and SQL) and documentation for the newly created function.
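
As a rough illustration of the grid case described above, the following sketch expands a dictionary of value lists into every combination. It is not the code in this pull request: the helper name `expand_grid` is hypothetical, and the real function also handles model ids and writes to a table.

```python
import itertools


def expand_grid(param_grid):
    """Return every combination of values from a dict of lists (hypothetical helper)."""
    keys = sorted(param_grid)  # fixed key order keeps the output deterministic
    value_lists = [param_grid[k] for k in keys]
    return [dict(zip(keys, combo)) for combo in itertools.product(*value_lists)]


compile_grid = {'loss': ['categorical_crossentropy'], 'optimizer': ['SGD', 'Adam']}
fit_grid = {'batch_size': [8, 32], 'epochs': [1]}

# Pair every compile combination with every fit combination.
configs = [(c, f) for c in expand_grid(compile_grid) for f in expand_grid(fit_grid)]
print(len(configs))
```

The number of configurations is the product of the list lengths, which is why random search is offered as an alternative for large grids.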

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [madlib] fmcquillan99 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-665993038

[GitHub] [madlib] khannaekta commented on a change in pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

khannaekta commented on a change in pull request #506:

URL: https://github.com/apache/madlib/pull/506#discussion_r465932779

##########

File path: src/ports/postgres/modules/deep_learning/madlib_keras_wrapper.py_in

##########

@@ -201,13 +201,14 @@ def parse_and_validate_compile_params(str_of_args):

"""

literal_eval_compile_params = ['metrics', 'loss_weights',

'weighted_metrics', 'sample_weight_mode']

- accepted_compile_params = literal_eval_compile_params + ['optimizer', 'loss']

+ accepted_compile_params = literal_eval_compile_params + ['optimizer', 'loss', 'optimizer_params_list']

compile_dict = convert_string_of_args_to_dict(str_of_args)

compile_dict = validate_and_literal_eval_keys(compile_dict,

literal_eval_compile_params,

accepted_compile_params)

- _assert('optimizer' in compile_dict, "optimizer is a required parameter for compile")

+ # _assert('optimizer' in compile_dict, "optimizer is a required parameter for compile")

Review comment:

Remove this commented-out code.

##########

File path: src/ports/postgres/modules/deep_learning/madlib_keras_model_selection.py_in

##########

@@ -442,28 +468,43 @@ class MstSearch():

if self.random_state:

np.random.seed(self.random_state+seed_changes)

seed_changes += 1

-

param_values = params_dict[cp]

-

- # sampling from a distribution

- if param_values[-1] in ['linear', 'log']:

- _assert(len(param_values) == 3,

- "DL: {0} should have exactly 3 elements if picking from a distribution".format(cp))

- _assert(param_values[1] > param_values[0],

- "DL: {0} should be of the format [lower_bound, uppper_bound, distribution_type]".format(cp))

- if param_values[-1] == 'linear':

- config_dict[cp] = np.random.uniform(param_values[0], param_values[1])

- elif param_values[-1] == 'log':

- config_dict[cp] = np.power(10, np.random.uniform(np.log10(param_values[0]),

- np.log10(param_values[1])))

- else:

- plpy.error("DL: Choose a valid distribution type! ('linear' or 'log')")

- # random sampling

+ if cp == ModelSelectionSchema.OPTIMIZER_PARAMS_LIST:

+ opt_dict = np.random.choice(param_values)

+ opt_combination = {}

+ for i in opt_dict:

+ opt_values = opt_dict[i]

+ if self.random_state:

+ np.random.seed(self.random_state+seed_changes)

+ seed_changes += 1

+ opt_combination[i] = self.sample_val(cp, opt_values)

+ config_dict[cp] = opt_combination

else:

- config_dict[cp] = np.random.choice(params_dict[cp])

-

+ config_dict[cp] = self.sample_val(cp, param_values)

return config_dict, seed_changes

+ def sample_val(self, cp, param_value_list):

Review comment:

It would be good to add a comment describing what this function does.

##########

File path: src/ports/postgres/modules/deep_learning/test/unit_tests/test_madlib_keras_model_selection_table.py_in

##########

@@ -77,6 +373,8 @@ class LoadModelSelectionTableTestCase(unittest.TestCase):

"batch_size=10,epochs=1"

]

+ # plpy.execute("SELECT * FROM invalid_table;")

Review comment:

We can remove this commented-out code.

[GitHub] [madlib] fmcquillan99 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-665993038

errors and issues

(1)

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'linear']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

5, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);

```

produces

```

InternalError: (psycopg2.errors.InternalError_) TypeError: cannot concatenate 'str' and 'float' objects (plpython.c:5038)

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 21, in <module>

mst_loader = madlib_keras_model_selection.MstSearch(**globals())

PL/Python function "generate_model_selection_configs", line 42, in wrapper

PL/Python function "generate_model_selection_configs", line 287, in __init__

PL/Python function "generate_model_selection_configs", line 426, in find_random_combinations

PL/Python function "generate_model_selection_configs", line 490, in generate_row_string

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'lr': [0.0001, 0.1, 'linear']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

5, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/2j85)

```

Likewise

```

DROP TABLE IF EXISTS mst_table, mst_table_summary;

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'log'],

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

1, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

produces

```

InternalError: (psycopg2.errors.InternalError_) TypeError: cannot concatenate 'str' and 'numpy.float64' objects (plpython.c:5038)

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 21, in <module>

mst_loader = madlib_keras_model_selection.MstSearch(**globals())

PL/Python function "generate_model_selection_configs", line 42, in wrapper

PL/Python function "generate_model_selection_configs", line 287, in __init__

PL/Python function "generate_model_selection_configs", line 426, in find_random_combinations

PL/Python function "generate_model_selection_configs", line 490, in generate_row_string

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'log'],

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

1, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/2j85)

```

(2)

For search_type = 'grid' or 'random', the user should be able to enter part of the string, e.g., 'rand' for random or 'g' for grid. There is a MADlib function that supports this.
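
To make the request concrete, here is a minimal sketch of prefix matching. The helper `resolve_search_type` is hypothetical and stands in for the existing MADlib utility mentioned above, whose name and signature are not given in this thread.

```python
def resolve_search_type(user_value, choices=('grid', 'random')):
    """Map a case-insensitive prefix such as 'rand' or 'g' to the full choice.

    Hypothetical helper; raises ValueError where MADlib would report an error.
    """
    prefix = user_value.strip().lower()
    matches = [c for c in choices if prefix and c.startswith(prefix)]
    if len(matches) != 1:
        raise ValueError("DL: 'search_type' must be either 'grid' or 'random'")
    return matches[0]


print(resolve_search_type('rand'))
print(resolve_search_type('G'))
```

An empty string or a prefix that matches no choice is rejected rather than silently defaulting.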

(3)

Change the name of the function from `generate_model_selection_configs` to `generate_model_configs`.

(4)

Remove exclamation marks (!) and random capitalization from error messages. Suggested messages:

"DL: 'num_configs' and 'random_state' must be NULL for grid search"

"DL: Cannot search from a distribution with grid search"

"DL: 'num_configs' cannot be NULL for random search"

"DL: 'search_type' must be either 'grid' or 'random'"

"DL: Please choose a valid distribution type ('linear' or 'log')"

"DL: {0} should be of the format [lower_bound, upper_bound, distribution_type]"
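
A sketch of where the first three messages might be raised, assuming the checks run before any sampling. The function `validate_search_args` is hypothetical, and `ValueError` stands in for the `plpy.error` call used inside MADlib.

```python
def validate_search_args(search_type, num_configs, random_state, param_grid):
    """Check argument combinations using the suggested messages (hypothetical helper)."""
    if search_type == 'grid':
        if num_configs is not None or random_state is not None:
            raise ValueError(
                "DL: 'num_configs' and 'random_state' must be NULL for grid search")
        for values in param_grid.values():
            # A trailing 'linear' or 'log' marks a distribution, not a discrete list.
            if values and values[-1] in ('linear', 'log'):
                raise ValueError(
                    "DL: Cannot search from a distribution with grid search")
    elif search_type == 'random':
        if num_configs is None:
            raise ValueError("DL: 'num_configs' cannot be NULL for random search")
    else:
        raise ValueError("DL: 'search_type' must be either 'grid' or 'random'")


validate_search_args('grid', None, None, {'lr': [0.1, 0.2]})   # accepted
validate_search_args('random', 5, None, {'lr': [0.1, 0.2, 'log']})  # accepted
```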

(5)

In addition to `linear` sampling and `log` sampling, we should add another type called `log_near_one`:

```

config_dict[cp] = 1.0 - np.power(10, np.random.uniform(np.log10(1.0 - param_values[1]), np.log10(1.0 - param_values[0])))

```

This type of sampling is useful for exponentially weighted average type params like momentum, which are very sensitive to changes near 1. It has the effect of producing more values near 1 than regular log sampling.

For example, momentum values in the range [0.9000, 0.9005] average roughly the previous 10 values wherever you are in the range (no real difference), but momentum values in the range [0.9990, 0.9995] average roughly the previous 1000 values at the left end and the previous 2000 values at the right end (a big difference), so you want to generate more samples nearer to the right end to get better coverage.
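
The three sampling types can be compared side by side with the sketch below. It uses the standard-library `random` and `math` modules in place of the `np.random.uniform` and `np.log10` calls in the PR so that it runs without NumPy; the function name `sample_param` and the extended error message are assumptions for illustration.

```python
import math
import random


def sample_param(lower, upper, dist, rng=random):
    """Draw one value from [lower, upper] using the named distribution type."""
    if not upper > lower:
        raise ValueError("bounds should be of the format [lower_bound, upper_bound]")
    if dist == 'linear':
        return rng.uniform(lower, upper)
    if dist == 'log':
        # Uniform in log10 space, so each decade is equally likely.
        return 10 ** rng.uniform(math.log10(lower), math.log10(upper))
    if dist == 'log_near_one':
        # Log-uniform in (1 - x), which concentrates samples close to 1.
        return 1.0 - 10 ** rng.uniform(math.log10(1.0 - upper),
                                       math.log10(1.0 - lower))
    raise ValueError("DL: Please choose a valid distribution type "
                     "('linear', 'log' or 'log_near_one')")


rng = random.Random(0)
near_one = [sample_param(0.9, 0.999, 'log_near_one', rng) for _ in range(1000)]
linear = [sample_param(0.9, 0.999, 'linear', rng) for _ in range(1000)]
print(sum(v > 0.99 for v in near_one), sum(v > 0.99 for v in linear))
```

For the range [0.9, 0.999], `1 - x` spans two decades (0.1 down to 0.001), so about half of the `log_near_one` samples land above 0.99, whereas linear sampling puts only about 9% of them there.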

(6)

```

DROP TABLE IF EXISTS mst_table, mst_table_summary;

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['Adam'],

'lr': [0.9, 0.95, 'log'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

followed by

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['SGD'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

produces

```

IntegrityError: (psycopg2.errors.UniqueViolation) plpy.SPIError: duplicate key value violates unique constraint "mst_table_model_id_key" (seg0 10.128.0.41:40000 pid=22297)

DETAIL: Key (model_id, compile_params, fit_params)=(1, optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy', epochs=12,batch_size=32) already exists.

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 22, in <module>

mst_loader.load()

PL/Python function "generate_model_selection_configs", line 313, in load

PL/Python function "generate_model_selection_configs", line 566, in insert_into_mst_table

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs( 'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['SGD'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/gkpj)

```

But it only produced the error every second time I ran this, i.e., the first pass would work and then the second pass would throw the error.

When it does pass, it produces

```

mst_key | model_id | compile_params | fit_params

---------+----------+----------------------------------------------------------------------------------------------+--------------------------

1 | 1 | optimizer='Adam(lr=0.9063214445649174)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=10,batch_size=256

2 | 1 | optimizer='Adam(lr=0.9367722192055232)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=5,batch_size=256

3 | 1 | optimizer='Adam(lr=0.9212048311857509)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=32

4 | 1 | optimizer='Adam(lr=0.9193149125403647)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=3,batch_size=256

5 | 1 | optimizer='Adam(lr=0.9326284661833211)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=256

6 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=10,batch_size=256

7 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=5,batch_size=8

8 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=1024

9 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=3,batch_size=32

10 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=12,batch_size=8

(10 rows)

```

Is `optimizer='SGD()'...` correct, or should it be `optimizer='SGD'...`?
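
The unique-constraint violation above is what one would expect if a randomly sampled `(model_id, compile_params, fit_params)` row collides with a row already in the table or with another row in the same batch. One possible guard, sketched below under that assumption, is to reject collisions while sampling. This is not taken from the PR: `sample_unique_configs` and its arguments are hypothetical.

```python
import random


def sample_unique_configs(model_ids, compile_choices, fit_choices, num_configs,
                          existing_keys=(), rng=random, max_attempts=1000):
    """Sample up to num_configs rows that collide with neither existing nor new rows."""
    seen = set(existing_keys)
    new_rows = []
    attempts = 0
    while len(new_rows) < num_configs and attempts < max_attempts:
        attempts += 1
        row = (rng.choice(model_ids), rng.choice(compile_choices),
               rng.choice(fit_choices))
        if row not in seen:  # skip anything already present in the table or batch
            seen.add(row)
            new_rows.append(row)
    return new_rows


existing = {(1, "optimizer='SGD()'", 'epochs=12,batch_size=32')}
rows = sample_unique_configs(
    [1], ["optimizer='SGD()'"],
    ['epochs=12,batch_size=32', 'epochs=5,batch_size=8'],
    num_configs=5, existing_keys=existing, rng=random.Random(0))
print(rows)
```

The attempt cap means the function may return fewer rows than requested when the search space is nearly exhausted, which the caller would need to handle.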

(7)

Not all sub-params apply to all params. For example, for optimizer, `lr` and `decay` might only apply to certain optimizer types and not others:

```

optimizer='SGD'

optimizer='rmsprop(lr=0.0001, decay=1e-6)'

optimizer='adam(lr=0.0001)'

```

In the previous method we accounted for that by doing:

```

SELECT madlib.load_model_selection_table('model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

ARRAY[ -- compile params

$$loss='categorical_crossentropy',optimizer='rmsprop(lr=0.0001, decay=1e-6)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='rmsprop(lr=0.001, decay=1e-6)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='adam(lr=0.0001)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='adam(lr=0.001)',metrics=['accuracy']$$

],

ARRAY[ -- fit params

$$batch_size=64,epochs=5$$,

$$batch_size=128,epochs=5$$

]

);

```

But how do we do this in the new method `generate_model_configs`? You could call it multiple times and incrementally build up the `mst_table`, but when autoML methods call this function we need to support doing it in one shot. I would suggest nested dictionaries like:

```

SELECT madlib.generate_model_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'my_list': [

{'optimizer': ['SGD', 'Adagrad']},

{'optimizer': ['rmsprop'], 'lr': [0.9, 0.95, 'log'], 'decay': [1e-6, 1e-4, 'log']},

{'optimizer': ['Adam'], 'lr': [0.99, 0.995, 'log']}

],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

```
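
The nested-dictionary proposal could be sampled roughly as follows: pick one group from the list, then sample each sub-param only within that group, so `lr` and `decay` never leak onto an optimizer that was not paired with them. This is a sketch, not the merged implementation; the diff earlier in the thread names the key `optimizer_params_list`, while `sample_value` and `sample_optimizer` are hypothetical names. The `Name(...)` output format mirrors the `mst_table` rows shown above without settling the `'SGD()'` versus `'SGD'` question.

```python
import math
import random


def sample_value(values, rng):
    """Sample from [lower, upper, 'linear'|'log'], else pick from a discrete list."""
    if len(values) == 3 and values[-1] in ('linear', 'log'):
        lower, upper, dist = values
        if dist == 'linear':
            return rng.uniform(lower, upper)
        return 10 ** rng.uniform(math.log10(lower), math.log10(upper))
    return rng.choice(values)


def sample_optimizer(optimizer_params_list, rng=random):
    """Choose one optimizer group, then sample only that group's sub-params."""
    group = rng.choice(optimizer_params_list)
    name = sample_value(group['optimizer'], rng)
    args = ','.join('{0}={1}'.format(key, sample_value(group[key], rng))
                    for key in sorted(group) if key != 'optimizer')
    return '{0}({1})'.format(name, args)


groups = [
    {'optimizer': ['SGD', 'Adagrad']},
    {'optimizer': ['rmsprop'], 'lr': [0.9, 0.95, 'log'], 'decay': [1e-6, 1e-4, 'log']},
    {'optimizer': ['Adam'], 'lr': [0.99, 0.995, 'log']},
]
print(sample_optimizer(groups, random.Random(0)))
```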

[GitHub] [madlib] Advitya17 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-669530020

@khannaekta I have made the changes specified above.

[GitHub] [madlib] Advitya17 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-668955356

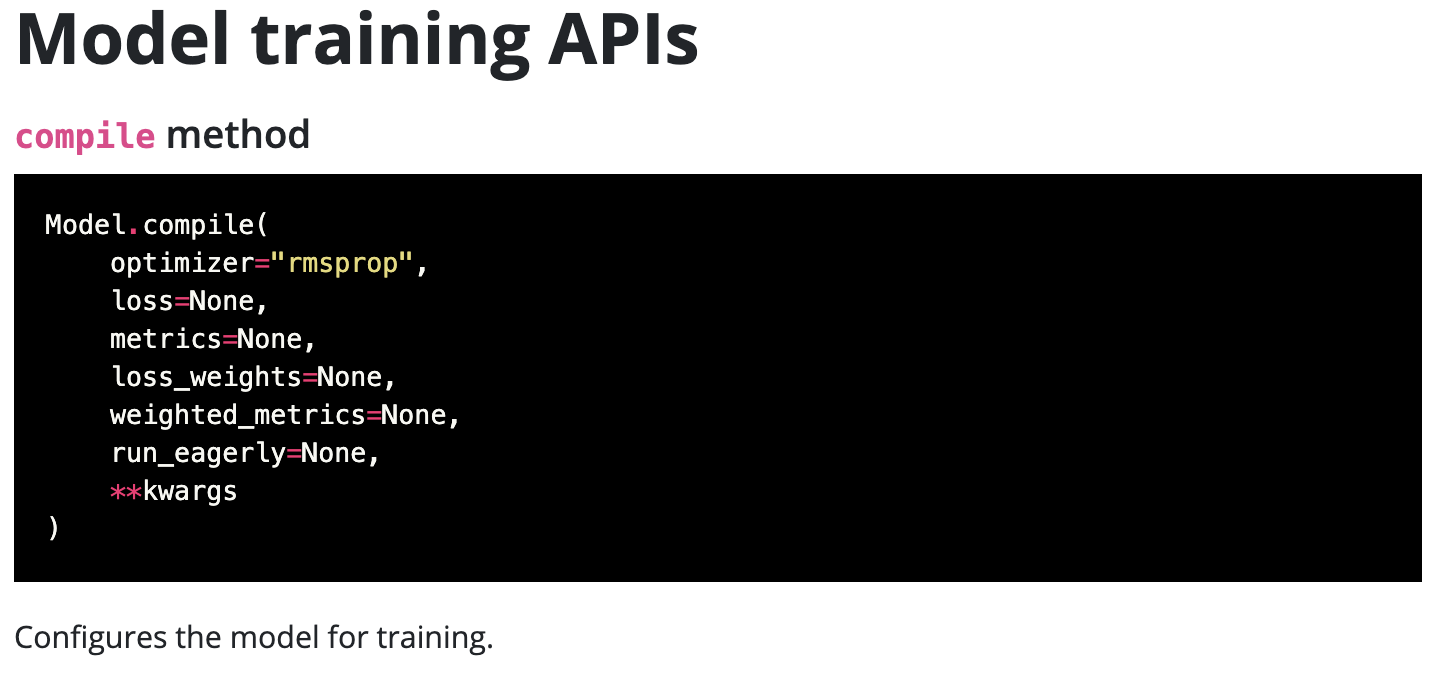

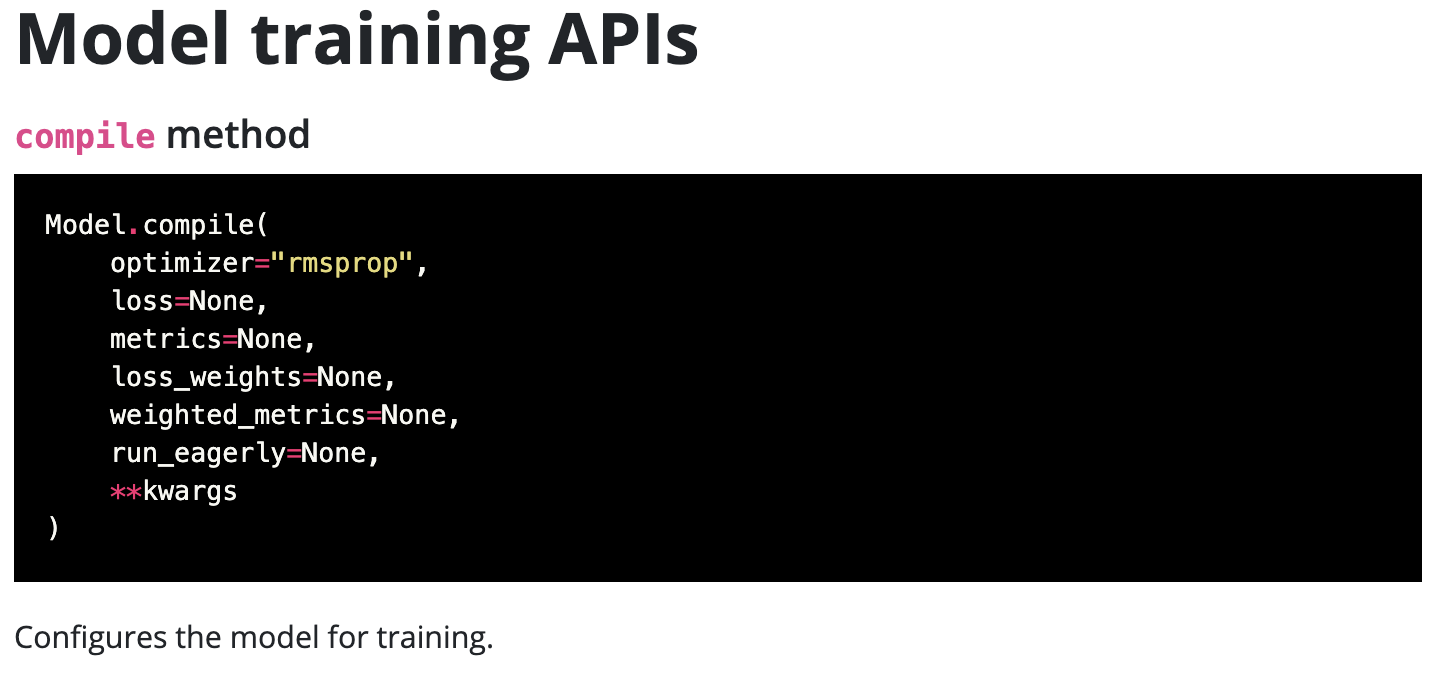

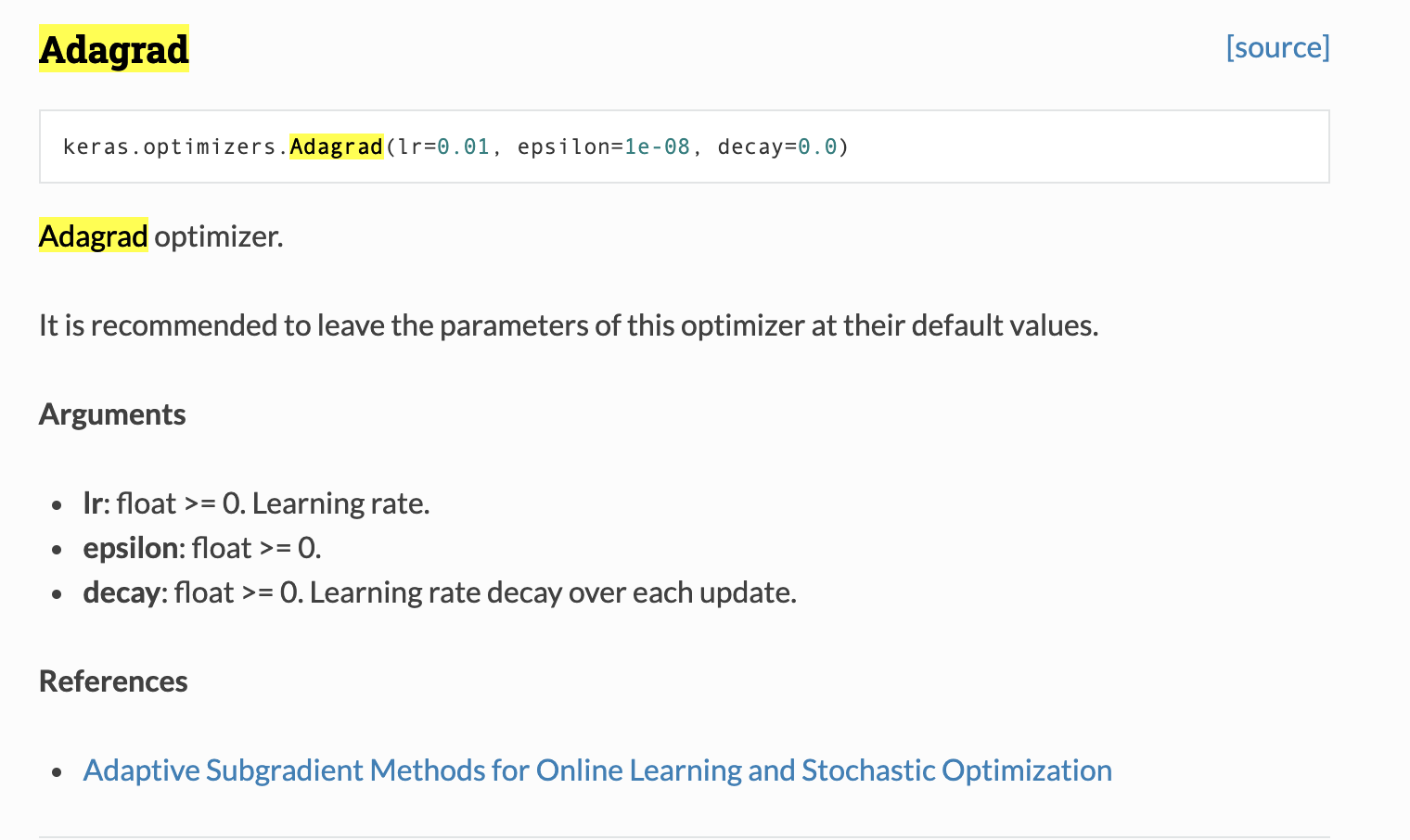

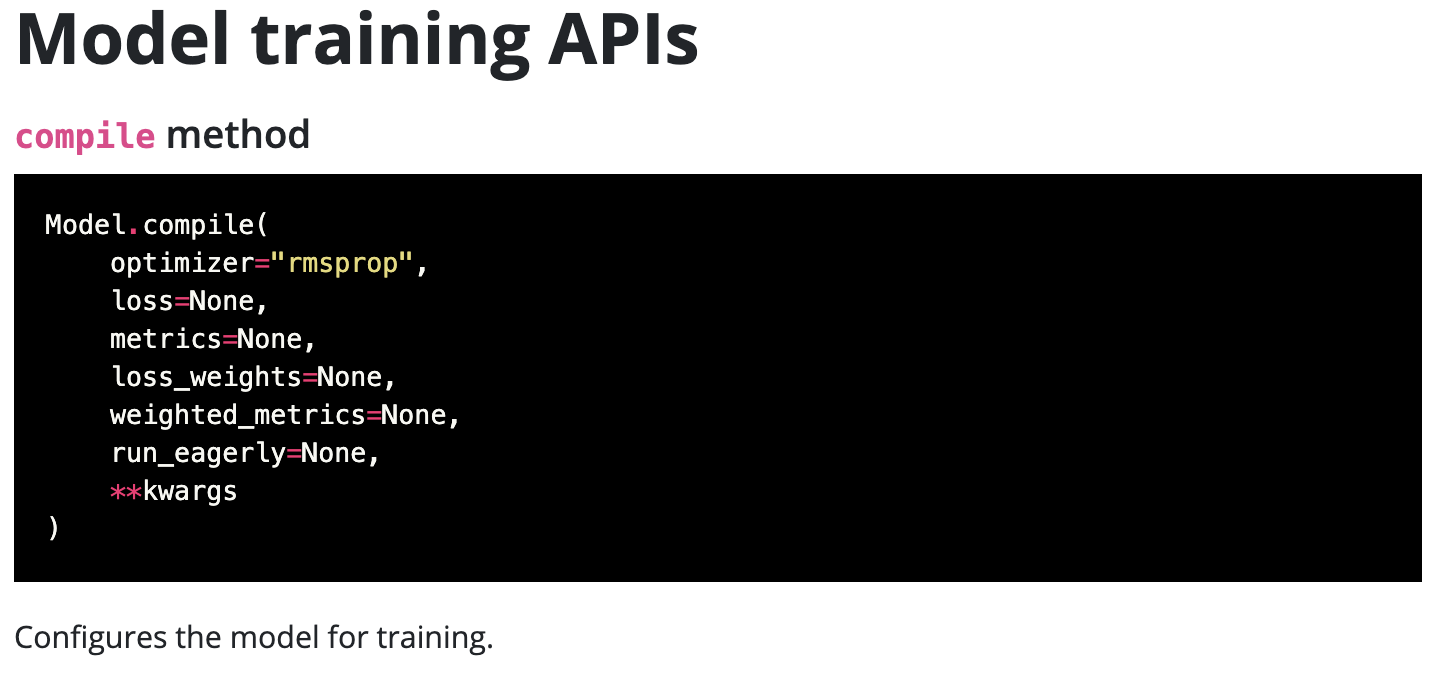

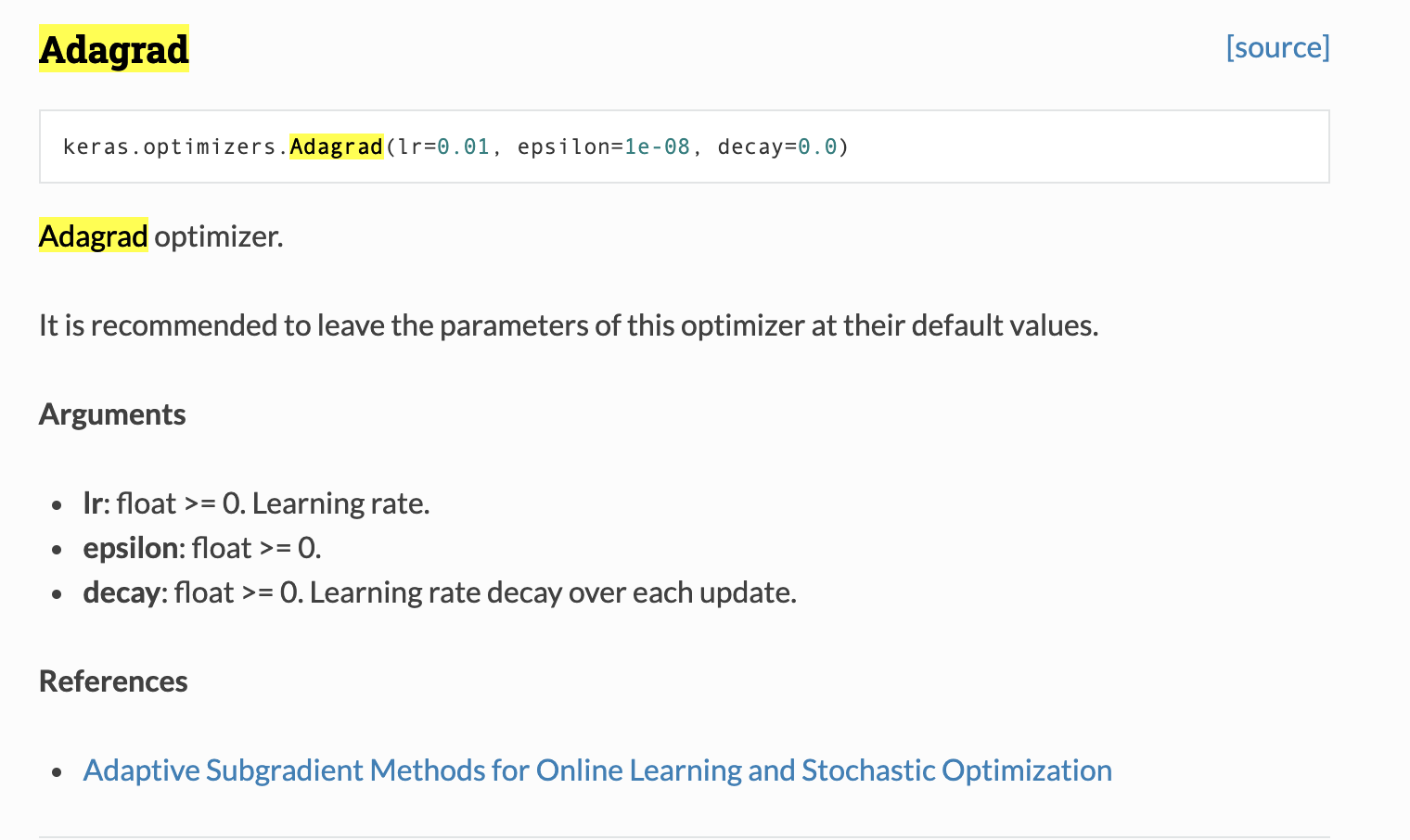

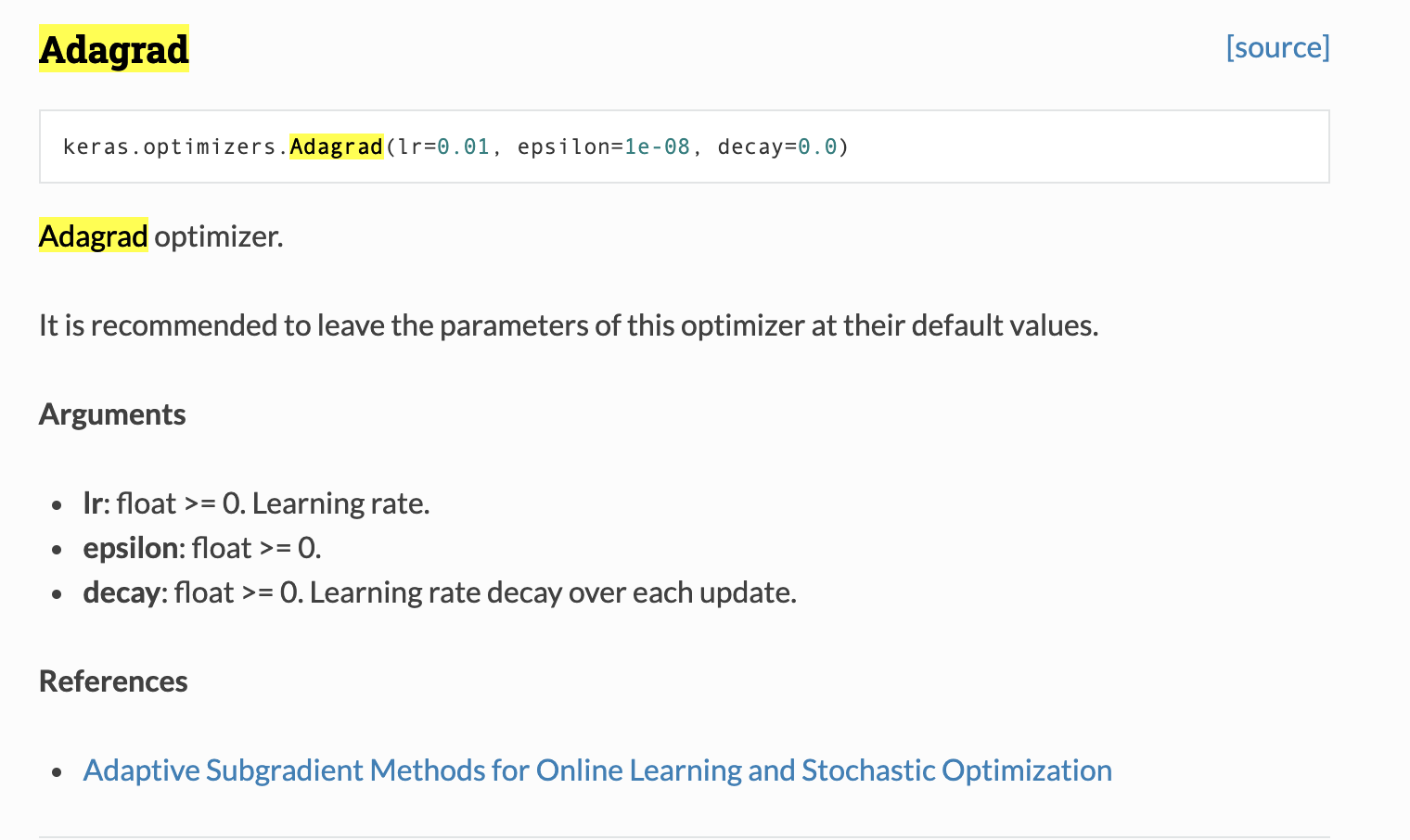

According to the Keras documentation, the optimizer appears to have a default value as well (rmsprop, rather than the SGD I chose manually).

From my understanding of the documentation, an optimizer is required to compile a Keras model, but Keras already seems to fall back to a default optimizer (with default params) when the user does not specify one.

Please correct me if I missed anything or didn't understand it right.

[GitHub] [madlib] Advitya17 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-668955356

According to the Keras documentation, the optimizer appears to have a default value as well (rmsprop, rather than the SGD I chose manually).

From my understanding of the documentation, an optimizer is required to compile a Keras model, but Keras already seems to fall back to a default optimizer (with default params) when the user does not specify one. Please correct me if I missed anything or didn't understand it right.

[GitHub] [madlib] Advitya17 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-668949266

(8) I assume it's the right syntax (as part of the keras documentation)

(9) Yes, I currently have SGD as the default when the user wishes to tune any optimizer params but doesn't specify an optimizer to tune.

Does that help?

[GitHub] [madlib] Advitya17 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-669330584

I have switched the default optimizer from SGD to RMSprop.

[GitHub] [madlib] Advitya17 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-669530020

@khannaekta I have made the changes specified above.

[GitHub] [madlib] orhankislal merged pull request #506: DL: Add grid/random search for model selection with `generate_model_configs`

Posted by GitBox <gi...@apache.org>.

orhankislal merged pull request #506:

URL: https://github.com/apache/madlib/pull/506

[GitHub] [madlib] fmcquillan99 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-665993038

errors and issues

(1)

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'linear']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

5, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

```

produces

```

InternalError: (psycopg2.errors.InternalError_) TypeError: cannot concatenate 'str' and 'float' objects (plpython.c:5038)

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 21, in <module>

mst_loader = madlib_keras_model_selection.MstSearch(**globals())

PL/Python function "generate_model_selection_configs", line 42, in wrapper

PL/Python function "generate_model_selection_configs", line 287, in __init__

PL/Python function "generate_model_selection_configs", line 426, in find_random_combinations

PL/Python function "generate_model_selection_configs", line 490, in generate_row_string

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'lr': [0.0001, 0.1, 'linear']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

5, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/2j85)

```

Likewise

```

DROP TABLE IF EXISTS mst_table, mst_table_summary;

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'log'],

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

1, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

produces

```

InternalError: (psycopg2.errors.InternalError_) TypeError: cannot concatenate 'str' and 'numpy.float64' objects (plpython.c:5038)

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 21, in <module>

mst_loader = madlib_keras_model_selection.MstSearch(**globals())

PL/Python function "generate_model_selection_configs", line 42, in wrapper

PL/Python function "generate_model_selection_configs", line 287, in __init__

PL/Python function "generate_model_selection_configs", line 426, in find_random_combinations

PL/Python function "generate_model_selection_configs", line 490, in generate_row_string

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'log'],

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

1, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/2j85)

```

(2)

For search_type = 'grid' or 'random', use should be able to enter part of the string, e.g., 'rand' for random or 'g' for for grid. There is a MADlib function that supports this.

(3)

change the name of the function from `generate_model_selection_configs`

to `generate_model_configs`

(4)

remove exclamations ! from error messages and random capitalization. Suggested messages:

"DL: 'num_configs' and 'random_state' must be NULL for grid search"

"DL: Cannot search from a distribution with grid search"

"DL: 'num_configs' cannot be NULL for random search"

"DL: 'search_type' must be either 'grid' or 'random'"

"DL: Please choose a valid distribution type ('linear' or 'log')"

"DL: {0} should be of the format [lower_bound, upper_bound, distribution_type]"

(5)

In addition to `linear` sampling and `log` sampling we should add another type

called `log_near_one`

```

config_dict[cp] = 1.0 - np.power(10, np.random.uniform (np.log10 (1.0 - param_values[1]), np.log10(1.0 - param_values[0]) ) )

```

This type of sampling is useful for exponentially weighted average type params like momentum, which are very sensitive to changes near 1. It has the effect of producing more values near 1 than regular log sampling.

e.g.

momentum values in range [0.9000, 0.9005] average the prev 10 values no matter where you are in the range (no diff)

but

momentum values in range [0.9990, 0.9995] average the prev 1000 values for the left side and prev 2000 values for the right side (big diff), so you want to generate more samples nearer to the right side to get better coverage.

(6)

```

DROP TABLE IF EXISTS mst_table, mst_table_summary;

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['Adam'],

'lr': [0.9, 0.95, 'log'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

followed by

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['SGD'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

produces

```

IntegrityError: (psycopg2.errors.UniqueViolation) plpy.SPIError: duplicate key value violates unique constraint "mst_table_model_id_key" (seg0 10.128.0.41:40000 pid=22297)

DETAIL: Key (model_id, compile_params, fit_params)=(1, optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy', epochs=12,batch_size=32) already exists.

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 22, in <module>

mst_loader.load()

PL/Python function "generate_model_selection_configs", line 313, in load

PL/Python function "generate_model_selection_configs", line 566, in insert_into_mst_table

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs( 'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['SGD'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/gkpj)

```

But it only produced the error every second time I ran this, i.e., the first pass would work and the second pass would throw the error.

When it does pass, it produces

```

mst_key | model_id | compile_params | fit_params

---------+----------+----------------------------------------------------------------------------------------------+--------------------------

1 | 1 | optimizer='Adam(lr=0.9063214445649174)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=10,batch_size=256

2 | 1 | optimizer='Adam(lr=0.9367722192055232)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=5,batch_size=256

3 | 1 | optimizer='Adam(lr=0.9212048311857509)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=32

4 | 1 | optimizer='Adam(lr=0.9193149125403647)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=3,batch_size=256

5 | 1 | optimizer='Adam(lr=0.9326284661833211)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=256

6 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=10,batch_size=256

7 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=5,batch_size=8

8 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=1024

9 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=3,batch_size=32

10 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=12,batch_size=8

(10 rows)

```

is `optimizer='SGD()'...` correct or should it be `optimizer='SGD'...` ?

(7)

Not all sub-params apply to all params. For example, for optimizer, `lr` and `decay` might only apply to certain optimizer types and not others:

```

optimizer='SGD'

optimizer='rmsprop(lr=0.0001, decay=1e-6)'

optimizer='adam(lr=0.0001)'

```

In the previous method we accounted for that by doing:

```

SELECT madlib.load_model_selection_table('model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

ARRAY[ -- compile params

$$loss='categorical_crossentropy',optimizer='rmsprop(lr=0.0001, decay=1e-6)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='rmsprop(lr=0.001, decay=1e-6)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='adam(lr=0.0001)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='adam(lr=0.001)',metrics=['accuracy']$$

],

ARRAY[ -- fit params

$$batch_size=64,epochs=5$$,

$$batch_size=128,epochs=5$$

]

);

```

but how do we do this in the new method `generate_model_configs`? You could call it multiple times and incrementally build up the `mst_table` but when autoML methods call this function we need to support a 1-shot manner. I would suggest nested dictionaries like:

```

SELECT madlib.generate_model_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'my_list': [

{'optimizer': ['SGD', 'Adagrad']},

{'optimizer': ['rmsprop'], 'lr': [0.9, 0.95, 'log'], 'decay': [1e-6, 1e-4, 'log']},

{'optimizer': ['Adam'], 'lr': [0.99, 0.995, 'log']}

],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

```

So I think we should support both single dictionary and nested dictionary syntax.
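
One way the nested syntax could be handled is to expand it into a list of ordinary flat grids before searching. The sketch below assumes the nested entries live under a key called `my_list` (the placeholder name used in the example above); the helper `expand_nested_grid` is hypothetical and not part of MADlib.

```python
import ast


def expand_nested_grid(grid_text, nested_key="my_list"):
    """Turn a grid string with one nested list of dicts into flat grids."""
    grid = ast.literal_eval(grid_text.strip())
    # Params outside the nested list apply to every branch.
    shared = {k: v for k, v in grid.items() if k != nested_key}
    branches = grid.get(nested_key)
    if branches is None:
        return [shared]  # plain single-dictionary syntax
    flat_grids = []
    for branch in branches:
        merged = dict(shared)
        merged.update(branch)  # branch-specific params such as lr or decay
        flat_grids.append(merged)
    return flat_grids


compile_param_grid = """
{ 'loss': ['categorical_crossentropy'],
  'my_list': [
      {'optimizer': ['SGD', 'Adagrad']},
      {'optimizer': ['rmsprop'], 'lr': [0.9, 0.95, 'log'], 'decay': [1e-6, 1e-4, 'log']},
      {'optimizer': ['Adam'], 'lr': [0.99, 0.995, 'log']}
  ],
  'metrics': ['accuracy']
}
"""

flat = expand_nested_grid(compile_param_grid)
for g in flat:
    print(sorted(g))
```

Each flat grid can then go through the existing single-dictionary path, so `lr` and `decay` are only ever paired with the optimizers they were declared with.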

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [madlib] fmcquillan99 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 edited a comment on pull request #506:

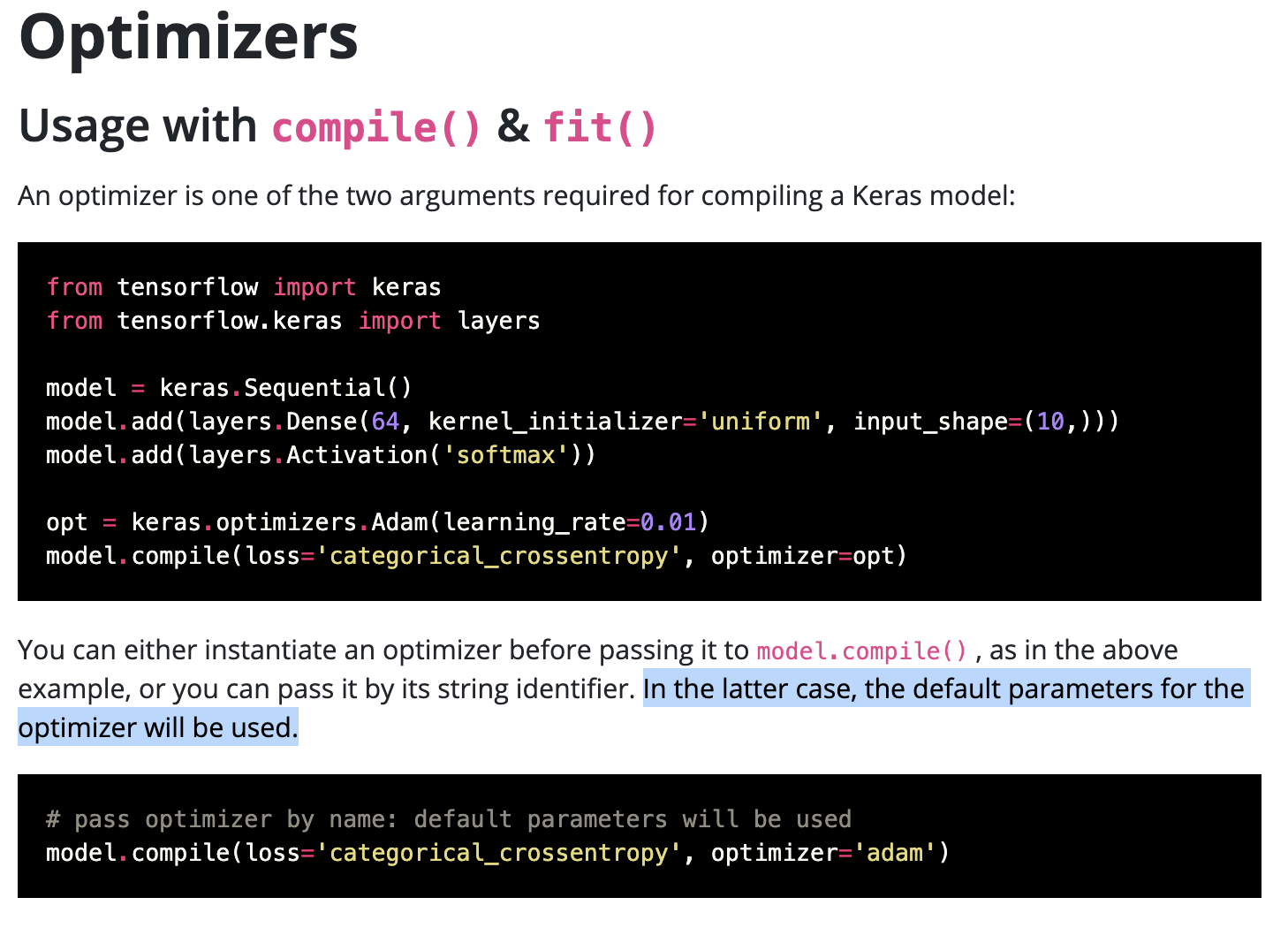

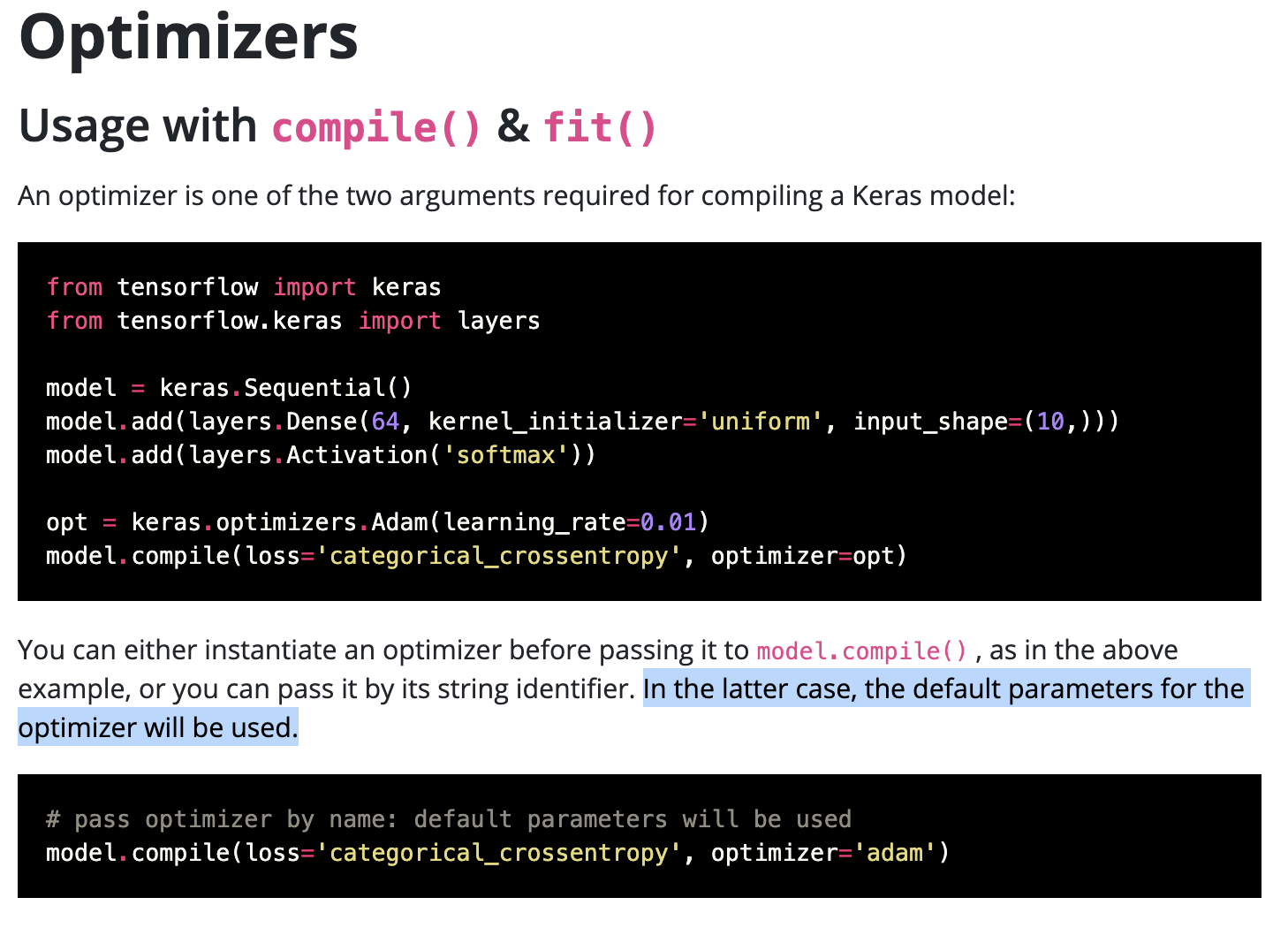

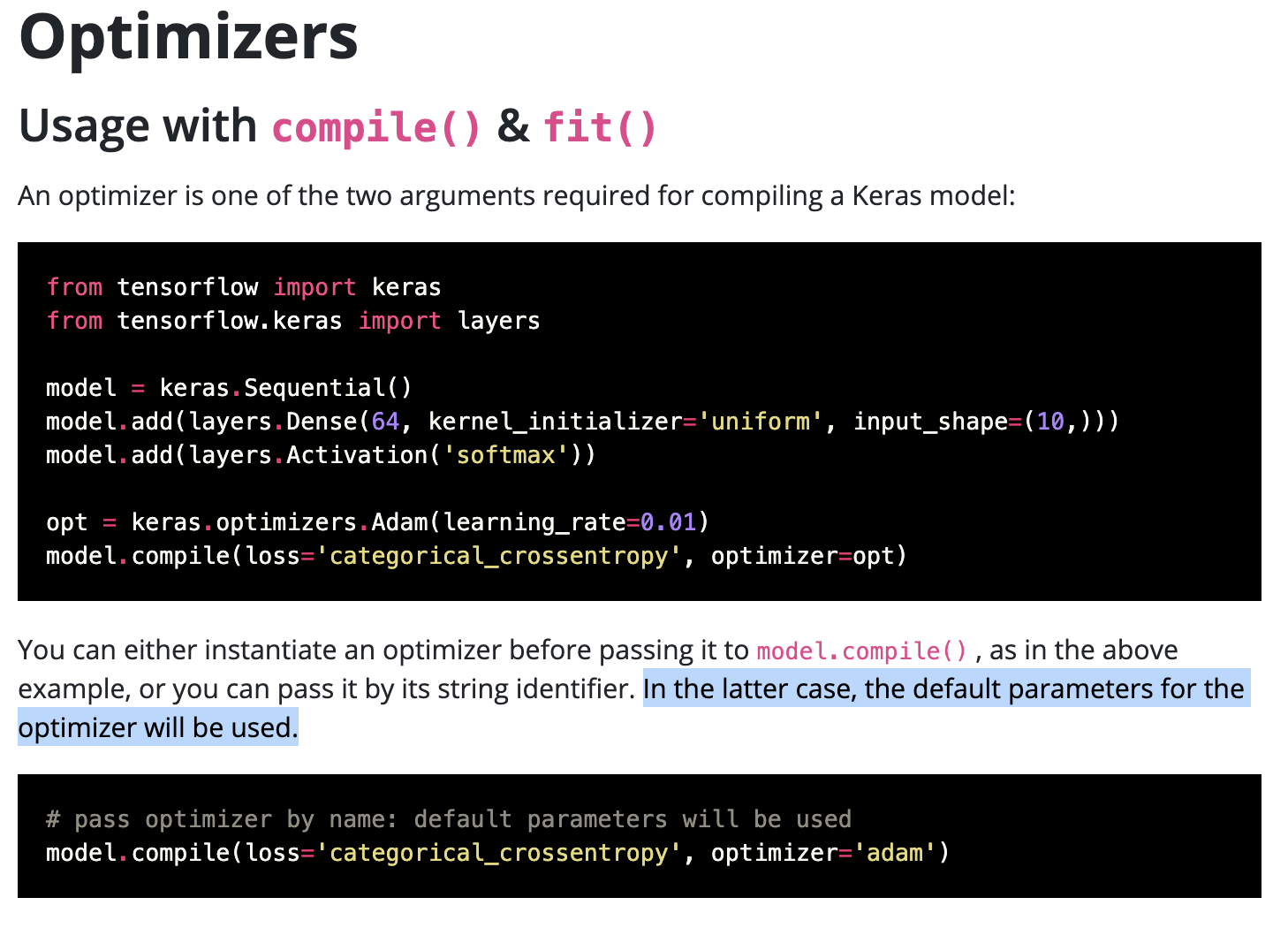

URL: https://github.com/apache/madlib/pull/506#issuecomment-668952722

for (9)

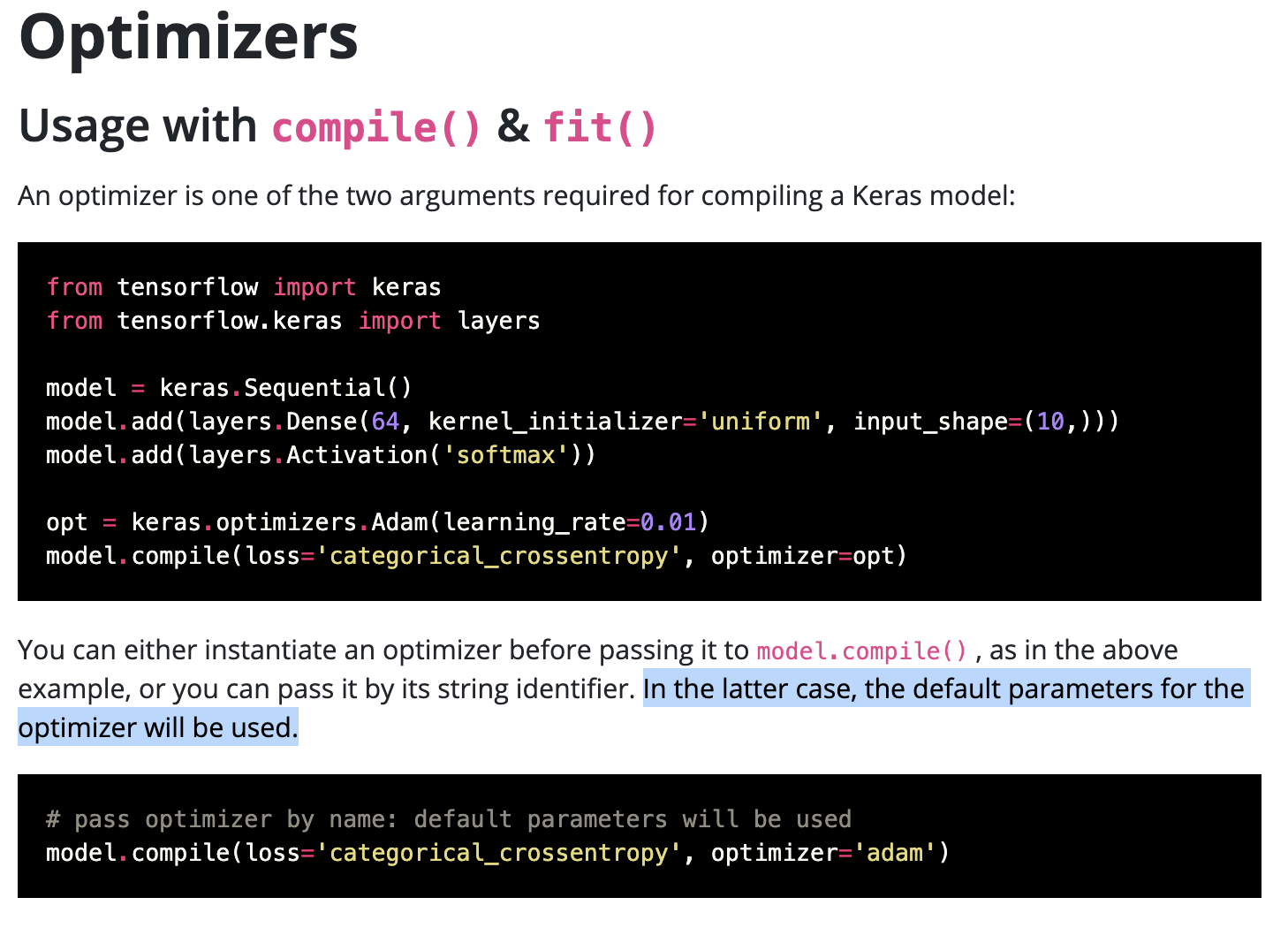

Keras docs say that optimizer and loss function are mandatory params, so I don't think we should put SGD if user does not specify an optimizer. We should let it fail, no?

https://keras.io/api/optimizers/

`An optimizer is one of the two arguments required for compiling a Keras model`
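
A rough sketch of what "let it fail" could look like on the MADlib side. The helper name, the set of optimizer sub-params and the error text are all assumptions made for illustration.

```python
# Assumed, illustrative set of params that only make sense with an optimizer.
OPTIMIZER_SUB_PARAMS = {"lr", "decay", "momentum"}


def check_optimizer_present(compile_grid):
    """Raise if optimizer sub-params are given without an 'optimizer' entry."""
    sub_params = sorted(OPTIMIZER_SUB_PARAMS.intersection(compile_grid))
    if sub_params and "optimizer" not in compile_grid:
        # Hypothetical message, styled after the "DL: ..." messages in (4).
        raise ValueError(
            "DL: 'optimizer' must be specified when tuning {0}".format(
                ", ".join(sub_params)))
    return compile_grid


try:
    check_optimizer_present({"lr": [1.0, 2.0, "linear"]})
except ValueError as err:
    print(err)
```

With a check like this the user gets an explicit error instead of a silently chosen default optimizer.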


[GitHub] [madlib] fmcquillan99 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-667323102

Actually (6) is fine; I should have read the error in more detail:

```

IntegrityError: (psycopg2.errors.UniqueViolation) plpy.SPIError: duplicate key value violates unique constraint "mst_table_model_id_key" (seg0 10.128.0.41:40000 pid=22297)

DETAIL: Key (model_id, compile_params, fit_params)=(1, optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy', epochs=12,batch_size=32) already exists.

```

No need to do anything, I will put a better note in the user docs about this corner case.
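
For the docs note, a small sketch of why this corner case shows up so readily. It assumes the random search draws each configuration independently, so the same combination can be picked twice; that is an assumption about the implementation, not something confirmed in this thread. The helper names are hypothetical.

```python
import itertools
import random


def collision_probability(n_combos, n_draws):
    """Chance that n_draws independent uniform picks contain a repeat."""
    p_unique = 1.0
    for i in range(n_draws):
        p_unique *= (n_combos - i) / float(n_combos)
    return 1.0 - p_unique


def sample_unique_configs(grid, n_configs, seed=None):
    """Pick distinct combinations from a purely discrete grid."""
    keys = sorted(grid)
    combos = list(itertools.product(*(grid[k] for k in keys)))
    rng = random.Random(seed)
    # random.sample draws without replacement, so no duplicate rows.
    chosen = rng.sample(combos, min(n_configs, len(combos)))
    return [dict(zip(keys, combo)) for combo in chosen]


fit_grid = {"batch_size": [8, 32, 64, 128, 256, 1024, 4096],
            "epochs": [1, 2, 3, 5, 10, 12]}

# 7 batch sizes x 6 epoch values = 42 combinations, 5 draws.
print(round(collision_probability(42, 5), 3))  # 0.219
configs = sample_unique_configs(fit_grid, 5, seed=0)
```

With `optimizer='SGD()'` and no sampled sub-params, the only variation comes from the 42 discrete fit combinations, so under that assumption roughly one run in five would hit a duplicate key.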


[GitHub] [madlib] fmcquillan99 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-665993038

errors and issues

(1)

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'linear']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

5, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);

```

produces

```

InternalError: (psycopg2.errors.InternalError_) TypeError: cannot concatenate 'str' and 'float' objects (plpython.c:5038)

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 21, in <module>

mst_loader = madlib_keras_model_selection.MstSearch(**globals())

PL/Python function "generate_model_selection_configs", line 42, in wrapper

PL/Python function "generate_model_selection_configs", line 287, in __init__

PL/Python function "generate_model_selection_configs", line 426, in find_random_combinations

PL/Python function "generate_model_selection_configs", line 490, in generate_row_string

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'lr': [0.0001, 0.1, 'linear']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

5, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/2j85)

```

Likewise

```

DROP TABLE IF EXISTS mst_table, mst_table_summary;

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'log'],

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

1, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

produces

```

InternalError: (psycopg2.errors.InternalError_) TypeError: cannot concatenate 'str' and 'numpy.float64' objects (plpython.c:5038)

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 21, in <module>

mst_loader = madlib_keras_model_selection.MstSearch(**globals())

PL/Python function "generate_model_selection_configs", line 42, in wrapper

PL/Python function "generate_model_selection_configs", line 287, in __init__

PL/Python function "generate_model_selection_configs", line 426, in find_random_combinations

PL/Python function "generate_model_selection_configs", line 490, in generate_row_string

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'log'],

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

1, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/2j85)

```
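
Both tracebacks end in `generate_row_string`, and the messages suggest a float being joined to a string with `+`. A minimal sketch of one way around that, assuming the row string is built from an optimizer name and a dict of sampled values; the helper `format_optimizer` is hypothetical and not the PR's code.

```python
def format_optimizer(name, params):
    """Build a string such as "Adam(lr=0.01)" from a name and its params."""
    # str.format converts float and numpy.float64 values alike, so no
    # str + float concatenation ever happens.
    args = ",".join(
        "{0}={1}".format(key, value) for key, value in sorted(params.items()))
    return "{0}({1})".format(name, args)


print(format_optimizer("Adam", {"lr": 0.01}))  # Adam(lr=0.01)
```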

(2)

For search_type = 'grid' or 'random', the user should be able to enter part of the string, e.g., 'rand' for random or 'g' for grid. There is a MADlib function that supports this.
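
A sketch of the prefix matching meant here. This is a stand-in written for illustration, not the existing MADlib utility; the name `match_search_type` is hypothetical.

```python
def match_search_type(user_value, choices=("grid", "random")):
    """Resolve a full or partial search_type string to one of the choices."""
    value = user_value.strip().lower()
    # An empty string matches nothing; a prefix must match exactly one choice.
    matches = [c for c in choices if value and c.startswith(value)]
    if len(matches) != 1:
        raise ValueError("DL: 'search_type' must be either 'grid' or 'random'")
    return matches[0]


print(match_search_type("rand"))  # random
print(match_search_type("g"))     # grid
```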

(3)

change the name of the function from `generate_model_selection_configs`

to `generate_model_configs`

(4)

remove exclamations ! from error messages and random capitalization. Suggested messages:

"DL: 'num_configs' and 'random_state' must be NULL for grid search"

"DL: Cannot search from a distribution with grid search"

"DL: 'num_configs' cannot be NULL for random search"

"DL: 'search_type' must be either 'grid' or 'random'"

"DL: Please choose a valid distribution type ('linear' or 'log')"

"DL: {0} should be of the format [lower_bound, upper_bound, distribution_type]"

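A sketch of how the last two messages could be raised, with `{0}` filled in by `str.format`. The helper `parse_distribution_spec` is hypothetical and assumes it is only called on entries the caller already treats as a distribution spec.

```python
def parse_distribution_spec(param_name, spec):
    """Return (lower, upper, distribution_type) or raise ValueError."""
    if not isinstance(spec, (list, tuple)) or len(spec) != 3:
        raise ValueError(
            "DL: {0} should be of the format "
            "[lower_bound, upper_bound, distribution_type]".format(param_name))
    lower, upper, dist = spec
    if dist not in ("linear", "log"):
        raise ValueError(
            "DL: Please choose a valid distribution type ('linear' or 'log')")
    return lower, upper, dist


print(parse_distribution_spec("lr", [0.0001, 0.1, "log"]))
```
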
(5)

In addition to `linear` sampling and `log` sampling we should add another type

called `log_near_one`

config_dict[cp] = 1.0 - np.power(

10,

np.random.uniform(

np.log10(1.0 - param_values[1]),

np.log10(1.0 - param_values[0])

)

)

This type of sampling is useful for exponentially weighted average type params like momentum, which are very sensitive to changes near 1. It has the effect of producing more values near 1 than regular log sampling.

e.g.

momentum values in range [0.9000, 0.9005] average the prev 10 values no matter where you are in the range (no diff)

but

momentum values in range [0.9990, 0.9995] average the prev 1000 values for the left side and prev 2000 values for the right side (big diff), so you want to generate more samples nearer to the right side to get better coverage.
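
To spell out the arithmetic behind this example: a sketch using the common approximation that an exponentially weighted average with momentum $\beta$ spans about $1/(1-\beta)$ past values. The symbols $a$, $b$, $u$, $x$ and $N$ are introduced here for illustration, with $a$ and $b$ standing for `param_values[0]` and `param_values[1]`.

```latex
N(\beta) \approx \frac{1}{1-\beta}, \qquad
N(0.9000) = 10, \quad N(0.9005) \approx 10.05, \quad
N(0.9990) = 1000, \quad N(0.9995) = 2000.

u \sim \mathcal{U}\bigl(\log_{10}(1-b),\ \log_{10}(1-a)\bigr), \qquad
x = 1 - 10^{u} \in [a, b].
```

Under this scheme $1-x$ is log-uniform, so the effective window $N(x)$ is log-uniform between $1/(1-a)$ and $1/(1-b)$, which spreads samples evenly across orders of magnitude of the averaging window.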

(6)

```

DROP TABLE IF EXISTS mst_table, mst_table_summary;

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['Adam'],

'lr': [0.9, 0.95, 'log'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

followed by

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['SGD'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

produces

```

IntegrityError: (psycopg2.errors.UniqueViolation) plpy.SPIError: duplicate key value violates unique constraint "mst_table_model_id_key" (seg0 10.128.0.41:40000 pid=22297)

DETAIL: Key (model_id, compile_params, fit_params)=(1, optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy', epochs=12,batch_size=32) already exists.

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 22, in <module>

mst_loader.load()

PL/Python function "generate_model_selection_configs", line 313, in load

PL/Python function "generate_model_selection_configs", line 566, in insert_into_mst_table

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs( 'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['SGD'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/gkpj)

```

But it only produced the error every second time I ran this, i.e., the first pass would work and the second pass would throw the error.

When it does pass, it produces

```

mst_key | model_id | compile_params | fit_params

---------+----------+----------------------------------------------------------------------------------------------+--------------------------

1 | 1 | optimizer='Adam(lr=0.9063214445649174)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=10,batch_size=256

2 | 1 | optimizer='Adam(lr=0.9367722192055232)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=5,batch_size=256

3 | 1 | optimizer='Adam(lr=0.9212048311857509)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=32

4 | 1 | optimizer='Adam(lr=0.9193149125403647)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=3,batch_size=256

5 | 1 | optimizer='Adam(lr=0.9326284661833211)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=256

6 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=10,batch_size=256

7 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=5,batch_size=8

8 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=1024

9 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=3,batch_size=32

10 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=12,batch_size=8

(10 rows)

```

is `optimizer='SGD()'...` correct or should it be `optimizer='SGD'...` ?


[GitHub] [madlib] Advitya17 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-668949266

(8) I assume it's the right syntax, and the optimizer would just take the default param values (as part of the keras documentation shown below)

(9) Yes, I currently have SGD as the default when the user wishes to tune any optimizer params but doesn't specify an optimizer to tune.

Does that help?


[GitHub] [madlib] fmcquillan99 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-665993038

errors and issues

(1)

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'linear']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

5, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);

```

produces

```

InternalError: (psycopg2.errors.InternalError_) TypeError: cannot concatenate 'str' and 'float' objects (plpython.c:5038)

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 21, in <module>

mst_loader = madlib_keras_model_selection.MstSearch(**globals())

PL/Python function "generate_model_selection_configs", line 42, in wrapper

PL/Python function "generate_model_selection_configs", line 287, in __init__

PL/Python function "generate_model_selection_configs", line 426, in find_random_combinations

PL/Python function "generate_model_selection_configs", line 490, in generate_row_string

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'lr': [0.0001, 0.1, 'linear']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

5, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/2j85)

```

Likewise

```

DROP TABLE IF EXISTS mst_table, mst_table_summary;

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'log'],

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

1, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

produces

```

InternalError: (psycopg2.errors.InternalError_) TypeError: cannot concatenate 'str' and 'numpy.float64' objects (plpython.c:5038)

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 21, in <module>

mst_loader = madlib_keras_model_selection.MstSearch(**globals())

PL/Python function "generate_model_selection_configs", line 42, in wrapper

PL/Python function "generate_model_selection_configs", line 287, in __init__

PL/Python function "generate_model_selection_configs", line 426, in find_random_combinations

PL/Python function "generate_model_selection_configs", line 490, in generate_row_string

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

$$

{

'lr': [1.0, 2.0, 'log'],

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8],

'epochs': [1]

}

$$, -- fit_param_grid

'random', -- search_type (‘grid’ or ‘random’, default ‘grid’)

1, -- num_configs (number of sampled parameters. Default=10) [to limit testing]

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/2j85)

```

(2)

For search_type = 'grid' or 'random', the user should be able to enter part of the string, e.g., 'rand' for random or 'g' for grid. There is a MADlib function that supports this.

(3)

change the name of the function from `generate_model_selection_configs`

to `generate_model_configs`

(4)

remove exclamations ! from error messages and random capitalization. Suggested messages:

"DL: 'num_configs' and 'random_state' must be NULL for grid search"

"DL: Cannot search from a distribution with grid search"

"DL: 'num_configs' cannot be NULL for random search"

"DL: 'search_type' must be either 'grid' or 'random'"

"DL: Please choose a valid distribution type ('linear' or 'log')"

"DL: {0} should be of the format [lower_bound, upper_bound, distribution_type]"

(5)

In addition to `linear` sampling and `log` sampling we should add another type

called `log_near_one`

```

config_dict[cp] = 1.0 - np.power(10, np.random.uniform (np.log10 (1.0 - param_values[1]), np.log10(1.0 - param_values[0]) ) )

```

This type of sampling is useful for exponentially weighted average type params like momentum, which are very sensitive to changes near 1. It has the effect of producing more values near 1 than regular log sampling.

e.g.

momentum values in range [0.9000, 0.9005] average the prev 10 values no matter where you are in the range (no diff)

but

momentum values in range [0.9990, 0.9995] average the prev 1000 values for the left side and prev 2000 values for the right side (big diff), so you want to generate more samples nearer to the right side to get better coverage.

(6)

```

DROP TABLE IF EXISTS mst_table, mst_table_summary;

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['Adam'],

'lr': [0.9, 0.95, 'log'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

followed by

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['SGD'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

SELECT * FROM mst_table ORDER BY mst_key;

```

produces

```

IntegrityError: (psycopg2.errors.UniqueViolation) plpy.SPIError: duplicate key value violates unique constraint "mst_table_model_id_key" (seg0 10.128.0.41:40000 pid=22297)

DETAIL: Key (model_id, compile_params, fit_params)=(1, optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy', epochs=12,batch_size=32) already exists.

CONTEXT: Traceback (most recent call last):

PL/Python function "generate_model_selection_configs", line 22, in <module>

mst_loader.load()

PL/Python function "generate_model_selection_configs", line 313, in load

PL/Python function "generate_model_selection_configs", line 566, in insert_into_mst_table

PL/Python function "generate_model_selection_configs"

[SQL: SELECT madlib.generate_model_selection_configs( 'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

'optimizer': ['SGD'],

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);]

(Background on this error at: http://sqlalche.me/e/gkpj)

```

But it only produced the error every second time I ran this, i.e., the first pass would work and the second pass would throw the error.

When it does pass, it produces

```

mst_key | model_id | compile_params | fit_params

---------+----------+----------------------------------------------------------------------------------------------+--------------------------

1 | 1 | optimizer='Adam(lr=0.9063214445649174)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=10,batch_size=256

2 | 1 | optimizer='Adam(lr=0.9367722192055232)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=5,batch_size=256

3 | 1 | optimizer='Adam(lr=0.9212048311857509)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=32

4 | 1 | optimizer='Adam(lr=0.9193149125403647)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=3,batch_size=256

5 | 1 | optimizer='Adam(lr=0.9326284661833211)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=256

6 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=10,batch_size=256

7 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=5,batch_size=8

8 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=2,batch_size=1024

9 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=3,batch_size=32

10 | 1 | optimizer='SGD()',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=12,batch_size=8

(10 rows)

```

is `optimizer='SGD()'...` correct or should it be `optimizer='SGD'...` ?

(7)

Not all sub-params apply to all params. For example, for optimizer, `lr` and `decay` might only apply to certain optimizer types and not others:

```

optimizer='SGD'

optimizer='rmsprop(lr=0.0001, decay=1e-6)'

optimizer='adam(lr=0.0001)'

```

In the previous method we accounted for that by doing:

```

SELECT madlib.load_model_selection_table('model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1,2], -- model ids from model architecture table

ARRAY[ -- compile params

$$loss='categorical_crossentropy',optimizer='rmsprop(lr=0.0001, decay=1e-6)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='rmsprop(lr=0.001, decay=1e-6)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='adam(lr=0.0001)',metrics=['accuracy']$$,

$$loss='categorical_crossentropy',optimizer='adam(lr=0.001)',metrics=['accuracy']$$

],

ARRAY[ -- fit params

$$batch_size=64,epochs=5$$,

$$batch_size=128,epochs=5$$

]

);

```

but how do we do this in the new method `generate_model_configs`? You could call it multiple times and incrementally build up the `mst_table` but when autoML methods call this function we need to support a 1-shot manner. Perhaps we need to support nested dictionaries? Something like this:

```

SELECT madlib.generate_model_selection_configs(

'model_arch_library', -- model architecture table

'mst_table', -- model selection table output

ARRAY[1], -- model ids from model architecture table

$$

{ 'loss': ['categorical_crossentropy'],

{

'opt1': {'optimizer': ['SGD']},

'opt2': {'optimizer': ['rmsprop'], 'lr': [0.9, 0.95, 'log'], 'decay': [1e-6, 1e-4, 'log']},

'opt3': {'optimizer': ['Adam'], 'lr': [0.9, 0.95, 'log']}

},

'metrics': ['accuracy']

}

$$, -- compile_param_grid

$$

{ 'batch_size': [8, 32, 64, 128, 256, 1024, 4096],

'epochs': [1, 2, 3, 5, 10, 12]

}

$$, -- fit_param_grid

'random', -- search_type

5, -- num_configs

NULL, -- random_state

NULL -- object table (Default=None)

);

```


[GitHub] [madlib] Advitya17 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-668949266

(8) I assume it's the right syntax, and the optimizer would just take the default param values (as part of the keras documentation)

(9) Yes, I currently have SGD as the default when the user wishes to tune any optimizer params but doesn't specify an optimizer to tune.

Does that help?

----------------------------------------------------------------


[GitHub] [madlib] fmcquillan99 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-668952722

For (9):

The Keras docs say that the optimizer and loss function are mandatory params, so I don't think we should fill in SGD if the user does not specify an optimizer. We should let it fail, no?

https://keras.io/api/optimizers/
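A minimal sketch of the "let it fail" behaviour, assuming each entry of `optimizer_params_list` is expected to carry its own `'optimizer'` key (as in the nested example earlier in this thread). The function name `check_optimizer_specified` is hypothetical and is not part of the PR:

```python
def check_optimizer_specified(compile_grid):
    """Raise ValueError if a sub-grid tunes params without naming an optimizer.

    Assumption: optimizers are named inside each 'optimizer_params_list' entry.
    """
    sub_grids = compile_grid.get('optimizer_params_list', [])
    for index, sub_grid in enumerate(sub_grids):
        if 'optimizer' not in sub_grid:
            raise ValueError(
                "optimizer_params_list[%d] tunes %s but names no optimizer"
                % (index, sorted(sub_grid)))


# Passes silently: the sub-grid names its optimizer.
check_optimizer_specified({
    'loss': ['categorical_crossentropy'],
    'optimizer_params_list': [{'optimizer': ['SGD'], 'lr': [0.0001, 0.1, 'log']}],
})
```

Failing early like this would surface the missing optimizer at config-generation time instead of silently substituting one.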

----------------------------------------------------------------


[GitHub] [madlib] Advitya17 edited a comment on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

Advitya17 edited a comment on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-668955356

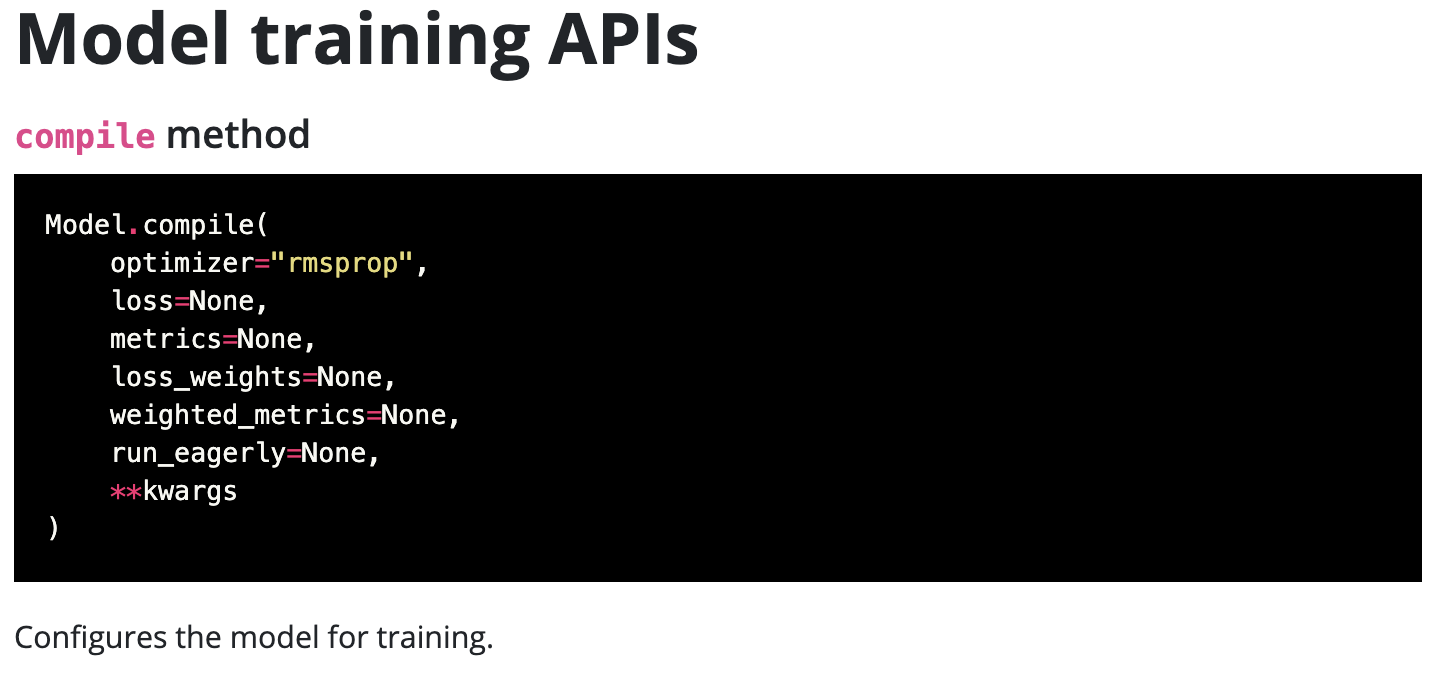

According to the Keras documentation, it seems there is a default value for the optimizer as well (it just seems to be rmsprop instead of my manually chosen SGD).

As I understand the documentation, yes, an optimizer is required to compile a Keras model, but Keras seems to already fall back to a default optimizer (with default params) when the user does not specify one.

Please correct me if I missed or misunderstood anything.

Compile method reference - https://keras.io/api/models/model_training_apis/#compile-method

----------------------------------------------------------------


[GitHub] [madlib] fmcquillan99 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-668947847

From my previous comments:

1,2,3,4,5,7 are fixed

6 is OK as is, no fix needed

Additional questions:

(8) Is this the right syntax for an optimizer with no params, i.e., `Adagrad()`?

```
optimizer='Adagrad()',metrics=['accuracy'],loss='categorical_crossentropy'
```

(9) If I do not specify an optimizer:

```
DROP TABLE IF EXISTS mst_table, mst_table_summary;
SELECT madlib.generate_model_configs(
    'model_arch_library', -- model architecture table
    'mst_table',          -- model selection table output
    ARRAY[1,2],           -- model ids from model architecture table
    $$
    {'loss': ['categorical_crossentropy'],
     'optimizer_params_list': [ {'lr': [0.0001, 0.1, 'log']} ],
     'metrics': ['accuracy']}
    $$,                   -- compile_param_grid
    $$
    { 'batch_size': [8],
      'epochs': [1]
    }
    $$,                   -- fit_param_grid
    'random',             -- search_type ('grid' or 'random', default 'grid')
    2,                    -- num_configs (number of sampled parameters. Default=10) [to limit testing]
    NULL,                 -- random_state
    NULL                  -- object table (Default=None)
);
```

then `SGD` shows up:

```
 mst_key | model_id |                                        compile_params                                         |      fit_params
---------+----------+-----------------------------------------------------------------------------------------------+-----------------------
       1 |        1 | optimizer='SGD(lr=0.002963575680717671)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=1,batch_size=8
       2 |        2 | optimizer='SGD(lr=0.027802557490831045)',metrics=['accuracy'],loss='categorical_crossentropy' | epochs=1,batch_size=8
(2 rows)
```

Do we do that or does Keras do that?
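For reference when reading the `compile_params` strings in (8) and (9), here is a minimal sketch of how a string of that shape could be assembled from a sampled optimizer name and its params. The helper `format_compile_params` is hypothetical and only mirrors the output format shown in this comment. It is not taken from the PR.

```python
def format_compile_params(optimizer, optimizer_params, loss, metrics):
    """Build a compile_params string in the layout shown in the mst_table output."""
    # Render optimizer arguments as name=value pairs; empty params give "Name()".
    args = ",".join("%s=%r" % (name, value)
                    for name, value in optimizer_params.items())
    return "optimizer='%s(%s)',metrics=%r,loss='%s'" % (
        optimizer, args, metrics, loss)


# An optimizer with no tuned params renders with empty parentheses, as in (8).
no_params = format_compile_params(
    'Adagrad', {}, 'categorical_crossentropy', ['accuracy'])

# A sampled learning rate is embedded in the optimizer call, as in (9).
with_lr = format_compile_params(
    'SGD', {'lr': 0.01}, 'categorical_crossentropy', ['accuracy'])
```

Under this sketch, whichever optimizer name is passed in appears verbatim in the string, so an `SGD(...)` entry in the table would have to come from the code that generates the configs rather than from Keras, which only sees the string later.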

----------------------------------------------------------------


[GitHub] [madlib] fmcquillan99 commented on pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

fmcquillan99 commented on pull request #506:

URL: https://github.com/apache/madlib/pull/506#issuecomment-670230673

LGTM

----------------------------------------------------------------



[GitHub] [madlib] orhankislal commented on a change in pull request #506: DL: Add grid/random search for model selection with `generate_model_selection_configs`

Posted by GitBox <gi...@apache.org>.

orhankislal commented on a change in pull request #506:

URL: https://github.com/apache/madlib/pull/506#discussion_r457713770

##########

File path: src/ports/postgres/modules/deep_learning/madlib_keras_model_selection.py_in

##########

@@ -203,3 +212,365 @@ class MstLoader():

object_table_name=ModelSelectionSchema.OBJECT_TABLE,

**locals())

plpy.execute(insert_summary_query)

+

+@MinWarning("warning")

+class MstSearch():

+ """

+ The utility class for generating model selection configs and loading into a MST table with model parameters.

+