You are viewing a plain text version of this content. The canonical link for it is here.

Posted to reviews@spark.apache.org by xuanyuanking <gi...@git.apache.org> on 2018/05/19 12:03:36 UTC

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

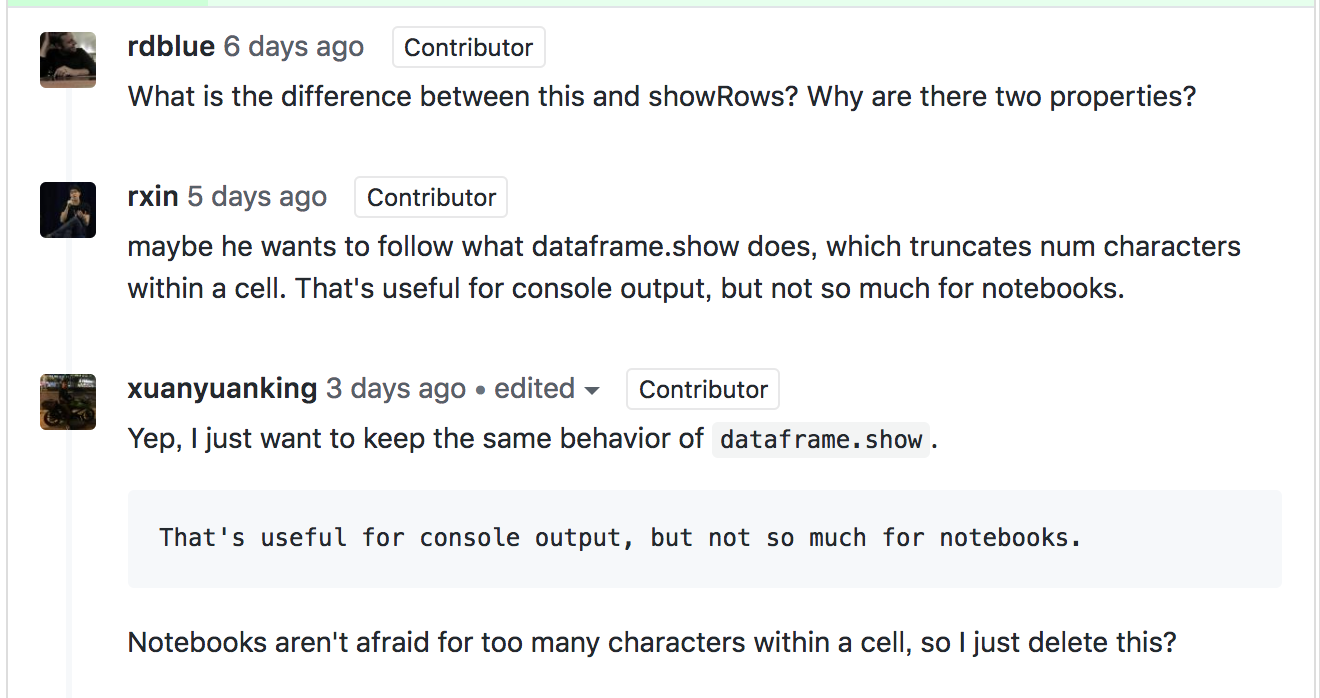

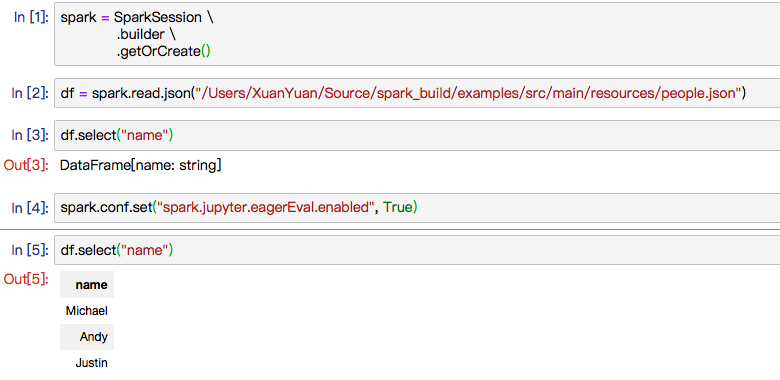

GitHub user xuanyuanking opened a pull request:

https://github.com/apache/spark/pull/21370

[SPARK-24215][PySpark] Implement _repr_html_ for dataframes in PySpark

## What changes were proposed in this pull request?

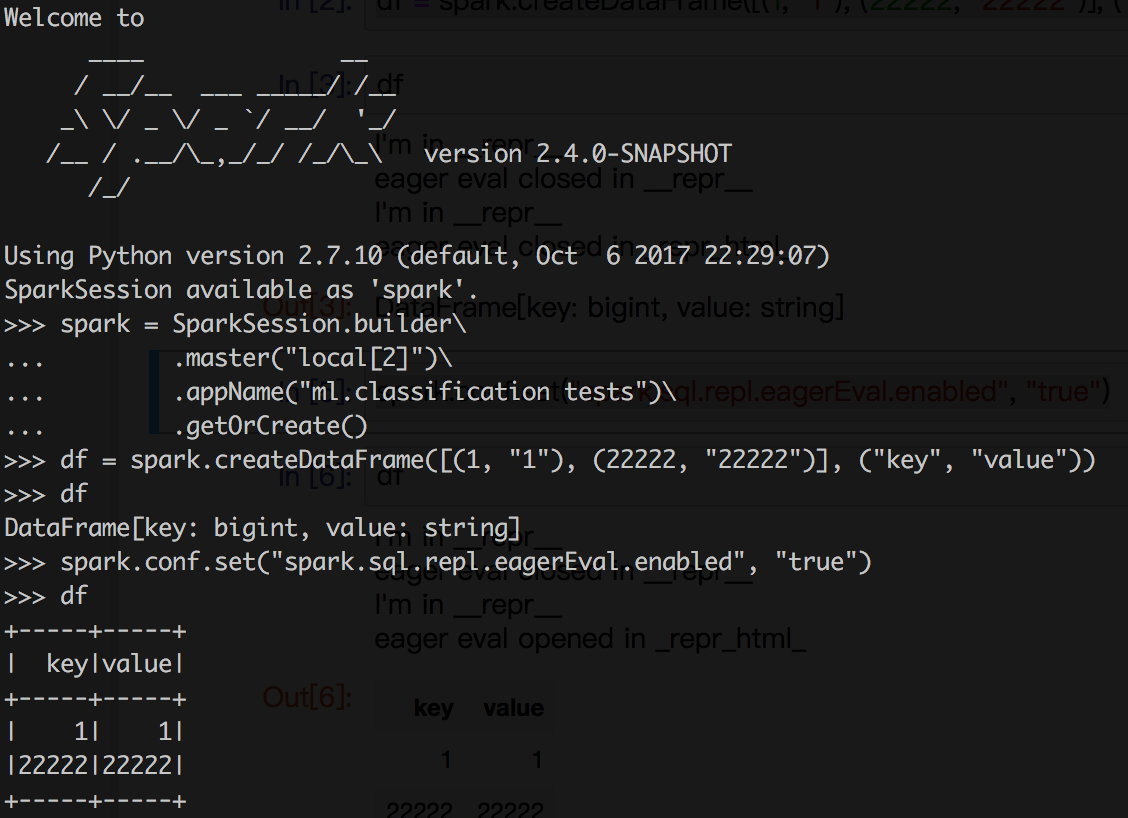

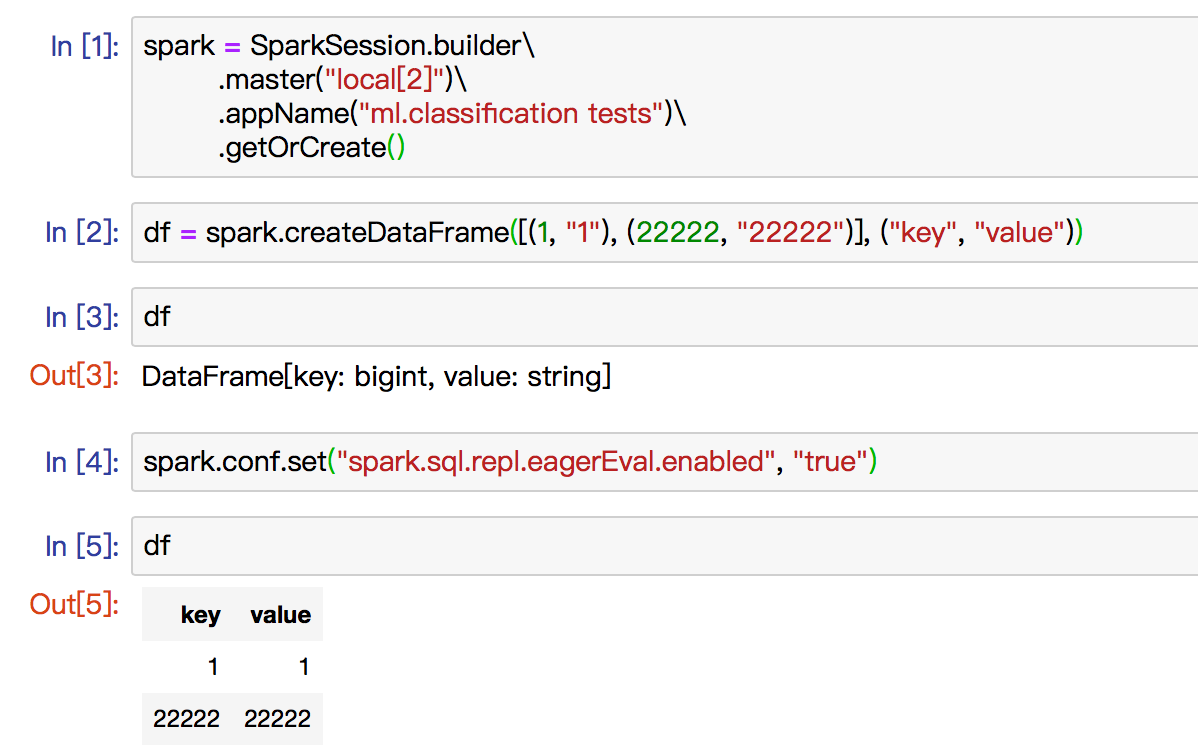

Implement _repr_html_ for PySpark while in notebook and add config named "spark.jupyter.eagerEval.enabled" to control this.

The dev list thread for context: http://apache-spark-developers-list.1001551.n3.nabble.com/eager-execution-and-debuggability-td23928.html

## How was this patch tested?

New ut in DataFrameSuite and manual test in jupyter. Some screenshot below:

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/xuanyuanking/spark SPARK-24215

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/21370.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #21370

----

commit ebc0b11fd006386d32949f56228e2671297373fc

Author: Yuanjian Li <xy...@...>

Date: 2018-05-19T11:56:02Z

SPARK-24215: Implement __repr__ and _repr_html_ for dataframes in PySpark

----

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/91205/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189567315

--- Diff: docs/configuration.md ---

@@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.jupyter.eagerEval.enabled</code></td>

+ <td>false</td>

+ <td>

+ Open eager evaluation on jupyter or not. If yes, dataframe will be ran automatically

+ and html table will feedback the queries user have defined (see

+ <a href="https://issues.apache.org/jira/browse/SPARK-24215">SPARK-24215</a> for more details).

+ </td>

+</tr>

+<tr>

+ <td><code>spark.jupyter.default.showRows</code></td>

--- End diff --

change to spark.jupyter.eagerEval.showRows,thanks

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190153907

--- Diff: docs/configuration.md ---

@@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.jupyter.eagerEval.enabled</code></td>

--- End diff --

Thanks, done. feb5f4a.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189613358

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -292,31 +297,25 @@ class Dataset[T] private[sql](

}

// Create SeparateLine

- val sep: String = colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ val sep: String = if (html) {

+ // Initial append table label

+ sb.append("<table border='1'>\n")

+ "\n"

+ } else {

+ colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ }

// column names

- rows.head.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

- StringUtils.leftPad(cell, colWidths(i))

- } else {

- StringUtils.rightPad(cell, colWidths(i))

- }

- }.addString(sb, "|", "|", "|\n")

-

+ appendRowString(rows.head, truncate, colWidths, html, true, sb)

sb.append(sep)

// data

- rows.tail.foreach {

- _.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

- StringUtils.leftPad(cell.toString, colWidths(i))

- } else {

- StringUtils.rightPad(cell.toString, colWidths(i))

- }

- }.addString(sb, "|", "|", "|\n")

+ rows.tail.foreach { row =>

+ appendRowString(row.map(_.toString), truncate, colWidths, html, false, sb)

--- End diff --

I see, the `cell.toString` has been called here. https://github.com/apache/spark/pull/21370/files/f2bb8f334631734869ddf5d8ef1eca1fa29d334a#diff-7a46f10c3cedbf013cf255564d9483cdR271

Got it, I'll fix this in next commit.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #91205 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/91205/testReport)** for PR 21370 at commit [`94f3414`](https://github.com/apache/spark/commit/94f3414ebb689f4435018eab2e888e7d2974dc98).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by ueshin <gi...@git.apache.org>.

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r192207299

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -351,8 +352,70 @@ def show(self, n=20, truncate=True, vertical=False):

else:

print(self._jdf.showString(n, int(truncate), vertical))

+ @property

+ def _eager_eval(self):

+ """Returns true if the eager evaluation enabled.

+ """

+ return self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.enabled", "false").lower() == "true"

+

+ @property

+ def _max_num_rows(self):

+ """Returns the max row number for eager evaluation.

+ """

+ return int(self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.maxNumRows", "20"))

+

+ @property

+ def _truncate(self):

+ """Returns the truncate length for eager evaluation.

+ """

+ return int(self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.truncate", "20"))

+

def __repr__(self):

- return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in self.dtypes))

+ if not self._support_repr_html and self._eager_eval:

+ vertical = False

+ return self._jdf.showString(

+ self._max_num_rows, self._truncate, vertical)

+ else:

+ return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in self.dtypes))

+

+ def _repr_html_(self):

+ """Returns a dataframe with html code when you enabled eager evaluation

+ by 'spark.sql.repl.eagerEval.enabled', this only called by REPL you are

+ using support eager evaluation with HTML.

+ """

+ import cgi

+ if not self._support_repr_html:

+ self._support_repr_html = True

+ if self._eager_eval:

+ max_num_rows = self._max_num_rows

--- End diff --

We need to adjust `max_num_rows` as the same as Scala side like `val numRows = _numRows.max(0).min(Int.MaxValue - 1)`?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by ueshin <gi...@git.apache.org>.

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191594348

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -351,8 +352,62 @@ def show(self, n=20, truncate=True, vertical=False):

else:

print(self._jdf.showString(n, int(truncate), vertical))

+ def _get_repl_config(self):

+ """Return the configs for eager evaluation each time when __repr__ or

+ _repr_html_ called by user or notebook.

+ """

+ eager_eval = self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.enabled", "false").lower() == "true"

+ console_row = int(self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.maxNumRows", u"20"))

+ console_truncate = int(self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.truncate", u"20"))

--- End diff --

ditto.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Merged build finished. Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r192348972

--- Diff: docs/configuration.md ---

@@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.enabled</code></td>

+ <td>false</td>

+ <td>

+ Enable eager evaluation or not. If true and REPL you are using supports eager evaluation,

+ dataframe will be ran automatically and html table will feedback the queries user have defined

+ (see <a href="https://issues.apache.org/jira/browse/SPARK-24215">SPARK-24215</a> for more details).

+ </td>

+</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.maxNumRows</code></td>

+ <td>20</td>

+ <td>

+ Default number of rows in HTML table.

--- End diff --

Got it, more detailed description in 7f43a8b. Please check.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement eager evaluation...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194784664

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -3209,6 +3222,19 @@ class Dataset[T] private[sql](

}

}

+ private[sql] def getRowsToPython(

+ _numRows: Int,

+ truncate: Int,

+ vertical: Boolean): Array[Any] = {

+ EvaluatePython.registerPicklers()

+ val numRows = _numRows.max(0).min(Int.MaxValue - 1)

+ val rows = getRows(numRows, truncate, vertical).map(_.toArray).toArray

+ val toJava: (Any) => Any = EvaluatePython.toJava(_, ArrayType(ArrayType(StringType)))

+ val iter: Iterator[Array[Byte]] = new SerDeUtil.AutoBatchedPickler(

+ rows.iterator.map(toJava))

+ PythonRDD.serveIterator(iter, "serve-GetRows")

--- End diff --

Same answer with @HyukjinKwon about the return type, and actually the exact return type we need here is Array[Array[String]], this defined in `toJava` func.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement eager evaluation...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194794700

--- Diff: docs/configuration.md ---

@@ -456,6 +456,33 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.enabled</code></td>

+ <td>false</td>

+ <td>

+ Enable eager evaluation or not. If true and the REPL you are using supports eager evaluation,

+ Dataset will be ran automatically. The HTML table which generated by <code>_repl_html_</code>

+ called by notebooks like Jupyter will feedback the queries user have defined. For plain Python

+ REPL, the output will be shown like <code>dataframe.show()</code>

+ (see <a href="https://issues.apache.org/jira/browse/SPARK-24215">SPARK-24215</a> for more details).

+ </td>

+</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.maxNumRows</code></td>

+ <td>20</td>

+ <td>

+ Default number of rows in eager evaluation output HTML table generated by <code>_repr_html_</code> or plain text,

+ this only take effect when <code>spark.sql.repl.eagerEval.enabled</code> is set to true.

--- End diff --

Got it, thanks.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r192772009

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -351,8 +354,70 @@ def show(self, n=20, truncate=True, vertical=False):

else:

print(self._jdf.showString(n, int(truncate), vertical))

+ @property

+ def _eager_eval(self):

+ """Returns true if the eager evaluation enabled.

+ """

+ return self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.enabled", "false").lower() == "true"

+

+ @property

+ def _max_num_rows(self):

+ """Returns the max row number for eager evaluation.

+ """

+ return int(self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.maxNumRows", "20"))

+

+ @property

+ def _truncate(self):

+ """Returns the truncate length for eager evaluation.

+ """

+ return int(self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.truncate", "20"))

+

def __repr__(self):

- return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in self.dtypes))

+ if not self._support_repr_html and self._eager_eval:

+ vertical = False

+ return self._jdf.showString(

+ self._max_num_rows, self._truncate, vertical)

+ else:

+ return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in self.dtypes))

+

+ def _repr_html_(self):

+ """Returns a dataframe with html code when you enabled eager evaluation

+ by 'spark.sql.repl.eagerEval.enabled', this only called by REPL you are

+ using support eager evaluation with HTML.

+ """

+ import cgi

+ if not self._support_repr_html:

+ self._support_repr_html = True

+ if self._eager_eval:

+ max_num_rows = max(self._max_num_rows, 0)

+ with SCCallSiteSync(self._sc) as css:

+ vertical = False

+ sock_info = self._jdf.getRowsToPython(

+ max_num_rows, self._truncate, vertical)

+ rows = list(_load_from_socket(sock_info, BatchedSerializer(PickleSerializer())))

+ head = rows[0]

+ row_data = rows[1:]

+ has_more_data = len(row_data) > max_num_rows

+ row_data = row_data[0:max_num_rows]

+

+ html = "<table border='1'>\n<tr><th>"

+ # generate table head

+ html += "</th><th>".join(map(lambda x: cgi.escape(x), head)) + "</th></tr>\n"

+ # generate table rows

+ for row in row_data:

+ data = "<tr><td>" + "</td><td>".join(map(lambda x: cgi.escape(x), row)) + \

+ "</td></tr>\n"

--- End diff --

Thanks, more clearer.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194276179

--- Diff: python/pyspark/sql/tests.py ---

@@ -3074,6 +3074,36 @@ def test_checking_csv_header(self):

finally:

shutil.rmtree(path)

+ def test_repr_html(self):

--- End diff --

This function only covers the most basic positive case. We need also add more test cases. For example, the results when `spark.sql.repl.eagerEval.enabled` is set to `false`.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by ueshin <gi...@git.apache.org>.

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190172068

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -358,6 +357,43 @@ class Dataset[T] private[sql](

sb.toString()

}

+ /**

+ * Transform current row string and append to builder

+ *

+ * @param row Current row of string

+ * @param truncate If set to more than 0, truncates strings to `truncate` characters and

+ * all cells will be aligned right.

+ * @param colWidths The width of each column

+ * @param html If set to true, return output as html table.

+ * @param head Set to true while current row is table head.

+ * @param sb StringBuilder for current row.

+ */

+ private[sql] def appendRowString(

+ row: Seq[String],

+ truncate: Int,

+ colWidths: Array[Int],

+ html: Boolean,

+ head: Boolean,

+ sb: StringBuilder): Unit = {

+ val data = row.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+ StringUtils.leftPad(cell, colWidths(i))

+ } else {

+ StringUtils.rightPad(cell, colWidths(i))

+ }

+ }

+ (html, head) match {

+ case (true, true) =>

+ data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "<tr><th>", "</th>\n<th>", "</th></tr>\n")

+ case (true, false) =>

+ data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "<tr><td>", "</td>\n<td>", "</td></tr>\n")

--- End diff --

ditto.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #91449 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/91449/testReport)** for PR 21370 at commit [`597b8d5`](https://github.com/apache/spark/commit/597b8d515fd3bcd117b22ae29b05cd8a58d37ca2).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189512922

--- Diff: docs/configuration.md ---

@@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.jupyter.eagerEval.enabled</code></td>

+ <td>false</td>

+ <td>

+ Open eager evaluation on jupyter or not. If yes, dataframe will be ran automatically

+ and html table will feedback the queries user have defined (see

+ <a href="https://issues.apache.org/jira/browse/SPARK-24215">SPARK-24215</a> for more details).

--- End diff --

Yea, I felt the same thing too but there were the same few instances in this page.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Merged build finished. Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191692934

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -291,37 +289,57 @@ class Dataset[T] private[sql](

}

}

+ rows = rows.map {

+ _.zipWithIndex.map { case (cell, i) =>

--- End diff --

nit:

```

rows.map { row =>

row.zipWithIndex...

``

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #90871 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/90871/testReport)** for PR 21370 at commit [`ebc0b11`](https://github.com/apache/spark/commit/ebc0b11fd006386d32949f56228e2671297373fc).

* This patch **fails SparkR unit tests**.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #90834 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/90834/testReport)** for PR 21370 at commit [`ebc0b11`](https://github.com/apache/spark/commit/ebc0b11fd006386d32949f56228e2671297373fc).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by rxin <gi...@git.apache.org>.

Github user rxin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190803641

--- Diff: docs/configuration.md ---

@@ -456,6 +456,29 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.enabled</code></td>

+ <td>false</td>

+ <td>

+ Enable eager evaluation or not. If true and repl you're using supports eager evaluation,

+ dataframe will be ran automatically and html table will feedback the queries user have defined

+ (see <a href="https://issues.apache.org/jira/browse/SPARK-24215">SPARK-24215</a> for more details).

+ </td>

+</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.showRows</code></td>

--- End diff --

maxNumRows

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191693929

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -291,37 +289,57 @@ class Dataset[T] private[sql](

}

}

+ rows = rows.map {

+ _.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+ StringUtils.leftPad(cell, colWidths(i))

+ } else {

+ StringUtils.rightPad(cell, colWidths(i))

+ }

+ }

+ }

+ }

+ rows

+ }

+

+ /**

+ * Compose the string representing rows for output

+ *

+ * @param _numRows Number of rows to show

+ * @param truncate If set to more than 0, truncates strings to `truncate` characters and

+ * all cells will be aligned right.

+ * @param vertical If set to true, prints output rows vertically (one line per column value).

+ */

+ private[sql] def showString(

+ _numRows: Int,

+ truncate: Int = 20,

+ vertical: Boolean = false): String = {

+ val numRows = _numRows.max(0).min(Int.MaxValue - 1)

+ // Get rows represented by Seq[Seq[String]], we may get one more line if it has more data.

+ val rows = getRows(numRows, truncate, vertical)

+ val fieldNames = rows.head

+ val data = rows.tail

+

+ val hasMoreData = data.length > numRows

+ val dataRows = data.take(numRows)

+

+ val sb = new StringBuilder

+ if (!vertical) {

// Create SeparateLine

- val sep: String = colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ val sep: String = fieldNames.map(_.length).toArray

+ .map("-" * _).addString(sb, "+", "+", "+\n").toString()

// column names

- rows.head.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

- StringUtils.leftPad(cell, colWidths(i))

- } else {

- StringUtils.rightPad(cell, colWidths(i))

- }

- }.addString(sb, "|", "|", "|\n")

-

+ fieldNames.addString(sb, "|", "|", "|\n")

sb.append(sep)

// data

- rows.tail.foreach {

- _.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

- StringUtils.leftPad(cell.toString, colWidths(i))

- } else {

- StringUtils.rightPad(cell.toString, colWidths(i))

- }

- }.addString(sb, "|", "|", "|\n")

+ dataRows.foreach {

+ _.addString(sb, "|", "|", "|\n")

--- End diff --

nit: we could just make it inlined

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189661812

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +347,26 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

- print(self._jdf.showString(n, 20, vertical))

+ print(self._jdf.showString(n, 20, vertical, False))

else:

- print(self._jdf.showString(n, int(truncate), vertical))

+ print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in self.dtypes))

+ def _repr_html_(self):

--- End diff --

Add comments above this to explain this goal of this function.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/3772/

Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/3623/

Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement eager evaluation...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194951791

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -3209,6 +3222,19 @@ class Dataset[T] private[sql](

}

}

+ private[sql] def getRowsToPython(

+ _numRows: Int,

+ truncate: Int,

+ vertical: Boolean): Array[Any] = {

+ EvaluatePython.registerPicklers()

+ val numRows = _numRows.max(0).min(Int.MaxValue - 1)

+ val rows = getRows(numRows, truncate, vertical).map(_.toArray).toArray

+ val toJava: (Any) => Any = EvaluatePython.toJava(_, ArrayType(ArrayType(StringType)))

+ val iter: Iterator[Array[Byte]] = new SerDeUtil.AutoBatchedPickler(

+ rows.iterator.map(toJava))

+ PythonRDD.serveIterator(iter, "serve-GetRows")

--- End diff --

re the py4j commit - there's a good reason for it @gatorsmile

not sure if the change to return type is required with the py4j change though

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190154145

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -237,9 +238,13 @@ class Dataset[T] private[sql](

* @param truncate If set to more than 0, truncates strings to `truncate` characters and

* all cells will be aligned right.

* @param vertical If set to true, prints output rows vertically (one line per column value).

+ * @param html If set to true, return output as html table.

--- End diff --

Thanks for guidance, I will do this in next commit.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Merged build finished. Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/90872/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r192548361

--- Diff: docs/configuration.md ---

@@ -456,6 +456,33 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.enabled</code></td>

+ <td>false</td>

+ <td>

+ Enable eager evaluation or not. If true and REPL you are using supports eager evaluation,

--- End diff --

Thanks, done in 5b36604.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Merged build finished. Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189447446

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -78,6 +78,12 @@ def __init__(self, jdf, sql_ctx):

self.is_cached = False

self._schema = None # initialized lazily

self._lazy_rdd = None

+ self._eager_eval = sql_ctx.getConf(

+ "spark.jupyter.eagerEval.enabled", "false").lower() == "true"

--- End diff --

let's add all these to documentation too

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by ueshin <gi...@git.apache.org>.

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191853613

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -291,37 +289,57 @@ class Dataset[T] private[sql](

}

}

+ rows = rows.map {

+ _.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+ StringUtils.leftPad(cell, colWidths(i))

+ } else {

+ StringUtils.rightPad(cell, colWidths(i))

+ }

+ }

+ }

--- End diff --

Seems like the truncation is already done when creating `rows` above?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/91354/

Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #91206 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/91206/testReport)** for PR 21370 at commit [`425bee1`](https://github.com/apache/spark/commit/425bee1628917859b58dc87faccb7bc6146b7f1f).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by ueshin <gi...@git.apache.org>.

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189493218

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -292,31 +297,25 @@ class Dataset[T] private[sql](

}

// Create SeparateLine

- val sep: String = colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ val sep: String = if (html) {

+ // Initial append table label

+ sb.append("<table border='1'>\n")

+ "\n"

+ } else {

+ colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ }

// column names

- rows.head.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

- StringUtils.leftPad(cell, colWidths(i))

- } else {

- StringUtils.rightPad(cell, colWidths(i))

- }

- }.addString(sb, "|", "|", "|\n")

-

+ appendRowString(rows.head, truncate, colWidths, html, true, sb)

sb.append(sep)

// data

- rows.tail.foreach {

- _.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

- StringUtils.leftPad(cell.toString, colWidths(i))

- } else {

- StringUtils.rightPad(cell.toString, colWidths(i))

- }

- }.addString(sb, "|", "|", "|\n")

+ rows.tail.foreach { row =>

+ appendRowString(row.map(_.toString), truncate, colWidths, html, false, sb)

--- End diff --

We don't need `.map(_.toString)`?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #91449 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/91449/testReport)** for PR 21370 at commit [`597b8d5`](https://github.com/apache/spark/commit/597b8d515fd3bcd117b22ae29b05cd8a58d37ca2).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by rdblue <gi...@git.apache.org>.

Github user rdblue commented on the issue:

https://github.com/apache/spark/pull/21370

@rxin, `__repr__` is the equivalent for ipython and the python REPL. `_repr_html_` is the convention used by jupyter to replicate `__repr__` in notebooks with HTML output.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194629747

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -3209,6 +3222,19 @@ class Dataset[T] private[sql](

}

}

+ private[sql] def getRowsToPython(

+ _numRows: Int,

+ truncate: Int,

+ vertical: Boolean): Array[Any] = {

+ EvaluatePython.registerPicklers()

+ val numRows = _numRows.max(0).min(Int.MaxValue - 1)

+ val rows = getRows(numRows, truncate, vertical).map(_.toArray).toArray

+ val toJava: (Any) => Any = EvaluatePython.toJava(_, ArrayType(ArrayType(StringType)))

+ val iter: Iterator[Array[Byte]] = new SerDeUtil.AutoBatchedPickler(

+ rows.iterator.map(toJava))

+ PythonRDD.serveIterator(iter, "serve-GetRows")

--- End diff --

`PythonRDD.serveIterator(iter, "serve-GetRows")` returns `Int`, but the return type of `getRowsToPython ` is `Array[Any]`. How does it work? cc @xuanyuanking @HyukjinKwon

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189574938

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -237,9 +238,13 @@ class Dataset[T] private[sql](

* @param truncate If set to more than 0, truncates strings to `truncate` characters and

* all cells will be aligned right.

* @param vertical If set to true, prints output rows vertically (one line per column value).

+ * @param html If set to true, return output as html table.

--- End diff --

We can do this in python side, I implement it in scala side mainly consider to reuse the code and logic of `show()`, maybe it's more natural in `show df as html` call showString.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191702826

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -231,16 +234,17 @@ class Dataset[T] private[sql](

}

/**

- * Compose the string representing rows for output

+ * Get rows represented in Sequence by specific truncate and vertical requirement.

*

- * @param _numRows Number of rows to show

+ * @param numRows Number of rows to return

* @param truncate If set to more than 0, truncates strings to `truncate` characters and

* all cells will be aligned right.

- * @param vertical If set to true, prints output rows vertically (one line per column value).

+ * @param vertical If set to true, the rows to return don't need truncate.

--- End diff --

Yep, all abbreviation done in d4bf01a.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/3413/

Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #91354 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/91354/testReport)** for PR 21370 at commit [`9c6b3bb`](https://github.com/apache/spark/commit/9c6b3bbc430ffbcb752dc9870df877728f356cb8).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194278100

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -351,8 +354,68 @@ def show(self, n=20, truncate=True, vertical=False):

else:

print(self._jdf.showString(n, int(truncate), vertical))

+ @property

+ def _eager_eval(self):

+ """Returns true if the eager evaluation enabled.

+ """

+ return self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.enabled", "false").lower() == "true"

--- End diff --

Probably, we should access to SQLConf object. 1. Agree with not hardcoding it in general but 2. IMHO I want to avoid Py4J JVM accesses in the test because the test can likely be more flaky up to my knowledge, on the other hand (unlike Scala or Java side).

Maybe we should try to take a look about this hardcoding if we see more occurrences next time

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement eager evaluation...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194794008

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -3209,6 +3222,19 @@ class Dataset[T] private[sql](

}

}

+ private[sql] def getRowsToPython(

--- End diff --

Got it, the follow up pr I'm working on will add more test for `getRows` and `getRowsToPython`.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189660891

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -237,9 +238,13 @@ class Dataset[T] private[sql](

* @param truncate If set to more than 0, truncates strings to `truncate` characters and

* all cells will be aligned right.

* @param vertical If set to true, prints output rows vertically (one line per column value).

+ * @param html If set to true, return output as html table.

--- End diff --

This should not be done in Dataset.scala.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r191687426

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -231,16 +234,17 @@ class Dataset[T] private[sql](

}

/**

- * Compose the string representing rows for output

+ * Get rows represented in Sequence by specific truncate and vertical requirement.

*

- * @param _numRows Number of rows to show

+ * @param numRows Number of rows to return

* @param truncate If set to more than 0, truncates strings to `truncate` characters and

* all cells will be aligned right.

- * @param vertical If set to true, prints output rows vertically (one line per column value).

+ * @param vertical If set to true, the rows to return don't need truncate.

*/

- private[sql] def showString(

- _numRows: Int, truncate: Int = 20, vertical: Boolean = false): String = {

- val numRows = _numRows.max(0).min(Int.MaxValue - 1)

--- End diff --

Yep, thanks, my mistake here.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189447465

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -237,9 +236,13 @@ class Dataset[T] private[sql](

* @param truncate If set to more than 0, truncates strings to `truncate` characters and

* all cells will be aligned right.

* @param vertical If set to true, prints output rows vertically (one line per column value).

+ * @param html If set to true, return output as html table.

*/

private[sql] def showString(

- _numRows: Int, truncate: Int = 20, vertical: Boolean = false): String = {

+ _numRows: Int,

+ truncate: Int = 20,

--- End diff --

when the output is truncated, does jupyter handle that properly?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194277082

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -3209,6 +3222,19 @@ class Dataset[T] private[sql](

}

}

+ private[sql] def getRowsToPython(

--- End diff --

In DataFrameSuite, we have multiple test cases for `showString` instead of `getRows `, which is introduced in this PR.

We also need the unit test cases for `getRowsToPython`.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r192446542

--- Diff: docs/configuration.md ---

@@ -456,6 +456,33 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.enabled</code></td>

+ <td>false</td>

+ <td>

+ Enable eager evaluation or not. If true and REPL you are using supports eager evaluation,

+ dataframe will be ran automatically. HTML table will feedback the queries user have defined if

+ <code>_repl_html_</code> called by notebooks like Jupyter, otherwise for plain Python REPL, output

+ will be shown like <code>dataframe.show()</code>

+ (see <a href="https://issues.apache.org/jira/browse/SPARK-24215">SPARK-24215</a> for more details).

+ </td>

+</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.maxNumRows</code></td>

+ <td>20</td>

+ <td>

+ Default number of rows in eager evaluation output HTML table generated by <code>_repr_html_</code> or plain text,

+ this only take effect when <code>spark.sql.repl.eagerEval.enabled</code> set to true.

--- End diff --

`set to` -> `is set to`

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on the issue:

https://github.com/apache/spark/pull/21370

```

Test coverage is the most critical when we refactor the existing code and add new features. Hopefully, when you submit new PRs in the future, could you also improve this part?

```

Of cause, I'll do this in a follow up PR and answer all question from Xiao this night. Thanks for all your comments.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189570764

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -358,6 +357,43 @@ class Dataset[T] private[sql](

sb.toString()

}

+ /**

+ * Transform current row string and append to builder

+ *

+ * @param row Current row of string

+ * @param truncate If set to more than 0, truncates strings to `truncate` characters and

+ * all cells will be aligned right.

+ * @param colWidths The width of each column

+ * @param html If set to true, return output as html table.

+ * @param head Set to true while current row is table head.

+ * @param sb StringBuilder for current row.

+ */

+ private[sql] def appendRowString(

+ row: Seq[String],

+ truncate: Int,

+ colWidths: Array[Int],

+ html: Boolean,

+ head: Boolean,

+ sb: StringBuilder): Unit = {

+ val data = row.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+ StringUtils.leftPad(cell, colWidths(i))

+ } else {

+ StringUtils.rightPad(cell, colWidths(i))

+ }

+ }

+ (html, head) match {

+ case (true, true) =>

+ data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "<tr><th>", "</th><th>", "</th></tr>")

--- End diff --

the "\n" added in seperatedLine:https://github.com/apache/spark/pull/21370/files#diff-7a46f10c3cedbf013cf255564d9483cdR300

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194276795

--- Diff: docs/configuration.md ---

@@ -456,6 +456,33 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

--- End diff --

These confs are not part of `spark.sql("SET -v").show(numRows = 200, truncate = false)`.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Merged build finished. Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194275282

--- Diff: docs/configuration.md ---

@@ -456,6 +456,33 @@ Apart from these, the following properties are also available, and may be useful

from JVM to Python worker for every task.

</td>

</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.enabled</code></td>

+ <td>false</td>

+ <td>

+ Enable eager evaluation or not. If true and the REPL you are using supports eager evaluation,

+ Dataset will be ran automatically. The HTML table which generated by <code>_repl_html_</code>

+ called by notebooks like Jupyter will feedback the queries user have defined. For plain Python

+ REPL, the output will be shown like <code>dataframe.show()</code>

+ (see <a href="https://issues.apache.org/jira/browse/SPARK-24215">SPARK-24215</a> for more details).

+ </td>

+</tr>

+<tr>

+ <td><code>spark.sql.repl.eagerEval.maxNumRows</code></td>

+ <td>20</td>

+ <td>

+ Default number of rows in eager evaluation output HTML table generated by <code>_repr_html_</code> or plain text,

+ this only take effect when <code>spark.sql.repl.eagerEval.enabled</code> is set to true.

--- End diff --

take -> takes

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #90834 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/90834/testReport)** for PR 21370 at commit [`ebc0b11`](https://github.com/apache/spark/commit/ebc0b11fd006386d32949f56228e2671297373fc).

* This patch **fails to build**.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by rxin <gi...@git.apache.org>.

Github user rxin commented on the issue:

https://github.com/apache/spark/pull/21370

Can we also do something a bit more generic that works for non-Jupyter notebooks as well? For example, in IPython or just plain Python REPL.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by ueshin <gi...@git.apache.org>.

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189606444

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -292,31 +297,25 @@ class Dataset[T] private[sql](

}

// Create SeparateLine

- val sep: String = colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ val sep: String = if (html) {

+ // Initial append table label

+ sb.append("<table border='1'>\n")

+ "\n"

+ } else {

+ colWidths.map("-" * _).addString(sb, "+", "+", "+\n").toString()

+ }

// column names

- rows.head.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

- StringUtils.leftPad(cell, colWidths(i))

- } else {

- StringUtils.rightPad(cell, colWidths(i))

- }

- }.addString(sb, "|", "|", "|\n")

-

+ appendRowString(rows.head, truncate, colWidths, html, true, sb)

sb.append(sep)

// data

- rows.tail.foreach {

- _.zipWithIndex.map { case (cell, i) =>

- if (truncate > 0) {

- StringUtils.leftPad(cell.toString, colWidths(i))

- } else {

- StringUtils.rightPad(cell.toString, colWidths(i))

- }

- }.addString(sb, "|", "|", "|\n")

+ rows.tail.foreach { row =>

+ appendRowString(row.map(_.toString), truncate, colWidths, html, false, sb)

--- End diff --

I know this is not your change, but the `rows` is already `Seq[Seq[String]]` and the `row` is `Seq[String]`, so I think we can remove it.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r192772218

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -351,8 +354,70 @@ def show(self, n=20, truncate=True, vertical=False):

else:

print(self._jdf.showString(n, int(truncate), vertical))

+ @property

+ def _eager_eval(self):

+ """Returns true if the eager evaluation enabled.

+ """

+ return self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.enabled", "false").lower() == "true"

+

+ @property

+ def _max_num_rows(self):

+ """Returns the max row number for eager evaluation.

+ """

+ return int(self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.maxNumRows", "20"))

+

+ @property

+ def _truncate(self):

+ """Returns the truncate length for eager evaluation.

+ """

+ return int(self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.truncate", "20"))

+

def __repr__(self):

- return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in self.dtypes))

+ if not self._support_repr_html and self._eager_eval:

+ vertical = False

+ return self._jdf.showString(

+ self._max_num_rows, self._truncate, vertical)

+ else:

+ return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in self.dtypes))

+

+ def _repr_html_(self):

+ """Returns a dataframe with html code when you enabled eager evaluation

+ by 'spark.sql.repl.eagerEval.enabled', this only called by REPL you are

+ using support eager evaluation with HTML.

+ """

+ import cgi

+ if not self._support_repr_html:

+ self._support_repr_html = True

+ if self._eager_eval:

+ max_num_rows = max(self._max_num_rows, 0)

+ with SCCallSiteSync(self._sc) as css:

+ vertical = False

+ sock_info = self._jdf.getRowsToPython(

+ max_num_rows, self._truncate, vertical)

+ rows = list(_load_from_socket(sock_info, BatchedSerializer(PickleSerializer())))

+ head = rows[0]

+ row_data = rows[1:]

+ has_more_data = len(row_data) > max_num_rows

+ row_data = row_data[0:max_num_rows]

+

+ html = "<table border='1'>\n<tr><th>"

+ # generate table head

+ html += "</th><th>".join(map(lambda x: cgi.escape(x), head)) + "</th></tr>\n"

+ # generate table rows

+ for row in row_data:

+ data = "<tr><td>" + "</td><td>".join(map(lambda x: cgi.escape(x), row)) + \

+ "</td></tr>\n"

+ html += data

+ html += "</table>\n"

+ if has_more_data:

+ html += "only showing top %d %s\n" % (

--- End diff --

Maybe we need this? Just want to keep same with `df.show()`.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/91410/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by xuanyuanking <gi...@git.apache.org>.

Github user xuanyuanking commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190154231

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/Dataset.scala ---

@@ -358,6 +357,43 @@ class Dataset[T] private[sql](

sb.toString()

}

+ /**

+ * Transform current row string and append to builder

+ *

+ * @param row Current row of string

+ * @param truncate If set to more than 0, truncates strings to `truncate` characters and

+ * all cells will be aligned right.

+ * @param colWidths The width of each column

+ * @param html If set to true, return output as html table.

+ * @param head Set to true while current row is table head.

+ * @param sb StringBuilder for current row.

+ */

+ private[sql] def appendRowString(

+ row: Seq[String],

+ truncate: Int,

+ colWidths: Array[Int],

+ html: Boolean,

+ head: Boolean,

+ sb: StringBuilder): Unit = {

+ val data = row.zipWithIndex.map { case (cell, i) =>

+ if (truncate > 0) {

+ StringUtils.leftPad(cell, colWidths(i))

+ } else {

+ StringUtils.rightPad(cell, colWidths(i))

+ }

+ }

+ (html, head) match {

+ case (true, true) =>

+ data.map(StringEscapeUtils.escapeHtml).addString(

+ sb, "<tr><th>", "</th><th>", "</th></tr>")

--- End diff --

Thanks, done. feb5f4a.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/21370

**[Test build #91206 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/91206/testReport)** for PR 21370 at commit [`425bee1`](https://github.com/apache/spark/commit/425bee1628917859b58dc87faccb7bc6146b7f1f).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by viirya <gi...@git.apache.org>.

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r190251443

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -347,13 +347,30 @@ def show(self, n=20, truncate=True, vertical=False):

name | Bob

"""

if isinstance(truncate, bool) and truncate:

- print(self._jdf.showString(n, 20, vertical))

+ print(self._jdf.showString(n, 20, vertical, False))

else:

- print(self._jdf.showString(n, int(truncate), vertical))

+ print(self._jdf.showString(n, int(truncate), vertical, False))

def __repr__(self):

return "DataFrame[%s]" % (", ".join("%s: %s" % c for c in self.dtypes))

+ def _repr_html_(self):

+ """Returns a dataframe with html code when you enabled eager evaluation

+ by 'spark.sql.repl.eagerEval.enabled', this only called by repr you're

--- End diff --

repr -> repl?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Merged build finished. Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/testing-k8s-prb-make-spark-distribution/3494/

Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #21370: [SPARK-24215][PySpark] Implement _repr_html_ for datafra...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/21370

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/91022/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by gatorsmile <gi...@git.apache.org>.

Github user gatorsmile commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r194287915

--- Diff: python/pyspark/sql/dataframe.py ---

@@ -351,8 +354,68 @@ def show(self, n=20, truncate=True, vertical=False):

else:

print(self._jdf.showString(n, int(truncate), vertical))

+ @property

+ def _eager_eval(self):

+ """Returns true if the eager evaluation enabled.

+ """

+ return self.sql_ctx.getConf(

+ "spark.sql.repl.eagerEval.enabled", "false").lower() == "true"

--- End diff --

In the ongoing release, a nice-to-have refactoring is to move all the Core Confs into a single file just like what we did in Spark SQL Conf. Default values, boundary checking, types and descriptions. Thus, in PySpark, it would be better to do it starting from now.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #21370: [SPARK-24215][PySpark] Implement _repr_html_ for ...

Posted by viirya <gi...@git.apache.org>.

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/21370#discussion_r189509097