You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@mxnet.apache.org by la...@apache.org on 2020/12/08 16:14:25 UTC

[incubator-mxnet] branch master updated: [DOC] Fix warnings in

tutorials and turn on -W (#19624)

This is an automated email from the ASF dual-hosted git repository.

lausen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-mxnet.git

The following commit(s) were added to refs/heads/master by this push:

new 6cbd3ae [DOC] Fix warnings in tutorials and turn on -W (#19624)

6cbd3ae is described below

commit 6cbd3ae772550d3e61c455590cea29373362af02

Author: Sheng Zha <sz...@users.noreply.github.com>

AuthorDate: Tue Dec 8 11:13:09 2020 -0500

[DOC] Fix warnings in tutorials and turn on -W (#19624)

---

ci/docker/runtime_functions.sh | 40 +-

.../normalization/imgs => _static}/NCHW_BN.png | Bin

.../normalization/imgs => _static}/NCHW_IN.png | Bin

.../normalization/imgs => _static}/NCHW_LN.png | Bin

.../normalization/imgs => _static}/NTC_BN.png | Bin

.../normalization/imgs => _static}/NTC_IN.png | Bin

.../normalization/imgs => _static}/NTC_LN.png | Bin

docs/python_docs/_static/autograd_gradient.png | Bin 0 -> 39559 bytes

.../packages/gluon/blocks => _static}/blocks.svg | 0

.../loss/images => _static}/contrastive_loss.jpeg | Bin

.../packages/gluon/loss => _static}/ctc_loss.png | Bin

.../imgs => _static}/data_normalization.jpeg | Bin

.../blocks/activations/images => _static}/elu.png | Bin

.../blocks/activations/images => _static}/gelu.png | Bin

.../gluon/loss/images => _static}/inuktitut_1.png | Bin

.../gluon/loss/images => _static}/inuktitut_2.png | Bin

.../activations/images => _static}/leakyrelu.png | Bin

.../activations/images => _static}/prelu.png | Bin

.../blocks/activations/images => _static}/relu.png | Bin

.../blocks/activations/images => _static}/selu.png | Bin

.../activations/images => _static}/sigmoid.png | Bin

.../blocks/activations/images => _static}/silu.png | Bin

.../activations/images => _static}/softrelu.png | Bin

.../activations/images => _static}/softsign.png | Bin

.../activations/images => _static}/swish.png | Bin

.../blocks/activations/images => _static}/tanh.png | Bin

.../gluon/loss => _static}/triplet_loss.png | Bin

docs/python_docs/python/Makefile_sphinx | 2 +-

docs/python_docs/python/api/gluon/rnn/index.rst | 1 -

docs/python_docs/python/api/kvstore/index.rst | 35 +-

docs/python_docs/python/api/npx/index.rst | 1 +

.../python/tutorials/deploy/export/onnx.md | 12 +-

.../getting-started/crash-course/0-introduction.md | 12 +-

.../getting-started/crash-course/1-nparray.md | 50 +-

.../getting-started/crash-course/2-create-nn.md | 1134 +++++++++++---------

.../getting-started/crash-course/3-autograd.md | 48 +-

.../getting-started/crash-course/4-components.md | 79 +-

.../getting-started/crash-course/5-datasets.md | 98 +-

.../getting-started/crash-course/6-train-nn.md | 106 +-

.../getting-started/crash-course/7-use-gpus.md | 35 +-

.../gluon_from_experiment_to_deployment.md | 19 +-

.../logistic_regression_explained.md | 20 +-

.../tutorials/getting-started/to-mxnet/pytorch.md | 18 +-

.../python/tutorials/packages/autograd/index.md | 12 +-

.../gluon/blocks/activations/activations.md | 48 +-

.../packages/gluon/blocks/custom-layer.md | 2 +-

.../gluon/blocks/custom_layer_beginners.md | 2 +-

.../tutorials/packages/gluon/blocks/hybridize.md | 8 +-

.../python/tutorials/packages/gluon/blocks/init.md | 6 +-

.../python/tutorials/packages/gluon/blocks/nn.md | 32 +-

.../tutorials/packages/gluon/blocks/parameters.md | 22 +-

.../packages/gluon/blocks/save_load_params.md | 10 +-

.../packages/gluon/data/data_augmentation.md | 34 +-

.../tutorials/packages/gluon/data/datasets.md | 32 +-

.../tutorials/packages/gluon/image/info_gan.md | 68 +-

.../python/tutorials/packages/gluon/image/mnist.md | 22 +-

.../tutorials/packages/gluon/loss/custom-loss.md | 14 +-

.../tutorials/packages/gluon/loss/kl_divergence.md | 16 +-

.../python/tutorials/packages/gluon/loss/loss.md | 34 +-

.../packages/gluon/training/fit_api_tutorial.md | 10 +-

.../learning_rates/learning_rate_finder.md | 4 +-

.../learning_rates/learning_rate_schedules.md | 14 +-

.../learning_rate_schedules_advanced.md | 14 +-

.../packages/gluon/training/normalization/index.md | 18 +-

.../tutorials/packages/gluon/training/trainer.md | 24 +-

.../python/tutorials/packages/kvstore/kvstore.md | 2 +-

.../packages/legacy/ndarray/01-ndarray-intro.md | 6 +-

.../legacy/ndarray/02-ndarray-operations.md | 2 +-

.../packages/legacy/ndarray/03-ndarray-contexts.md | 4 +-

.../legacy/ndarray/gotchas_numpy_in_mxnet.md | 20 +-

.../packages/legacy/ndarray/sparse/csr.md | 12 +-

.../packages/legacy/ndarray/sparse/row_sparse.md | 12 +-

.../packages/legacy/ndarray/sparse/train_gluon.md | 74 +-

.../python/tutorials/packages/np/index.rst | 4 +-

.../python/tutorials/packages/np/np-vs-numpy.md | 18 +-

.../tutorials/packages/onnx/fine_tuning_gluon.md | 8 +-

.../packages/onnx/inference_on_onnx_model.md | 21 +-

.../python/tutorials/packages/optimizer/index.md | 61 +-

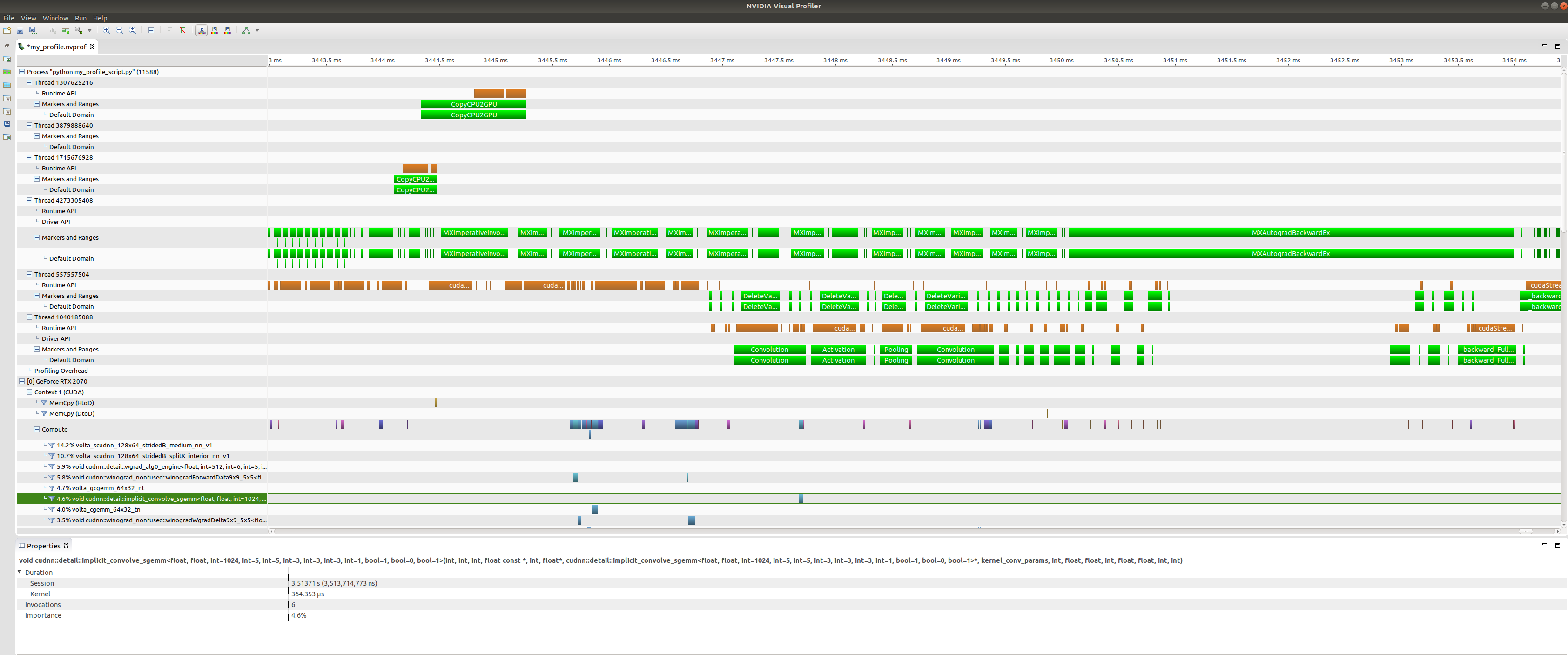

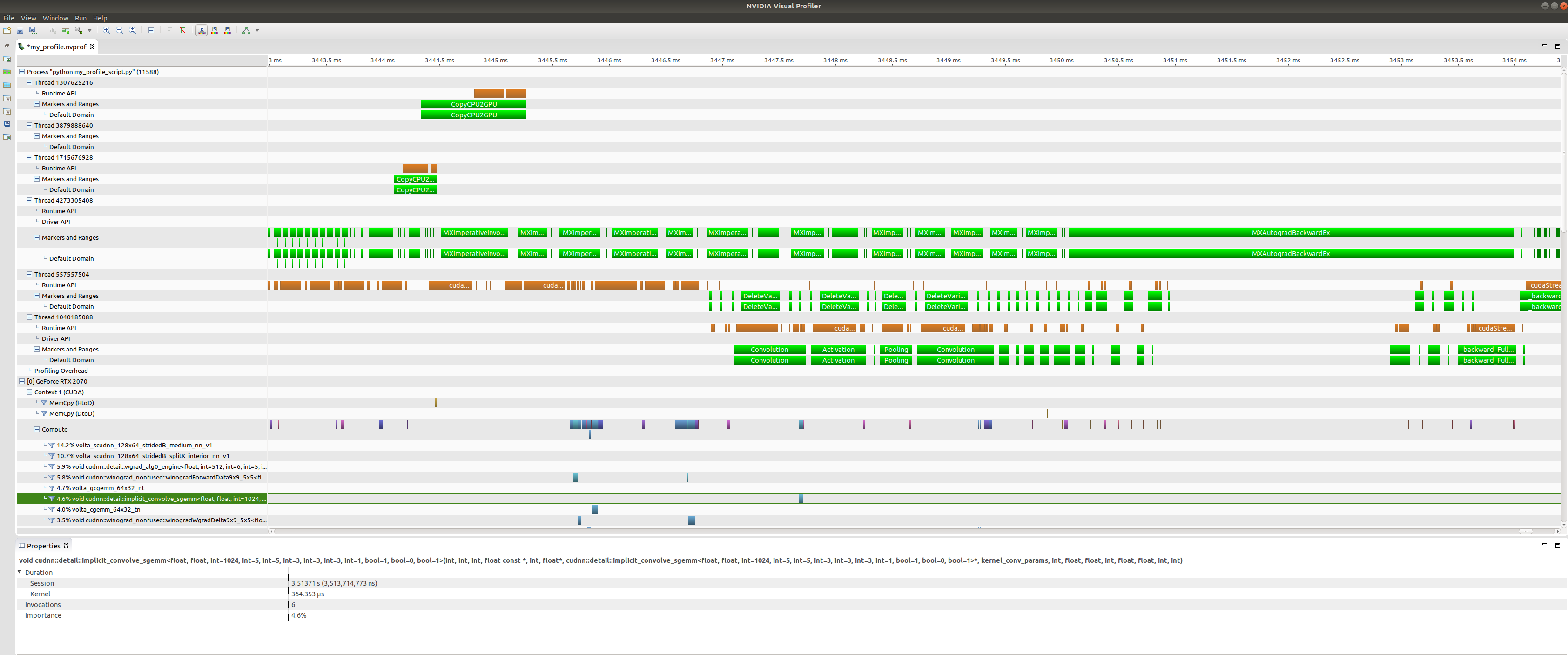

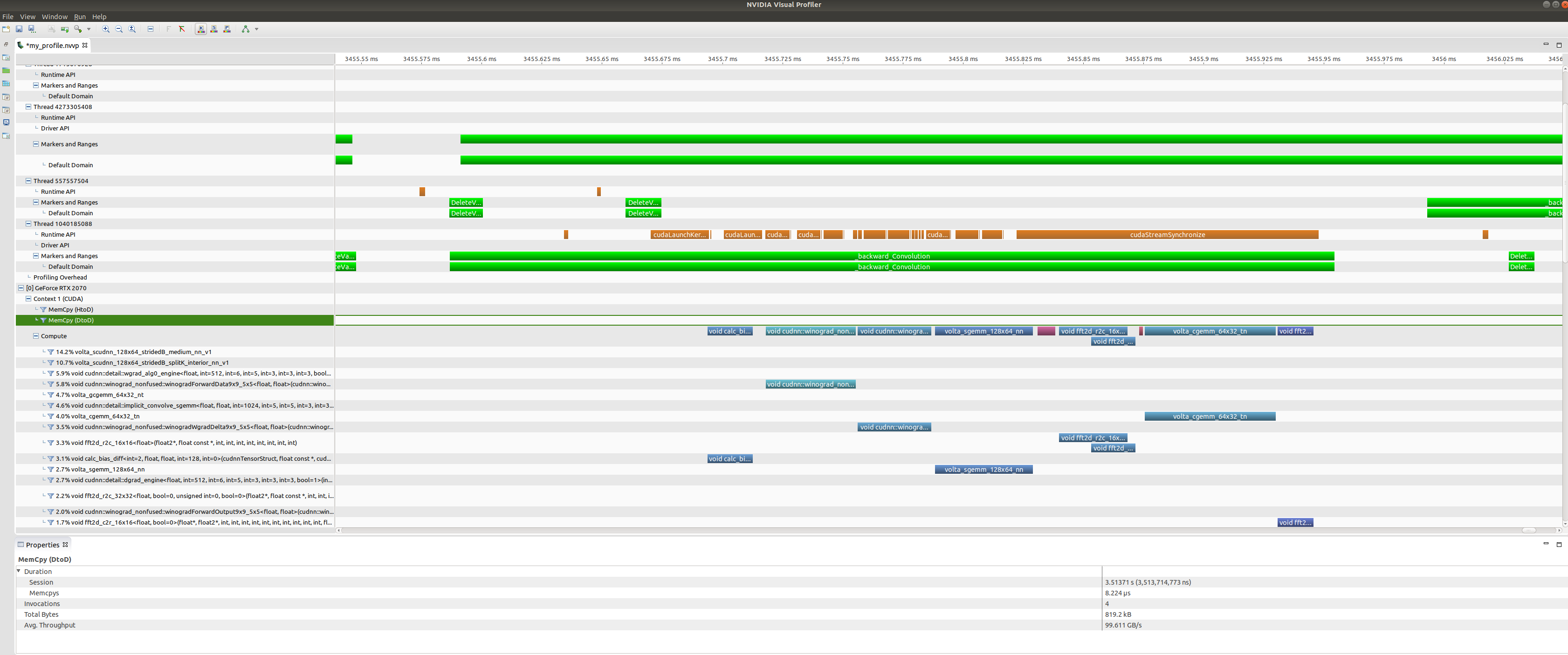

.../tutorials/performance/backend/profiler.md | 14 +-

python/mxnet/gluon/rnn/rnn_cell.py | 6 +-

python/mxnet/numpy/random.py | 70 +-

src/operator/contrib/transformer.cc | 86 +-

82 files changed, 1333 insertions(+), 1243 deletions(-)

diff --git a/ci/docker/runtime_functions.sh b/ci/docker/runtime_functions.sh

index be8cdfb..53330bc 100755

--- a/ci/docker/runtime_functions.sh

+++ b/ci/docker/runtime_functions.sh

@@ -712,6 +712,7 @@ sanity_cpp() {

sanity_python() {

set -ex

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

python3 -m pylint --rcfile=ci/other/pylintrc --ignore-patterns=".*\.so$$,.*\.dll$$,.*\.dylib$$" python/mxnet

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -n 4 tests/tutorials/test_sanity_tutorials.py

}

@@ -728,7 +729,7 @@ cd_unittest_ubuntu() {

export MXNET_SUBGRAPH_VERBOSE=0

export MXNET_ENABLE_CYTHON=0

export CD_JOB=1 # signal this is a CD run so any unecessary tests can be skipped

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

local mxnet_variant=${1:?"This function requires a mxnet variant as the first argument"}

@@ -767,7 +768,7 @@ unittest_ubuntu_python3_cpu() {

export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

export MXNET_SUBGRAPH_VERBOSE=0

export MXNET_ENABLE_CYTHON=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -m 'not serial' -k 'not test_operator' -n 4 --durations=50 --cov-report xml:tests_unittest.xml --verbose tests/python/unittest

MXNET_ENGINE_TYPE=NaiveEngine \

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -m 'not serial' -k 'test_operator' -n 4 --durations=50 --cov-report xml:tests_unittest.xml --cov-append --verbose tests/python/unittest

@@ -781,7 +782,7 @@ unittest_ubuntu_python3_cpu_mkldnn() {

export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

export MXNET_SUBGRAPH_VERBOSE=0

export MXNET_ENABLE_CYTHON=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -m 'not serial' -k 'not test_operator' -n 4 --durations=50 --cov-report xml:tests_unittest.xml --verbose tests/python/unittest

MXNET_ENGINE_TYPE=NaiveEngine \

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -m 'not serial' -k 'test_operator' -n 4 --durations=50 --cov-report xml:tests_unittest.xml --cov-append --verbose tests/python/unittest

@@ -797,7 +798,7 @@ unittest_ubuntu_python3_gpu() {

export MXNET_SUBGRAPH_VERBOSE=0

export CUDNN_VERSION=${CUDNN_VERSION:-7.0.3}

export MXNET_ENABLE_CYTHON=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

MXNET_GPU_MEM_POOL_TYPE=Unpooled \

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -m 'not serial' -k 'not test_operator and not test_amp_init.py' -n 4 --durations=50 --cov-report xml:tests_gpu.xml --verbose tests/python/gpu

MXNET_GPU_MEM_POOL_TYPE=Unpooled \

@@ -816,7 +817,7 @@ unittest_ubuntu_python3_gpu_cython() {

export CUDNN_VERSION=${CUDNN_VERSION:-7.0.3}

export MXNET_ENABLE_CYTHON=1

export MXNET_ENFORCE_CYTHON=1

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

check_cython

MXNET_GPU_MEM_POOL_TYPE=Unpooled \

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -m 'not serial' -k 'not test_operator and not test_amp_init.py' -n 4 --durations=50 --cov-report xml:tests_gpu.xml --verbose tests/python/gpu

@@ -834,7 +835,7 @@ unittest_ubuntu_python3_gpu_nocudnn() {

export MXNET_SUBGRAPH_VERBOSE=0

export CUDNN_OFF_TEST_ONLY=true

export MXNET_ENABLE_CYTHON=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

MXNET_GPU_MEM_POOL_TYPE=Unpooled \

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -m 'not serial' -k 'not test_operator and not test_amp_init.py' -n 4 --durations=50 --cov-report xml:tests_gpu.xml --verbose tests/python/gpu

MXNET_GPU_MEM_POOL_TYPE=Unpooled \

@@ -846,6 +847,7 @@ unittest_ubuntu_python3_gpu_nocudnn() {

unittest_cpp() {

set -ex

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

build/tests/mxnet_unit_tests

}

@@ -853,6 +855,7 @@ unittest_centos7_cpu() {

set -ex

source /opt/rh/rh-python36/enable

cd /work/mxnet

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

OMP_NUM_THREADS=$(expr $(nproc) / 4) python -m pytest -m 'not serial' -k 'not test_operator' -n 4 --durations=50 --cov-report xml:tests_unittest.xml --verbose tests/python/unittest

MXNET_ENGINE_TYPE=NaiveEngine \

OMP_NUM_THREADS=$(expr $(nproc) / 4) python -m pytest -m 'not serial' -k 'test_operator' -n 4 --durations=50 --cov-report xml:tests_unittest.xml --cov-append --verbose tests/python/unittest

@@ -865,7 +868,7 @@ unittest_centos7_gpu() {

source /opt/rh/rh-python36/enable

cd /work/mxnet

export CUDNN_VERSION=${CUDNN_VERSION:-7.0.3}

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

MXNET_GPU_MEM_POOL_TYPE=Unpooled \

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -m 'not serial' -k 'not test_operator and not test_amp_init.py' -n 4 --durations=50 --cov-report xml:tests_gpu.xml --cov-append --verbose tests/python/gpu

MXNET_GPU_MEM_POOL_TYPE=Unpooled \

@@ -879,7 +882,7 @@ integrationtest_ubuntu_cpu_onnx() {

set -ex

export PYTHONPATH=./python/

export MXNET_SUBGRAPH_VERBOSE=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

python3 tests/python/unittest/onnx/backend_test.py

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -n 4 tests/python/unittest/onnx/mxnet_export_test.py

OMP_NUM_THREADS=$(expr $(nproc) / 4) pytest -n 4 tests/python/unittest/onnx/test_models.py

@@ -894,7 +897,7 @@ integrationtest_ubuntu_cpu_dist_kvstore() {

export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

export MXNET_SUBGRAPH_VERBOSE=0

export MXNET_USE_OPERATOR_TUNING=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

cd tests/nightly/

python3 ../../tools/launch.py -n 7 --launcher local python3 dist_sync_kvstore.py --type=gluon_step_cpu

python3 ../../tools/launch.py -n 7 --launcher local python3 dist_sync_kvstore.py --type=gluon_sparse_step_cpu

@@ -910,6 +913,7 @@ integrationtest_ubuntu_cpu_dist_kvstore() {

integrationtest_ubuntu_gpu_dist_kvstore() {

set -ex

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

pushd .

cd /work/mxnet/python

pip3 install -e .

@@ -929,7 +933,7 @@ integrationtest_ubuntu_gpu_byteps() {

git clone -b v0.2.3 https://github.com/bytedance/byteps ~/byteps

export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

export MXNET_SUBGRAPH_VERBOSE=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

cd tests/nightly/

export NVIDIA_VISIBLE_DEVICES=0

@@ -952,7 +956,7 @@ test_ubuntu_cpu_python3() {

set -ex

pushd .

export MXNET_LIBRARY_PATH=/work/build/libmxnet.so

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

VENV=mxnet_py3_venv

virtualenv -p `which python3` $VENV

source $VENV/bin/activate

@@ -976,7 +980,7 @@ unittest_ubuntu_python3_arm() {

export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

export MXNET_SUBGRAPH_VERBOSE=0

export MXNET_ENABLE_CYTHON=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

python3 -m pytest -n 2 --verbose tests/python/unittest/test_engine.py

}

@@ -1010,7 +1014,7 @@ test_rat_check() {

nightly_test_KVStore_singleNode() {

set -ex

export PYTHONPATH=./python/

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

tests/nightly/test_kvstore.py

}

@@ -1018,7 +1022,7 @@ nightly_test_KVStore_singleNode() {

nightly_test_large_tensor() {

set -ex

export PYTHONPATH=./python/

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

pytest --timeout=0 --forked tests/nightly/test_np_large_array.py

}

@@ -1026,7 +1030,7 @@ nightly_test_large_tensor() {

nightly_model_backwards_compat_test() {

set -ex

export PYTHONPATH=/work/mxnet/python/

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

./tests/nightly/model_backwards_compatibility_check/model_backward_compat_checker.sh

}

@@ -1034,7 +1038,7 @@ nightly_model_backwards_compat_test() {

nightly_model_backwards_compat_train() {

set -ex

export PYTHONPATH=./python/

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

./tests/nightly/model_backwards_compatibility_check/train_mxnet_legacy_models.sh

}

@@ -1043,7 +1047,7 @@ nightly_tutorial_test_ubuntu_python3_gpu() {

cd /work/mxnet/docs

export BUILD_VER=tutorial

export MXNET_DOCS_BUILD_MXNET=0

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

make html

export MXNET_STORAGE_FALLBACK_LOG_VERBOSE=0

export MXNET_SUBGRAPH_VERBOSE=0

@@ -1055,7 +1059,7 @@ nightly_tutorial_test_ubuntu_python3_gpu() {

nightly_estimator() {

set -ex

- export DMLC_LOG_STACK_TRACE_DEPTH=10

+ export DMLC_LOG_STACK_TRACE_DEPTH=100

cd /work/mxnet/tests/nightly/estimator

export PYTHONPATH=/work/mxnet/python/

pytest test_estimator_cnn.py

diff --git a/docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NCHW_BN.png b/docs/python_docs/_static/NCHW_BN.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NCHW_BN.png

rename to docs/python_docs/_static/NCHW_BN.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NCHW_IN.png b/docs/python_docs/_static/NCHW_IN.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NCHW_IN.png

rename to docs/python_docs/_static/NCHW_IN.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NCHW_LN.png b/docs/python_docs/_static/NCHW_LN.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NCHW_LN.png

rename to docs/python_docs/_static/NCHW_LN.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NTC_BN.png b/docs/python_docs/_static/NTC_BN.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NTC_BN.png

rename to docs/python_docs/_static/NTC_BN.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NTC_IN.png b/docs/python_docs/_static/NTC_IN.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NTC_IN.png

rename to docs/python_docs/_static/NTC_IN.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NTC_LN.png b/docs/python_docs/_static/NTC_LN.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/NTC_LN.png

rename to docs/python_docs/_static/NTC_LN.png

diff --git a/docs/python_docs/_static/autograd_gradient.png b/docs/python_docs/_static/autograd_gradient.png

new file mode 100644

index 0000000..793fef3

Binary files /dev/null and b/docs/python_docs/_static/autograd_gradient.png differ

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/blocks.svg b/docs/python_docs/_static/blocks.svg

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/blocks.svg

rename to docs/python_docs/_static/blocks.svg

diff --git a/docs/python_docs/python/tutorials/packages/gluon/loss/images/contrastive_loss.jpeg b/docs/python_docs/_static/contrastive_loss.jpeg

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/loss/images/contrastive_loss.jpeg

rename to docs/python_docs/_static/contrastive_loss.jpeg

diff --git a/docs/python_docs/python/tutorials/packages/gluon/loss/ctc_loss.png b/docs/python_docs/_static/ctc_loss.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/loss/ctc_loss.png

rename to docs/python_docs/_static/ctc_loss.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/data_normalization.jpeg b/docs/python_docs/_static/data_normalization.jpeg

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/training/normalization/imgs/data_normalization.jpeg

rename to docs/python_docs/_static/data_normalization.jpeg

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/elu.png b/docs/python_docs/_static/elu.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/elu.png

rename to docs/python_docs/_static/elu.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/gelu.png b/docs/python_docs/_static/gelu.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/gelu.png

rename to docs/python_docs/_static/gelu.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/loss/images/inuktitut_1.png b/docs/python_docs/_static/inuktitut_1.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/loss/images/inuktitut_1.png

rename to docs/python_docs/_static/inuktitut_1.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/loss/images/inuktitut_2.png b/docs/python_docs/_static/inuktitut_2.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/loss/images/inuktitut_2.png

rename to docs/python_docs/_static/inuktitut_2.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/leakyrelu.png b/docs/python_docs/_static/leakyrelu.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/leakyrelu.png

rename to docs/python_docs/_static/leakyrelu.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/prelu.png b/docs/python_docs/_static/prelu.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/prelu.png

rename to docs/python_docs/_static/prelu.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/relu.png b/docs/python_docs/_static/relu.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/relu.png

rename to docs/python_docs/_static/relu.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/selu.png b/docs/python_docs/_static/selu.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/selu.png

rename to docs/python_docs/_static/selu.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/sigmoid.png b/docs/python_docs/_static/sigmoid.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/sigmoid.png

rename to docs/python_docs/_static/sigmoid.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/silu.png b/docs/python_docs/_static/silu.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/silu.png

rename to docs/python_docs/_static/silu.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/softrelu.png b/docs/python_docs/_static/softrelu.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/softrelu.png

rename to docs/python_docs/_static/softrelu.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/softsign.png b/docs/python_docs/_static/softsign.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/softsign.png

rename to docs/python_docs/_static/softsign.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/swish.png b/docs/python_docs/_static/swish.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/swish.png

rename to docs/python_docs/_static/swish.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/tanh.png b/docs/python_docs/_static/tanh.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/blocks/activations/images/tanh.png

rename to docs/python_docs/_static/tanh.png

diff --git a/docs/python_docs/python/tutorials/packages/gluon/loss/triplet_loss.png b/docs/python_docs/_static/triplet_loss.png

similarity index 100%

rename from docs/python_docs/python/tutorials/packages/gluon/loss/triplet_loss.png

rename to docs/python_docs/_static/triplet_loss.png

diff --git a/docs/python_docs/python/Makefile_sphinx b/docs/python_docs/python/Makefile_sphinx

index a90366f..2ddc7dc 100644

--- a/docs/python_docs/python/Makefile_sphinx

+++ b/docs/python_docs/python/Makefile_sphinx

@@ -37,7 +37,7 @@ endif # $(NUMJOBS)

# End number of processors detection

# You can set these variables from the command line.

-SPHINXOPTS = -j$(NPROCS) -c ../scripts --keep-going

+SPHINXOPTS = -j$(NPROCS) -c ../scripts --keep-going -W

SPHINXBUILD = sphinx-build

PAPER =

BUILDDIR = _build

diff --git a/docs/python_docs/python/api/gluon/rnn/index.rst b/docs/python_docs/python/api/gluon/rnn/index.rst

index 637a0a3..9ee3139 100644

--- a/docs/python_docs/python/api/gluon/rnn/index.rst

+++ b/docs/python_docs/python/api/gluon/rnn/index.rst

@@ -25,7 +25,6 @@ Build-in recurrent neural network layers are provided in the following two modul

:nosignatures:

mxnet.gluon.rnn

- mxnet.gluon.contrib.rnn

.. currentmodule:: mxnet.gluon

diff --git a/docs/python_docs/python/api/kvstore/index.rst b/docs/python_docs/python/api/kvstore/index.rst

index f79a719..247256a 100644

--- a/docs/python_docs/python/api/kvstore/index.rst

+++ b/docs/python_docs/python/api/kvstore/index.rst

@@ -15,9 +15,34 @@

specific language governing permissions and limitations

under the License.

-mxnet.kvstore

-=============

+KVStore: Communication for Distributed Training

+===============================================

+.. currentmodule:: mxnet.kvstore

-.. automodule:: mxnet.kvstore

- :members:

- :autosummary:

+

+Horovod

+=======

+

+.. autosummary::

+ :toctree: generated/

+

+ Horovod

+

+BytePS

+======

+

+.. autosummary::

+ :toctree: generated/

+

+ BytePS

+

+

+KVStore Interface

+=================

+

+.. autosummary::

+ :toctree: generated/

+

+ KVStore

+ KVStoreBase

+ KVStoreServer

diff --git a/docs/python_docs/python/api/npx/index.rst b/docs/python_docs/python/api/npx/index.rst

index 4cc2684..e5f1d8a 100644

--- a/docs/python_docs/python/api/npx/index.rst

+++ b/docs/python_docs/python/api/npx/index.rst

@@ -84,6 +84,7 @@ More operators

sigmoid

smooth_l1

softmax

+ log_softmax

topk

waitall

load

diff --git a/docs/python_docs/python/tutorials/deploy/export/onnx.md b/docs/python_docs/python/tutorials/deploy/export/onnx.md

index 4867bc8..60f3b2e 100644

--- a/docs/python_docs/python/tutorials/deploy/export/onnx.md

+++ b/docs/python_docs/python/tutorials/deploy/export/onnx.md

@@ -28,7 +28,7 @@ In this tutorial, we will learn how to use MXNet to ONNX exporter on pre-trained

## Prerequisites

To run the tutorial you will need to have installed the following python modules:

-- [MXNet >= 1.3.0](/get_started)

+- [MXNet >= 1.3.0](https://mxnet.apache.org/get_started)

- [onnx]( https://github.com/onnx/onnx#installation) v1.2.1 (follow the install guide)

*Note:* MXNet-ONNX importer and exporter follows version 7 of ONNX operator set which comes with ONNX v1.2.1.

@@ -44,7 +44,7 @@ logging.basicConfig(level=logging.INFO)

## Downloading a model from the MXNet model zoo

-We download the pre-trained ResNet-18 [ImageNet](http://www.image-net.org/) model from the [MXNet Model Zoo](/api/python/docs/api/gluon/model_zoo/index.html).

+We download the pre-trained ResNet-18 [ImageNet](http://www.image-net.org/) model from the [MXNet Model Zoo](../../../api/gluon/model_zoo/index.rst).

We will also download synset file to match labels.

```{.python .input}

@@ -59,7 +59,7 @@ Now, we have downloaded ResNet-18 symbol, params and synset file on the disk.

## MXNet to ONNX exporter API

-Let us describe the MXNet's `export_model` API.

+Let us describe the MXNet's `export_model` API.

```{.python .input}

help(onnx_mxnet.export_model)

@@ -74,7 +74,7 @@ export_model(sym, params, input_shape, input_type=<type 'numpy.float32'>, onnx_f

Exports the MXNet model file, passed as a parameter, into ONNX model.

Accepts both symbol,parameter objects as well as json and params filepaths as input.

Operator support and coverage - https://cwiki.apache.org/confluence/display/MXNET/MXNet-ONNX+Integration

-

+

Parameters

----------

sym : str or symbol object

@@ -89,7 +89,7 @@ export_model(sym, params, input_shape, input_type=<type 'numpy.float32'>, onnx_f

Path where to save the generated onnx file

verbose : Boolean

If true will print logs of the model conversion

-

+

Returns

-------

onnx_file_path : str

@@ -145,6 +145,6 @@ model_proto = onnx.load_model(converted_model_path)

checker.check_graph(model_proto.graph)

```

-If the converted protobuf format doesn't qualify to ONNX proto specifications, the checker will throw errors, but in this case it successfully passes.

+If the converted protobuf format doesn't qualify to ONNX proto specifications, the checker will throw errors, but in this case it successfully passes.

This method confirms exported model protobuf is valid. Now, the model is ready to be imported in other frameworks for inference!

diff --git a/docs/python_docs/python/tutorials/getting-started/crash-course/0-introduction.md b/docs/python_docs/python/tutorials/getting-started/crash-course/0-introduction.md

index 190bf13..c5093e2 100644

--- a/docs/python_docs/python/tutorials/getting-started/crash-course/0-introduction.md

+++ b/docs/python_docs/python/tutorials/getting-started/crash-course/0-introduction.md

@@ -33,9 +33,9 @@ Apache MXNet is an open-source deep learning framework that provides a comprehen

#### Tensors A.K.A Arrays

-Tensors give us a generic way of describing $n$-dimensional **arrays** with an arbitrary number of axes. Vectors, for example, are first-order tensors, and matrices are second-order tensors. Tensors with more than two orders(axes) do not have special mathematical names. The [ndarray](https://mxnet.apache.org/versions/1.7/api/python/docs/api/ndarray/index.html) package in MXNet provides a tensor implementation. This class is similar to NumPy's ndarray with additional features. First, MXNe [...]

+Tensors give us a generic way of describing $n$-dimensional **arrays** with an arbitrary number of axes. Vectors, for example, are first-order tensors, and matrices are second-order tensors. Tensors with more than two orders(axes) do not have special mathematical names. The [NP](../../../api/np/index.rst) package in MXNet provides a NumPy-compatible tensor implementation, `np.ndarray` with additional features. First, MXNet’s `np.ndarray` supports fast execution on a wide range of hardwar [...]

-You will get familiar to arrays in the [next section](1-nparray.md) of this crash course.

+You will get familiar to arrays in the [next section](./1-nparray.ipynb) of this crash course.

### Computing paradigms

@@ -44,9 +44,9 @@ You will get familiar to arrays in the [next section](1-nparray.md) of this cras

Neural network designs like [ResNet-152](https://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/He_Deep_Residual_Learning_CVPR_2016_paper.pdf) have a fair degree of regularity. They consist of _blocks_ of repeated (or at least similarly designed) layers; these blocks then form the basis of more complex network designs. A block can be a single layer, a component consisting of multiple layers, or the entire complex neural network itself! One benefit of working with the block abs [...]

-From a programming standpoint, a block is represented by a class and [Block](https://mxnet.apache.org/versions/1.7/api/python/docs/api/gluon/nn/index.html#mxnet.gluon.nn.Block) is the base class for all neural networks layers in MXNet. Any subclass of it must define a forward propagation function that transforms its input into output and must store any necessary parameters if required.

+From a programming standpoint, a block is represented by a class and [Block](../../../api/gluon/block.rst#mxnet.gluon.Block) is the base class for all neural networks layers in MXNet. Any subclass of it must define a forward propagation function that transforms its input into output and must store any necessary parameters if required.

-You will see more about blocks in [Array](1-nparray.md) and [Create neural network](2-create-nn.md) sections.

+You will see more about blocks in [Array](./1-nparray.ipynb) and [Create neural network](./2-create-nn.ipynb) sections.

#### HybridBlock

@@ -62,7 +62,7 @@ You can learn more about the difference between symbolic vs. imperative programm

When designing MXNet, developers considered whether it was possible to harness the benefits of both imperative and symbolic programming. The developers believed that users should be able to develop and debug using pure imperative programming, while having the ability to convert most programs into symbolic programming to be run when product-level computing performance and deployment are required.

-In hybrid programming, you can build models using either the [HybridBlock](https://mxnet.apache.org/versions/1.7/api/python/docs/api/gluon/hybrid_block.html) or the [HybridSequential](https://mxnet.apache.org/versions/1.6/api/python/docs/api/gluon/nn/index.html#mxnet.gluon.nn.HybridSequential) and [HybridConcurrent](https://mxnet.incubator.apache.org/versions/1.7/api/python/docs/api/gluon/contrib/index.html#mxnet.gluon.contrib.nn.HybridConcurrent) classes. By default, they are executed i [...]

+In hybrid programming, you can build models using either the [HybridBlock](../../../api/gluon/hybrid_block.rst#mxnet.gluon.HybridBlock) or the [HybridSequential](../../../api/gluon/nn/index.rst#mxnet.gluon.nn.HybridSequential) and [HybridConcatenate](../../../api/gluon/nn/index.rst#mxnet.gluon.nn.HybridConcatenate) classes. By default, they are executed in the same way [Block](../../../api/gluon/block.rst#mxnet.gluon.Block) or [Sequential](../../../api/gluon/nn/index.rst#mxnet.gluon.nn.S [...]

You will learn more about hybrid blocks and use them in the upcoming sections of the course.

@@ -72,7 +72,7 @@ Gluon is an imperative high-level front end API in MXNet for deep learning that

## Next steps

-Dive deeper on [array representations](1-nparray.md) in MXNet.

+Dive deeper on [array representations](./1-nparray.ipynb) in MXNet.

## References

1. [Dive into Deep Learning](http://d2l.ai/)

diff --git a/docs/python_docs/python/tutorials/getting-started/crash-course/1-nparray.md b/docs/python_docs/python/tutorials/getting-started/crash-course/1-nparray.md

index 79f2d9c..112e9ee 100644

--- a/docs/python_docs/python/tutorials/getting-started/crash-course/1-nparray.md

+++ b/docs/python_docs/python/tutorials/getting-started/crash-course/1-nparray.md

@@ -28,7 +28,7 @@ To get started, run the following commands to import the `np` package together

with the NumPy extensions package `npx`. Together, `np` with `npx` make up the

NP on MXNet front end.

-```python

+```{.python .input}

import mxnet as mx

from mxnet import np, npx

npx.set_np() # Activate NumPy-like mode.

@@ -38,21 +38,21 @@ In this step, create a 2D array (also called a matrix). The following code

example creates a matrix with values from two sets of numbers: 1, 2, 3 and 4, 5,

6. This might also be referred to as a tuple of a tuple of integers.

-```python

+```{.python .input}

np.array(((1, 2, 3), (5, 6, 7)))

```

You can also create a very simple matrix with the same shape (2 rows by 3

columns), but fill it with 1's.

-```python

+```{.python .input}

x = np.full((2, 3), 1)

x

```

Alternatively, you could use the following array creation routine.

-```python

+```{.python .input}

x = np.ones((2, 3))

x

```

@@ -61,7 +61,7 @@ You can create arrays whose values are sampled randomly. For example, sampling

values uniformly between -1 and 1. The following code example creates the same

shape, but with random sampling.

-```python

+```{.python .input}

y = np.random.uniform(-1, 1, (2, 3))

y

```

@@ -73,27 +73,27 @@ addition, `.dtype` tells the data type of the stored values. As you notice when

we generate random uniform values we generate `float32` not `float64` as normal

NumPy arrays.

-```python

+```{.python .input}

(x.shape, x.size, x.dtype)

```

You could also specifiy the datatype when you create your ndarray.

-```python

+```{.python .input}

x = np.full((2, 3), 1, dtype="int8")

x.dtype

```

Versus the default of `float32`.

-```python

+```{.python .input}

x = np.full((2, 3), 1)

x.dtype

```

When we multiply, by default we use the datatype with the most precision.

-```python

+```{.python .input}

x = x.astype("int8") + x.astype(int) + x.astype("float32")

x.dtype

```

@@ -104,48 +104,48 @@ A ndarray supports a large number of standard mathematical operations. Here are

some examples. You can perform element-wise multiplication by using the

following code example.

-```python

+```{.python .input}

x * y

```

You can perform exponentiation by using the following code example.

-```python

+```{.python .input}

np.exp(y)

```

You can also find a matrix’s transpose to compute a proper matrix-matrix product

by using the following code example.

-```python

+```{.python .input}

np.dot(x, y.T)

```

Alternatively, you could use the matrix multiplication function.

-```python

+```{.python .input}

np.matmul(x, y.T)

```

You can leverage built in operators, like summation.

-```python

+```{.python .input}

x.sum()

```

You can also gather a mean value.

-```python

+```{.python .input}

x.mean()

```

You can perform flatten and reshape just like you normally would in NumPy!

-```python

+```{.python .input}

x.flatten()

```

-```python

+```{.python .input}

x.reshape(6, 1)

```

@@ -155,19 +155,19 @@ The ndarrays support slicing in many ways you might want to access your data.

The following code example shows how to read a particular element, which returns

a 1D array with shape `(1,)`.

-```python

+```{.python .input}

y[1, 2]

```

This example shows how to read the second and third columns from `y`.

-```python

+```{.python .input}

y[:, 1:3]

```

This example shows how to write to a specific element.

-```python

+```{.python .input}

y[:, 1:3] = 2

y

```

@@ -175,7 +175,7 @@ y

You can perform multi-dimensional slicing, which is shown in the following code

example.

-```python

+```{.python .input}

y[1:2, 0:2] = 4

y

```

@@ -185,12 +185,12 @@ y

You can convert MXNet ndarrays to and from NumPy ndarrays, as shown in the

following example. The converted arrays do not share memory.

-```python

+```{.python .input}

a = x.asnumpy()

(type(a), a)

```

-```python

+```{.python .input}

a = np.array(a)

(type(a), a)

```

@@ -198,7 +198,7 @@ a = np.array(a)

Additionally, you can move them to different GPU contexts. You will dive more

into this later, but here is an example for now.

-```python

+```{.python .input}

a.copyto(mx.gpu(0))

```

@@ -208,4 +208,4 @@ Ndarrays also have some additional features which make Deep Learning possible

and efficient. Namely, differentiation, and being able to leverage GPU's.

Another important feature of ndarrays that we will discuss later is

autograd. But first, we will abstract an additional level and talk about building

-Neural Network Layers [Step 2: Create a neural network](2-create-nn.md)

+Neural Network Layers [Step 2: Create a neural network](./2-create-nn.ipynb)

diff --git a/docs/python_docs/python/tutorials/getting-started/crash-course/2-create-nn.md b/docs/python_docs/python/tutorials/getting-started/crash-course/2-create-nn.md

index b80d50d..494e786 100644

--- a/docs/python_docs/python/tutorials/getting-started/crash-course/2-create-nn.md

+++ b/docs/python_docs/python/tutorials/getting-started/crash-course/2-create-nn.md

@@ -1,533 +1,609 @@

-<!--- Licensed to the Apache Software Foundation (ASF) under one -->

-<!--- or more contributor license agreements. See the NOTICE file -->

-<!--- distributed with this work for additional information -->

-<!--- regarding copyright ownership. The ASF licenses this file -->

-<!--- to you under the Apache License, Version 2.0 (the -->

-<!--- "License"); you may not use this file except in compliance -->

-<!--- with the License. You may obtain a copy of the License at -->

-

-<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

-

-<!--- Unless required by applicable law or agreed to in writing, -->

-<!--- software distributed under the License is distributed on an -->

-<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

-<!--- KIND, either express or implied. See the License for the -->

-<!--- specific language governing permissions and limitations -->

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

<!--- under the License. -->

-# Step 2: Create a neural network

-

-In this step, you learn how to use NP on Apache MXNet to create neural networks

-in Gluon. In addition to the `np` package that you learned about in the previous

-step [Step 1: Manipulate data with NP on MXNet](1-nparray.md), you also need to

-import the neural network modules from `gluon`. Gluon includes built-in neural

-network layers in the following two modules:

-

-1. `mxnet.gluon.nn`: NN module that maintained by the mxnet team

-2. `mxnet.gluon.contrib.nn`: Experiemental module that is contributed by the

-community

-

-Use the following commands to import the packages required for this step.

-

-```python

-from mxnet import np, npx

-from mxnet.gluon import nn

-npx.set_np() # Change MXNet to the numpy-like mode.

-```

-

-## Create your neural network's first layer

-

-In this section, you will create a simple neural network with Gluon. One of the

-simplest network you can create is a single **Dense** layer or **densely-

-connected** layer. A dense layer consists of nodes in the input that are

-connected to every node in the next layer. Use the following code example to

-start with a dense layer with five output units.

-

-```python

-layer = nn.Dense(5)

-layer

-# output: Dense(-1 -> 5, linear)

-```

-

-In the example above, the output is `Dense(-1 -> 5, linear)`. The **-1** in the

-output denotes that the size of the input layer is not specified during

-initialization.

-

-You can also call the **Dense** layer with an `in_units` parameter if you know

-the shape of your input unit.

-

-```python

-layer = nn.Dense(5,in_units=3)

-layer

-```

-

-In addition to the `in_units` param, you can also add an activation function to

-the layer using the `activation` param. The Dense layer implements the operation

-

-$$output = \sigma(W \cdot X + b)$$

-

-Call the Dense layer with an `activation` parameter to use an activation

-function.

-

-```python

-layer = nn.Dense(5, in_units=3,activation='relu')

-```

-

-Voila! Congratulations on creating a simple neural network. But for most of your

-use cases, you will need to create a neural network with more than one dense

-layer or with multiple types of other layers. In addition to the `Dense` layer,

-you can find more layers at [mxnet nn

-layers](https://mxnet.apache.org/versions/1.6/api/python/docs/api/gluon/nn/index.html#module-

-mxnet.gluon.nn)

-

-So now that you have created a neural network, you are probably wondering how to

-pass data into your network?

-

-First, you need to initialize the network weights, if you use the default

-initialization method which draws random values uniformly in the range $[-0.7,

-0.7]$. You can see this in the following example.

-

-**Note**: Initialization is discussed at a little deeper detail in the next

-notebook

-

-```python

-layer.initialize()

-```

-

-Now that you have initialized your network, you can give it data. Passing data

-through a network is also called a forward pass. You can do a forward pass with

-random data, shown in the following example. First, you create a `(10,3)` shape

-random input `x` and feed the data into the layer to compute the output.

-

-```python

-x = np.random.uniform(-1,1,(10,3))

-layer(x)

-```

-

-The layer produces a `(10,5)` shape output from your `(10,3)` input.

-

-**When you don't specify the `in_unit` parameter, the system automatically

-infers it during the first time you feed in data during the first forward step

-after you create and initialize the weights.**

-

-

-```python

-layer.params

-```

-

-The `weights` and `bias` can be accessed using the `.data()` method.

-

-```python

-layer.weight.data()

-```

-

-## Chain layers into a neural network using nn.Sequential

-

-Sequential provides a special way of rapidly building networks when when the

-network architecture follows a common design pattern: the layers look like a

-stack of pancakes. Many networks follow this pattern: a bunch of layers, one

-stacked on top of another, where the output of each layer is fed directly to the

-input to the next layer. To use sequential, simply provide a list of layers

-(pass in the layers by calling `net.add(<Layer goes here!>`). To do this you can

-use your previous example of Dense layers and create a 3-layer multi layer

-perceptron. You can create a sequential block using `nn.Sequential()` method and

-add layers using `add()` method.

-

-```python

-net = nn.Sequential()

-

-net.add(nn.Dense(5,in_units=3,activation='relu'),

- nn.Dense(25, activation='relu'), nn.Dense(2) )

-net

-```

-

-The layers are ordered exactly the way you defined your neural network with

-index starting from 0. You can access the layers by indexing the network using

-`[]`.

-

-```python

-net[1]

-```

-

-## Create a custom neural network architecture flexibly

-

-`nn.Sequential()` allows you to create your multi-layer neural network with

-existing layers from `gluon.nn`. It also includes a pre-defined `forward()`

-function that sequentially executes added layers. But what if the built-in

-layers are not sufficient for your needs. If you want to create networks like

-ResNet which has complex but repeatable components, how do you create such a

-network?

-

-In gluon, every neural network layer is defined by using a base class

-`nn.Block()`. A Block has one main job - define a forward method that takes some

-input x and generates an output. A Block can just do something simple like apply

-an activation function. It can combine multiple layers together in a single

-block or also combine a bunch of other Blocks together in creative ways to

-create complex networks like Resnet. In this case, you will construct three

-Dense layers. The `forward()` method can then invoke the layers in turn to

-generate its output.

-

-Create a subclass of `nn.Block` and implement two methods by using the following

-code.

-

-- `__init__` create the layers

-- `forward` define the forward function.

-

-```

-class Net(nn.Block):

- def __init__(self): super().__init__()

- def forward(self, x): return x```

-

-```python

-class MLP(nn.Block):

- def __init__(self): super().__init__() self.dense1 = nn.Dense(5,activation='relu') self.dense2 = nn.Dense(25,activation='relu') self.dense3 = nn.Dense(2)

- def forward(self, x): layer1 = self.dense1(x) layer2 = self.dense2(layer1) layer3 = self.dense3(layer2) return layer3 net = MLP()

-net

-```

-

-```python

-net.dense1.params

-```

-Each layer includes parameters that are stored in a `Parameter` class. You can

-access them using the `params()` method.

-

-## Creating custom layers using Parameters (Blocks API)

-

-MXNet includes a `Parameter` method to hold your parameters in each layer. You

-can create custom layers using the `Parameter` class to include computation that

-may otherwise be not included in the built-in layers. For example, for a dense

-layer, the weights and biases will be created using the `Parameter` method. But

-if you want to add additional computation to the dense layer, you can create it

-using parameter method.

-

-Instantiate a parameter, e.g weights with a size `(5,0)` using the `shape`

-argument.

-

-```python

-from mxnet.gluon import Parameter

-

-weight = Parameter("custom_parameter_weight",shape=(5,-1))

-bias = Parameter("custom_parameter_bias",shape=(5,-1))

-

-weight,bias

-```

-

-The `Parameter` method includes a `grad_req` argument that specifies how you

-want to capture gradients for this Parameter. Under the hood, that lets gluon

-know that it has to call `.attach_grad()` on the underlying array. By default,

-the gradient is updated everytime the gradient is written to the grad

-`grad_req='write'`.

-

-Now that you know how parameters work, you are ready to create your very own

-fully-connected custom layer.

-

-To create the custom layers using parameters, you can use the same skeleton with

-`nn.Block` base class. You will create a custom dense layer that takes parameter

-x and returns computed `w*x + b` without any activation function

-

-```python

-class custom_layer(nn.Block):

- def __init__(self,out_units,in_units=0): super().__init__() self.weight = Parameter("weight",shape=(in_units,out_units),allow_deferred_init=True) self.bias = Parameter("bias",shape=(out_units,),allow_deferred_init=True)

- def forward(self, x): return np.dot(x, self.weight.data()) + self.bias.data()```

-

-Parameter can be instantiated before the corresponding data is instantiated. For

-example, when you instantiate a Block but the shapes of each parameter still

-need to be inferred, the Parameter will wait for the shape to be inferred before

-allocating memory.

-

-```python

-dense = custom_layer(3,in_units=5)

-dense.initialize()

-dense(np.random.uniform(size=(4, 5)))

-```

-

-Similarly, you can use the following code to implement a famous network called

-[LeNet](http://yann.lecun.com/exdb/lenet/) through `nn.Block` using the built-in

-`Dense` layer and using `custom_layer` as the last layer

-

-```python

-class LeNet(nn.Block):

- def __init__(self): super().__init__() self.conv1 = nn.Conv2D(channels=6, kernel_size=3, activation='relu') self.pool1 = nn.MaxPool2D(pool_size=2, strides=2) self.conv2 = nn.Conv2D(channels=16, kernel_size=3, activation='relu') self.pool2 = nn.MaxPool2D(pool_size=2, strides=2) self.dense1 = nn.Dense(120, activation="relu") self.dense2 = nn.Dense(84, activation="relu") self.dense3 = nn.Dense(10)

- def forward(self, x): x = self.conv1(x) x = self.pool1(x) x = self.conv2(x) x = self.pool2(x) x = self.dense1(x) x = self.dense2(x) x = self.dense3(x) return x Lenet = LeNet()

-```

-

-```python

-class LeNet_custom(nn.Block):

- def __init__(self): super().__init__() self.conv1 = nn.Conv2D(channels=6, kernel_size=3, activation='relu') self.pool1 = nn.MaxPool2D(pool_size=2, strides=2) self.conv2 = nn.Conv2D(channels=16, kernel_size=3, activation='relu') self.pool2 = nn.MaxPool2D(pool_size=2, strides=2) self.dense1 = nn.Dense(120, activation="relu") self.dense2 = nn.Dense(84, activation="relu") self.dense3 = custom_layer(10,84)

- def forward(self, x): x = self.conv1(x) x = self.pool1(x) x = self.conv2(x) x = self.pool2(x) x = self.dense1(x) x = self.dense2(x) x = self.dense3(x) return x Lenet_custom = LeNet_custom()

-```

-

-```python

-image_data = np.random.uniform(-1,1, (1,1,28,28))

-

-Lenet.initialize()

-Lenet_custom.initialize()

-

-print("Lenet:")

-print(Lenet(image_data))

-

-print("Custom Lenet:")

-print(Lenet_custom(image_data))

-```

-

-

-You can use `.data` method to access the weights and bias of a particular layer.

-For example, the following accesses the first layer's weight and sixth layer's bias.

-

-```python

-Lenet.conv1.weight.data().shape, Lenet.dense1.bias.data().shape

-```

-

-## Using predefined (pretrained) architectures

-

-Till now, you have seen how to create your own neural network architectures. But

-what if you want to replicate or baseline your dataset using some of the common

-models in computer visions or natural language processing (NLP). Gluon includes

-common architectures that you can directly use. The Gluon Model Zoo provides a

-collection of off-the-shelf models e.g. RESNET, BERT etc. These architectures

-are found at:

-

-- [Gluon CV model zoo](https://gluon-cv.mxnet.io/model_zoo/index.html)

-

-- [Gluon NLP model zoo](https://gluon-nlp.mxnet.io/model_zoo/index.html)

-

-```python

-from mxnet.gluon import model_zoo

-

-net = model_zoo.vision.resnet50_v2(pretrained=True)

-net.hybridize()

-

-dummy_input = np.ones(shape=(1,3,224,224))

-output = net(dummy_input)

-output.shape

-```

-

-## Deciding the paradigm for your network

-

-In MXNet, Gluon API (Imperative programming paradigm) provides a user friendly

-way for quick prototyping, easy debugging and natural control flow for people

-familiar with python programming.

-

-However, at the backend, MXNET can also convert the network using Symbolic or

-Declarative programming into static graphs with low level optimizations on

-operators. However, static graphs are less flexible because any logic must be

-encoded into the graph as special operators like scan, while_loop and cond. It’s

-also hard to debug.

-

-So how can you make use of symbolic programming while getting the flexibility of

-imperative programming to quickly prototype and debug?

-

-Enter **HybridBlock**

-

-HybridBlocks can run in a fully imperatively way where you define their

-computation with real functions acting on real inputs. But they’re also capable

-of running symbolically, acting on placeholders. Gluon hides most of this under

-the hood so you will only need to know how it works when you want to write your

-own layers.

-

-```python

-net_hybrid_seq = nn.HybridSequential()

-

-net_hybrid_seq.add(nn.Dense(5,in_units=3,activation='relu'),

- nn.Dense(25, activation='relu'), nn.Dense(2) )

-net_hybrid_seq

-```

-

-To compile and optimize `HybridSequential`, you can call its `hybridize` method.

-

-```python

-net_hybrid_seq.hybridize()

-```

-

-

-## Creating custom layers using Parameters (HybridBlocks API)

-

-When you instantiated your custom layer, you specified the input dimension

-`in_units` that initializes the weights with the shape specified by `in_units`

-and `out_units`. If you leave the shape of `in_unit` as unknown, you defer the

-shape to the first forward pass. For the custom layer, you define the

-`infer_shape()` method and let the shape be inferred at runtime.

-

-```python

-class custom_layer(nn.HybridBlock):

- def __init__(self,out_units,in_units=-1): super().__init__() self.weight = Parameter("weight",shape=(in_units,out_units),allow_deferred_init=True) self.bias = Parameter("bias",shape=(out_units,),allow_deferred_init=True) def forward(self, x):

- print(self.weight.shape,self.bias.shape) return np.dot(x, self.weight.data()) + self.bias.data() def infer_shape(self, x):

- print(self.weight.shape,x.shape) self.weight.shape = (x.shape[-1],self.weight.shape[1]) dense = custom_layer(3)

-dense.initialize()

-dense(np.random.uniform(size=(4, 5)))

-```

-

-### Performance

-

-To get a sense of the speedup from hybridizing, you can compare the performance

-before and after hybridizing by measuring the time it takes to make 1000 forward

-passes through the network.

-

-```python

-from time import time

-

-def benchmark(net, x):

- y = net(x) start = time() for i in range(1,1000): y = net(x) return time() - start

-x_bench = np.random.normal(size=(1,512))

-

-net_hybrid_seq = nn.HybridSequential()

-

-net_hybrid_seq.add(nn.Dense(256,activation='relu'),

- nn.Dense(128, activation='relu'), nn.Dense(2) )net_hybrid_seq.initialize()

-

-print('Before hybridizing: %.4f sec'%(benchmark(net_hybrid_seq, x_bench)))

-net_hybrid_seq.hybridize()

-print('After hybridizing: %.4f sec'%(benchmark(net_hybrid_seq, x_bench)))

-```

-

-Peeling back another layer, you also have a `HybridBlock` which is the hybrid

-version of the `Block` API.

-

-Similar to the `Blocks` API, you define a `forward` function for `HybridBlock`

-that takes an input `x`. MXNet takes care of hybridizing the model at the

-backend so you don't have to make changes to your code to convert it to a

-symbolic paradigm.

-

-```python

-from mxnet.gluon import HybridBlock

-

-class MLP_Hybrid(HybridBlock):

- def __init__(self): super().__init__() self.dense1 = nn.Dense(256,activation='relu') self.dense2 = nn.Dense(128,activation='relu') self.dense3 = nn.Dense(2)

- def forward(self, x): layer1 = self.dense1(x) layer2 = self.dense2(layer1) layer3 = self.dense3(layer2) return layer3 net_Hybrid = MLP_Hybrid()

-net_Hybrid.initialize()

-

-print('Before hybridizing: %.4f sec'%(benchmark(net_Hybrid, x_bench)))

-net_Hybrid.hybridize()

-print('After hybridizing: %.4f sec'%(benchmark(net_Hybrid, x_bench)))

-```

-

-Given a HybridBlock whose forward computation consists of going through other

-HybridBlocks, you can compile that section of the network by calling the

-HybridBlocks `.hybridize()` method.

-

-All of MXNet’s predefined layers are HybridBlocks. This means that any network

-consisting entirely of predefined MXNet layers can be compiled and run at much

-faster speeds by calling `.hybridize()`.

-

-## Saving and Loading your models

-

-The Blocks API also includes saving your models during and after training so

-that you can host the model for inference or avoid training the model again from

-scratch. Another reason would be to train your model using one language (like

-Python that has a lot of tools for training) and run inference using a different

-language.

-

-There are two ways to save your model in MXNet.

-1. Save/load the model weights/parameters only

-2. Save/load the model weights/parameters and the architectures

-

+# Step 2: Create a neural network

+

+In this step, you learn how to use NP on Apache MXNet to create neural networks

+in Gluon. In addition to the `np` package that you learned about in the previous

+step [Step 1: Manipulate data with NP on MXNet](./1-nparray.ipynb), you also need to

+import the neural network modules from `gluon`. Gluon includes built-in neural

+network layers in the following two modules:

+

+1. `mxnet.gluon.nn`: NN module that maintained by the mxnet team

+2. `mxnet.gluon.contrib.nn`: Experiemental module that is contributed by the

+community

+

+Use the following commands to import the packages required for this step.

+

+```{.python .input}

+from mxnet import np, npx

+from mxnet.gluon import nn

+npx.set_np() # Change MXNet to the numpy-like mode.

+```

+

+## Create your neural network's first layer

+

+In this section, you will create a simple neural network with Gluon. One of the

+simplest network you can create is a single **Dense** layer or **densely-

+connected** layer. A dense layer consists of nodes in the input that are

+connected to every node in the next layer. Use the following code example to

+start with a dense layer with five output units.

+

+```{.python .input}

+layer = nn.Dense(5)

+layer

+# output: Dense(-1 -> 5, linear)

+```

+

+In the example above, the output is `Dense(-1 -> 5, linear)`. The **-1** in the

+output denotes that the size of the input layer is not specified during

+initialization.

+

+You can also call the **Dense** layer with an `in_units` parameter if you know

+the shape of your input unit.

+

+```{.python .input}

+layer = nn.Dense(5,in_units=3)

+layer

+```

+

+In addition to the `in_units` param, you can also add an activation function to

+the layer using the `activation` param. The Dense layer implements the operation

+

+$$output = \sigma(W \cdot X + b)$$

+

+Call the Dense layer with an `activation` parameter to use an activation

+function.

+

+```{.python .input}

+layer = nn.Dense(5, in_units=3,activation='relu')

+```

+

+Voila! Congratulations on creating a simple neural network. But for most of your

+use cases, you will need to create a neural network with more than one dense

+layer or with multiple types of other layers. In addition to the `Dense` layer,

+you can find more layers at [mxnet nn layers](../../../api/gluon/nn/index.rst#module-mxnet.gluon.nn)

+

+So now that you have created a neural network, you are probably wondering how to

+pass data into your network?

+

+First, you need to initialize the network weights, if you use the default

+initialization method which draws random values uniformly in the range $[-0.7,

+0.7]$. You can see this in the following example.

+

+**Note**: Initialization is discussed at a little deeper detail in the next

+notebook

+

+```{.python .input}

+layer.initialize()

+```

+

+Now that you have initialized your network, you can give it data. Passing data

+through a network is also called a forward pass. You can do a forward pass with

+random data, shown in the following example. First, you create a `(10,3)` shape

+random input `x` and feed the data into the layer to compute the output.

+

+```{.python .input}

+x = np.random.uniform(-1,1,(10,3))

+layer(x)

+```

+

+The layer produces a `(10,5)` shape output from your `(10,3)` input.

+

+**When you don't specify the `in_unit` parameter, the system automatically

+infers it during the first time you feed in data during the first forward step

+after you create and initialize the weights.**

+

+

+```{.python .input}

+layer.params

+```

+

+The `weights` and `bias` can be accessed using the `.data()` method.

+

+```{.python .input}

+layer.weight.data()

+```

+

+## Chain layers into a neural network using nn.Sequential

+

+Sequential provides a special way of rapidly building networks when when the

+network architecture follows a common design pattern: the layers look like a

+stack of pancakes. Many networks follow this pattern: a bunch of layers, one

+stacked on top of another, where the output of each layer is fed directly to the

+input to the next layer. To use sequential, simply provide a list of layers

+(pass in the layers by calling `net.add(<Layer goes here!>`). To do this you can

+use your previous example of Dense layers and create a 3-layer multi layer

+perceptron. You can create a sequential block using `nn.Sequential()` method and

+add layers using `add()` method.

+

+```{.python .input}

+net = nn.Sequential()

+

+net.add(nn.Dense(5,in_units=3,activation='relu'),

+ nn.Dense(25, activation='relu'), nn.Dense(2))

+net

+```

+

+The layers are ordered exactly the way you defined your neural network with

+index starting from 0. You can access the layers by indexing the network using

+`[]`.

+

+```{.python .input}

+net[1]

+```

+

+## Create a custom neural network architecture flexibly

+

+`nn.Sequential()` allows you to create your multi-layer neural network with

+existing layers from `gluon.nn`. It also includes a pre-defined `forward()`

+function that sequentially executes added layers. But what if the built-in

+layers are not sufficient for your needs. If you want to create networks like

+ResNet which has complex but repeatable components, how do you create such a

+network?

+

+In gluon, every neural network layer is defined by using a base class

+`nn.Block()`. A Block has one main job - define a forward method that takes some

+input x and generates an output. A Block can just do something simple like apply

+an activation function. It can combine multiple layers together in a single

+block or also combine a bunch of other Blocks together in creative ways to

+create complex networks like Resnet. In this case, you will construct three

+Dense layers. The `forward()` method can then invoke the layers in turn to

+generate its output.

+

+Create a subclass of `nn.Block` and implement two methods by using the following

+code.

+

+- `__init__` create the layers

+- `forward` define the forward function.

+

+```{.python .input}

+class Net(nn.Block):

+ def __init__(self):

+ super().__init__()

+ def forward(self, x):

+ return x

+```

+

+```{.python .input}

+class MLP(nn.Block):

+ def __init__(self): super().__init__() self.dense1 = nn.Dense(5,activation='relu') self.dense2 = nn.Dense(25,activation='relu') self.dense3 = nn.Dense(2)

+ def forward(self, x): layer1 = self.dense1(x) layer2 = self.dense2(layer1) layer3 = self.dense3(layer2) return layer3 net = MLP()

+net

+```

+

+```{.python .input}

+net.dense1.params

+```

+Each layer includes parameters that are stored in a `Parameter` class. You can

+access them using the `params()` method.

+

+## Creating custom layers using Parameters (Blocks API)

+

+MXNet includes a `Parameter` method to hold your parameters in each layer. You

+can create custom layers using the `Parameter` class to include computation that

+may otherwise be not included in the built-in layers. For example, for a dense

+layer, the weights and biases will be created using the `Parameter` method. But

+if you want to add additional computation to the dense layer, you can create it

+using parameter method.

+

+Instantiate a parameter, e.g weights with a size `(5,0)` using the `shape`

+argument.

+

+```{.python .input}

+from mxnet.gluon import Parameter

+

+weight = Parameter("custom_parameter_weight",shape=(5,-1))

+bias = Parameter("custom_parameter_bias",shape=(5,-1))

+

+weight,bias

+```

+

+The `Parameter` method includes a `grad_req` argument that specifies how you

+want to capture gradients for this Parameter. Under the hood, that lets gluon

+know that it has to call `.attach_grad()` on the underlying array. By default,

+the gradient is updated everytime the gradient is written to the grad

+`grad_req='write'`.

+

+Now that you know how parameters work, you are ready to create your very own

+fully-connected custom layer.

+

+To create the custom layers using parameters, you can use the same skeleton with

+`nn.Block` base class. You will create a custom dense layer that takes parameter

+x and returns computed `w*x + b` without any activation function

+

+```{.python .input}

+class custom_layer(nn.Block):

+ def __init__(self, out_units, in_units=0):

+ super().__init__()

+ self.weight = Parameter("weight", shape=(in_units,out_units), allow_deferred_init=True)

+ self.bias = Parameter("bias", shape=(out_units,), allow_deferred_init=True)

+ def forward(self, x):

+ return np.dot(x, self.weight.data()) + self.bias.data()

+```

+

+Parameter can be instantiated before the corresponding data is instantiated. For

+example, when you instantiate a Block but the shapes of each parameter still

+need to be inferred, the Parameter will wait for the shape to be inferred before

+allocating memory.

+

+```{.python .input}

+dense = custom_layer(3,in_units=5)

+dense.initialize()

+dense(np.random.uniform(size=(4, 5)))

+```

+

+Similarly, you can use the following code to implement a famous network called

+[LeNet](http://yann.lecun.com/exdb/lenet/) through `nn.Block` using the built-in

+`Dense` layer and using `custom_layer` as the last layer

+

+```{.python .input}

+class LeNet(nn.Block):

+ def __init__(self):

+ super().__init__()

+ self.conv1 = nn.Conv2D(channels=6, kernel_size=3, activation='relu')

+ self.pool1 = nn.MaxPool2D(pool_size=2, strides=2)

+ self.conv2 = nn.Conv2D(channels=16, kernel_size=3, activation='relu')

+ self.pool2 = nn.MaxPool2D(pool_size=2, strides=2)

+ self.dense1 = nn.Dense(120, activation="relu")

+ self.dense2 = nn.Dense(84, activation="relu")

+ self.dense3 = nn.Dense(10)

+ def forward(self, x):

+ x = self.conv1(x)

+ x = self.pool1(x)

+ x = self.conv2(x)

+ x = self.pool2(x)

+ x = self.dense1(x)

+ x = self.dense2(x)

+ x = self.dense3(x)

+ return x

+

+lenet = LeNet()

+```

+

+```{.python .input}

+class LeNet_custom(nn.Block):

+ def __init__(self):

+ super().__init__()

+ self.conv1 = nn.Conv2D(channels=6, kernel_size=3, activation='relu')

+ self.pool1 = nn.MaxPool2D(pool_size=2, strides=2)

+ self.conv2 = nn.Conv2D(channels=16, kernel_size=3, activation='relu')

+ self.pool2 = nn.MaxPool2D(pool_size=2, strides=2)

+ self.dense1 = nn.Dense(120, activation="relu")

+ self.dense2 = nn.Dense(84, activation="relu")

+ self.dense3 = custom_layer(10,84)

+ def forward(self, x):

+ x = self.conv1(x)

+ x = self.pool1(x)

+ x = self.conv2(x)

+ x = self.pool2(x)

+ x = self.dense1(x)

+ x = self.dense2(x)

+ x = self.dense3(x)

+ return x

+

+lenet_custom = LeNet_custom()

+```

+

+```{.python .input}

+image_data = np.random.uniform(-1,1, (1,1,28,28))

+

+lenet.initialize()

+lenet_custom.initialize()

+

+print("Lenet:")

+print(lenet(image_data))

+

+print("Custom Lenet:")

+print(lenet_custom(image_data))

+```

+

+

+You can use `.data` method to access the weights and bias of a particular layer.

+For example, the following accesses the first layer's weight and sixth layer's bias.

+

+```{.python .input}

+lenet.conv1.weight.data().shape, lenet.dense1.bias.data().shape

+```

+

+## Using predefined (pretrained) architectures

+

+Till now, you have seen how to create your own neural network architectures. But

+what if you want to replicate or baseline your dataset using some of the common

+models in computer visions or natural language processing (NLP). Gluon includes

+common architectures that you can directly use. The Gluon Model Zoo provides a

+collection of off-the-shelf models e.g. RESNET, BERT etc. These architectures

+are found at:

+

+- [Gluon CV model zoo](https://cv.gluon.ai/model_zoo/index.html)

+

+- [Gluon NLP model zoo](https://nlp.gluon.ai/model_zoo/index.html)

+

+```{.python .input}

+from mxnet.gluon import model_zoo

+

+net = model_zoo.vision.resnet50_v2(pretrained=True)

+net.hybridize()

+

+dummy_input = np.ones(shape=(1,3,224,224))

+output = net(dummy_input)

+output.shape

+```

+

+## Deciding the paradigm for your network

+

+In MXNet, Gluon API (Imperative programming paradigm) provides a user friendly

+way for quick prototyping, easy debugging and natural control flow for people

+familiar with python programming.

+

+However, at the backend, MXNET can also convert the network using Symbolic or

+Declarative programming into static graphs with low level optimizations on

+operators. However, static graphs are less flexible because any logic must be

+encoded into the graph as special operators like scan, while_loop and cond. It’s

+also hard to debug.

+

+So how can you make use of symbolic programming while getting the flexibility of

+imperative programming to quickly prototype and debug?

+

+Enter **HybridBlock**

+

+HybridBlocks can run in a fully imperatively way where you define their

+computation with real functions acting on real inputs. But they’re also capable

+of running symbolically, acting on placeholders. Gluon hides most of this under

+the hood so you will only need to know how it works when you want to write your

+own layers.

+

+```{.python .input}

+net_hybrid_seq = nn.HybridSequential()

+

+net_hybrid_seq.add(nn.Dense(5,in_units=3,activation='relu'),

+ nn.Dense(25, activation='relu'), nn.Dense(2) )

+net_hybrid_seq

+```

+

+To compile and optimize `HybridSequential`, you can call its `hybridize` method.

+

+```{.python .input}

+net_hybrid_seq.hybridize()

+```

+

+

+## Creating custom layers using Parameters (HybridBlocks API)

+

+When you instantiated your custom layer, you specified the input dimension

+`in_units` that initializes the weights with the shape specified by `in_units`

+and `out_units`. If you leave the shape of `in_unit` as unknown, you defer the

+shape to the first forward pass. For the custom layer, you define the

+`infer_shape()` method and let the shape be inferred at runtime.

+

+```{.python .input}

+class CustomLayer(nn.HybridBlock):

+ def __init__(self, out_units, in_units=-1):

+ super().__init__()

+ self.weight = Parameter("weight", shape=(in_units, out_units), allow_deferred_init=True)

+ self.bias = Parameter("bias", shape=(out_units,), allow_deferred_init=True)

+

+ def forward(self, x):

+ print(self.weight.shape, self.bias.shape)

+ return np.dot(x, self.weight.data()) + self.bias.data()

+

+ def infer_shape(self, x):

+ print(self.weight.shape,x.shape)

+ self.weight.shape = (x.shape[-1],self.weight.shape[1])

+ dense = CustomLayer(3)

+

+dense.initialize()

+dense(np.random.uniform(size=(4, 5)))

+```

+

+### Performance

+

+To get a sense of the speedup from hybridizing, you can compare the performance

+before and after hybridizing by measuring the time it takes to make 1000 forward

+passes through the network.

+

+```{.python .input}

+from time import time

+

+def benchmark(net, x):

+ y = net(x)

+ start = time()

+ for i in range(1,1000):

+ y = net(x)

+ return time() - start

+

+x_bench = np.random.normal(size=(1,512))

+

+net_hybrid_seq = nn.HybridSequential()

+

+net_hybrid_seq.add(nn.Dense(256,activation='relu'),

+ nn.Dense(128, activation='relu'),

+ nn.Dense(2))

+net_hybrid_seq.initialize()

+

+print('Before hybridizing: %.4f sec'%(benchmark(net_hybrid_seq, x_bench)))

+net_hybrid_seq.hybridize()

+print('After hybridizing: %.4f sec'%(benchmark(net_hybrid_seq, x_bench)))

+```

+

+Peeling back another layer, you also have a `HybridBlock` which is the hybrid