Posted to commits@zeppelin.apache.org by zj...@apache.org on 2017/08/28 00:11:18 UTC

[3/3] zeppelin git commit: [ZEPPELIN-2753] Basic Implementation of IPython Interpreter

[ZEPPELIN-2753] Basic Implementation of IPython Interpreter

### What is this PR for?

This is the first step toward implementing an IPython interpreter in Zeppelin. It uses `jupyter_client` to create and manage the IPython kernel, so Zeppelin does not need to handle Python compilation and execution itself; all of that is delegated to the IPython kernel. Ideally, every IPython feature should be available in Zeppelin as well.

For now, users can select the IPython interpreter with `%python.ipython`. If IPython is available, the default Python interpreter also uses it, but users can still set `zeppelin.python.useIPython` to `false` to force the old Python interpreter implementation.
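The fallback rule described above can be sketched as a small decision function. This is only an illustration under stated assumptions: `choose_python_interpreter` and its parameters are hypothetical names, not part of the commit, and the real check in `PythonInterpreter.java` may differ in detail.

```python
def choose_python_interpreter(ipython_available: bool, use_ipython: bool = True) -> str:
    """Pick the implementation behind the default Python interpreter.

    Hypothetical helper: `use_ipython` stands in for the
    `zeppelin.python.useIPython` setting, and `ipython_available`
    for whatever availability check the interpreter performs.
    """
    # IPython is used only when it is both enabled and actually available.
    if use_ipython and ipython_available:
        return "ipython"
    # Otherwise fall back to the original Python interpreter implementation.
    return "python"
```

Under these assumptions, setting the property to `false` selects the old implementation even when IPython is installed.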

Main features:

* IPython interpreter support

** All IPython features are available, including visualization and IPython magics.

* ZeppelinContext support

* Streaming output support

* IPython support in PySpark
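For the streaming output feature, text and image chunks arrive one at a time and are written out as they come. The sketch below is a rough Python rendering of the newline handling in the `onNext` callback of `IPythonClient.stream_execute` from this commit; the function name `merge_stream` and the `(kind, payload)` tuple shape are assumptions made for illustration, and the real code writes to an `InterpreterOutputStream` rather than building a string.

```python
from typing import Iterable, List, Tuple


def merge_stream(chunks: Iterable[Tuple[str, str]]) -> str:
    """Join streamed chunks the way the Java callback separates them.

    Each chunk is a hypothetical (kind, payload) pair where kind is
    "TEXT" or "IMAGE".
    """
    out: List[str] = []
    previous_was_image = False
    for index, (kind, payload) in enumerate(chunks):
        if kind == "TEXT":
            if previous_was_image:
                # Add a newline when switching from image to text.
                out.append("\n")
            previous_was_image = False
            out.append(payload)
        elif kind == "IMAGE":
            if index != 0:
                # Keep the image directive from mixing with earlier output.
                out.append("\n")
            out.append("%img " + payload)
            previous_was_image = True
    return "".join(out)
```

The `%img ` prefix is what marks image data in the output, so each image chunk has to start on its own line.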

Regarding visualization, ideally every visualization library that works in Jupyter should also work here.

The unit tests currently verify only the following three popular visualization libraries; more can be added later.

* matplotlib

* bokeh

* ggplot

### What type of PR is it?

[Feature]

### Todos

* [ ] - Task

### What is the Jira issue?

* https://issues.apache.org/jira/browse/ZEPPELIN-2753

### How should this be tested?

Unit tests are added.

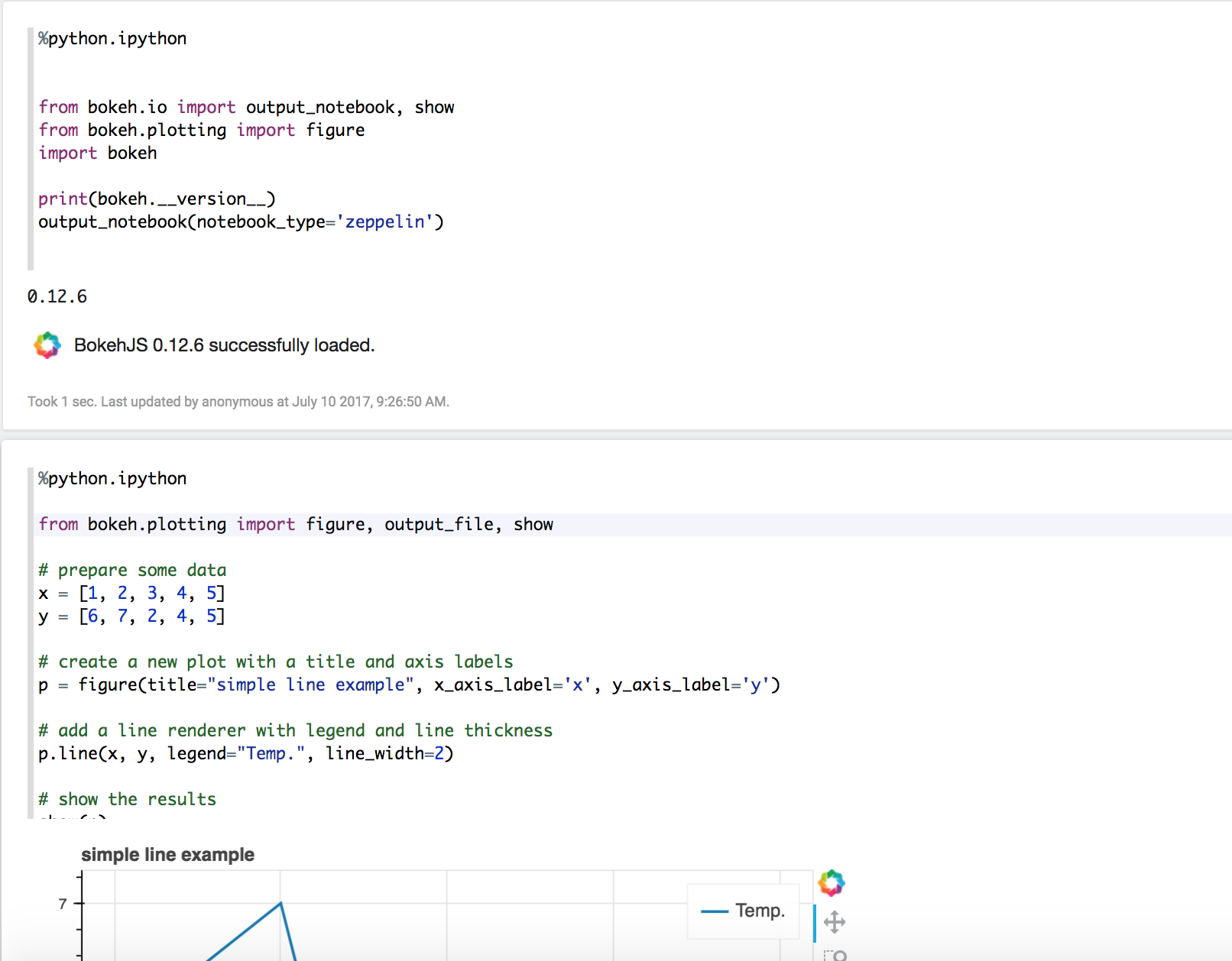

### Screenshots (if appropriate)

Verify bokeh in IPython Interpreter

Verify matplotlib

Verify ZeppelinContext

Verify Streaming

### Questions:

* Do the license files need an update? No

* Are there breaking changes for older versions? No

* Does this need documentation? No

Author: Jeff Zhang <zj...@apache.org>

Closes #2474 from zjffdu/ZEPPELIN-2753 and squashes the following commits:

e869f31 [Jeff Zhang] address comments

b0b5c95 [Jeff Zhang] [ZEPPELIN-2753] Basic Implementation of IPython Interpreter

Project: http://git-wip-us.apache.org/repos/asf/zeppelin/repo

Commit: http://git-wip-us.apache.org/repos/asf/zeppelin/commit/32517c9d

Tree: http://git-wip-us.apache.org/repos/asf/zeppelin/tree/32517c9d

Diff: http://git-wip-us.apache.org/repos/asf/zeppelin/diff/32517c9d

Branch: refs/heads/master

Commit: 32517c9d9fbdc2235560388a47f9e3eff4ec4854

Parents: 26a39df

Author: Jeff Zhang <zj...@apache.org>

Authored: Sat Aug 26 11:59:43 2017 +0800

Committer: Jeff Zhang <zj...@apache.org>

Committed: Mon Aug 28 08:11:04 2017 +0800

----------------------------------------------------------------------

.travis.yml | 24 +-

alluxio/pom.xml | 1 +

docs/interpreter/python.md | 64 ++

docs/interpreter/spark.md | 6 +

pom.xml | 13 +-

python/README.md | 19 +

python/pom.xml | 65 ++

.../apache/zeppelin/python/IPythonClient.java | 211 ++++++

.../zeppelin/python/IPythonInterpreter.java | 359 +++++++++

.../zeppelin/python/PythonInterpreter.java | 66 ++

.../zeppelin/python/PythonZeppelinContext.java | 49 ++

python/src/main/proto/ipython.proto | 102 +++

python/src/main/resources/grpc/generate_rpc.sh | 18 +

.../resources/grpc/python/ipython_client.py | 36 +

.../main/resources/grpc/python/ipython_pb2.py | 751 +++++++++++++++++++

.../resources/grpc/python/ipython_pb2_grpc.py | 129 ++++

.../resources/grpc/python/ipython_server.py | 155 ++++

.../resources/grpc/python/zeppelin_python.py | 107 +++

.../src/main/resources/interpreter-setting.json | 23 +

.../zeppelin/python/IPythonInterpreterTest.java | 402 ++++++++++

.../python/PythonInterpreterMatplotlibTest.java | 1 +

.../python/PythonInterpreterPandasSqlTest.java | 38 +-

.../zeppelin/python/PythonInterpreterTest.java | 2 +

python/src/test/resources/log4j.properties | 31 +

spark/pom.xml | 51 +-

.../zeppelin/spark/IPySparkInterpreter.java | 110 +++

.../zeppelin/spark/PySparkInterpreter.java | 92 ++-

.../src/main/resources/interpreter-setting.json | 17 +

.../main/resources/python/zeppelin_ipyspark.py | 53 ++

.../sparkr-resources/interpreter-setting.json | 11 +

.../zeppelin/spark/IPySparkInterpreterTest.java | 203 +++++

.../spark/PySparkInterpreterMatplotlibTest.java | 18 +-

.../zeppelin/spark/PySparkInterpreterTest.java | 36 +-

spark/src/test/resources/log4j.properties | 2 +

testing/install_external_dependencies.sh | 3 +-

zeppelin-interpreter/pom.xml | 5 -

.../interpreter/BaseZeppelinContext.java | 4 +

.../zeppelin/interpreter/InterpreterOutput.java | 11 +

.../remote/RemoteInterpreterServer.java | 7 +

.../util/InterpreterOutputStream.java | 2 +-

zeppelin-jupyter/pom.xml | 1 +

zeppelin-server/pom.xml | 6 +-

.../org/apache/zeppelin/WebDriverManager.java | 1 +

.../integration/InterpreterModeActionsIT.java | 23 +-

.../zeppelin/integration/SparkParagraphIT.java | 2 +-

.../zeppelin/rest/AbstractTestRestApi.java | 3 +

.../zeppelin/rest/ZeppelinSparkClusterTest.java | 6 +-

zeppelin-zengine/pom.xml | 13 +-

48 files changed, 3257 insertions(+), 95 deletions(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/.travis.yml

----------------------------------------------------------------------

diff --git a/.travis.yml b/.travis.yml

index 099fb38..97ca60a 100644

--- a/.travis.yml

+++ b/.travis.yml

@@ -37,7 +37,7 @@ addons:

env:

global:

# Interpreters does not required by zeppelin-server integration tests

- - INTERPRETERS='!hbase,!pig,!jdbc,!file,!flink,!ignite,!kylin,!python,!lens,!cassandra,!elasticsearch,!bigquery,!alluxio,!scio,!livy,!groovy'

+ - INTERPRETERS='!hbase,!pig,!jdbc,!file,!flink,!ignite,!kylin,!lens,!cassandra,!elasticsearch,!bigquery,!alluxio,!scio,!livy,!groovy'

matrix:

include:

@@ -53,7 +53,7 @@ matrix:

sudo: false

dist: trusty

jdk: "oraclejdk8"

- env: WEB_E2E="true" SCALA_VER="2.11" SPARK_VER="2.1.0" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pscala-2.11" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="verify -DskipRat" MODULES="-pl ${INTERPRETERS}" TEST_MODULES="-pl zeppelin-web" TEST_PROJECTS="-Pweb-e2e"

+ env: PYTHON="2" WEB_E2E="true" SCALA_VER="2.11" SPARK_VER="2.1.0" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pscala-2.11" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="verify -DskipRat" MODULES="-pl ${INTERPRETERS}" TEST_MODULES="-pl zeppelin-web" TEST_PROJECTS="-Pweb-e2e"

addons:

apt:

sources:

@@ -68,54 +68,54 @@ matrix:

# After issues are fixed these tests need to be included back by removing them from the "-Dtests.to.exclude" property

- jdk: "oraclejdk8"

dist: precise

- env: SCALA_VER="2.11" SPARK_VER="2.2.0" HADOOP_VER="2.6" PROFILE="-Pspark-2.2 -Pweb-ci -Pscalding -Phelium-dev -Pexamples -Pscala-2.11" BUILD_FLAG="package -Pbuild-distr -DskipRat" TEST_FLAG="verify -Pusing-packaged-distr -DskipRat" MODULES="-pl ${INTERPRETERS}" TEST_PROJECTS="-Dtests.to.exclude=**/ZeppelinSparkClusterTest.java,**/org.apache.zeppelin.spark.*,**/HeliumApplicationFactoryTest.java -DfailIfNoTests=false"

+ env: PYTHON="3" SCALA_VER="2.11" SPARK_VER="2.2.0" HADOOP_VER="2.6" PROFILE="-Pspark-2.2 -Pweb-ci -Pscalding -Phelium-dev -Pexamples -Pscala-2.11" BUILD_FLAG="package -Pbuild-distr -DskipRat" TEST_FLAG="verify -Pusing-packaged-distr -DskipRat" MODULES="-pl ${INTERPRETERS}" TEST_PROJECTS="-Dtests.to.exclude=**/ZeppelinSparkClusterTest.java,**/org.apache.zeppelin.spark.*,**/HeliumApplicationFactoryTest.java -DfailIfNoTests=false"

# Test selenium with spark module for 1.6.3

- jdk: "oraclejdk7"

dist: precise

- env: TEST_SELENIUM="true" SCALA_VER="2.10" SPARK_VER="1.6.3" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-1.6 -Phadoop-2.6 -Phelium-dev -Pexamples" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="verify -DskipRat" TEST_PROJECTS="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark -Dtest=org.apache.zeppelin.AbstractFunctionalSuite -DfailIfNoTests=false"

+ env: PYTHON="2" TEST_SELENIUM="true" SCALA_VER="2.10" SPARK_VER="1.6.3" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-1.6 -Phadoop-2.6 -Phelium-dev -Pexamples" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="verify -DskipRat" TEST_PROJECTS="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark,python -Dtest=org.apache.zeppelin.AbstractFunctionalSuite -DfailIfNoTests=false"

# Test interpreter modules

- jdk: "oraclejdk7"

dist: precise

- env: SCALA_VER="2.10" PROFILE="-Pscalding" BUILD_FLAG="package -DskipTests -DskipRat -Pr" TEST_FLAG="test -DskipRat" MODULES="-pl $(echo .,zeppelin-interpreter,${INTERPRETERS} | sed 's/!//g')" TEST_PROJECTS=""

+ env: PYTHON="3" SCALA_VER="2.10" PROFILE="-Pscalding" BUILD_FLAG="install -DskipTests -DskipRat -Pr" TEST_FLAG="test -DskipRat" MODULES="-pl $(echo .,zeppelin-interpreter,${INTERPRETERS} | sed 's/!//g')" TEST_PROJECTS=""

# Test spark module for 2.2.0 with scala 2.11, livy

- jdk: "oraclejdk8"

dist: precise

- env: SCALA_VER="2.11" SPARK_VER="2.2.0" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-2.2 -Phadoop-2.6 -Pscala-2.11" SPARKR="true" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark,livy" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.*,org.apache.zeppelin.livy.* -DfailIfNoTests=false"

+ env: PYTHON="2" SCALA_VER="2.11" SPARK_VER="2.2.0" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-2.2 -Phadoop-2.6 -Pscala-2.11" SPARKR="true" BUILD_FLAG="install -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark,python,livy" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.*,org.apache.zeppelin.livy.* -DfailIfNoTests=false"

# Test spark module for 2.1.0 with scala 2.11, livy

- jdk: "oraclejdk7"

dist: precise

- env: SCALA_VER="2.11" SPARK_VER="2.1.0" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-2.1 -Phadoop-2.6 -Pscala-2.11" SPARKR="true" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark,livy" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.*,org.apache.zeppelin.livy.* -DfailIfNoTests=false"

+ env: PYTHON="2" SCALA_VER="2.11" SPARK_VER="2.1.0" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-2.1 -Phadoop-2.6 -Pscala-2.11" SPARKR="true" BUILD_FLAG="install -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark,python,livy" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.*,org.apache.zeppelin.livy.* -DfailIfNoTests=false"

# Test spark module for 2.0.2 with scala 2.11

- jdk: "oraclejdk7"

dist: precise

- env: SCALA_VER="2.11" SPARK_VER="2.0.2" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-2.0 -Phadoop-2.6 -Pscala-2.11" SPARKR="true" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.* -DfailIfNoTests=false"

+ env: PYTHON="2" SCALA_VER="2.11" SPARK_VER="2.0.2" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-2.0 -Phadoop-2.6 -Pscala-2.11" SPARKR="true" BUILD_FLAG="install -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark,python" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.* -DfailIfNoTests=false"

# Test spark module for 1.6.3 with scala 2.10

- jdk: "oraclejdk7"

dist: precise

- env: SCALA_VER="2.10" SPARK_VER="1.6.3" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-1.6 -Phadoop-2.6 -Pscala-2.10" SPARKR="true" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.*,org.apache.zeppelin.spark.* -DfailIfNoTests=false"

+ env: PYTHON="3" SCALA_VER="2.10" SPARK_VER="1.6.3" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-1.6 -Phadoop-2.6 -Pscala-2.10" SPARKR="true" BUILD_FLAG="install -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark,python" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.*,org.apache.zeppelin.spark.* -DfailIfNoTests=false"

# Test spark module for 1.6.3 with scala 2.11

- jdk: "oraclejdk7"

dist: precise

- env: SCALA_VER="2.11" SPARK_VER="1.6.3" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-1.6 -Phadoop-2.6 -Pscala-2.11" SPARKR="true" BUILD_FLAG="package -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.* -DfailIfNoTests=false"

+ env: PYTHON="2" SCALA_VER="2.11" SPARK_VER="1.6.3" HADOOP_VER="2.6" PROFILE="-Pweb-ci -Pspark-1.6 -Phadoop-2.6 -Pscala-2.11" SPARKR="true" BUILD_FLAG="install -DskipTests -DskipRat" TEST_FLAG="test -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-zengine,zeppelin-server,zeppelin-display,spark-dependencies,spark,python" TEST_PROJECTS="-Dtest=ZeppelinSparkClusterTest,org.apache.zeppelin.spark.* -DfailIfNoTests=false"

# Test python/pyspark with python 2, livy 0.2

- sudo: required

dist: precise

jdk: "oraclejdk7"

- env: PYTHON="2" SCALA_VER="2.10" SPARK_VER="1.6.1" HADOOP_VER="2.6" LIVY_VER="0.2.0" PROFILE="-Pspark-1.6 -Phadoop-2.6 -Plivy-0.2" BUILD_FLAG="package -am -DskipTests -DskipRat" TEST_FLAG="verify -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-display,spark-dependencies,spark,python,livy" TEST_PROJECTS="-Dtest=LivySQLInterpreterTest,org.apache.zeppelin.spark.PySpark*Test,org.apache.zeppelin.python.* -Dpyspark.test.exclude='' -DfailIfNoTests=false"

+ env: PYTHON="2" SCALA_VER="2.10" SPARK_VER="1.6.1" HADOOP_VER="2.6" LIVY_VER="0.2.0" PROFILE="-Pspark-1.6 -Phadoop-2.6 -Plivy-0.2 -Pscala-2.10" BUILD_FLAG="install -am -DskipTests -DskipRat" TEST_FLAG="verify -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-display,spark-dependencies,spark,python,livy" TEST_PROJECTS="-Dtest=LivySQLInterpreterTest,org.apache.zeppelin.spark.PySpark*Test,org.apache.zeppelin.python.* -Dpyspark.test.exclude='' -DfailIfNoTests=false"

# Test python/pyspark with python 3, livy 0.3

- sudo: required

dist: precise

jdk: "oraclejdk7"

- env: PYTHON="3" SCALA_VER="2.11" SPARK_VER="2.0.0" HADOOP_VER="2.6" LIVY_VER="0.3.0" PROFILE="-Pspark-2.0 -Phadoop-2.6 -Pscala-2.11 -Plivy-0.3" BUILD_FLAG="package -am -DskipTests -DskipRat" TEST_FLAG="verify -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-display,spark-dependencies,spark,python,livy" TEST_PROJECTS="-Dtest=LivySQLInterpreterTest,org.apache.zeppelin.spark.PySpark*Test,org.apache.zeppelin.python.* -Dpyspark.test.exclude='' -DfailIfNoTests=false"

+ env: PYTHON="3" SCALA_VER="2.11" SPARK_VER="2.0.0" HADOOP_VER="2.6" LIVY_VER="0.3.0" PROFILE="-Pspark-2.0 -Phadoop-2.6 -Pscala-2.11 -Plivy-0.3" BUILD_FLAG="install -am -DskipTests -DskipRat" TEST_FLAG="verify -DskipRat" MODULES="-pl .,zeppelin-interpreter,zeppelin-display,spark-dependencies,spark,python,livy" TEST_PROJECTS="-Dtest=LivySQLInterpreterTest,org.apache.zeppelin.spark.PySpark*Test,org.apache.zeppelin.python.* -Dpyspark.test.exclude='' -DfailIfNoTests=false"

before_install:

# check files included in commit range, clear bower_components if a bower.json file has changed.

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/alluxio/pom.xml

----------------------------------------------------------------------

diff --git a/alluxio/pom.xml b/alluxio/pom.xml

index 38135b8..ed6b981 100644

--- a/alluxio/pom.xml

+++ b/alluxio/pom.xml

@@ -47,6 +47,7 @@

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

+ <version>15.0</version>

</dependency>

<dependency>

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/docs/interpreter/python.md

----------------------------------------------------------------------

diff --git a/docs/interpreter/python.md b/docs/interpreter/python.md

index b4b5ca8..1965fc9 100644

--- a/docs/interpreter/python.md

+++ b/docs/interpreter/python.md

@@ -232,6 +232,70 @@ SELECT * FROM rates WHERE age < 40

Otherwise it can be referred to as `%python.sql`

+## IPython Support

+

+IPython is more powerful than the default python interpreter with extra functionality. You can use IPython with Python2 or Python3 which depends on which python you set `zeppelin.python`.

+

+ **Pre-requests**

+

+ - Jupyter `pip install jupyter`

+ - grpcio `pip install grpcio`

+

+If you already install anaconda, then you just need to install `grpcio` as Jupyter is already included in anaconda.

+

+In addition to all basic functions of the python interpreter, you can use all the IPython advanced features as you use it in Jupyter Notebook.

+

+e.g.

+

+Use IPython magic

+

+```

+%python.ipython

+

+#python help

+range?

+

+#timeit

+%timeit range(100)

+```

+

+Use matplotlib

+

+```

+%python.ipython

+

+

+%matplotlib inline

+import matplotlib.pyplot as plt

+

+print("hello world")

+data=[1,2,3,4]

+plt.figure()

+plt.plot(data)

+```

+

+We also make `ZeppelinContext` available in IPython Interpreter. You can use `ZeppelinContext` to create dynamic forms and display pandas DataFrame.

+

+e.g.

+

+Create dynamic form

+

+```

+z.input(name='my_name', defaultValue='hello')

+```

+

+Show pandas dataframe

+

+```

+import pandas as pd

+df = pd.DataFrame({'id':[1,2,3], 'name':['a','b','c']})

+z.show(df)

+

+```

+

+By default, we would use IPython in `%python.python` if IPython is available. Otherwise it would fall back to the original Python implementation.

+If you don't want to use IPython, then you can set `zeppelin.python.useIPython` as `false` in interpreter setting.

+

## Technical description

For in-depth technical details on current implementation please refer to [python/README.md](https://github.com/apache/zeppelin/blob/master/python/README.md).

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/docs/interpreter/spark.md

----------------------------------------------------------------------

diff --git a/docs/interpreter/spark.md b/docs/interpreter/spark.md

index 122c8db..8ba9247 100644

--- a/docs/interpreter/spark.md

+++ b/docs/interpreter/spark.md

@@ -414,6 +414,12 @@ You can choose one of `shared`, `scoped` and `isolated` options wheh you configu

Spark interpreter creates separated Scala compiler per each notebook but share a single SparkContext in `scoped` mode (experimental).

It creates separated SparkContext per each notebook in `isolated` mode.

+## IPython support

+

+By default, zeppelin would use IPython in `pyspark` when IPython is available, Otherwise it would fall back to the original PySpark implementation.

+If you don't want to use IPython, then you can set `zeppelin.spark.useIPython` as `false` in interpreter setting. For the IPython features, you can refer doc

+[Python Interpreter](python.html)

+

## Setting up Zeppelin with Kerberos

Logical setup with Zeppelin, Kerberos Key Distribution Center (KDC), and Spark on YARN:

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/pom.xml

----------------------------------------------------------------------

diff --git a/pom.xml b/pom.xml

index b856454..acfcd05 100644

--- a/pom.xml

+++ b/pom.xml

@@ -101,7 +101,6 @@

<libthrift.version>0.9.2</libthrift.version>

<gson.version>2.2</gson.version>

<gson-extras.version>0.2.1</gson-extras.version>

- <guava.version>15.0</guava.version>

<jetty.version>9.2.15.v20160210</jetty.version>

<httpcomponents.core.version>4.4.1</httpcomponents.core.version>

<httpcomponents.client.version>4.5.1</httpcomponents.client.version>

@@ -246,12 +245,6 @@

<version>${commons.cli.version}</version>

</dependency>

- <dependency>

- <groupId>com.google.guava</groupId>

- <artifactId>guava</artifactId>

- <version>${guava.version}</version>

- </dependency>

-

<!-- Apache Shiro -->

<dependency>

<groupId>org.apache.shiro</groupId>

@@ -404,7 +397,7 @@

</goals>

<configuration>

<failOnViolation>true</failOnViolation>

- <excludes>org/apache/zeppelin/interpreter/thrift/*,org/apache/zeppelin/scio/avro/*</excludes>

+ <excludes>org/apache/zeppelin/interpreter/thrift/*,org/apache/zeppelin/scio/avro/*,org/apache/zeppelin/python/proto/*</excludes>

</configuration>

</execution>

<execution>

@@ -414,7 +407,7 @@

<goal>checkstyle-aggregate</goal>

</goals>

<configuration>

- <excludes>org/apache/zeppelin/interpreter/thrift/*,org/apache/zeppelin/scio/avro/*</excludes>

+ <excludes>org/apache/zeppelin/interpreter/thrift/*,org/apache/zeppelin/scio/avro/*,org/apache/zeppelin/python/proto/*</excludes>

</configuration>

</execution>

</executions>

@@ -1082,6 +1075,8 @@

<!--The following files are mechanical-->

<exclude>**/R/rzeppelin/DESCRIPTION</exclude>

<exclude>**/R/rzeppelin/NAMESPACE</exclude>

+

+ <exclude>python/src/main/resources/grpc/**/*</exclude>

</excludes>

</configuration>

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/README.md

----------------------------------------------------------------------

diff --git a/python/README.md b/python/README.md

index cd8a0ca..7a20e8d 100644

--- a/python/README.md

+++ b/python/README.md

@@ -50,3 +50,22 @@ mvn -Dpython.test.exclude='' test -pl python -am

* Matplotlib figures are displayed inline with the notebook automatically using a built-in backend for zeppelin in conjunction with a post-execute hook.

* `%python.sql` support for Pandas DataFrames is optional and provided using https://github.com/yhat/pandasql if user have one installed

+

+

+# IPython Overview

+IPython interpreter for Apache Zeppelin

+

+# IPython Requirements

+You need to install the following python packages to make the IPython interpreter work.

+ * jupyter 5.x

+ * IPython

+ * ipykernel

+ * grpcio

+

+If you have installed anaconda, then you just need to install grpc.

+

+# IPython Architecture

+Current interpreter delegate the whole work to ipython kernel via `jupyter_client`. Zeppelin would launch a python process which host the ipython kernel.

+Zeppelin interpreter process will communicate with the python process via `grpc`. Ideally every feature works in IPython should work in Zeppelin as well.

+

+

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/pom.xml

----------------------------------------------------------------------

diff --git a/python/pom.xml b/python/pom.xml

index 380a874..d46cd10 100644

--- a/python/pom.xml

+++ b/python/pom.xml

@@ -41,6 +41,7 @@

</python.test.exclude>

<pypi.repo.url>https://pypi.python.org/packages</pypi.repo.url>

<python.py4j.repo.folder>/64/5c/01e13b68e8caafece40d549f232c9b5677ad1016071a48d04cc3895acaa3</python.py4j.repo.folder>

+ <grpc.version>1.4.0</grpc.version>

</properties>

<dependencies>

@@ -73,6 +74,28 @@

<artifactId>slf4j-log4j12</artifactId>

</dependency>

+ <dependency>

+ <groupId>io.grpc</groupId>

+ <artifactId>grpc-netty</artifactId>

+ <version>${grpc.version}</version>

+ </dependency>

+ <dependency>

+ <groupId>io.grpc</groupId>

+ <artifactId>grpc-protobuf</artifactId>

+ <version>${grpc.version}</version>

+ </dependency>

+ <dependency>

+ <groupId>io.grpc</groupId>

+ <artifactId>grpc-stub</artifactId>

+ <version>${grpc.version}</version>

+ </dependency>

+

+ <dependency>

+ <groupId>com.google.guava</groupId>

+ <artifactId>guava</artifactId>

+ <version>18.0</version>

+ </dependency>

+

<!-- test libraries -->

<dependency>

<groupId>junit</groupId>

@@ -88,7 +111,36 @@

</dependencies>

<build>

+

+ <extensions>

+ <extension>

+ <groupId>kr.motd.maven</groupId>

+ <artifactId>os-maven-plugin</artifactId>

+ <version>1.4.1.Final</version>

+ </extension>

+ </extensions>

+

<plugins>

+

+ <plugin>

+ <groupId>org.xolstice.maven.plugins</groupId>

+ <artifactId>protobuf-maven-plugin</artifactId>

+ <version>0.5.0</version>

+ <configuration>

+ <protocArtifact>com.google.protobuf:protoc:3.3.0:exe:${os.detected.classifier}</protocArtifact>

+ <pluginId>grpc-java</pluginId>

+ <pluginArtifact>io.grpc:protoc-gen-grpc-java:1.4.0:exe:${os.detected.classifier}</pluginArtifact>

+ </configuration>

+ <executions>

+ <execution>

+ <goals>

+ <goal>compile</goal>

+ <goal>compile-custom</goal>

+ </goals>

+ </execution>

+ </executions>

+ </plugin>

+

<plugin>

<artifactId>maven-enforcer-plugin</artifactId>

<version>1.3.1</version>

@@ -136,6 +188,19 @@

</executions>

</plugin>

+ <!-- publish test jar as well so that spark module can use it -->

+ <plugin>

+ <groupId>org.apache.maven.plugins</groupId>

+ <artifactId>maven-jar-plugin</artifactId>

+ <version>3.0.2</version>

+ <executions>

+ <execution>

+ <goals>

+ <goal>test-jar</goal>

+ </goals>

+ </execution>

+ </executions>

+ </plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/src/main/java/org/apache/zeppelin/python/IPythonClient.java

----------------------------------------------------------------------

diff --git a/python/src/main/java/org/apache/zeppelin/python/IPythonClient.java b/python/src/main/java/org/apache/zeppelin/python/IPythonClient.java

new file mode 100644

index 0000000..40b9afd

--- /dev/null

+++ b/python/src/main/java/org/apache/zeppelin/python/IPythonClient.java

@@ -0,0 +1,211 @@

+/*

+* Licensed to the Apache Software Foundation (ASF) under one or more

+* contributor license agreements. See the NOTICE file distributed with

+* this work for additional information regarding copyright ownership.

+* The ASF licenses this file to You under the Apache License, Version 2.0

+* (the "License"); you may not use this file except in compliance with

+* the License. You may obtain a copy of the License at

+*

+* http://www.apache.org/licenses/LICENSE-2.0

+*

+* Unless required by applicable law or agreed to in writing, software

+* distributed under the License is distributed on an "AS IS" BASIS,

+* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+* See the License for the specific language governing permissions and

+* limitations under the License.

+*/

+

+package org.apache.zeppelin.python;

+

+import io.grpc.ManagedChannel;

+import io.grpc.ManagedChannelBuilder;

+import io.grpc.stub.StreamObserver;

+import org.apache.zeppelin.interpreter.util.InterpreterOutputStream;

+import org.apache.zeppelin.python.proto.CancelRequest;

+import org.apache.zeppelin.python.proto.CancelResponse;

+import org.apache.zeppelin.python.proto.CompletionRequest;

+import org.apache.zeppelin.python.proto.CompletionResponse;

+import org.apache.zeppelin.python.proto.ExecuteRequest;

+import org.apache.zeppelin.python.proto.ExecuteResponse;

+import org.apache.zeppelin.python.proto.ExecuteStatus;

+import org.apache.zeppelin.python.proto.IPythonGrpc;

+import org.apache.zeppelin.python.proto.OutputType;

+import org.apache.zeppelin.python.proto.StatusRequest;

+import org.apache.zeppelin.python.proto.StatusResponse;

+import org.apache.zeppelin.python.proto.StopRequest;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.Iterator;

+import java.util.List;

+import java.util.Random;

+import java.util.concurrent.TimeUnit;

+import java.util.concurrent.atomic.AtomicBoolean;

+

+/**

+ * Grpc client for IPython kernel

+ */

+public class IPythonClient {

+

+ private static final Logger LOGGER = LoggerFactory.getLogger(IPythonClient.class.getName());

+

+ private final ManagedChannel channel;

+ private final IPythonGrpc.IPythonBlockingStub blockingStub;

+ private final IPythonGrpc.IPythonStub asyncStub;

+

+ private Random random = new Random();

+

+ /**

+ * Construct client for accessing RouteGuide server at {@code host:port}.

+ */

+ public IPythonClient(String host, int port) {

+ this(ManagedChannelBuilder.forAddress(host, port).usePlaintext(true));

+ }

+

+ /**

+ * Construct client for accessing RouteGuide server using the existing channel.

+ */

+ public IPythonClient(ManagedChannelBuilder<?> channelBuilder) {

+ channel = channelBuilder.build();

+ blockingStub = IPythonGrpc.newBlockingStub(channel);

+ asyncStub = IPythonGrpc.newStub(channel);

+ }

+

+ public void shutdown() throws InterruptedException {

+ channel.shutdown().awaitTermination(5, TimeUnit.SECONDS);

+ }

+

+ // execute the code and make the output as streaming by writing it to InterpreterOutputStream

+ // one by one.

+ public ExecuteResponse stream_execute(ExecuteRequest request,

+ final InterpreterOutputStream interpreterOutput) {

+ final ExecuteResponse.Builder finalResponseBuilder = ExecuteResponse.newBuilder()

+ .setStatus(ExecuteStatus.SUCCESS);

+ final AtomicBoolean completedFlag = new AtomicBoolean(false);

+ LOGGER.debug("stream_execute code:\n" + request.getCode());

+ asyncStub.execute(request, new StreamObserver<ExecuteResponse>() {

+ int index = 0;

+ boolean isPreviousOutputImage = false;

+

+ @Override

+ public void onNext(ExecuteResponse executeResponse) {

+ if (executeResponse.getType() == OutputType.TEXT) {

+ try {

+ LOGGER.debug("Interpreter Streaming Output: " + executeResponse.getOutput());

+ if (isPreviousOutputImage) {

+ // add '\n' when switch from image to text

+ interpreterOutput.write("\n".getBytes());

+ }

+ isPreviousOutputImage = false;

+ interpreterOutput.write(executeResponse.getOutput().getBytes());

+ interpreterOutput.getInterpreterOutput().flush();

+ } catch (IOException e) {

+ LOGGER.error("Unexpected IOException", e);

+ }

+ }

+ if (executeResponse.getType() == OutputType.IMAGE) {

+ try {

+ LOGGER.debug("Interpreter Streaming Output: IMAGE_DATA");

+ if (index != 0) {

+ // add '\n' if this is the not the first element. otherwise it would mix the image

+ // with the text

+ interpreterOutput.write("\n".getBytes());

+ }

+ interpreterOutput.write(("%img " + executeResponse.getOutput()).getBytes());

+ interpreterOutput.getInterpreterOutput().flush();

+ isPreviousOutputImage = true;

+ } catch (IOException e) {

+ LOGGER.error("Unexpected IOException", e);

+ }

+ }

+ if (executeResponse.getStatus() == ExecuteStatus.ERROR) {

+ // set the finalResponse to ERROR if any ERROR happens, otherwise the finalResponse would

+ // be SUCCESS.

+ finalResponseBuilder.setStatus(ExecuteStatus.ERROR);

+ }

+ index++;

+ }

+

+ @Override

+ public void onError(Throwable throwable) {

+ try {

+ interpreterOutput.getInterpreterOutput().flush();

+ } catch (IOException e) {

+ LOGGER.error("Unexpected IOException", e);

+ }

+ LOGGER.error("Fail to call IPython grpc", throwable);

+ }

+

+ @Override

+ public void onCompleted() {

+ synchronized (completedFlag) {

+ try {

+ LOGGER.debug("stream_execute is completed");

+ interpreterOutput.getInterpreterOutput().flush();

+ } catch (IOException e) {

+ LOGGER.error("Unexpected IOException", e);

+ }

+ completedFlag.set(true);

+ completedFlag.notify();

+ }

+ }

+ });

+

+ synchronized (completedFlag) {

+ if (!completedFlag.get()) {

+ try {

+ completedFlag.wait();

+ } catch (InterruptedException e) {

+ LOGGER.error("Unexpected Interruption", e);

+ }

+ }

+ }

+ return finalResponseBuilder.build();

+ }

+

+ // blocking execute the code

+ public ExecuteResponse block_execute(ExecuteRequest request) {

+ ExecuteResponse.Builder responseBuilder = ExecuteResponse.newBuilder();

+ responseBuilder.setStatus(ExecuteStatus.SUCCESS);

+ Iterator<ExecuteResponse> iter = blockingStub.execute(request);

+ StringBuilder outputBuilder = new StringBuilder();

+ while (iter.hasNext()) {

+ ExecuteResponse nextResponse = iter.next();

+ if (nextResponse.getStatus() == ExecuteStatus.ERROR) {

+ responseBuilder.setStatus(ExecuteStatus.ERROR);

+ }

+ outputBuilder.append(nextResponse.getOutput());

+ }

+ responseBuilder.setOutput(outputBuilder.toString());

+ return responseBuilder.build();

+ }

+

+ public CancelResponse cancel(CancelRequest request) {

+ return blockingStub.cancel(request);

+ }

+

+ public CompletionResponse complete(CompletionRequest request) {

+ return blockingStub.complete(request);

+ }

+

+ public StatusResponse status(StatusRequest request) {

+ return blockingStub.status(request);

+ }

+

+ public void stop(StopRequest request) {

+ asyncStub.stop(request, null);

+ }

+

+

+ public static void main(String[] args) {

+ IPythonClient client = new IPythonClient("localhost", 50053);

+ client.status(StatusRequest.newBuilder().build());

+

+ ExecuteResponse response = client.block_execute(ExecuteRequest.newBuilder().

+ setCode("abcd=2").build());

+ System.out.println(response.getOutput());

+

+ }

+}
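A note on the waiting logic in stream_execute above: as posted, onError flushes and logs but does not set completedFlag, so the caller may stay blocked in wait() when the gRPC call fails. The following is a minimal sketch of one alternative, assuming a CountDownLatch that is released from both terminal callbacks. StreamCallbacks and StreamWaitSketch are hypothetical stand-ins for illustration only; they are not part of this commit or of the gRPC API.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

// Hypothetical stand-in for the three StreamObserver callbacks used by stream_execute.
interface StreamCallbacks {
  void onNext(String chunk);
  void onError(Throwable t);
  void onCompleted();
}

final class StreamWaitSketch {

  // Starts a stream, collects its chunks, and blocks until the stream
  // terminates (normally or with an error) or the timeout elapses.
  static String collect(Consumer<StreamCallbacks> startStream, long timeoutMs) {
    final StringBuffer output = new StringBuffer();   // thread-safe buffer
    final CountDownLatch done = new CountDownLatch(1);
    startStream.accept(new StreamCallbacks() {
      @Override
      public void onNext(String chunk) {
        output.append(chunk);
      }

      @Override
      public void onError(Throwable t) {
        done.countDown();   // release the waiter on failure as well
      }

      @Override
      public void onCompleted() {
        done.countDown();
      }
    });
    try {
      if (!done.await(timeoutMs, TimeUnit.MILLISECONDS)) {
        throw new IllegalStateException("stream did not finish in " + timeoutMs + " ms");
      }
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();   // preserve the interrupt status
      throw new IllegalStateException("interrupted while waiting for the stream", e);
    }
    return output.toString();
  }

  public static void main(String[] args) {
    // Simulate a server that streams two chunks from another thread.
    String result = collect(cb -> new Thread(() -> {
      cb.onNext("1\n");
      cb.onNext("2\n");
      cb.onCompleted();
    }).start(), 5000);
    System.out.print(result);
  }
}
```

A latch also avoids the spurious-wakeup concern of a bare wait() guarded by an if rather than a loop. Whether a timeout is appropriate for long-running paragraphs is a separate design decision.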

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/src/main/java/org/apache/zeppelin/python/IPythonInterpreter.java

----------------------------------------------------------------------

diff --git a/python/src/main/java/org/apache/zeppelin/python/IPythonInterpreter.java b/python/src/main/java/org/apache/zeppelin/python/IPythonInterpreter.java

new file mode 100644

index 0000000..9b6f730

--- /dev/null

+++ b/python/src/main/java/org/apache/zeppelin/python/IPythonInterpreter.java

@@ -0,0 +1,359 @@

+/*

+* Licensed to the Apache Software Foundation (ASF) under one or more

+* contributor license agreements. See the NOTICE file distributed with

+* this work for additional information regarding copyright ownership.

+* The ASF licenses this file to You under the Apache License, Version 2.0

+* (the "License"); you may not use this file except in compliance with

+* the License. You may obtain a copy of the License at

+*

+* http://www.apache.org/licenses/LICENSE-2.0

+*

+* Unless required by applicable law or agreed to in writing, software

+* distributed under the License is distributed on an "AS IS" BASIS,

+* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+* See the License for the specific language governing permissions and

+* limitations under the License.

+*/

+

+package org.apache.zeppelin.python;

+

+import org.apache.commons.exec.CommandLine;

+import org.apache.commons.exec.DefaultExecutor;

+import org.apache.commons.exec.ExecuteException;

+import org.apache.commons.exec.ExecuteResultHandler;

+import org.apache.commons.exec.ExecuteWatchdog;

+import org.apache.commons.exec.LogOutputStream;

+import org.apache.commons.exec.PumpStreamHandler;

+import org.apache.commons.exec.environment.EnvironmentUtils;

+import org.apache.commons.io.FileUtils;

+import org.apache.commons.io.IOUtils;

+import org.apache.commons.lang.StringUtils;

+import org.apache.zeppelin.interpreter.Interpreter;

+import org.apache.zeppelin.interpreter.InterpreterContext;

+import org.apache.zeppelin.interpreter.InterpreterResult;

+import org.apache.zeppelin.interpreter.remote.RemoteInterpreterUtils;

+import org.apache.zeppelin.interpreter.thrift.InterpreterCompletion;

+import org.apache.zeppelin.interpreter.util.InterpreterOutputStream;

+import org.apache.zeppelin.python.proto.CancelRequest;

+import org.apache.zeppelin.python.proto.CompletionRequest;

+import org.apache.zeppelin.python.proto.CompletionResponse;

+import org.apache.zeppelin.python.proto.ExecuteRequest;

+import org.apache.zeppelin.python.proto.ExecuteResponse;

+import org.apache.zeppelin.python.proto.ExecuteStatus;

+import org.apache.zeppelin.python.proto.IPythonStatus;

+import org.apache.zeppelin.python.proto.StatusRequest;

+import org.apache.zeppelin.python.proto.StatusResponse;

+import org.apache.zeppelin.python.proto.StopRequest;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+import py4j.GatewayServer;

+

+import java.io.File;

+import java.io.FileInputStream;

+import java.io.IOException;

+import java.io.InputStream;

+import java.net.URISyntaxException;

+import java.net.URL;

+import java.nio.file.Files;

+import java.nio.file.Path;

+import java.nio.file.Paths;

+import java.util.ArrayList;

+import java.util.List;

+import java.util.Map;

+import java.util.Properties;

+

+/**

+ * IPython Interpreter for Zeppelin

+ */

+public class IPythonInterpreter extends Interpreter implements ExecuteResultHandler {

+

+ private static final Logger LOGGER = LoggerFactory.getLogger(IPythonInterpreter.class);

+

+ private ExecuteWatchdog watchDog;

+ private IPythonClient ipythonClient;

+ private GatewayServer gatewayServer;

+

+ private PythonZeppelinContext zeppelinContext;

+ private String pythonExecutable;

+ private long ipythonLaunchTimeout;

+ private String additionalPythonPath;

+ private String additionalPythonInitFile;

+

+ private InterpreterOutputStream interpreterOutput = new InterpreterOutputStream(LOGGER);

+

+ public IPythonInterpreter(Properties properties) {

+ super(properties);

+ }

+

+ /**

+ * A subclass can customize the interpreter by adding more Python packages to PYTHONPATH.

+ * e.g. PySparkInterpreter

+ *

+ * @param additionalPythonPath

+ */

+ public void setAdditionalPythonPath(String additionalPythonPath) {

+ this.additionalPythonPath = additionalPythonPath;

+ }

+

+ /**

+ * A subclass can customize the interpreter by running additional Python init code.

+ * e.g. PySparkInterpreter

+ *

+ * @param additionalPythonInitFile

+ */

+ public void setAdditionalPythonInitFile(String additionalPythonInitFile) {

+ this.additionalPythonInitFile = additionalPythonInitFile;

+ }

+

+ @Override

+ public void open() {

+ try {

+ if (ipythonClient != null) {

+ // IPythonInterpreter might already have been opened by PythonInterpreter

+ return;

+ }

+ pythonExecutable = getProperty().getProperty("zeppelin.python", "python");

+ ipythonLaunchTimeout = Long.parseLong(

+ getProperty().getProperty("zeppelin.ipython.launch.timeout", "30000"));

+ this.zeppelinContext = new PythonZeppelinContext(

+ getInterpreterGroup().getInterpreterHookRegistry(),

+ Integer.parseInt(getProperty().getProperty("zeppelin.python.maxResult", "1000")));

+ int ipythonPort = RemoteInterpreterUtils.findRandomAvailablePortOnAllLocalInterfaces();

+ int jvmGatewayPort = RemoteInterpreterUtils.findRandomAvailablePortOnAllLocalInterfaces();

+ LOGGER.info("Launching IPython Kernel at port: " + ipythonPort);

+ LOGGER.info("Launching JVM Gateway at port: " + jvmGatewayPort);

+ ipythonClient = new IPythonClient("127.0.0.1", ipythonPort);

+ launchIPythonKernel(ipythonPort);

+ setupJVMGateway(jvmGatewayPort);

+ } catch (Exception e) {

+ throw new RuntimeException("Fail to open IPythonInterpreter", e);

+ }

+ }

+

+ public boolean checkIPythonPrerequisite() {

+ ProcessBuilder processBuilder = new ProcessBuilder("pip", "freeze");

+ try {

+ File stderrFile = File.createTempFile("zeppelin", ".txt");

+ processBuilder.redirectError(stderrFile);

+ File stdoutFile = File.createTempFile("zeppelin", ".txt");

+ processBuilder.redirectOutput(stdoutFile);

+

+ Process proc = processBuilder.start();

+ int ret = proc.waitFor();

+ if (ret != 0) {

+ LOGGER.warn("Fail to run pip freeze.\n" +

+ IOUtils.toString(new FileInputStream(stderrFile)));

+ return false;

+ }

+ String freezeOutput = IOUtils.toString(new FileInputStream(stdoutFile));

+ if (!freezeOutput.contains("jupyter-client=")) {

+ InterpreterContext.get().out.write("jupyter-client is not installed\n".getBytes());

+ return false;

+ }

+ if (!freezeOutput.contains("ipykernel=")) {

+ InterpreterContext.get().out.write("ipykernel is not installed\n".getBytes());

+ return false;

+ }

+ if (!freezeOutput.contains("ipython=")) {

+ InterpreterContext.get().out.write("ipython is not installed\n".getBytes());

+ return false;

+ }

+ if (!freezeOutput.contains("grpcio=")) {

+ InterpreterContext.get().out.write("grpcio is not installed\n".getBytes());

+ return false;

+ }

+ LOGGER.info("IPython prerequisite is met");

+ return true;

+ } catch (Exception e) {

+ LOGGER.warn("Fail to checkIPythonPrerequisite", e);

+ return false;

+ }

+ }

+

+ private void setupJVMGateway(int jvmGatewayPort) throws IOException {

+ gatewayServer = new GatewayServer(this, jvmGatewayPort);

+ gatewayServer.start();

+

+ InputStream input =

+ getClass().getClassLoader().getResourceAsStream("grpc/python/zeppelin_python.py");

+ List<String> lines = IOUtils.readLines(input);

+ ExecuteResponse response = ipythonClient.block_execute(ExecuteRequest.newBuilder()

+ .setCode(StringUtils.join(lines, System.lineSeparator())

+ .replace("${JVM_GATEWAY_PORT}", jvmGatewayPort + "")).build());

+ if (response.getStatus() == ExecuteStatus.ERROR) {

+ throw new IOException("Fail to setup JVMGateway\n" + response.getOutput());

+ }

+

+ if (additionalPythonInitFile != null) {

+ input = getClass().getClassLoader().getResourceAsStream(additionalPythonInitFile);

+ lines = IOUtils.readLines(input);

+ response = ipythonClient.block_execute(ExecuteRequest.newBuilder()

+ .setCode(StringUtils.join(lines, System.lineSeparator())

+ .replace("${JVM_GATEWAY_PORT}", jvmGatewayPort + "")).build());

+ if (response.getStatus() == ExecuteStatus.ERROR) {

+ throw new IOException("Fail to run additional Python init file: "

+ + additionalPythonInitFile + "\n" + response.getOutput());

+ }

+ }

+ }

+

+

+ private void launchIPythonKernel(int ipythonPort)

+ throws IOException, URISyntaxException {

+ // copy the python scripts to a temp directory, then launch ipython kernel in that folder

+ File tmpPythonScriptFolder = Files.createTempDirectory("zeppelin_ipython").toFile();

+ String[] ipythonScripts = {"ipython_server.py", "ipython_pb2.py", "ipython_pb2_grpc.py"};

+ for (String ipythonScript : ipythonScripts) {

+ URL url = getClass().getClassLoader().getResource("grpc/python"

+ + "/" + ipythonScript);

+ FileUtils.copyURLToFile(url, new File(tmpPythonScriptFolder, ipythonScript));

+ }

+

+ CommandLine cmd = CommandLine.parse(pythonExecutable);

+ cmd.addArgument(tmpPythonScriptFolder.getAbsolutePath() + "/ipython_server.py");

+ cmd.addArgument(ipythonPort + "");

+ DefaultExecutor executor = new DefaultExecutor();

+ ProcessLogOutputStream processOutput = new ProcessLogOutputStream(LOGGER);

+ executor.setStreamHandler(new PumpStreamHandler(processOutput));

+ watchDog = new ExecuteWatchdog(ExecuteWatchdog.INFINITE_TIMEOUT);

+ executor.setWatchdog(watchDog);

+

+ String py4jLibPath = null;

+ if (System.getenv("ZEPPELIN_HOME") != null) {

+ py4jLibPath = System.getenv("ZEPPELIN_HOME") + File.separator

+ + PythonInterpreter.ZEPPELIN_PY4JPATH;

+ } else {

+ Path workingPath = Paths.get("..").toAbsolutePath();

+ py4jLibPath = workingPath + File.separator + PythonInterpreter.ZEPPELIN_PY4JPATH;

+ }

+ if (additionalPythonPath != null) {

+ // put the py4j at the end, because additionalPythonPath may already contain py4j.

+ // e.g. PySparkInterpreter

+ additionalPythonPath = additionalPythonPath + ":" + py4jLibPath;

+ } else {

+ additionalPythonPath = py4jLibPath;

+ }

+ Map<String, String> envs = EnvironmentUtils.getProcEnvironment();

+ if (envs.containsKey("PYTHONPATH")) {

+ envs.put("PYTHONPATH", additionalPythonPath + ":" + envs.get("PYTHONPATH"));

+ } else {

+ envs.put("PYTHONPATH", additionalPythonPath);

+ }

+

+ LOGGER.debug("PYTHONPATH: " + envs.get("PYTHONPATH"));

+ executor.execute(cmd, envs, this);

+

+ // wait until IPython kernel is started or timeout

+ long startTime = System.currentTimeMillis();

+ while (true) {

+ try {

+ Thread.sleep(100);

+ } catch (InterruptedException e) {

+ LOGGER.error("Interrupted by something", e);

+ }

+

+ try {

+ StatusResponse response = ipythonClient.status(StatusRequest.newBuilder().build());

+ if (response.getStatus() == IPythonStatus.RUNNING) {

+ LOGGER.info("IPython Kernel is Running");

+ break;

+ } else {

+ LOGGER.info("Wait for IPython Kernel to be started");

+ }

+ } catch (Exception e) {

+ // ignore the exception, because it may happen when the grpc server has not started yet.

+ LOGGER.info("Wait for IPython Kernel to be started");

+ }

+

+ if ((System.currentTimeMillis() - startTime) > ipythonLaunchTimeout) {

+ throw new IOException("Fail to launch IPython Kernel in " + ipythonLaunchTimeout / 1000

+ + " seconds");

+ }

+ }

+ }

+

+ @Override

+ public void close() {

+ if (watchDog != null) {

+ LOGGER.debug("Kill IPython Process");

+ ipythonClient.stop(StopRequest.newBuilder().build());

+ watchDog.destroyProcess();

+ gatewayServer.shutdown();

+ }

+ }

+

+ @Override

+ public InterpreterResult interpret(String st, InterpreterContext context) {

+ zeppelinContext.setGui(context.getGui());

+ interpreterOutput.setInterpreterOutput(context.out);

+ ExecuteResponse response =

+ ipythonClient.stream_execute(ExecuteRequest.newBuilder().setCode(st).build(),

+ interpreterOutput);

+ try {

+ interpreterOutput.getInterpreterOutput().flush();

+ } catch (IOException e) {

+ throw new RuntimeException("Fail to write output", e);

+ }

+ InterpreterResult result = new InterpreterResult(

+ InterpreterResult.Code.valueOf(response.getStatus().name()));

+ return result;

+ }

+

+ @Override

+ public void cancel(InterpreterContext context) {

+ ipythonClient.cancel(CancelRequest.newBuilder().build());

+ }

+

+ @Override

+ public FormType getFormType() {

+ return FormType.SIMPLE;

+ }

+

+ @Override

+ public int getProgress(InterpreterContext context) {

+ return 0;

+ }

+

+ @Override

+ public List<InterpreterCompletion> completion(String buf, int cursor,

+ InterpreterContext interpreterContext) {

+ List<InterpreterCompletion> completions = new ArrayList<>();

+ CompletionResponse response =

+ ipythonClient.complete(

+ CompletionRequest.newBuilder().setCode(buf)

+ .setCursor(cursor).build());

+ for (int i = 0; i < response.getMatchesCount(); i++) {

+ completions.add(new InterpreterCompletion(

+ response.getMatches(i), response.getMatches(i), ""));

+ }

+ return completions;

+ }

+

+ public PythonZeppelinContext getZeppelinContext() {

+ return zeppelinContext;

+ }

+

+ @Override

+ public void onProcessComplete(int exitValue) {

+ LOGGER.warn("Python Process is completed with exitValue: " + exitValue);

+ }

+

+ @Override

+ public void onProcessFailed(ExecuteException e) {

+ LOGGER.warn("Exception happens in Python Process", e);

+ }

+

+ private static class ProcessLogOutputStream extends LogOutputStream {

+

+ private Logger logger;

+

+ public ProcessLogOutputStream(Logger logger) {

+ this.logger = logger;

+ }

+

+ @Override

+ protected void processLine(String s, int i) {

+ this.logger.debug("Process Output: " + s);

+ }

+ }

+}
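A note on checkIPythonPrerequisite above: it searches the whole `pip freeze` output for substrings such as "ipython=", which could in principle also match the tail of another package name. The following is a minimal sketch of a line-by-line alternative, assuming the usual `name==version` freeze format. The class PipFreezeCheckSketch and its method are hypothetical names for illustration, and normalizing underscores to hyphens is an assumption about how some pip versions print names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

final class PipFreezeCheckSketch {

  // The four packages the commit checks for.
  static final List<String> REQUIRED =
      Arrays.asList("jupyter-client", "ipykernel", "ipython", "grpcio");

  // Returns the required packages that do not appear as exact names in the freeze output.
  static List<String> missingPackages(String freezeOutput) {
    Set<String> installed = new HashSet<>();
    for (String line : freezeOutput.split("\\r?\\n")) {
      int idx = line.indexOf("==");
      if (idx > 0) {
        // Assumption: treat '_' and '-' as equivalent and ignore case.
        String name = line.substring(0, idx).trim()
            .toLowerCase(Locale.ROOT).replace('_', '-');
        installed.add(name);
      }
    }
    List<String> missing = new ArrayList<>();
    for (String pkg : REQUIRED) {
      if (!installed.contains(pkg)) {
        missing.add(pkg);
      }
    }
    return missing;
  }

  public static void main(String[] args) {
    String sample = "ipython-genutils==0.2.0\ngrpcio==1.4.0\n";
    System.out.println("missing: " + missingPackages(sample));
  }
}
```

Returning the full list of missing packages would also let the interpreter report all of them at once instead of stopping at the first one.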

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/src/main/java/org/apache/zeppelin/python/PythonInterpreter.java

----------------------------------------------------------------------

diff --git a/python/src/main/java/org/apache/zeppelin/python/PythonInterpreter.java b/python/src/main/java/org/apache/zeppelin/python/PythonInterpreter.java

index 0bfcae0..50f6a8b 100644

--- a/python/src/main/java/org/apache/zeppelin/python/PythonInterpreter.java

+++ b/python/src/main/java/org/apache/zeppelin/python/PythonInterpreter.java

@@ -91,6 +91,7 @@ public class PythonInterpreter extends Interpreter implements ExecuteResultHandl

private static final int MAX_TIMEOUT_SEC = 10;

private long pythonPid = 0;

+ private IPythonInterpreter iPythonInterpreter;

Integer statementSetNotifier = new Integer(0);

@@ -219,6 +220,37 @@ public class PythonInterpreter extends Interpreter implements ExecuteResultHandl

@Override

public void open() {

+ // try IPythonInterpreter first. If it is not available, we will fall back to the original

+ // python interpreter implementation.

+ iPythonInterpreter = getIPythonInterpreter();

+ if (getProperty().getProperty("zeppelin.python.useIPython", "true").equals("true") &&

+ iPythonInterpreter.checkIPythonPrerequisite()) {

+ try {

+ iPythonInterpreter.open();

+ if (InterpreterContext.get() != null) {

+ InterpreterContext.get().out.write(("IPython is available, " +

+ "use IPython for PythonInterpreter\n")

+ .getBytes());

+ }

+ LOG.info("Use IPythonInterpreter to replace PythonInterpreter");

+ return;

+ } catch (Exception e) {

+ iPythonInterpreter = null;

+ }

+ }

+ // reset iPythonInterpreter to null

+ iPythonInterpreter = null;

+

+ try {

+ if (InterpreterContext.get() != null) {

+ InterpreterContext.get().out.write(("IPython is not available, " +

+ "use the native PythonInterpreter\n")

+ .getBytes());

+ }

+ } catch (IOException e) {

+ LOG.warn("Fail to write InterpreterOutput", e);

+ }

+

// Add matplotlib display hook

InterpreterGroup intpGroup = getInterpreterGroup();

if (intpGroup != null && intpGroup.getInterpreterHookRegistry() != null) {

@@ -232,8 +264,27 @@ public class PythonInterpreter extends Interpreter implements ExecuteResultHandl

}

}

+ private IPythonInterpreter getIPythonInterpreter() {

+ LazyOpenInterpreter lazy = null;

+ IPythonInterpreter ipython = null;

+ Interpreter p = getInterpreterInTheSameSessionByClassName(IPythonInterpreter.class.getName());

+

+ while (p instanceof WrappedInterpreter) {

+ if (p instanceof LazyOpenInterpreter) {

+ lazy = (LazyOpenInterpreter) p;

+ }

+ p = ((WrappedInterpreter) p).getInnerInterpreter();

+ }

+ ipython = (IPythonInterpreter) p;

+ return ipython;

+ }

+

@Override

public void close() {

+ if (iPythonInterpreter != null) {

+ iPythonInterpreter.close();

+ return;

+ }

pythonscriptRunning = false;

pythonScriptInitialized = false;

@@ -319,6 +370,9 @@ public class PythonInterpreter extends Interpreter implements ExecuteResultHandl

@Override

public InterpreterResult interpret(String cmd, InterpreterContext contextInterpreter) {

+ if (iPythonInterpreter != null) {

+ return iPythonInterpreter.interpret(cmd, contextInterpreter);

+ }

if (cmd == null || cmd.isEmpty()) {

return new InterpreterResult(Code.SUCCESS, "");

}

@@ -411,6 +465,9 @@ public class PythonInterpreter extends Interpreter implements ExecuteResultHandl

@Override

public void cancel(InterpreterContext context) {

+ if (iPythonInterpreter != null) {

+ iPythonInterpreter.cancel(context);

+ }

try {

interrupt();

} catch (IOException e) {

@@ -425,11 +482,17 @@ public class PythonInterpreter extends Interpreter implements ExecuteResultHandl

@Override

public int getProgress(InterpreterContext context) {

+ if (iPythonInterpreter != null) {

+ return iPythonInterpreter.getProgress(context);

+ }

return 0;

}

@Override

public Scheduler getScheduler() {

+ if (iPythonInterpreter != null) {

+ return iPythonInterpreter.getScheduler();

+ }

return SchedulerFactory.singleton().createOrGetFIFOScheduler(

PythonInterpreter.class.getName() + this.hashCode());

}

@@ -437,6 +500,9 @@ public class PythonInterpreter extends Interpreter implements ExecuteResultHandl

@Override

public List<InterpreterCompletion> completion(String buf, int cursor,

InterpreterContext interpreterContext) {

+ if (iPythonInterpreter != null) {

+ return iPythonInterpreter.completion(buf, cursor, interpreterContext);

+ }

return null;

}
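A note on getIPythonInterpreter above: it walks a chain of wrapper interpreters until it reaches the innermost one (the `lazy` variable it assigns along the way is not used afterwards). The following is a minimal sketch of that unwrapping loop in isolation. Interp, WrappedInterp and UnwrapSketch are hypothetical stand-ins for Zeppelin's Interpreter and WrappedInterpreter types, used here only so the example is self-contained.

```java
// Hypothetical stand-in for Zeppelin's Interpreter base type.
interface Interp {
}

// Hypothetical stand-in for WrappedInterpreter: a wrapper that exposes its inner interpreter.
interface WrappedInterp extends Interp {
  Interp getInner();
}

final class UnwrapSketch {

  // Follows the wrapper chain until an interpreter that is not a wrapper is found.
  static Interp unwrap(Interp p) {
    while (p instanceof WrappedInterp) {
      p = ((WrappedInterp) p).getInner();
    }
    return p;
  }

  public static void main(String[] args) {
    final Interp leaf = new Interp() { };
    final WrappedInterp inner = () -> leaf;    // e.g. a lazy-open wrapper
    final WrappedInterp outer = () -> inner;   // e.g. a class-loader wrapper
    System.out.println(unwrap(outer) == leaf);
  }
}
```

In the real code the result is then cast to IPythonInterpreter, so a null or unexpected inner type would need its own handling.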

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/src/main/java/org/apache/zeppelin/python/PythonZeppelinContext.java

----------------------------------------------------------------------

diff --git a/python/src/main/java/org/apache/zeppelin/python/PythonZeppelinContext.java b/python/src/main/java/org/apache/zeppelin/python/PythonZeppelinContext.java

new file mode 100644

index 0000000..3d476e0

--- /dev/null

+++ b/python/src/main/java/org/apache/zeppelin/python/PythonZeppelinContext.java

@@ -0,0 +1,49 @@

+/*

+* Licensed to the Apache Software Foundation (ASF) under one or more

+* contributor license agreements. See the NOTICE file distributed with

+* this work for additional information regarding copyright ownership.

+* The ASF licenses this file to You under the Apache License, Version 2.0

+* (the "License"); you may not use this file except in compliance with

+* the License. You may obtain a copy of the License at

+*

+* http://www.apache.org/licenses/LICENSE-2.0

+*

+* Unless required by applicable law or agreed to in writing, software

+* distributed under the License is distributed on an "AS IS" BASIS,

+* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+* See the License for the specific language governing permissions and

+* limitations under the License.

+*/

+

+package org.apache.zeppelin.python;

+

+import org.apache.zeppelin.interpreter.BaseZeppelinContext;

+import org.apache.zeppelin.interpreter.InterpreterHookRegistry;

+

+import java.util.List;

+import java.util.Map;

+

+/**

+ * ZeppelinContext for Python

+ */

+public class PythonZeppelinContext extends BaseZeppelinContext {

+

+ public PythonZeppelinContext(InterpreterHookRegistry hooks, int maxResult) {

+ super(hooks, maxResult);

+ }

+

+ @Override

+ public Map<String, String> getInterpreterClassMap() {

+ return null;

+ }

+

+ @Override

+ public List<Class> getSupportedClasses() {

+ return null;

+ }

+

+ @Override

+ protected String showData(Object obj) {

+ return null;

+ }

+}

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/src/main/proto/ipython.proto

----------------------------------------------------------------------

diff --git a/python/src/main/proto/ipython.proto b/python/src/main/proto/ipython.proto

new file mode 100644

index 0000000..a54f36d

--- /dev/null

+++ b/python/src/main/proto/ipython.proto

@@ -0,0 +1,102 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+syntax = "proto3";

+

+option java_multiple_files = true;

+option java_package = "org.apache.zeppelin.python.proto";

+option java_outer_classname = "IPythonProto";

+option objc_class_prefix = "IPython";

+

+package ipython;

+

+// The IPython service definition.

+service IPython {

+ // Sends code

+ rpc execute (ExecuteRequest) returns (stream ExecuteResponse) {}

+

+ // Get completion

+ rpc complete (CompletionRequest) returns (CompletionResponse) {}

+

+ // Cancel the running statement

+ rpc cancel (CancelRequest) returns (CancelResponse) {}

+

+ // Get ipython kernel status

+ rpc status (StatusRequest) returns (StatusResponse) {}

+

+ rpc stop(StopRequest) returns (StopResponse) {}

+}

+

+enum ExecuteStatus {

+ SUCCESS = 0;

+ ERROR = 1;

+}

+

+enum IPythonStatus {

+ STARTING = 0;

+ RUNNING = 1;

+}

+

+enum OutputType {

+ TEXT = 0;

+ IMAGE = 1;

+}

+

+// The request message containing the code

+message ExecuteRequest {

+ string code = 1;

+}

+

+// The response message containing the execution result.

+message ExecuteResponse {

+ ExecuteStatus status = 1;

+ OutputType type = 2;

+ string output = 3;

+}

+

+message CancelRequest {

+

+}

+

+message CancelResponse {

+

+}

+

+message CompletionRequest {

+ string code = 1;

+ int32 cursor = 2;

+}

+

+message CompletionResponse {

+ repeated string matches = 1;

+}

+

+message StatusRequest {

+

+}

+

+message StatusResponse {

+ IPythonStatus status = 1;

+}

+

+message StopRequest {

+

+}

+

+message StopResponse {

+

+}

\ No newline at end of file
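A note on the service definition above: `execute` returns a stream of ExecuteResponse messages, so a caller that wants a single result has to fold the stream, as block_execute does in IPythonClient. The following is a minimal sketch of that fold using plain Java stand-ins instead of the generated protobuf classes. FoldResponsesSketch, Status and Chunk are hypothetical names for illustration only.

```java
import java.util.Arrays;
import java.util.Iterator;

final class FoldResponsesSketch {

  // Stand-in for the ExecuteStatus enum in ipython.proto.
  enum Status { SUCCESS, ERROR }

  // Stand-in for one streamed ExecuteResponse (status and output only).
  static final class Chunk {
    final Status status;
    final String output;

    Chunk(Status status, String output) {
      this.status = status;
      this.output = output;
    }
  }

  // Concatenates all outputs; the result is ERROR if any chunk reported ERROR.
  static Chunk fold(Iterator<Chunk> stream) {
    Status status = Status.SUCCESS;
    StringBuilder output = new StringBuilder();
    while (stream.hasNext()) {
      Chunk next = stream.next();
      if (next.status == Status.ERROR) {
        status = Status.ERROR;   // sticky: later SUCCESS chunks do not reset it
      }
      output.append(next.output);
    }
    return new Chunk(status, output.toString());
  }

  public static void main(String[] args) {
    Chunk result = fold(Arrays.asList(
        new Chunk(Status.SUCCESS, "1\n"),
        new Chunk(Status.ERROR, "NameError\n")).iterator());
    System.out.println(result.status);
    System.out.print(result.output);
  }
}
```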

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/src/main/resources/grpc/generate_rpc.sh

----------------------------------------------------------------------

diff --git a/python/src/main/resources/grpc/generate_rpc.sh b/python/src/main/resources/grpc/generate_rpc.sh

new file mode 100755

index 0000000..efa5fbe

--- /dev/null

+++ b/python/src/main/resources/grpc/generate_rpc.sh

@@ -0,0 +1,18 @@

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+#!/usr/bin/env bash

+

+python -m grpc_tools.protoc -I../../proto --python_out=python --grpc_python_out=python ../../proto/ipython.proto

http://git-wip-us.apache.org/repos/asf/zeppelin/blob/32517c9d/python/src/main/resources/grpc/python/ipython_client.py

----------------------------------------------------------------------

diff --git a/python/src/main/resources/grpc/python/ipython_client.py b/python/src/main/resources/grpc/python/ipython_client.py

new file mode 100644

index 0000000..b8d1ee0

--- /dev/null

+++ b/python/src/main/resources/grpc/python/ipython_client.py

@@ -0,0 +1,36 @@

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+

+import grpc

+

+import ipython_pb2

+import ipython_pb2_grpc

+

+

+def run():

+ channel = grpc.insecure_channel('localhost:50053')

+ stub = ipython_pb2_grpc.IPythonStub(channel)

+ response = stub.execute(ipython_pb2.ExecuteRequest(code="import time\nfor i in range(1,4):\n\ttime.sleep(1)\n\tprint(i)\n" +

+ "%matplotlib inline\nimport matplotlib.pyplot as plt\ndata=[1,1,2,3,4]\nplt.figure()\nplt.plot(data)"))

+ for r in response:

+ print("output:" + r.output)

+

+ response = stub.execute(ipython_pb2.ExecuteRequest(code="range?"))

+ for r in response:

+ print(r)

+

+if __name__ == '__main__':

+ run()