
Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2022/04/24 05:59:09 UTC

[GitHub] [hudi] chaplinthink commented on issue #3657: [SUPPORT] Failed to insert data by flink-sql

chaplinthink commented on issue #3657:

URL: https://github.com/apache/hudi/issues/3657#issuecomment-1107728664

Same problem here, and the Flink job keeps restarting.

Flink version: 1.12.2

Hudi version: 0.9.0

I used the following Hudi dependency:

```
<dependency>
    <groupId>org.apache.hudi</groupId>
    <artifactId>hudi-flink-bundle_2.11</artifactId>
    <version>0.9.0</version>
</dependency>
```

Stack trace:

```
Caused by: java.lang.IllegalArgumentException: hoodie.properties file seems invalid. Please check for left over `.updated` files if any, manually copy it to hoodie.properties and retry
    at org.apache.hudi.common.util.ValidationUtils.checkArgument(ValidationUtils.java:40)
    at org.apache.hudi.common.table.HoodieTableConfig.<init>(HoodieTableConfig.java:185)
    at org.apache.hudi.common.table.HoodieTableMetaClient.<init>(HoodieTableMetaClient.java:114)
    at org.apache.hudi.common.table.HoodieTableMetaClient.<init>(HoodieTableMetaClient.java:74)
    at org.apache.hudi.common.table.HoodieTableMetaClient$Builder.build(HoodieTableMetaClient.java:611)
    at org.apache.hudi.table.HoodieFlinkTable.create(HoodieFlinkTable.java:48)
    at org.apache.hudi.sink.partitioner.profile.WriteProfile.<init>(WriteProfile.java:118)
    at org.apache.hudi.sink.partitioner.profile.DeltaWriteProfile.<init>(DeltaWriteProfile.java:43)
    at org.apache.hudi.sink.partitioner.profile.WriteProfiles.getWriteProfile(WriteProfiles.java:74)
    at org.apache.hudi.sink.partitioner.profile.WriteProfiles.lambda$singleton$0(WriteProfiles.java:63)
    at java.util.HashMap.computeIfAbsent(HashMap.java:1118)
    at org.apache.hudi.sink.partitioner.profile.WriteProfiles.singleton(WriteProfiles.java:62)
    at org.apache.hudi.sink.partitioner.BucketAssigners.create(BucketAssigners.java:56)
    at org.apache.hudi.sink.partitioner.BucketAssignFunction.open(BucketAssignFunction.java:121)
    at org.apache.flink.api.common.functions.util.FunctionUtils.openFunction(FunctionUtils.java:34)
    at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.open(AbstractUdfStreamOperator.java:102)
    at org.apache.flink.streaming.api.operators.KeyedProcessOperator.open(KeyedProcessOperator.java:55)
    at org.apache.hudi.sink.partitioner.BucketAssignOperator.open(BucketAssignOperator.java:41)
    at org.apache.flink.streaming.runtime.tasks.OperatorChain.initializeStateAndOpenOperators(OperatorChain.java:428)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.lambda$beforeInvoke$2(StreamTask.java:543)
    at org.apache.flink.streaming.runtime.tasks.StreamTaskActionExecutor$1.runThrowing(StreamTaskActionExecutor.java:50)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.beforeInvoke(StreamTask.java:533)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:573)
    at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:755)
    at org.apache.flink.runtime.taskmanager.Task.run(Task.java:570)
    at java.lang.Thread.run(Thread.java:745)
    Suppressed: java.lang.NullPointerException
        at org.apache.hudi.sink.partitioner.BucketAssignFunction.close(BucketAssignFunction.java:242)
        at org.apache.flink.api.common.functions.util.FunctionUtils.closeFunction(FunctionUtils.java:41)
        at org.apache.flink.streaming.api.operators.AbstractUdfStreamOperator.dispose(AbstractUdfStreamOperator.java:117)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.disposeAllOperators(StreamTask.java:791)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.runAndSuppressThrowable(StreamTask.java:770)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.cleanUpInvoke(StreamTask.java:689)
        at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:593)
        ... 3 more
```

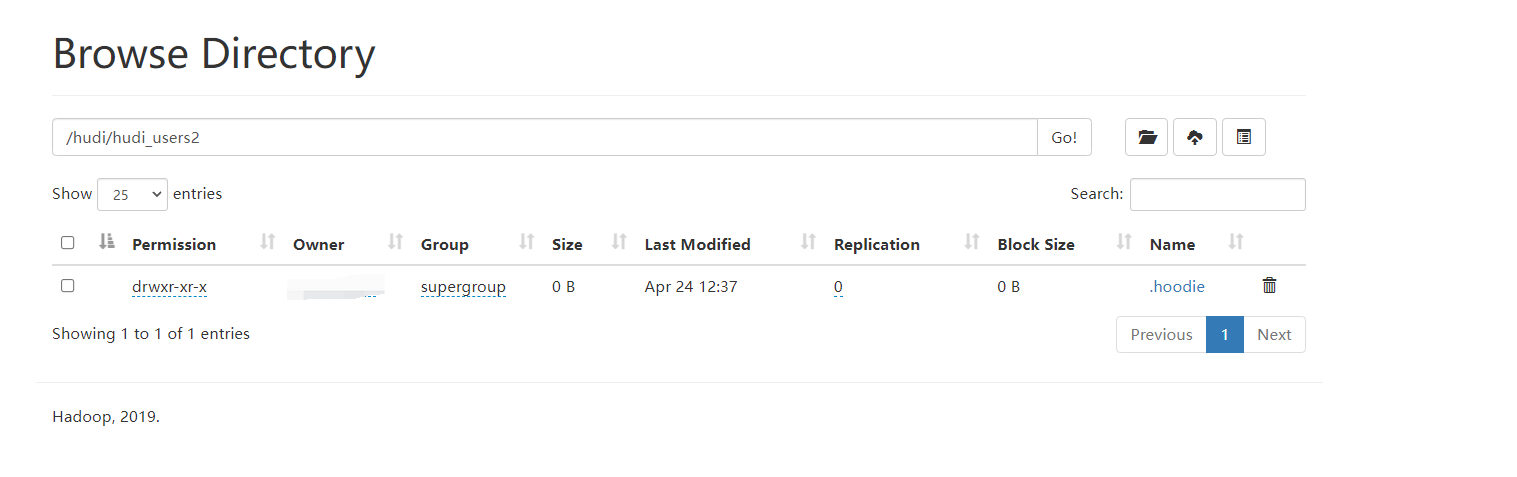

I tested this in my local environment. On HDFS the table path contains only a **.hoodie** directory, with no data files.
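
The exception message suggests checking for leftover `.updated` files and copying one over `hoodie.properties`. Below is a minimal sketch of that check, assuming the `.hoodie` directory is reachable through a local or mounted path; for a table on HDFS the same steps would need the Hadoop FileSystem API or `hdfs dfs` commands instead. The class name, method names, and the `hoodie.properties.before-restore` backup name are hypothetical and not part of Hudi.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper for illustration only; it is not shipped with Hudi.
class HoodiePropertiesRecovery {

    // Lists regular files directly under the .hoodie directory whose names end in ".updated".
    static List<Path> findUpdatedFiles(Path hoodieDir) throws IOException {
        try (Stream<Path> entries = Files.list(hoodieDir)) {
            return entries
                .filter(Files::isRegularFile)
                .filter(p -> p.getFileName().toString().endsWith(".updated"))
                .sorted()
                .collect(Collectors.toList());
        }
    }

    // Copies the leftover file over hoodie.properties.
    // Any existing hoodie.properties is first saved under an assumed backup name.
    static Path restore(Path hoodieDir, Path updatedFile) throws IOException {
        Path target = hoodieDir.resolve("hoodie.properties");
        if (Files.exists(target)) {
            Path backup = hoodieDir.resolve("hoodie.properties.before-restore");
            Files.copy(target, backup, StandardCopyOption.REPLACE_EXISTING);
        }
        return Files.copy(updatedFile, target, StandardCopyOption.REPLACE_EXISTING);
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: HoodiePropertiesRecovery <path-to-.hoodie-dir>");
            return;
        }
        Path hoodieDir = Paths.get(args[0]);
        List<Path> candidates = findUpdatedFiles(hoodieDir);
        if (candidates.isEmpty()) {
            System.out.println("No .updated files found in " + hoodieDir);
        } else if (candidates.size() == 1) {
            System.out.println("Restored " + restore(hoodieDir, candidates.get(0)));
        } else {
            // With several candidates, leave the choice to the operator.
            System.out.println("Multiple .updated files found: " + candidates);
        }
    }
}
```

If no `.updated` file exists, as may be the case when the table path holds only a freshly created `.hoodie` directory, this check does not resolve the error and the contents of `hoodie.properties` itself are worth inspecting.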
