You are viewing a plain text version of this content. The canonical link for it is here.

Posted to issues@iceberg.apache.org by GitBox <gi...@apache.org> on 2022/06/21 08:51:35 UTC

[GitHub] [iceberg] Zhangg7723 opened a new pull request, #5100: Data: delete compaction optimization by bloom filter

Zhangg7723 opened a new pull request, #5100:

URL: https://github.com/apache/iceberg/pull/5100

**Purpose**

V2 table support equality deletes for row level delete, delete rows are loaded into memory when rewrite or query job is running, but this will cause OOM with too many delete rows in a real scenario, especially in flink upsert mode. As issue #4312 mentioned, flink rewrite jobs caused out of memory exception which also happen with spark or other engine support v2 table.

delete rows in hash set occupy most of the heap memory.

**Goal**

Reduce delete rows loaded in memory, optimize the performance of delete compaction by bloom filter, just for parquet format in this PR, thanks for the pull request of @huaxingao about the parquet bloom filter support #4831, and we are working on orc format.

**How**

<img width="377" alt="image" src="https://user-images.githubusercontent.com/18146312/174741477-02a9fcae-dade-494c-803e-bcd2cb45c61a.png">

Before reading the equality delete data, load the bloom filter of current data file, then filter delete rows not in this data file.

**Verification**

We verified the performance improvement by a test case.

Environment:

Job: spark rewrite job with 300 million data rows and 30 million delete rows

executor num:2

executor memory:2G

executor core:8

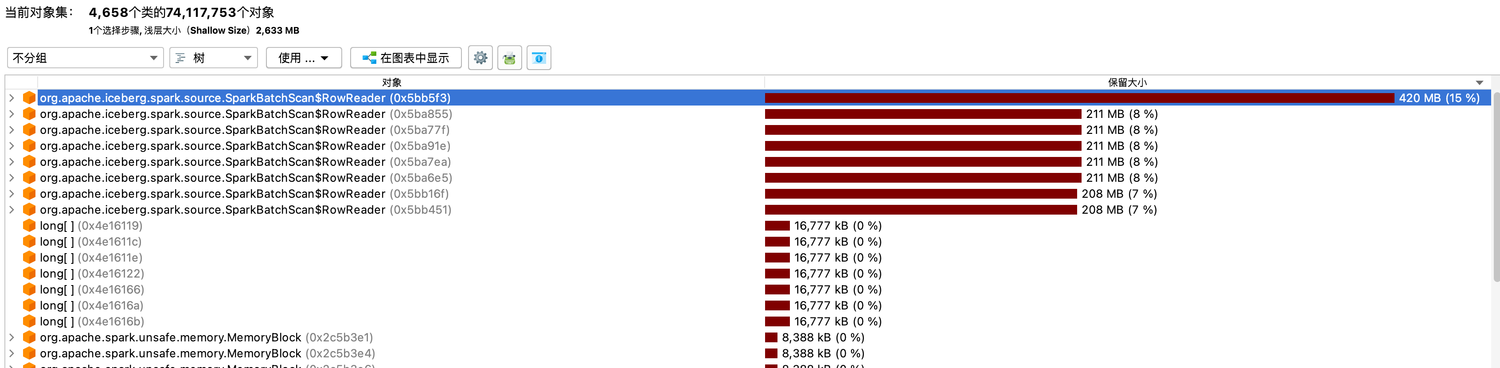

Before optimization:

<img width="666" alt="image" src="https://user-images.githubusercontent.com/18146312/174747923-a3fb0452-8dab-447b-89ba-2e788a91ece4.png">

JVM did full gc frequently,this job failed in the end.

After optimization:

<img width="666" alt="image" src="https://user-images.githubusercontent.com/18146312/174748078-8763ad9d-d90a-4c49-933b-fc587c0d5610.png">

Obviously, the memory pressure reduced, and the job finished successfully.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] chenjunjiedada commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

chenjunjiedada commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r906624572

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -133,10 +144,19 @@ public CloseableIterable<T> filter(CloseableIterable<T> records) {

private List<Predicate<T>> applyEqDeletes() {

List<Predicate<T>> isInDeleteSets = Lists.newArrayList();

+ Map<BlockMetaData, BloomFilterReader> parquetBloomFilterReader = Maps.newHashMap();

+ ParquetFileReader parquetReader = null;

+ Predicate<Record> isInBloomFilter = null;

if (eqDeletes.isEmpty()) {

return isInDeleteSets;

}

+ // load bloomfilter readers from data file

+ if (filePath.endsWith(".parquet")) {

+ parquetReader = ParquetUtil.openFile(getInputFile(filePath));

Review Comment:

Can we use `try-with-resource` here?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] jiamin13579 commented on pull request #5100: Data: delete compaction optimization by bloom filter

Posted by "jiamin13579 (via GitHub)" <gi...@apache.org>.

jiamin13579 commented on PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#issuecomment-1512387056

We also encountered the same problem

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] Zhangg7723 commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

Zhangg7723 commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r907988087

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -133,10 +144,19 @@ public CloseableIterable<T> filter(CloseableIterable<T> records) {

private List<Predicate<T>> applyEqDeletes() {

List<Predicate<T>> isInDeleteSets = Lists.newArrayList();

+ Map<BlockMetaData, BloomFilterReader> parquetBloomFilterReader = Maps.newHashMap();

+ ParquetFileReader parquetReader = null;

+ Predicate<Record> isInBloomFilter = null;

if (eqDeletes.isEmpty()) {

return isInDeleteSets;

}

+ // load bloomfilter readers from data file

+ if (filePath.endsWith(".parquet")) {

+ parquetReader = ParquetUtil.openFile(getInputFile(filePath));

Review Comment:

Maybe it's a big change , I want to keep this reader open during the iteration of delete files, and considering orc format, the delete iteration need to be encapsulated in a new function, any advise for this change?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] chenjunjiedada commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

chenjunjiedada commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r907183040

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -133,10 +144,19 @@ public CloseableIterable<T> filter(CloseableIterable<T> records) {

private List<Predicate<T>> applyEqDeletes() {

List<Predicate<T>> isInDeleteSets = Lists.newArrayList();

+ Map<BlockMetaData, BloomFilterReader> parquetBloomFilterReader = Maps.newHashMap();

+ ParquetFileReader parquetReader = null;

+ Predicate<Record> isInBloomFilter = null;

if (eqDeletes.isEmpty()) {

return isInDeleteSets;

}

+ // load bloomfilter readers from data file

+ if (filePath.endsWith(".parquet")) {

Review Comment:

Do we want to check whether the bloom filter is turned on to avoid reading the footer if it is not?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] chenjunjiedada commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

chenjunjiedada commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r907015357

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -148,6 +168,12 @@ private List<Predicate<T>> applyEqDeletes() {

Schema deleteSchema = TypeUtil.select(requiredSchema, ids);

+ if (filePath.endsWith(".parquet") && parquetReader != null) {

Review Comment:

I see, how about adding another `ctor` to accept `DataFile` parameter?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] Zhangg7723 commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

Zhangg7723 commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r906952929

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -148,6 +168,12 @@ private List<Predicate<T>> applyEqDeletes() {

Schema deleteSchema = TypeUtil.select(requiredSchema, ids);

+ if (filePath.endsWith(".parquet") && parquetReader != null) {

Review Comment:

yes, you are right, but dataFile is not passed into DeleteFilter as parameter, it was changed to filePath in #4381

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] chenjunjiedada commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

chenjunjiedada commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r907181733

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -148,6 +168,12 @@ private List<Predicate<T>> applyEqDeletes() {

Schema deleteSchema = TypeUtil.select(requiredSchema, ids);

+ if (filePath.endsWith(".parquet") && parquetReader != null) {

Review Comment:

I see, any concern if we change it to `DataFile`?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] Zhangg7723 commented on pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

Zhangg7723 commented on PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#issuecomment-1162822289

@rdblue can you give a review? thank you.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] chenjunjiedada commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

chenjunjiedada commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r906624770

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -148,6 +168,12 @@ private List<Predicate<T>> applyEqDeletes() {

Schema deleteSchema = TypeUtil.select(requiredSchema, ids);

+ if (filePath.endsWith(".parquet") && parquetReader != null) {

Review Comment:

I think we can change the ctor parameter from `String` to `DataFile` and then here we can check file.format().

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] Zhangg7723 commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

Zhangg7723 commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r907977433

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -148,6 +168,12 @@ private List<Predicate<T>> applyEqDeletes() {

Schema deleteSchema = TypeUtil.select(requiredSchema, ids);

+ if (filePath.endsWith(".parquet") && parquetReader != null) {

Review Comment:

the change for constructor parameters is only for Trino supporting mor,Trino wrapped a dummy fileScanTask for data file currently, the author wants to remove fileScanTask implemented in Trino, and use the filePath parameter. If we change it to dataFile, compatibility is a problem.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] Zhangg7723 commented on a diff in pull request #5100: Data: delete compaction optimization by bloom filter

Posted by GitBox <gi...@apache.org>.

Zhangg7723 commented on code in PR #5100:

URL: https://github.com/apache/iceberg/pull/5100#discussion_r907983599

##########

data/src/main/java/org/apache/iceberg/data/DeleteFilter.java:

##########

@@ -133,10 +144,19 @@ public CloseableIterable<T> filter(CloseableIterable<T> records) {

private List<Predicate<T>> applyEqDeletes() {

List<Predicate<T>> isInDeleteSets = Lists.newArrayList();

+ Map<BlockMetaData, BloomFilterReader> parquetBloomFilterReader = Maps.newHashMap();

+ ParquetFileReader parquetReader = null;

+ Predicate<Record> isInBloomFilter = null;

if (eqDeletes.isEmpty()) {

return isInDeleteSets;

}

+ // load bloomfilter readers from data file

+ if (filePath.endsWith(".parquet")) {

Review Comment:

You mean we check the bloom filter by table properties, right? but the bloom filter properties may be updated, the bloom filter in current file is unmatched with table properties.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org