Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2022/02/17 10:22:33 UTC

[GitHub] [hudi] gaoasi opened a new issue #4836: [SUPPORT]flink sql write data to hudi ,do not execute compaction

gaoasi opened a new issue #4836:

URL: https://github.com/apache/hudi/issues/4836

Hi,

I want to write data to Hudi through Flink SQL. My table definitions are:

========================create source table ==================

CREATE TABLE kafkaTable (

volume INT,

ts STRING,

symbol STRING,

`year` INT,

`month` STRING,

high DOUBLE,

low DOUBLE,

`key` STRING,

`date` STRING,

`close` DOUBLE,

`open` DOUBLE,

`day` STRING

) WITH (

'connector' = 'kafka',

'topic' = 'hudi-test-topic',

'properties.bootstrap.servers' = 'client-38888b3:9092',

'properties.group.id' = 'testGroup',

'format' = 'json',

'scan.startup.mode' = 'earliest-offset'

);

========================created source table ===================

======================== create target table ===================

CREATE TABLE hudi_test_topic(

volume INT PRIMARY KEY NOT ENFORCED,

ts STRING,

symbol STRING,

`year` INT,

`month` STRING,

high DOUBLE,

low DOUBLE,

`key` STRING,

`date` STRING,

`close` DOUBLE,

`open` DOUBLE,

`day` STRING

)

PARTITIONED BY (`date`)

WITH (

'connector' = 'hudi',

'path' = 'hdfs://bmr-cluster/user/hudi/warehouse/test/hudi-test-topic',

'table.type' = 'MERGE_ON_READ',

'hive_sync.enable'='true',

'hive_sync.mode' = 'hms',

'hoodie.datasource.write.recordkey.field'='volume',

'write.precombine.field'='ts',

'read.streaming.enabled' = 'true',

'read.streaming.check-interval' = '4',

'hive_sync.metastore.uris' = 'thrift://master-2b29900-1:9083,thrift://master-2b29900-2:9083',

'hive_sync.table'='huditesttopic',

'hive_sync.db'='test',

'write.tasks'='4',

'metadata.compaction.delta_commits'='1',

'compaction.schedule.enabled'='true',

'compaction.trigger.strategy'='num_commits',

'compaction.async.enabled'='true',

'compaction.tasks'='2'

);

======================== created target table ===================

======================== insert data sql =======================

insert into hudi_test_topic select * from kafkaTable;

======================== inserted data sql =======================

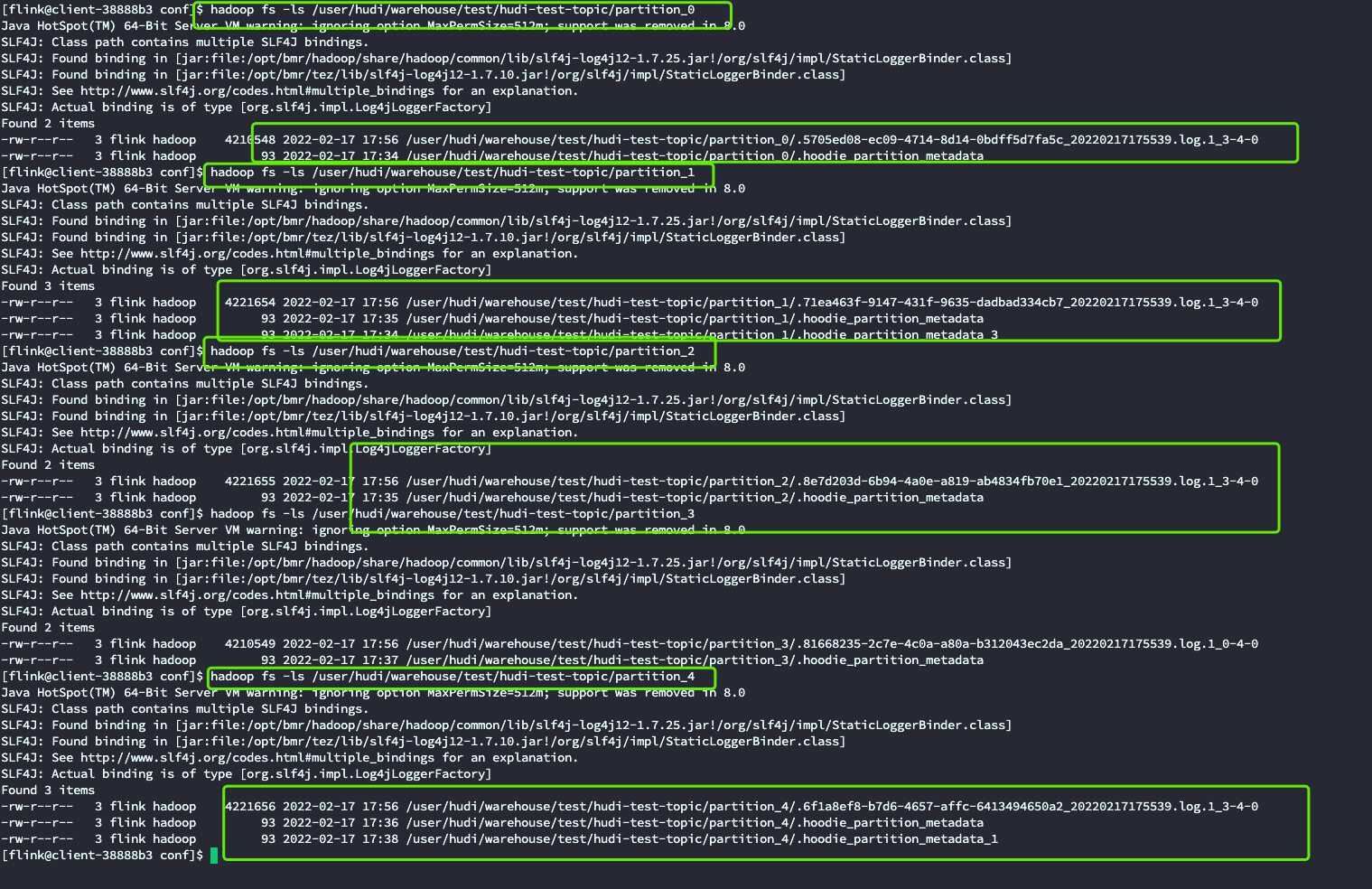

I checked my table path, and there is no data file:

What other configuration options do I need to set?

Or do I need to run compaction manually?

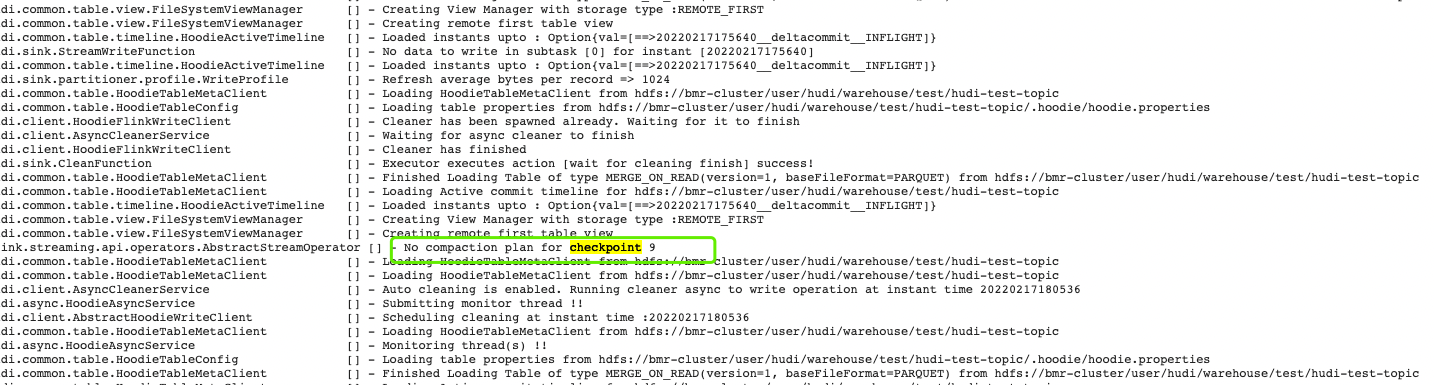

I can't figure out why compaction is not performed. The Flink job log is:

Can anybody help me?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] gaoasi closed issue #4836: [SUPPORT]flink sql write data to hudi ,do not execute compaction

Posted by GitBox <gi...@apache.org>.

gaoasi closed issue #4836:

URL: https://github.com/apache/hudi/issues/4836

[GitHub] [hudi] gaoasi commented on issue #4836: [SUPPORT]flink sql write data to hudi ,do not execute compaction

Posted by GitBox <gi...@apache.org>.

gaoasi commented on issue #4836:

URL: https://github.com/apache/hudi/issues/4836#issuecomment-1043986617

The problem is solved. I changed my table configuration to:

CREATE TABLE hudi_test_topic(

volume INT PRIMARY KEY NOT ENFORCED,

ts STRING,

symbol STRING,

`year` INT,

`month` STRING,

high DOUBLE,

low DOUBLE,

`key` STRING,

`date` STRING,

`close` DOUBLE,

`open` DOUBLE,

`day` STRING

)

PARTITIONED BY (`date`)

WITH (

'connector' = 'hudi',

'path' = 'hdfs://bmr-cluster/user/hudi/warehouse/test/hudi-test-topic',

'table.type' = 'MERGE_ON_READ',

'hive_sync.enable'='true',

'hive_sync.mode' = 'hms',

'hoodie.datasource.write.recordkey.field'='volume',

'write.precombine.field'='ts',

'read.streaming.enabled' = 'true',

'read.streaming.check-interval' = '4',

'hive_sync.metastore.uris' = 'thrift://master-2b29900-1:9083,thrift://master-2b29900-2:9083',

'hive_sync.table'='huditesttopic',

'hive_sync.db'='test',

'write.tasks'='4',

'metadata.compaction.delta_commits'='1',

'compaction.delta_seconds'='2',

'compaction.schedule.enabled'='true',

'compaction.trigger.strategy'='time_elapsed',

'compaction.timeout.seconds'='1200',

'hoodie.compact.inline.max.delta.seconds'='1',

'compaction.async.enabled'='true',

'compaction.tasks'='2'

);
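
For readers comparing the two definitions: the changes are the switch of 'compaction.trigger.strategy' from 'num_commits' to 'time_elapsed' and the addition of 'compaction.delta_seconds', 'compaction.timeout.seconds' and 'hoodie.compact.inline.max.delta.seconds'. The sketch below isolates the compaction-related options on a smaller table. The table name, columns and path are hypothetical placeholders, not part of the original setup, and this is only an illustration of the option set, not a verified minimal fix. It may also be worth noting, as an assumption drawn from the Hudi Flink option list rather than from this thread, that 'metadata.compaction.delta_commits' appears to apply to the metadata table, while the threshold for the 'num_commits' strategy on the data table is a separate option, 'compaction.delta_commits'.

```sql
-- Hypothetical table: name, columns and path are placeholders for illustration.
-- The compaction options are the ones from the working definition above.
CREATE TABLE hudi_compaction_sketch (
  id INT PRIMARY KEY NOT ENFORCED,
  ts STRING,
  `date` STRING
)
PARTITIONED BY (`date`)
WITH (
  'connector' = 'hudi',
  'path' = 'hdfs:///tmp/hudi_compaction_sketch',
  'table.type' = 'MERGE_ON_READ',
  'write.precombine.field' = 'ts',
  'compaction.schedule.enabled' = 'true',
  'compaction.async.enabled' = 'true',
  'compaction.trigger.strategy' = 'time_elapsed',
  'compaction.delta_seconds' = '2',
  'compaction.timeout.seconds' = '1200',
  'compaction.tasks' = '2'
);
```

A value of 2 seconds for 'compaction.delta_seconds' makes compaction eligible almost immediately, which is convenient for testing; a production job would likely use a larger interval.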
