
Posted to issues@flink.apache.org by GitBox <gi...@apache.org> on 2019/12/24 04:23:49 UTC

[GitHub] [flink] wuchong opened a new pull request #10669:

[FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

wuchong opened a new pull request #10669: [FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

URL: https://github.com/apache/flink/pull/10669


## What is the purpose of the change

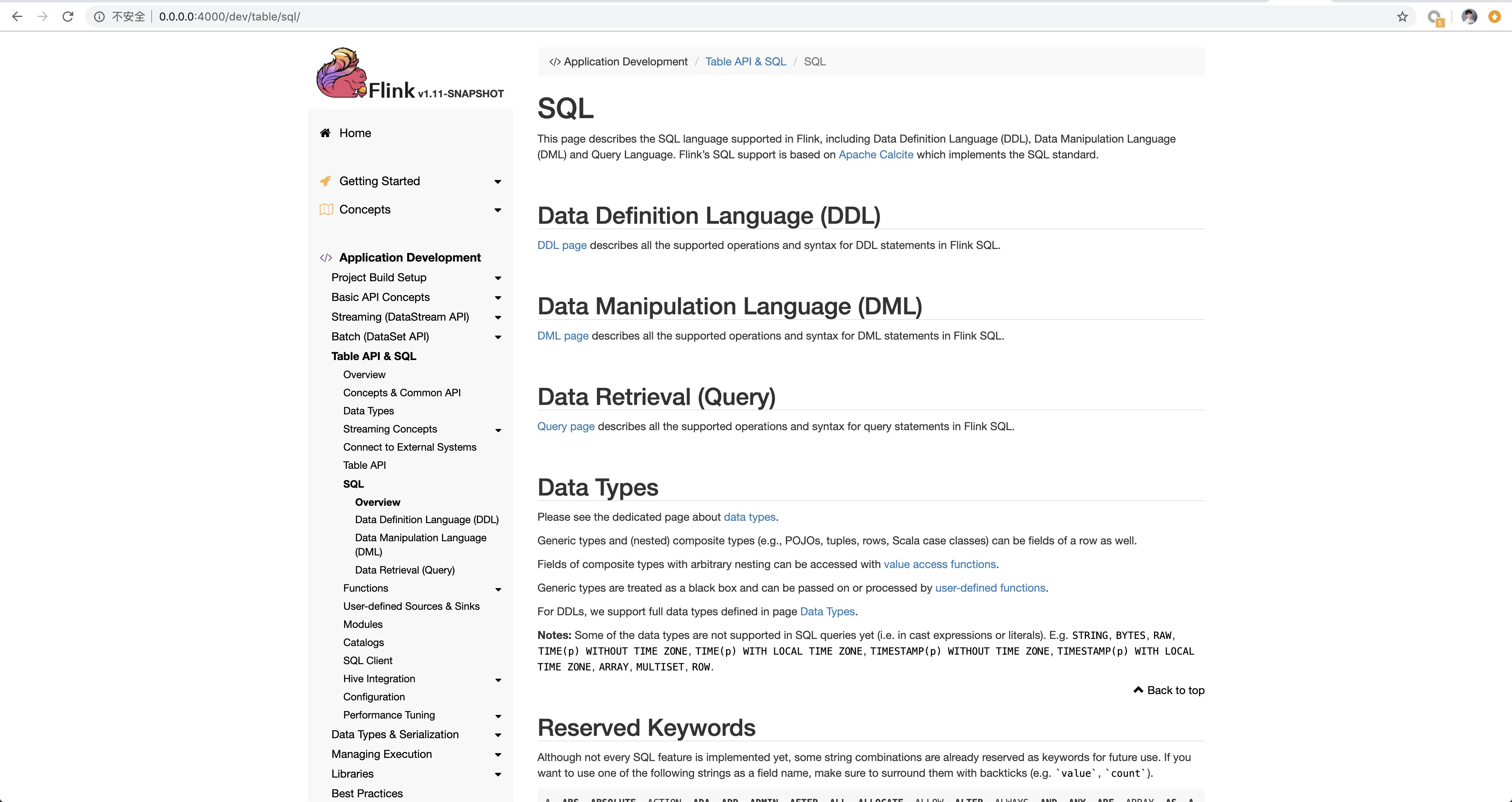

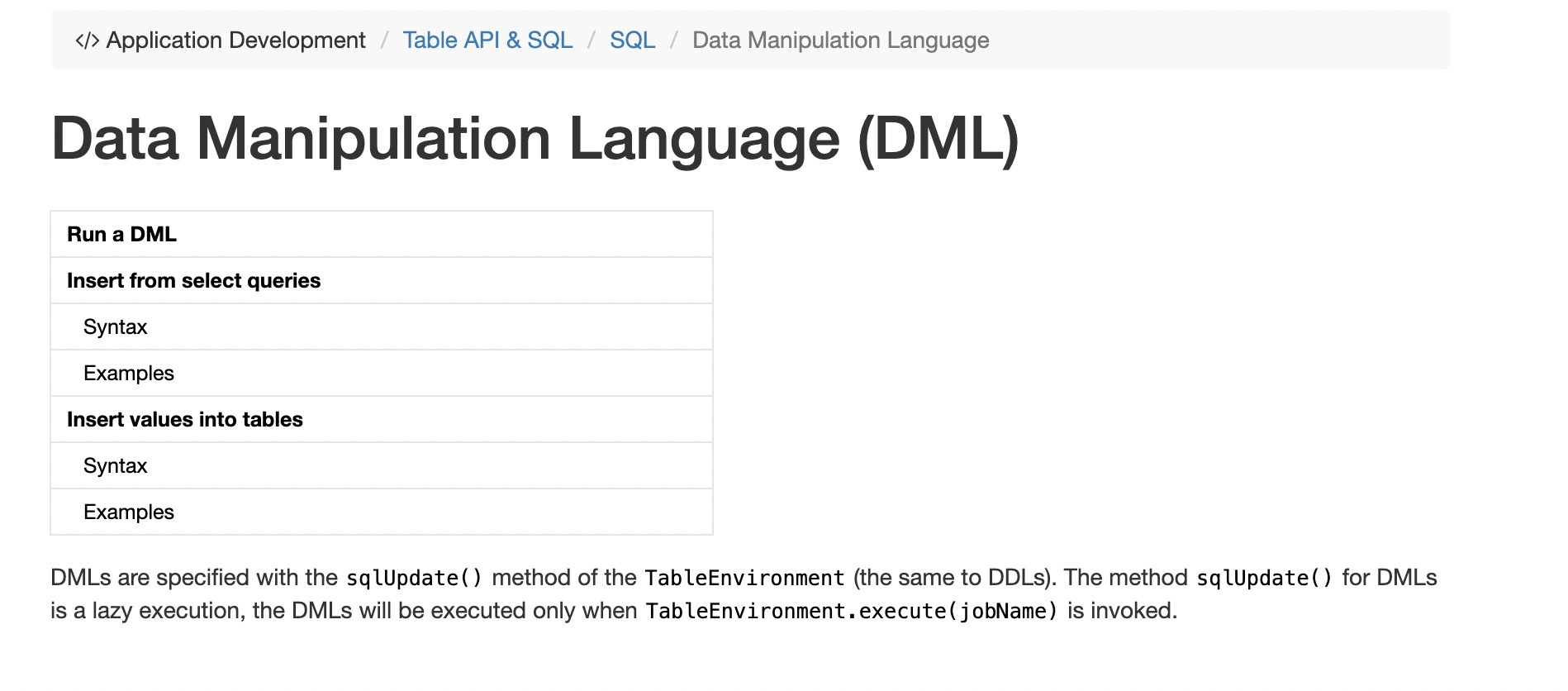

"SQL" page is getting bigger and bigger, it is hard to find useful information from a big page. This PR split the "SQL" page into multiple sub-pages for better readability.

## Brief change log

It splits the "SQL" page into the following structure:

- SQL
  - Overview
  - Data Definition Language (DDL)
  - Data Manipulation Language (DML)
  - Data Retrieval (Query)

A screenshot:

## Verifying this change

This is a pure documentation update; no tests are added.

## Does this pull request potentially affect one of the following parts:

- Dependencies (does it add or upgrade a dependency): (yes / **no**)

- The public API, i.e., is any changed class annotated with `@Public(Evolving)`: (yes / **no**)

- The serializers: (yes / **no** / don't know)

- The runtime per-record code paths (performance sensitive): (yes / **no** / don't know)

- Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Yarn/Mesos, ZooKeeper: (yes / **no** / don't know)

- The S3 file system connector: (yes / **no** / don't know)

## Documentation

- Does this pull request introduce a new feature? (yes / **no**)

- If yes, how is the feature documented? (**not applicable** / docs / JavaDocs / not documented)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

With regards,

Apache Git Services

[GitHub] [flink] sjwiesman commented on a change in pull request #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

sjwiesman commented on a change in pull request #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#discussion_r361866041

##########

File path: docs/dev/table/sql/create.md

##########

@@ -0,0 +1,240 @@

+---

+title: "CREATE Statements"

+nav-parent_id: sql

+nav-pos: 2

+---

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+* This will be replaced by the TOC

+{:toc}

+

+CREATE statements are used to register a table, view, or function into the current or a specified [Catalog]({{ site.baseurl }}/dev/table/catalogs.html). A registered table, view, or function can be used in SQL queries.

+

+Flink SQL supports the following CREATE statements for now:

+

+- CREATE TABLE

+- CREATE VIEW

+- CREATE FUNCTION

+- CREATE DATABASE

+

+## Run a CREATE statement

+

+CREATE statements can be executed with the `sqlUpdate()` method of the `TableEnvironment`, or in the [SQL CLI]({{ site.baseurl }}/dev/table/sqlClient.html). The `sqlUpdate()` method returns nothing for a successful CREATE operation; otherwise, it throws an exception.

+

+The following examples show how to run a CREATE statement in a `TableEnvironment`.

+

+<div class="codetabs" markdown="1">

+<div data-lang="java" markdown="1">

+{% highlight java %}

+EnvironmentSettings settings = EnvironmentSettings.newInstance()...

+TableEnvironment tableEnv = TableEnvironment.create(settings);

+

+// SQL query with a registered table

+// register a table named "Orders"

+tableEnv.sqlUpdate("CREATE TABLE Orders (`user` BIGINT, product STRING, amount INT) WITH (...)");

+// run a SQL query on the Table and retrieve the result as a new Table

+Table result = tableEnv.sqlQuery(

+ "SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'");

+

+// SQL update with a registered table

+// register a TableSink

+tableEnv.sqlUpdate("CREATE TABLE RubberOrders(product STRING, amount INT) WITH (...)");

+// run a SQL update query on the Table and emit the result to the TableSink

+tableEnv.sqlUpdate(

+ "INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'");

+{% endhighlight %}

+</div>

+

+<div data-lang="scala" markdown="1">

+{% highlight scala %}

+val settings = EnvironmentSettings.newInstance()...

+val tableEnv = TableEnvironment.create(settings)

+

+// SQL query with a registered table

+// register a table named "Orders"

+tableEnv.sqlUpdate("CREATE TABLE Orders (`user` BIGINT, product STRING, amount INT) WITH (...)")

+// run a SQL query on the Table and retrieve the result as a new Table

+val result = tableEnv.sqlQuery(

+ "SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+

+// SQL update with a registered table

+// register a TableSink

+tableEnv.sqlUpdate("CREATE TABLE RubberOrders(product STRING, amount INT) WITH ('connector.path'='/path/to/file' ...)");

+// run a SQL update query on the Table and emit the result to the TableSink

+tableEnv.sqlUpdate(

+ "INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+{% endhighlight %}

+</div>

+

+<div data-lang="python" markdown="1">

+{% highlight python %}

+settings = EnvironmentSettings.new_instance()...

+table_env = TableEnvironment.create(settings)

+

+# SQL query with a registered table

+# register a table named "Orders"

+table_env.sql_update("CREATE TABLE Orders (`user` BIGINT, product STRING, amount INT) WITH (...)")

+# run a SQL query on the Table and retrieve the result as a new Table

+result = table_env.sql_query(

+ "SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+

+# SQL update with a registered table

+# register a TableSink

+table_env.sql_update("CREATE TABLE RubberOrders(product STRING, amount INT) WITH (...)")

+# run a SQL update query on the Table and emit the result to the TableSink

+table_env \

+ .sql_update("INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+{% endhighlight %}

+</div>

+</div>

+

+The following examples show how to run a CREATE statement in the SQL CLI.

+

+{% highlight sql %}

+Flink SQL> CREATE TABLE Orders (`user` BIGINT, product STRING, amount INT) WITH (...);

+[INFO] Table has been created.

+

+Flink SQL> CREATE TABLE RubberOrders (product STRING, amount INT) WITH (...);

+[INFO] Table has been created.

+

+Flink SQL> INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%';

+[INFO] Submitting SQL update statement to the cluster...

+{% endhighlight %}

+

+

+{% top %}

+

+## CREATE TABLE

+

+{% highlight sql %}

+CREATE TABLE [catalog_name.][db_name.]table_name

+ (

+ { <column_definition> | <computed_column_definition> }[ , ...n]

+ [ <watermark_definition> ]

+ )

+ [COMMENT table_comment]

+ [PARTITIONED BY (partition_column_name1, partition_column_name2, ...)]

+ WITH (key1=val1, key2=val2, ...)

+

+<column_definition>:

+ column_name column_type [COMMENT column_comment]

+

+<computed_column_definition>:

+ column_name AS computed_column_expression [COMMENT column_comment]

+

+<watermark_definition>:

+ WATERMARK FOR rowtime_column_name AS watermark_strategy_expression

+

+{% endhighlight %}

+

+Creates a table with the given name. If a table with the same name already exists in the catalog, an exception is thrown.

+

+**COMPUTED COLUMN**

+

+A column declared with the syntax "`column_name AS computed_column_expression`" is a computed column. A computed column is a virtual column that is not physically stored in the table. It is computed from a non-query expression that uses other columns in the same table. For example, a computed column can be defined as `cost AS price * qty`. The expression can be a non-computed column name, a constant, a (user-defined or system) function, a variable, or any combination of these connected by one or more operators. The expression cannot be a subquery.

+

+Computed columns were introduced to Flink to define [time attributes]({{ site.baseurl}}/dev/table/streaming/time_attributes.html) in CREATE TABLE statements.

Review comment:

```suggestion

Computed columns are commonly used in Flink for defining [time attributes]({{ site.baseurl}}/dev/table/streaming/time_attributes.html) in CREATE TABLE statements.

```
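The computed column definition quoted in this hunk (`cost AS price * qty`, a virtual column that is not physically stored and is derived from other columns of the same row) can be illustrated with a small plain-Python model. This is not Flink code: the row dictionaries, `PHYSICAL_COLUMNS`, `COMPUTED_COLUMNS`, and `read_with_computed_columns` are hypothetical names chosen for the sketch, and it assumes computed expressions reference only non-computed columns.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]

# Physical columns: what the (hypothetical) source actually delivers.
PHYSICAL_COLUMNS = ["product", "price", "qty"]

# Computed columns: name -> expression over the physical columns of the same row.
# This mirrors the `cost AS price * qty` example from the documentation text.
COMPUTED_COLUMNS: Dict[str, Callable[[Row], object]] = {
    "cost": lambda row: row["price"] * row["qty"],
}


def read_with_computed_columns(source_rows: List[Row]) -> List[Row]:
    """Evaluate computed columns for each row after it is read from the source."""
    result: List[Row] = []
    for raw in source_rows:
        # Keep only the physically stored columns.
        physical = {name: raw[name] for name in PHYSICAL_COLUMNS}
        # In this sketch, computed columns see only physical columns.
        computed = {name: expr(physical) for name, expr in COMPUTED_COLUMNS.items()}
        result.append({**physical, **computed})
    return result


rows = read_with_computed_columns([
    {"product": "Rubber Duck", "price": 3, "qty": 4},
    {"product": "Rubber Band", "price": 1, "qty": 10},
])
print([row["cost"] for row in rows])  # [12, 10]
```

Keeping the computed values out of the physical row mirrors the "virtual column" idea: the storage side only ever deals with `product`, `price`, and `qty`.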


[GitHub] [flink] flinkbot edited a comment on issue #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494


## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

* 422e61ea5836e54ce771d7ddd2004cfeb9b951ff Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142361200) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3926)

* 85cdf8111bffb7ea2bffef7d7dba18aa126c254b Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142377471) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3940)

* 0015b63f086202e706c2248581e9828abd2589ba Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142436142) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3950)

* a7b6ade38aaea781df30a8e5acf98c6c913731a3 Travis: [PENDING](https://travis-ci.com/flink-ci/flink/builds/142562117) Azure: [PENDING](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3968)

<details>

<summary>Bot commands</summary>

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build

- `@flinkbot run azure` re-run the last Azure build

</details>


[GitHub] [flink] danny0405 commented on a change in pull request #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

danny0405 commented on a change in pull request #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#discussion_r361578477

##########

File path: docs/dev/table/sql/ddl.md

##########

@@ -0,0 +1,297 @@

+---

+title: "Data Definition Language (DDL)"

+nav-title: "Data Definition Language"

+nav-parent_id: sql

+nav-pos: 1

+---

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+* This will be replaced by the TOC

+{:toc}

+

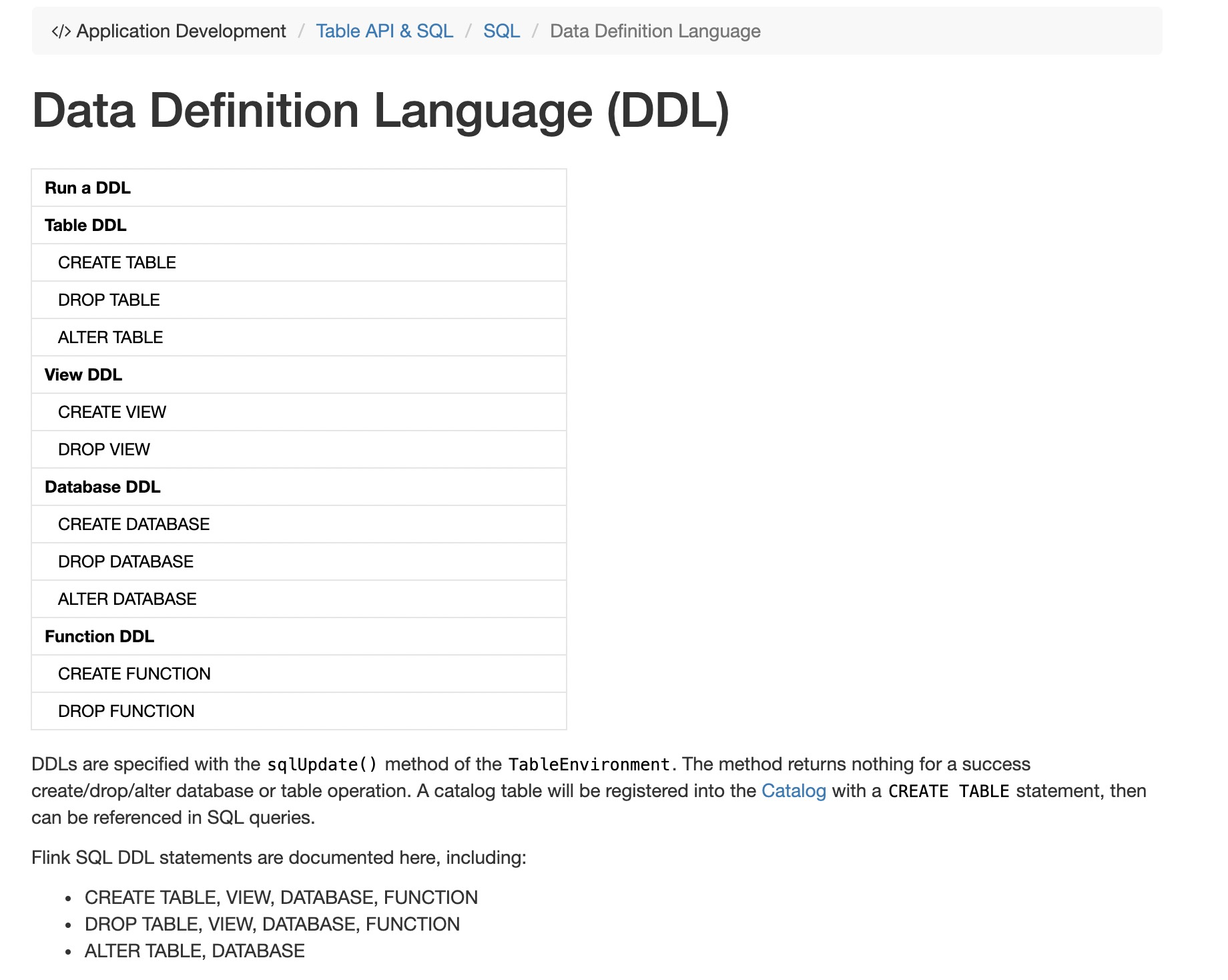

+DDL statements are executed with the `sqlUpdate()` method of the `TableEnvironment`. The method returns nothing for a successful CREATE, DROP, or ALTER operation on a database or table. A `CREATE TABLE` statement registers a catalog table in the [Catalog]({{ site.baseurl }}/dev/table/catalogs.html); the table can then be referenced in SQL queries.

+

+Flink SQL DDL statements are documented here, including:

+

+- CREATE TABLE, VIEW, DATABASE, FUNCTION

+- DROP TABLE, VIEW, DATABASE, FUNCTION

+- ALTER TABLE, DATABASE

+

+## Run a DDL

+

+The following examples show how to run a SQL DDL statement in a `TableEnvironment`.

+

+<div class="codetabs" markdown="1">

+<div data-lang="java" markdown="1">

+{% highlight java %}

+EnvironmentSettings settings = EnvironmentSettings.newInstance()...

+TableEnvironment tableEnv = TableEnvironment.create(settings);

+

+// SQL query with a registered table

+// register a table named "Orders"

+tableEnv.sqlUpdate("CREATE TABLE Orders (`user` BIGINT, product VARCHAR, amount INT) WITH (...)");

+// run a SQL query on the Table and retrieve the result as a new Table

+Table result = tableEnv.sqlQuery(

+ "SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'");

+

+// SQL update with a registered table

+// register a TableSink

+tableEnv.sqlUpdate("CREATE TABLE RubberOrders(product VARCHAR, amount INT) WITH (...)");

+// run a SQL update query on the Table and emit the result to the TableSink

+tableEnv.sqlUpdate(

+ "INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'");

+{% endhighlight %}

+</div>

+

+<div data-lang="scala" markdown="1">

+{% highlight scala %}

+val settings = EnvironmentSettings.newInstance()...

+val tableEnv = TableEnvironment.create(settings)

+

+// SQL query with a registered table

+// register a table named "Orders"

+tableEnv.sqlUpdate("CREATE TABLE Orders (`user` BIGINT, product VARCHAR, amount INT) WITH (...)")

+// run a SQL query on the Table and retrieve the result as a new Table

+val result = tableEnv.sqlQuery(

+ "SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+

+// SQL update with a registered table

+// register a TableSink

+tableEnv.sqlUpdate("CREATE TABLE RubberOrders(product VARCHAR, amount INT) WITH ('connector.path'='/path/to/file' ...)");

+// run a SQL update query on the Table and emit the result to the TableSink

+tableEnv.sqlUpdate(

+ "INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+{% endhighlight %}

+</div>

+

+<div data-lang="python" markdown="1">

+{% highlight python %}

+settings = EnvironmentSettings.new_instance()...

+table_env = TableEnvironment.create(settings)

+

+# register a table named "Orders"

+table_env.sql_update("CREATE TABLE Orders (`user` BIGINT, product VARCHAR, amount INT) WITH (...)")

+

+# SQL update with a registered table

+# register a TableSink

+table_env.sql_update("CREATE TABLE RubberOrders(product VARCHAR, amount INT) WITH (...)")

+# run a SQL update query on the Table and emit the result to the TableSink

+table_env \

+ .sql_update("INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+{% endhighlight %}

+</div>

+</div>

+

+{% top %}

+

+## Table DDL

+

+### CREATE TABLE

+

+{% highlight sql %}

+CREATE TABLE [catalog_name.][db_name.]table_name

+ (

+ { <column_definition> | <computed_column_definition> }[ , ...n]

+ [ <watermark_definition> ]

+ )

+ [COMMENT table_comment]

+ [PARTITIONED BY (partition_column_name1, partition_column_name2, ...)]

+ WITH (key1=val1, key2=val2, ...)

+

+<column_definition>:

+ column_name column_type [COMMENT column_comment]

+

+<computed_column_definition>:

+ column_name AS computed_column_expression [COMMENT column_comment]

+

+<watermark_definition>:

+ WATERMARK FOR rowtime_column_name AS watermark_strategy_expression

+

+{% endhighlight %}

+

+Creates a table with the given name. If a table with the same name already exists in the catalog, an exception is thrown.

+

+**COMPUTED COLUMN**

+

+A column declared with the syntax "`column_name AS computed_column_expression`" is a computed column. A computed column is a virtual column that is not physically stored in the table. It is computed from a non-query expression that uses other columns in the same table. For example, a computed column can be defined as `cost AS price * qty`. The expression can be a non-computed column name, a constant, a (user-defined or system) function, a variable, or any combination of these connected by one or more operators. The expression cannot be a subquery.

+

+Computed columns were introduced to Flink to define [time attributes]({{ site.baseurl}}/dev/table/streaming/time_attributes.html) in CREATE TABLE DDL.

+A [processing time attribute]({{ site.baseurl}}/dev/table/streaming/time_attributes.html#processing-time) can be defined easily via `proc AS PROCTIME()` using the system `PROCTIME()` function.

+A computed column can also be used to derive an event time column from existing fields. This is needed when, for example, the original field is not of type `TIMESTAMP(3)` or is nested in a JSON string.

+

+Notes:

+

+- A computed column defined on a source table is computed after reading from the source and can be used in subsequent SELECT query statements.

+- A computed column cannot be the target of an INSERT statement. In an INSERT statement, the schema of the SELECT clause must match the schema of the target table without its computed columns.

+

Review comment:

INSERT statement target table schema should match SELECT clause's schema without computed column.
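The rule discussed in this comment (the SELECT clause must line up with the target table's schema minus its computed columns) can be sketched as a simple positional type check. This is a simplified, hypothetical model: `Column`, `insertable_types`, and `check_insert` are illustrative names, the computed column `doubled` is invented for the example, and the real planner's validation (for example, implicit casts or nullability handling) may differ.

```python
from typing import List, NamedTuple


class Column(NamedTuple):
    name: str
    data_type: str
    computed: bool = False


def insertable_types(target: List[Column]) -> List[str]:
    """Types an INSERT INTO must supply: the target's non-computed columns, in order."""
    return [col.data_type for col in target if not col.computed]


def check_insert(select_types: List[str], target: List[Column]) -> None:
    """Raise if the SELECT clause does not line up with the sink's physical schema."""
    expected = insertable_types(target)
    if select_types != expected:
        raise ValueError(f"SELECT yields {select_types}, sink expects {expected}")


# Hypothetical sink: two physical columns plus one computed column.
rubber_orders = [
    Column("product", "STRING"),
    Column("amount", "INT"),
    Column("doubled", "INT", computed=True),  # e.g. `doubled AS amount * 2`
]

check_insert(["STRING", "INT"], rubber_orders)  # passes: computed column is skipped
try:
    check_insert(["STRING", "INT", "INT"], rubber_orders)
except ValueError as err:
    print(err)
```

Supplying a value for `doubled` is rejected because, as the documentation states, a computed column cannot be the target of an INSERT.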


[GitHub] [flink] danny0405 commented on a change in pull request #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

danny0405 commented on a change in pull request #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#discussion_r361578634

##########

File path: docs/dev/table/sql/ddl.md

##########

@@ -0,0 +1,297 @@


+

+

+**WATERMARK**

+

+The WATERMARK definition is used to define an [event time attribute]({{ site.baseurl }}/dev/table/streaming/time_attributes.html#event-time) in CREATE TABLE DDL.

+

+The `FOR rowtime_column_name` clause defines which existing column is marked as the event time attribute. The column must be of type `TIMESTAMP(3)`, must be a top-level column in the schema, and can be a computed column.

+

+The `AS watermark_strategy_expression` clause defines the watermark generation strategy. It allows an arbitrary non-query expression, which can reference computed columns, to calculate the watermark. The return type of the expression must be `TIMESTAMP(3)`, which represents a timestamp since the Epoch.

+

+The returned watermark will be emitted only if it is non-null and its value is larger than the previously emitted local watermark (to preserve the contract of ascending watermarks). The watermark generation expression is called by the framework for every record.

+The framework will periodically emit the largest generated watermark. If the current watermark is still identical to the previous one, or is null, or the value of the returned watermark is smaller than that of the last emitted one, then no new watermark will be emitted.

Review comment:

`is called` -> `is evaluated`
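The watermark rules described in this hunk (the strategy expression is evaluated for every record, null results are dropped, and the largest generated watermark is periodically emitted only if it exceeds the last emitted one) can be modeled in a few lines of Python. This is a toy sketch, not Flink's runtime: `PeriodicWatermarkModel` and `five_seconds_behind` are hypothetical names, and the strategy only roughly corresponds to `WATERMARK FOR ts AS ts - INTERVAL '5' SECOND`.

```python
from datetime import datetime, timedelta
from typing import Callable, Optional


class PeriodicWatermarkModel:
    """Toy model of the watermark rules in the text; not Flink's actual implementation."""

    def __init__(self, strategy: Callable[[dict], Optional[datetime]]) -> None:
        self.strategy = strategy
        self.max_generated: Optional[datetime] = None
        self.last_emitted: Optional[datetime] = None

    def on_record(self, row: dict) -> None:
        # The strategy expression is evaluated once per record.
        candidate = self.strategy(row)
        if candidate is None:
            return  # null results never become watermarks
        if self.max_generated is None or candidate > self.max_generated:
            self.max_generated = candidate

    def on_periodic_emit(self) -> Optional[datetime]:
        # Periodically emit the largest generated watermark, but only if it is
        # strictly larger than the last emitted one (ascending watermarks).
        if self.max_generated is None:
            return None
        if self.last_emitted is not None and self.max_generated <= self.last_emitted:
            return None
        self.last_emitted = self.max_generated
        return self.last_emitted


# Hypothetical strategy, roughly `ts - INTERVAL '5' SECOND`.
def five_seconds_behind(row: dict) -> Optional[datetime]:
    return None if row["ts"] is None else row["ts"] - timedelta(seconds=5)


t0 = datetime(2019, 12, 24, 4, 0, 0)
gen = PeriodicWatermarkModel(five_seconds_behind)
gen.on_record({"ts": t0 + timedelta(seconds=10)})
gen.on_record({"ts": t0 + timedelta(seconds=7)})   # out of order: not a new maximum
first = gen.on_periodic_emit()                      # t0 + 5s
second = gen.on_periodic_emit()                     # None: unchanged since last emit
gen.on_record({"ts": None})                         # null result is ignored
gen.on_record({"ts": t0 + timedelta(seconds=20)})
third = gen.on_periodic_emit()                      # t0 + 15s
```

Tracking the maximum separately from the last emitted value is what keeps the emitted sequence ascending even when records arrive out of order.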


[GitHub] [flink] flinkbot edited a comment on issue #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494

## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

* 422e61ea5836e54ce771d7ddd2004cfeb9b951ff Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142361200) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3926)

* 85cdf8111bffb7ea2bffef7d7dba18aa126c254b Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142377471) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3940)

* 0015b63f086202e706c2248581e9828abd2589ba UNKNOWN

<details>

<summary>Bot commands</summary>

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build

- `@flinkbot run azure` re-run the last Azure build

</details>

[GitHub] [flink] wuchong commented on issue #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

wuchong commented on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-569594878

Thanks @sjwiesman for the great suggestions! I updated the PR according to your comment.

[GitHub] [flink] flinkbot edited a comment on issue #10669:

[FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494

## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

* 422e61ea5836e54ce771d7ddd2004cfeb9b951ff UNKNOWN

[GitHub] [flink] flinkbot edited a comment on issue #10669:

[FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494

## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

* 422e61ea5836e54ce771d7ddd2004cfeb9b951ff Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142361200) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3926)

* 85cdf8111bffb7ea2bffef7d7dba18aa126c254b UNKNOWN

[GitHub] [flink] flinkbot edited a comment on issue #10669:

[FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494

## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

[GitHub] [flink] sjwiesman commented on a change in pull request #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

sjwiesman commented on a change in pull request #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#discussion_r361867117

##########

File path: docs/dev/table/sql/create.md

##########

@@ -0,0 +1,240 @@

+---

+title: "CREATE Statements"

+nav-parent_id: sql

+nav-pos: 2

+---

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+* This will be replaced by the TOC

+{:toc}

+

+CREATE statements are used to register a table/view/function into the current or a specified [Catalog]({{ site.baseurl }}/dev/table/catalogs.html). A registered table/view/function can be used in SQL queries.

+

+Flink SQL currently supports the following CREATE statements:

+

+- CREATE TABLE

+- CREATE VIEW

+- CREATE FUNCTION

+- CREATE DATABASE

+

+## Run a CREATE statement

+

+CREATE statements can be executed with the `sqlUpdate()` method of the `TableEnvironment`, or executed in the [SQL CLI]({{ site.baseurl }}/dev/table/sqlClient.html). The `sqlUpdate()` method returns nothing for a successful CREATE operation; otherwise, it throws an exception.

+

+The following examples show how to run a CREATE statement in a `TableEnvironment`.

+

+<div class="codetabs" markdown="1">

+<div data-lang="java" markdown="1">

+{% highlight java %}

+EnvironmentSettings settings = EnvironmentSettings.newInstance()...

+TableEnvironment tableEnv = TableEnvironment.create(settings);

+

+// SQL query with a registered table

+// register a table named "Orders"

+tableEnv.sqlUpdate("CREATE TABLE Orders (`user` BIGINT, product STRING, amount INT) WITH (...)");

+// run a SQL query on the Table and retrieve the result as a new Table

+Table result = tableEnv.sqlQuery(

+ "SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'");

+

+// SQL update with a registered table

+// register a TableSink

+tableEnv.sqlUpdate("CREATE TABLE RubberOrders(product STRING, amount INT) WITH (...)");

+// run a SQL update query on the Table and emit the result to the TableSink

+tableEnv.sqlUpdate(

+ "INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'");

+{% endhighlight %}

+</div>

+

+<div data-lang="scala" markdown="1">

+{% highlight scala %}

+val settings = EnvironmentSettings.newInstance()...

+val tableEnv = TableEnvironment.create(settings)

+

+// SQL query with a registered table

+// register a table named "Orders"

+tableEnv.sqlUpdate("CREATE TABLE Orders (`user` BIGINT, product STRING, amount INT) WITH (...)")

+// run a SQL query on the Table and retrieve the result as a new Table

+val result = tableEnv.sqlQuery(

+ "SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+

+// SQL update with a registered table

+// register a TableSink

+tableEnv.sqlUpdate("CREATE TABLE RubberOrders(product STRING, amount INT) WITH ('connector.path'='/path/to/file' ...)");

+// run a SQL update query on the Table and emit the result to the TableSink

+tableEnv.sqlUpdate(

+ "INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+{% endhighlight %}

+</div>

+

+<div data-lang="python" markdown="1">

+{% highlight python %}

+settings = EnvironmentSettings.new_instance()...

+table_env = TableEnvironment.create(settings)

+

+# SQL query with a registered table

+# register a table named "Orders"

+table_env.sql_update("CREATE TABLE Orders (`user` BIGINT, product STRING, amount INT) WITH (...)")

+# run a SQL query on the Table and retrieve the result as a new Table

+result = table_env.sql_query(

+ "SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+

+# SQL update with a registered table

+# register a TableSink

+table_env.sql_update("CREATE TABLE RubberOrders(product STRING, amount INT) WITH (...)")

+# run a SQL update query on the Table and emit the result to the TableSink

+table_env \

+ .sql_update("INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%'")

+{% endhighlight %}

+</div>

+</div>

+

+The following example shows how to run CREATE statements in the SQL CLI.

+

+{% highlight sql %}

+Flink SQL> CREATE TABLE Orders (`user` BIGINT, product STRING, amount INT) WITH (...);

+[INFO] Table has been created.

+

+Flink SQL> CREATE TABLE RubberOrders (product STRING, amount INT) WITH (...);

+[INFO] Table has been created.

+

+Flink SQL> INSERT INTO RubberOrders SELECT product, amount FROM Orders WHERE product LIKE '%Rubber%';

+[INFO] Submitting SQL update statement to the cluster...

+{% endhighlight %}

+

+

+{% top %}

+

+## CREATE TABLE

+

+{% highlight sql %}

+CREATE TABLE [catalog_name.][db_name.]table_name

+ (

+ { <column_definition> | <computed_column_definition> }[ , ...n]

+ [ <watermark_definition> ]

+ )

+ [COMMENT table_comment]

+ [PARTITIONED BY (partition_column_name1, partition_column_name2, ...)]

+ WITH (key1=val1, key2=val2, ...)

+

+<column_definition>:

+ column_name column_type [COMMENT column_comment]

+

+<computed_column_definition>:

+ column_name AS computed_column_expression [COMMENT column_comment]

+

+<watermark_definition>:

+ WATERMARK FOR rowtime_column_name AS watermark_strategy_expression

+

+{% endhighlight %}

+

+Creates a table with the given name. If a table with the same name already exists in the catalog, an exception is thrown.

+

+**COMPUTED COLUMN**

+

+A column declared with the syntax "`column_name AS computed_column_expression`" is a computed column. A computed column is a virtual column that is not physically stored in the table. It is computed from a non-query expression that uses other columns in the same table. For example, a computed column can be defined as `cost AS price * qty`. The expression can be a non-computed column name, a constant, a (user-defined or system) function, a variable, or any combination of these connected by one or more operators. The expression cannot be a subquery.
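The idea that a computed column is virtual and evaluated after a row is read can be sketched in plain Python. This is only a simulation, not Flink code: rows are modeled as dicts, and the helper `with_computed_columns` is a hypothetical name introduced for illustration. The stored rows are left untouched; the computed value exists only in the rows handed to the query.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


def with_computed_columns(rows: List[Row],
                          computed: Dict[str, Callable[[Row], object]]) -> List[Row]:
    """Append virtual columns to each physical row after it has been read.

    The physical rows are not modified, mirroring the fact that a computed
    column is not physically stored in the table.
    """
    result = []
    for row in rows:
        extended = dict(row)  # copy, so the "stored" row stays unchanged
        for name, expr in computed.items():
            # The expression only sees the physical (non-computed) columns.
            extended[name] = expr(row)
        result.append(extended)
    return result


source_rows = [{"price": 10, "qty": 3}, {"price": 4, "qty": 5}]
# Hypothetical Python equivalent of the computed column `cost AS price * qty`.
rows = with_computed_columns(source_rows, {"cost": lambda r: r["price"] * r["qty"]})
print(rows)
```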

+

+Computed columns were introduced to Flink for defining [time attributes]({{ site.baseurl}}/dev/table/streaming/time_attributes.html) in CREATE TABLE statements.

+A [processing time attribute]({{ site.baseurl}}/dev/table/streaming/time_attributes.html#processing-time) can be defined easily via `proc AS PROCTIME()`, using the system `PROCTIME()` function.

+On the other hand, a computed column can be used to derive an event time column, because an event time column may need to be derived from existing fields, e.g. when the original field is not of type `TIMESTAMP(3)` or is nested in a JSON string.
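The kind of derivation such a computed column performs can be sketched in Python: an epoch-millisecond value nested in a JSON string is turned into a millisecond-precision timestamp, the analogue of `TIMESTAMP(3)`. The payload layout `{"meta": {"ts_millis": ...}}` and the function name `derive_rowtime` are hypothetical, and interpreting the value in UTC is an assumption of this sketch; in Flink SQL the same logic would be written as a computed-column expression.

```python
import json
from datetime import datetime, timezone


def derive_rowtime(raw: str) -> datetime:
    """Derive a millisecond-precision event time from a JSON payload.

    Assumes a hypothetical layout {"meta": {"ts_millis": <epoch millis>}}
    and interprets the value in UTC.
    """
    payload = json.loads(raw)
    millis = payload["meta"]["ts_millis"]  # nested, and not a timestamp type
    # Split into whole seconds and milliseconds to avoid float rounding,
    # keeping exactly millisecond precision.
    seconds, ms = divmod(millis, 1000)
    return datetime.fromtimestamp(seconds, tz=timezone.utc).replace(microsecond=ms * 1000)


print(derive_rowtime('{"meta": {"ts_millis": 1577836800123}}'))
```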

+

+Notes:

+

+- A computed column defined on a source table is computed after reading from the source; it can be used in subsequent SELECT query statements.

+- A computed column cannot be the target of an INSERT statement. In an INSERT statement, the schema of the SELECT clause should match the schema of the target table, excluding computed columns.
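The INSERT rule can be pictured as a schema check that simply drops computed columns from the target table before comparing. The Python below is a simplified, hypothetical model: columns are `(name, type, is_computed)` tuples and types are compared by position as plain strings, which ignores any implicit casting the real planner may perform.

```python
from typing import List, Tuple

# (column_name, column_type, is_computed)
Column = Tuple[str, str, bool]


def insertable_schema(table: List[Column]) -> List[Tuple[str, str]]:
    """Return the columns an INSERT must provide: the physical columns only."""
    return [(name, typ) for name, typ, computed in table if not computed]


def check_insert(select_types: List[str], table: List[Column]) -> None:
    """Raise if the SELECT output types do not line up with the physical columns."""
    expected = [typ for _, typ in insertable_schema(table)]
    if select_types != expected:
        raise ValueError(f"SELECT yields {select_types}, target expects {expected}")


orders = [("price", "INT", False), ("qty", "INT", False), ("cost", "INT", True)]
check_insert(["INT", "INT"], orders)  # accepted: the computed column `cost` is excluded
```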

+

+

+**WATERMARK**

+

+The WATERMARK definition is used to define the [event time attribute]({{ site.baseurl }}/dev/table/streaming/time_attributes.html#event-time) in a CREATE TABLE statement.

+

+The “`FOR rowtime_column_name`” clause defines which existing column is marked as the event time attribute. The column must be of type `TIMESTAMP(3)` and a top-level column in the schema, and it can be a computed column.

+

+The “`AS watermark_strategy_expression`” clause defines the watermark generation strategy. It allows an arbitrary non-query expression, which can reference computed columns, to calculate the watermark. The return type of the expression must be `TIMESTAMP(3)`, which represents a timestamp since the Epoch.

+

+The returned watermark will be emitted only if it is non-null and its value is larger than the previously emitted local watermark (to preserve the contract of ascending watermarks). The watermark generation expression is evaluated by the framework for every record.

+The framework will periodically emit the largest generated watermark. If the current watermark is still identical to the previous one, or is null, or the value of the returned watermark is smaller than that of the last emitted one, then no new watermark will be emitted.

+Watermarks are emitted at the interval defined by the [`pipeline.auto-watermark-interval`]({{ site.baseurl }}/ops/config.html#pipeline-auto-watermark-interval) configuration option.

+If the watermark interval is `0ms`, each generated watermark is emitted per record, provided it is non-null and greater than the last emitted one.
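The emission rules above can be simulated in a few lines of Python. This is a simplified model, not Flink's implementation: timestamps are plain epoch milliseconds, and the wall-clock `pipeline.auto-watermark-interval` is replaced by a hypothetical record count `interval` (with `0` meaning per-record emission). The strategy is evaluated for every record, the largest non-null result is tracked, and on each tick it is emitted only if it is larger than the last emitted watermark.

```python
from typing import Callable, Iterable, List, Optional


def emit_watermarks(timestamps: Iterable[int],
                    strategy: Callable[[int], Optional[int]],
                    interval: int) -> List[int]:
    """Simulate periodic watermark emission over event timestamps (ms).

    `interval` counts records between emission ticks, a stand-in for the
    wall-clock watermark interval; 0 means emit per record.
    """
    emitted: List[int] = []
    largest: Optional[int] = None  # largest non-null watermark generated so far
    for i, ts in enumerate(timestamps, start=1):
        wm = strategy(ts)  # the strategy expression is evaluated for every record
        if wm is not None and (largest is None or wm > largest):
            largest = wm
        tick = interval == 0 or i % interval == 0
        # Only a non-null watermark larger than the last emitted one goes out.
        if tick and largest is not None and (not emitted or largest > emitted[-1]):
            emitted.append(largest)
    return emitted


# A 5-second bounded strategy, i.e. rowtime - INTERVAL '5' SECOND, ticking every 2 records.
print(emit_watermarks([10000, 12000, 11000, 15000], lambda ts: ts - 5000, interval=2))
```

With `interval=0` the same input emits 5000, 7000 and 10000; the out-of-order record (11000) produces no new watermark because its result is not larger than the last emitted one.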

+

+For common cases, Flink provides some suggested, easy-to-use ways to define commonly used watermark strategies, such as:

+

+- Strictly ascending timestamps: `WATERMARK FOR rowtime_column AS rowtime_column`

+

+ Emits a watermark of the maximum observed timestamp so far. Rows that have a timestamp larger than the max timestamp are not late.

+

+- Ascending timestamps: `WATERMARK FOR rowtime_column AS rowtime_column - INTERVAL '0.001' SECOND`

+

+ Emits a watermark of the maximum observed timestamp so far minus 1 millisecond (the smallest unit of a watermark is one millisecond). Rows that have a timestamp equal to or larger than the max timestamp are not late.

+

+- Bounded out-of-orderness timestamps: `WATERMARK FOR rowtime_column AS rowtime_column - INTERVAL 'string' timeUnit`

+

+ Emits watermarks which are the maximum observed timestamp minus the specified delay, e.g. `WATERMARK FOR rowtime_column AS rowtime_column - INTERVAL '5' SECOND` is a 5-second delayed watermark strategy.

+

+- Preserves assigned watermarks from source (**Not supported yet**): `WATERMARK FOR rowtime_column AS SYSTEM_WATERMARK()`

+

+ Indicates the watermarks are preserved from the source (e.g. some sources can generate watermarks themselves).
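The three supported strategies differ only in how much they subtract from the maximum observed timestamp. The Python sketch below models each as a fixed delay in milliseconds and treats a row as late when its timestamp is less than or equal to the current watermark, which is the reading consistent with the "not late" statements in the list. The names `max_so_far_watermark`, `STRATEGIES` and `is_late` are hypothetical, and `SYSTEM_WATERMARK()` is left out because it is not supported yet.

```python
from typing import Callable, Dict, List


def max_so_far_watermark(delay_ms: int) -> Callable[[List[int]], int]:
    """Watermark = maximum observed timestamp minus a fixed delay (ms)."""
    return lambda seen: max(seen) - delay_ms


STRATEGIES: Dict[str, Callable[[List[int]], int]] = {
    "strictly_ascending": max_so_far_watermark(0),  # AS rowtime_column
    "ascending": max_so_far_watermark(1),  # AS rowtime_column - INTERVAL '0.001' SECOND
    "bounded_5s": max_so_far_watermark(5000),  # AS rowtime_column - INTERVAL '5' SECOND
}


def is_late(ts: int, watermark: int) -> bool:
    """A row counts as late once the watermark has reached its timestamp."""
    return ts <= watermark


seen = [1000, 3000, 2000]  # observed event timestamps in ms; the max is 3000
for name, strategy in STRATEGIES.items():
    wm = strategy(seen)
    print(name, wm, "row at 3000 late:", is_late(3000, wm))
```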

+

+**PARTITIONED BY**

+

+Partitions the created table by the specified columns. A directory is created for each partition if this table is used as a filesystem sink.
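To picture the "one directory per partition" behavior, the Python sketch below computes the directory a row would land in. The Hive-style `column=value` naming and the function `partition_path` are assumptions for illustration only; this page does not specify the directory naming scheme a filesystem sink uses.

```python
from typing import Dict, List


def partition_path(base: str, partition_cols: List[str], row: Dict[str, object]) -> str:
    """Build the directory a row would be written to, one level per partition column.

    Assumes a Hive-style "column=value" layout; the real naming scheme is
    determined by the filesystem connector, not by this sketch.
    """
    parts = [f"{col}={row[col]}" for col in partition_cols]
    return "/".join([base.rstrip("/")] + parts)


# Hypothetical table partitioned by (dt, hour); `amount` is a regular column.
print(partition_path("/tmp/orders", ["dt", "hour"], {"dt": "2019-12-24", "hour": 4, "amount": 3}))
```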

+

+**WITH OPTIONS**

+

+Table properties used to create a table source/sink. The properties are usually used to find and create the underlying connector.

+

+The key and value of an expression `key1=val1` should both be string literals. See [Connect to External Systems]({{ site.baseurl }}/dev/table/connect.html) for details on all the supported table properties of the different connectors.
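The "both key and value are string literals" rule can be made concrete with a small parser for the body of a `WITH (...)` clause. This is a hypothetical sketch, not Flink's SQL parser: it accepts only single-quoted literals on both sides of `=`, treats a doubled quote (`''`) inside a literal as an escaped quote, and splits options on commas that are outside quotes. The option keys in the example are illustrative.

```python
import re
from typing import Dict, List

# A single-quoted SQL string literal; '' inside it stands for one quote.
_LITERAL = r"'((?:[^']|'')*)'"
_OPTION = re.compile(rf"\s*{_LITERAL}\s*=\s*{_LITERAL}\s*")


def split_top_level(body: str) -> List[str]:
    """Split on commas that are not inside a quoted literal."""
    items, current, in_quote = [], [], False
    for ch in body:
        if ch == "'":
            in_quote = not in_quote  # an escaped '' toggles twice: no net change
        if ch == "," and not in_quote:
            items.append("".join(current))
            current = []
        else:
            current.append(ch)
    items.append("".join(current))
    return items


def parse_with_options(body: str) -> Dict[str, str]:
    """Parse the inside of WITH (...) into a dict of string options."""
    options: Dict[str, str] = {}
    if not body.strip():
        return options
    for item in split_top_level(body):
        match = _OPTION.fullmatch(item)
        if match is None:
            raise ValueError(f"key and value must both be string literals: {item!r}")
        key, value = (g.replace("''", "'") for g in match.groups())
        options[key] = value
    return options


print(parse_with_options("'connector.path' = '/path/to/file', 'note' = 'it''s, fine'"))
```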

+

+**Notes:** The table name can be in one of three formats:

+

+1. `catalog_name.db_name.table_name`: the table is registered into the metastore under the catalog named "catalog_name" and the database named "db_name".

+2. `db_name.table_name`: the table is registered into the current catalog of the executing table environment, under the database named "db_name".

+3. `table_name`: the table is registered into the current catalog and database of the executing table environment.
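The three naming rules amount to filling in the missing leading parts from the current catalog and database. The Python sketch below shows that resolution; `ObjectPath` and `resolve_table_name` are hypothetical names, and the naive split on `.` ignores quoted identifiers that themselves contain dots. `default_catalog` and `default_database` are used as example current values.

```python
from typing import NamedTuple


class ObjectPath(NamedTuple):
    catalog: str
    database: str
    table: str


def resolve_table_name(name: str, current_catalog: str, current_database: str) -> ObjectPath:
    """Expand a 1-, 2- or 3-part table name using the current catalog and database."""
    parts = name.split(".")  # naive: does not handle quoted identifiers containing dots
    if len(parts) == 3:
        return ObjectPath(*parts)
    if len(parts) == 2:
        return ObjectPath(current_catalog, parts[0], parts[1])
    if len(parts) == 1:
        return ObjectPath(current_catalog, current_database, parts[0])
    raise ValueError(f"expected at most three name parts, got {name!r}")


print(resolve_table_name("mydb.Orders", "default_catalog", "default_database"))
```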

+

+**Notes:** A table registered with the `CREATE TABLE` statement can be used as both a table source and a table sink. Whether it is used as a source or a sink cannot be determined until it is referenced in DML statements.

+

+{% top %}

+

+

+## CREATE VIEW

+

+*TODO: should add descriptions.*

+

+## CREATE FUNCTION

+

+*TODO: should add descriptions.*

Review comment:

Please either fill these in or remove the sections entirely and re-add them in a follow up PR.

[GitHub] [flink] danny0405 commented on issue #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

danny0405 commented on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-569184987

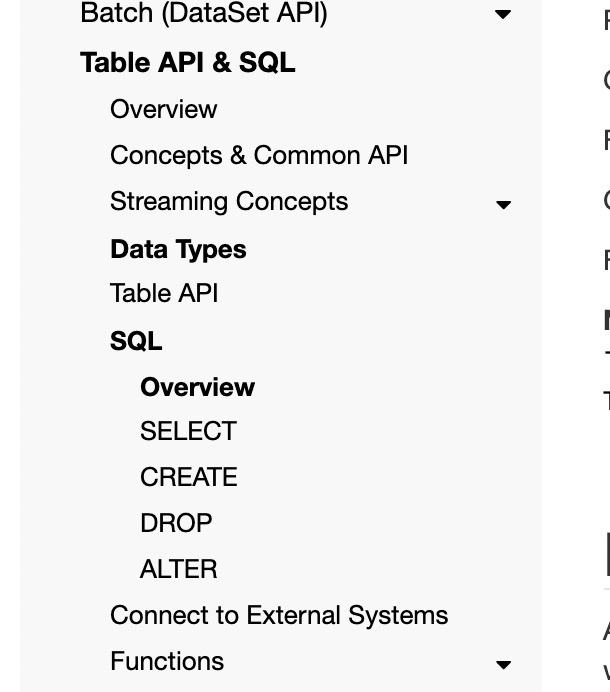

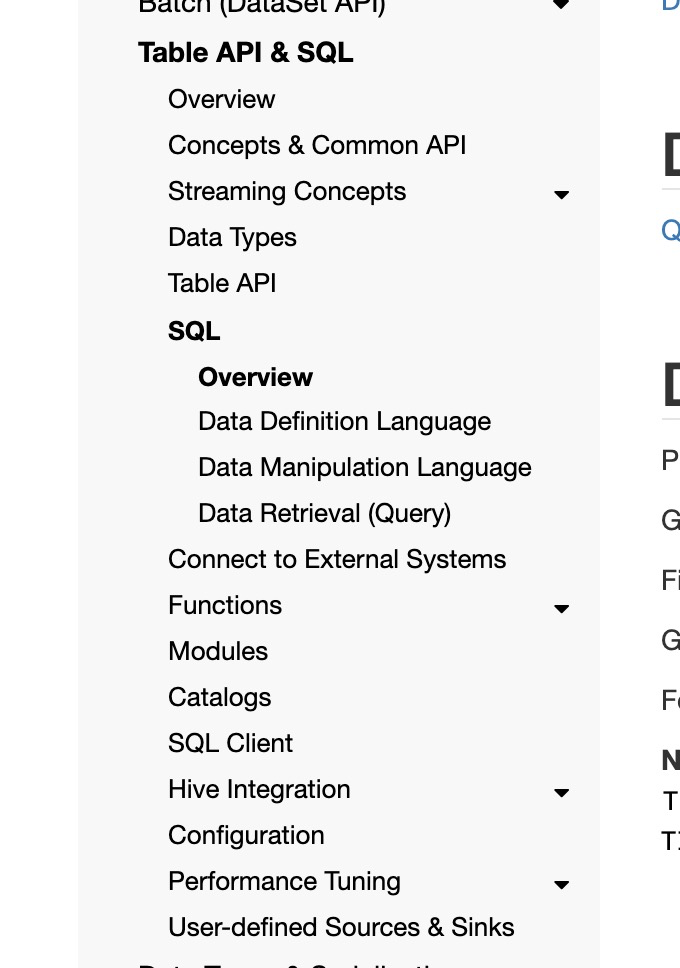

Thanks for refactoring this, the page looks much better now. I would suggest splitting the page by specific clause, e.g. `select` as one page and `insert` as another, with a navigation button on the left side for each of them. We do not really need the `DML` or `DDL` terminology, which is hard to understand.

[GitHub] [flink] flinkbot edited a comment on issue #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494

## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

* 422e61ea5836e54ce771d7ddd2004cfeb9b951ff Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142361200) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3926)

* 85cdf8111bffb7ea2bffef7d7dba18aa126c254b Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142377471) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3940)

* 0015b63f086202e706c2248581e9828abd2589ba Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142436142) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3950)

* a7b6ade38aaea781df30a8e5acf98c6c913731a3 Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142562117) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3968)

* ed1dd747484047d882a18b28b4cfbcd181e05828 UNKNOWN

* 486586a05e1d3a0e09e1e2b6f3f0b6d728bc58f6 Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142619003) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3976)

[GitHub] [flink] flinkbot edited a comment on issue #10669:

[FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494

## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

* 422e61ea5836e54ce771d7ddd2004cfeb9b951ff Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142361200) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3926)

* 85cdf8111bffb7ea2bffef7d7dba18aa126c254b Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142377471) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3940)

* 0015b63f086202e706c2248581e9828abd2589ba Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142436142) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3950)

* a7b6ade38aaea781df30a8e5acf98c6c913731a3 Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142562117) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3968)

* ed1dd747484047d882a18b28b4cfbcd181e05828 UNKNOWN

<details>

<summary>Bot commands</summary>

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build

- `@flinkbot run azure` re-run the last Azure build

</details>


[GitHub] [flink] flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494

<!--

Meta data

Hash:aac6bab3b96e3d721234030ce35e24006b6bf7c5 Status:FAILURE URL:https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870 TriggerType:PUSH TriggerID:aac6bab3b96e3d721234030ce35e24006b6bf7c5

Hash:aac6bab3b96e3d721234030ce35e24006b6bf7c5 Status:FAILURE URL:https://travis-ci.com/flink-ci/flink/builds/142187553 TriggerType:PUSH TriggerID:aac6bab3b96e3d721234030ce35e24006b6bf7c5

Hash:422e61ea5836e54ce771d7ddd2004cfeb9b951ff Status:SUCCESS URL:https://travis-ci.com/flink-ci/flink/builds/142361200 TriggerType:PUSH TriggerID:422e61ea5836e54ce771d7ddd2004cfeb9b951ff

Hash:422e61ea5836e54ce771d7ddd2004cfeb9b951ff Status:SUCCESS URL:https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3926 TriggerType:PUSH TriggerID:422e61ea5836e54ce771d7ddd2004cfeb9b951ff

-->

## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [FAILURE](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [FAILURE](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

* 422e61ea5836e54ce771d7ddd2004cfeb9b951ff Travis: [SUCCESS](https://travis-ci.com/flink-ci/flink/builds/142361200) Azure: [SUCCESS](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3926)

<details>

<summary>Bot commands</summary>

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build

- `@flinkbot run azure` re-run the last Azure build

</details>


[GitHub] [flink] flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

Posted by GitBox <gi...@apache.org>.

flinkbot edited a comment on issue #10669: [FLINK-15192][docs][table] Split 'SQL' page into multiple sub pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-568653494

<!--

Meta data

Hash:aac6bab3b96e3d721234030ce35e24006b6bf7c5 Status:PENDING URL:https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870 TriggerType:PUSH TriggerID:aac6bab3b96e3d721234030ce35e24006b6bf7c5

Hash:aac6bab3b96e3d721234030ce35e24006b6bf7c5 Status:PENDING URL:https://travis-ci.com/flink-ci/flink/builds/142187553 TriggerType:PUSH TriggerID:aac6bab3b96e3d721234030ce35e24006b6bf7c5

-->

## CI report:

* aac6bab3b96e3d721234030ce35e24006b6bf7c5 Travis: [PENDING](https://travis-ci.com/flink-ci/flink/builds/142187553) Azure: [PENDING](https://dev.azure.com/rmetzger/5bd3ef0a-4359-41af-abca-811b04098d2e/_build/results?buildId=3870)

<details>

<summary>Bot commands</summary>

The @flinkbot bot supports the following commands:

- `@flinkbot run travis` re-run the last Travis build

- `@flinkbot run azure` re-run the last Azure build

</details>


[GitHub] [flink] sjwiesman commented on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

sjwiesman commented on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-569356922

Please add a redirect from the old sql page to the new overview page.


[GitHub] [flink] wuchong commented on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

wuchong commented on issue #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#issuecomment-569213420

Hi @danny0405, thanks for the suggestion. I agree with you. Many users don't understand what DML and DDL are, and they don't need to know that at all. I restructured the pages again into `SELECT`, `CREATE`, `DROP`, and `ALTER` pages so that users can quickly find what they need from the title. Please have another look, @danny0405, @bowenli86, @JingsongLi.


[GitHub] [flink] sjwiesman commented on a change in pull request #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

Posted by GitBox <gi...@apache.org>.

sjwiesman commented on a change in pull request #10669: [FLINK-15192][docs][table] Restructure "SQL" pages for better readability

URL: https://github.com/apache/flink/pull/10669#discussion_r361867385

##########

File path: docs/dev/table/sql/queries.md

##########

@@ -22,20 +22,18 @@ specific language governing permissions and limitations

under the License.

-->

-This is a complete list of Data Definition Language (DDL) and Data Manipulation Language (DML) constructs supported in Flink.

* This will be replaced by the TOC

{:toc}

-## Query

-SQL queries are specified with the `sqlQuery()` method of the `TableEnvironment`. The method returns the result of the SQL query as a `Table`. A `Table` can be used in [subsequent SQL and Table API queries](common.html#mixing-table-api-and-sql), be [converted into a DataSet or DataStream](common.html#integration-with-datastream-and-dataset-api), or [written to a TableSink](common.html#emit-a-table)). SQL and Table API queries can be seamlessly mixed and are holistically optimized and translated into a single program.

+SELECT queries are specified with the `sqlQuery()` method of the `TableEnvironment`. The method returns the result of the SELECT query as a `Table`. A `Table` can be used in [subsequent SQL and Table API queries]({{ site.baseurl }}/dev/table/common.html#mixing-table-api-and-sql), be [converted into a DataSet or DataStream]({{ site.baseurl }}/dev/table/common.html#integration-with-datastream-and-dataset-api), or [written to a TableSink]({{ site.baseurl }}/dev/table/common.html#emit-a-table). SQL and Table API queries can be seamlessly mixed and are holistically optimized and translated into a single program.

-In order to access a table in a SQL query, it must be [registered in the TableEnvironment](common.html#register-tables-in-the-catalog). A table can be registered from a [TableSource](common.html#register-a-tablesource), [Table](common.html#register-a-table), [CREATE TABLE statement](#create-table), [DataStream, or DataSet](common.html#register-a-datastream-or-dataset-as-table). Alternatively, users can also [register catalogs in a TableEnvironment](catalogs.html) to specify the location of the data sources.

+In order to access a table in a SQL query, it must be [registered in the TableEnvironment]({{ site.baseurl }}/dev/table/common.html#register-tables-in-the-catalog). A table can be registered from a [TableSource]({{ site.baseurl }}/dev/table/common.html#register-a-tablesource), [Table]({{ site.baseurl }}/dev/table/common.html#register-a-table), [CREATE TABLE statement](#create-table), [DataStream, or DataSet]({{ site.baseurl }}/dev/table/common.html#register-a-datastream-or-dataset-as-table). Alternatively, users can also [register catalogs in a TableEnvironment]({{ site.baseurl }}/dev/table/catalogs.html) to specify the location of the data sources.

For convenience `Table.toString()` automatically registers the table under a unique name in its `TableEnvironment` and returns the name. Hence, `Table` objects can be directly inlined into SQL queries (by string concatenation) as shown in the examples below.
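To illustrate the `Table.toString()` convenience described in the diff above, here is a minimal Python sketch. It is a toy model, not Flink's API: `ToyTableEnvironment`, `ToyTable`, `from_rows`, `register_unique`, `sql_query`, and the `UnnamedTable$N` naming scheme are all hypothetical, and the "parser" only accepts `SELECT * FROM <name>`. It shows why inlining works: converting a table to a string registers it in the environment's catalog under a unique name, so the query text can resolve that name, while a name that was never registered is rejected.

```python
import itertools
import re


class ToyTable:
    """Hypothetical table object; it remembers the environment that created it."""

    def __init__(self, env, rows):
        self.env = env
        self.rows = rows
        self._name = None  # assigned lazily the first time str() is called

    def __str__(self):
        # Modeled on the documented behavior: converting the table to a string
        # registers it under a unique name (only once) and returns that name.
        if self._name is None:
            self._name = self.env.register_unique(self)
        return self._name


class ToyTableEnvironment:
    """Hypothetical stand-in for a TableEnvironment's catalog (not Flink's API)."""

    def __init__(self):
        self.catalog = {}  # table name -> ToyTable
        self._ids = itertools.count()

    def from_rows(self, rows):
        # Creates a table without registering it in the catalog.
        return ToyTable(self, list(rows))

    def register_unique(self, table):
        name = f"UnnamedTable${next(self._ids)}"
        self.catalog[name] = table
        return name

    def sql_query(self, query):
        # Toy "parser": only SELECT * FROM <name> is understood.
        match = re.fullmatch(r"SELECT \* FROM (\S+)", query.strip())
        if match is None:
            raise ValueError("toy parser only supports: SELECT * FROM <name>")
        name = match.group(1)
        if name not in self.catalog:
            # A query can only reference tables registered in the environment.
            raise LookupError(f"table {name!r} is not registered")
        # The result is again a table that can feed further queries.
        return ToyTable(self, list(self.catalog[name].rows))


if __name__ == "__main__":
    env = ToyTableEnvironment()
    orders = env.from_rows([("apple", 3), ("pear", 5)])
    query = f"SELECT * FROM {orders}"  # str(orders) runs here and registers it
    print(query)  # SELECT * FROM UnnamedTable$0
    print(env.sql_query(query).rows)  # [('apple', 3), ('pear', 5)]
```

In Java, `"SELECT * FROM " + table` calls `toString()` implicitly; in this Python toy the f-string plays that role, since formatting an object without a format spec falls back to `str()`.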