
Posted to notifications@accumulo.apache.org by GitBox <gi...@apache.org> on 2022/04/29 13:19:47 UTC

[GitHub] [accumulo] dlmarion opened a new pull request, #2665: Eventually Consistent scans / ScanServer feature

dlmarion opened a new pull request, #2665:

URL: https://github.com/apache/accumulo/pull/2665

This commit builds on the changes added in prior commits 8be98d6, 50b9267f, and 39bc7a0 to create a new server component that implements TabletHostingServer and uses the TabletScanClientService Thrift API to serve client scan requests on Tablets outside the TabletServer. To accomplish this, the new server (ScanServer) constructs a new type of tablet, called a SnapshotTablet, which is composed of the files listed in the metadata table and not the in-memory mutations that the TabletServer might contain. The Accumulo client has been modified to allow the user to set a flag on scans to make them eventually consistent, meaning that the user is okay with scanning data that may not be immediately consistent with the version of the Tablet that is being hosted by the TabletServer.

This feature is optional and experimental.

Closes #2411

Co-authored-by: Keith Turner <kt...@apache.org>
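To make the consistency trade-off concrete, here is a small, self-contained Java model. It is an illustration under simplifying assumptions, not the SnapshotTablet implementation: HostedTabletModel and its methods are hypothetical, flush() stands in for a minor compaction, and keys, timestamps, deletes and visibilities are ignored.

```java
import java.util.TreeMap;

/**
 * Hypothetical model (not Accumulo code) of the two read paths described above.
 * A hosted tablet answers scans from its files plus in-memory mutations; a snapshot
 * built from the metadata's file list sees only what has already been written to files.
 */
final class HostedTabletModel {
  private final TreeMap<String, String> files = new TreeMap<>();    // flushed data
  private final TreeMap<String, String> inMemory = new TreeMap<>(); // unflushed mutations

  void write(String key, String value) {
    inMemory.put(key, value);
  }

  /** Stands in for a minor compaction: in-memory data is written out to files. */
  void flush() {
    files.putAll(inMemory);
    inMemory.clear();
  }

  /** Immediately consistent view: files overlaid with newer in-memory mutations. */
  TreeMap<String, String> scanImmediate() {
    TreeMap<String, String> view = new TreeMap<>(files);
    view.putAll(inMemory);
    return view;
  }

  /** Eventually consistent, snapshot-style view: files only. */
  TreeMap<String, String> scanSnapshot() {
    return new TreeMap<>(files);
  }
}
```

After write("b", "2") and before the next flush, an immediate scan returns both keys while the snapshot view returns only "a"; once the data reaches a file, the two views agree. That lag is what "eventually consistent" means for these scans.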

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@accumulo.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [accumulo] dlmarion commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r939135839

##########

server/tserver/src/main/java/org/apache/accumulo/tserver/ScanServer.java:

##########

@@ -0,0 +1,987 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * https://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+package org.apache.accumulo.tserver;

+

+import static java.nio.charset.StandardCharsets.UTF_8;

+import static org.apache.accumulo.fate.util.UtilWaitThread.sleepUninterruptibly;

+

+import java.io.IOException;

+import java.io.UncheckedIOException;

+import java.net.UnknownHostException;

+import java.nio.ByteBuffer;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.HashSet;

+import java.util.List;

+import java.util.Map;

+import java.util.Map.Entry;

+import java.util.Objects;

+import java.util.Set;

+import java.util.UUID;

+import java.util.concurrent.ConcurrentHashMap;

+import java.util.concurrent.ConcurrentSkipListSet;

+import java.util.concurrent.TimeUnit;

+import java.util.concurrent.atomic.AtomicLong;

+import java.util.concurrent.locks.Condition;

+import java.util.concurrent.locks.ReentrantReadWriteLock;

+import java.util.stream.Collectors;

+

+import org.apache.accumulo.core.Constants;

+import org.apache.accumulo.core.client.AccumuloException;

+import org.apache.accumulo.core.clientImpl.thrift.ThriftSecurityException;

+import org.apache.accumulo.core.conf.AccumuloConfiguration;

+import org.apache.accumulo.core.conf.Property;

+import org.apache.accumulo.core.dataImpl.KeyExtent;

+import org.apache.accumulo.core.dataImpl.thrift.InitialMultiScan;

+import org.apache.accumulo.core.dataImpl.thrift.InitialScan;

+import org.apache.accumulo.core.dataImpl.thrift.IterInfo;

+import org.apache.accumulo.core.dataImpl.thrift.MultiScanResult;

+import org.apache.accumulo.core.dataImpl.thrift.ScanResult;

+import org.apache.accumulo.core.dataImpl.thrift.TColumn;

+import org.apache.accumulo.core.dataImpl.thrift.TKeyExtent;

+import org.apache.accumulo.core.dataImpl.thrift.TRange;

+import org.apache.accumulo.core.file.blockfile.cache.impl.BlockCacheConfiguration;

+import org.apache.accumulo.core.metadata.ScanServerRefTabletFile;

+import org.apache.accumulo.core.metadata.StoredTabletFile;

+import org.apache.accumulo.core.metadata.schema.Ample;

+import org.apache.accumulo.core.metadata.schema.TabletMetadata;

+import org.apache.accumulo.core.metrics.MetricsUtil;

+import org.apache.accumulo.core.securityImpl.thrift.TCredentials;

+import org.apache.accumulo.core.spi.scan.ScanServerSelector;

+import org.apache.accumulo.core.tabletserver.thrift.ActiveScan;

+import org.apache.accumulo.core.tabletserver.thrift.NoSuchScanIDException;

+import org.apache.accumulo.core.tabletserver.thrift.NotServingTabletException;

+import org.apache.accumulo.core.tabletserver.thrift.TSampleNotPresentException;

+import org.apache.accumulo.core.tabletserver.thrift.TSamplerConfiguration;

+import org.apache.accumulo.core.tabletserver.thrift.TabletScanClientService;

+import org.apache.accumulo.core.tabletserver.thrift.TooManyFilesException;

+import org.apache.accumulo.core.trace.thrift.TInfo;

+import org.apache.accumulo.core.util.Halt;

+import org.apache.accumulo.core.util.HostAndPort;

+import org.apache.accumulo.core.util.threads.ThreadPools;

+import org.apache.accumulo.fate.util.UtilWaitThread;

+import org.apache.accumulo.fate.zookeeper.ServiceLock;

+import org.apache.accumulo.fate.zookeeper.ServiceLock.LockLossReason;

+import org.apache.accumulo.fate.zookeeper.ServiceLock.LockWatcher;

+import org.apache.accumulo.fate.zookeeper.ZooCache;

+import org.apache.accumulo.fate.zookeeper.ZooReaderWriter;

+import org.apache.accumulo.fate.zookeeper.ZooUtil.NodeExistsPolicy;

+import org.apache.accumulo.server.AbstractServer;

+import org.apache.accumulo.server.GarbageCollectionLogger;

+import org.apache.accumulo.server.ServerContext;

+import org.apache.accumulo.server.ServerOpts;

+import org.apache.accumulo.server.conf.TableConfiguration;

+import org.apache.accumulo.server.fs.VolumeManager;

+import org.apache.accumulo.server.rpc.ServerAddress;

+import org.apache.accumulo.server.rpc.TServerUtils;

+import org.apache.accumulo.server.rpc.ThriftProcessorTypes;

+import org.apache.accumulo.server.security.SecurityUtil;

+import org.apache.accumulo.tserver.TabletServerResourceManager.TabletResourceManager;

+import org.apache.accumulo.tserver.metrics.TabletServerScanMetrics;

+import org.apache.accumulo.tserver.session.MultiScanSession;

+import org.apache.accumulo.tserver.session.ScanSession;

+import org.apache.accumulo.tserver.session.ScanSession.TabletResolver;

+import org.apache.accumulo.tserver.session.Session;

+import org.apache.accumulo.tserver.session.SessionManager;

+import org.apache.accumulo.tserver.session.SingleScanSession;

+import org.apache.accumulo.tserver.tablet.SnapshotTablet;

+import org.apache.accumulo.tserver.tablet.Tablet;

+import org.apache.accumulo.tserver.tablet.TabletBase;

+import org.apache.thrift.TException;

+import org.apache.thrift.TProcessor;

+import org.apache.zookeeper.KeeperException;

+import org.checkerframework.checker.nullness.qual.Nullable;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import com.beust.jcommander.Parameter;

+import com.github.benmanes.caffeine.cache.CacheLoader;

+import com.github.benmanes.caffeine.cache.Caffeine;

+import com.github.benmanes.caffeine.cache.LoadingCache;

+import com.github.benmanes.caffeine.cache.Scheduler;

+import com.google.common.annotations.VisibleForTesting;

+import com.google.common.base.Preconditions;

+import com.google.common.collect.Sets;

+

+public class ScanServer extends AbstractServer

+ implements TabletScanClientService.Iface, TabletHostingServer {

+

+ public static class ScanServerOpts extends ServerOpts {

+ @Parameter(required = false, names = {"-g", "--group"},

+ description = "Optional group name that will be made available to the ScanServerSelector client plugin. If not specified, it will be set to '"

+ + ScanServerSelector.DEFAULT_SCAN_SERVER_GROUP_NAME

+ + "'. Groups support at least two use cases: dedicating resources to scans and/or using different hardware for scans.")

+ private String groupName = ScanServerSelector.DEFAULT_SCAN_SERVER_GROUP_NAME;

+

+ public String getGroupName() {

+ return groupName;

+ }

+ }

+

+ private static final Logger log = LoggerFactory.getLogger(ScanServer.class);

+

+ private static class TabletMetadataLoader implements CacheLoader<KeyExtent,TabletMetadata> {

+

+ private final Ample ample;

+

+ private TabletMetadataLoader(Ample ample) {

+ this.ample = ample;

+ }

+

+ @Override

+ public @Nullable TabletMetadata load(KeyExtent keyExtent) {

+ long t1 = System.currentTimeMillis();

+ var tm = ample.readTablet(keyExtent);

+ long t2 = System.currentTimeMillis();

+ LOG.trace("Read metadata for 1 tablet in {} ms", t2 - t1);

+ return tm;

+ }

+

+ @Override

+ @SuppressWarnings("unchecked")

Review Comment:

Resolved in f437fed
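The diff wraps Ample.readTablet in a Caffeine CacheLoader, so repeated scans of the same tablet can reuse metadata instead of re-reading it. The eviction policy is not visible in this excerpt (it imports Caffeine's Scheduler, which suggests time-based expiry), so the sketch below assumes a fixed expire-after-write TTL. It uses only the JDK; ExpiringLoadingCache and its clock parameter are hypothetical, not Accumulo or Caffeine API.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

/**
 * Hypothetical JDK-only stand-in for a Caffeine LoadingCache with expire-after-write,
 * used to illustrate caching tablet metadata reads. Not Accumulo or Caffeine code.
 */
final class ExpiringLoadingCache<K, V> {
  private static final class Entry<T> {
    final T value;
    final long loadedAtMillis;

    Entry(T value, long loadedAtMillis) {
      this.value = value;
      this.loadedAtMillis = loadedAtMillis;
    }
  }

  private final ConcurrentHashMap<K, Entry<V>> map = new ConcurrentHashMap<>();
  private final Function<K, V> loader; // plays the role of ample.readTablet(extent)
  private final long ttlMillis;
  private final LongSupplier clock; // injectable so expiry can be tested without sleeping

  ExpiringLoadingCache(Function<K, V> loader, long ttlMillis, LongSupplier clock) {
    this.loader = loader;
    this.ttlMillis = ttlMillis;
    this.clock = clock;
  }

  /** Returns the cached value, reloading it if it is missing or older than the TTL. */
  V get(K key) {
    // compute() is atomic per key, so concurrent callers for one key do not load it twice.
    Entry<V> entry = map.compute(key, (k, old) -> {
      long now = clock.getAsLong();
      if (old != null && now - old.loadedAtMillis < ttlMillis) {
        return old;
      }
      return new Entry<>(loader.apply(k), now);
    });
    return entry.value;
  }
}
```

Under the TTL assumption, a ScanServer could keep using a cached file list for up to the TTL after the tablet's files change in the metadata table, which adds to the staleness an eventually consistent scan may observe.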


[GitHub] [accumulo] dlmarion commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r939180215

##########

core/src/main/java/org/apache/accumulo/core/spi/scan/DefaultScanServerSelector.java:

##########

@@ -0,0 +1,415 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * https://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+package org.apache.accumulo.core.spi.scan;

+

+import static java.nio.charset.StandardCharsets.UTF_8;

+

+import java.lang.reflect.Type;

+import java.security.SecureRandom;

+import java.time.Duration;

+import java.util.ArrayList;

+import java.util.Collection;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+import java.util.function.Supplier;

+

+import org.apache.accumulo.core.conf.ConfigurationTypeHelper;

+import org.apache.accumulo.core.data.TabletId;

+

+import com.google.common.base.Preconditions;

+import com.google.common.base.Suppliers;

+import com.google.common.collect.Sets;

+import com.google.common.hash.HashCode;

+import com.google.common.hash.Hashing;

+import com.google.gson.Gson;

+import com.google.gson.reflect.TypeToken;

+

+import edu.umd.cs.findbugs.annotations.SuppressFBWarnings;

+

+/**

+ * The default Accumulo selector for scan servers. This dispatcher will:

+ *

+ * <ul>

+ * <li>Hash each tablet to a per attempt configurable number of scan servers and then randomly

+ * choose one of those scan servers. Using hashing allows different clients to select the same scan

+ * servers for a given tablet.</li>

+ * <li>Use a per attempt configurable busy timeout.</li>

+ * </ul>

+ *

+ * <p>

+ * This class accepts a single configuration that has a json value. To configure this class, set

+ * {@code scan.server.selector.opts.profiles=<json>} in the accumulo client configuration along with

+ * the config for the class. The following is the default configuration value.

+ * </p>

+ * <p>

+ * {@value DefaultScanServerSelector#PROFILES_DEFAULT}

+ * </p>

+ *

+ * The json is structured as a list of profiles, with each profile having the following fields.

+ *

+ * <ul>

+ * <li><b>isDefault : </b> A boolean that specifies whether this is the default profile. One and

+ * only one profile must set this to true.</li>

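+ * <li><b>maxBusyTimeout : </b> The maximum busy timeout to use. The busy timeout from the last

+ * attempt configuration grows exponentially up to this max.</li>

+ * <li><b>busyTimeoutMultiplier : </b> The factor by which the busy timeout is multiplied for each

+ * attempt after the last attempt configuration, until maxBusyTimeout is reached.</li>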

+ * <li><b>scanTypeActivations : </b> A list of scan types that will activate this profile. Scan

+ * types are specified by setting {@code scan_type=<scan_type>} as an execution hint on the scanner. See

+ * {@link org.apache.accumulo.core.client.ScannerBase#setExecutionHints(Map)}</li>

+ * <li><b>group : </b> Scan servers can be started with an optional group. If specified, this option

+ * will limit the scan servers used to those that were started with this group name. If not

+ * specified, the set of scan servers that did not specify a group will be used. Grouping scan

+ * servers supports at least two use cases. First, groups can be used to dedicate resources for

+ * certain scans. Second, groups can be used to have different hardware/VM types for scans; for

+ * example, some scans could use expensive high-memory VMs and others cheaper burstable VMs.</li>

+ * <li><b>attemptPlans : </b> A list of configurations, one for each scan attempt. Each list object

+ * has the following fields:

+ * <ul>

+ * <li><b>servers : </b> The number of servers to randomly choose from for this attempt.</li>

+ * <li><b>busyTimeout : </b> The busy timeout to use for this attempt.</li>

+ * <li><b>salt : </b> An optional string to append when hashing the tablet. When this is set

+ * differently for different attempts, the sets of servers chosen from may be disjoint. When it

+ * is not set or is the same, the smaller sets of servers are subsets of the larger ones.</li>

+ * </ul>

+ * </li>

+ * </ul>

+ *

+ * <p>

+ * Below is an example configuration with two profiles, one is the default and the other is used

+ * when the scan execution hint {@code scan_type=slow} is set.

+ * </p>

+ *

+ * <pre>

+ * [

+ * {

+ * "isDefault":true,

+ * "maxBusyTimeout":"5m",

+ * "busyTimeoutMultiplier":4,

+ * "attemptPlans":[

+ * {"servers":"3", "busyTimeout":"33ms"},

+ * {"servers":"100%", "busyTimeout":"100ms"}

+ * ]

+ * },

+ * {

+ * "scanTypeActivations":["slow"],

+ * "maxBusyTimeout":"20m",

+ * "busyTimeoutMultiplier":8,

+ * "group":"lowcost",

+ * "attemptPlans":[

+ * {"servers":"1", "busyTimeout":"10s"},

+ * {"servers":"3", "busyTimeout":"30s","salt":"42"},

+ * {"servers":"9", "busyTimeout":"60s","salt":"84"}

+ * ]

+ * }

+ * ]

+ * </pre>

+ *

+ * <p>

+ * For the default profile in the example, it will start off by choosing randomly from 3 scan servers

+ * based on a hash of the tablet with no salt. For the first attempt it will use a busy timeout of

+ * 33 milliseconds. If the first attempt returns with busy, then it will randomly choose from 100%

+ * (all) of the servers for the second attempt and use a busy timeout of 100ms. For subsequent attempts it

+ * will keep choosing from all servers and start multiplying the busy timeout by 4 until the max

+ * busy timeout of 5 minutes is reached.

+ * </p>

+ *

+ * <p>

+ * For the profile activated by {@code scan_type=slow}, it starts off by choosing randomly from 1 scan

+ * server based on a hash of the tablet with no salt and a busy timeout of 10s. The second attempt

+ * will choose from 3 scan servers based on a hash of the tablet plus the salt {@literal 42}, with a busy timeout of 30s.

+ * Without the salt, the single scan server from the first attempt would always be included in the

+ * set of 3. With the salt, the single scan server from the first attempt may not be included. The

+ * third attempt will choose a scan server from 9 using the salt {@literal 84} and a busy timeout of

+ * 60s. The different salt means the set of servers that attempts 2 and 3 choose from may be

+ * disjoint. Attempts 4 and greater will continue to choose from the same 9 servers as attempt 3 and

+ * will keep increasing the busy timeout by multiplying it by 8 until the maximum of 20 minutes is

+ * reached. For this profile, it will choose from scan servers in the group {@literal lowcost}.

+ * </p>

+ */

+public class DefaultScanServerSelector implements ScanServerSelector {

Review Comment:

Addressed in 9ec7f5f. Renamed class to ConfigurableScanServerSelector. I wasn't sure what to call the strategy.
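The javadoc describes the selection strategy in prose only. The Java sketch below is one way to realize it with the JDK alone; it is not the class's actual code, and SelectorSketch and its methods are hypothetical. The consecutive-window scheme is an assumption, chosen because it reproduces the documented property that unsalted attempts choose from nested sets (the single server of attempt 1 is always among the 3 of attempt 2), while a different salt moves the window and can make the sets disjoint. The busy-timeout helper assumes growth starts with the first attempt after the last plan, which is one reading of the worked examples.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/**
 * Hypothetical sketch of the selection strategy described in the javadoc above.
 * Not the Accumulo implementation; names and the hashing scheme are assumptions.
 */
final class SelectorSketch {

  /**
   * Servers eligible for one attempt: numServers consecutive entries of a consistently
   * ordered server list, starting at an index derived from hash(tablet + salt).
   * Assumes orderedServers is non-empty and sorted identically on every client.
   */
  static List<String> candidates(List<String> orderedServers, String tablet, String salt,
      int numServers) {
    int size = orderedServers.size();
    int n = Math.min(numServers, size); // a count >= size plays the role of "100%"
    // String.hashCode() is specified by the JDK, so all clients compute the same start.
    int start = Math.floorMod((tablet + salt).hashCode(), size);
    List<String> result = new ArrayList<>(n);
    for (int i = 0; i < n; i++) {
      result.add(orderedServers.get((start + i) % size));
    }
    return result;
  }

  /** Picks one candidate uniformly at random. */
  static String choose(List<String> orderedServers, String tablet, String salt, int numServers,
      Random random) {
    List<String> c = candidates(orderedServers, tablet, salt, numServers);
    return c.get(random.nextInt(c.size()));
  }

  /**
   * Busy timeout for a 0-based attempt: the plan's value while plans remain, then the last
   * plan's value multiplied once per extra attempt, capped at maxMillis.
   * Assumes planTimeouts is non-empty.
   */
  static long busyTimeoutMillis(long[] planTimeouts, int attempt, long multiplier,
      long maxMillis) {
    if (attempt < planTimeouts.length) {
      return planTimeouts[attempt];
    }
    long timeout = planTimeouts[planTimeouts.length - 1];
    for (int i = planTimeouts.length - 1; i < attempt && timeout < maxMillis; i++) {
      timeout *= multiplier; // stop multiplying once the cap is reached to avoid overflow
    }
    return Math.min(timeout, maxMillis);
  }
}
```

For the default profile, busyTimeoutMillis(new long[] {33, 100}, attempt, 4, 300_000) yields 33, 100, 400, 1600, ... and reaches the 300,000 ms (5 minute) cap at attempt 7. Parsing the JSON profiles and percentage strings is omitted.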


[GitHub] [accumulo] dlmarion commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r939179808

##########

core/src/main/java/org/apache/accumulo/core/clientImpl/ScannerIterator.java:

##########

@@ -84,7 +85,8 @@ public class ScannerIterator implements Iterator<Entry<Key,Value>> {

new ScanState(context, tableId, authorizations, new Range(range), options.fetchedColumns,

size, options.serverSideIteratorList, options.serverSideIteratorOptions, isolated,

readaheadThreshold, options.getSamplerConfiguration(), options.batchTimeOut,

- options.classLoaderContext, options.executionHints);

+ options.classLoaderContext, options.executionHints,

+ (options.getConsistencyLevel().equals(ConsistencyLevel.EVENTUAL)) ? true : false);

Review Comment:

Addressed in 9ec7f5f
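The review comment on this hunk is not quoted, so what 9ec7f5f changed is not shown here. Independently of that, the added argument uses the pattern `cond ? true : false`, which is equivalent to `cond`. A minimal sketch, with a hypothetical ReadConsistency enum standing in for ConsistencyLevel:

```java
/** Hypothetical stand-in for the scanner's consistency option; not the Accumulo enum. */
enum ReadConsistency { IMMEDIATE, EVENTUAL }

final class ConsistencyFlag {
  /**
   * Same result as {@code level.equals(EVENTUAL) ? true : false} for non-null levels, without
   * the redundant ternary. Comparing enum constants with == also returns false instead of
   * throwing a NullPointerException when level is null.
   */
  static boolean runOnScanServer(ReadConsistency level) {
    return level == ReadConsistency.EVENTUAL;
  }
}
```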


[GitHub] [accumulo] dlmarion commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r940240058

##########

core/src/main/java/org/apache/accumulo/core/clientImpl/ClientContext.java:

##########

@@ -332,6 +392,51 @@ public synchronized BatchWriterConfig getBatchWriterConfig() {

return batchWriterConfig;

}

+ public static class ScanServerInfo {

+ public final UUID uuid;

+ public final String group;

+

+ public ScanServerInfo(UUID uuid, String group) {

+ this.uuid = uuid;

+ this.group = group;

+ }

+

+ }

Review Comment:

Removed class and replaced with Pair in d416900f14ac0c0e88af37aff49cb3b97a6a8173


[GitHub] [accumulo] dlmarion commented on pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#issuecomment-1152237400

I kicked off a full build with ITs and the only ITs that failed are the same ones that are currently failing in `main`.


[GitHub] [accumulo] ctubbsii commented on pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

ctubbsii commented on PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#issuecomment-1154733877

@keith-turner wrote:

> > It's not the scan execution hints that are modifying the behavior... it's the configured dispatcher. And, the scan hints are still not affecting the data returned... it's the server that it was dispatched to that is doing that.

>

> If scan hints+config can change the behavior of a scanner from immediate to eventual I think this could lead to disaster. Consider something like the Accumulo GC algorithm where its correctness relies on only using scanners with immediate consistency. Consider the following situation.

>

> * Person A writes a scanner that requires immediate consistency and sets a scan hint with intention of changing cache behavior to be opportunistic.

> * Later Person B changes Accumulo configuration such that it causes the scan hints set by person A to now make the scanner coded by person A be eventually consistent.

I think it's an exaggeration to call this a disaster. Scan hints controlling a specific configured dispatcher's behavior should already be documented in that dispatcher's documentation and be stable before users rely on them for stable behavior. This is not a problem. If we change the semantics of *any* configuration, we can break things users intended with their previous configuration. This situation is no different... scan hints are just configuration for a dispatcher that are semantically constrained to that dispatcher's documented behavior.

Configuring this behavior through an explicit API method isn't any different. A configured dispatcher could just ignore that configuration and dispatch to an eventually consistent ScanServer instead of a TabletServer. Hints are just another kind of configuration. Whether that configuration is set by an API with a different name or by the API that sets hints, we're in the same situation... we have to trust the dispatcher that the user has configured to do the thing we expect it to, based on whatever configuration is set on the scan task, regardless of how it is set.

The main difference here, is that it already logically makes sense to use scan hints to modify the dispatcher behavior, because that's what that configuration is *for*.

>

> If the code in question were the Accumulo GC, this could cause files to be deleted when they should not be. Eventual vs immediate consistency is so important to some algorithms that it should always be explicitly declared per scanner and never be overridden by config that could impact all scanners in an indiscriminate manner without consideration of individual circumstances and per scanner intent.

I think the discussion of the accumulo-gc is a bit of a red herring. The GC scans the metadata table. It is already well documented that all metadata scans are always dispatched to an executor named "meta", and should always be immediately consistent. Even if it weren't, though, I don't think setting the scan configuration via execution hints is substantially different from setting other scan configuration via other APIs. It's all configuration, and the dispatcher's behavior ultimately has to be documented, known, and relied upon in order to get any kind of guarantees about any scan results.

@dlmarion wrote:

> Since immediate/strict consistency is the default, maybe we just need a method to disable it for a specific query instead of specifying the value. For example, `enableEventualConsistency()`, `relaxReadGuarantees()`, `disableConsistentReads()`, `allowStaleReads()`, etc.

>

> I'm also thinking that there should be a table configuration that enables/disables this feature. Currently, an admin can spin up some ScanServers and an application developer can enable eventual consistency, but do we want that on the `metadata` table for example?

I would like to keep configuration simple. I've read so many articles about software complexity killing projects, and I think Accumulo is already in that risky area, where every new complex feature we add, often for niche use cases, adds an outsized amount of complexity. We already have an overwhelming amount of single-purpose configuration elements that micro-manage so many elements of Accumulo's operations. We have an opportunity here to keep things *simple*. The dispatcher is already one such configurable component. If the dispatcher is responsible for deciding which server to use, and we already have a way to pass configuration to a dispatcher through the scan hints, then I don't see why we need additional configuration that adds to the bloat. Let's be modular... let's let the configurable dispatcher do the work. We can add this feature without any additional user-facing complexity... if we recognize that scan hints are merely dispatcher configuration, that the dispatcher is already a pluggable module, and that all of these configurations are already per-table or per-scan.
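The thread debates where the immediate-versus-eventual decision should live. The self-contained Java model below contrasts the two positions. Everything in it is hypothetical (RoutingSketch, Consistency, Target, and the "opportunistic" hint value); only the scan_type hint key comes from the selector javadoc in this PR, and the fallback to a tablet server when no scan servers are running reflects the behavior ivakegg describes reading in rebinToScanServers.

```java
import java.util.Map;
import java.util.Set;

/** Hypothetical model of the two designs debated above; none of these names are Accumulo API. */
final class RoutingSketch {
  enum Consistency { IMMEDIATE, EVENTUAL }

  enum Target { TABLET_SERVER, SCAN_SERVER }

  /** Explicit design: only a per-scanner flag can opt a scan into eventual consistency. */
  static Target explicitRoute(Consistency level, int liveScanServers) {
    boolean eventual = level == Consistency.EVENTUAL;
    // With no scan servers available, fall back to the tablet server.
    return eventual && liveScanServers > 0 ? Target.SCAN_SERVER : Target.TABLET_SERVER;
  }

  /** Hint-driven design: dispatcher config decides which scan_type hints go to scan servers. */
  static Target hintRoute(Map<String, String> hints, Set<String> eventualScanTypes,
      int liveScanServers) {
    String scanType = hints.get("scan_type");
    boolean eventual = scanType != null && eventualScanTypes.contains(scanType);
    return eventual && liveScanServers > 0 ? Target.SCAN_SERVER : Target.TABLET_SERVER;
  }
}
```

With hint-driven routing, the same scanner code and hints move from TABLET_SERVER to SCAN_SERVER when only the dispatcher configuration (eventualScanTypes) changes, which is the scenario keith-turner raises; with explicit routing, only the scanner's own flag can make that change. The sketch makes the trade-off visible but does not settle which design is preferable.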


[GitHub] [accumulo] dlmarion commented on pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#issuecomment-1158113117

@keith-turner - I think your latest commit left `ScanServerIT.testBatchScannerTimeout` in a broken state.


[GitHub] [accumulo] dlmarion commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r881550685

##########

core/src/main/java/org/apache/accumulo/core/clientImpl/ThriftScanner.java:

##########

@@ -358,7 +380,22 @@ else if (log.isTraceEnabled())

}

TraceUtil.setException(child2, e, false);

- sleepMillis = pause(sleepMillis, maxSleepTime);

+ sleepMillis = pause(sleepMillis, maxSleepTime, scanState.runOnScanServer);

+ } catch (ScanServerBusyException e) {

+ error = "Scan failed, scan server was busy " + loc;

Review Comment:

fix applied in ec7cbad
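The hunk passes scanState.runOnScanServer into pause(sleepMillis, maxSleepTime), and the result becomes the next sleepMillis, but the body of pause is not shown, so what the new flag changes is unknown. For orientation, here is a minimal sketch of the capped-growth retry pause that such a signature suggests; RetryPause is hypothetical and the doubling factor is an assumption.

```java
/** Hypothetical sketch of a capped exponential retry pause; not the ThriftScanner code. */
final class RetryPause {
  /** Delay to use after the current one: doubled, but never above maxSleepMillis. */
  static long nextSleep(long sleepMillis, long maxSleepMillis) {
    return Math.min(sleepMillis * 2, maxSleepMillis);
  }

  /** Sleeps for sleepMillis (e.g. after a busy scan server), then returns the next delay. */
  static long pause(long sleepMillis, long maxSleepMillis) throws InterruptedException {
    Thread.sleep(sleepMillis);
    return nextSleep(sleepMillis, maxSleepMillis);
  }
}
```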


[GitHub] [accumulo] ivakegg commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

ivakegg commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r863767744

##########

core/src/main/java/org/apache/accumulo/core/clientImpl/ScanAttemptsImpl.java:

##########

@@ -0,0 +1,120 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+package org.apache.accumulo.core.clientImpl;

+

+import java.util.Collection;

+import java.util.Map;

+import java.util.Objects;

+import java.util.concurrent.ConcurrentHashMap;

+

+import org.apache.accumulo.core.data.TabletId;

+import org.apache.accumulo.core.spi.scan.ScanServerDispatcher.ScanAttempt;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import com.google.common.collect.Collections2;

+import com.google.common.collect.Maps;

+

+public class ScanAttemptsImpl {

Review Comment:

I would love some class documentation that gives me an idea what this class is used for.
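The excerpt shows only the imports and declaration of ScanAttemptsImpl, so its responsibilities can only be inferred: it imports ScanServerDispatcher.ScanAttempt and ConcurrentHashMap, and TabletServerBatchReaderIterator holds a map of ScanAttemptsImpl.ScanAttemptReporter objects. The sketch below shows the kind of per-tablet bookkeeping such a class plausibly does, so that a selector can react to earlier busy attempts as the selector javadoc describes; AttemptLog, Result and the string keys are hypothetical, not Accumulo types.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/**
 * Hypothetical sketch of per-tablet scan attempt tracking: for each tablet, which server
 * each attempt went to and how it ended, so a selector can consult earlier attempts.
 */
final class AttemptLog {
  enum Result { SUCCESS, BUSY, ERROR }

  static final class Attempt {
    final String server;
    final Result result;

    Attempt(String server, Result result) {
      this.server = server;
      this.result = result;
    }
  }

  private final Map<String, List<Attempt>> attempts = new ConcurrentHashMap<>();

  /** Called when an attempt against a server finishes; safe to call from many threads. */
  void report(String tablet, String server, Result result) {
    attempts.computeIfAbsent(tablet, t -> Collections.synchronizedList(new ArrayList<>()))
        .add(new Attempt(server, result));
  }

  /** Immutable copy of the attempts made so far for a tablet, in reporting order. */
  List<Attempt> forTablet(String tablet) {
    List<Attempt> list = attempts.get(tablet);
    if (list == null) {
      return List.of();
    }
    synchronized (list) { // iterating a synchronizedList requires holding its lock
      return List.copyOf(list);
    }
  }
}
```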

##########

core/src/main/java/org/apache/accumulo/core/clientImpl/TabletServerBatchReaderIterator.java:

##########

@@ -453,26 +482,40 @@ public void run() {

private void doLookups(Map<String,Map<KeyExtent,List<Range>>> binnedRanges,

final ResultReceiver receiver, List<Column> columns) {

- if (timedoutServers.containsAll(binnedRanges.keySet())) {

- // all servers have timed out

- throw new TimedOutException(timedoutServers);

- }

-

- // when there are lots of threads and a few tablet servers

- // it is good to break request to tablet servers up, the

- // following code determines if this is the case

int maxTabletsPerRequest = Integer.MAX_VALUE;

- if (numThreads / binnedRanges.size() > 1) {

- int totalNumberOfTablets = 0;

- for (Entry<String,Map<KeyExtent,List<Range>>> entry : binnedRanges.entrySet()) {

- totalNumberOfTablets += entry.getValue().size();

- }

- maxTabletsPerRequest = totalNumberOfTablets / numThreads;

- if (maxTabletsPerRequest == 0) {

- maxTabletsPerRequest = 1;

+ long busyTimeout = 0;

+ Duration scanServerDispatcherDelay = null;

+ Map<String,ScanAttemptsImpl.ScanAttemptReporter> reporters = Map.of();

+

+ if (options.getConsistencyLevel().equals(ConsistencyLevel.EVENTUAL)) {

Review Comment:

Is it possible to have the consistency level set to EVENTUAL but have no scan servers? I think I see that in that case the rebinToScanServers will delegate the scans to tservers. But in that case we don't get the advantage of the code in the else statement. I guess I am wondering whether the ScanServerData concept should be used no matter the consistency level and the rebinToScanServers will take care of delegating to scan servers vs tservers based on the consistency level.
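This hunk removes the block at the top of doLookups that decided how to split lookups across threads (the excerpt does not show where, or whether, that logic moved). The arithmetic is easier to follow in isolation: with 16 threads and 2 tablet servers holding 100 binned tablets in total, 16 / 2 = 8 > 1, so each request is capped at 100 / 16 = 6 tablets; with 4 threads and 4 servers, 4 / 4 = 1 and no splitting happens. The Java sketch below reproduces the removed logic on plain ints; RequestSplit and its parameters are hypothetical simplifications of numThreads and binnedRanges.

```java
/**
 * Sketch of the request-splitting arithmetic in the removed lines above, with the
 * binned ranges reduced to a tablet count per server. Names are simplified stand-ins.
 */
final class RequestSplit {
  static int maxTabletsPerRequest(int numThreads, int[] tabletsPerServer) {
    int maxTabletsPerRequest = Integer.MAX_VALUE;
    // Split only when numThreads / servers (integer division) exceeds 1, i.e. at least two
    // threads per server. The length check is an addition; the original divides directly.
    if (tabletsPerServer.length > 0 && numThreads / tabletsPerServer.length > 1) {
      int totalNumberOfTablets = 0;
      for (int tablets : tabletsPerServer) {
        totalNumberOfTablets += tablets;
      }
      // Spread tablets across threads, but always allow at least one tablet per request.
      maxTabletsPerRequest = Math.max(1, totalNumberOfTablets / numThreads);
    }
    return maxTabletsPerRequest;
  }
}
```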

##########

core/src/main/java/org/apache/accumulo/core/metadata/ScanServerRefTabletFile.java:

##########

@@ -0,0 +1,98 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+package org.apache.accumulo.core.metadata;

+

+import java.util.UUID;

+

+import org.apache.accumulo.core.data.Value;

+import org.apache.hadoop.fs.Path;

+import org.apache.hadoop.io.Text;

+

+public class ScanServerRefTabletFile extends TabletFile {

+

+ private final Value NULL_VALUE = new Value(new byte[0]);

+ private final Text colf;

+ private final Text colq;

+

+ public ScanServerRefTabletFile(String file, String serverAddress, UUID serverLockUUID) {

+ super(new Path(file));

+ this.colf = new Text(serverAddress);

+ this.colq = new Text(serverLockUUID.toString());

+ }

+

+ public ScanServerRefTabletFile(String file, Text colf, Text colq) {

+ super(new Path(file));

+ this.colf = colf;

+ this.colq = colq;

+ }

+

+ public String getRowSuffix() {

+ return this.getPathStr();

+ }

+

+ public Text getServerAddress() {

+ return this.colf;

+ }

+

+ public Text getServerLockUUID() {

+ return this.colq;

+ }

+

+ public Value getValue() {

+ return NULL_VALUE;

+ }

+

+ @Override

+ public int hashCode() {

+ final int prime = 31;

+ int result = super.hashCode();

+ result = prime * result + ((colf == null) ? 0 : colf.hashCode());

+ result = prime * result + ((colq == null) ? 0 : colq.hashCode());

+ return result;

+ }

+

+ @Override

+ public boolean equals(Object obj) {

+ if (this == obj)

+ return true;

+ if (!super.equals(obj))

+ return false;

+ if (getClass() != obj.getClass())

+ return false;

+ ScanServerRefTabletFile other = (ScanServerRefTabletFile) obj;

+ if (colf == null) {

Review Comment:

An EqualsBuilder may make this a little cleaner.
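EqualsBuilder comes from Apache Commons Lang (org.apache.commons.lang3.builder.EqualsBuilder). A JDK-only alternative with a similar effect is java.util.Objects, which also absorbs the explicit null checks. The following is a minimal sketch on a hypothetical, simplified stand-in class (ScanServerRefSketch). It holds plain Strings instead of Hadoop Text and compares the file path directly instead of calling super.equals, so it is not a drop-in replacement for the PR's class.

```java
import java.util.Objects;

// Hypothetical simplified stand-in for ScanServerRefTabletFile. The real class extends
// TabletFile and stores Hadoop Text values; plain Strings keep this sketch dependency-free,
// and the path field stands in for the state checked by super.equals().
public final class ScanServerRefSketch {
  private final String path;
  private final String serverAddress; // colf in the original
  private final String serverLockUUID; // colq in the original

  public ScanServerRefSketch(String path, String serverAddress, String serverLockUUID) {
    this.path = path;
    this.serverAddress = serverAddress;
    this.serverLockUUID = serverLockUUID;
  }

  @Override
  public boolean equals(Object obj) {
    if (this == obj) {
      return true;
    }
    if (obj == null || getClass() != obj.getClass()) {
      return false;
    }
    ScanServerRefSketch other = (ScanServerRefSketch) obj;
    // Objects.equals treats two nulls as equal, replacing the hand-written null branches
    return Objects.equals(path, other.path) && Objects.equals(serverAddress, other.serverAddress)
        && Objects.equals(serverLockUUID, other.serverLockUUID);
  }

  @Override
  public int hashCode() {
    // Objects.hash combines the fields null-safely (it delegates to Arrays.hashCode)
    return Objects.hash(path, serverAddress, serverLockUUID);
  }
}
```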

##########

core/src/main/java/org/apache/accumulo/core/spi/scan/DefaultScanServerDispatcher.java:

##########

@@ -0,0 +1,232 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+package org.apache.accumulo.core.spi.scan;

+

+import static java.nio.charset.StandardCharsets.UTF_8;

+

+import java.security.SecureRandom;

+import java.time.Duration;

+import java.util.ArrayList;

+import java.util.Collections;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.concurrent.TimeUnit;

+import java.util.function.Supplier;

+

+import org.apache.accumulo.core.data.TabletId;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import com.google.common.base.Preconditions;

+import com.google.common.base.Suppliers;

+import com.google.common.collect.Sets;

+import com.google.common.hash.HashCode;

+import com.google.common.hash.Hashing;

+

+/**

+ * The default Accumulo dispatcher for scan servers. This dispatcher will hash tablets to a few

+ * random scan servers (defaults to 3). So a given tablet will always go to the same 3 scan servers.

+ * When scan servers are busy, this dispatcher will rapidly expand the number of scan servers it

+ * randomly chooses from for a given tablet. With the default settings and 1000 scan servers that

+ * are busy, this dispatcher would randomly choose from 3, 21, 144, and then 1000 scan servers.

+ * After getting to a point where we are randomly choosing from all scan servers, if busy is still

+ * being observed then this dispatcher will start to exponentially increase the busy timeout. If all

+ * scan servers are busy then it's best to just go to one and wait for your scan to run, which is why

+ * the busy timeout increases exponentially when it seems like everything is busy.

+ *

+ * <p>

+ * The following options are accepted in {@link #init(InitParameters)}

+ * </p>

+ *

+ * <ul>

+ * <li><b>initialServers</b> The initial number of servers to randomly choose from for a given

+ * tablet. Defaults to 3.</li>

+ * <li><b>initialBusyTimeout</b> The initial busy timeout to use when contacting a scan server. If

+ * the scan does not start running within the busy timeout, then another scan server can be tried.

+ * Defaults to PT0.033S; see {@link Duration#parse(CharSequence)}.</li>

+ * <li><b>maxBusyTimeout</b> When busy is repeatedly seen, the busy timeout will be increased

+ * exponentially. This setting controls the maximum busy timeout. Defaults to PT30M.</li>

+ * <li><b>maxDepth</b> When busy is observed, the number of servers to randomly choose from is

+ * expanded. This setting controls how many busy observations it will take before we choose from all

+ * servers. Defaults to 3.</li>

+ * </ul>

+ *

+ *

+ */

+public class DefaultScanServerDispatcher implements ScanServerDispatcher {

+

+ private static final Logger LOG = LoggerFactory.getLogger(DefaultScanServerDispatcher.class);

+

+ private static final SecureRandom RANDOM = new SecureRandom();

+

+ protected Duration initialBusyTimeout;

+ protected Duration maxBusyTimeout;

+

+ protected int initialServers;

+ protected int maxDepth;

+

+ private Supplier<List<String>> orderedScanServersSupplier;

+

+ private static final Set<String> OPT_NAMES =

+ Set.of("initialServers", "maxDepth", "initialBusyTimeout", "maxBusyTimeout");

+

+ @Override

+ public void init(InitParameters params) {

+ // avoid constantly re-sorting the scan servers, just do it periodically in case they change

+ orderedScanServersSupplier = Suppliers.memoizeWithExpiration(() -> {

+ List<String> oss = new ArrayList<>(params.getScanServers().get());

+ Collections.sort(oss);

+ return Collections.unmodifiableList(oss);

+ }, 100, TimeUnit.MILLISECONDS);

+

+ var opts = params.getOptions();

+

+ var diff = Sets.difference(opts.keySet(), OPT_NAMES);

+

+ Preconditions.checkArgument(diff.isEmpty(), "Unknown options %s", diff);

+

+ initialServers = Integer.parseInt(opts.getOrDefault("initialServers", "3"));

+ maxDepth = Integer.parseInt(opts.getOrDefault("maxDepth", "3"));

+ initialBusyTimeout = Duration.parse(opts.getOrDefault("initialBusyTimeout", "PT0.033S"));

+ maxBusyTimeout = Duration.parse(opts.getOrDefault("maxBusyTimeout", "PT30M"));

+

+ Preconditions.checkArgument(initialServers > 0, "initialServers must be positive : %s",

+ initialServers);

+ Preconditions.checkArgument(maxDepth > 0, "maxDepth must be positive : %s", maxDepth);

+ Preconditions.checkArgument(initialBusyTimeout.compareTo(Duration.ZERO) > 0,

+ "initialBusyTimeout must be positive %s", initialBusyTimeout);

+ Preconditions.checkArgument(maxBusyTimeout.compareTo(Duration.ZERO) > 0,

+ "maxBusyTimeout must be positive %s", maxBusyTimeout);

+

+ LOG.debug(

+ "DefaultScanServerDispatcher configured with initialServers: {}"

+ + ", maxDepth: {}, initialBusyTimeout: {}, maxBusyTimeout: {}",

+ initialServers, maxDepth, initialBusyTimeout, maxBusyTimeout);

+ }

+

+ @Override

+ public Actions determineActions(DispatcherParameters params) {

+

+ // only get this once and use it for the entire method so that the method uses a consistent

+ // snapshot

+ List<String> orderedScanServers = orderedScanServersSupplier.get();

+

+ if (orderedScanServers.isEmpty()) {

+ return new Actions() {

+ @Override

+ public String getScanServer(TabletId tabletId) {

+ return null;

+ }

+

+ @Override

+ public Duration getDelay() {

+ return Duration.ZERO;

+ }

+

+ @Override

+ public Duration getBusyTimeout() {

+ return Duration.ZERO;

+ }

+ };

+ }

+

+ Map<TabletId,String> serversToUse = new HashMap<>();

+

+ long maxBusyAttempts = 0;

+

+ for (TabletId tablet : params.getTablets()) {

+

+ // TODO handle io errors

+ long busyAttempts = params.getAttempts(tablet).stream()

+ .filter(sa -> sa.getResult() == ScanAttempt.Result.BUSY).count();

+

+ maxBusyAttempts = Math.max(maxBusyAttempts, busyAttempts);

+

+ String serverToUse = null;

+

+ var hashCode = hashTablet(tablet);

+

+ int numServers;

+

+ if (busyAttempts < maxDepth) {

+ numServers = (int) Math

+ .round(initialServers * Math.pow(orderedScanServers.size() / (double) initialServers,

+ busyAttempts / (double) maxDepth));

+ } else {

+ numServers = orderedScanServers.size();

+ }

+

+ int serverIndex =

+ (Math.abs(hashCode.asInt()) + RANDOM.nextInt(numServers)) % orderedScanServers.size();

+

+ // TODO could check if errors were seen on this server in past attempts

Review Comment:

TODO ?
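The javadoc's 3, 21, 144, 1000 progression follows from the numServers formula in determineActions: with initialServers = 3, maxDepth = 3 and 1000 servers, the pool size is round(3 * (1000/3)^(b/3)) after b busy observations. The sketch below reproduces that formula in isolation. It also computes the server index with Math.floorMod. This is a suggested variation, not the PR's code, because Math.abs(Integer.MIN_VALUE) is still negative, so the quoted Math.abs(hashCode.asInt()) expression can, in rare cases, produce a negative index. Class and method names are hypothetical.

```java
// Hypothetical sketch of the candidate-pool expansion in DefaultScanServerDispatcher.
public class ServerExpansionSketch {

  // Same formula as determineActions: the pool grows geometrically from initialServers
  // to all servers over maxDepth busy observations.
  static int numServers(int initialServers, int maxDepth, int totalServers, long busyAttempts) {
    if (busyAttempts < maxDepth) {
      return (int) Math.round(initialServers
          * Math.pow(totalServers / (double) initialServers, busyAttempts / (double) maxDepth));
    }
    return totalServers;
  }

  // Start at the tablet's hash position in the sorted list and add a random offset in
  // [0, numServers). Math.floorMod always returns a value in [0, totalServers), even for
  // Integer.MIN_VALUE, so the resulting index is never negative.
  static int serverIndex(int tabletHash, int randomOffset, int totalServers) {
    return (Math.floorMod(tabletHash, totalServers) + randomOffset) % totalServers;
  }

  public static void main(String[] args) {
    // Defaults from init(): initialServers=3, maxDepth=3; 1000 scan servers
    for (long busy = 0; busy <= 3; busy++) {
      System.out.println(busy + " busy -> " + numServers(3, 3, 1000, busy) + " candidates");
    }
  }
}
```

main prints 3, 21, 144 and 1000 candidates for 0 through 3 busy observations, matching the javadoc.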

##########

core/src/main/java/org/apache/accumulo/core/spi/scan/DefaultScanServerDispatcher.java:

##########

@@ -0,0 +1,232 @@


+ for (TabletId tablet : params.getTablets()) {

+

+ // TODO handle io errors

Review Comment:

TODO ?
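The javadoc quoted earlier also says that once the dispatcher is choosing from all scan servers and busy is still observed, it increases the busy timeout exponentially up to maxBusyTimeout. That part of determineActions is not included in these hunks, so the following is only a sketch of the described policy. It assumes the timeout doubles for each busy observation beyond maxDepth, which is an illustrative choice and not necessarily the PR's growth rate. The names are hypothetical.

```java
import java.time.Duration;

// Hypothetical sketch of the busy-timeout policy described in the javadoc. The doubling
// factor is an assumption; the PR's actual growth rate is not shown in these hunks.
public class BusyTimeoutSketch {

  static Duration busyTimeout(Duration initial, Duration max, int maxDepth, long busyAttempts) {
    if (busyAttempts <= maxDepth) {
      // Still widening the pool, or choosing from all servers for the first time.
      return initial;
    }
    Duration timeout = initial;
    // Double once per busy observation beyond maxDepth, stopping as soon as the cap is
    // reached so the multiplication cannot overflow.
    for (long i = maxDepth; i < busyAttempts && timeout.compareTo(max) < 0; i++) {
      timeout = timeout.multipliedBy(2);
    }
    return timeout.compareTo(max) > 0 ? max : timeout;
  }

  public static void main(String[] args) {
    Duration initial = Duration.parse("PT0.033S");
    Duration max = Duration.parse("PT30M");
    for (long busy : new long[] {0, 3, 4, 5, 100}) {
      System.out.println(busy + " busy -> " + busyTimeout(initial, max, 3, busy).toMillis() + " ms");
    }
  }
}
```

With the defaults (PT0.033S, PT30M, maxDepth 3), this yields 33 ms up to 3 busy observations, 66 ms at 4, 132 ms at 5, and the 1800000 ms (30 minute) cap after many repeated busy results.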


[GitHub] [accumulo] keith-turner commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

keith-turner commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r866147045

##########

core/src/main/java/org/apache/accumulo/core/spi/scan/DefaultScanServerDispatcher.java:

##########

@@ -0,0 +1,232 @@


+ for (TabletId tablet : params.getTablets()) {

+

+ // TODO handle io errors

Review Comment:

I'll take a look at this.

##########

core/src/main/java/org/apache/accumulo/core/spi/scan/DefaultScanServerDispatcher.java:

##########

@@ -0,0 +1,232 @@


+ int serverIndex =

+ (Math.abs(hashCode.asInt()) + RANDOM.nextInt(numServers)) % orderedScanServers.size();

+

+ // TODO could check if errors were seen on this server in past attempts

Review Comment:

I'll also look at this.
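One possible way to address this TODO is to skip servers on which earlier attempts for the same tablet failed. The sketch below uses hypothetical Attempt and Result types, not the PR's ScanAttempt API. The quoted code only shows ScanAttempt.Result.BUSY, so an ERROR result is an assumption. The sketch walks forward from the computed index to the first server without a recorded error. A tradeoff is that this fallback may step outside the tablet's usual set of servers, which partly defeats the goal of sending a given tablet to the same few scan servers.

```java
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical sketch: Result and Attempt are illustrative stand-ins, not the PR's
// ScanAttempt API, which may expose different result values.
public class ErroredServerSketch {
  enum Result { BUSY, ERROR }

  static final class Attempt {
    final String server;
    final Result result;

    Attempt(String server, Result result) {
      this.server = server;
      this.result = result;
    }
  }

  // Servers where a previous attempt for this tablet ended in an error.
  static Set<String> erroredServers(List<Attempt> attempts) {
    return attempts.stream().filter(a -> a.result == Result.ERROR).map(a -> a.server)
        .collect(Collectors.toSet());
  }

  // Starting at the index the dispatcher computed, walk forward through the sorted list and
  // return the first server with no past error; keep the original choice if all have errored.
  static String pickServer(List<String> ordered, int index, Set<String> errored) {
    for (int i = 0; i < ordered.size(); i++) {
      String candidate = ordered.get((index + i) % ordered.size());
      if (!errored.contains(candidate)) {
        return candidate;
      }
    }
    return ordered.get(index);
  }
}
```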


[GitHub] [accumulo] keith-turner commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

keith-turner commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r883197611

##########

shell/src/main/java/org/apache/accumulo/shell/commands/ScanCommand.java:

##########

@@ -419,6 +438,7 @@ public Options getOptions() {

outputFileOpt.setArgName("file");

contextOpt.setArgName("context");

executionHintsOpt.setArgName("<key>=<value>{,<key>=<value>}");

+ scanServerOpt.setArgName("immediate|eventual");

Review Comment:

>That makes sense if the desired primary outcome was "eventual consistency". It's not, though. It's merely an acceptable side-effect of what the user is really asking for, and not its essential/sufficient characteristic.

I agree that a developer will enable eventual consistency because they want better performance and/or availability and are OK with stale data. One important thing to consider, though, is that when evaluating code for correctness (for example, when I am reviewing Accumulo code that someone else wrote), the consistency level of the data returned by the scanner is extremely important, probably more important than the performance benefits the author wanted. Whatever name we choose needs to communicate very clearly that a scanner may return stale data.

[GitHub] [accumulo] keith-turner commented on a diff in pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

keith-turner commented on code in PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#discussion_r884057335

##########

core/src/main/java/org/apache/accumulo/core/conf/Property.java:

##########

@@ -395,6 +395,74 @@ public enum Property {
+ "indefinitely. Default is 0 to block indefinitely. Only valid when tserver available "
+ "threshold is set greater than 0. Added with version 1.10",
"1.10.0"),
+ // properties that are specific to scan server behavior
+ @Experimental
+ SSERV_PREFIX("sserver.", null, PropertyType.PREFIX,
+ "Properties in this category affect the behavior of the scan servers", "2.1.0"),
+ @Experimental
+ SSERV_DATACACHE_SIZE("sserver.cache.data.size", "10%", PropertyType.MEMORY,
+ "Specifies the size of the cache for RFile data blocks on each scan server.", "2.1.0"),
+ @Experimental
+ SSERV_INDEXCACHE_SIZE("sserver.cache.index.size", "25%", PropertyType.MEMORY,
+ "Specifies the size of the cache for RFile index blocks on each scan server.", "2.1.0"),
+ @Experimental
+ SSERV_SUMMARYCACHE_SIZE("sserver.cache.summary.size", "10%", PropertyType.MEMORY,
+ "Specifies the size of the cache for summary data on each scan server.", "2.1.0"),
+ @Experimental
+ SSERV_DEFAULT_BLOCKSIZE("sserver.default.blocksize", "1M", PropertyType.BYTES,
+ "Specifies a default blocksize for the scan server caches", "2.1.0"),
+ @Experimental
+ SSERV_CACHED_TABLET_METADATA_EXPIRATION("sserver.cache.metadata.expiration", "5m",
+ PropertyType.TIMEDURATION, "The time after which cached tablet metadata will be refreshed.",
+ "2.1.0"),
+ @Experimental
+ SSERV_PORTSEARCH("sserver.port.search", "true", PropertyType.BOOLEAN,
+ "if the ports above are in use, search higher ports until one is available", "2.1.0"),
+ @Experimental
+ SSERV_CLIENTPORT("sserver.port.client", "9996", PropertyType.PORT,
+ "The port used for handling client connections on the tablet servers", "2.1.0"),
+ @Experimental
+ SSERV_MAX_MESSAGE_SIZE("sserver.server.message.size.max", "1G", PropertyType.BYTES,
+ "The maximum size of a message that can be sent to a scan server.", "2.1.0"),
+ @Experimental
+ SSERV_MINTHREADS("sserver.server.threads.minimum", "2", PropertyType.COUNT,
+ "The minimum number of threads to use to handle incoming requests.", "2.1.0"),
+ @Experimental
+ SSERV_MINTHREADS_TIMEOUT("sserver.server.threads.timeout", "10s", PropertyType.TIMEDURATION,

Review Comment:

Based on the problems I saw in testing, I think this default should be different, but I'm not sure what it should be yet. I also want to go back and compare the tserver and sserver thread pool behavior, creation, and configuration to 1.10.
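To make the trade-off in this comment concrete, here is a minimal sketch of how a "minimum threads" setting and an idle "threads timeout" could map onto a standard `java.util.concurrent.ThreadPoolExecutor`. The mapping is an assumption for illustration: core size stands in for `sserver.server.threads.minimum`, and keep-alive stands in for `sserver.server.threads.timeout`. The `maxThreads` bound and the class and method names are also assumptions. This is not how the scan server or tserver actually builds its Thrift thread pool.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class ScanServerPoolSketch {
  // Hypothetical helper: keeps minThreads threads alive, grows up to maxThreads under
  // load, and lets each extra thread exit after it has been idle for timeoutMillis.
  static ThreadPoolExecutor createPool(int minThreads, int maxThreads, long timeoutMillis) {
    if (minThreads < 1 || maxThreads < minThreads || timeoutMillis < 0) {
      throw new IllegalArgumentException(
          "need 1 <= minThreads <= maxThreads and timeoutMillis >= 0");
    }
    // SynchronousQueue hands each task directly to a thread, so the pool adds threads
    // (up to maxThreads) instead of queueing when all current threads are busy. When
    // maxThreads are all busy, CallerRunsPolicy runs the task on the submitting thread.
    return new ThreadPoolExecutor(minThreads, maxThreads, timeoutMillis, TimeUnit.MILLISECONDS,
        new SynchronousQueue<>(), new ThreadPoolExecutor.CallerRunsPolicy());
  }

  public static void main(String[] args) throws InterruptedException {
    // Defaults from the hunk: minimum = 2 threads, timeout = 10s.
    ThreadPoolExecutor pool = createPool(2, 16, TimeUnit.SECONDS.toMillis(10));
    for (int i = 0; i < 8; i++) {
      pool.execute(() -> {
        try {
          Thread.sleep(200); // simulate a short scan request
        } catch (InterruptedException e) {
          Thread.currentThread().interrupt();
        }
      });
    }
    System.out.println("threads while busy: " + pool.getPoolSize());
    pool.shutdown();
    pool.awaitTermination(5, TimeUnit.SECONDS);
  }
}
```

With this mapping, the pool grows past two threads only while work arrives faster than it completes, and each extra thread exits after 10 seconds of idleness. The timeout value therefore controls how quickly the pool shrinks back after a burst of scans.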

[GitHub] [accumulo] dlmarion commented on pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#issuecomment-1151357227

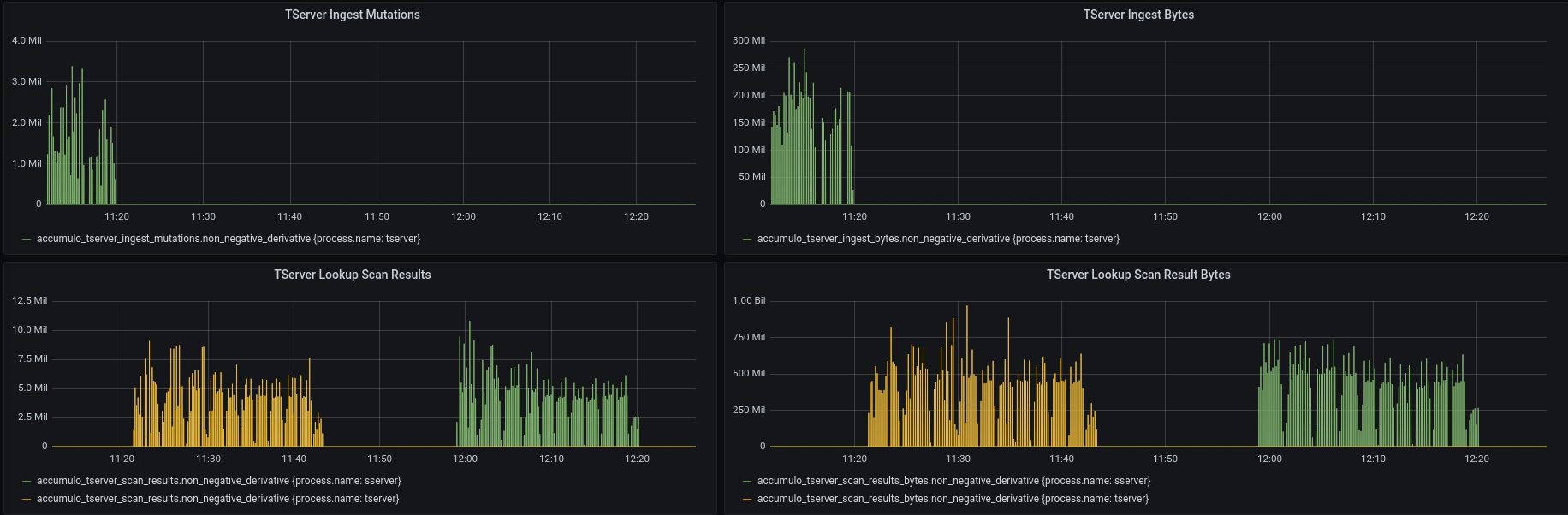

I started a 2-node cluster from commit `fcd2738`, along with my accumulo-testing [branch](https://github.com/dlmarion/accumulo-testing/tree/ci-verify-consistency-level), which includes changes to set the consistency level for the continuous walker, batch walker, scanner, and verify applications. I loaded data for an hour using `cingest ingest`, then ran `cingest verify` jobs to test my changes in commits abcd8a7, 406df63, and c7dd148. The first job ran with consistency level `IMMEDIATE` (which uses the tserver) and the second with `EVENTUAL` (which uses the sserver). I confirmed that the scans from the second job showed up on the Active Scans page in the monitor, and I used Grafana to confirm that the metrics worked. The Grafana screenshot shows the end of the ingest process followed by the two verify jobs: the first using the tserver and the second using the sserver.

I should also note that the verify jobs both came back with the same results.

[GitHub] [accumulo] keith-turner commented on pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

keith-turner commented on PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#issuecomment-1149136177

@dlmarion made a few suggestions about the testing. I updated the report and ran some new tests based on those suggestions. The new test results are in the report.

[GitHub] [accumulo] dlmarion commented on pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

dlmarion commented on PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#issuecomment-1138630511

Using `set/getConsistencyLevel` on ScannerBase allows us to change the implementation without changing the API. Using a name tied to the implementation will cause API churn if the implementation changes. I'm not tied to `set/getConsistencyLevel`.

I'm waiting for some consensus before changing the API method names.
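Below is a minimal sketch of the design argument in this comment, assuming a trimmed-down options interface. Callers express only the consistency they need through `setConsistencyLevel`/`getConsistencyLevel`. A separate mapping then decides which server type runs the scan: `IMMEDIATE` goes to the tserver and `EVENTUAL` to the sserver, matching the test report earlier in this thread. `ScanOptions`, `SimpleScanOptions`, and `serverTypeFor` are hypothetical names, not `ScannerBase` itself.

```java
import java.util.Objects;

public class ConsistencyApiSketch {
  enum ConsistencyLevel {
    IMMEDIATE, EVENTUAL
  }

  // Hypothetical, trimmed-down scanner options: callers state the consistency they
  // need, not which server type should run the scan.
  interface ScanOptions {
    void setConsistencyLevel(ConsistencyLevel level);

    ConsistencyLevel getConsistencyLevel();
  }

  static final class SimpleScanOptions implements ScanOptions {
    private ConsistencyLevel level = ConsistencyLevel.IMMEDIATE; // assumed default

    @Override
    public void setConsistencyLevel(ConsistencyLevel level) {
      this.level = Objects.requireNonNull(level);
    }

    @Override
    public ConsistencyLevel getConsistencyLevel() {
      return level;
    }
  }

  // Implementation detail hidden behind the API: EVENTUAL is routed to scan servers
  // and IMMEDIATE to tablet servers. This mapping could change without touching the
  // public setter and getter.
  static String serverTypeFor(ScanOptions options) {
    switch (options.getConsistencyLevel()) {
      case EVENTUAL:
        return "sserver";
      case IMMEDIATE:
      default:
        return "tserver";
    }
  }

  public static void main(String[] args) {
    ScanOptions opts = new SimpleScanOptions();
    System.out.println(serverTypeFor(opts)); // prints "tserver"
    opts.setConsistencyLevel(ConsistencyLevel.EVENTUAL);
    System.out.println(serverTypeFor(opts)); // prints "sserver"
  }
}
```

If the routing later changed, only `serverTypeFor` would need to change. Code that calls the setter would not. This is the API stability point being made here.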

[GitHub] [accumulo] ctubbsii commented on pull request #2665: Eventually Consistent scans / ScanServer feature

Posted by GitBox <gi...@apache.org>.

ctubbsii commented on PR #2665:

URL: https://github.com/apache/accumulo/pull/2665#issuecomment-1156539782

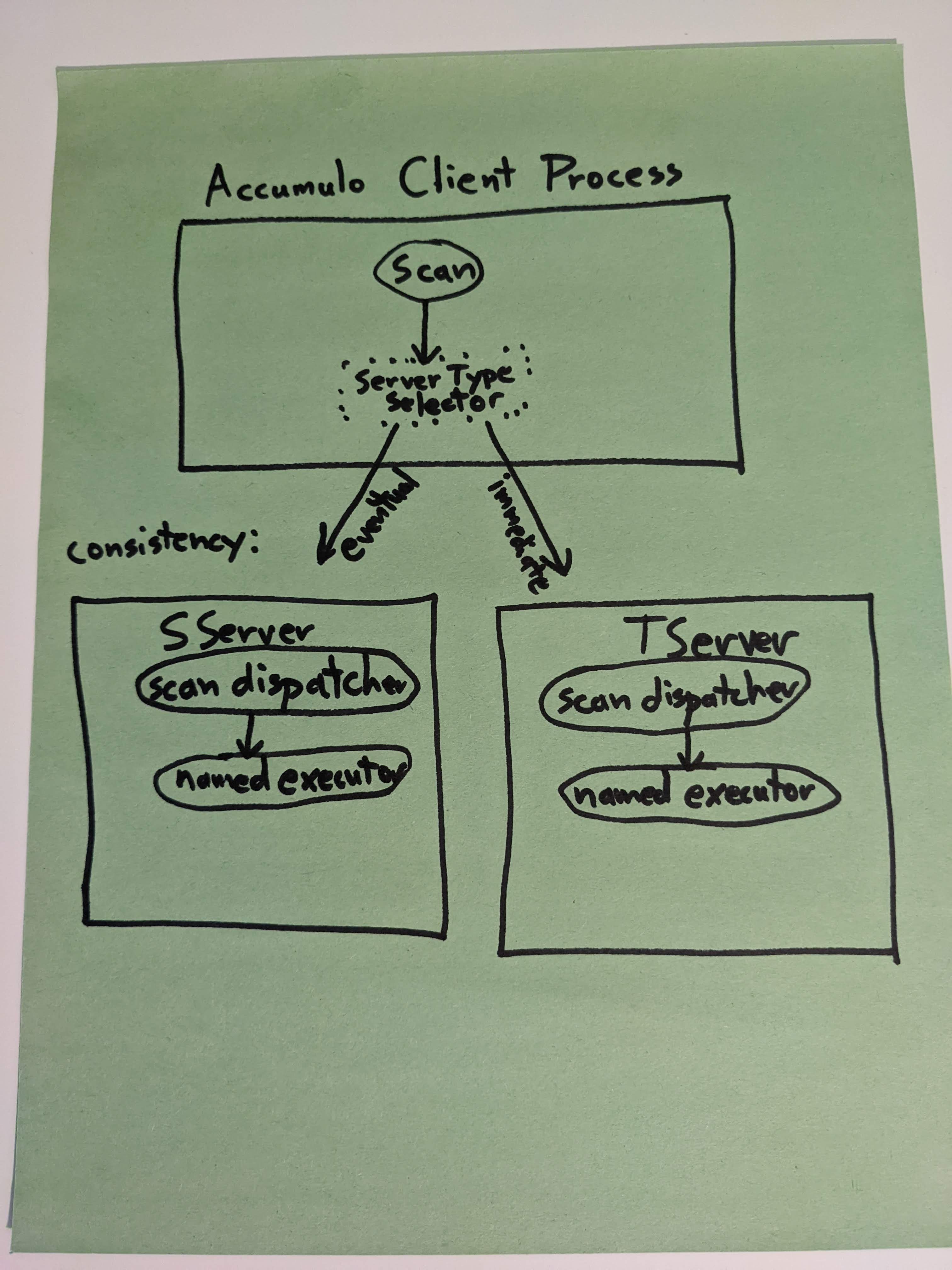

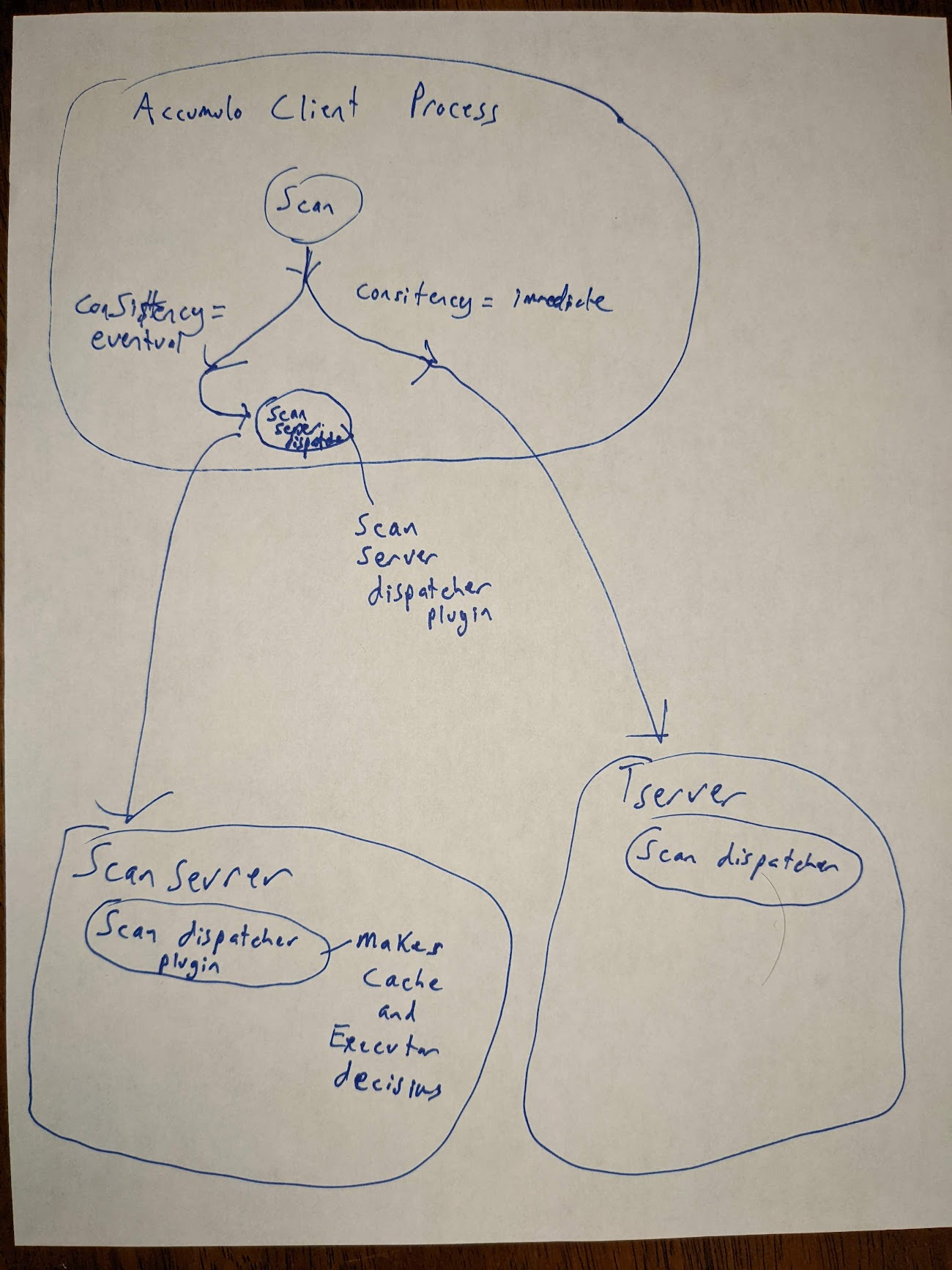

Okay, thanks. That helps. I guess I was thinking the current dispatcher ran on the client side. So using scan hints wouldn't even work the way I imagined. We need the scan server dispatcher plugin to run on the client side first.

A few thoughts based on my new understanding:

1. The client-side dispatcher concept is very different from the executor dispatching done in the tserver, but it has a very similar name. It might be helpful to name it something completely different, like "server chooser" or "tablet scanner server type selector" or something along those lines (not necessarily as verbose as the latter, but something that clearly distinguishes it from the executor pool dispatching inside the server).